"Contents"

- Demand 1: user active theme

- Requirement 2: user added theme

- Demand 3: user retention theme

- Demand 4: number of silent users

- Demand 5: number of returned users this week

- Demand 6: number of lost users

- Demand 7: number of active users in the last three consecutive weeks

- Demand 8: number of active users for three consecutive days in the last seven days

- Demand 9: total turnover of GMV (gross merchant volume) in a period of time

- Demand 10: conversion rate = new users / daily users

- Requirement 11: user behavior funnel analysis

- Demand 12: brand repurchase rate

- Demand XIII: analysis of ADS layer brand repurchase rate report

- Demand 14: find the ranking of the top ten commodities with the corresponding repurchase rate of users at each level

Demand 1: user active theme

DWS layer -- (wide surface layer of user behavior) goal: count the details of each equipment in the current day, current week and current month

1. Daily active equipment details DWD_ start_ log--->dws_ uv_ detail_ day

--Collect the same field_ Set to an array and press mid_id grouping (for later statistics)

collect_ Set de summarizes the values of a field to generate an array type field. For example: concat_ws('|', collect_set(user_id)) user_id,

Create partition table dws_uv_detail_day: partitioned by ('dt' string)

drop table if exists dws_uv_detail_day;

create table dws_uv_detail_day(

`mid_id` string COMMENT 'Unique identification of equipment',

`user_id` string COMMENT 'User ID',

`version_code` string COMMENT 'Program version number',

`version_name` string COMMENT 'Program version name',

`lang` string COMMENT 'System language',

`source` string COMMENT 'Channel number',

`os` string COMMENT 'Android version',

`area` string COMMENT 'region',

`model` string COMMENT 'Mobile phone model',

`brand` string COMMENT 'Mobile phone brand',

`sdk_version` string COMMENT 'sdkVersion',

`gmail` string COMMENT 'gmail',

`height_width` string COMMENT 'Screen width and height',

`app_time` string COMMENT 'Time when the client log was generated',

`network` string COMMENT 'Network mode',

`lng` string COMMENT 'longitude',

`lat` string COMMENT 'latitude'

) COMMENT 'Daily details of active users'

PARTITIONED BY ( `dt` string)

stored as parquet

location '/warehouse/gmall/dws/dws_uv_detail_day/'

;

Data import

Zoning by week; Filter out the data within one week; Group by device id; = = => Count (*) to get the final result;

partition(dt='2019-02-10') from dwd_ start_ log where dt='2019-02-10' group by mid_ ID (mid_id device unique identification)

Aggregation is based on the user's single day access as the key. If a user uses two operating systems, two system versions and multiple regions in a day, log in to different accounts and take only one of them

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dws_uv_detail_day partition(dt='2019-02-10')

select

mid_id,

concat_ws('|', collect_set(user_id)) user_id,

concat_ws('|', collect_set(version_code)) version_code,

concat_ws('|', collect_set(version_name)) version_name,

concat_ws('|', collect_set(lang))lang,

concat_ws('|', collect_set(source)) source,

concat_ws('|', collect_set(os)) os,

concat_ws('|', collect_set(area)) area,

concat_ws('|', collect_set(model)) model,

concat_ws('|', collect_set(brand)) brand,

concat_ws('|', collect_set(sdk_version)) sdk_version,

concat_ws('|', collect_set(gmail)) gmail,

concat_ws('|', collect_set(height_width)) height_width,

concat_ws('|', collect_set(app_time)) app_time,

concat_ws('|', collect_set(network)) network,

concat_ws('|', collect_set(lng)) lng,

concat_ws('|', collect_set(lat)) lat

from dwd_start_log

where dt='2019-02-10'

group by mid_id;

Query and import results;

hive (gmall)> select * from dws_uv_detail_day limit 1; ###The last count(*) is the number of active devices per day; hive (gmall)> select count(*) from dws_uv_detail_day;

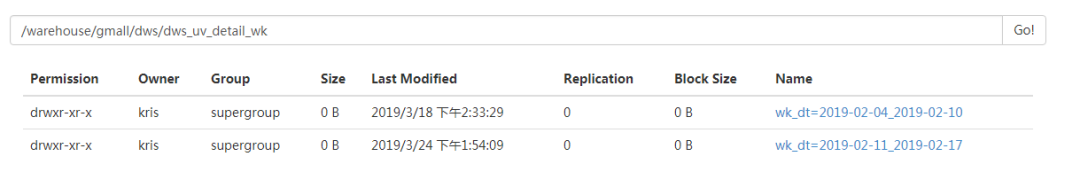

2 weekly (dws_uv_detail_wk) active equipment detail partition(wk_dt)

Monday to Sunday concat (date_add (next_day ('2019-02-10 ',' mo '), - 7),' _ ', date_ Add (next_day ('2019-02-10 ',' mo '), - 1)) is 2019-02-04_ 2019-02-10

Create partition table: partitioned by ('wk_dt 'string)

hive (gmall)>

drop table if exists dws_uv_detail_wk;

create table dws_uv_detail_wk(

`mid_id` string COMMENT 'Unique identification of equipment',

`user_id` string COMMENT 'User ID',

`version_code` string COMMENT 'Program version number',

`version_name` string COMMENT 'Program version name',

`lang` string COMMENT 'System language',

`source` string COMMENT 'Channel number',

`os` string COMMENT 'Android version',

`area` string COMMENT 'region',

`model` string COMMENT 'Mobile phone model',

`brand` string COMMENT 'Mobile phone brand',

`sdk_version` string COMMENT 'sdkVersion',

`gmail` string COMMENT 'gmail',

`height_width` string COMMENT 'Screen width and height',

`app_time` string COMMENT 'Time when the client log was generated',

`network` string COMMENT 'Network mode',

`lng` string COMMENT 'longitude',

`lat` string COMMENT 'latitude',

`monday_date` string COMMENT 'Monday date',

`sunday_date` string COMMENT 'Sunday date'

) COMMENT 'Details of active users by week'

PARTITIONED BY (`wk_dt` string)

stored as parquet

location '/warehouse/gmall/dws/dws_uv_detail_wk/'

;

Import data: divided by week; Filter out the data within one month and group by device id;

Monday: date_add(next_day('2019-05-16','MO'),-7);

Sunday: date_add(next_day('2019-05-16','MO'),-1);

Monday to Sunday: concat (date_add (next_day ('may 16, 2019 ',' mo '), - 7), "_", date_ add(next_day('2019-05-16', 'MO'), -1));

insert overwrite table dws_uv_detail_wk partition(wk_dt)

select mid_id,

concat_ws('|', collect_set(user_id)) user_id,

concat_ws('|', collect_set(version_code)) version_code,

concat_ws('|', collect_set(version_name)) version_name,

concat_ws('|', collect_set(lang)) lang,

concat_ws('|', collect_set(source)) source,

concat_ws('|', collect_set(os)) os,

concat_ws('|', collect_set(area)) area,

concat_ws('|', collect_set(model)) model,

concat_ws('|', collect_set(brand)) brand,

concat_ws('|', collect_set(sdk_version)) sdk_version,

concat_ws('|', collect_set(gmail)) gmail,

concat_ws('|', collect_set(height_width)) height_width,

concat_ws('|', collect_set(app_time)) app_time,

concat_ws('|', collect_set(network)) network,

concat_ws('|', collect_set(lng)) lng,

concat_ws('|', collect_set(lat)) lat,

date_add(next_day('2019-02-10', 'MO'), -7),

date_add(next_day('2019-02-10', 'MO'), -1),

concat(date_add(next_day('2019-02-10', 'MO'), -7), '_', date_add(next_day('2019-02-10', 'MO'), -1))

from dws_uv_detail_day

where dt >= date_add(next_day('2019-02-10', 'MO'), -7) and dt <= date_add(next_day('2019-02-10', 'MO'), -1)

group by mid_id;

Query import results

hive (gmall)> select * from dws_uv_detail_wk limit 1; hive (gmall)> select count(*) from dws_uv_detail_wk;

3. Monthly active equipment details DWS_ uv_ detail_ Mn partition (MN) - insert daily data

DWS layer creates partition table partitioned by(mn string)

hive (gmall)>

drop table if exists dws_uv_detail_mn;

create external table dws_uv_detail_mn(

`mid_id` string COMMENT 'Unique identification of equipment',

`user_id` string COMMENT 'User ID',

`version_code` string COMMENT 'Program version number',

`version_name` string COMMENT 'Program version name',

`lang` string COMMENT 'System language',

`source` string COMMENT 'Channel number',

`os` string COMMENT 'Android version',

`area` string COMMENT 'region',

`model` string COMMENT 'Mobile phone model',

`brand` string COMMENT 'Mobile phone brand',

`sdk_version` string COMMENT 'sdkVersion',

`gmail` string COMMENT 'gmail',

`height_width` string COMMENT 'Screen width and height',

`app_time` string COMMENT 'Time when the client log was generated',

`network` string COMMENT 'Network mode',

`lng` string COMMENT 'longitude',

`lat` string COMMENT 'latitude'

) COMMENT 'Monthly details of active users'

PARTITIONED BY (`mn` string)

stored as parquet

location '/warehouse/gmall/dws/dws_uv_detail_mn/'

;

Data import is divided by month; Filter out the data within one month and group it according to the device id;

data_format('2019-03-10', 'yyyy-MM') ---> 2019-03

where date_format('dt', 'yyyy-MM') = date_format('2019-02-10', 'yyyy-MM') group by mid_id;

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dws_uv_detail_mn partition(mn)

select

mid_id,

concat_ws('|', collect_set(user_id)) user_id,

concat_ws('|', collect_set(version_code)) version_code,

concat_ws('|', collect_set(version_name)) version_name,

concat_ws('|', collect_set(lang)) lang,

concat_ws('|', collect_set(source)) source,

concat_ws('|', collect_set(os)) os,

concat_ws('|', collect_set(area)) area,

concat_ws('|', collect_set(model)) model,

concat_ws('|', collect_set(brand)) brand,

concat_ws('|', collect_set(sdk_version)) sdk_version,

concat_ws('|', collect_set(gmail)) gmail,

concat_ws('|', collect_set(height_width)) height_width,

concat_ws('|', collect_set(app_time)) app_time,

concat_ws('|', collect_set(network)) network,

concat_ws('|', collect_set(lng)) lng,

concat_ws('|', collect_set(lat)) lat,

date_format('2019-02-10','yyyy-MM')

from dws_uv_detail_day

where date_format(dt,'yyyy-MM') = date_format('2019-02-10','yyyy-MM')

group by mid_id;

Query import results

hive (gmall)> select * from dws_uv_detail_mn limit 1; hive (gmall)> select count(*) from dws_uv_detail_mn ;

DWS layer loading data script

Create a script in the / home/kris/bin directory of Hadoop 101

[kris@hadoop101 bin]$ vim dws.sh

#!/bin/bash

# Define variables for easy modification

APP=gmall

hive=/opt/module/hive/bin/hive

# If it is the entered date, the entered date will be obtained according to the; If no date is entered, take the day before the current time

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dws_uv_detail_day partition(dt='$do_date')

select

mid_id,

concat_ws('|', collect_set(user_id)) user_id,

concat_ws('|', collect_set(version_code)) version_code,

concat_ws('|', collect_set(version_name)) version_name,

concat_ws('|', collect_set(lang)) lang,

concat_ws('|', collect_set(source)) source,

concat_ws('|', collect_set(os)) os,

concat_ws('|', collect_set(area)) area,

concat_ws('|', collect_set(model)) model,

concat_ws('|', collect_set(brand)) brand,

concat_ws('|', collect_set(sdk_version)) sdk_version,

concat_ws('|', collect_set(gmail)) gmail,

concat_ws('|', collect_set(height_width)) height_width,

concat_ws('|', collect_set(app_time)) app_time,

concat_ws('|', collect_set(network)) network,

concat_ws('|', collect_set(lng)) lng,

concat_ws('|', collect_set(lat)) lat

from "$APP".dwd_start_log

where dt='$do_date'

group by mid_id;

insert overwrite table "$APP".dws_uv_detail_wk partition(wk_dt)

select

mid_id,

concat_ws('|', collect_set(user_id)) user_id,

concat_ws('|', collect_set(version_code)) version_code,

concat_ws('|', collect_set(version_name)) version_name,

concat_ws('|', collect_set(lang)) lang,

concat_ws('|', collect_set(source)) source,

concat_ws('|', collect_set(os)) os,

concat_ws('|', collect_set(area)) area,

concat_ws('|', collect_set(model)) model,

concat_ws('|', collect_set(brand)) brand,

concat_ws('|', collect_set(sdk_version)) sdk_version,

concat_ws('|', collect_set(gmail)) gmail,

concat_ws('|', collect_set(height_width)) height_width,

concat_ws('|', collect_set(app_time)) app_time,

concat_ws('|', collect_set(network)) network,

concat_ws('|', collect_set(lng)) lng,

concat_ws('|', collect_set(lat)) lat,

date_add(next_day('$do_date','MO'),-7),

date_add(next_day('$do_date','SU'),-7),

concat(date_add( next_day('$do_date','MO'),-7), '_' , date_add(next_day('$do_date','MO'),-1)

)

from "$APP".dws_uv_detail_day

where dt>=date_add(next_day('$do_date','MO'),-7) and dt<=date_add(next_day('$do_date','MO'),-1)

group by mid_id;

insert overwrite table "$APP".dws_uv_detail_mn partition(mn)

select

mid_id,

concat_ws('|', collect_set(user_id)) user_id,

concat_ws('|', collect_set(version_code)) version_code,

concat_ws('|', collect_set(version_name)) version_name,

concat_ws('|', collect_set(lang))lang,

concat_ws('|', collect_set(source)) source,

concat_ws('|', collect_set(os)) os,

concat_ws('|', collect_set(area)) area,

concat_ws('|', collect_set(model)) model,

concat_ws('|', collect_set(brand)) brand,

concat_ws('|', collect_set(sdk_version)) sdk_version,

concat_ws('|', collect_set(gmail)) gmail,

concat_ws('|', collect_set(height_width)) height_width,

concat_ws('|', collect_set(app_time)) app_time,

concat_ws('|', collect_set(network)) network,

concat_ws('|', collect_set(lng)) lng,

concat_ws('|', collect_set(lat)) lat,

date_format('$do_date','yyyy-MM')

from "$APP".dws_uv_detail_day

where date_format(dt,'yyyy-MM') = date_format('$do_date','yyyy-MM')

group by mid_id;

"

$hive -e "$sql"

Add script execution permission Chmod 777 DWS sh

Script use[ kris@hadoop101 module]$ dws. sh 2019-02-11

Query results

hive (gmall)> select count(*) from dws_uv_detail_day; hive (gmall)> select count(*) from dws_uv_detail_wk; hive (gmall)> select count(*) from dws_uv_detail_mn ;

Script execution time; In enterprise development, it is usually from 30:00 to 1:00 every morning

Target of ADS layer: the number of active devices in the current day, week and month uses day_count table join wk_count join mn_count, connect the three tables together

Create table ads_uv_count table:

Field has day_count,wk_count,mn_count is_weekend if(date_add(next_day('2019-02-10', 'MO'), -1) = '2019-02-10', 'Y', 'N') is_monthend if(last_day('2019-02-10') = '2019-02-10', 'Y', 'N')

drop table if exists ads_uv_count; create external table ads_uv_count( `dt` string comment 'Statistical date', `day_count` bigint comment 'Daily user volume', `wk_count` bigint comment 'Number of users in the current week', `mn_count` bigint comment 'Number of users in current month', `is_weekend` string comment 'Y,N Is it a weekend,Used to get the final result of this week', `is_monthend` string comment 'Y,N Is it month end,Used to get the final result of this month' ) comment 'Number of active users per day' stored as parquet location '/warehouse/gmall/ads/ads_uv_count/';

Import data:

hive (gmall)>

insert overwrite table ads_uv_count

select

'2019-02-10' dt,

daycount.ct,

wkcount.ct,

mncount.ct,

if(date_add(next_day('2019-02-10','MO'),-1)='2019-02-10','Y','N') ,

if(last_day('2019-02-10')='2019-02-10','Y','N')

from

(

select

'2019-02-10' dt,

count(*) ct

from dws_uv_detail_day

where dt='2019-02-10'

)daycount join

(

select

'2019-02-10' dt,

count (*) ct

from dws_uv_detail_wk

where wk_dt=concat(date_add(next_day('2019-02-10','MO'),-7),'_' ,date_add(next_day('2019-02-10','MO'),-1) )

) wkcount on daycount.dt=wkcount.dt

join

(

select

'2019-02-10' dt,

count (*) ct

from dws_uv_detail_mn

where mn=date_format('2019-02-10','yyyy-MM')

)mncount on daycount.dt=mncount.dt

;

Query import results

hive (gmall)> select * from ads_uv_count ;

ADS layer loading data script

1) Create a script in the / home/kris/bin directory of Hadoop 101

[kris@hadoop101 bin]$ vim ads.sh

#!/bin/bash

# Define variables for easy modification

APP=gmall

hive=/opt/module/hive/bin/hive

# If it is the entered date, the entered date will be obtained according to the; If no date is entered, take the day before the current time

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert into table "$APP".ads_uv_count

select

'$do_date' dt,

daycount.ct,

wkcount.ct,

mncount.ct,

if(date_add(next_day('$do_date','MO'),-1)='$do_date','Y','N') ,

if(last_day('$do_date')='$do_date','Y','N')

from

(

select

'$do_date' dt,

count(*) ct

from "$APP".dws_uv_detail_day

where dt='$do_date'

)daycount join

(

select

'$do_date' dt,

count (*) ct

from "$APP".dws_uv_detail_wk

where wk_dt=concat(date_add(next_day('$do_date','MO'),-7),'_' ,date_add(next_day('$do_date','MO'),-1) )

) wkcount on daycount.dt=wkcount.dt

join

(

select

'$do_date' dt,

count (*) ct

from "$APP".dws_uv_detail_mn

where mn=date_format('$do_date','yyyy-MM')

)mncount on daycount.dt=mncount.dt;

"

$hive -e "$sql"

Add script execution permission chmod 777 ads.sh

The script uses ads.sh 2019-02-11

Query import result hive (GMALL) > select * from ads_ uv_ count ;

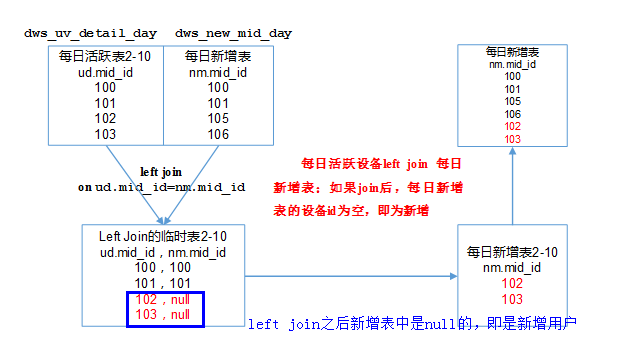

Requirement 2: user added theme

Users who use the application online for the first time. If a user opens an APP for the first time, the user is defined as a new user; Uninstalled and reinstalled devices will not be counted as a new addition. New users include daily new users, weekly new users and monthly new users.

Daily new (not including old users, who have not logged in before, but today is the first time to log in) devices -- there is no partition -- > there is no him in the previous new library, but he is active today, that is, new users;

1 DWS layer (daily newly added equipment list) create daily newly added equipment list: dws_new_mid_day

hive (gmall)>

drop table if exists dws_new_mid_day;

create table dws_new_mid_day

(

`mid_id` string COMMENT 'Unique identification of equipment',

`user_id` string COMMENT 'User ID',

`version_code` string COMMENT 'Program version number',

`version_name` string COMMENT 'Program version name',

`lang` string COMMENT 'System language',

`source` string COMMENT 'Channel number',

`os` string COMMENT 'Android version',

`area` string COMMENT 'region',

`model` string COMMENT 'Mobile phone model',

`brand` string COMMENT 'Mobile phone brand',

`sdk_version` string COMMENT 'sdkVersion',

`gmail` string COMMENT 'gmail',

`height_width` string COMMENT 'Screen width and height',

`app_time` string COMMENT 'Time when the client log was generated',

`network` string COMMENT 'Network mode',

`lng` string COMMENT 'longitude',

`lat` string COMMENT 'latitude',

`create_date` string comment 'Creation time'

) COMMENT 'Daily new equipment information'

stored as parquet

location '/warehouse/gmall/dws/dws_new_mid_day/';

dws_ uv_ detail_ Day (daily active equipment details) left join DWS_ new_ mid_ Day nm (previous new user table, new field create_time2019-02-10) nm mid_ id is null;

Import data

Use the daily active user table left join to add a daily device table. The associated condition is mid_ The IDs are equal. If it is a daily added device, it is null in the daily added device table.

from dws_uv_detail_day ud left join dws_new_mid_day nm on ud.mid_id=nm.mid_id

where ud.dt='2019-02-10' and nm.mid_id is null;

hive (gmall)>

insert into table dws_new_mid_day

select

ud.mid_id,

ud.user_id ,

ud.version_code ,

ud.version_name ,

ud.lang ,

ud.source,

ud.os,

ud.area,

ud.model,

ud.brand,

ud.sdk_version,

ud.gmail,

ud.height_width,

ud.app_time,

ud.network,

ud.lng,

ud.lat,

'2019-02-10'

from dws_uv_detail_day ud left join dws_new_mid_day nm on ud.mid_id=nm.mid_id

where ud.dt='2019-02-10' and nm.mid_id is null;

Query import data

hive (gmall)> select count(*) from dws_new_mid_day ;

2. Ads layer (daily newly added equipment table) creates daily newly added equipment table ads_new_mid_count

hive (gmall)>

drop table if exists `ads_new_mid_count`;

create table `ads_new_mid_count`

(

`create_date` string comment 'Creation time' ,

`new_mid_count` BIGINT comment 'Number of new equipment'

) COMMENT 'Number of new equipment information per day'

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_new_mid_count/';

Import data count (*) DWS_ new_ mid_ Just use the day table

Add create_date must be group by create_time, otherwise an error is reported: not in GROUP BY key 'create_date'

hive (gmall)> insert into table ads_new_mid_count select create_date , count(*) from dws_new_mid_day where create_date='2019-02-10' group by create_date ;

Query import data

hive (gmall)> select * from ads_new_mid_count;

Extended monthly additions:

--New monthly

drop table if exists dws_new_mid_mn;

create table dws_new_mid_mn(

`mid_id` string COMMENT 'Unique identification of equipment',

`user_id` string COMMENT 'User ID',

`version_code` string COMMENT 'Program version number',

`version_name` string COMMENT 'Program version name',

`lang` string COMMENT 'System language',

`source` string COMMENT 'Channel number',

`os` string COMMENT 'Android version',

`area` string COMMENT 'region',

`model` string COMMENT 'Mobile phone model',

`brand` string COMMENT 'Mobile phone brand',

`sdk_version` string COMMENT 'sdkVersion',

`gmail` string COMMENT 'gmail',

`height_width` string COMMENT 'Screen width and height',

`app_time` string COMMENT 'Time when the client log was generated',

`network` string COMMENT 'Network mode',

`lng` string COMMENT 'longitude',

`lat` string COMMENT 'latitude'

)comment "Monthly new details"

partitioned by(mn string)

stored as parquet

location "/warehouse/gmall/dws/dws_new_mid_mn";

insert overwrite table dws_new_mid_mn partition(mn)

select

um.mid_id,

um.user_id ,

um.version_code ,

um.version_name ,

um.lang ,

um.source,

um.os,

um.area,

um.model,

um.brand,

um.sdk_version,

um.gmail,

um.height_width,

um.app_time,

um.network,

um.lng,

um.lat,

date_format('2019-02-10', 'yyyy-MM')

from dws_uv_detail_mn um left join dws_new_mid_mn nm on um.mid_id = nm.mid_id

where um.mn =date_format('2019-02-10', 'yyyy-MM') and nm.mid_id = null; ----Why is it empty?? I can't find the data

--##Note that date cannot be written here_ format(um.mn, 'yyyy-MM') =date_ format('2019-02-10', 'yyyy-MM')

|

Update to requirement 2 first, and I will continue to update the subsequent requirements...... Coming soon!!!!!