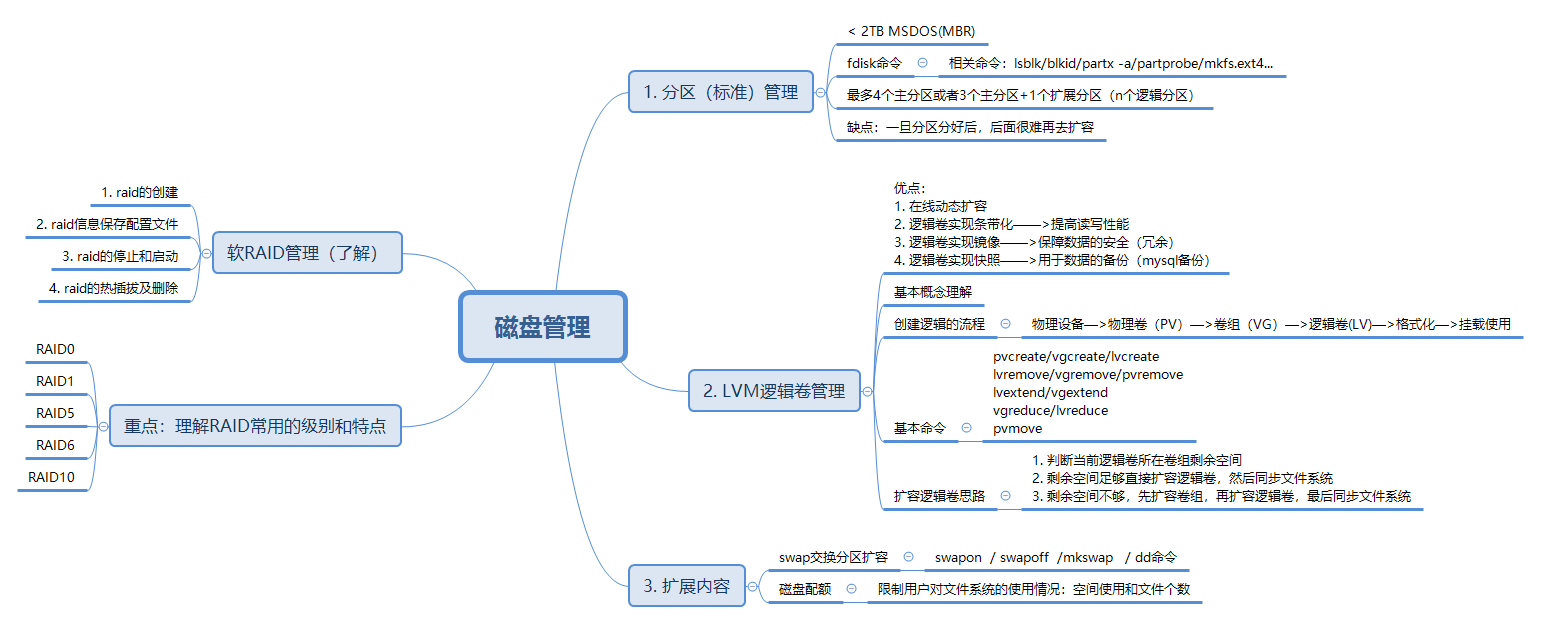

1, RAID introduction

RAID (Redundant Array of Independent Disks) technology was proposed at the University of California, Berkeley in 1987. It was originally developed to combine small, cheap disks as a replacement for large, expensive ones, with the goal that data would remain accessible when a disk fails. A RAID is a redundant array made up of multiple inexpensive disks that appears to the operating system as a single large storage device. By using several hard disks together, RAID can improve speed, increase capacity, provide fault tolerance, and simplify management. With a redundant RAID level, the array keeps working when a disk fails, up to the number of failures that level tolerates.

2, Common RAID levels

1. RAID0

RAID0 features:

- At least two disks are required

- Data is striped across the disks, giving high read/write performance and 100% storage space utilization

- There is no redundancy policy for data. If a disk fails, the data cannot be recovered

- Application scenario:

- Scenarios with high performance requirements but low requirements for data security and reliability, such as audio and video storage.
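To make striping concrete, here is a minimal sketch that maps logical chunk numbers onto member disks in round-robin order. It is a simplified model for equal-sized disks, not the md driver's actual implementation, and the function name `stripe_location` is hypothetical.

```shell
#!/bin/sh
# Simplified model of RAID0 striping: logical chunk i is stored on
# disk (i mod n) at stripe row (i div n). Names are illustrative only.
stripe_location() {
    chunk=$1      # logical chunk number, starting at 0
    ndisks=$2     # number of member disks
    echo "disk=$((chunk % ndisks)) row=$((chunk / ndisks))"
}

# Show where the first six chunks land on a two-disk array.
i=0
while [ "$i" -lt 6 ]; do
    echo "chunk $i -> $(stripe_location "$i" 2)"
    i=$((i + 1))
done
```

Because consecutive chunks land on different disks, a large sequential read or write can keep all members busy at once, which is where the performance gain comes from.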

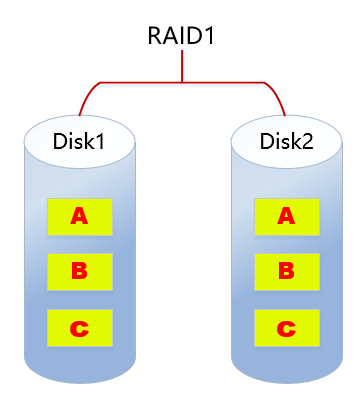

2. RAID1

RAID1 features:

- At least 2 disks are required

- Data is written to both disks as a mirror (working disk and mirror disk), giving high reliability and 50% disk utilization

- Read performance is acceptable, but write performance is lower because every write goes to both disks

- A disk failure will not affect data reading and writing

- Application scenario:

- Scenarios with high requirements for data security and reliability, such as e-mail systems and trading systems.

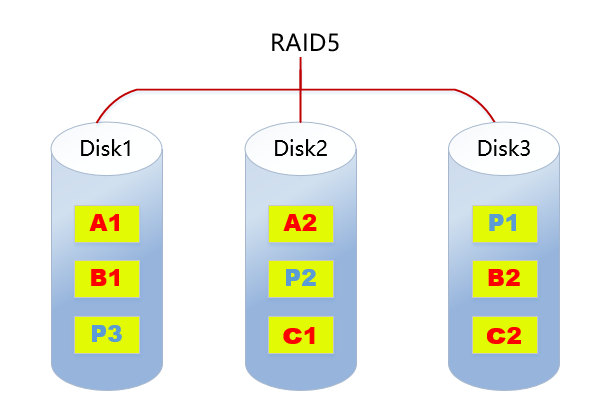

3. RAID5

RAID5 features:

- At least 3 disks are required

- Data is striped across the disks, giving good read/write performance and a disk utilization of (n-1)/n

- Redundancy comes from parity data, which is distributed across the disks

- If one disk fails, the lost data can be reconstructed from the remaining data blocks and the corresponding parity data (at a performance cost)

- It is widely regarded as the data protection scheme with the best overall balance

- It balances storage performance, data security, storage cost and other factors (good value for money)

- It is applicable to most application scenarios
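The parity-based reconstruction described above can be illustrated with XOR on single byte values. This is a toy sketch that assumes one data byte per disk; real RAID5 works on whole chunks and rotates the parity position across disks. The helper name `xor_bytes` is hypothetical.

```shell
#!/bin/sh
# Toy RAID5-style parity on single byte values (0-255).
# parity = d1 XOR d2; a lost block is the XOR of the survivors.
xor_bytes() {
    result=0
    for value in "$@"; do
        result=$((result ^ value))
    done
    echo "$result"
}

d1=165   # data block on disk 1 (0xA5)
d2=60    # data block on disk 2 (0x3C)
parity=$(xor_bytes "$d1" "$d2")   # parity block, stored on disk 3

# Disk 1 fails: rebuild its block from the surviving data and the parity.
rebuilt=$(xor_bytes "$d2" "$parity")
echo "parity=$parity rebuilt_d1=$rebuilt"
```

The rebuilt value equals the original `d1`, which is why a RAID5 array survives the loss of any single member.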

4. RAID6

RAID6 features:

- At least 4 disks are required

- Data is striped across the disks, giving good read performance and strong fault tolerance

- Dual parity is used to protect the data

- If two disks fail at the same time, their data can be reconstructed from the two sets of parity data

- It costs more and is more complex than the other levels

- It is generally used where data security requirements are very high

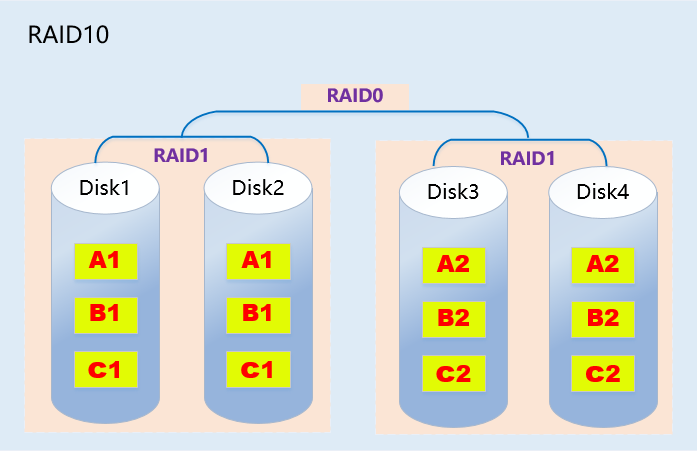

5. RAID10

RAID10 features:

- RAID10 is a combination of RAID1 and RAID0

- At least 4 disks are required

- The disks are grouped in pairs. Each pair is first built as a RAID1 mirror, and the mirrored pairs are then striped together as RAID0

- It provides both data redundancy (RAID1 mirroring) and read/write performance (RAID0 striping)

- Disk utilization is 50% and the cost is high

6. Summary

| Type | Read/write performance | Reliability | Disk utilization | Cost |
|---|---|---|---|---|
| RAID0 | Best | Lowest | 100% | Low |
| RAID1 | Read: normal; write: two copies of the data are written | High | 50% | High |
| RAID5 | Read: close to RAID0; write: slower because of parity calculation | RAID0 < RAID5 < RAID1 | (n-1)/n | RAID0 < RAID5 < RAID1 |
| RAID6 | Read: close to RAID0; write: slower because of dual parity | RAID6 > RAID5 | (n-2)/n (RAID6 < RAID5) | RAID6 > RAID1 |
| RAID10 | Read: same as RAID0; write: same as RAID1 | High | 50% | Highest |
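The utilization column can be turned into a small calculator. The sketch below assumes n equal-sized disks and integer sizes, and `usable_capacity` is a hypothetical helper name. It treats RAID1 as a mirror that yields one disk's worth of space and RAID10 as mirrored pairs.

```shell
#!/bin/sh
# Usable capacity for n equal-sized disks, following the summary table.
# Usage: usable_capacity LEVEL NDISKS DISK_SIZE   (size in any unit, integer)
usable_capacity() {
    level=$1; n=$2; size=$3
    case "$level" in
        0)  echo $(( n * size )) ;;          # striping only: 100%
        1)  echo "$size" ;;                  # mirror: one disk's worth
        5)  echo $(( (n - 1) * size )) ;;    # one disk's worth of parity
        6)  echo $(( (n - 2) * size )) ;;    # two disks' worth of parity
        10) echo $(( n / 2 * size )) ;;      # mirrored pairs, then striped: 50%
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

usable_capacity 5 3 1    # three 1 GB members in RAID5
```

For three 1 GB members in RAID5 this gives 2 GB, which matches the `df -h` output for `/dev/md5` later in this article.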

3, Hard and soft RAID

1. Soft RAID

Soft RAID runs in the lower layers of the operating system. It combines the physical disks presented by the SCSI or IDE controller into a virtual disk, which is then handed to the management program. Soft RAID has the following characteristics:

- It consumes memory

- It consumes CPU resources

- It cannot run if the RAID software or the operating system fails

**Summary:** because of these drawbacks, enterprises rarely use soft RAID.

2. Hard RAID

With hard RAID, the RAID function is implemented in hardware. Both standalone RAID cards and RAID chips integrated on the motherboard count as hard RAID. A RAID card is an expansion board that implements the RAID function. It is usually made up of components such as an I/O processor, a hard disk controller, hard disk connectors and cache. Different RAID cards support different RAID levels, for example RAID0, RAID1, RAID4, RAID5 and RAID10.

4, Creating soft RAID

0. Environment preparation

Add a 20 GB virtual hard disk (/dev/sdc in the examples below) and divide it into 10 partitions.

1. Creating RAID

Create RAID0

Install the mdadm tool:

yum -y install mdadm

Create the RAID0 array:

[root@server ~]# mdadm --create /dev/md0 --raid-devices=2 /dev/sdc1 /dev/sdc2 --level=0

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

Or, using the short options:

[root@server ~]# mdadm -C /dev/md0 -l 0 -n 2 /dev/sdc1 /dev/sdc2

-C: create a soft RAID array

-l: specify the RAID level

-n: specify the number of devices in the array

View RAID information:

/proc/mdstat records the status of all RAID arrays

[root@server ~]# cat /proc/mdstat

Personalities : [raid0]

md0 : active raid0 sdc2[1] sdc1[0]

2119680 blocks super 1.2 512k chunks

unused devices: <none>

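View details of a specific array:

[root@server ~]# mdadm -D /dev/md0

Format and mount:

[root@server ~]# mkfs.ext4 /dev/md0

[root@server ~]# mount /dev/md0 /u01

Test (run iostat in one terminal to watch throughput on both members while dd writes a 1 GiB file in another):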

[root@server ~]# iostat -m -d /dev/sdc[12] 2

[root@server ~]# dd if=/dev/zero of=/u01/file bs=1M count=1024

Create RAID1

Create the RAID1 array:

[root@server ~]# mdadm -C /dev/md1 -l1 -n 2 /dev/sdc[56]

View status information:

[root@server ~]# watch -n 1 cat /proc/mdstat    # watch reruns the command and refreshes the output once per second

Or view it directly:

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc6[1] sdc5[0]

1059200 blocks super 1.2 [2/2] [UU]

[=====>...............] resync = 25.2% (267520/1059200) finish=0.3min speed=38217K/sec

(The progress line above shows that the two disks are still synchronizing; synchronization is complete when it reaches 100%.)

md0 : active raid0 sdc2[1] sdc1[0]

2119680 blocks super 1.2 512k chunks

unused devices: <none>

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc6[1] sdc5[0]

1059200 blocks super 1.2 [2/2] [UU]    # [UU] means both disks are healthy; a failed disk is shown as _, for example [_U]

md0 : active raid0 sdc2[1] sdc1[0]

2119680 blocks super 1.2 512k chunks

unused devices: <none>
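Since a `_` in the status field signals a missing or failed member, the check can be scripted. The following is a minimal sketch with a hypothetical helper name, `degraded_arrays`. It reads text in /proc/mdstat format on standard input, and the embedded sample is modeled on a degraded RAID1 array so that the example runs without real md devices.

```shell
#!/bin/sh
# List md arrays whose status field (e.g. [_U]) contains "_",
# i.e. arrays running with a missing or failed member.
# Reads /proc/mdstat-formatted text on standard input.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                  # remember the current array
        $NF ~ /^\[[U_]*_[U_]*\]$/ { print name }     # status field with a gap
    '
}

# Sample input modeled on a degraded RAID1 array.
# On a real system: degraded_arrays < /proc/mdstat
sample='Personalities : [raid0] [raid1]
md1 : active raid1 sdc6[1] sdc5[0](F)
      1059200 blocks super 1.2 [2/1] [_U]

md0 : active raid0 sdc2[1] sdc1[0]
      2119680 blocks super 1.2 512k chunks

unused devices: <none>'

printf '%s\n' "$sample" | degraded_arrays
```

RAID0 arrays never match because their status line carries no `[UU]`-style field: they have no redundancy to degrade.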

View RAID1 details:

[root@server ~]# mdadm -D /dev/md1

Format and mount:

[root@server ~]# mkfs.ext4 /dev/md1

[root@server ~]# mount /dev/md1 /u02

Test and verify hot swapping:

1. Simulate a disk failure (mark the disk as failed)

[root@server ~]# mdadm /dev/md1 -f /dev/sdc5

mdadm: set /dev/sdc5 faulty in /dev/md1

-f or --fail: mark the device as failed

2. View the RAID1 state

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc6[1] sdc5[0](F)    # (F) marks the failed device

1059200 blocks super 1.2 [2/1] [_U]    # _ indicates that a disk has failed

md0 : active raid0 sdc2[1] sdc1[0]

2119680 blocks super 1.2 512k chunks

unused devices: <none>

[root@server ~]# mdadm -D /dev/md1

. . .

Number Major Minor RaidDevice State

0 0 0 0 removed

1 8 38 1 active sync /dev/sdc6

0 8 37 - faulty /dev/sdc5    # failed disk waiting to be removed

3. Remove the failed disk (hot remove)

[root@server ~]# mdadm /dev/md1 -r /dev/sdc5

mdadm: hot removed /dev/sdc5 from /dev/md1

-r or --remove: remove the device from the array

[root@server ~]# mdadm -D /dev/md1

. . .

Number Major Minor RaidDevice State

0 0 0 0 removed

1 8 38 1 active sync /dev/sdc6

4. Add a new disk to the RAID1 array (hot add)

[root@server ~]# mdadm /dev/md1 -a /dev/sdc5

mdadm: added /dev/sdc5

-a or --add: add a device to the array

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc5[2] sdc6[1]

1059200 blocks super 1.2 [2/1] [_U]

[====>................] recovery = 21.4% (227392/1059200) finish=0.0min speed=227392K/sec

md0 : active raid0 sdc2[1] sdc1[0]

2119680 blocks super 1.2 512k chunks

unused devices: <none>
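The fail, remove and add steps above can be collected into one helper. This is only a sketch: `replace_member` and `run` are hypothetical names, and the script defaults to a dry run that prints the mdadm commands instead of executing them, so it can be tried safely on a machine without md devices.

```shell
#!/bin/sh
# Replace a failed member: mark it failed, remove it, add the replacement.
# With DRY_RUN=1 (the default here) the commands are only printed.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: replace_member ARRAY OLD_DEVICE NEW_DEVICE   (hypothetical helper)
replace_member() {
    run mdadm "$1" --fail "$2" &&
    run mdadm "$1" --remove "$2" &&
    run mdadm "$1" --add "$3"
}

replace_member /dev/md1 /dev/sdc5 /dev/sdc5
```

Setting `DRY_RUN=0` would run the commands for real. Chaining them with `&&` stops the sequence as soon as one step fails.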

Create RAID5

Create the RAID5 array:

[root@server ~]# mdadm -C /dev/md5 -l 5 -n 3 -x 1 /dev/sdc{7,8,9,10}

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

-x or --spare-devices=: specify the number of hot spare devices

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3](S) sdc8[1] sdc7[0]

2117632 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]

[=====>...............] recovery = 29.9% (317652/1058816) finish=0.3min speed=39706K/sec

Wait until synchronization is complete, then check again:

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3](S) sdc8[1] sdc7[0]    # (S) marks the hot spare

2117632 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

Note: a hot spare is a standby disk. When one of the active disks fails, the hot spare takes its place immediately, without manual intervention.

[root@server ~]# mdadm -D /dev/md5    # view details

. . .

Number Major Minor RaidDevice State

0 8 39 0 active sync /dev/sdc7

1 8 40 1 active sync /dev/sdc8

4 8 41 2 active sync /dev/sdc9

3 8 42 - spare /dev/sdc10

Format and mount:

[root@server ~]# mkfs.ext4 /dev/md5

[root@server ~]# mount /dev/md5 /u03

View space usage:

[root@server ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg01-lv_root 18G 5.9G 11G 36% /

tmpfs 931M 0 931M 0% /dev/shm

/dev/sda1 291M 33M 244M 12% /boot

/dev/sr0 4.2G 4.2G 0 100% /mnt

/dev/md0 2.0G 1.1G 843M 57% /u01    # utilization 100%

/dev/md1 1019M 34M 934M 4% /u02    # utilization 50%

/dev/md5 2.0G 68M 1.9G 4% /u03    # utilization (n-1)/n, that is (3-1)/3 × 3 GB = 2 GB

Test the hot spare:

1. Mark an active disk as failed

[root@server ~]# mdadm /dev/md5 -f /dev/sdc7

mdadm: set /dev/sdc7 faulty in /dev/md5

View status now:

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3] sdc8[1] sdc7[0](F)

2117632 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[==>..................] recovery = 13.0% (138052/1058816) finish=0.4min speed=34513K/sec

Note: after sdc7 is marked as failed (F), the former hot spare sdc10 (S) immediately takes over and starts synchronizing data.

...

unused devices: <none>

[root@server ~]# mdadm -D /dev/md5

...

Number Major Minor RaidDevice State

3 8 42 0 active sync /dev/sdc10

1 8 40 1 active sync /dev/sdc8

4 8 41 2 active sync /dev/sdc9

0 8 39 - faulty /dev/sdc7

2. Remove the failed disk

[root@server ~]# mdadm /dev/md5 -r /dev/sdc7

mdadm: hot removed /dev/sdc7 from /dev/md5

[root@server ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3] sdc8[1]

2117632 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

3. To stay protected against future failures, add another hot spare to the RAID5 array

[root@server ~]# mdadm /dev/md5 -a /dev/sdc7

mdadm: added /dev/sdc7

Class assignments:

1. Create a RAID5 array consisting of at least 3 disk devices

2. Simulate the failure of one device and check whether the RAID5 array can still be used normally

2. Save RAID information

Q: Why save the RAID information?

A: If the information is not saved, the RAID arrays are not recognized automatically after a reboot (in testing on RHEL 6, the device names changed after a restart).

1. Create the configuration file

[root@server ~]# vim /etc/mdadm.conf

DEVICES /dev/sdc[1256789]

Note: the configuration file does not exist by default and must be created manually. The DEVICES line lists all the disk devices that are members of RAID arrays.

2. Scan the RAID information and append it to the configuration file

[root@server ~]# mdadm -D --scan >> /etc/mdadm.conf

[root@server ~]# cat /etc/mdadm.conf

DEVICES /dev/sdc[1256789]

ARRAY /dev/md0 metadata=1.2 name=web.itcast.cc:0 UUID=3379fd89:1e427f0b:63b0c984:1615eee0

ARRAY /dev/md1 metadata=1.2 name=server:1 UUID=e3547ea1:cca35c51:11894931:e90525d7

ARRAY /dev/md5 metadata=1.2 name=server:5 UUID=18a60636:71c82e50:724a2a8f:e763fdcf

3. Stopping and starting RAID

Using RAID5 as an example:

Stop the RAID array:

1. Unmount the RAID device

[root@server ~]# umount /u03

2. Stop the array with mdadm

[root@server ~]# mdadm --stop /dev/md5

mdadm: stopped /dev/md5

Start the RAID array:

1. When the configuration file (/etc/mdadm.conf) exists, start the array as follows

[root@server ~]# mdadm -A /dev/md5

mdadm: /dev/md5 has been started with 3 drives.

-A or --assemble: assemble (load) a pre-existing array

2. When the configuration file (/etc/mdadm.conf) does not exist, list the member devices explicitly

[root@server ~]# mdadm -A /dev/md5 /dev/sdc[789]

mdadm: /dev/md5 has been started with 3 drives.

3. If you do not know the member device names, examine the RAID metadata on each device and reassemble the array by UUID

[root@server ~]# mdadm -E /dev/sdc7

/dev/sdc7:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : 18a60636:71c82e50:724a2a8f:e763fdcf

Note: every disk in the same RAID array reports the same Array UUID.

. . .

[root@server ~]# mdadm -E /dev/sdc8

/dev/sdc8:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : 18a60636:71c82e50:724a2a8f:e763fdcf

Once the UUID has been found as shown above, reassemble the array:

[root@server ~]# mdadm -A --uuid=18a60636:71c82e50:724a2a8f:e763fdcf /dev/md5

mdadm: /dev/md5 has been started with 3 drives.
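Looking up the UUID by eye can be replaced with a small filter. The sketch below uses a hypothetical helper, `array_uuid`, which pulls the `Array UUID` field out of `mdadm -E` output. The sample text is abridged from the output shown above so that the example runs without real devices, and the final line only prints the assemble command rather than running it.

```shell
#!/bin/sh
# Extract the "Array UUID" value from `mdadm -E` output read on stdin.
array_uuid() {
    awk -F' : ' '/Array UUID/ { print $2; exit }'
}

# Abridged sample of `mdadm -E /dev/sdc7` output.
# On a real system: uuid=$(mdadm -E /dev/sdc7 | array_uuid)
sample='/dev/sdc7:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : 18a60636:71c82e50:724a2a8f:e763fdcf'

uuid=$(printf '%s\n' "$sample" | array_uuid)

# Print (rather than run) the matching assemble command.
echo "mdadm -A --uuid=$uuid /dev/md5"
```

Running the same filter against every candidate partition and comparing the results is one way to find which devices belong to the same array.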

4. Deleting RAID

1. Unmount the device

[root@server ~]# umount /u03

2. Remove all disks

[root@server ~]# mdadm /dev/md5 -f /dev/sdc[789]

mdadm: set /dev/sdc7 faulty in /dev/md5

mdadm: set /dev/sdc8 faulty in /dev/md5

mdadm: set /dev/sdc9 faulty in /dev/md5

[root@server ~]# mdadm /dev/md5 -r /dev/sdc[789]

mdadm: hot removed /dev/sdc7 from /dev/md5

mdadm: hot removed /dev/sdc8 from /dev/md5

mdadm: hot removed /dev/sdc9 from /dev/md5

3. Stop the RAID array

[root@server ~]# mdadm --stop /dev/md5

mdadm: stopped /dev/md5

4. Wipe the superblock to clear the RAID metadata

[root@server ~]# mdadm --misc --zero-superblock /dev/sdc[789]

5. After class practice

Create RAID10 and do relevant test verification.

5, Disk management summary