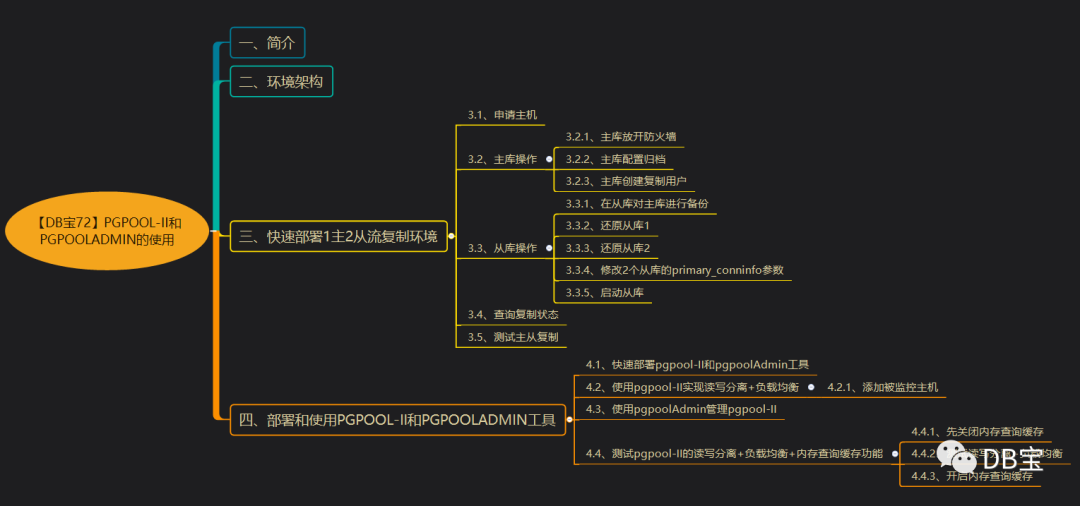

catalogue

1, Introduction

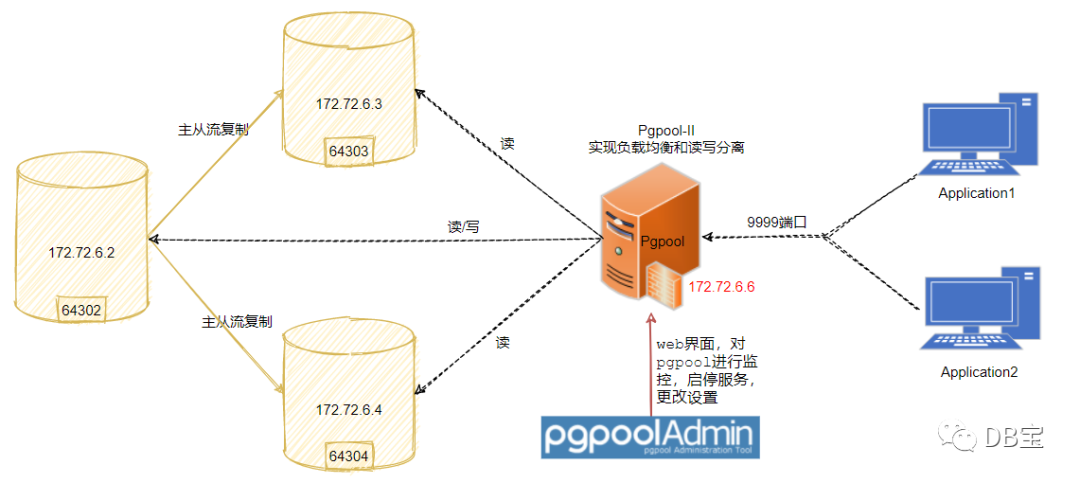

2, Environment architecture

3, Rapid deployment of 1master 2sslave stream replication environment

3.1,Application host

3.2,Main library operation

3.2.1,Release firewall of main database

3.2.2,Master database configuration Archive

3.2.3,Master library create replication user

3.3,Operation from library

3.3.1,Backup the master library from the slave library

3.3.2,Restore from library 1

3.3.3,Restore from library 2

3.3.4,Modify 2 slave Libraries primary_conninfo parameter

3.3.5,Start slave Library

3.4,Query replication status

3.5,Test master-slave replication

4, Deployment and use pgpool-II and pgpoolAdmin tool

4.1,Rapid deployment pgpool-II and pgpoolAdmin tool

4.2,use pgpool-II Realize read-write separation+load balancing

4.2.1,Add monitored host

4.3,use pgpoolAdmin Administration pgpool-II

4.4,test pgpool-II Read write separation+load balancing +Memory query cache function

4.4.1,Close the memory query cache first

4.4.2,Test read write separation+load balancing

4.4.3,Enable memory query cache

1, Introduction

Previously published 2 articles on master-slave stream replication in PG:

- [DB treasure 60] PG12 highly available 1 master 2 slave stream replication environment setup and handover test

- [DB treasure 61] PostgreSQL uses pgpool II to realize read-write separation + load balancing

Related documents about pgpool II:

- pgpool-II 3.2.5 manual

- pgpool-II-3.2.5 Getting Started tutorial

However, the previous article did not introduce the knowledge about pgpoolAdmin. This web interface tool developed for pgpool is still practical.

The pgpool project also provides a Web management tool written in PHP, called "pgpoolAdmin", which can realize the configuration of pgpool-II in the form of Web interface.

pgpoolAdmin Download: https://pgpool.net/mediawiki/index.php/Downloads

Pgpooladmin official document: https://www.pgpool.net/docs/pgpoolAdmin/index_en.html

The pgpool Administration Tool is management tool of pgpool. It is possible to monitor, start, stop pgpool and change setting for pgpool.

PgpoolAdmin is a WEB interface tool for managing pgpool. It can monitor pgpool, start and stop services and change settings.

Let's start with a picture:

Today, let's introduce the use of pgpool-II and pgpoolAdmin.

2, Environment architecture

👉 be careful: 192.168 in this environment 66.35 or 192.168 1.35 refers to the same host. All environments in the above figure are installed in the host.

3, Rapid deployment of 1master 2sslave stream replication environment

3.1 application host

-- Pull image docker pull postgres:12 -- Create a private network docker network create --subnet=172.72.6.0/24 pg-network -- Create host dependent mapping path mkdir -p /docker_data/pg/lhrpg64302/data mkdir -p /docker_data/pg/lhrpg64303/data mkdir -p /docker_data/pg/lhrpg64304/data -- Main library docker rm -f lhrpg64302 rm -rf /docker_data/pg/lhrpg64302/data docker run -d --name lhrpg64302 -h lhrpg64302 \ -p 64302:5432 --net=pg-network --ip 172.72.6.2 \ -v /docker_data/pg/lhrpg64302/data:/var/lib/postgresql/data \ -v /docker_data/pg/lhrpg64302/bk:/bk \ -e POSTGRES_PASSWORD=lhr \ -e TZ=Asia/Shanghai \ postgres:12 -- From library 1 docker rm -f lhrpg64303 rm -rf /docker_data/pg/lhrpg64303/data rm -rf /docker_data/pg/lhrpg64303/bk docker run -d --name lhrpg64303 -h lhrpg64303 \ -p 64303:5432 --net=pg-network --ip 172.72.6.3 \ -v /docker_data/pg/lhrpg64303/data:/var/lib/postgresql/data \ -v /docker_data/pg/lhrpg64303/bk:/bk \ -e POSTGRES_PASSWORD=lhr \ -e TZ=Asia/Shanghai \ postgres:12 -- From library 2 docker rm -f lhrpg64304 rm -rf /docker_data/pg/lhrpg64304/data rm -rf /docker_data/pg/lhrpg64304/bk docker run -d --name lhrpg64304 -h lhrpg64304 \ -p 64304:5432 --net=pg-network --ip 172.72.6.4 \ -v /docker_data/pg/lhrpg64304/data:/var/lib/postgresql/data \ -v /docker_data/pg/lhrpg64304/bk:/bk \ -e POSTGRES_PASSWORD=lhr \ -e TZ=Asia/Shanghai \ postgres:12 -- Remote login psql -U postgres -h 192.168.66.35 -p 64302 psql -U postgres -h 192.168.66.35 -p 64303 psql -U postgres -h 192.168.66.35 -p 64304

3.2. Main warehouse operation

3.2. 1. Release firewall of main database

cat << EOF > /docker_data/pg/lhrpg64302/data/pg_hba.conf # TYPE DATABASE USER ADDRESS METHOD local all all trust host all all 127.0.0.1/32 trust host all all 0.0.0.0/0 md5 host replication all 0.0.0.0/0 md5 EOF

👉 Note adding replication

3.2. 2. Master database configuration Archive

docker exec -it lhrpg64302 bash

-- The path also needs to be created from the library

mkdir -p /postgresql/archive

chown -R postgres.postgres /postgresql/archive

cat >> /var/lib/postgresql/data/postgresql.conf <<"EOF"

wal_level='replica'

archive_mode='on'

archive_command='test ! -f /postgresql/archive/%f && cp %p /postgresql/archive/%f'

restore_command='cp /postgresql/archive/%f %p'

max_wal_senders=10

wal_keep_segments=256

wal_sender_timeout=60s

EOF

-- restart

docker restart lhrpg64302

-- Or:

/usr/lib/postgresql/12/bin/pg_ctl restart -D /var/lib/postgresql/data/

psql -U postgres -h 192.168.66.35 -p 64302

select * from pg_settings where name in ('wal_level','archive_mode','archive_command');

-- Switch Archive

select pg_switch_wal();

Execution results:

postgres=# select * from pg_settings where name in ('wal_level','archive_mode','archive_command');

name | setting | unit | category | short_desc | extra_desc | context | vartype | source | min_val | max_val | enumvals | boot_val | reset_val | sourcefile | sourceline | pending_restart

-----------------+------------------------------------------------------------------+------+-----------------------------+-------------------------------------------------------------------+------------+------------+---------+--------------------+---------+---------+---------------------------+----------+------------------------------------------------------------------+------------------------------------------+------------+-----------------

archive_command | test ! -f /postgresql/archive/%f && cp %p /postgresql/archive/%f | | Write-Ahead Log / Archiving | Sets the shell command that will be called to archive a WAL file. | | sighup | string | configuration file | | | | | test ! -f /postgresql/archive/%f && cp %p /postgresql/archive/%f | /var/lib/postgresql/data/postgresql.conf | 753 | f

archive_mode | on | | Write-Ahead Log / Archiving | Allows archiving of WAL files using archive_command. | | postmaster | enum | configuration file | | | {always,on,off} | off | on | /var/lib/postgresql/data/postgresql.conf | 752 | f

wal_level | replica | | Write-Ahead Log / Settings | Set the level of information written to the WAL. | | postmaster | enum | configuration file | | | {minimal,replica,logical} | replica | replica | /var/lib/postgresql/data/postgresql.conf | 751 | f

(3 rows)

postgres=# select * from pg_stat_get_archiver();

-[ RECORD 1 ]------+-----------------------------------------

archived_count | 8

last_archived_wal | 000000010000000000000006.00000028.backup

last_archived_time | 2021-04-22 11:42:54.049649+00

failed_count | 0

last_failed_wal |

last_failed_time |

stats_reset | 2021-04-22 11:35:55.727069+00

postgres=# select pg_switch_wal();

-[ RECORD 1 ]-+----------

pg_switch_wal | 0/7015058

postgres=# select * from pg_stat_get_archiver();

-[ RECORD 1 ]------+------------------------------

archived_count | 9

last_archived_wal | 000000010000000000000007

last_archived_time | 2021-04-23 01:00:30.076916+00

failed_count | 0

last_failed_wal |

last_failed_time |

stats_reset | 2021-04-22 11:35:55.727069+00

-- Before switching Archive

root@lhrpg64302:/# ps -ef|grep post

postgres 1 0 0 01:28 ? 00:00:00 postgres

postgres 26 1 0 01:28 ? 00:00:00 postgres: checkpointer

postgres 27 1 0 01:28 ? 00:00:00 postgres: background writer

postgres 28 1 0 01:28 ? 00:00:00 postgres: walwriter

postgres 29 1 0 01:28 ? 00:00:00 postgres: autovacuum launcher

postgres 30 1 0 01:28 ? 00:00:00 postgres: archiver

postgres 31 1 0 01:28 ? 00:00:00 postgres: stats collector

postgres 32 1 0 01:28 ? 00:00:00 postgres: logical replication launcher

postgres 33 1 0 01:29 ? 00:00:00 postgres: postgres postgres 172.72.6.1(6884) idle

root 40 34 0 01:29 pts/0 00:00:00 grep post

root@lhrpg64302:/# cd /postgresql/archive/

root@lhrpg64302:/postgresql/archive# ls -l

total 0

-- Switch Archive

postgres=# select pg_switch_wal();

pg_switch_wal

---------------

0/1645528

(1 row)

-- After switching archiving

root@lhrpg64302:/postgresql/archive# ls -l

total 16384

-rw------- 1 postgres postgres 16777216 Apr 23 01:30 000000010000000000000001

root@lhrpg64302:/postgresql/archive# ps -ef|grep post

postgres 1 0 0 01:28 ? 00:00:00 postgres

postgres 26 1 0 01:28 ? 00:00:00 postgres: checkpointer

postgres 27 1 0 01:28 ? 00:00:00 postgres: background writer

postgres 28 1 0 01:28 ? 00:00:00 postgres: walwriter

postgres 29 1 0 01:28 ? 00:00:00 postgres: autovacuum launcher

postgres 30 1 0 01:28 ? 00:00:00 postgres: archiver last was 000000010000000000000001

postgres 31 1 0 01:28 ? 00:00:00 postgres: stats collector

postgres 32 1 0 01:28 ? 00:00:00 postgres: logical replication launcher

postgres 33 1 0 01:29 ? 00:00:00 postgres: postgres postgres 172.72.6.1(6884) idle

root 47 34 0 01:30 pts/0 00:00:00 grep post

- Parameter Max_ wal_ Introduction to senders:

Specifies the maximum number of concurrent connections from standby servers or streaming base backup clients (i.e., the maximum number of simultaneously running WAL sender processes). The default is zero, meaning replication is disabled. WAL sender processes count towards the total number of connections, so the parameter cannot be set higher than max_connections. This parameter can only be set at server start. wal_level must be set to archive or hot_standby to allow connections from standby servers.

In other words, this parameter is set on the host, which is the sum of the number of concurrent connections from the host to the host, so this parameter is a positive integer. The default value is 0, that is, there is no stream replication function by default. From the process point of view, the number of concurrent connections is the sum of the number of wal sender processes, which can be viewed through ps -ef|grep senders. Therefore, the value cannot exceed the maximum number of connections of the system (max_connections, the BUG was repaired in 9.1.5), which can exceed the actual number of stream replication users. This parameter change requires restarting the DB. For example, I only have one slave:

[postgres@ndb2 database]$ ps -ef|grep sender postgres 21257 21247 0 20:57 ? 00:00:00 postgres: wal sender process repuser 192.25.10.71(46161) streaming 0/4018ED8 postgres 22193 20949 0 23:02 pts/0 00:00:00 grep sender

- Parameter wal_keep_segments=256 introduction

Indicates how many WAL files are retained. If the source database is busy, you should increase this value accordingly.

In PG13, wal_keep_segments has been canceled. Use wal instead_ keep_ size

- Parameter wal_sender_timeout=60s introduction

Disconnect replication connections that are inactive for more than a specified number of milliseconds. This is useful for the sending server to detect a backup crash or network outage. Setting to 0 disables the timeout mechanism. This parameter can only be used in PostgreSQL Conf file or on the server command line. The default value is 60 seconds.

3.2. 3. Master library create replication user

create role replhr login encrypted password 'lhr' replication;

👉 To create a user, you need to add the replication option.

3.3. Slave library operation

3.3. 1. Backup the master library from the slave library

Here, we can backup from the library first.

docker exec -it lhrpg64303 bash mkdir -p /bk chown postgres:postgres /bk su - postgres pg_basebackup -h 172.72.6.2 -p 5432 -U replhr -l bk20210422 -F p -P -R -D /bk

After the execution is completed, the file standby. XML is generated Signal, as follows:

root@lhrpg64303:/# mkdir -p /bk root@lhrpg64303:/# chown postgres:postgres /bk root@lhrpg64303:/# root@lhrpg64303:/# su - postgres postgres@lhrpg64303:~$ postgres@lhrpg64303:~$ pg_basebackup -h 172.72.6.2 -p 5432 -U replhr -l bk20210422 -F p -P -R -D /postgresql/pgdata Password: 24560/24560 kB (100%), 1/1 tablespace postgres@lhrpg64303:~$ cd /postgresql/pgdata/ postgres@lhrpg64303:/postgresql/pgdata$ ll -bash: ll: command not found postgres@lhrpg64303:/postgresql/pgdata$ ls -l total 116 -rw------- 1 postgres postgres 3 Apr 22 10:52 PG_VERSION -rw------- 1 postgres postgres 209 Apr 22 10:52 backup_label drwx------ 5 postgres postgres 4096 Apr 22 10:52 base drwx------ 2 postgres postgres 4096 Apr 22 10:52 global drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_commit_ts drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_dynshmem -rw-r--r-- 1 postgres postgres 243 Apr 22 10:52 pg_hba.conf -rw------- 1 postgres postgres 1636 Apr 22 10:52 pg_ident.conf drwx------ 4 postgres postgres 4096 Apr 22 10:52 pg_logical drwx------ 4 postgres postgres 4096 Apr 22 10:52 pg_multixact drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_notify drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_replslot drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_serial drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_snapshots drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_stat drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_stat_tmp drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_subtrans drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_tblspc drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_twophase drwx------ 3 postgres postgres 4096 Apr 22 10:52 pg_wal drwx------ 2 postgres postgres 4096 Apr 22 10:52 pg_xact -rw------- 1 postgres postgres 255 Apr 22 10:52 postgresql.auto.conf -rw------- 1 postgres postgres 26756 Apr 22 10:52 postgresql.conf -rw------- 1 postgres postgres 0 Apr 22 10:52 standby.signal postgres@lhrpg64303:/postgresql/pgdata$

👉 Before PG12, recovery will be automatically generated after - R backup Conf file, which is used for stream replication to judge the master-slave synchronization information. But since PG12, this file is no longer needed. You only need to post gresql in the parameter file Configuring primary in conf_ The conninfo parameter is sufficient.

3.3. 2. Restore from library 1

-- Close slave library 1, delete the data file from the library, and overwrite the backup file with the data file from the library docker stop lhrpg64303 rm -rf /docker_data/pg/lhrpg64303/data/* cp -r /docker_data/pg/lhrpg64303/bk/* /docker_data/pg/lhrpg64303/data/

3.3. 3. Restore from library 2

-- Close slave library 2, delete the data file from the library, and overwrite the backup file with the data file from the library docker stop lhrpg64304 rm -rf /docker_data/pg/lhrpg64304/data/* cp -r /docker_data/pg/lhrpg64303/bk/* /docker_data/pg/lhrpg64304/data/

3.3. 4. Modify the primary nodes of two slave libraries_ Conninfo parameter

-- From library 1 cat >> /docker_data/pg/lhrpg64303/data/postgresql.conf <<"EOF" primary_conninfo = 'host=172.72.6.2 port=5432 user=replhr password=lhr' EOF -- From library 2 cat >> /docker_data/pg/lhrpg64304/data/postgresql.conf <<"EOF" primary_conninfo = 'host=172.72.6.2 port=5432 user=replhr password=lhr' EOF

3.3. 5. Start slave Library

docker start lhrpg64303 lhrpg64304

Main library process:

root@lhrpg64302:/# ps -ef|grep post postgres 1 0 0 11:35 ? 00:00:00 postgres postgres 26 1 0 11:35 ? 00:00:00 postgres: checkpointer postgres 27 1 0 11:35 ? 00:00:00 postgres: background writer postgres 28 1 0 11:35 ? 00:00:00 postgres: walwriter postgres 29 1 0 11:35 ? 00:00:00 postgres: autovacuum launcher postgres 30 1 0 11:35 ? 00:00:00 postgres: archiver last was 000000010000000000000006.00000028.backup postgres 31 1 0 11:35 ? 00:00:00 postgres: stats collector postgres 32 1 0 11:35 ? 00:00:00 postgres: logical replication launcher postgres 33 1 0 11:35 ? 00:00:00 postgres: postgres postgres 172.72.6.1(52776) idle postgres 129 1 0 11:48 ? 00:00:00 postgres: walsender replhr 172.72.6.3(40056) streaming 0/7000148

Process from library:

root@lhrpg64303:/# ps -ef|grep post postgres 1 0 0 11:48 ? 00:00:00 postgres postgres 26 1 0 11:48 ? 00:00:00 postgres: startup recovering 000000010000000000000007 postgres 27 1 0 11:48 ? 00:00:00 postgres: checkpointer postgres 28 1 0 11:48 ? 00:00:00 postgres: background writer postgres 29 1 0 11:48 ? 00:00:00 postgres: stats collector postgres 30 1 0 11:48 ? 00:00:00 postgres: walreceiver streaming 0/7000148 postgres 31 1 0 11:48 ? 00:00:00 postgres: postgres postgres 172.72.6.1(54413) idle

3.4. Query copy status

-- Main library view wal Log sending status select * from pg_stat_replication; -- View from library wal Log receiving status select * from pg_stat_wal_receiver; -- You can also view by this name pg_controldata | grep state -- You can also view this. The main library is f representative false ;Standby database is t,representative true select pg_is_in_recovery();

Master database query replication status:

postgres=# select * from pg_stat_replication; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | state | sent_lsn | write_lsn | flush_lsn | replay_lsn | write_lag | flush_lag | replay_lag | sync_priority | sync_state | reply_time -----+----------+---------+------------------+-------------+-----------------+-------------+-------------------------------+--------------+-----------+-----------+-----------+-----------+------------+-----------+-----------+------------+---------------+------------+------------------------------- 33 | 16384 | replhr | walreceiver | 172.72.6.3 | | 33806 | 2021-04-23 14:51:18.044699+08 | | streaming | 0/6000060 | 0/6000060 | 0/6000060 | 0/6000060 | | | | 0 | async | 2021-04-23 15:23:31.27773+08 75 | 16384 | replhr | walreceiver | 172.72.6.4 | | 47540 | 2021-04-23 15:23:14.795969+08 | | streaming | 0/6000060 | 0/6000060 | 0/6000060 | 0/6000060 | | | | 0 | async | 2021-04-23 15:23:34.927623+08 (2 rows) postgres=# select client_addr,state,sync_state from pg_stat_replication; client_addr | state | sync_state -------------+-----------+------------ 172.72.6.3 | streaming | async 172.72.6.4 | streaming | async (2 rows) postgres=# postgres=# select pg_is_in_recovery(); pg_is_in_recovery ------------------- f (1 row)

pg_stat_replication is a view that is mainly used to monitor PG stream replication. In this system view, each record represents only one slave. Therefore, you can see who is connected and what tasks are being done. pg_stat_replication is also a good way to check whether the slave is connected.

Meaning of each field code:

• pid: This represents the wal responsible for the flow connection_ The process ID of the sender process. For example, "postgres: walsender replhr 172.72.6.3(40056) streaming 0/7000148".

• usesysid: each internal user has a unique number. The system works much like UNIX. Usesysid is the unique identifier of the (PostgreSQL) user connecting to the system.

• usename: (not the user name, note that R is missing), which stores the name of the usesysid associated with the user. This is what the client puts into the connection string.

• application_name: This is the normal setting for synchronous replication. It can be passed to the master through the connection string.

• client_addr: it will tell you where the stream connection comes from. It has the IP address of the client.

• client_hostname: in addition to the IP address of the client, you can also do this by identifying the client by its host name. You can use postgresql.com on the master Log in conf_ Hostname enables DNS reverse lookup.

• client_port: This is the TPC port number used by the client to communicate with the WALsender. If the local UNIX socket is not used, - 1 is displayed.

• backend_start: it tells us when the slave created the stream connection.

• state: this column tells us the connection status of the data. If things go according to plan, it should contain flow information.

• sent_lsn: This represents the location of the last transaction log sent to the connection. How many wals have been sent over the network?

• write_lsn: This is the last transaction log location written to the standby system disk. How many wals have been sent to the operating system? (not yet flushing)

• flush_lsn: This is the last location to be refreshed to the standby system. (note the difference between write and refresh here. Write does not mean refresh.) how many wals have been flushed to disk?

• replay_lsn: This is the last transaction log location replayed on the slave. How many wals have been replayed so that they are visible to the query?

• sync_priority: this field is the only one related to synchronous replication. Each synchronous replication will select a priority - sync_priority - will tell you which priority was selected.

• sync_state: finally, you will see which state the slave is in. This state can be async, sync, or potential. When there is a synchronized slave with higher priority, PostgreSQL marks the slave as potential.

People often say PG_ stat_ The replication view is on the primary side, which is wrong. The purpose of this view is to reveal information about the wal sender process. In other words: if you are running cascading replication, this view means that when the secondary is replicated to other slave, the PG on the secondary side will be changed_ stat_ entries are also displayed on the replication

Query wal log receiving status from library:

postgres=# select * from pg_stat_wal_receiver; -[ RECORD 1 ]---------+---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- pid | 30 status | streaming receive_start_lsn | 0/4000000 receive_start_tli | 1 received_lsn | 0/4000060 received_tli | 1 last_msg_send_time | 2021-04-23 14:33:12.462989+08 last_msg_receipt_time | 2021-04-23 14:33:12.463126+08 latest_end_lsn | 0/4000060 latest_end_time | 2021-04-23 14:32:42.441224+08 slot_name | sender_host | 172.72.6.2 sender_port | 5432 conninfo | user=replhr password=******** dbname=replication host=172.72.6.2 port=5432 fallback_application_name=walreceiver sslmode=prefer sslcompression=0 gssencmode=prefer krbsrvname=postgres target_session_attrs=any

You can also use PG_ Control data to query the status of the primary and standby databases, mainly based on the value of the field "Database cluster state":

root@lhrpg64302:/# pg_controldata pg_control version number: 1201 Catalog version number: 201909212 Database system identifier: 6954163543229509670 Database cluster state: in production pg_control last modified: Fri 23 Apr 2021 09:54:48 AM CST Latest checkpoint location: 0/5000098 Latest checkpoint's REDO location: 0/5000060 Latest checkpoint's REDO WAL file: 000000010000000000000005 Latest checkpoint's TimeLineID: 1 Latest checkpoint's PrevTimeLineID: 1 Latest checkpoint's full_page_writes: on Latest checkpoint's NextXID: 0:488 Latest checkpoint's NextOID: 24576 Latest checkpoint's NextMultiXactId: 1 Latest checkpoint's NextMultiOffset: 0 Latest checkpoint's oldestXID: 480 Latest checkpoint's oldestXID's DB: 1 Latest checkpoint's oldestActiveXID: 488 Latest checkpoint's oldestMultiXid: 1 Latest checkpoint's oldestMulti's DB: 1 Latest checkpoint's oldestCommitTsXid:0 Latest checkpoint's newestCommitTsXid:0 Time of latest checkpoint: Fri 23 Apr 2021 09:54:48 AM CST Fake LSN counter for unlogged rels: 0/3E8 Minimum recovery ending location: 0/0 Min recovery ending loc's timeline: 0 Backup start location: 0/0 Backup end location: 0/0 End-of-backup record required: no wal_level setting: replica wal_log_hints setting: off max_connections setting: 100 max_worker_processes setting: 8 max_wal_senders setting: 10 max_prepared_xacts setting: 0 max_locks_per_xact setting: 64 track_commit_timestamp setting: off Maximum data alignment: 8 Database block size: 8192 Blocks per segment of large relation: 131072 WAL block size: 8192 Bytes per WAL segment: 16777216 Maximum length of identifiers: 64 Maximum columns in an index: 32 Maximum size of a TOAST chunk: 1996 Size of a large-object chunk: 2048 Date/time type storage: 64-bit integers Float4 argument passing: by value Float8 argument passing: by value Data page checksum version: 0 Mock authentication nonce: 6006c7a6310b7d3904a3a1cf6b6ca96accce7a702df332c33e1c3fbc210e3623 root@lhrpg64302:/# pg_controldata | grep state Database cluster state: in production

From library:

root@lhrpg64303:/var/lib/postgresql/data# pg_controldata pg_control version number: 1201 Catalog version number: 201909212 Database system identifier: 6954163543229509670 Database cluster state: in archive recovery pg_control last modified: Fri 23 Apr 2021 09:55:28 AM CST Latest checkpoint location: 0/5000098 Latest checkpoint's REDO location: 0/5000060 Latest checkpoint's REDO WAL file: 000000010000000000000005 Latest checkpoint's TimeLineID: 1 Latest checkpoint's PrevTimeLineID: 1 Latest checkpoint's full_page_writes: on Latest checkpoint's NextXID: 0:488 Latest checkpoint's NextOID: 24576 Latest checkpoint's NextMultiXactId: 1 Latest checkpoint's NextMultiOffset: 0 Latest checkpoint's oldestXID: 480 Latest checkpoint's oldestXID's DB: 1 Latest checkpoint's oldestActiveXID: 488 Latest checkpoint's oldestMultiXid: 1 Latest checkpoint's oldestMulti's DB: 1 Latest checkpoint's oldestCommitTsXid:0 Latest checkpoint's newestCommitTsXid:0 Time of latest checkpoint: Fri 23 Apr 2021 09:54:48 AM CST Fake LSN counter for unlogged rels: 0/3E8 Minimum recovery ending location: 0/5000148 Min recovery ending loc's timeline: 1 Backup start location: 0/0 Backup end location: 0/0 End-of-backup record required: no wal_level setting: replica wal_log_hints setting: off max_connections setting: 100 max_worker_processes setting: 8 max_wal_senders setting: 10 max_prepared_xacts setting: 0 max_locks_per_xact setting: 64 track_commit_timestamp setting: off Maximum data alignment: 8 Database block size: 8192 Blocks per segment of large relation: 131072 WAL block size: 8192 Bytes per WAL segment: 16777216 Maximum length of identifiers: 64 Maximum columns in an index: 32 Maximum size of a TOAST chunk: 1996 Size of a large-object chunk: 2048 Date/time type storage: 64-bit integers Float4 argument passing: by value Float8 argument passing: by value Data page checksum version: 0 Mock authentication nonce: 6006c7a6310b7d3904a3a1cf6b6ca96accce7a702df332c33e1c3fbc210e3623 root@lhrpg64303:/var/lib/postgresql/data# pg_controldata | grep state Database cluster state: in archive recovery

3.5 test master-slave replication

select pg_is_in_recovery();-- The main library is f, which stands for false; The standby database is t, which represents true

-- Main library addition table

postgres=# \c sbtest

psql (13.2, server 12.2 (Debian 12.2-2.pgdg100+1))

You are now connected to database "sbtest" as user "postgres".

sbtest=# select count(*) from sbtest1;

count | 10

sbtest=# select count(*) from sbtest11;

ERROR: relation "sbtest11" does not exist

LINE 1: select count(*) from sbtest11;

^

sbtest=# create table sbtest11 as select * from sbtest1;

SELECT 10

sbtest=# select count(*) from sbtest11;

count | 10

sbtest=# select pg_is_in_recovery();

pg_is_in_recovery

-------------------

f

(1 row)

-- Query from library

sbtest=# select count(*) from sbtest11;

count

-------

10

(1 row)

sbtest=# select pg_is_in_recovery();

pg_is_in_recovery

-------------------

t

(1 row)

sbtest=# create database lhrdb;

ERROR: cannot execute CREATE DATABASE in a read-only transaction

-- Main library insert

sbtest=# insert into sbtest11 select * from sbtest11;

INSERT 0 10

sbtest=#

sbtest=# select count(*) from sbtest11;

count

-------

20

(1 row)

-- Query from library

sbtest=# select count(*) from sbtest11;

count

-------

20

(1 row)

As you can see, the master and slave are synchronized.

4, Deploy and use pgpool II and pgpoolAdmin tools

4.1. Rapid deployment of pgpool II and pgpoolAdmin tools

Since the installation and configuration are cumbersome, we mainly focus on the use. Therefore, the installation and configuration process is omitted here. For details, please refer to: [DB Bao 61] PostgreSQL uses Pgpool-II to realize read-write separation + load balancing

We can directly use the image configured by Mr. Mai, which integrates pgpool II and pgpoolAdmin tools, as shown below:

docker rm -f lhrpgpool docker run -d --name lhrpgpool -h lhrpgpool \ --net=pg-network --ip 172.72.6.66 \ -p 19999:9999 -p 19898:9898 -p 180:80 -p 13389:3389 \ -v /sys/fs/cgroup:/sys/fs/cgroup \ --privileged=true lhrbest/lhrpgpool:4.2.2 \ /usr/sbin/init docker network connect bridge lhrpgpool docker restart lhrpgpool docker exec -it lhrpgpool bash -- lhrbest/lhrpgpool:4.2.2 Only installed pgpool -- lhrbest/lhrpgpool:4.2.2_01 Installed pgpool And configured -- lhrbest/lhrpgpool:4.2.2_02 Installed pgpool and pgpoolAdmin And configured

After starting the container, we have pgpool-II and pgpoolAdmin tools.

4.2. Use pgpool II to realize read-write separation + load balancing

[root@lhrpgpool /]# systemctl status pgpool

● pgpool.service - Pgpool-II

Loaded: loaded (/usr/lib/systemd/system/pgpool.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2021-06-24 15:06:22 CST; 9min ago

Main PID: 181 (pgpool)

CGroup: /docker/d45b808bb68bd3fa21db1881b6fc82a1ae194abc88fcbb6b8667875d9257ac54/system.slice/pgpool.service

├─181 /postgresql/pgpool/bin/pgpool -f /postgresql/pgpool/etc/pgpool.conf -n

├─218 pgpool: wait for connection request

├─219 pgpool: wait for connection request

├─220 pgpool: wait for connection request

├─221 pgpool: wait for connection request

├─222 pgpool: wait for connection request

├─223 pgpool: wait for connection request

├─224 pgpool: wait for connection request

├─225 pgpool: wait for connection request

├─226 pgpool: wait for connection request

├─227 pgpool: wait for connection request

├─229 pgpool: wait for connection request

├─230 pgpool: wait for connection request

├─231 pgpool: wait for connection request

├─235 pgpool: wait for connection request

├─236 pgpool: wait for connection request

├─237 pgpool: wait for connection request

├─239 pgpool: wait for connection request

├─240 pgpool: wait for connection request

├─241 pgpool: wait for connection request

├─243 pgpool: wait for connection request

├─245 pgpool: wait for connection request

├─246 pgpool: wait for connection request

├─249 pgpool: wait for connection request

├─251 pgpool: PCP: wait for connection request

├─252 pgpool: worker process

├─767 pgpool: wait for connection request

├─784 pgpool: wait for connection request

├─829 pgpool: wait for connection request

├─840 pgpool: wait for connection request

├─841 pgpool: wait for connection request

├─845 pgpool: wait for connection request

├─846 pgpool: wait for connection request

├─915 pgpool: wait for connection request

└─916 pgpool: wait for connection request

Jun 24 15:15:16 lhrpgpool pgpool[251]: [150-1] 2021-06-24 15:15:16: pid 251: LOG: PCP process with pid: 911 exits with status 0

Jun 24 15:15:16 lhrpgpool pgpool[251]: [151-1] 2021-06-24 15:15:16: pid 251: LOG: forked new pcp worker, pid=913 socket=8

Jun 24 15:15:16 lhrpgpool pgpool[251]: [152-1] 2021-06-24 15:15:16: pid 251: LOG: PCP process with pid: 913 exit with SUCCESS.

Jun 24 15:15:16 lhrpgpool pgpool[251]: [153-1] 2021-06-24 15:15:16: pid 251: LOG: PCP process with pid: 913 exits with status 0

Jun 24 15:15:16 lhrpgpool pgpool[774]: [20-1] 2021-06-24 15:15:16: pid 774: FATAL: pgpool is not accepting any new connections

Jun 24 15:15:16 lhrpgpool pgpool[181]: [49-1] 2021-06-24 15:15:16: pid 181: LOG: child process with pid: 774 exits with status 256

Jun 24 15:15:16 lhrpgpool pgpool[181]: [50-1] 2021-06-24 15:15:16: pid 181: LOG: fork a new child process with pid: 915

Jun 24 15:15:16 lhrpgpool pgpool[773]: [18-1] 2021-06-24 15:15:16: pid 773: FATAL: pgpool is not accepting any new connections

Jun 24 15:15:16 lhrpgpool pgpool[181]: [51-1] 2021-06-24 15:15:16: pid 181: LOG: child process with pid: 773 exits with status 256

Jun 24 15:15:16 lhrpgpool pgpool[181]: [52-1] 2021-06-24 15:15:16: pid 181: LOG: fork a new child process with pid: 916

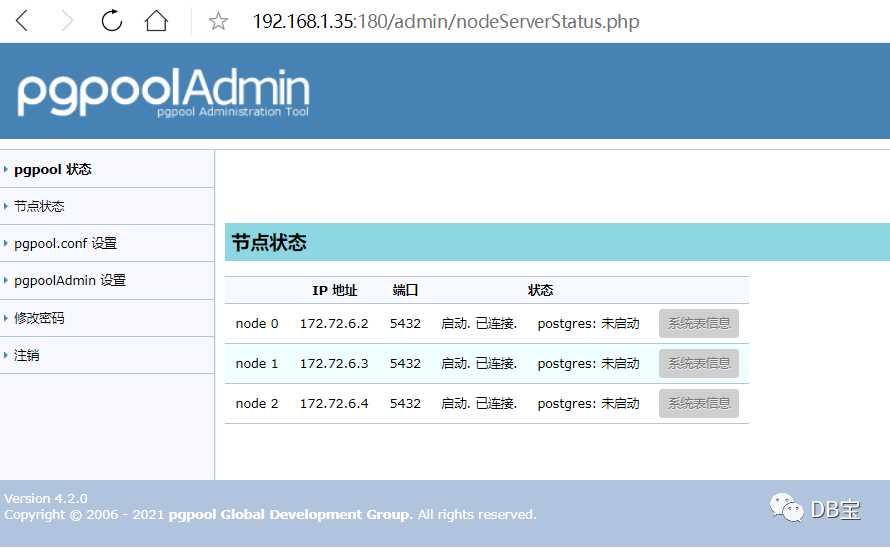

4.2. 1. Add monitored host

vi /postgresql/pgpool/etc/pgpool.conf # - Backend Connection Settings - backend_hostname0 = '172.72.6.2' backend_port0 = 5432 backend_weight0 = 1 backend_data_directory0 = '/var/lib/postgresql/data' backend_flag0 = 'ALLOW_TO_FAILOVER' backend_application_name0 = 'lhrpg64302' backend_hostname1 = '172.72.6.3' backend_port1 = 5432 backend_weight1 = 1 backend_data_directory1 = '/var/lib/postgresql/data' backend_flag1 = 'ALLOW_TO_FAILOVER' backend_application_name1 = 'lhrpg64303' backend_hostname2 = '172.72.6.4' backend_port2 = 5432 backend_weight2 = 1 backend_data_directory2 = '/var/lib/postgresql/data' backend_flag2 = 'ALLOW_TO_FAILOVER' backend_application_name2 = 'lhrpg64304'

👉 be careful: Write will only be sent to 172.72 6.2, and the read will be sent to 172.72 6.2,172.72. 6.3 and 172.72 6.4 upper. If you want to read, just send it to 172.72 6.3 and 172.72 On 6.4, 172.72 needs to be modified 6.2 weight backend_weight0 = 0

After configuration, restart the pgpool service:

systemctl restart pgpool

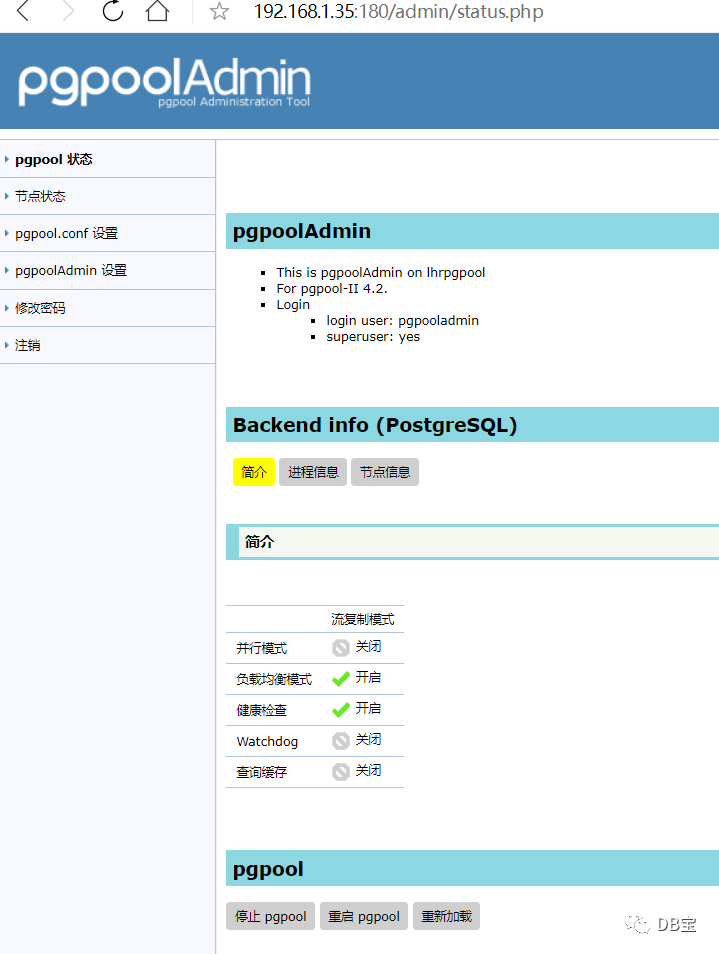

4.3. Use pgpoolAdmin to manage pgpool II

[root@lhrpgpool /]# systemctl enable httpd

Created symlink from /etc/systemd/system/multi-user.target.wants/httpd.service to /usr/lib/systemd/system/httpd.service.

[root@lhrpgpool /]#

[root@lhrpgpool /]# systemctl start httpd

[root@lhrpgpool /]# systemctl status httpd

● httpd.service - The Apache HTTP Server

Loaded: loaded (/usr/lib/systemd/system/httpd.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2021-06-24 15:11:58 CST; 10s ago

Docs: man:httpd(8)

man:apachectl(8)

Main PID: 602 (httpd)

Status: "Total requests: 0; Current requests/sec: 0; Current traffic: 0 B/sec"

CGroup: /docker/d45b808bb68bd3fa21db1881b6fc82a1ae194abc88fcbb6b8667875d9257ac54/system.slice/httpd.service

├─602 /usr/sbin/httpd -DFOREGROUND

├─603 /usr/sbin/httpd -DFOREGROUND

├─604 /usr/sbin/httpd -DFOREGROUND

├─605 /usr/sbin/httpd -DFOREGROUND

├─606 /usr/sbin/httpd -DFOREGROUND

└─607 /usr/sbin/httpd -DFOREGROUND

Jun 24 15:11:58 lhrpgpool systemd[1]: Starting The Apache HTTP Server...

Jun 24 15:11:58 lhrpgpool httpd[602]: AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 172.17.0.2. Set the 'ServerName' directive globally to suppress this message

Jun 24 15:11:58 lhrpgpool systemd[1]: Started The Apache HTTP Server.

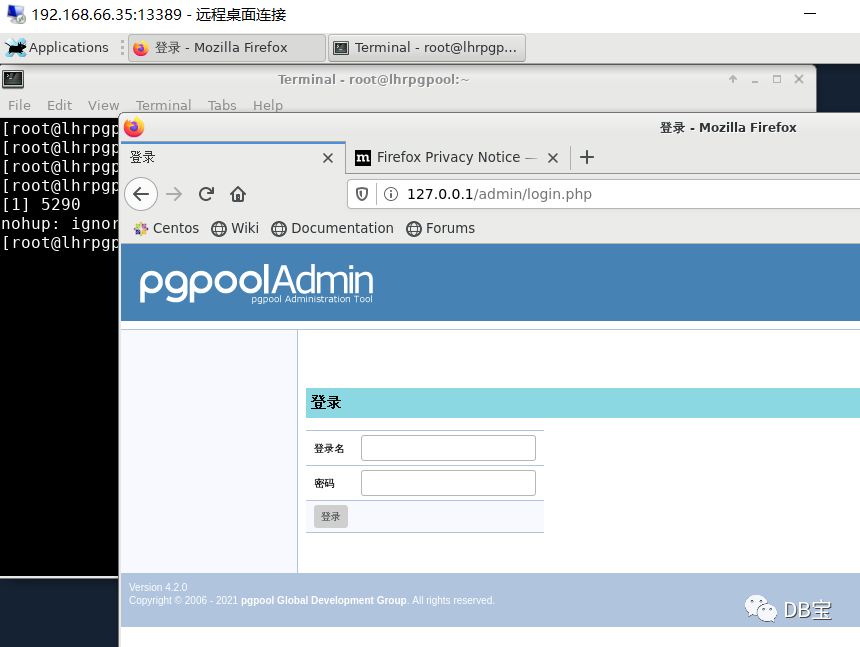

Web login address:

- Host login: http://192.168.1.35:180/admin/login.php

User name and password: pgpooladmin/lhr

- Login in container: http://127.0.0.1/admin/login.php

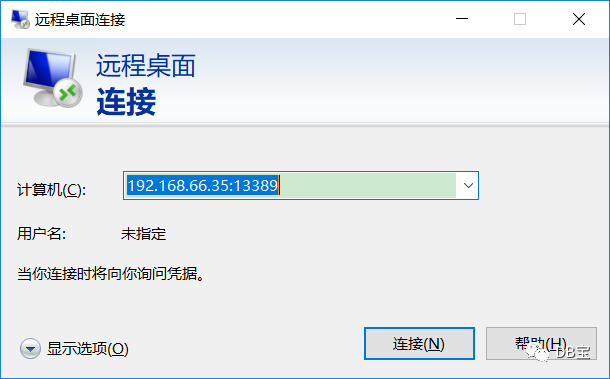

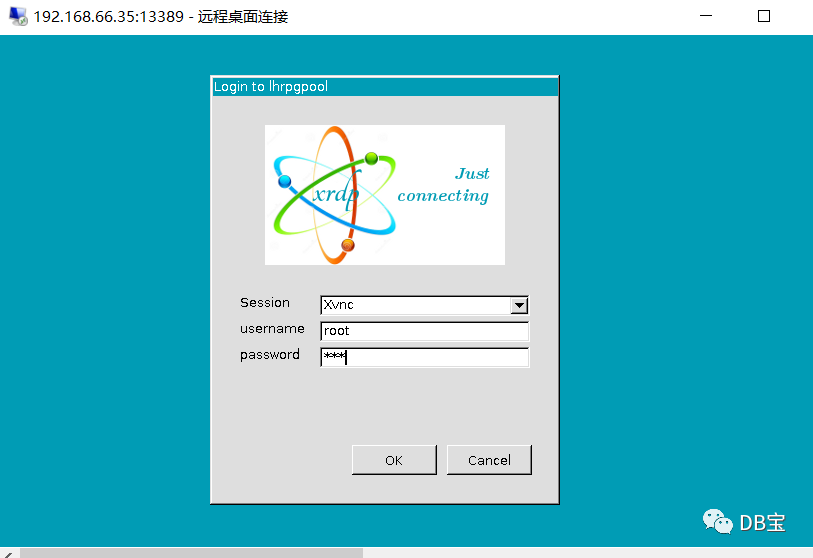

To log in the container, you need to log in to the Linux desktop. We log in through the remote desktop:

image-20210624160349941

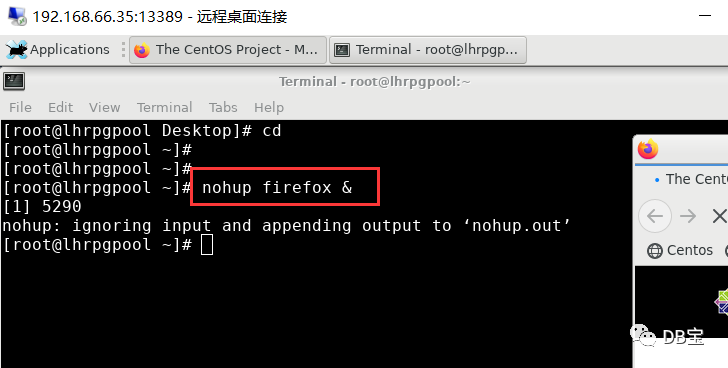

image-20210624160420852

User name and password: root/lhr

image-20210624160513763

Open the Firefox browser and enter the following address: http://127.0.0.1/admin/login.php

image-20210624160605074

You can also log in.

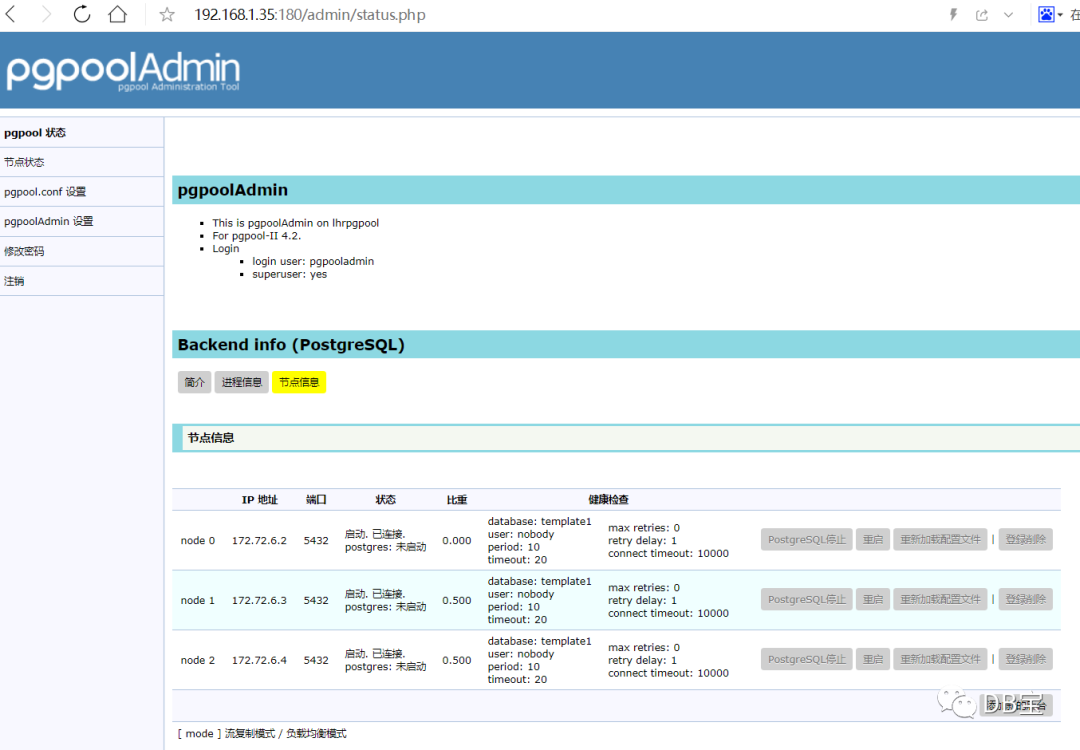

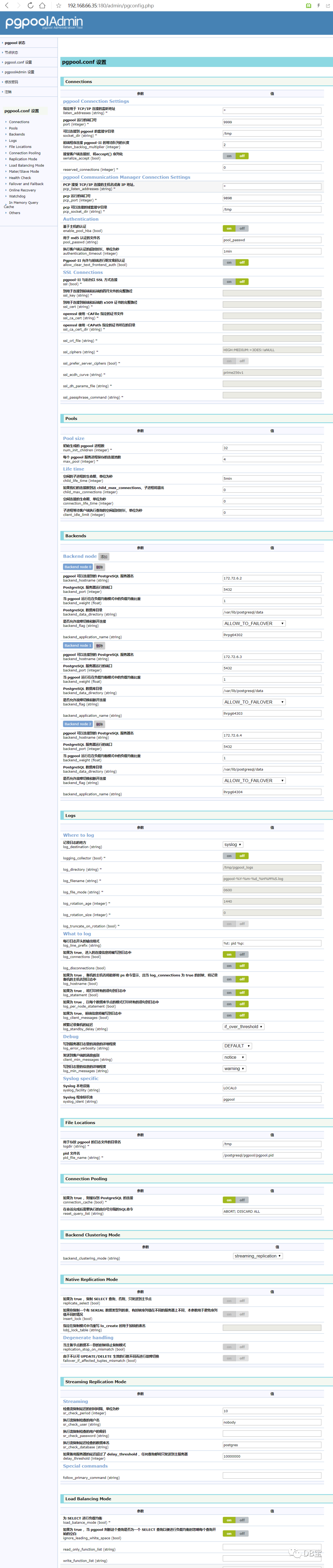

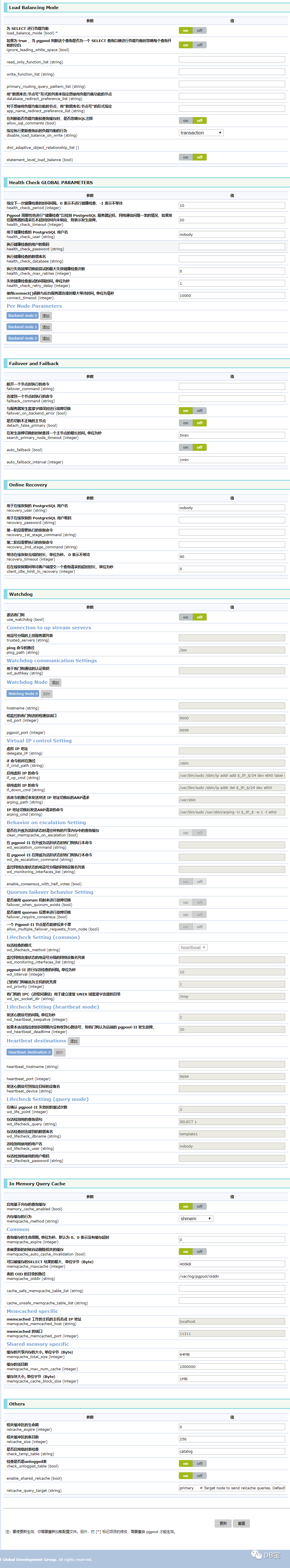

The interface you can see after logging in:

Each parameter has a detailed description. You can also refer to:

- pgpool-II 3.2.5 manual

- pgpool-II-3.2.5 Getting Started tutorial

4.4. Test the read-write separation + load balancing + memory query cache function of pgpool II

4.4. 1. Close the memory query cache first

Let's turn off the memory query cache function and turn it on later.

vi /postgresql/pgpool/etc/pgpool.conf memory_cache_enabled = off

After configuration, restart the pgpool service:

systemctl restart pgpool

4.4. 2. Test read / write separation + load balancing

Test process:

-- Open 3 windows with password lhr psql -U postgres -h 192.168.66.35 -p 19999 -d sbtest create table test(id int); insert into test values(1); select * from test; show pool_nodes; show pool_backend_stats;

--Get pgPool_II version information show pool_version; --View profile show pool_status; --Get node information show pool_nodes; --Get pgpool II process information show pool_processes; --Get all connection pool information of pgpool II show pool_pools; --Command execution statistics show pool_backend_stats; --Health Statistics show pool_health_check_stats; --Cache statistics show pool_cache;

C:\Users\lhrxxt>psql -U postgres -h 192.168.66.35 -p 19999 -d sbtest Password for user postgres: psql (13.3, server 12.2 (Debian 12.2-2.pgdg100+1)) Type "help" for help. sbtest=# sbtest=# show pool_nodes; node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay | replication_state | replication_sync_state | last_status_change ---------+------------+------+--------+-----------+---------+------------+-------------------+-------------------+-------------------+------------------------+--------------------- 0 | 172.72.6.2 | 5432 | up | 0.333333 | primary | 0 | false | 0 | | | 2021-06-24 15:38:35 1 | 172.72.6.3 | 5432 | up | 0.333333 | standby | 0 | false | 0 | | | 2021-06-24 15:38:35 2 | 172.72.6.4 | 5432 | up | 0.333333 | standby | 0 | true | 0 | | | 2021-06-24 15:38:35 (3 rows) sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 (3 rows) sbtest=# sbtest=# create table test(id int); CREATE TABLE sbtest=# insert into test values(1); INSERT 0 1 sbtest=# select * from test; id ---- 1 (1 row) sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 (3 rows) sbtest=# select * from test; id ---- 1 (1 row) sbtest=# select * from test; id ---- 1 (1 row) sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 (3 rows)

Use the shell to test load balancing:

[pgsql@lhrpgpool ~]$ for i in $(seq 1 20); do psql -U postgres -h 127.0.0.1 -p 9999 -d sbtest -c 'SELECT inet_server_addr()'; done | egrep '172.' 172.72.6.4 172.72.6.2 172.72.6.4 172.72.6.4 172.72.6.4 172.72.6.3 172.72.6.3 172.72.6.3 172.72.6.4 172.72.6.3 172.72.6.2 172.72.6.4 172.72.6.3 172.72.6.4 172.72.6.4 172.72.6.2 172.72.6.3 172.72.6.4 172.72.6.2 172.72.6.2 sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 23 | 1 | 0 | 0 | 1 | 61 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 18 | 0 | 0 | 0 | 0 | 18 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 28 | 0 | 0 | 0 | 0 | 26 | 0 | 0 | 1 (3 rows)

As you can see, 172.72 6.2,172.72. 6.3 and 172.72 6.4 the server is read balanced.

Next, the parameter file / PostgreSQL / pgpool / etc / pgpool Backend in conf_ Change weight0 to 0 and restart pgpool to test:

[pgsql@lhrpgpool ~]$ for i in $(seq 1 50); do psql -U postgres -h 127.0.0.1 -p 9999 -d sbtest -c 'SELECT inet_server_addr()'; done | egrep '172.' 172.72.6.3 172.72.6.3 172.72.6.4 .............. 172.72.6.3 172.72.6.3 172.72.6.3 172.72.6.4 172.72.6.4 172.72.6.4 sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 0 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 30 | 0 | 0 | 0 | 0 | 30 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 20 | 0 | 0 | 0 | 0 | 20 | 0 | 0 | 0 (3 rows)

As you can see, all the reads were sent to 172.72 6.3 and 172.72 6.4 on.

4.4. 3. Enable memory query cache

https://www.pgpool.net/docs/latest/en/html/runtime-in-memory-query-cache.html

https://www.pgpool.net/docs/pgpool-II-3.2.5/pgpool-zh_cn.html#memqcache

You can use memory based query caching in any mode. It is different from the above query cache because the memory based query cache is much faster because the cache is stored in memory. In addition, if the cache is small, you don't need to restart pgpool-II because the related tables have been updated.

The memory based cache stores the SELECT statement (and its bound parameters, if SELECT is an extended query) and the corresponding data. If it is the same SELECT statement, the cached value will be returned directly. In fact, it will be very fast because there are no SQL analysis or calls to PostgreSQL.

Otherwise, it will be slower because it adds some load to the cache. In addition, when a table is updated, pgpool automatically deletes the cache of the related table. Therefore, in a system with many updates, the performance will be reduced. If cache_ hit_ If the ratio is less than 70% (you can query show pool_cache;), it is recommended that you turn off the memory based cache.

Modify parameter memory_cache_enabled=on will enable the query cache function. In addition, you need to set memqcache_ The oiddir path, create the directory / var/log/pgpool/oiddir, and enable.

vi /postgresql/pgpool/etc/pgpool.conf memory_cache_enabled = on mkdir -p /var/log/pgpool/oiddir chown -R pgsql.pgsql /var/log/pgpool/oiddir

After configuration, restart the pgpool service:

systemctl restart pgpool

Next, test the cache function:

sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 (3 rows) [pgsql@lhrpgpool ~]$ for i in $(seq 1 50); do psql -U postgres -h 127.0.0.1 -p 9999 -d sbtest -c 'SELECT * from test'; done | egrep 'row' | wc -l 50 sbtest=# show pool_backend_stats; node_id | hostname | port | status | role | select_cnt | insert_cnt | update_cnt | delete_cnt | ddl_cnt | other_cnt | panic_cnt | fatal_cnt | error_cnt ---------+------------+------+--------+---------+------------+------------+------------+------------+---------+-----------+-----------+-----------+----------- 0 | 172.72.6.2 | 5432 | up | primary | 0 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 1 | 172.72.6.3 | 5432 | up | standby | 0 | 0 | 0 | 0 | 0 | 22 | 0 | 0 | 0 2 | 172.72.6.4 | 5432 | up | standby | 1 | 0 | 0 | 0 | 0 | 28 | 0 | 0 | 0 (3 rows) sbtest=# delete from test; DELETE 1 sbtest=# select * from test; id ---- (0 rows) sbtest=# show pool_health_check_stats; node_id | hostname | port | status | role | last_status_change | total_count | success_count | fail_count | skip_count | retry_count | average_retry_count | max_retry_count | max_duration | min_duration | average_duration | last_health_check | last_successful_health_check | last_skip_health_check | last_failed_health_check ---------+------------+------+--------+---------+---------------------+-------------+---------------+------------+------------+-------------+---------------------+-----------------+--------------+--------------+------------------+---------------------+------------------------------+------------------------+-------------------------- 0 | 172.72.6.2 | 5432 | up | primary | 2021-06-24 16:20:08 | 57 | 57 | 0 | 0 | 0 | 0.000000 | 0 | 8 | 7 | 7.035088 | 2021-06-24 16:29:29 | 2021-06-24 16:29:29 | | 1 | 172.72.6.3 | 5432 | up | standby | 2021-06-24 16:20:08 | 57 | 57 | 0 | 0 | 0 | 0.000000 | 0 | 7 | 7 | 7.000000 | 2021-06-24 16:29:29 | 2021-06-24 16:29:29 | | 2 | 172.72.6.4 | 5432 | up | standby | 2021-06-24 16:20:08 | 57 | 57 | 0 | 0 | 0 | 0.000000 | 0 | 8 | 7 | 7.017544 | 2021-06-24 16:29:29 | 2021-06-24 16:29:29 | | (3 rows)

You can see that I have executed SELECT * from test 50 times, but only one record (select_cnt) is actually recorded. In fact, the data is obtained from the cache.

This article ends.