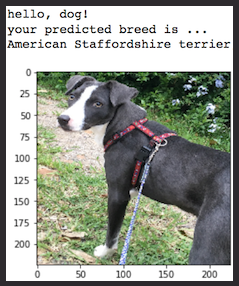

In this notebook, you will take the first step toward developing an algorithm that could be part of a mobile or web application. By the end of this project, your program will accept any user-supplied image as input. If a dog is detected in the image, it will output an estimate of the dog's breed. If a human face is detected, it will output the dog breed that the face most resembles. The following figure shows possible output of the finished project.

- Step 0: import datasets

- Step 1: face detection

- Step 2: detect dogs

- Step 3: create a CNN from scratch to classify dog breeds

- Step 4: use a CNN to distinguish dog breeds (using transfer learning)

- Step 5: complete your algorithm

- Step 6: test your algorithm

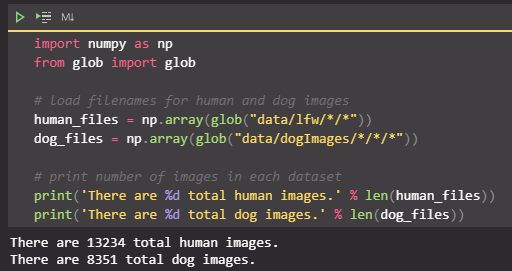

Step 0: import datasets

Download the dog dataset, place it in the data folder, and unzip it.

Download the human dataset (a human face recognition dataset), place it in the data folder, and unzip it.
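A minimal sketch for collecting the image paths after unzipping. It assumes the archives extract to `data/lfw/` (one sub-folder per person) and `data/dogImages/` (train/valid/test splits, then one sub-folder per breed); those folder names and the helper `list_image_files` are assumptions, so adjust the patterns to match your copy of the data.

```python
import os
from glob import glob


def list_image_files(pattern):
    """Return a sorted list of file paths matching a glob pattern."""
    # sorted() makes the ordering deterministic across platforms
    return sorted(p for p in glob(pattern) if os.path.isfile(p))


# Assumed layouts: data/lfw/<person>/<image> and data/dogImages/<split>/<breed>/<image>
human_files = list_image_files(os.path.join("data", "lfw", "*", "*"))
dog_files = list_image_files(os.path.join("data", "dogImages", "*", "*", "*"))

print(f"There are {len(human_files)} total human images.")
print(f"There are {len(dog_files)} total dog images.")
```

If the folders are missing, both lists are simply empty, which makes a wrong path easy to spot from the printed counts.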

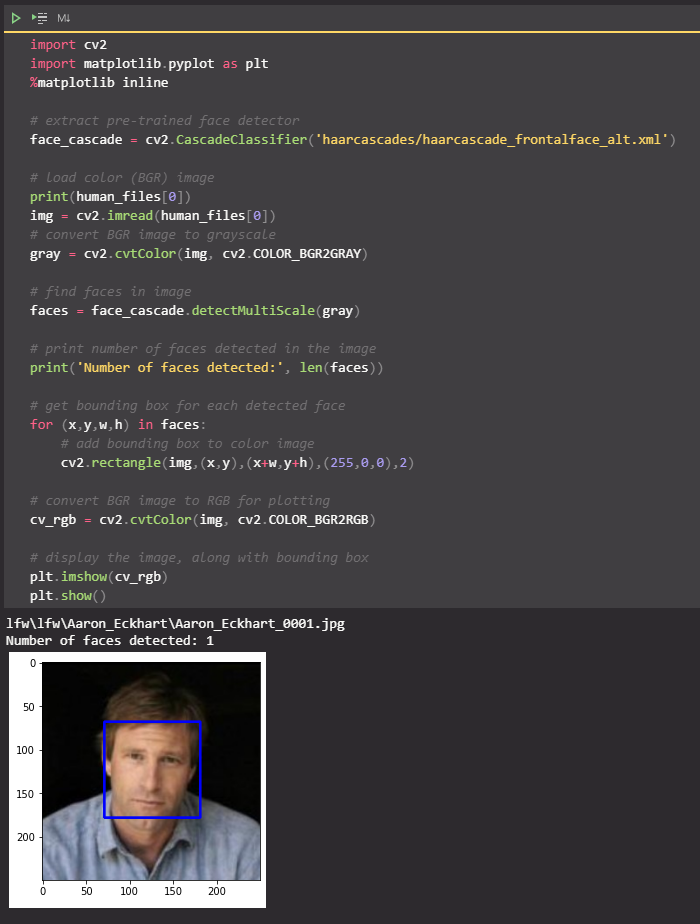

Step 1: face detection

We will use OpenCV's implementation of Haar feature-based cascade classifiers to detect human faces in images. OpenCV provides many pre-trained face detectors, stored as XML files on GitHub. We have downloaded one of these detectors and stored it in the haarcascades directory.

In the next code cell, we demonstrate how to use this detector to find human faces in a sample image.

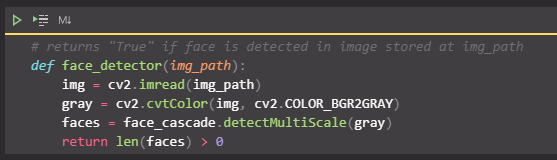

Write a face detector

We can use this procedure to write a function that returns True if a human face is detected in an image and False otherwise. This function, named face_detector, takes the file path of an image as input and appears in the following code block.

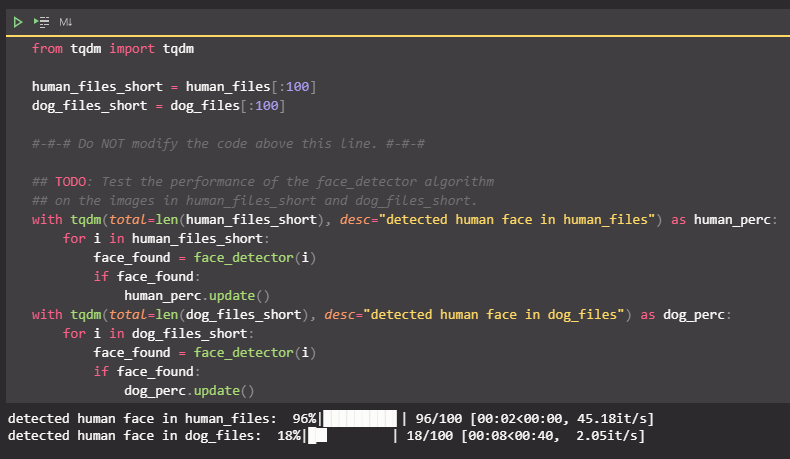

Evaluate the face detector

Question 1: Use the code cell below to test the performance of the face_detector function.

- What percentage of the first 100 images in human_files have a detected human face?

- What percentage of the first 100 images in dog_files have a detected human face?
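A small helper for answering these questions, sketched as a hypothetical `detection_rate` function. It takes any detector that maps an image path to True/False, so the same helper also works with dog_detector for Question 2.

```python
def detection_rate(paths, detector):
    """Return the percentage of image paths for which detector(path) is True."""
    if len(paths) == 0:  # len() also works for NumPy arrays of paths
        raise ValueError("paths must be non-empty")
    hits = sum(1 for p in paths if detector(p))
    return 100.0 * hits / len(paths)


# Hypothetical usage with the first 100 files of each set:
# human_files_short = human_files[:100]
# dog_files_short = dog_files[:100]
# print(f"Human images with a face: {detection_rate(human_files_short, face_detector):.1f}%")
# print(f"Dog images with a face:   {detection_rate(dog_files_short, face_detector):.1f}%")
```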

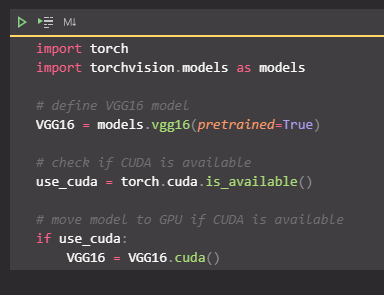

Step 2: detect dogs

In this section, we use a pre-trained VGG-16 model to detect dogs in images.

Given an image, the pre-trained VGG-16 model returns a prediction (one of the 1000 possible categories in ImageNet) for the object contained in the image.

Use the pre-trained model for prediction

In the next code cell, you will write a function that takes the path to an image as input and returns the index of the ImageNet class predicted by the pre-trained VGG-16 model. The output should always be an integer between 0 and 999, inclusive.

```python
import torch
import torchvision.transforms as transforms
from PIL import Image
# Set PIL to be tolerant of image files that are truncated.
from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

def VGG16_predict(img_path):
    '''
    Use pre-trained VGG-16 model to obtain index corresponding to
    predicted ImageNet class for image at specified path

    Args:
        img_path: path to an image

    Returns:
        Index corresponding to VGG-16 model's prediction
    '''
    # VGG16 (the pre-trained model) and use_cuda are defined in an earlier cell

    ## Load and pre-process an image from the given img_path
    # ImageNet channel means and standard deviations
    normalize = transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225]
    )
    # Resizing straight to 224x224 makes CenterCrop(224) a no-op
    transform = transforms.Compose([transforms.Resize((224, 224)),
                                    transforms.CenterCrop(224),
                                    transforms.ToTensor(),
                                    normalize])

    # convert('RGB') handles grayscale and RGBA files, which would break Normalize
    image = Image.open(img_path).convert('RGB')
    image = transform(image).unsqueeze(0)  # add a batch dimension: (1, 3, 224, 224)

    if use_cuda:
        image = image.cuda()

    VGG16.eval()  # inference mode: disables dropout
    with torch.no_grad():  # no gradients are needed for prediction
        output = VGG16(image)

    ## Return the *index* of the predicted class for that image
    class_index = output.argmax(dim=1).item()  # plain Python int
    return class_index  # predicted class index

VGG16_predict(dog_files[0])
```

result:

252

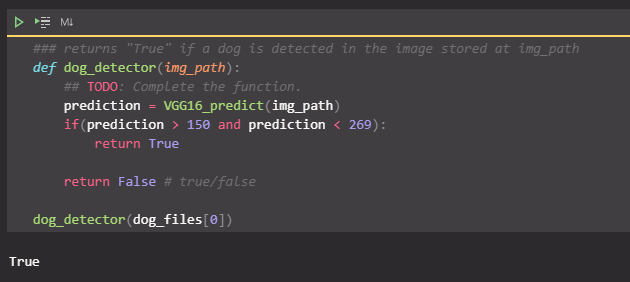

Write a dog detector

If you study the list of ImageNet classes, you will notice that the categories corresponding to dogs have indices 151 to 268. Therefore, to check whether the pre-trained model predicts that an image contains a dog, we only need to check whether the VGG16_predict function above returns a value between 151 and 268 (inclusive).

We use these ideas to complete the dog_detector function below, which returns True if a dog is detected in an image and False otherwise.
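A minimal sketch of dog_detector built on that index range. The optional `predict` parameter is a hypothetical addition: it defaults to VGG16_predict, but lets you plug in any other classifier trained on the same 1000 ImageNet classes (for example ResNet-50) or a stub for testing.

```python
# ImageNet-1k class indices 151..268 (inclusive) are the dog categories
DOG_INDICES = range(151, 269)


def dog_detector(img_path, predict=None):
    """Return True if the ImageNet class predicted for img_path is a dog category."""
    if predict is None:
        predict = VGG16_predict  # the prediction function defined above
    return predict(img_path) in DOG_INDICES
```

Because `range` excludes its stop value, `range(151, 269)` covers exactly 151 through 268.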

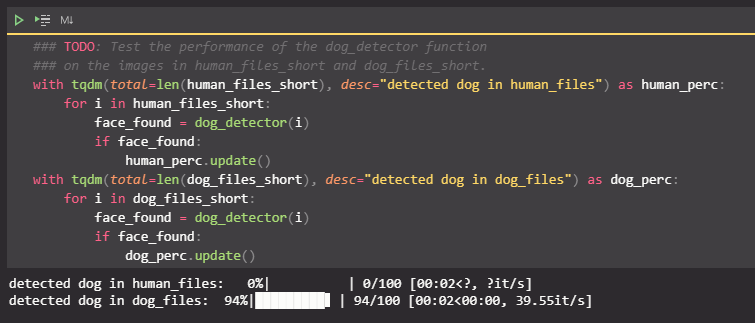

Question 2: Use the code cell below to test the performance of the dog_detector function.

- What percentage of the images in human_files_short have a detected dog?

- What percentage of the images in dog_files_short have a detected dog?

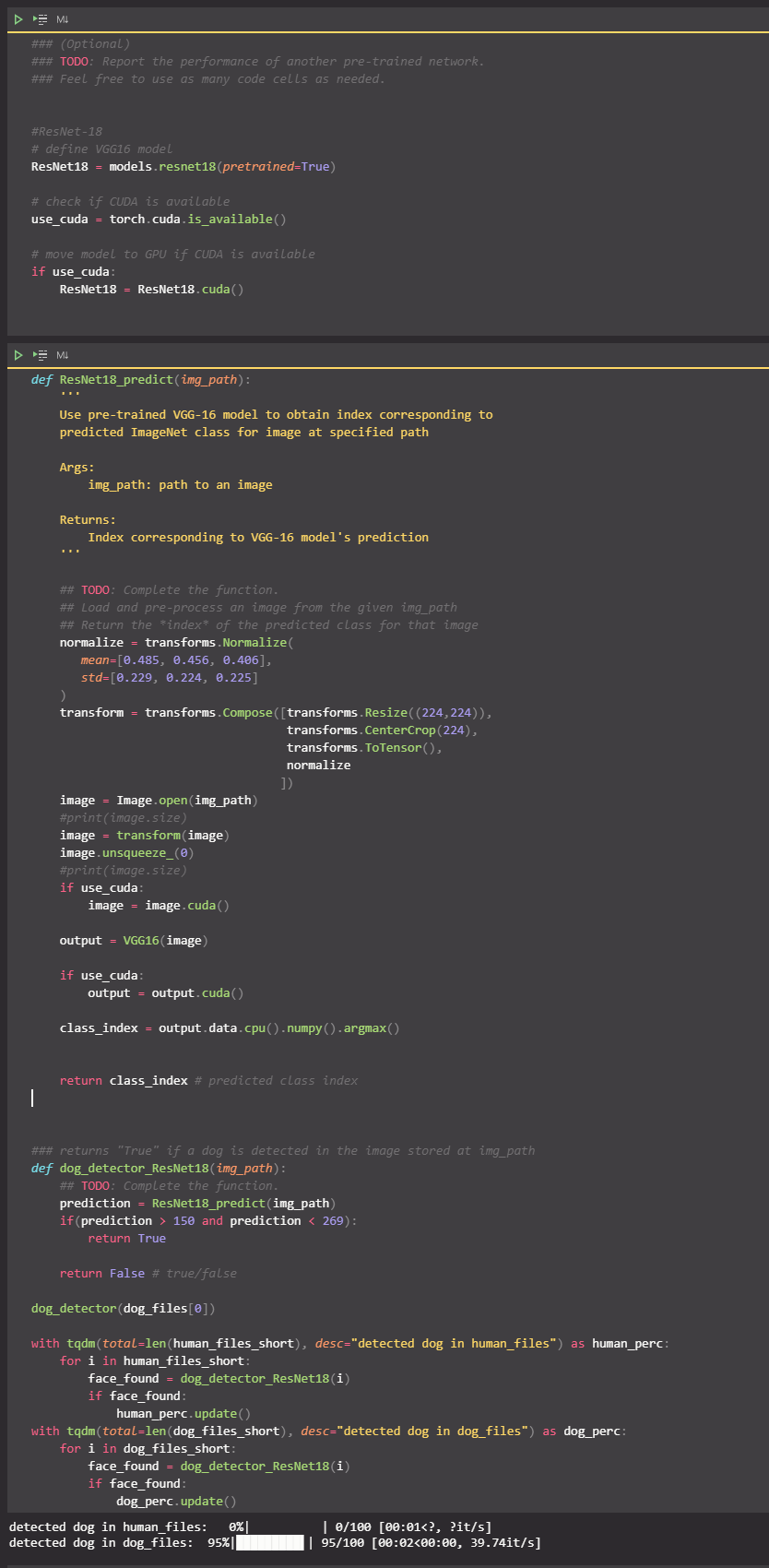

You are free to explore other pre-trained networks (such as Inception-v3 or ResNet-50). Use the code cell below to test other pre-trained PyTorch models. If you decide to do this optional task, report their performance on human_files_short and dog_files_short.


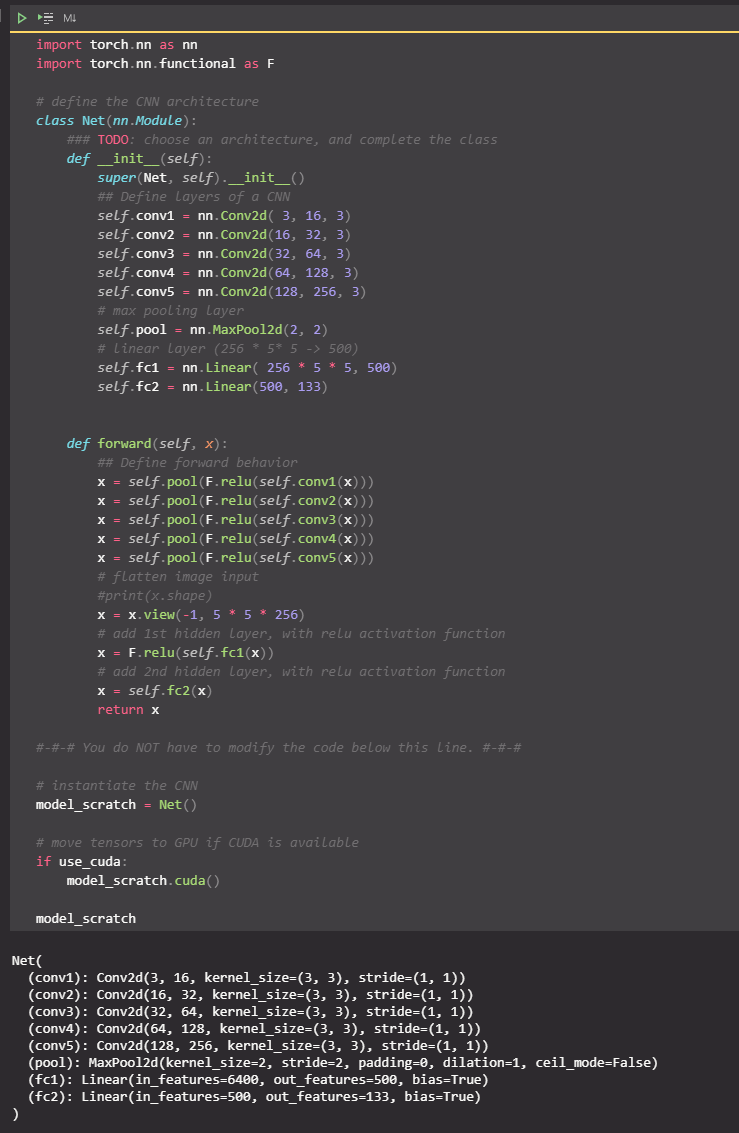

Step 3: create a CNN from scratch to classify dog breeds

Now that we have functions for detecting humans and dogs in images, we need a way to predict a dog's breed from an image. In this step, you will create a convolutional neural network (CNN) that classifies dog breeds. You must build this CNN from scratch (you cannot use transfer learning yet), and it must reach a test accuracy above 10%. In Step 4 of this project, you will have the opportunity to use transfer learning to create a CNN with greatly improved accuracy.
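A minimal from-scratch architecture, offered only as a starting point: three convolution and max-pool blocks followed by two fully connected layers, plus a loss function and optimizer. The layer sizes, dropout rate, `AdaptiveAvgPool2d` layer, and SGD settings are assumptions rather than the author's model; `model_scratch`, `criterion_scratch`, and `optimizer_scratch` are the names the training call in this step expects.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    """A small CNN for 133 dog breeds (illustrative layer sizes)."""

    def __init__(self, num_classes=133):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.conv3 = nn.Conv2d(32, 64, kernel_size=3, padding=1)
        self.pool = nn.MaxPool2d(2, 2)             # halves height and width
        self.avgpool = nn.AdaptiveAvgPool2d((7, 7))  # always outputs 7x7 feature maps
        self.dropout = nn.Dropout(0.25)
        self.fc1 = nn.Linear(64 * 7 * 7, 500)
        self.fc2 = nn.Linear(500, num_classes)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # 224 -> 112
        x = self.pool(F.relu(self.conv2(x)))  # 112 -> 56
        x = self.pool(F.relu(self.conv3(x)))  # 56 -> 28
        x = self.avgpool(x)                   # 28 -> 7
        x = torch.flatten(x, 1)               # (batch, 64 * 7 * 7)
        x = self.dropout(F.relu(self.fc1(x)))
        return self.fc2(x)  # raw logits; CrossEntropyLoss applies log-softmax itself


model_scratch = Net()
criterion_scratch = nn.CrossEntropyLoss()
optimizer_scratch = torch.optim.SGD(model_scratch.parameters(), lr=0.01, momentum=0.9)
# On a GPU, also move the model with model_scratch.cuda()
```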

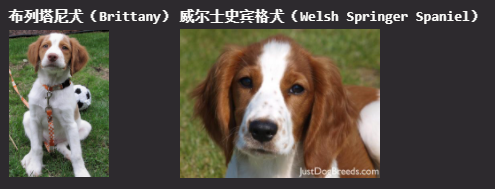

It is worth noting that classifying dog breeds from images is an exceptionally challenging task. Even a human would have trouble distinguishing between a Brittany and a Welsh Springer Spaniel.

It is not difficult to find other breed pairs with only small differences between classes (such as the Curly-Coated Retriever and the American Water Spaniel).

Likewise, Labradors come in yellow, brown, and black. Your vision-based algorithm will have to overcome this high variation within a class in order to assign all of these differently colored dogs to the same breed.

Note also that random guessing sets a very low bar: ignoring the slight imbalance between classes, a random guess picks the correct breed with probability 1/133, which corresponds to an accuracy of less than 1%.
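The arithmetic behind that bar, with the 10% test-accuracy target for comparison:

```python
num_breeds = 133
random_accuracy = 1 / num_breeds  # chance that a uniform random guess is correct
target_accuracy = 0.10            # the 10% test-accuracy target for the scratch CNN

print(f"Random guess: {random_accuracy:.2%}")                        # prints "Random guess: 0.75%"
print(f"Target is {target_accuracy / random_accuracy:.1f}x chance")  # prints "Target is 13.3x chance"
```

So even the modest 10% target asks for roughly thirteen times better than chance.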

Remember that in deep learning, practice is far ahead of theory. Try many different architectures, and trust your intuition. And, of course, have fun!

Specify data loaders for the dog dataset

Question 3: Describe the data preprocessing procedure you chose.

- How does your code resize the images (by cropping, stretching, etc.)? What size did you choose for the input tensor, and why?

- Did you decide to augment the dataset? If so, how (through translations, flips, rotations, etc.)? If not, why not?

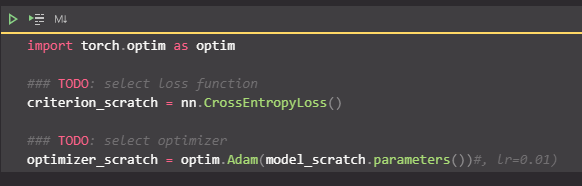

Specify loss function and optimizer

Train and validate the model

```python
import numpy as np
import torch
# the following import is required for training to be robust to truncated images
from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

def train(n_epochs, loaders, model, optimizer, criterion, use_cuda, save_path):
    """returns trained model"""
    # initialize tracker for minimum validation loss
    valid_loss_min = np.inf  # np.Inf was removed in NumPy 2.0

    for epoch in range(1, n_epochs+1):
        # initialize variables to monitor training and validation loss
        train_loss = 0.0
        valid_loss = 0.0

        ###################
        # train the model #
        ###################
        model.train()
        for batch_idx, (data, target) in enumerate(loaders['train']):
            # move to GPU
            if use_cuda:
                data, target = data.cuda(), target.cuda()

            ## find the loss and update the model parameters accordingly
            optimizer.zero_grad()
            output = model(data)
            loss = criterion(output, target)
            loss.backward()
            optimizer.step()

            ## record the running average of the training loss
            ## (loss.item() returns a Python float, so no autograd history is kept)
            train_loss += ((1 / (batch_idx + 1)) * (loss.item() - train_loss))

            # print training statistics every 40 batches
            if (batch_idx+1) % 40 == 0:
                print('Epoch: {} \tBatch: {} \tTraining Loss: {:.6f}'.format(epoch, batch_idx + 1, train_loss))

        ######################
        # validate the model #
        ######################
        model.eval()
        with torch.no_grad():  # gradients are not needed for validation
            for batch_idx, (data, target) in enumerate(loaders['valid']):
                # move to GPU
                if use_cuda:
                    data, target = data.cuda(), target.cuda()
                ## update the average validation loss
                output = model(data)
                loss = criterion(output, target)
                valid_loss += ((1 / (batch_idx + 1)) * (loss.item() - valid_loss))

                # print validation statistics every 40 batches
                if (batch_idx+1) % 40 == 0:
                    print('Epoch: {} \tBatch: {} \tValidation Loss: {:.6f}'.format(epoch, batch_idx + 1, valid_loss))

        # print training/validation statistics
        print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f}'.format(
            epoch,
            train_loss,
            valid_loss
            ))

        ## save the model if validation loss has decreased
        if valid_loss <= valid_loss_min:
            print('Validation loss decreased ({:.6f} --> {:.6f}). Saving model ...'.format(
                valid_loss_min,
                valid_loss))
            torch.save(model.state_dict(), save_path)
            valid_loss_min = valid_loss

    # return trained model
    return model

epoch = 10

# train the model
model_scratch = train(epoch, loaders_scratch, model_scratch, optimizer_scratch,
                      criterion_scratch, use_cuda, 'model_scratch.pt')

# load the model that got the lowest validation loss
model_scratch.load_state_dict(torch.load('model_scratch.pt'))
```

result:

```
Epoch: 1 Batch: 40 Training Loss: 4.889728
Epoch: 1 Batch: 80 Training Loss: 4.887787
Epoch: 1 Batch: 120 Training Loss: 4.887685
Epoch: 1 Batch: 160 Training Loss: 4.885648
Epoch: 1 Batch: 200 Training Loss: 4.881847
Epoch: 1 Batch: 240 Training Loss: 4.873278
Epoch: 1 Batch: 280 Training Loss: 4.862270
Epoch: 1 Batch: 320 Training Loss: 4.854340
Epoch: 1 Batch: 40 Validation Loss: 4.712207
Epoch: 1 Training Loss: 4.851301 Validation Loss: 4.704982
Validation loss decreased (inf --> 4.704982). Saving model ...
Epoch: 2 Batch: 40 Training Loss: 4.730308
Epoch: 2 Batch: 80 Training Loss: 4.719476
Epoch: 2 Batch: 120 Training Loss: 4.701708
Epoch: 2 Batch: 160 Training Loss: 4.695746
Epoch: 2 Batch: 200 Training Loss: 4.692133
Epoch: 2 Batch: 240 Training Loss: 4.675904
Epoch: 2 Batch: 280 Training Loss: 4.663143
Epoch: 2 Batch: 320 Training Loss: 4.650386
Epoch: 2 Batch: 40 Validation Loss: 4.488307
Epoch: 2 Training Loss: 4.643542 Validation Loss: 4.494160
Validation loss decreased (4.704982 --> 4.494160). Saving model ...
Epoch: 3 Batch: 40 Training Loss: 4.474283
Epoch: 3 Batch: 80 Training Loss: 4.501595
Epoch: 3 Batch: 120 Training Loss: 4.477735
Epoch: 3 Batch: 160 Training Loss: 4.488136
Epoch: 3 Batch: 200 Training Loss: 4.490754
Epoch: 3 Batch: 240 Training Loss: 4.487989
Epoch: 3 Batch: 280 Training Loss: 4.490090
Epoch: 3 Batch: 320 Training Loss: 4.481546
Epoch: 3 Batch: 40 Validation Loss: 4.262285
Epoch: 3 Training Loss: 4.479444 Validation Loss: 4.275317
Validation loss decreased (4.494160 --> 4.275317). Saving model ...
Epoch: 4 Batch: 40 Training Loss: 4.402790
Epoch: 4 Batch: 80 Training Loss: 4.372338
Epoch: 4 Batch: 120 Training Loss: 4.365306
Epoch: 4 Batch: 160 Training Loss: 4.367325
Epoch: 4 Batch: 200 Training Loss: 4.374326
Epoch: 4 Batch: 240 Training Loss: 4.369847
Epoch: 4 Batch: 280 Training Loss: 4.365964
Epoch: 4 Batch: 320 Training Loss: 4.363493
Epoch: 4 Batch: 40 Validation Loss: 4.249445
Epoch: 4 Training Loss: 4.364571 Validation Loss: 4.248449
Validation loss decreased (4.275317 --> 4.248449). Saving model ...
Epoch: 5 Batch: 40 Training Loss: 4.229365
Epoch: 5 Batch: 80 Training Loss: 4.267400
Epoch: 5 Batch: 120 Training Loss: 4.269664
Epoch: 5 Batch: 160 Training Loss: 4.257591
Epoch: 5 Batch: 200 Training Loss: 4.261866
Epoch: 5 Batch: 240 Training Loss: 4.247512
Epoch: 5 Batch: 280 Training Loss: 4.239336
Epoch: 5 Batch: 320 Training Loss: 4.230827
Epoch: 5 Batch: 40 Validation Loss: 4.043582
Epoch: 5 Training Loss: 4.231559 Validation Loss: 4.039588
Validation loss decreased (4.248449 --> 4.039588). Saving model ...
Epoch: 6 Batch: 40 Training Loss: 4.180193
Epoch: 6 Batch: 80 Training Loss: 4.140314
Epoch: 6 Batch: 120 Training Loss: 4.153989
Epoch: 6 Batch: 160 Training Loss: 4.140887
Epoch: 6 Batch: 200 Training Loss: 4.151268
Epoch: 6 Batch: 240 Training Loss: 4.153749
Epoch: 6 Batch: 280 Training Loss: 4.153314
Epoch: 6 Batch: 320 Training Loss: 4.156451
Epoch: 6 Batch: 40 Validation Loss: 3.940857
Epoch: 6 Training Loss: 4.149529 Validation Loss: 3.945810
Validation loss decreased (4.039588 --> 3.945810). Saving model ...
Epoch: 7 Batch: 40 Training Loss: 4.060485
Epoch: 7 Batch: 80 Training Loss: 4.065772
Epoch: 7 Batch: 120 Training Loss: 4.056967
Epoch: 7 Batch: 160 Training Loss: 4.068470
Epoch: 7 Batch: 200 Training Loss: 4.076772
Epoch: 7 Batch: 240 Training Loss: 4.087616
Epoch: 7 Batch: 280 Training Loss: 4.074337
Epoch: 7 Batch: 320 Training Loss: 4.080192
Epoch: 7 Batch: 40 Validation Loss: 3.860693
Epoch: 7 Training Loss: 4.078263 Validation Loss: 3.884382
Validation loss decreased (3.945810 --> 3.884382). Saving model ...
Epoch: 8 Batch: 40 Training Loss: 3.960585
Epoch: 8 Batch: 80 Training Loss: 3.983979
Epoch: 8 Batch: 120 Training Loss: 3.965129
Epoch: 8 Batch: 160 Training Loss: 3.965021
Epoch: 8 Batch: 200 Training Loss: 3.965830
Epoch: 8 Batch: 240 Training Loss: 3.976013
Epoch: 8 Batch: 280 Training Loss: 3.975547
Epoch: 8 Batch: 320 Training Loss: 3.978744
Epoch: 8 Batch: 40 Validation Loss: 3.784086
Epoch: 8 Training Loss: 3.980776 Validation Loss: 3.779312
Validation loss decreased (3.884382 --> 3.779312). Saving model ...
Epoch: 9 Batch: 40 Training Loss: 3.917738
Epoch: 9 Batch: 80 Training Loss: 3.967938
Epoch: 9 Batch: 120 Training Loss: 3.934165
Epoch: 9 Batch: 160 Training Loss: 3.917138
Epoch: 9 Batch: 200 Training Loss: 3.910391
Epoch: 9 Batch: 240 Training Loss: 3.909857
Epoch: 9 Batch: 280 Training Loss: 3.907439
Epoch: 9 Batch: 320 Training Loss: 3.901893
Epoch: 9 Batch: 40 Validation Loss: 3.826410
Epoch: 9 Training Loss: 3.903304 Validation Loss: 3.824970
Epoch: 10 Batch: 40 Training Loss: 3.910845
Epoch: 10 Batch: 80 Training Loss: 3.910709
Epoch: 10 Batch: 120 Training Loss: 3.915924
Epoch: 10 Batch: 160 Training Loss: 3.900949
Epoch: 10 Batch: 200 Training Loss: 3.883792
Epoch: 10 Batch: 240 Training Loss: 3.882242
Epoch: 10 Batch: 280 Training Loss: 3.875963
Epoch: 10 Batch: 320 Training Loss: 3.858594
Epoch: 10 Batch: 40 Validation Loss: 3.638186
Epoch: 10 Training Loss: 3.861175 Validation Loss: 3.647465
Validation loss decreased (3.779312 --> 3.647465). Saving model ...
<All keys matched successfully>
```
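The training loop keeps `train_loss` and `valid_loss` as running means instead of storing every batch loss: the update `mean += (1 / (n + 1)) * (x - mean)` is the standard incremental mean. A small pure-Python check (the loss values are made up for illustration, and `running_mean` is a hypothetical helper) shows it agrees with the ordinary average. Note that it weights every batch equally, so a smaller final batch counts as much as a full one.

```python
def running_mean(values):
    """Average values incrementally, using the same update as the training loop."""
    mean = 0.0
    for i, v in enumerate(values):
        # after i + 1 values: mean_new = mean_old + (v - mean_old) / (i + 1)
        mean += (1 / (i + 1)) * (v - mean)
    return mean


batch_losses = [4.9, 4.8, 4.7, 4.6]  # illustrative values only
print(running_mean(batch_losses), sum(batch_losses) / len(batch_losses))
```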

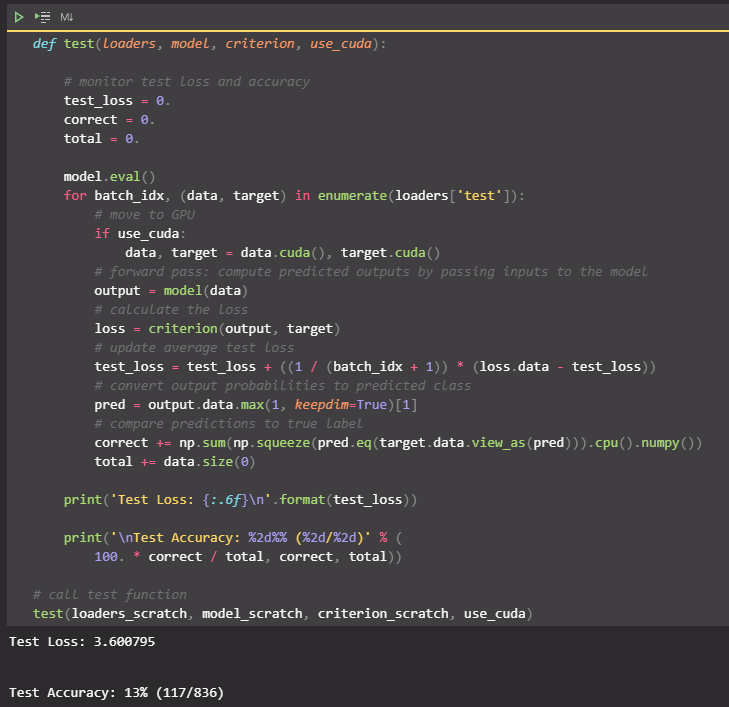

Test the model

Try out your model on the test dataset of dog images. Use the code cell below to calculate and print the test loss and accuracy. Make sure your test accuracy is greater than 10%.

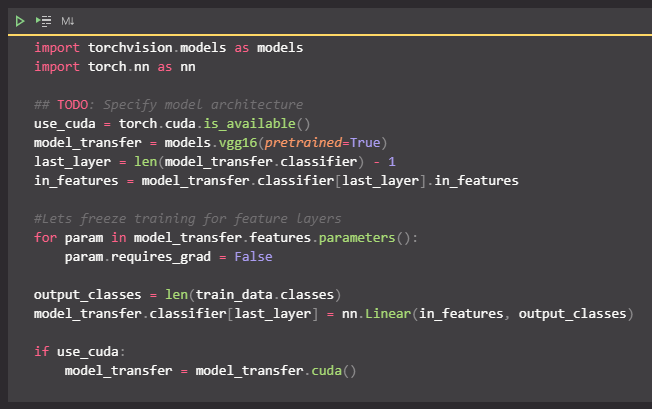

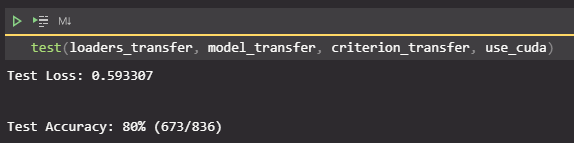

Step 4: use a CNN to distinguish dog breeds (using transfer learning)

Transfer learning reuses features that a network has already learned on a large dataset such as ImageNet, which can greatly reduce training time without sacrificing accuracy. In the following steps, you will use transfer learning to train your own CNN.

Model architecture

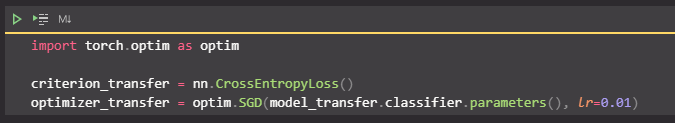

Specify loss function and optimizer

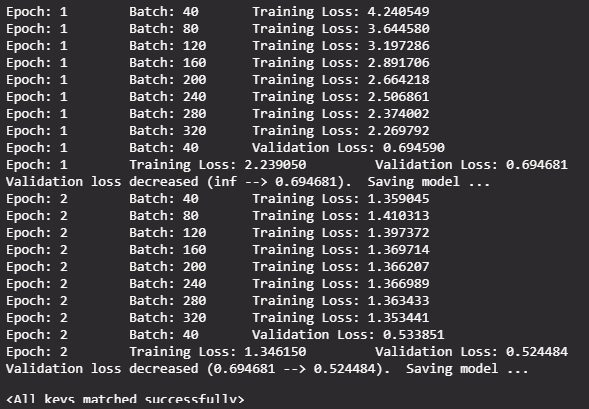

Train and validate the model

```python
# train the model
import numpy as np
import torch
from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

def train(n_epochs, loaders, model, optimizer, criterion, use_cuda, save_path):
    """returns trained model"""
    # initialize tracker for minimum validation loss
    valid_loss_min = np.inf  # np.Inf was removed in NumPy 2.0

    for epoch in range(1, n_epochs+1):
        # initialize variables to monitor training and validation loss
        train_loss = 0.0
        valid_loss = 0.0

        ###################
        # train the model #
        ###################
        model.train()
        for batch_idx, (data, target) in enumerate(loaders['train']):
            # move to GPU
            if use_cuda:
                data, target = data.cuda(), target.cuda()

            optimizer.zero_grad()
            ## find the loss and update the model parameters accordingly
            output = model(data)
            loss = criterion(output, target)
            loss.backward()
            optimizer.step()

            ## record the running average of the training loss
            ## (loss.item() returns a Python float, so no autograd history is kept)
            train_loss += ((1 / (batch_idx + 1)) * (loss.item() - train_loss))

            # print training statistics every 40 batches
            if (batch_idx+1) % 40 == 0:
                print('Epoch: {} \tBatch: {} \tTraining Loss: {:.6f}'.format(epoch, batch_idx + 1, train_loss))

        ######################
        # validate the model #
        ######################
        model.eval()
        with torch.no_grad():  # gradients are not needed for validation
            for batch_idx, (data, target) in enumerate(loaders['valid']):
                # move to GPU
                if use_cuda:
                    data, target = data.cuda(), target.cuda()
                ## update the average validation loss
                output = model(data)
                loss = criterion(output, target)
                valid_loss += ((1 / (batch_idx + 1)) * (loss.item() - valid_loss))

                # print validation statistics every 40 batches
                if (batch_idx+1) % 40 == 0:
                    print('Epoch: {} \tBatch: {} \tValidation Loss: {:.6f}'.format(epoch, batch_idx + 1, valid_loss))

        # print training/validation statistics
        print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f}'.format(
            epoch,
            train_loss,
            valid_loss
            ))

        ## save the model if validation loss has decreased
        if valid_loss <= valid_loss_min:
            print('Validation loss decreased ({:.6f} --> {:.6f}). Saving model ...'.format(
                valid_loss_min,
                valid_loss))
            torch.save(model.state_dict(), save_path)
            valid_loss_min = valid_loss

    # return trained model
    return model

n_epochs = 2

model_transfer = train(n_epochs, loaders_transfer, model_transfer, optimizer_transfer, criterion_transfer, use_cuda, 'model_transfer.pt')

# load the model that got the lowest validation loss
model_transfer.load_state_dict(torch.load('model_transfer.pt'))
```

Test the model

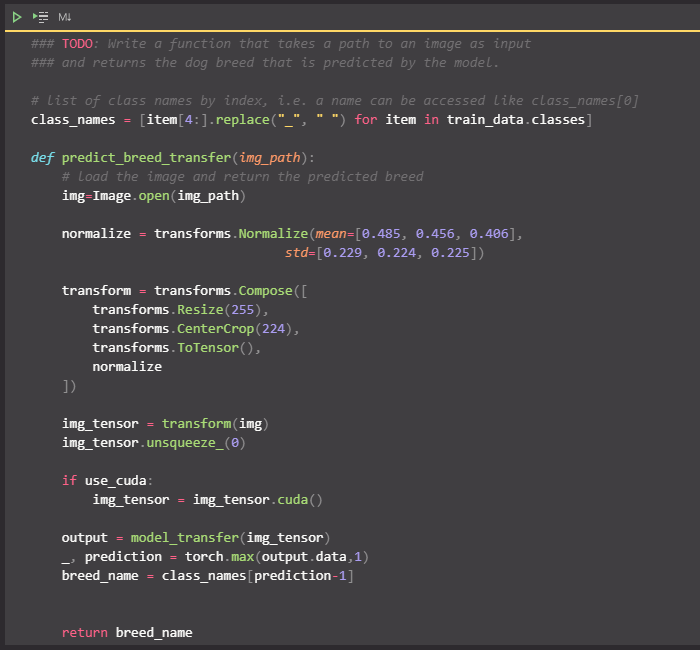

Predict dog breeds with the model

Write a function that takes an image path as input and returns the dog breed predicted by your model.

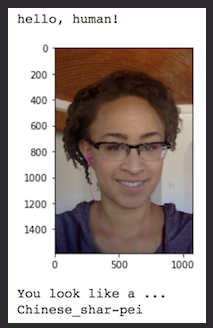

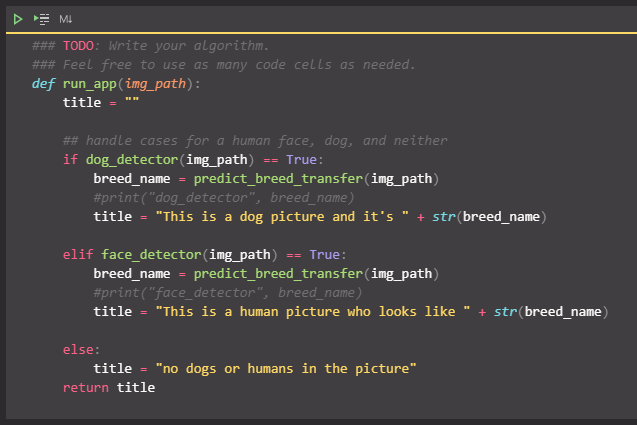

Step 5: complete your algorithm

Write an algorithm that accepts the file path of an image and first determines whether the image contains a human, a dog, or neither. Then:

- If a dog is detected in the image, return the predicted breed.

- If a human is detected in the image, return the most similar dog breed.

- If neither is detected in the image, output an error message.

You are welcome to write your own functions for detecting humans and dogs in images, but feel free to use the face_detector and dog_detector functions developed above. You must use your CNN from Step 4 to predict the dog breed.
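A minimal sketch of the decision logic. The detectors and the breed predictor are passed in as arguments (a hypothetical design, `run_app` included, so the logic can be tested without loading any model). The dog check runs first, so an image containing both a dog and a face is reported as a dog, and the messages copy the format of the results shown in Step 6.

```python
def run_app(img_path, dog_detector, face_detector, predict_breed):
    """Return a message describing the image at img_path.

    In the notebook, dog_detector and face_detector would be the functions from
    Steps 1 and 2, and predict_breed a one-argument wrapper around the Step 4 CNN.
    """
    if dog_detector(img_path):
        return f"This is a dog picture and it's {predict_breed(img_path)}"
    if face_detector(img_path):
        return f"This is a human picture who looks like {predict_breed(img_path)}"
    return "Error: neither a dog nor a human face was detected in the image."
```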

Example output of the algorithm is provided below, but you are free to design your own output!

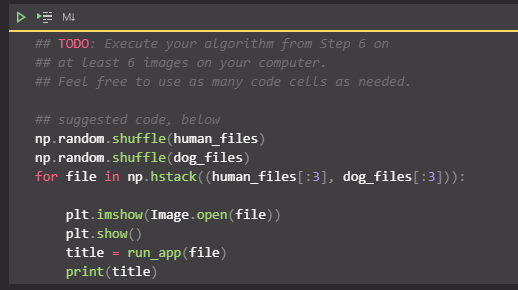

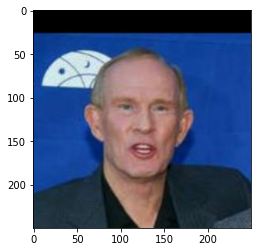

Step 6: test your algorithm

In this section, you will try out your new algorithm! What kind of dog does the algorithm think you look like? If you have a dog, does it predict your dog's breed accurately? If you have a cat, does it mistake your cat for a dog?

result:

This is a human picture who looks like Norwich terrier

This is a human picture who looks like Curly-coated retriever

This is a human picture who looks like Curly-coated retriever

This is a dog picture and it's English cocker spaniel

This is a dog picture and it's Bloodhound

This is a dog picture and it's Lowchen