Deploy zookeeper

Node 128 129 130

- Install jdk before deployment

Download link: zookeeper 3.4.14

[root@ceshi-128 local]# java -version
java version "1.8.0_221"
Java(TM) SE Runtime Environment (build 1.8.0_221-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

Download and configure

[root@ceshi-128 ~]# tar -xf zookeeper-3.4.14.tar.gz -C /usr/local/
[root@ceshi-128 ~]# cd /usr/local/
[root@ceshi-128 local]# ln -s /usr/local/zookeeper-3.4.14/ /usr/local/zookeeper
[root@ceshi-128 conf]# pwd
/usr/local/zookeeper/conf
[root@ceshi-128 conf]# cp zoo_sample.cfg zoo.cfg

Configuration parameters

[root@ceshi-128 conf]# vi zoo.cfg
# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
# the port at which the clients will connect
clientPort=2181
server.1=zk1.od.com:2888:3888
server.2=zk2.od.com:2888:3888
server.3=zk3.od.com:2888:3888
[root@ceshi-128 conf]# mkdir -p /data/zookeeper/data
[root@ceshi-128 conf]# mkdir -p /data/zookeeper/logs

Add DNS records to the od.com zone so that the three domain names above (zk1.od.com, zk2.od.com, zk3.od.com) resolve successfully.
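Before starting the cluster it can help to confirm that every server name in zoo.cfg actually resolves. The following is a minimal sketch, not part of the original procedure: the function names `zk_hosts` and `check_zk_dns` are made up for illustration, and `getent` is assumed to be installed on the node.

```shell
#!/bin/sh
# Print the host part of every "server.N=host:peerPort:electionPort" line
# in a zoo.cfg-style file (path given as $1).
zk_hosts() {
    sed -n 's/^server\.[0-9][0-9]*=\([^:]*\):.*$/\1/p' "$1"
}

# Report which of those hosts resolve. getent is an assumption here;
# swap in nslookup or dig if it is not available.
check_zk_dns() {
    for host in $(zk_hosts "$1"); do
        if getent hosts "$host" >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
        fi
    done
}
```

Running `check_zk_dns /usr/local/zookeeper/conf/zoo.cfg` on each node prints one line per server entry.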

Configure cluster myid

[root@ceshi-128 conf]# vi /data/zookeeper/data/myid
1
[root@ceshi-129 conf]# vi /data/zookeeper/data/myid
2
[root@ceshi-130 conf]# vi /data/zookeeper/data/myid
3
[root@ceshi-128 bin]# /usr/local/zookeeper/bin/zkServer.sh start
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[root@ceshi-128 bin]# netstat -tnlp | grep 2181
tcp 0 0 0.0.0.0:2181 0.0.0.0:* LISTEN 55304/java

Node 130 is the leader

[root@ceshi-130 bin]# ./zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Mode: leader
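The number written to myid must equal the N of the `server.N` line that describes the node itself. As a rough sketch, that number can be looked up from zoo.cfg instead of being typed by hand; `myid_for_host` is a hypothetical helper, and which zkN name belongs to which node is something you have to know from your DNS setup.

```shell
#!/bin/sh
# Print the N from the "server.N=<host>:..." line whose host equals $2.
# $1 = path to zoo.cfg, $2 = this node's ZooKeeper host name.
myid_for_host() {
    awk -v host="$2" '
        /^server\.[0-9]+=/ {
            split($0, kv, "=")        # kv[1] = "server.N", kv[2] = "host:2888:3888"
            split(kv[2], addr, ":")   # addr[1] = host
            if (addr[1] == host) {
                sub(/^server\./, "", kv[1])
                print kv[1]
            }
        }' "$1"
}
```

For example, `myid_for_host /usr/local/zookeeper/conf/zoo.cfg zk2.od.com > /data/zookeeper/data/myid` would write the id for the second server entry.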

Deploy jenkins to k8s cluster

Image source: Docker Hub

Node 132

[root@ceshi-132 ~]# docker pull jenkins/jenkins:v2.222.4
2.263.4: Pulling from jenkins/jenkins
9a0b0ce99936: Pull complete
db3b6004c61a: Pull complete
4e96cf3bdc20: Pull complete
e47bd954be8f: Pull complete
b2d9d6b1cd91: Pull complete
fa537a81cda1: Pull complete
Digest: sha256:64576b8bd0a7f5c8ca275f4926224c29e7aa3f3167923644ec1243cd23d611f3
Status: Downloaded newer image for jenkins/jenkins:v2.222.4
docker.io/jenkins/jenkins:v2.222.4
[root@ceshi-132 ~]# docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.222.4
[root@ceshi-132 ~]# docker push harbor.od.com/public/jenkins:v2.222.4
The push refers to repository [harbor.od.com/public/jenkins]
e0485b038afa: Pushed
2950fdd45d03: Pushed
6ce697717948: Pushed
911119b5424d: Pushed
b8f8aeff56a8: Pushed
97041f29baff: Pushed
v2.190.3: digest: sha256:64576b8bd0a7f5c8ca275f4926224c29e7aa3f3167923644ec1243cd23d611f3 size: 4087
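The pull, tag and push steps follow one naming rule: the upstream namespace is replaced by the registry host plus a Harbor project. A small sketch of that mapping is shown below; `harbor_ref` is a made-up helper name, and the default registry host is simply the one used in this document.

```shell
#!/bin/sh
# Map an upstream image reference to its name in a private registry project.
# $1 = upstream reference (e.g. jenkins/jenkins:v2.222.4)
# $2 = Harbor project     (e.g. public)
# $3 = registry host, defaulting to harbor.od.com (assumption taken from this document)
harbor_ref() {
    name_tag=${1##*/}    # strip everything up to the last "/"
    echo "${3:-harbor.od.com}/$2/$name_tag"
}
```

With it, tagging by name instead of by image ID could look like `docker tag jenkins/jenkins:v2.222.4 "$(harbor_ref jenkins/jenkins:v2.222.4 public)"`.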

Configure dockerfile

[root@ceshi-132 ~]# ssh-keygen -t rsa -b 2048 -C "liu_jiangxu@163.com" -N "" -f /root/.ssh/id_rsa

Generating public/private rsa key pair.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:jo0UhlYUk+xszsNIpHt64iUvasvyWSzTaZAE7Xhcfd4 liu_jiangxu@163.com

The key's randomart image is:

+---[RSA 2048]----+

|.. +=o |

| .. oo+.. |

|o.+X. |

|+*=+. |

+----[SHA256]-----+

[root@ceshi-132 ~]# mkdir -p /data/dockerfile/jenkins/

[root@ceshi-132 jenkins]# vi Dockerfile
# Base Jenkins image from the private registry
FROM harbor.od.com/public/jenkins:v2.222.4
# Run the following instructions as root
USER root
# Set the container time zone
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo 'Asia/Shanghai' >/etc/timezone
# Add the SSH private key to the container
ADD id_rsa /root/.ssh/id_rsa
# Add the login credentials for the private registry
ADD config.json /root/.docker/config.json
# Add the Docker client install script
ADD get-docker.sh /get-docker.sh
# Disable SSH host key checking, then install the Docker client
RUN echo "    StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\
    /get-docker.sh

Copy the private key to the current directory

[root@ceshi-132 jenkins]# cp ~/.ssh/id_rsa .

Copy the Docker configuration to the current directory

[root@ceshi-132 jenkins]# cp ~/.docker/config.json .

Download the Docker install script and save it as get-docker.sh

[root@ceshi-132 jenkins]# wget https://get.docker.com/ -O get-docker.sh

[root@ceshi-132 jenkins]# chmod +x get-docker.sh

[root@ceshi-132 jenkins]# vi get-docker.sh

#!/bin/sh

set -e

# This script is meant for quick & easy install via:

# $ curl -fsSL get.docker.com -o get-docker.sh

# $ sh get-docker.sh

#

# For test builds (ie. release candidates):

# $ curl -fsSL test.docker.com -o test-docker.sh

# $ sh test-docker.sh

#

# NOTE: Make sure to verify the contents of the script

# you downloaded matches the contents of install.sh

# located at https://github.com/docker/docker-install

# before executing.

#

# Git commit from https://github.com/docker/docker-install when

# the script was uploaded (Should only be modified by upload job):

SCRIPT_COMMIT_SHA=e749601

# This value will automatically get changed for:

# * edge

# * test

# * experimental

DEFAULT_CHANNEL_VALUE="edge"

if [ -z "$CHANNEL" ]; then

CHANNEL=$DEFAULT_CHANNEL_VALUE

fi

DEFAULT_DOWNLOAD_URL="https://download.docker.com"

if [ -z "$DOWNLOAD_URL" ]; then

DOWNLOAD_URL=$DEFAULT_DOWNLOAD_URL

fi

SUPPORT_MAP="

x86_64-centos-7

x86_64-fedora-26

x86_64-fedora-27

x86_64-debian-wheezy

x86_64-debian-jessie

x86_64-debian-stretch

x86_64-debian-buster

x86_64-ubuntu-trusty

x86_64-ubuntu-xenial

x86_64-ubuntu-artful

s390x-ubuntu-xenial

s390x-ubuntu-artful

ppc64le-ubuntu-xenial

ppc64le-ubuntu-artful

aarch64-ubuntu-xenial

aarch64-debian-jessie

aarch64-debian-stretch

aarch64-fedora-26

aarch64-fedora-27

aarch64-centos-7

armv6l-raspbian-jessie

armv7l-raspbian-jessie

armv6l-raspbian-stretch

armv7l-raspbian-stretch

armv7l-debian-jessie

armv7l-debian-stretch

armv7l-debian-buster

armv7l-ubuntu-trusty

armv7l-ubuntu-xenial

armv7l-ubuntu-artful

"

mirror=''

DRY_RUN=${DRY_RUN:-}

while [ $# -gt 0 ]; do

case "$1" in

--mirror)

mirror="$2"

shift

;;

--dry-run)

DRY_RUN=1

;;

--*)

echo "Illegal option $1"

;;

esac

shift $(( $# > 0 ? 1 : 0 ))

done

case "$mirror" in

Aliyun)

DOWNLOAD_URL="https://mirrors.aliyun.com/docker-ce"

;;

AzureChinaCloud)

DOWNLOAD_URL="https://mirror.azure.cn/docker-ce"

;;

esac

command_exists() {

command -v "$@" > /dev/null 2>&1

}

is_dry_run() {

if [ -z "$DRY_RUN" ]; then

return 1

else

return 0

fi

}

deprecation_notice() {

distro=$1

date=$2

echo

echo "DEPRECATION WARNING:"

echo " The distribution, $distro, will no longer be supported in this script as of $date."

echo " If you feel this is a mistake please submit an issue at https://github.com/docker/docker-install/issues/new"

echo

sleep 10

}

get_distribution() {

lsb_dist=""

# Every system that we officially support has /etc/os-release

if [ -r /etc/os-release ]; then

lsb_dist="$(. /etc/os-release && echo "$ID")"

fi

# Returning an empty string here should be alright since the

# case statements don't act unless you provide an actual value

echo "$lsb_dist"

}

add_debian_backport_repo() {

debian_version="$1"

backports="deb http://ftp.debian.org/debian $debian_version-backports main"

if ! grep -Fxq "$backports" /etc/apt/sources.list; then

(set -x; $sh_c "echo \"$backports\" >> /etc/apt/sources.list")

fi

}

echo_docker_as_nonroot() {

if is_dry_run; then

return

fi

if command_exists docker && [ -e /var/run/docker.sock ]; then

(

set -x

$sh_c 'docker version'

) || true

fi

your_user=your-user

[ "$user" != 'root' ] && your_user="$user"

# intentionally mixed spaces and tabs here -- tabs are stripped by "<<-EOF", spaces are kept in the output

echo "If you would like to use Docker as a non-root user, you should now consider"

echo "adding your user to the \"docker\" group with something like:"

echo

echo " sudo usermod -aG docker $your_user"

echo

echo "Remember that you will have to log out and back in for this to take effect!"

echo

echo "WARNING: Adding a user to the \"docker\" group will grant the ability to run"

echo " containers which can be used to obtain root privileges on the"

echo " docker host."

echo " Refer to https://docs.docker.com/engine/security/security/#docker-daemon-attack-surface"

echo " for more information."

}

# Check if this is a forked Linux distro

check_forked() {

# Check for lsb_release command existence, it usually exists in forked distros

if command_exists lsb_release; then

# Check if the `-u` option is supported

set +e

lsb_release -a -u > /dev/null 2>&1

lsb_release_exit_code=$?

set -e

# Check if the command has exited successfully, it means we're in a forked distro

if [ "$lsb_release_exit_code" = "0" ]; then

# Print info about current distro

cat <<-EOF

You're using '$lsb_dist' version '$dist_version'.

EOF

# Get the upstream release info

lsb_dist=$(lsb_release -a -u 2>&1 | tr '[:upper:]' '[:lower:]' | grep -E 'id' | cut -d ':' -f 2 | tr -d '[:space:]')

dist_version=$(lsb_release -a -u 2>&1 | tr '[:upper:]' '[:lower:]' | grep -E 'codename' | cut -d ':' -f 2 | tr -d '[:space:]')

# Print info about upstream distro

cat <<-EOF

Upstream release is '$lsb_dist' version '$dist_version'.

EOF

else

if [ -r /etc/debian_version ] && [ "$lsb_dist" != "ubuntu" ] && [ "$lsb_dist" != "raspbian" ]; then

if [ "$lsb_dist" = "osmc" ]; then

# OSMC runs Raspbian

lsb_dist=raspbian

else

# We're Debian and don't even know it!

lsb_dist=debian

fi

dist_version="$(sed 's/\/.*//' /etc/debian_version | sed 's/\..*//')"

case "$dist_version" in

9)

dist_version="stretch"

;;

8|'Kali Linux 2')

dist_version="jessie"

;;

7)

dist_version="wheezy"

;;

esac

fi

fi

fi

}

semverParse() {

major="${1%%.*}"

minor="${1#$major.}"

minor="${minor%%.*}"

patch="${1#$major.$minor.}"

patch="${patch%%[-.]*}"

}

ee_notice() {

echo

echo

echo " WARNING: $1 is now only supported by Docker EE"

echo " Check https://store.docker.com for information on Docker EE"

echo

echo

}

do_install() {

echo "# Executing docker install script, commit: $SCRIPT_COMMIT_SHA"

if command_exists docker; then

docker_version="$(docker -v | cut -d ' ' -f3 | cut -d ',' -f1)"

MAJOR_W=1

MINOR_W=10

semverParse "$docker_version"

shouldWarn=0

if [ "$major" -lt "$MAJOR_W" ]; then

shouldWarn=1

fi

if [ "$major" -le "$MAJOR_W" ] && [ "$minor" -lt "$MINOR_W" ]; then

shouldWarn=1

fi

cat >&2 <<-'EOF'

Warning: the "docker" command appears to already exist on this system.

If you already have Docker installed, this script can cause trouble, which is

why we're displaying this warning and provide the opportunity to cancel the

installation.

If you installed the current Docker package using this script and are using it

EOF

if [ $shouldWarn -eq 1 ]; then

cat >&2 <<-'EOF'

again to update Docker, we urge you to migrate your image store before upgrading

to v1.10+.

You can find instructions for this here:

https://github.com/docker/docker/wiki/Engine-v1.10.0-content-addressability-migration

EOF

else

cat >&2 <<-'EOF'

again to update Docker, you can safely ignore this message.

EOF

fi

cat >&2 <<-'EOF'

You may press Ctrl+C now to abort this script.

EOF

( set -x; sleep 20 )

fi

user="$(id -un 2>/dev/null || true)"

sh_c='sh -c'

if [ "$user" != 'root' ]; then

if command_exists sudo; then

sh_c='sudo -E sh -c'

elif command_exists su; then

sh_c='su -c'

else

cat >&2 <<-'EOF'

Error: this installer needs the ability to run commands as root.

We are unable to find either "sudo" or "su" available to make this happen.

EOF

exit 1

fi

fi

if is_dry_run; then

sh_c="echo"

fi

# perform some very rudimentary platform detection

lsb_dist=$( get_distribution )

lsb_dist="$(echo "$lsb_dist" | tr '[:upper:]' '[:lower:]')"

case "$lsb_dist" in

ubuntu)

if command_exists lsb_release; then

dist_version="$(lsb_release --codename | cut -f2)"

fi

if [ -z "$dist_version" ] && [ -r /etc/lsb-release ]; then

dist_version="$(. /etc/lsb-release && echo "$DISTRIB_CODENAME")"

fi

;;

debian|raspbian)

dist_version="$(sed 's/\/.*//' /etc/debian_version | sed 's/\..*//')"

case "$dist_version" in

9)

dist_version="stretch"

;;

8)

dist_version="jessie"

;;

7)

dist_version="wheezy"

;;

esac

;;

centos)

if [ -z "$dist_version" ] && [ -r /etc/os-release ]; then

dist_version="$(. /etc/os-release && echo "$VERSION_ID")"

fi

;;

rhel|ol|sles)

ee_notice "$lsb_dist"

exit 1

;;

*)

if command_exists lsb_release; then

dist_version="$(lsb_release --release | cut -f2)"

fi

if [ -z "$dist_version" ] && [ -r /etc/os-release ]; then

dist_version="$(. /etc/os-release && echo "$VERSION_ID")"

fi

;;

esac

# Check if this is a forked Linux distro

check_forked

# Check if we actually support this configuration

if ! echo "$SUPPORT_MAP" | grep "$(uname -m)-$lsb_dist-$dist_version" >/dev/null; then

cat >&2 <<-'EOF'

Either your platform is not easily detectable or is not supported by this

installer script.

Please visit the following URL for more detailed installation instructions:

https://docs.docker.com/engine/installation/

EOF

exit 1

fi

# Run setup for each distro accordingly

case "$lsb_dist" in

ubuntu|debian|raspbian)

pre_reqs="apt-transport-https ca-certificates curl"

if [ "$lsb_dist" = "debian" ]; then

if [ "$dist_version" = "wheezy" ]; then

add_debian_backport_repo "$dist_version"

fi

# libseccomp2 does not exist for debian jessie main repos for aarch64

if [ "$(uname -m)" = "aarch64" ] && [ "$dist_version" = "jessie" ]; then

add_debian_backport_repo "$dist_version"

fi

fi

# TODO: August 31, 2018 delete from here,

if [ "$lsb_dist" = "ubuntu" ] && [ "$dist_version" = "artful" ]; then

deprecation_notice "$lsb_dist $dist_version" "August 31, 2018"

fi

# TODO: August 31, 2018 delete to here,

if ! command -v gpg > /dev/null; then

pre_reqs="$pre_reqs gnupg"

fi

apt_repo="deb [arch=$(dpkg --print-architecture)] $DOWNLOAD_URL/linux/$lsb_dist $dist_version $CHANNEL"

(

if ! is_dry_run; then

set -x

fi

$sh_c 'apt-get update -qq >/dev/null'

$sh_c "apt-get install -y -qq $pre_reqs >/dev/null"

$sh_c "curl -fsSL \"$DOWNLOAD_URL/linux/$lsb_dist/gpg\" | apt-key add -qq - >/dev/null"

$sh_c "echo \"$apt_repo\" > /etc/apt/sources.list.d/docker.list"

if [ "$lsb_dist" = "debian" ] && [ "$dist_version" = "wheezy" ]; then

$sh_c 'sed -i "/deb-src.*download\.docker/d" /etc/apt/sources.list.d/docker.list'

fi

$sh_c 'apt-get update -qq >/dev/null'

)

pkg_version=""

if [ ! -z "$VERSION" ]; then

if is_dry_run; then

echo "# WARNING: VERSION pinning is not supported in DRY_RUN"

else

# Will work for incomplete versions IE (17.12), but may not actually grab the "latest" if in the test channel

pkg_pattern="$(echo "$VERSION" | sed "s/-ce-/~ce~.*/g" | sed "s/-/.*/g").*-0~$lsb_dist"

search_command="apt-cache madison 'docker-ce' | grep '$pkg_pattern' | head -1 | cut -d' ' -f 4"

pkg_version="$($sh_c "$search_command")"

echo "INFO: Searching repository for VERSION '$VERSION'"

echo "INFO: $search_command"

if [ -z "$pkg_version" ]; then

echo

echo "ERROR: '$VERSION' not found amongst apt-cache madison results"

echo

exit 1

fi

pkg_version="=$pkg_version"

fi

fi

(

if ! is_dry_run; then

set -x

fi

$sh_c "apt-get install -y -qq --no-install-recommends docker-ce$pkg_version >/dev/null"

)

echo_docker_as_nonroot

exit 0

;;

centos|fedora)

yum_repo="$DOWNLOAD_URL/linux/$lsb_dist/docker-ce.repo"

if [ "$lsb_dist" = "fedora" ]; then

if [ "$dist_version" -lt "26" ]; then

echo "Error: Only Fedora >=26 are supported"

exit 1

fi

pkg_manager="dnf"

config_manager="dnf config-manager"

enable_channel_flag="--set-enabled"

pre_reqs="dnf-plugins-core"

pkg_suffix="fc$dist_version"

else

pkg_manager="yum"

config_manager="yum-config-manager"

enable_channel_flag="--enable"

pre_reqs="yum-utils"

pkg_suffix="el"

fi

(

if ! is_dry_run; then

set -x

fi

$sh_c "$pkg_manager install -y -q $pre_reqs"

$sh_c "$config_manager --add-repo $yum_repo"

if [ "$CHANNEL" != "stable" ]; then

$sh_c "$config_manager $enable_channel_flag docker-ce-$CHANNEL"

fi

$sh_c "$pkg_manager makecache"

)

pkg_version=""

if [ ! -z "$VERSION" ]; then

if is_dry_run; then

echo "# WARNING: VERSION pinning is not supported in DRY_RUN"

else

pkg_pattern="$(echo "$VERSION" | sed "s/-ce-/\\\\.ce.*/g" | sed "s/-/.*/g").*$pkg_suffix"

search_command="$pkg_manager list --showduplicates 'docker-ce' | grep '$pkg_pattern' | tail -1 | awk '{print \$2}'"

pkg_version="$($sh_c "$search_command")"

echo "INFO: Searching repository for VERSION '$VERSION'"

echo "INFO: $search_command"

if [ -z "$pkg_version" ]; then

echo

echo "ERROR: '$VERSION' not found amongst $pkg_manager list results"

echo

exit 1

fi

# Cut out the epoch and prefix with a '-'

pkg_version="-$(echo "$pkg_version" | cut -d':' -f 2)"

fi

fi

(

if ! is_dry_run; then

set -x

fi

$sh_c "$pkg_manager install -y -q docker-ce$pkg_version"

)

echo_docker_as_nonroot

exit 0

;;

esac

exit 1

}

# wrapped up in a function so that we have some protection against only getting

# half the file during "curl | sh"

do_install

Build the image

- Create a new private project named infra in Harbor

[root@ceshi-132 jenkins]# docker build . -t harbor.od.com/infra/jenkins:v2.222.4
[root@ceshi-132 jenkins]# docker push harbor.od.com/infra/jenkins:v2.222.4

Create namespace

Node 130

[root@ceshi-130 bin]# kubectl create ns infra
namespace/infra created

Authorize the cluster to pull harbor private projects

[root@ceshi-130 bin]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=12345 -n infra
secret/harbor created

Here docker-registry is the secret type, harbor is the secret name, and infra is the namespace (it is also the name of the private Harbor project).
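A docker-registry secret stores a Docker config JSON whose `auth` field is the base64 encoding of `username:password`. The sketch below reproduces only that encoding step so the stored value can be recognised when inspecting the secret; `registry_auth` is an illustrative name, and the credentials in the example are the ones used in the command above.

```shell
#!/bin/sh
# Print the base64 "auth" value for a registry login.
# $1 = user name, $2 = password
registry_auth() {
    printf '%s:%s' "$1" "$2" | base64
}
```

To compare against the cluster, the stored JSON can be decoded with `kubectl get secret harbor -n infra -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d`. Note that base64 is an encoding, not encryption, so anyone who can read the secret can recover the password.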

Install nfs

Node 130 131 132

[root@ceshi-130 bin]# yum install nfs-utils -y

Configure nfs server

Node 132

[root@ceshi-132 ~]# vi /etc/exports
/data/nfsvolume 192.168.108.0/24(rw,no_root_squash)
[root@ceshi-132 ~]# mkdir -p /data/nfsvolume/jenkins_home
[root@ceshi-132 ~]# systemctl start nfs
[root@ceshi-132 ~]# systemctl enable nfs
Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

Create the Jenkins resource manifests

[root@ceshi-132 k8s-yaml]# mkdir jenkins
[root@ceshi-132 k8s-yaml]# cd jenkins/

[root@ceshi-132 jenkins]# cat dp.yaml
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: ceshi-132.host.com
          path: /data/nfsvolume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.od.com/infra/jenkins:v2.222.4
        # Pull from the remote registry only if the image is not present locally
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080      # container port
          protocol: TCP            # protocol
        env:                       # environment variables
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m # minimum and maximum heap size of 512 MB
        volumeMounts:              # mount paths
        - name: data
          mountPath: /var/jenkins_home
        # Mount the host's docker.sock into the Jenkins container, so docker
        # commands run inside the container act on the host's Docker daemon
        - name: docker
          mountPath: /run/docker.sock
      # Required to pull images from a private registry; without it the pull fails
      imagePullSecrets:
      - name: harbor               # name of the secret created above
      securityContext:
        runAsUser: 0               # run as root
  strategy:
    type: RollingUpdate            # rolling update (the default)
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  # Seconds to wait for the rollout to make progress before it is reported as failed
  progressDeadlineSeconds: 600

[root@ceshi-132 jenkins]# cat svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80           # Service port on the cluster network
    targetPort: 8080   # container port
  selector:
    app: jenkins

[root@ceshi-132 jenkins]# cat ingress.yaml
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

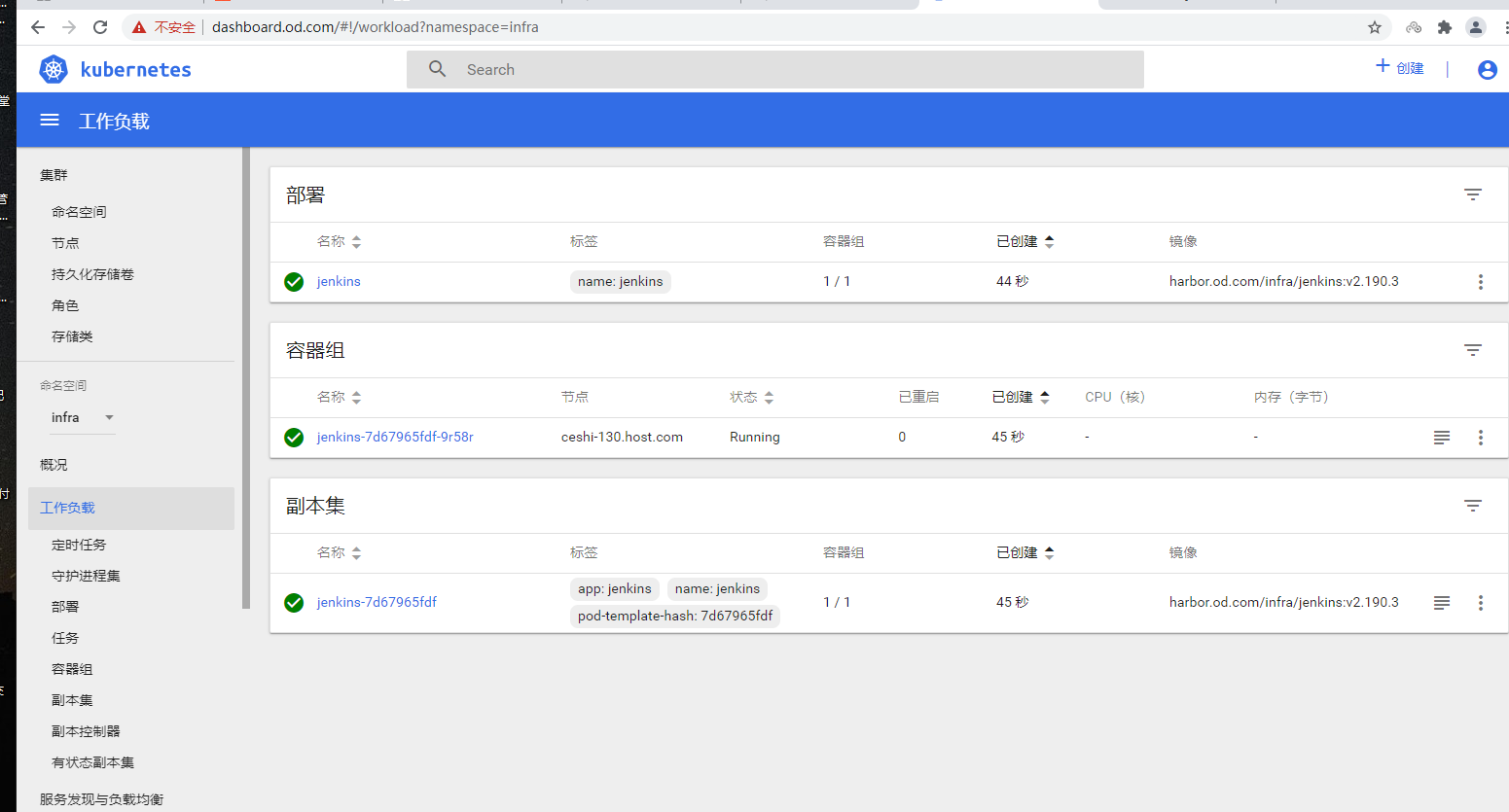

Apply the manifests

[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/dp.yaml
deployment.extensions/jenkins created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/svc.yaml
service/jenkins created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/ingress.yaml
ingress.extensions/jenkins created

Verify pod

Node 130 131

- For the git test below to succeed, the public key (/root/.ssh/id_rsa.pub) must have been uploaded to the SSH public keys in Gitee's security settings before the image was built. The SSH key pair lets the container communicate with Gitee over a secure connection.

[root@ceshi-130 ~]# kubectl get pod -n infra
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-698b4994c8-hm5wf   1/1     Running   0          5h21m
[root@ceshi-130 ~]# kubectl exec -it jenkins-698b4994c8-hm5wf bash -n infra
root@jenkins-698b4994c8-hm5wf:/# whoami
root
root@jenkins-698b4994c8-hm5wf:/# date
Wed Aug 18 16:33:53 CST 2021

Test SSH connectivity

root@jenkins-698b4994c8-hm5wf:/# ssh -i /root/.ssh/id_rsa -T git@gitee.com
Hi Liu Jiangxu! You've successfully authenticated, but GITEE.COM does not provide shell access.

Test connectivity to the Harbor registry

root@jenkins-698b4994c8-hm5wf:/# docker login harbor.od.com
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Login Succeeded

Deploy maven

Node 132

Download link: maven 3.6.2

Configure maven

[root@ceshi-132 ~]# mkdir /data/nfsvolume/jenkins_home/maven-3.6.2-8u242

[root@ceshi-132 ~]# tar xf apache-maven-3.6.2-bin.tar.gz

[root@ceshi-132 ~]# mv apache-maven-3.6.2/* /data/nfsvolume/jenkins_home/maven-3.6.2-8u242/

[root@ceshi-132 ~]# vi /data/nfsvolume/jenkins_home/maven-3.6.2-8u242/conf/settings.xml

<mirror>
  <id>nexus-aliyun</id>
  <mirrorOf>*</mirrorOf>
  <name>Nexus aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public</url>
</mirror>

Pull the base image required to run Java applications

[root@ceshi-132 ~]# docker pull stanleyws/jre8:8u112

[root@ceshi-132 ~]# docker tag fa3a085d6ef1 harbor.od.com/public/jre8:8u112

[root@ceshi-132 ~]# docker push harbor.od.com/public/jre8:8u112

The push refers to repository [harbor.od.com/public/jre8]

[root@ceshi-132 ~]# mkdir /data/dockerfile/jre8

[root@ceshi-132 jre8]# vi Dockerfile
# Base image from the private registry
FROM harbor.od.com/public/jre8:8u112
# Set the time zone
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo 'Asia/Shanghai' >/etc/timezone
# Add the monitoring configuration file
ADD config.yml /opt/prom/config.yml
# Add the agent that collects JVM information
ADD jmx_javaagent-0.3.1.jar /opt/prom/
# Working directory
WORKDIR /opt/project_dir
# Default startup script run by the container
ADD entrypoint.sh /entrypoint.sh
CMD ["/entrypoint.sh"]

[root@ceshi-132 jre8]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

[root@ceshi-132 jre8]# vi config.yml

---
rules:
  - pattern: '.*'

[root@ceshi-132 jre8]# vi entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}
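The entrypoint relies on the shell default-value expansion `${M_PORT:-"12346"}`: the exporter port is taken from the `M_PORT` environment variable and falls back to 12346 when it is unset or empty. The sketch below isolates that expansion; `agent_opt` is a hypothetical helper, and the bind address is passed in as an argument instead of being read from `hostname -i`.

```shell
#!/bin/sh
# Build the -javaagent option the way entrypoint.sh does.
# $1 = bind address (entrypoint.sh uses "$(hostname -i)")
# M_PORT is read from the environment and defaults to 12346.
agent_opt() {
    echo "-javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$1:${M_PORT:-12346}:/opt/prom/config.yml"
}
```

In a Deployment, setting an `env` entry named `M_PORT` would therefore change the port the exporter listens on without rebuilding the image.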

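Build the image (entrypoint.sh must be executable, because the Dockerfile runs it directly as CMD)

[root@ceshi-132 jre8]# chmod +x entrypoint.sh

[root@ceshi-132 jre8]# docker build . -t harbor.od.com/base/jre8:8u112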

Successfully built 7f36e75aac28

Successfully tagged harbor.od.com/base/jre8:8u112

[root@ceshi-132 jre8]# docker push harbor.od.com/base/jre8:8u112

8d4d1ab5ff74: Mounted from public/jre8

8u112: digest: sha256:72d4bd870605ae17f9f23e5cb9c453c34906d7ff86ce97c0c2ef89b68c1dcb6f size: 2405

Install the Jenkins plug-in

Blue Ocean

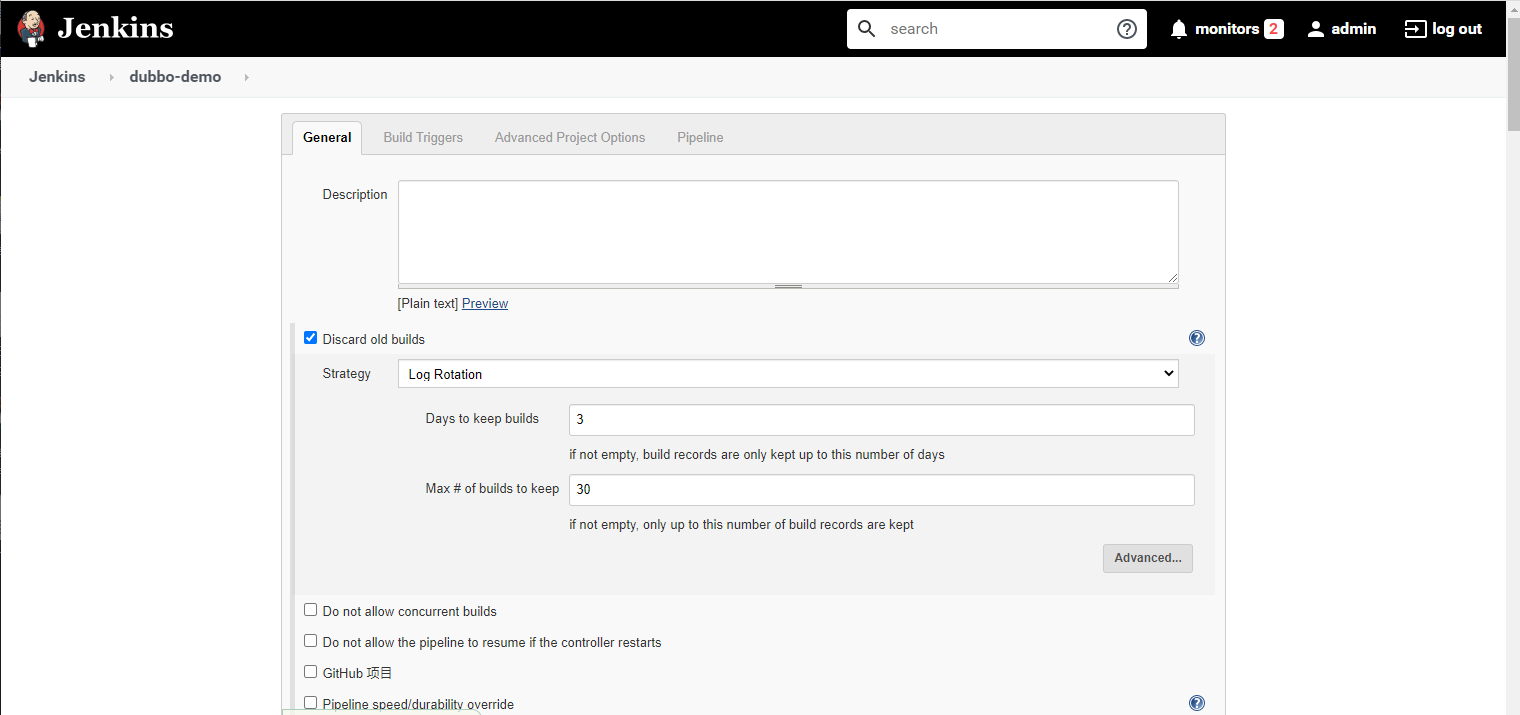

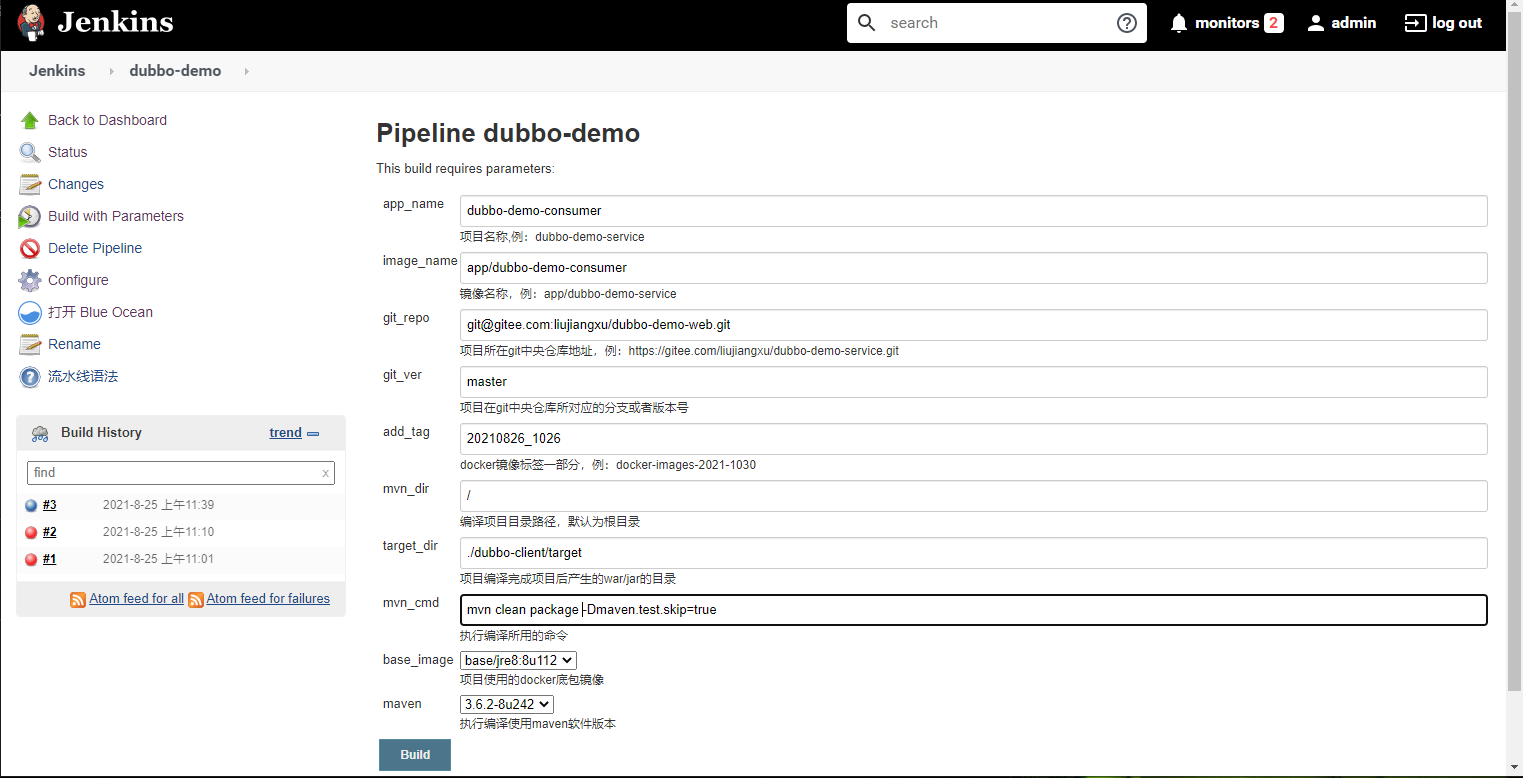

Create a new Jenkins pipeline project

New Item>pipeline>Configure>Discard old builds

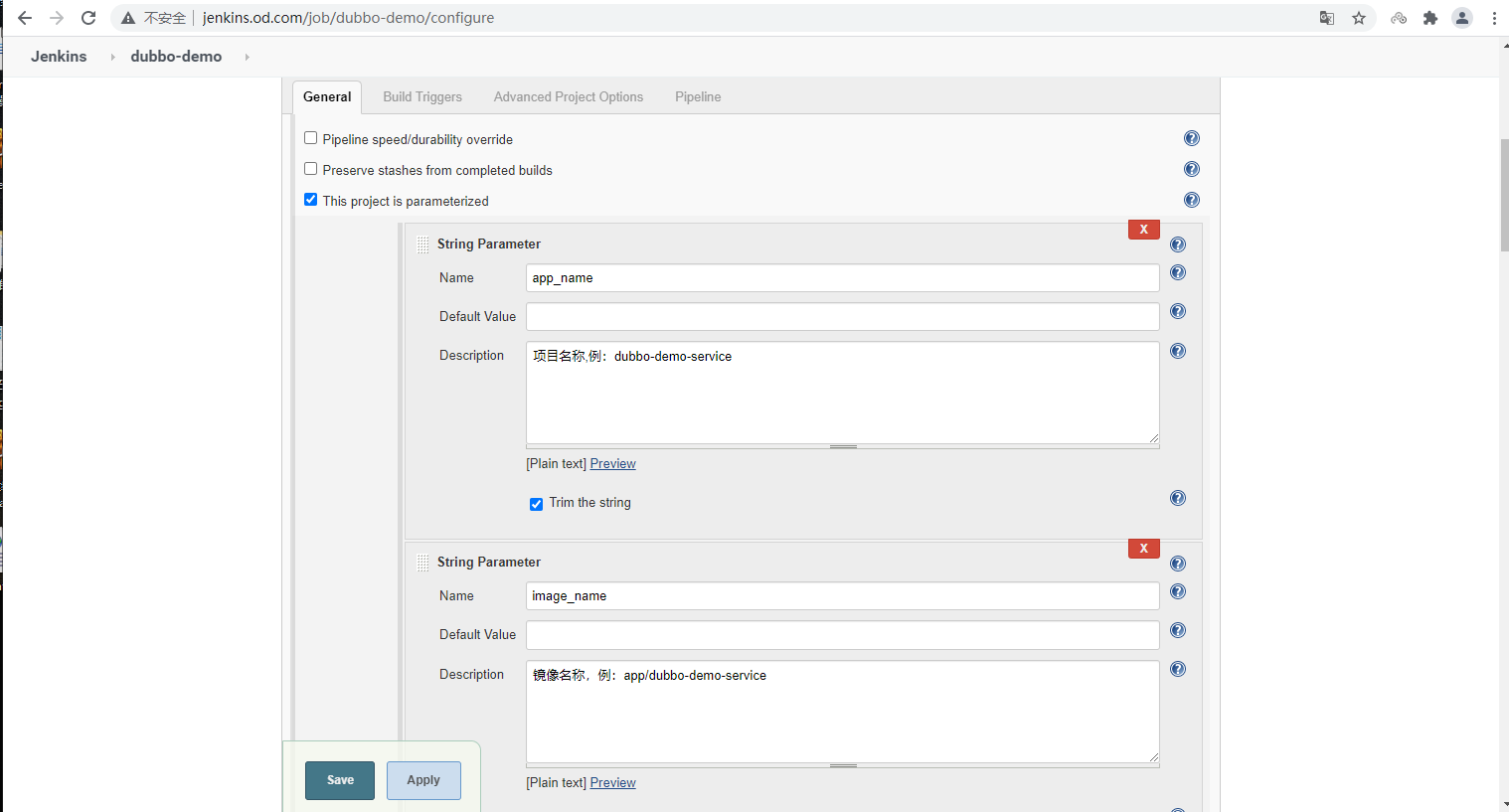

New Item>pipeline>Configure>This project is parameterized

- Add Parameter -> String Parameter
  Name: app_name
  Default Value:
  Description: project name

- Add Parameter -> String Parameter
  Name: image_name
  Default Value:
  Description: image name

- Add Parameter -> String Parameter
  Name: git_repo
  Default Value:
  Description: address of the central git repository that hosts the project

- Add Parameter -> String Parameter
  Name: git_ver
  Default Value:
  Description: branch or version of the project in the central git repository

- Add Parameter -> String Parameter
  Name: add_tag
  Default Value:
  Description: time part of the docker image tag

- Add Parameter -> String Parameter
  Name: mvn_dir
  Default Value: ./
  Description: directory in which the project is compiled

- Add Parameter -> String Parameter
  Name: target_dir
  Default Value: ./target
  Description: directory of the war/jar generated after the project is compiled

- Add Parameter -> String Parameter
  Name: mvn_cmd
  Default Value: mvn clean package -Dmaven.test.skip=true
  Description: command used for compilation

- Add Parameter -> Choice Parameter
  Name: base_image
  Choices:
  base/jre7:7u80
  base/jre8:8u112
  Description: base image used by the project

- Add Parameter -> Choice Parameter
  Name: maven
  Choices:
  3.6.2-8u242
  3.6.0-8u181
  3.2.5-6u025
  Description: maven version used for compilation

Each maven choice must match a directory named maven-<choice> under /var/jenkins_home, because the pipeline calls /var/jenkins_home/maven-${params.maven}/bin/. The directory created earlier in this document is maven-3.6.2-8u242, so 3.6.2-8u242 is listed first.

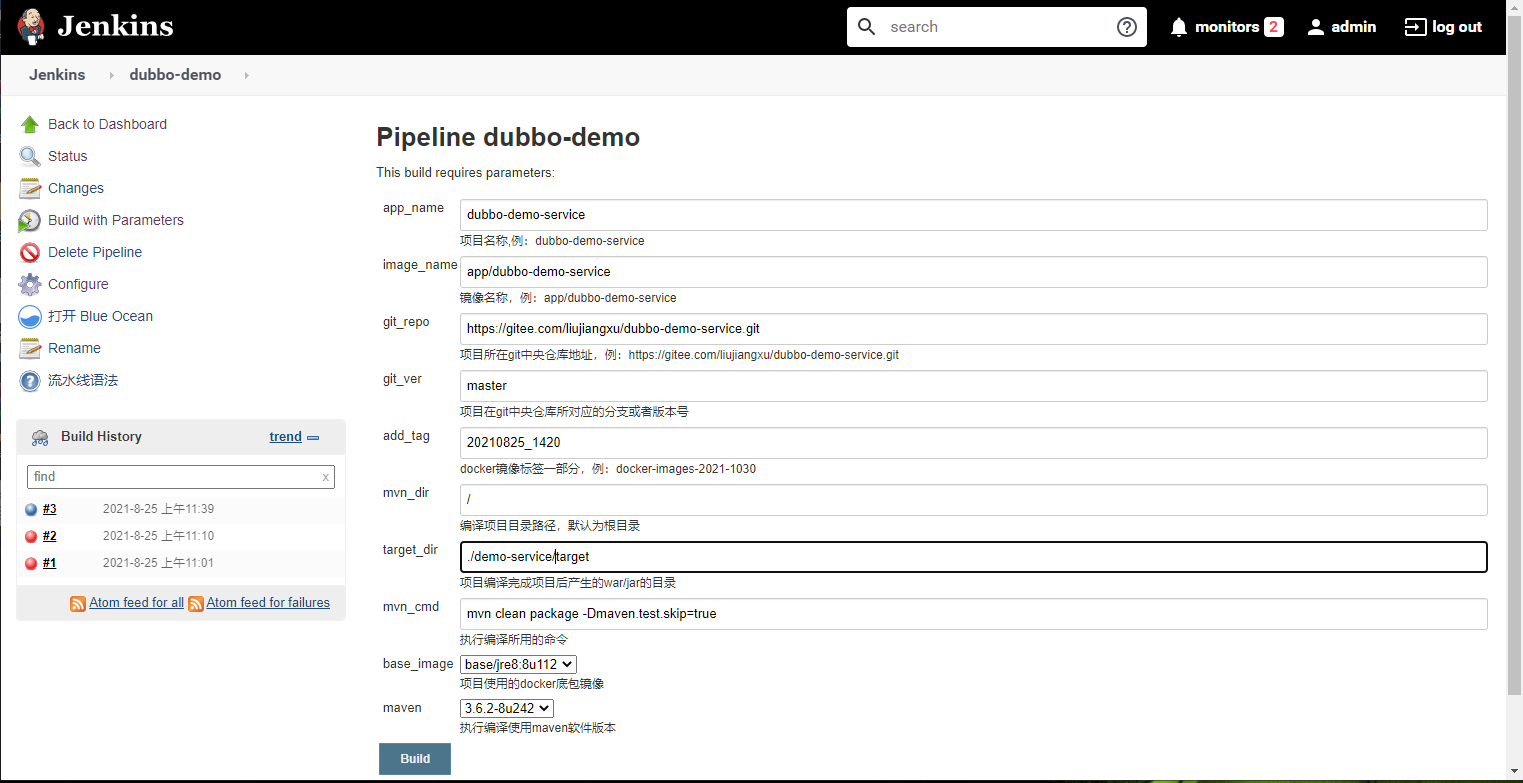

Pipeline script

pipeline {
  agent any
  stages {
    stage('pull') { //get project code from repo
      steps {
        sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
      }
    }
    stage('build') { //exec mvn cmd
      steps {
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
      }
    }
    stage('package') { //move jar file into project_dir
      steps {
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"
      }
    }
    stage('image') { //build image and push to registry
      steps {
        writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}
ADD ${params.target_dir}/project_dir /opt/project_dir"""
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
      }
    }
  }
}
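The image stage names the result `harbor.od.com/<image_name>:<git_ver>_<add_tag>`. The sketch below shows how the three build parameters combine and one way to generate a time-based `add_tag`; both function names are illustrative, and the `date` format is an assumption chosen to match tags such as master_20210825_1139 used later in this document.

```shell
#!/bin/sh
# Compose the image reference the pipeline builds and pushes.
# $1 = image_name (e.g. app/dubbo-demo-service)
# $2 = git_ver    (e.g. master)
# $3 = add_tag    (e.g. 20210825_1139)
image_ref() {
    echo "harbor.od.com/${1}:${2}_${3}"
}

# Generate an add_tag from the current time as YYYYMMDD_HHMM (assumed format).
new_add_tag() {
    date +%Y%m%d_%H%M
}
```

For example, `image_ref app/dubbo-demo-service master "$(new_add_tag)"` prints the reference that would then be entered as the image in the Deployment manifest.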

Build project

Edit resource configuration list

Node 132

[root@ceshi-132 ~]# cd /data/k8s-yaml/

[root@ceshi-132 k8s-yaml]# mkdir dubbo-demo-service

[root@ceshi-132 k8s-yaml]# cd dubbo-demo-service/

[root@ceshi-132 dubbo-demo-service]# vi dp.yaml

# Resource kind: Pod, Deployment, StatefulSet, etc.
kind: Deployment
# API version
apiVersion: extensions/v1beta1
# Metadata such as the resource name and namespace. Namespaces group
# resources; a namespace named "default" exists out of the box.
metadata:
  name: dubbo-demo-service          # name
  namespace: app                    # namespace
  labels:                           # labels
    name: dubbo-demo-service
# Desired state of the Deployment
spec:
  replicas: 1                       # number of replicas
  selector:                         # selects which pods this controller manages
    matchLabels:                    # label matching rules
      name: dubbo-demo-service
  # Pod template, used whenever a replica has to be created
  template:
    metadata:                       # metadata
      labels:                       # labels
        app: dubbo-demo-service     # custom label
        name: dubbo-demo-service
    # Desired state of the pod
    spec:
      containers:                   # containers to create
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:master_20210825_1139   # image address
        ports:                      # port settings
        - containerPort: 20880      # expose port 20880
          protocol: TCP             # protocol
        env:                        # environment variables
        - name: JAR_BALL            # JAR_BALL=dubbo-server.jar
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent   # prefer the local image; pull only if it is missing
      imagePullSecrets:             # required for images in a private registry
      - name: harbor                # name of the secret created for the registry
      restartPolicy: Always         # restart the container whenever it stops
      # Grace period for shutdown: a terminating pod gets 30 seconds (the
      # default) to exit before it is killed. Tune this to the actual workload.
      terminationGracePeriodSeconds: 30
      securityContext:              # run all processes in the container with user ID 0
        runAsUser: 0                # root
      schedulerName: default-scheduler   # use the default scheduler
  strategy:                         # how existing pods are replaced by new ones
    type: RollingUpdate
    rollingUpdate:                  # only applies when type is RollingUpdate
      maxUnavailable: 1             # maximum number of pods that may be unavailable during the update
      maxSurge: 1                   # maximum number of extra pods created during the update
  revisionHistoryLimit: 7           # number of old revisions to keep
  progressDeadlineSeconds: 600      # seconds to wait for progress before the rollout is reported as failed

Create the namespace with kubectl

[root@ceshi-130 ~]# kubectl create namespace app
namespace/app created

Grant the namespace permission to pull images from the private registry

[root@ceshi-130 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=12345 -n app
secret/harbor created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/dp.yaml
deployment.extensions/dubbo-demo-service created
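The rolling update settings in dp.yaml translate into two bounds: at least `replicas - maxUnavailable` pods stay available, and at most `replicas + maxSurge` pods exist at once. The arithmetic is sketched below with a hypothetical `rollout_bounds` helper; it handles absolute numbers only, not percentage values.

```shell
#!/bin/sh
# Print the pod-count bounds of a rolling update.
# $1 = replicas, $2 = maxUnavailable, $3 = maxSurge (absolute numbers only)
rollout_bounds() {
    min_available=$(( $1 - $2 ))
    if [ "$min_available" -lt 0 ]; then
        min_available=0
    fi
    max_total=$(( $1 + $3 ))
    echo "min_available=$min_available max_total=$max_total"
}
```

With the values used here (1 replica, maxUnavailable 1, maxSurge 1) the lower bound is zero, so the service may be briefly unavailable during an update; raising replicas or lowering maxUnavailable avoids that.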

Dubbo monitor tool

Download link: dubbo-monitor-master

Node 132

[root@ceshi-132 ~]# unzip dubbo-monitor-master.zip
[root@ceshi-132 conf]# vi /root/dubbo-monitor/dubbo-monitor-simple/conf/dubbo_origin.properties
dubbo.container=log4j,spring,registry,jetty
dubbo.application.name=simple-monitor
dubbo.application.owner=liujiangxu
dubbo.registry.address=zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181
dubbo.protocol.port=20880
dubbo.jetty.port=8080
dubbo.jetty.directory=/dubbo-monitor-simple/monitor
dubbo.charts.directory=/dubbo-monitor-simple/charts
dubbo.statistics.directory=/dubbo-monitor-simple/statistics
dubbo.log4j.file=logs/dubbo-monitor-simple.log
dubbo.log4j.level=WARN

Build the image

[root@ceshi-132 ~]# cp -a dubbo-monitor /data/dockerfile/
[root@ceshi-132 ~]# cd /data/dockerfile/dubbo-monitor
[root@ceshi-132 dubbo-monitor]# docker build . -t harbor.od.com/infra/dubbo-monitor:latest
[root@ceshi-132 dubbo-monitor]# docker push harbor.od.com/infra/dubbo-monitor:latest
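The `dubbo.registry.address` value packs all three ZooKeeper nodes into one URL: the first node follows the scheme and the others are listed after `?backup=`. As a sketch for checking the value, the hypothetical `registry_endpoints` function below splits such a URL into one endpoint per line; it assumes exactly the URL shape shown in the properties file.

```shell
#!/bin/sh
# Split a Dubbo registry URL of the form
#   zookeeper://host1:port?backup=host2:port,host3:port
# into one "host:port" per line.
registry_endpoints() {
    echo "$1" | sed -e 's|^[a-z]*://||' -e 's|?backup=|,|' | tr ',' '\n'
}
```

Each printed endpoint should be reachable from the pod network, which can be checked one by one with a tool of your choice.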

Prepare the k8s resource manifests

[root@ceshi-132 dubbo-monitor]# cat dp.yaml
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.od.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

[root@ceshi-132 dubbo-monitor]# cat svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080        # port on the Service's cluster IP; the cluster IP serves only this Service, so any port can be chosen
    targetPort: 8080  # port the container listens on
  selector:
    app: dubbo-monitor
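Inside the cluster, clients reach the Service on its port value and the traffic is forwarded to targetPort on a matching pod. The sketch below prints the in-cluster URL that results from the name, namespace and port in svc.yaml. It assumes the cluster domain is cluster.local, the usual default, which this walkthrough does not state; service_url is a helper invented for the example.

```shell
# Cluster DNS domain. ASSUMPTION: cluster.local is the common default;
# override CLUSTER_DOMAIN if the cluster was set up with a different one.
CLUSTER_DOMAIN=${CLUSTER_DOMAIN:-cluster.local}

# Print the in-cluster HTTP URL of a Service: name, namespace, Service port.
# service_url is a hypothetical helper, not a kubectl feature.
service_url() {
  name=$1
  namespace=$2
  port=$3
  printf 'http://%s.%s.svc.%s:%s\n' "$name" "$namespace" "$CLUSTER_DOMAIN" "$port"
}

# Values taken from svc.yaml above.
service_url dubbo-monitor infra 8080
```

The port in the URL is the Service port, not the container port; the two only happen to be equal in this manifest.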

[root@ceshi-132 dubbo-monitor]# cat ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  rules:
  - host: dubbo-monitor.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-monitor
          servicePort: 8080  # must match the port value in svc.yaml
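Because servicePort in ingress.yaml has to equal port in svc.yaml, a quick consistency check before applying the files can catch a typo. What follows is a rough sketch that relies on plain text matching with awk rather than a real YAML parser, so it only suits small, single-document manifests like the ones here. Both function names are made up for the example.

```shell
# Print the first value of KEY found in a YAML FILE, without inline comments.
# Plain text matching only; this is not a YAML parser.
yaml_first_value() {
  awk -v key="$1" '
    {
      line = $0
      sub(/^[ \t-]+/, "", line)          # drop indentation and list dashes
    }
    index(line, key ":") == 1 {
      sub(/^[^:]*:[ \t]*/, "", line)     # drop the key and the colon
      sub(/[ \t]+#.*$/, "", line)        # drop a trailing comment
      sub(/[ \t]+$/, "", line)           # drop trailing whitespace
      print line
      exit
    }
  ' "$2"
}

# Succeed only when the Ingress servicePort equals the Service port.
# Arguments: path to svc.yaml, path to ingress.yaml.
check_ingress_port() {
  svc_port=$(yaml_first_value port "$1")
  ing_port=$(yaml_first_value servicePort "$2")
  if [ -n "$svc_port" ] && [ "$svc_port" = "$ing_port" ]; then
    echo "ok: servicePort $ing_port matches Service port $svc_port"
  else
    echo "mismatch: Service port='$svc_port', Ingress servicePort='$ing_port'" >&2
    return 1
  fi
}
```

Run from the manifest directory it would be called as check_ingress_port svc.yaml ingress.yaml; a non-zero exit status signals a mismatch.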

Add a DNS record so that the host name used in the ingress configuration (dubbo-monitor.od.com) resolves.
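Before applying the manifests it can help to confirm that the ingress host name really resolves from the node. A small sketch using getent, which looks names up through the system's normal resolver configuration, might look like this; resolves and check_hosts are names chosen for this example.

```shell
# Return success when HOST resolves through the system resolver.
resolves() {
  getent hosts "$1" >/dev/null 2>&1
}

# Report every host name given and return non-zero if any fails to resolve.
check_hosts() {
  rc=0
  for host in "$@"; do
    if resolves "$host"; then
      echo "ok   $host"
    else
      echo "FAIL $host"
      rc=1
    fi
  done
  return "$rc"
}
```

On a node of this cluster the call would be check_hosts dubbo-monitor.od.com; further host names can be appended to check them in the same run.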

[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml
deployment.extensions/dubbo-monitor created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/svc.yaml
service/dubbo-monitor created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/ingress.yaml
ingress.extensions/dubbo-monitor created
[root@ceshi-130 ~]# kubectl get pods -n infra -o wide
NAME                             READY   STATUS    RESTARTS   AGE     IP           NODE                 NOMINATED NODE   READINESS GATES
dubbo-monitor-5bb45c8b97-fnwt7   1/1     Running   0          6m59s   172.7.21.9   ceshi-130.host.com   <none>           <none>
jenkins-698b4994c8-hm5wf         1/1     Running   0          7d6h    172.7.22.9   ceshi-131.host.com   <none>           <none>
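Instead of polling kubectl get pods by hand, the rollout can be awaited with kubectl rollout status, which blocks until the Deployment is ready or the timeout expires. The wrapper below is only a sketch: wait_for_rollout and the KUBECTL variable are conventions of this example, and the 600 second default simply mirrors progressDeadlineSeconds in dp.yaml.

```shell
# Command used to talk to the cluster. Setting KUBECTL=echo gives a dry run
# that only prints the arguments (a convention of this sketch, not of kubectl).
KUBECTL=${KUBECTL:-kubectl}

# Wait until the Deployment has rolled out or the timeout (seconds) expires.
# Arguments: deployment name, namespace, optional timeout (default 600).
wait_for_rollout() {
  name=$1
  namespace=$2
  timeout=${3:-600}
  "$KUBECTL" rollout status "deployment/$name" -n "$namespace" --timeout="${timeout}s"
}
```

For the monitor deployed above the call would be wait_for_rollout dubbo-monitor infra; the exit status is non-zero if the rollout does not finish in time.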

Deliver the dubbo service consumer

Edit the resource manifests

Node 132

[root@ceshi-132 ~]# cd /data/k8s-yaml/

[root@ceshi-132 k8s-yaml]# mkdir dubbo-demo-web

[root@ceshi-132 k8s-yaml]# cd dubbo-demo-web/

[root@ceshi-132 dubbo-demo-web]# vi dp.yaml

kind: Deployment  # resource type, e.g. Pod, Deployment, StatefulSet
apiVersion: extensions/v1beta1  # API version of the resource
metadata:  # metadata such as the resource name and namespace; namespaces group resources, and a namespace called default exists out of the box
  name: dubbo-demo-consumer  # resource name
  namespace: app  # namespace
  labels:  # labels on the Deployment
    name: dubbo-demo-consumer
spec:  # desired state of the Deployment
  replicas: 1  # number of pod replicas
  selector:  # label selector that decides which pods this controller manages
    matchLabels:  # labels a pod must carry to match
      name: dubbo-demo-consumer
  template:  # pod template; new pods are created from it whenever fewer than the desired number exist
    metadata:  # pod metadata
      labels:  # pod labels
        app: dubbo-demo-consumer  # custom label; svc.yaml selects on it
        name: dubbo-demo-consumer  # must match selector.matchLabels
    spec:  # desired state of the pod
      containers:  # containers to run
      - name: dubbo-demo-consumer  # container name
        image: harbor.od.com/app/dubbo-demo-consumer:master_20210826_1040  # image address
        ports:  # ports the container exposes
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:  # environment variables
        - name: JAR_BALL  # sets JAR_BALL=dubbo-client.jar
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent  # use the local image if present; pull only when it is missing
      imagePullSecrets:  # registry credentials; required for a private registry
      - name: harbor  # name given to the secret when it was created
      restartPolicy: Always  # always restart a container that stops
      terminationGracePeriodSeconds: 30  # seconds a pod is given to shut down after SIGTERM before it is killed (default 30); tune to the workload
      securityContext:  # security settings for all containers in the pod
        runAsUser: 0  # run processes as UID 0 (root)
      schedulerName: default-scheduler  # scheduler that assigns the pod to a node; this is the default one
  strategy:  # how existing pods are replaced with new ones
    type: RollingUpdate
    rollingUpdate:  # rolling-update parameters; only used when type is RollingUpdate
      maxUnavailable: 1  # maximum number of pods that may be unavailable during the update
      maxSurge: 1  # maximum number of pods that may be created above the desired replica count
  revisionHistoryLimit: 7  # number of old revisions kept for rollback
  progressDeadlineSeconds: 600  # seconds a rollout may go without progress before it is reported as failed
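The effect of maxUnavailable and maxSurge is easier to see as pod counts: during a rolling update at least replicas minus maxUnavailable pods stay available, and at most replicas plus maxSurge pods exist at once. The sketch below does that arithmetic for absolute values only (percentages are left out); rolling_bounds is a helper invented for this example.

```shell
# Print the pod-count bounds a rolling update has to respect.
# Arguments: replicas, maxUnavailable, maxSurge (absolute numbers only).
rolling_bounds() {
  replicas=$1
  max_unavailable=$2
  max_surge=$3
  min_available=$((replicas - max_unavailable))
  if [ "$min_available" -lt 0 ]; then
    min_available=0
  fi
  max_total=$((replicas + max_surge))
  echo "min_available=$min_available max_total=$max_total"
}

# Values from dp.yaml above.
# prints: min_available=0 max_total=2
rolling_bounds 1 1 1

# The same strategy with three replicas.
# prints: min_available=2 max_total=4
rolling_bounds 3 1 1
```

With a single replica and maxUnavailable set to 1, the lower bound is zero, so the update is allowed to leave the service briefly without an available pod; more replicas or a maxUnavailable of 0 would avoid that.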

[root@ceshi-132 dubbo-demo-web]# vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-demo-consumer

[root@ceshi-132 dubbo-demo-web]# vi ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  rules:
  - host: demo.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 8080

[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-web/dp.yaml
deployment.extensions/dubbo-demo-consumer created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-web/svc.yaml
service/dubbo-demo-consumer created
[root@ceshi-130 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-web/ingress.yaml
ingress.extensions/dubbo-demo-consumer created
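Every application in this walkthrough is delivered with the same three files, so the three apply commands can be folded into one loop. This is a sketch under the assumption that each directory on k8s-yaml.od.com contains dp.yaml, svc.yaml and ingress.yaml, as the two examples here do; apply_manifests, KUBECTL and MANIFEST_BASE are names introduced for the example.

```shell
# Command used to talk to the cluster; KUBECTL=echo prints instead of applying.
KUBECTL=${KUBECTL:-kubectl}
# Base URL of the manifest server used throughout this walkthrough.
MANIFEST_BASE=${MANIFEST_BASE:-http://k8s-yaml.od.com}

# Apply dp.yaml, svc.yaml and ingress.yaml from one application directory,
# stopping at the first failure. Argument: directory name on the server.
apply_manifests() {
  app_dir=$1
  for file in dp.yaml svc.yaml ingress.yaml; do
    "$KUBECTL" apply -f "$MANIFEST_BASE/$app_dir/$file" || return 1
  done
}
```

The consumer above would then be delivered with apply_manifests dubbo-demo-web, and the monitor with apply_manifests dubbo-monitor.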