I recently needed a Kafka service running locally, and pulling Docker images turned out to be the most convenient route. This post records the setup process.

1. Install virtual machine

I prefer VirtualBox, which feels very lightweight. On it I installed the minimal version of CentOS 7.3. I used to be fond of the Linux desktop, but virtual machine resources are limited, so a minimal install is the better choice.

2. Install docker

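Add Docker's repository (yum-config-manager is provided by the yum-utils package, so install that first if the command is missing):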

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install docker CE

yum install -y docker-ce

Start Docker

systemctl start docker

Test run Hello World

docker run hello-world

Reference: https://blog.csdn.net/TangXuZ/article/details/100082144

3. Install docker compose

In fact, for a single-node deployment you can find an image with docker search kafka and pull it directly. For a pseudo-cluster, though, the advantages of Docker Compose are obvious.

First, install pip for Python 3 (on older CentOS 7 releases the python3-pip package may have to come from the EPEL repository):

yum install -y python3-pip

Then install Docker Compose:

pip3 install docker-compose

4. Create docker internal network

The internal network is used for communication between containers:

docker network create --subnet 172.23.0.0/25 --gateway 172.23.0.1 baccano
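As a quick sanity check on the address range, the following minimal sketch uses Python's standard ipaddress module to inspect the subnet and gateway from the command above. The variable names are illustrative only.

```python
import ipaddress

# Subnet and gateway taken from the docker network create command above.
subnet = ipaddress.ip_network("172.23.0.0/25")
gateway = ipaddress.ip_address("172.23.0.1")

# hosts() excludes the network and broadcast addresses.
usable = list(subnet.hosts())

print("usable addresses:", len(usable))
print("first:", usable[0], "last:", usable[-1])
print("gateway inside subnet:", gateway in subnet)
```

A /25 leaves 126 usable addresses, one of which goes to the gateway. That is plenty for three ZooKeeper and three Kafka containers.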

5. Deploy zookeeper cluster

Many online guides put ZooKeeper and Kafka in a single compose file. I find them easier to manage separately. The docker-compose.yml for ZooKeeper is as follows:

version: '3'
services:
  zoo1:
    image: zookeeper
    container_name: zoo1
    restart: always
    hostname: zoo1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo2:
    image: zookeeper
    container_name: zoo2
    restart: always
    hostname: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo3:
    image: zookeeper
    container_name: zoo3
    restart: always
    hostname: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
networks:
  default:
    external:
      name: baccano
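The three ZOO_SERVERS values differ in only one respect: each node lists itself as 0.0.0.0 and the other two by hostname. The sketch below reproduces that pattern in Python. The function name zoo_servers and its parameters are made up for illustration and are not part of the ZooKeeper image.

```python
def zoo_servers(my_id, hosts, client_port=2181):
    """Build the ZOO_SERVERS value for the node whose ID is my_id."""
    entries = []
    for server_id, host in enumerate(hosts, start=1):
        # Each node lists itself as 0.0.0.0, as in the compose file above.
        address = "0.0.0.0" if server_id == my_id else host
        entries.append(f"server.{server_id}={address}:2888:3888;{client_port}")
    return " ".join(entries)


hosts = ["zoo1", "zoo2", "zoo3"]
for node_id in range(1, len(hosts) + 1):
    print(f"zoo{node_id}: {zoo_servers(node_id, hosts)}")
```

Generating the values this way is only worth it with more nodes. With three, writing them by hand as above is just as easy.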

Save the configuration to a dedicated folder, such as /usr/local/dockerconfig/zookeeper, and then run the following in that directory:

docker-compose up -d

This brings up a three-node ZooKeeper service. docker ps shows the running containers:

605d6d6679fa zookeeper "/docker-entrypoint...." 34 hours ago Up 34 hours 2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2183->2181/tcp zoo3
7cc46763292d zookeeper "/docker-entrypoint...." 34 hours ago Up 34 hours 2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2182->2181/tcp zoo2
7ca9de7fc9a9 zookeeper "/docker-entrypoint...." 34 hours ago Up 34 hours 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, 8080/tcp zoo1
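Besides reading the docker ps output, you can confirm that the published ports accept connections. The sketch below is a plain TCP check written with Python's standard socket module. It assumes it runs on the virtual machine itself, so the ports are reached through 127.0.0.1, and the helper name port_open is made up for illustration. It only shows that something is listening, not that the ZooKeeper ensemble is healthy.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Host ports published by zoo1, zoo2 and zoo3 in the compose file.
    for port in (2181, 2182, 2183):
        state = "open" if port_open("127.0.0.1", port) else "closed"
        print(f"127.0.0.1:{port} is {state}")
```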

6. Kafka cluster construction

The steps are essentially the same as for ZooKeeper. Taking three nodes as an example, the docker-compose.yml is as follows:

version: '2'
services:
  kafka1:
    image: wurstmeister/kafka
    restart: always
    hostname: kafka1
    container_name: kafka1
    ports:
      - 9092:9092
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka1:9092
      KAFKA_LISTENERS: PLAINTEXT://kafka1:9092
      KAFKA_ADVERTISED_HOST_NAME: kafka1
      KAFKA_ADVERTISED_PORT: 9092
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - "/data/kafka/kafka1/:/kafka"
    external_links:
      - zoo1
      - zoo2
      - zoo3
  kafka2:
    image: wurstmeister/kafka
    restart: always
    hostname: kafka2
    container_name: kafka2
    ports:
      - 9093:9093
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka2:9093
      KAFKA_LISTENERS: PLAINTEXT://kafka2:9093
      KAFKA_ADVERTISED_HOST_NAME: kafka2
      KAFKA_ADVERTISED_PORT: 9093
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - "/data/kafka/kafka2/:/kafka"
    external_links: # Link to containers defined outside this compose file
      - zoo1
      - zoo2
      - zoo3
  kafka3:
    image: wurstmeister/kafka
    restart: always
    hostname: kafka3
    container_name: kafka3
    ports:
      - 9094:9094
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka3:9094
      KAFKA_LISTENERS: PLAINTEXT://kafka3:9094
      KAFKA_ADVERTISED_HOST_NAME: kafka3
      KAFKA_ADVERTISED_PORT: 9094
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
    volumes:
      - "/data/kafka/kafka3/:/kafka"
    external_links: # Link to containers defined outside this compose file
      - zoo1
      - zoo2
      - zoo3
networks:
  default:
    external: # Use the network created earlier
      name: baccano

Start:

docker-compose up -d

7. Accessing and testing from outside the virtual machine

Kafka and ZooKeeper are now both set up, and docker ps should list the running containers. If startup fails, drop the -d flag to see the error in the foreground, or view a container's log directly with docker logs <container name>.

Next comes the most important point for external access:

Configure the Windows hosts file! I was stuck here for a long time. Be sure to map the hostnames kafka1, kafka2 and kafka3 to the virtual machine's IP address in the hosts file.

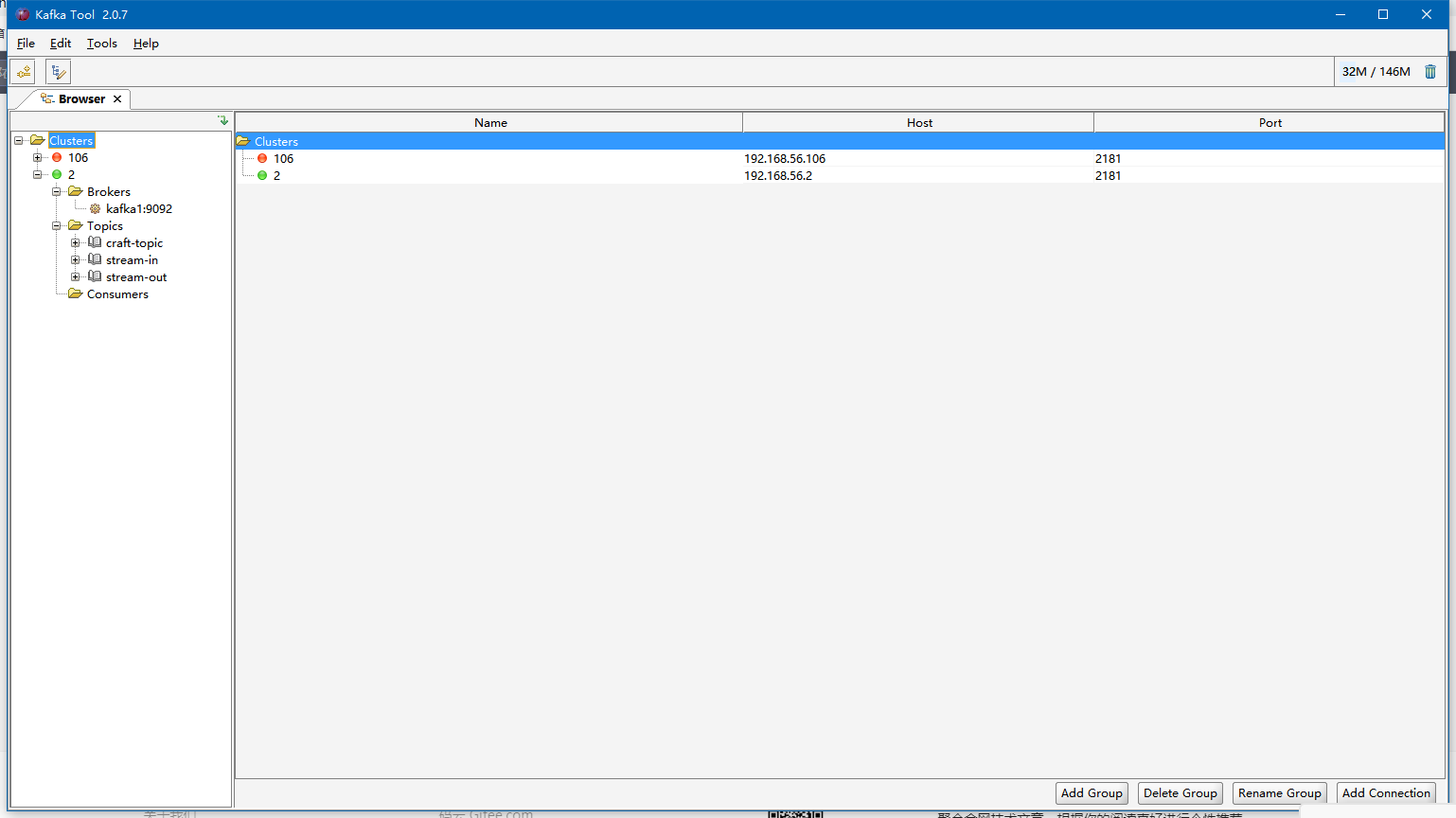
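The reason this matters is that each broker advertises itself by hostname (kafka1:9092 and so on), so a client outside Docker has to resolve those names to the virtual machine. The sketch below prints the lines to append to the Windows hosts file (C:\Windows\System32\drivers\etc\hosts). The IP address 192.168.56.101 is a hypothetical placeholder and hosts_entries is an illustrative helper, so substitute your own VM's address.

```python
def hosts_entries(vm_ip, names):
    """Return hosts-file lines mapping every broker hostname to the VM's IP."""
    return [f"{vm_ip} {name}" for name in names]


# Hypothetical VM address; replace it with your virtual machine's real IP.
VM_IP = "192.168.56.101"

for line in hosts_entries(VM_IP, ["kafka1", "kafka2", "kafka3"]):
    print(line)
```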

After configuring the hosts file, use Kafka Tool to connect to ZooKeeper:

You can then view the brokers, topics, and consumers. If you can browse them, the setup works. From there, test it yourself with code or the command line.
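As a first self-test before writing any Kafka client code, it can help to confirm that the broker hostnames actually resolve on the client machine, since that is what the hosts-file step is for. This is a minimal sketch using only Python's standard socket module. The helper name resolve is made up for illustration, and the script checks name resolution only, not Kafka itself.

```python
import socket


def resolve(name):
    """Return the IPv4 address a hostname resolves to, or None if it does not resolve."""
    try:
        return socket.gethostbyname(name)
    except OSError:
        return None


if __name__ == "__main__":
    for name in ("kafka1", "kafka2", "kafka3"):
        ip = resolve(name)
        print(f"{name} -> {ip if ip else 'not resolvable, check the hosts file'}")
```

If all three names print the virtual machine's IP address, the hosts file is in effect and a Kafka client should be able to follow the advertised broker addresses.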