Principle and application of prototype pattern

If the creation cost of an object is relatively large, and there is little difference between different objects of the same class (most fields are the same), in this case, we can use the existing object (prototype) to copy (or copy) to create a new object, which has achieved the purpose of saving creation time. This method of creating objects based on prototypes is called Prototype Design Pattern, which is called prototype pattern for short.

What is "the creation cost of objects is relatively large"

- In fact, the process of applying for memory contained in the object and assigning values to member variables does not take much time, or for most business systems, this time can be ignored. Applying a complex pattern can only get a little performance improvement, which is the so-called over design.

- However, if the data in the object needs complex calculations (such as sorting and calculating hash values), or needs to be read from very slow IO S such as RPC, network, database and file system, in this case, we can use the prototype mode to directly copy it from other existing objects instead of creating a new object every time, Repeat these time-consuming operations.

Look at an example.

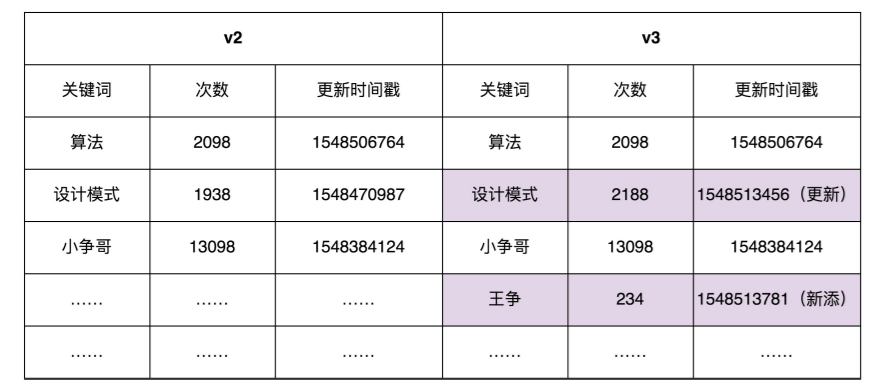

- Suppose that about 100000 pieces of "search keyword" information are stored in the database, and each information includes keywords, the number of times keywords are searched, the time when the information has been updated recently, etc. When system A starts, it will load this data into memory to process some other business requests. In order to find the information corresponding to A keyword conveniently and quickly, we establish A hash table index for the keyword

- You can directly use the HashMap container provided in the language. The key of HashMap is the search keyword, and the value is the keyword details (such as search times). We just need to read the data from the database and put it into HashMap.

- However, we also have another system B, which is specially used to analyze the search log, update the data in the database regularly (for example, every 10 minutes) and mark it as a new data version. For example, in the following example figure, we update the data of v2 version to get the data of v3 version. Here, we assume that there are only updates and new keywords, and there is no behavior of deleting keywords.

- In order to ensure the real-time performance of the data in system A (not necessarily very real-time, but the data can not be too old), system A needs to regularly update the index and data in memory according to the data in the database

How should we achieve this demand?

- We only need to record the update time Ta corresponding to the version Va of the current data in system A, and retrieve all search keywords with an update time greater than Ta from the database, that is, find the "difference set" between the Va version and the latest version of the data, and then process each key word in the difference set.

- If it already exists in the hash table, we update the corresponding search times, update time and other information; If it does not exist in the hash table, we insert it into the hash table

As follows:

public class Demo {

private ConcurrentHashMap<String, SearchWord> currentKeywords = new Concurren

private long lastUpdateTime = -1;

public void refresh() {

// Take the data with update time > lastupdatetime from the database and put it into currentKeywords

List<SearchWord> toBeUpdatedSearchWords = getSearchWords(lastUpdateTime);

long maxNewUpdatedTime = lastUpdateTime;

for (SearchWord searchWord : toBeUpdatedSearchWords) {

if (searchWord.getLastUpdateTime() > maxNewUpdatedTime) {

maxNewUpdatedTime = searchWord.getLastUpdateTime();

}

if (currentKeywords.containsKey(searchWord.getKeyword())) {

currentKeywords.replace(searchWord.getKeyword(), searchWord);

} else {

currentKeywords.put(searchWord.getKeyword(), searchWord);

}

}

lastUpdateTime = maxNewUpdatedTime;

}

private List<SearchWord> getSearchWords(long lastUpdateTime) {

// TODO: fetch the data with update time > lastupdatetime from the database

return null;

}

}

Now, however, we ask:

- At any time, all data in system a must be the same version, either version a or version b. there can be neither version a nor version b. The just updated method can't meet this requirement

- In addition, when updating memory data, system A cannot be in an unavailable state, that is, it cannot stop to update data

So how?

- We define the version of the data in use as "service version". When we want to update the data in memory, we do not directly update the service version (assuming updating on version a data), but re create another version data (assuming version b data). After the new version data creation number, Switch the service version from version a to version b again.

- This ensures that the data is always available and avoids the existence of intermediate states

The implementation is as follows:

public class Demo {

private HashMap<String, SearchWord> currentKeywords=new HashMap<>();

public void refresh() {

HashMap<String, SearchWord> newKeywords = new LinkedHashMap<>();

// Take out all data from the database and put it into newKeywords

List<SearchWord> toBeUpdatedSearchWords = getSearchWords();

for (SearchWord searchWord : toBeUpdatedSearchWords) {

newKeywords.put(searchWord.getKeyword(), searchWord);

}

currentKeywords = newKeywords;

}

private List<SearchWord> getSearchWords() {

// TODO: fetch all data from the database

return null;

}

}

However, in the above code implementation, the cost of building newkeywords is relatively high. We need to read the 100000 pieces of data from the database, then calculate the hash value and build newkeywords. This process is obviously time-consuming. To improve efficiency, you can use the prototype pattern.

We copy the currentKeyWords data to newKeywords, and then only new or updated keywords are retrieved from the database and updated to newKeywords. Compared with 100000 data, the number of keywords added or updated each time is relatively small. Therefore, this strategy greatly improves the efficiency of data update.

The implementation is as follows:

public class Demo {

private HashMap<String, SearchWord> currentKeywords=new HashMap<>();

private long lastUpdateTime = -1;

public void refresh() {

// The prototype pattern is as simple as copying the data of existing objects and updating a small amount of data

HashMap<String, SearchWord> newKeywords = (HashMap<String, SearchWord>) currentKeywords.clone();

// Take the data with update time > lastupdatetime from the database and put it into newKeywords

List<SearchWord> toBeUpdatedSearchWords = getSearchWords(lastUpdateTime);

long maxNewUpdatedTime = lastUpdateTime;

for (SearchWord searchWord : toBeUpdatedSearchWords) {

if (searchWord.getLastUpdateTime() > maxNewUpdatedTime) {

maxNewUpdatedTime = searchWord.getLastUpdateTime();

}

if (newKeywords.containsKey(searchWord.getKeyword())) {

SearchWord oldSearchWord = newKeywords.get(searchWord.getKeyword());

oldSearchWord.setCount(searchWord.getCount());

oldSearchWord.setLastUpdateTime(searchWord.getLastUpdateTime());

} else {

newKeywords.put(searchWord.getKeyword(), searchWord);

}

}

lastUpdateTime = maxNewUpdatedTime;

currentKeywords = newKeywords;

}

private List<SearchWord> getSearchWords(long lastUpdateTime) {

// TODO: fetch the data with update time > lastupdatetime from the database

return null;

}

}

Here we use the clone() syntax in Java to copy an object. If the language you are familiar with does not have this syntax, it is acceptable to take the data one by one from currentKeywords, then recalculate the hash value and put it into newKeywords. After all, the most time-consuming operation is the operation of fetching data from the database. Compared with the IO operation of the database, the time-consuming of memory operation and CPU calculation can be ignored.

However, there is a problem with the code implementation just now. To understand what the problem is, we need to understand two other concepts: Deep Copy and Shallow Copy.

Implementation of prototype pattern: deep copy and shallow copy

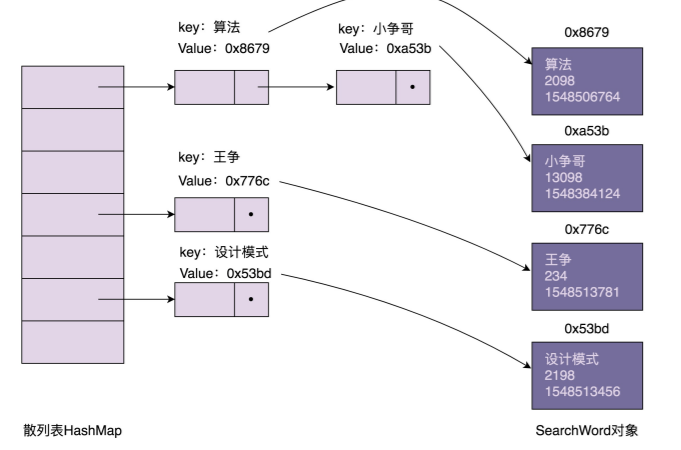

Let's see how the search keyword information organized by hash table is stored in memory. We drew a schematic diagram, the general structure is as follows. From the figure, we can find that in the hash table index, the key stored in each node is the search keyword, and the value is the memory address of the SearchWord object. The SearchWord object itself is stored in memory outside the hash table.

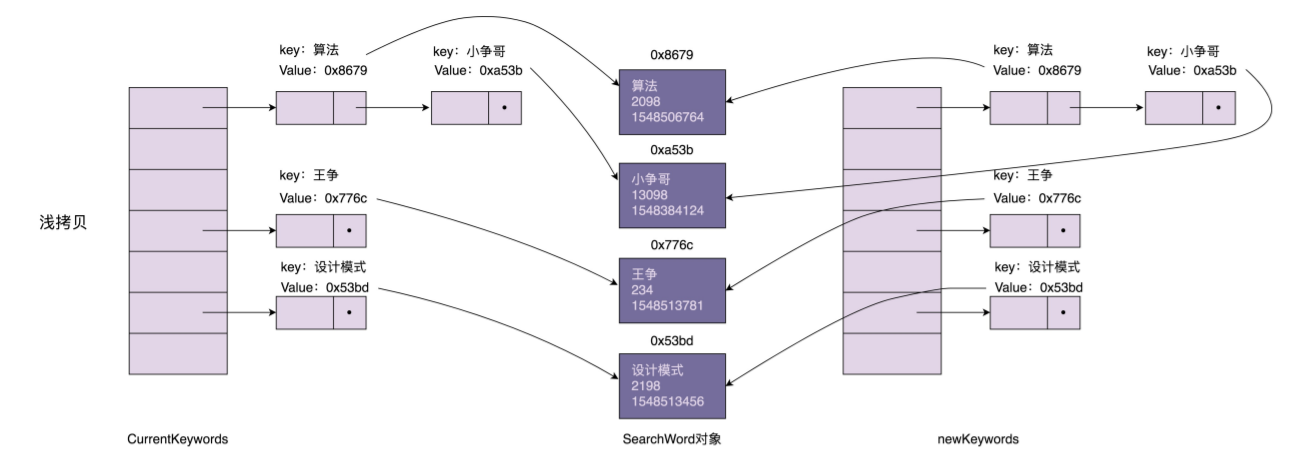

The difference between shallow copy and deep copy is that shallow copy only copies the index (hash table) in the graph and does not copy the data (SearchWord object) itself. In contrast, deep copy replicates not only the index, but also the data itself. The object obtained by shallow copy (newKeywords) shares data (SearchWord object) with the original object (currentKeywords), while the object obtained by deep copy is a completely independent object. The specific comparison is shown in the figure below:

In the Java language, the clone() method of the Object class executes the shallow copy we just said. It will only copy the data of basic data types (such as int and long) in the Object and the memory address of the reference Object (SearchWord), and will not copy the reference Object itself recursively.

In the above code, we implement the prototype pattern by calling the clone() shallow copy method on the HashMap. When we update the SearchWord object through newKeywords (for example, update the access times of the search keyword "design pattern"), newKeywords and currentKeywords point to the same group of SearchWord objects, which will lead to some old versions and some new versions of SearchWord in currentKeywords, We can't meet our previous needs: the data in currentKeywords is the same version at any time, and there is no intermediate state between the old version and the new version.

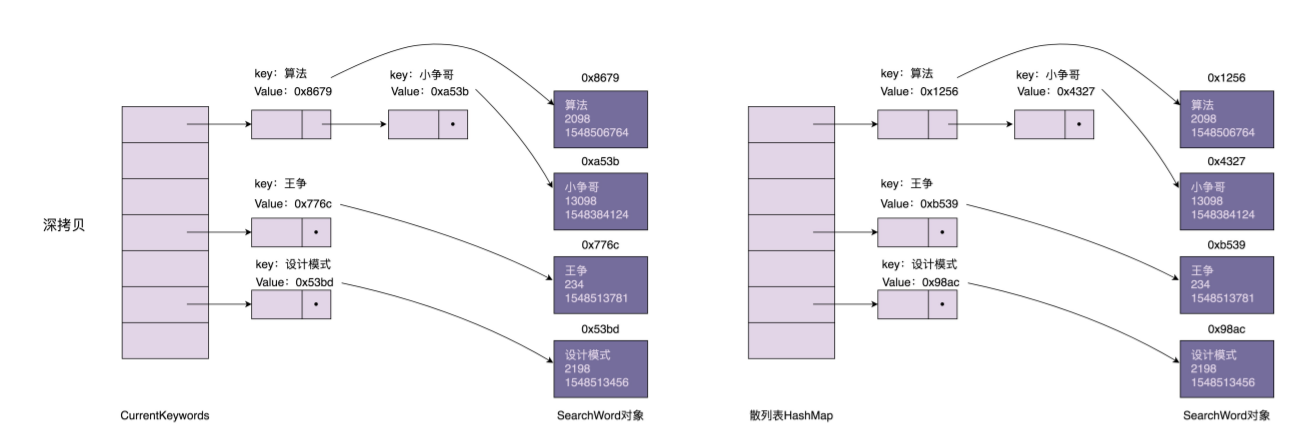

Now, how can we solve this problem? We can replace the shallow copy with the deep copy. newKeywords not only copies the index of currentKeywords, but also copies a SearchWord object. In this way, newKeywords and currentKeywords point to different SearchWord objects, and there is no problem that updating the data of newKeywords will lead to the updating of the data of currentKeywords.

How to implement deep copy? To sum up, there are two methods.

- The first method: recursively copy the object, the reference object of the object and the reference object of the reference object... Until the object to be copied contains only basic data type data and no reference object. As follows:

public class Demo {

private HashMap<String, SearchWord> currentKeywords=new HashMap<>();

private long lastUpdateTime = -1;

public void refresh() {

// Deep copy

HashMap<String, SearchWord> newKeywords = new HashMap<>();

for (HashMap.Entry<String, SearchWord> e : currentKeywords.entrySet()) {

SearchWord searchWord = e.getValue();

SearchWord newSearchWord = new SearchWord(searchWord.getKeyword(), searchWord.getCount(), searchWord.getLasnewKeywords.put(e.getKey(), newSearchWord);

}

// Take the data with update time > lastupdatetime from the database and put it into newKeywords

List<SearchWord> toBeUpdatedSearchWords = getSearchWords(lastUpdateTime);

long maxNewUpdatedTime = lastUpdateTime;

for (SearchWord searchWord : toBeUpdatedSearchWords) {

if (searchWord.getLastUpdateTime() > maxNewUpdatedTime) {

maxNewUpdatedTime = searchWord.getLastUpdateTime();

}

if (newKeywords.containsKey(searchWord.getKeyword())) {

SearchWord oldSearchWord = newKeywords.get(searchWord.getKeyword());

oldSearchWord.setCount(searchWord.getCount());

oldSearchWord.setLastUpdateTime(searchWord.getLastUpdateTime());

} else {

newKeywords.put(searchWord.getKeyword(), searchWord);

}

}

lastUpdateTime = maxNewUpdatedTime;

currentKeywords = newKeywords;

}

private List<SearchWord> getSearchWords(long lastUpdateTime) {

// TODO: fetch the data with update time > lastupdatetime from the database

return null;

}

}

(2) The second method: first serialize the object, and then deserialize it into a new object. The specific example code is as follows:

public Object deepCopy(Object object) {

ByteArrayOutputStream bo = new ByteArrayOutputStream();

ObjectOutputStream oo = new ObjectOutputStream(bo);

oo.writeObject(object);

ByteArrayInputStream bi = new ByteArrayInputStream(bo.toByteArray());

ObjectInputStream oi = new ObjectInputStream(bi);

return oi.readObject();

}

No matter which of the two implementation methods just adopted, deep copy is more time-consuming and memory space consuming than shallow copy. Is there a faster and more memory saving implementation for our application scenario?

We can first create newKeywords by shallow copy. For the SearchWord object that needs to be updated, we use deep copy to create a new object to replace the old object in newKeywords. After all, there is very little data to update. This method not only makes use of the advantages of shallow copy to save time and space, but also ensures that the data in currentKeywords is the data of the old version. The specific code implementation is as follows. This is also the fastest way to clone hash in our application scenario.

public class Demo {

private HashMap<String, SearchWord> currentKeywords=new HashMap<>();

private long lastUpdateTime = -1;

public void refresh() {

// Shallow copy

HashMap<String, SearchWord> newKeywords = (HashMap<String, SearchWord>) cu

// Take the data with update time > lastupdatetime from the database and put it into newKeywords

List<SearchWord> toBeUpdatedSearchWords = getSearchWords(lastUpdateTime);

long maxNewUpdatedTime = lastUpdateTime;

for (SearchWord searchWord : toBeUpdatedSearchWords) {

if (searchWord.getLastUpdateTime() > maxNewUpdatedTime) {

maxNewUpdatedTime = searchWord.getLastUpdateTime();

}

if (newKeywords.containsKey(searchWord.getKeyword())) {

newKeywords.remove(searchWord.getKeyword());

}

newKeywords.put(searchWord.getKeyword(), searchWord);

}

lastUpdateTime = maxNewUpdatedTime;

currentKeywords = newKeywords;

}

private List<SearchWord> getSearchWords(long lastUpdateTime) {

// TODO: fetch the data with update time > lastupdatetime from the database

return null;

}

}

summary

(1) What is a prototype pattern:

- If the creation cost of an object is relatively large, and there is little difference between different objects of the same class (most fields are the same), in this case, we can use the method of copying (or copying) existing objects (prototypes) to create new objects, so as to save creation time. This way of creating objects based on prototypes is called prototype design pattern, which is called prototype pattern for short.

(2) Two implementation methods of prototype pattern

- There are two implementation methods of prototype pattern, deep copy and shallow copy. Shallow copy will only copy the basic data type data in the object and the memory address of the reference object, and will not recursively copy the reference object and the reference object of the reference object... While deep copy will get a completely independent object. Therefore, compared with shallow copy, deep copy is more time-consuming and memory space consuming.

- If the object to be copied is immutable, shallow copy sharing immutable objects is no problem, but for variable objects, the objects obtained by shallow copy and the original objects will share some data, which may lead to the risk of data modification and become much more complex. Unless you need to load 100000 pieces of data from the database and build a hash table index, the operation is very time-consuming. It is recommended to use shallow copy. Otherwise, there is no good reason not to use shallow copy for a little performance improvement.