After reading this article, you can not only learn the algorithm routine, but also win the following topics on LeetCode:

-----------

Many readers have always said that they want me to use it Frame thinking To talk about the basic sorting algorithm, I think we really have to talk about it. After all, learning everything requires a comprehensive understanding. Only by deeply understanding its essence can it be used freely.

This article will first talk about merging and sorting, give a set of code templates, and then talk about its application in algorithm problems. Before reading this article, I hope you have read it Brush the binary tree hand in hand (program part).

I am here Hand brush binary tree (phase I) When talking about binary trees, I mentioned merge sort, saying that merge sort is the post order traversal of binary trees. At that time, many readers left messages saying that they were impressed.

Do you know why many readers feel brain burn when they encounter recursive algorithms? Because it is still in the stage of "looking at mountains is mountains, looking at water is water".

Let's say merge and sort. If I show you the code and ask you to fill in the process of merge and sort, what scene will appear in your mind?

This is an array sorting algorithm, so you can supplement the GIF of an array and exchange elements one by one? If so, the pattern will be low.

But if you have a binary tree in your mind, or even the scene of traversing the binary tree in sequence, the pattern is high, and you probably master what I often emphasize Frame thinking , learning algorithms with this abstract ability saves much effort.

So, merge sort is obviously an array algorithm. What's the relationship with binary tree? Next, I'll talk about it in detail.

Algorithm idea

Let's just say that all recursive algorithms, no matter what they do, are essentially traversing a (recursive) tree, and then executing code on the nodes (the first, middle and last order positions). If you want to write a recursive algorithm, you essentially want to tell each node what to do.

Look at the code framework of merging and sorting:

// Definition: sort nums[lo..hi]

void sort(int[] nums, int lo, int hi) {

if (lo == hi) {

return;

}

int mid = (lo + hi) / 2;

// Using the definition, sort nums[lo..mid]

sort(nums, lo, mid);

// Using the definition, sort nums[mid+1..hi]

sort(nums, mid + 1, hi);

/****** Post sequence position******/

// At this point, the two molecular arrays have been ordered

// Merge two ordered arrays to make num [Lo.. hi] ordered

merge(nums, lo, mid, hi);

/*********************/

}

// The ordered array nums[lo..mid] and the ordered array nums[mid+1..hi]

// Merge into ordered array nums[lo..hi]

void merge(int[] nums, int lo, int mid, int hi);Looking at this framework, we can understand the classic summary: merge sorting is to arrange the left half array first, then the right half array, and then merge the two half arrays.

The above code is very similar to the post order traversal of binary tree:

/* Binary tree traversal framework */

void traverse(TreeNode root) {

if (root == null) {

return;

}

traverse(root.left);

traverse(root.right);

/****** Post sequence position******/

print(root.val);

/*********************/

}Further, think about the algorithm code for finding the maximum depth of binary tree:

// Definition: enter the root node and return the maximum depth of the binary tree

int maxDepth(TreeNode root) {

if (root == null) {

return 0;

}

// Using the definition, calculate the maximum depth of the left and right subtrees

int leftMax = maxDepth(root.left);

int rightMax = maxDepth(root.right);

// The maximum depth of the whole tree is equal to the maximum depth of the left and right subtrees, and the maximum value is taken,

// Then add the root node itself

int res = Math.max(leftMax, rightMax) + 1;

return res;

}Is it more like?

Above Brush the binary tree hand in hand (program part) It is said that the binary tree problem can be divided into two kinds of ideas, one is the idea of traversing the binary tree, and the other is the idea of decomposing the problem. According to the above analogy, it is obvious that merging and sorting uses the idea of decomposing the problem (divide and conquer algorithm).

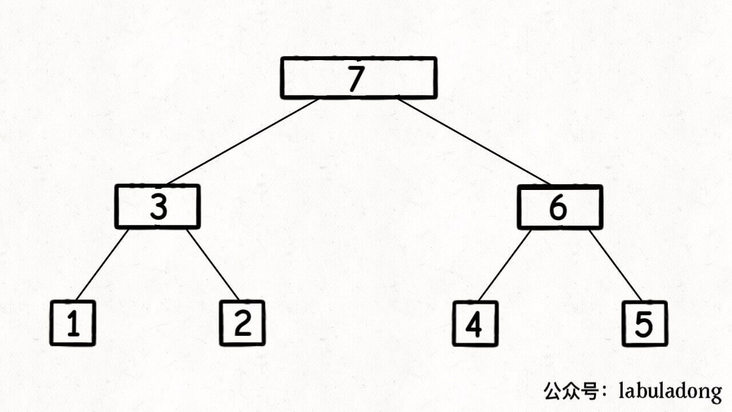

The process of merging and sorting can be logically abstracted into a binary tree. The value of each node in the tree can be considered as num [Lo.. hi], and the value of leaf node is a single element in the array:

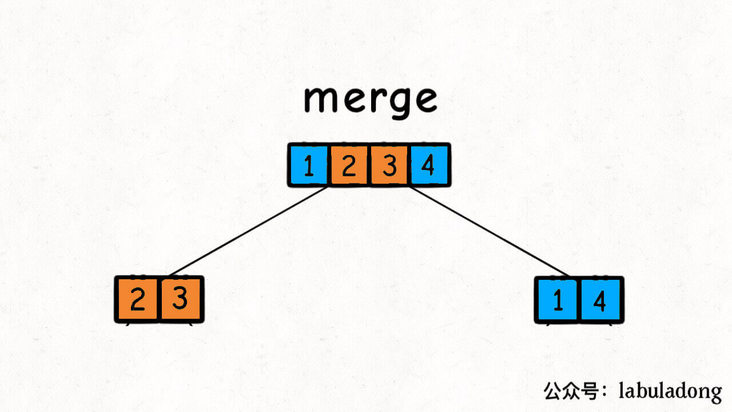

Then, execute the merge function at the post order position of each node (the left and right child nodes have been ordered) to merge the sub arrays on the two child nodes:

This merge operation will be executed once on each node of the binary tree, and the execution order is the order of traversal after the binary tree.

After traversing the binary tree, you should be familiar with it. The traversal sequence is as follows:

Combined with the above basic analysis, we understand num [Lo.. hi] as a node of a binary tree, and srot function as an ergodic function of a binary tree. The execution process of merging and sorting is described in the following GIF:

In this way, the core idea of merging and sorting is analyzed. Next, just translate the idea into code.

Code implementation and analysis

As long as you have the right way of thinking, it is not difficult to understand the idea of the algorithm, but it is also a test of one's programming ability to realize the idea into code.

After all, the time complexity of the algorithm is only a theoretical measure, and the actual operation efficiency of the algorithm needs to consider more factors, such as avoiding the frequent allocation and release of memory, and the code logic should be as concise as possible.

After comparison, the merging and sorting code given in algorithm 4 has the characteristics of simplicity and efficiency, so we can refer to the code given in the book as the merging algorithm template:

class Merge {

// Used to help merge ordered arrays

private static int[] temp;

public static void sort(int[] nums) {

// First open up memory space for the auxiliary array

temp = new int[nums.length];

// Sort the entire array (modify in place)

sort(nums, 0, nums.length - 1);

}

// Definition: sort the subarray num [Lo.. hi]

private static void sort(int[] nums, int lo, int hi) {

if (lo == hi) {

// Single elements do not need to be sorted

return;

}

// This is written to prevent overflow, and the effect is equivalent to (hi + lo) / 2

int mid = lo + (hi - lo) / 2;

// Sort the left half array nums[lo..mid] first

sort(nums, lo, mid);

// Then sort the right half of the array nums[mid+1..hi]

sort(nums, mid + 1, hi);

// Combine two ordered arrays into one ordered array

merge(nums, lo, mid, hi);

}

// Combine the two ordered arrays of num [Lo.. mid] and num [mid + 1.. hi] into one ordered array

private static void merge(int[] nums, int lo, int mid, int hi) {

// First copy num [Lo.. hi] to the auxiliary array

// So that the merged results can be directly stored in nums

for (int i = lo; i <= hi; i++) {

temp[i] = nums[i];

}

// Array double pointer technique, merging two ordered arrays

int i = lo, j = mid + 1;

for (int p = lo; p <= hi; p++) {

if (i == mid + 1) {

// The left half array has been merged

nums[p] = temp[j++];

} else if (j == hi + 1) {

// The right half array has been merged

nums[p] = temp[i++];

} else if (temp[i] > temp[j]) {

nums[p] = temp[j++];

} else {

nums[p] = temp[i++];

}

}

}

}With the previous foreshadowing, we only need to focus on the merge function.

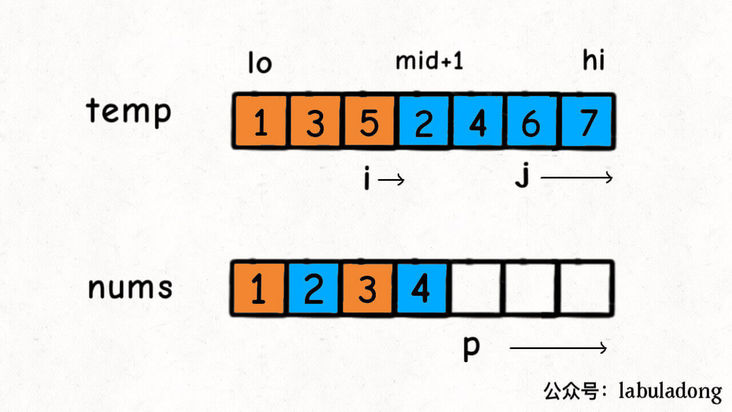

After the sort function recursively sorts nums[lo..mid] and nums[mid+1..hi], we can't merge them in place, so we need to copy them into the temp array, and then Six skills of single linked list The double pointer technique of merging ordered linked lists in combines nums[lo..hi] into an ordered array:

Note that instead of creating a new auxiliary array during the execution of the merge function, we have created a new auxiliary array of temp in advance, thus avoiding the performance problems that may arise from frequent memory allocation and release during recursion.

Let's talk about the time complexity of merging and sorting. Although everyone should know that it is O(NlogN), not everyone knows how to calculate this complexity.

Above Detailed explanation of dynamic planning It has been said that the complexity calculation of recursive algorithm is the complexity of solving a subproblem by the number of subproblems x. For merge sorting, the time complexity obviously focuses on the process of the merge function traversing num [Lo.. hi], but the lo and hi input by each merge are different, so it is not easy to see the time complexity intuitively.

How many times has the merge function been executed? What is the time complexity of each execution? What is the total time complexity? This should be seen in combination with the picture drawn before:

The number of execution times is the number of binary tree nodes. The complexity of each execution is the length of the sub array represented by each node, so the total time complexity is the number of "array elements" in the whole tree.

Therefore, on the whole, the height of the binary tree is logN, in which the number of elements in each layer is the length N of the original array, so the total time complexity is O(NlogN).

Li Kou's question 912 "sorting arrays" is to let you sort arrays. We can directly apply the merge sorting code template:

class Solution {

public int[] sortArray(int[] nums) {

// Merge sort sorts the array in place

Merge.sort(nums);

return nums;

}

}

class Merge {

// See above

}Other applications

In addition to the most basic sorting problem, merge sorting can also be used to solve the 315 question "calculate the number of elements less than the current element on the right":

Let's not talk about the violent solution of patting the head. Nested for loops have square level complexity.

What is the relationship between this question and merging and sorting? It is mainly in the merge function. When we merge two ordered arrays, we can actually know how many numbers are smaller than x after a number x.

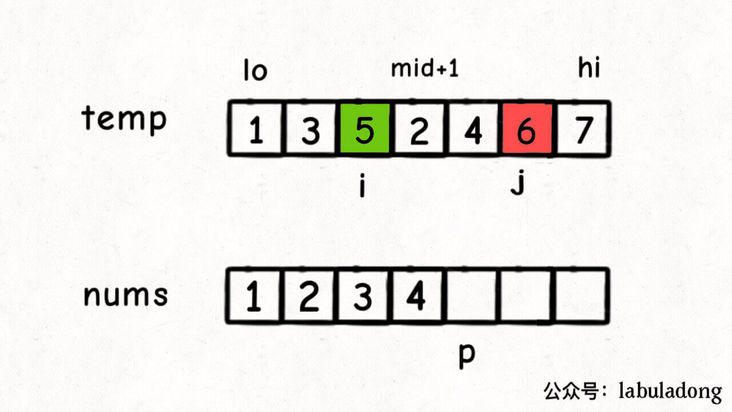

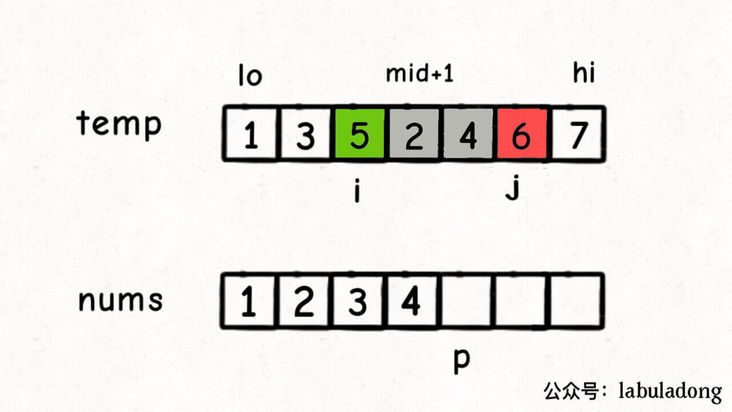

Specifically, for example, this scenario:

At this time, we should put temp[i] on nums[p], because temp[i] < temp [J].

But in this scenario, we can also know a message: the number of elements smaller than 5 after 5 is the number of elements between j and mid + 1, that is, 2.

In other words, in the process of merging nuns[lo..hi], whenever num [P] = temp[i] is executed, it can be determined that the number of elements smaller than temp[i] is j - mid - 1.

Of course, Num [Lo.. hi] itself is only a sub array. This sub array will be merged later, and the position of the elements will still change. But this is a problem that other recursive nodes need to consider. We just need to do some tricks in the merge function and superimpose the results recorded during each merge.

After discovering this rule, we can solve this problem by adding two lines of code to the merge. Look at the solution code:

class Solution {

private class Pair {

int val, id;

Pair(int val, int id) {

// Record the element value of the array

this.val = val;

// Record the original index of the element in the array

this.id = id;

}

}

// Auxiliary array used for merge sort

private Pair[] temp;

// Record the number of elements smaller than yourself after each element

private int[] count;

// Main function

public List<Integer> countSmaller(int[] nums) {

int n = nums.length;

count = new int[n];

temp = new Pair[n];

Pair[] arr = new Pair[n];

// Record the original index position of the element to update the result in the count array

for (int i = 0; i < n; i++)

arr[i] = new Pair(nums[i], i);

// Perform merge sorting, and the result of this question is recorded in the count array

sort(arr, 0, n - 1);

List<Integer> res = new LinkedList<>();

for (int c : count) res.add(c);

return res;

}

// Merge sort

private void sort(Pair[] arr, int lo, int hi) {

if (lo == hi) return;

int mid = lo + (hi - lo) / 2;

sort(arr, lo, mid);

sort(arr, mid + 1, hi);

merge(arr, lo, mid, hi);

}

// Merge two ordered arrays

private void merge(Pair[] arr, int lo, int mid, int hi) {

for (int i = lo; i <= hi; i++) {

temp[i] = arr[i];

}

int i = lo, j = mid + 1;

for (int p = lo; p <= hi; p++) {

if (i == mid + 1) {

arr[p] = temp[j++];

} else if (j == hi + 1) {

arr[p] = temp[i++];

// Update count array

count[arr[p].id] += j - mid - 1;

} else if (temp[i].val > temp[j].val) {

arr[p] = temp[j++];

} else {

arr[p] = temp[i++];

// Update count array

count[arr[p].id] += j - mid - 1;

}

}

}

}Because the index position of each element will change constantly during sorting, we use a Pair class to encapsulate each element and its index in the original array num, so that the count array records the number of elements less than it after each element.

Now look back and realize what I said at the beginning of this article:

All recursive algorithms are essentially traversing a (recursive) tree, and then executing code on nodes (in the first, middle and last order). When you write a recursive algorithm, you essentially tell each node what to do.

Have you tasted anything?

Finally, this paper summarizes the core idea and code implementation of merging and sorting from the perspective of binary tree, and talks about an algorithm related to merging and sorting. This algorithm problem is actually the logic of merging and sorting algorithm mixed with some private goods, but it is still difficult. You may need to do it yourself to understand it.

Let me leave a question for thinking. I will talk about quick sort in the next article. Can you try to understand quick sort from the perspective of binary tree? If you were asked to summarize the logic of quick sort in one sentence, how would you describe it?

Well, the answer will be revealed in the next article.

Click on my avatar See more high-quality algorithm articles, take your brush force buckle with your hand, and strive to explain the algorithm clearly! my Tutorial algorithm 100k star has been obtained. Welcome to like it!