preface

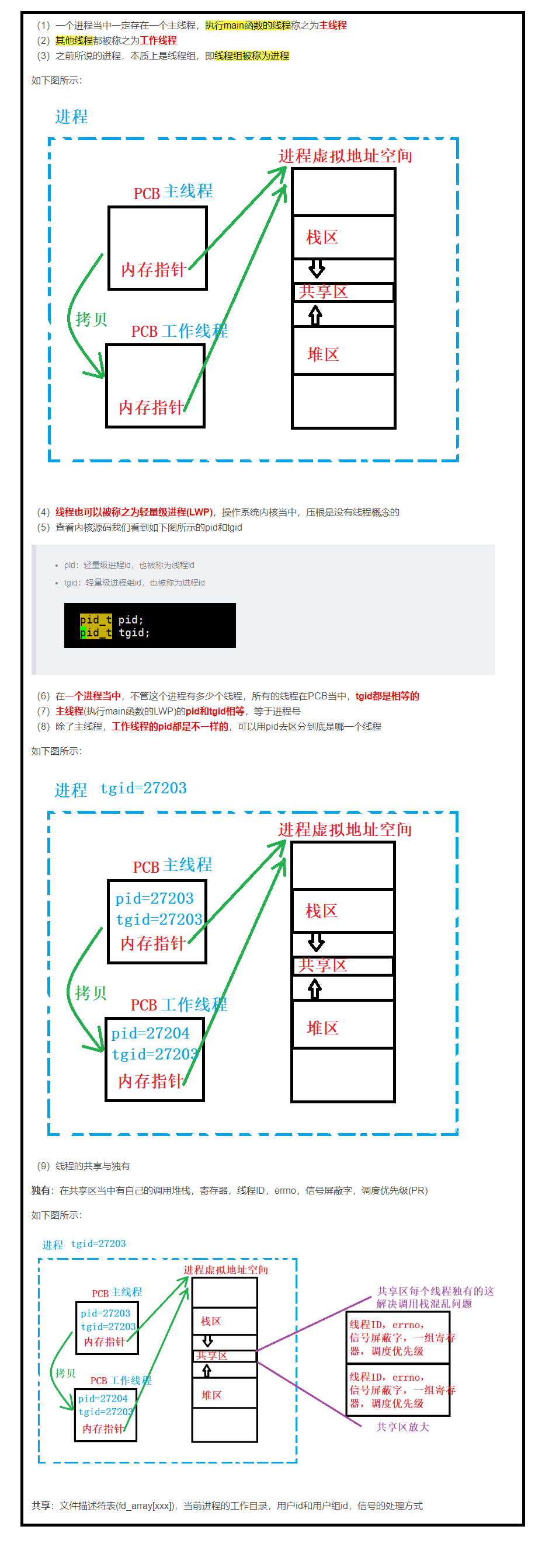

1, Linux thread concept

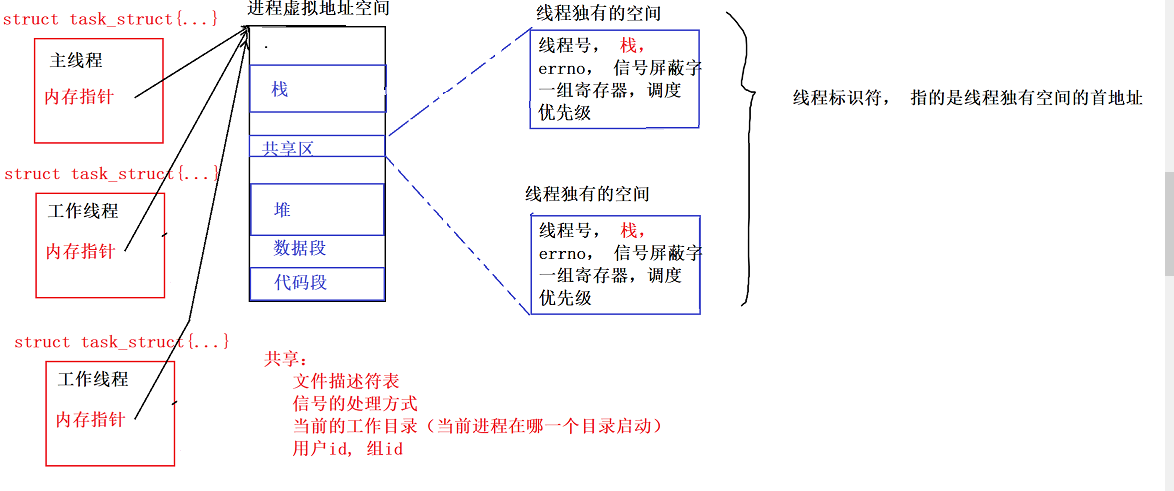

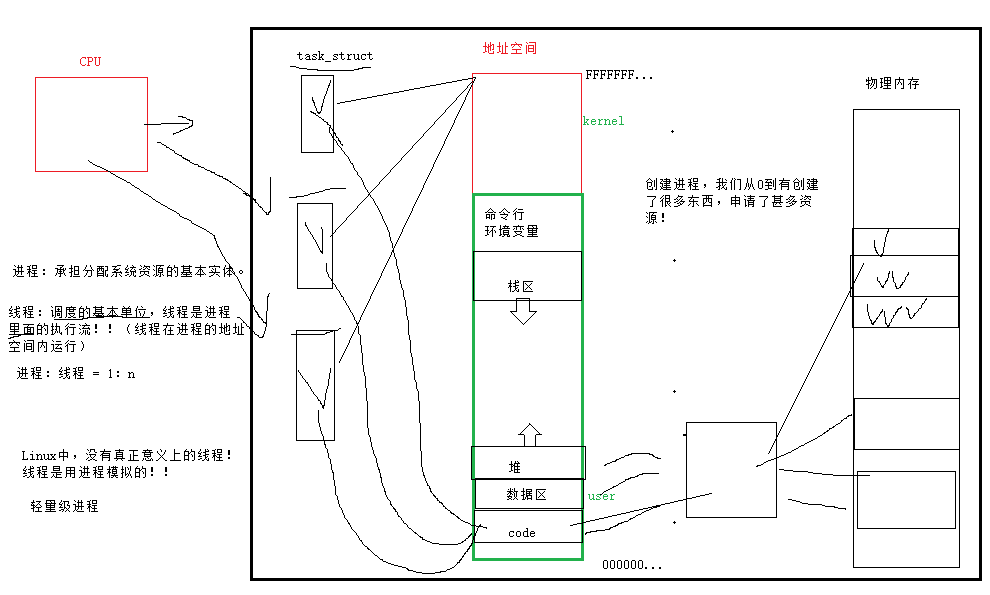

1. What is a thread

- An execution path within a program is called a thread. More precisely, a thread is "a control sequence inside a process".

- All processes have at least one execution thread.

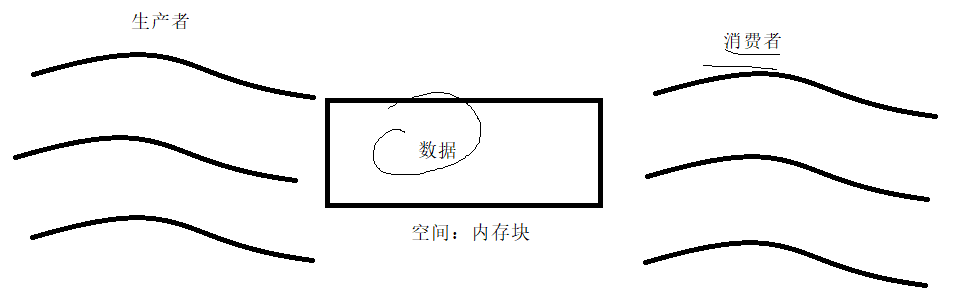

- Threads run inside a process; in essence, they run within the process's address space.

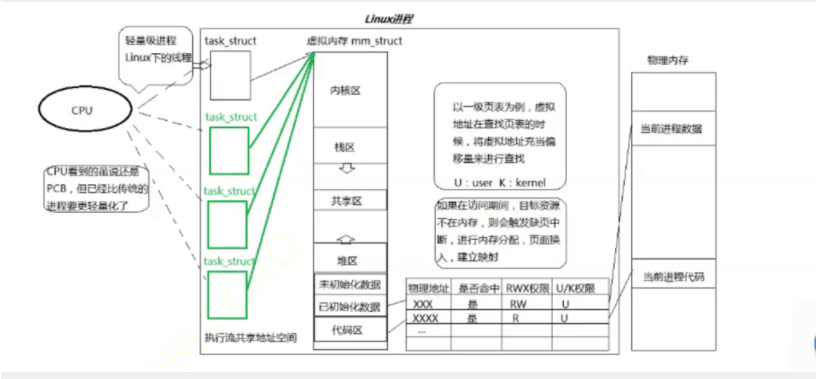

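- In Linux, the PCBs (task_struct) that the CPU sees for threads are lighter-weight than those of a traditional process.

- Through the process's virtual address space, a thread can see most of the process's resources. Threads are formed by distributing those resources sensibly among several execution flows.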

2. Advantages of threads

- Creating a new thread costs much less than creating a new process.

- Switching between threads requires much less work from the operating system than switching between processes.

- Threads occupy far fewer resources than processes.

- Threads can make full use of the parallelism available on multiprocessor systems.

- While waiting for a slow I/O operation to finish, the program can carry on with other computing tasks.

- For compute-intensive applications, the computation can be split across multiple threads so that it runs in parallel on a multiprocessor system.

- For I/O-intensive applications, I/O operations are overlapped to improve performance. Different threads can wait on different I/O operations at the same time.

3. Disadvantages of threads

- Performance loss

- A compute-intensive thread is rarely blocked by external events, so it often cannot share a processor well with other threads. If there are more compute-intensive threads than available processors, performance can drop significantly. Here, performance loss means added synchronization and scheduling overhead while the available resources stay the same.

- Reduced robustness

- Writing multithreaded programs requires more thorough and careful consideration. In a multithreaded program, a subtle timing difference can easily cause harm, and so can sharing a variable that should not be shared. In other words, threads lack protection from one another.

- Lack of access control

- The process is the basic granularity of access control, so calling certain OS functions in one thread affects the whole process.

- Increased programming difficulty

- Writing and debugging a multithreaded program is much harder than writing and debugging a single-threaded one.

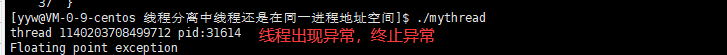

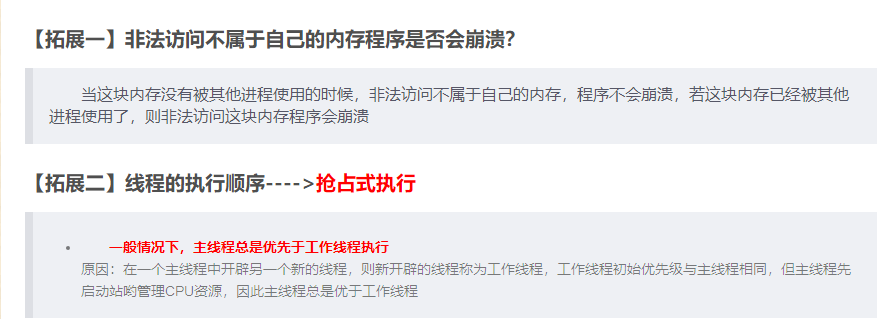

4. Thread exception

- If a single thread divides by zero or dereferences a wild pointer, that thread crashes, and the whole process crashes with it.

- A thread is an execution branch of its process, so an exception in a thread is an exception in the process. It triggers the signal mechanism, which terminates the process. When the process terminates, all of its threads exit immediately.

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
    sleep(1);
    // Integer division by zero: on x86 Linux the CPU traps and the kernel sends
    // SIGFPE, whose default action kills the whole process, not just this thread.
    volatile int zero = 0;   // volatile keeps the compiler from folding or rejecting a literal "/0"
    int a = 10;
    a = a / zero;
    return (void*)(long)a;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    sleep(10);   // the process is killed by SIGFPE about 1 second in
    cout << "main thread is still running" << endl;   // never printed
    pthread_join(tid, NULL);
    return 0;
}
```

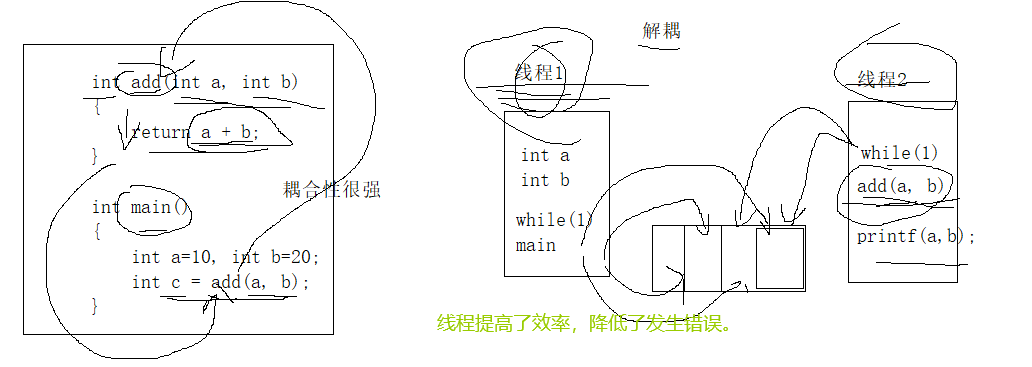

5. Uses of threads

- Using multiple threads sensibly can improve the execution efficiency of CPU-intensive programs.

- Using multiple threads sensibly can improve the user experience of I/O-intensive programs. An everyday example is downloading development tools while writing code, which is a form of multithreading.

2, Process and thread comparison

1. Processes and threads

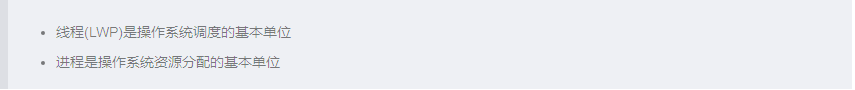

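- A process is the basic unit of resource allocation.

- A thread is the basic unit of scheduling.

- Threads share the process's data, but each thread also has some data of its own:
  - thread ID
  - a set of registers (its context)
  - its own stack
  - errno
  - signal mask
  - scheduling priority

- The threads of a process share one address space, so the text segment and data segment are shared. A function defined in the program can be called from any thread, and a global variable can be accessed from any thread. The threads also share the following process resources and environment:
  - the file descriptor table
  - the disposition of each signal (SIG_IGN, SIG_DFL, or a custom handler)
  - the current working directory
  - the user ID and group ID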

2. What are the application scenarios for multiple processes?

3, Thread control

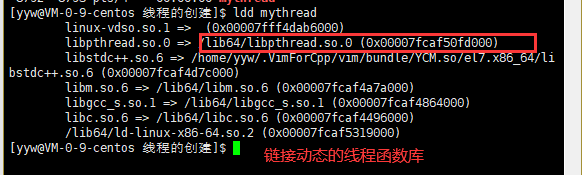

1. POSIX thread library

- The thread-related functions form a complete family, and most of their names start with "pthread_".

- To use them, include the header <pthread.h>.

- When linking, pass the "-lpthread" option to the compiler command.

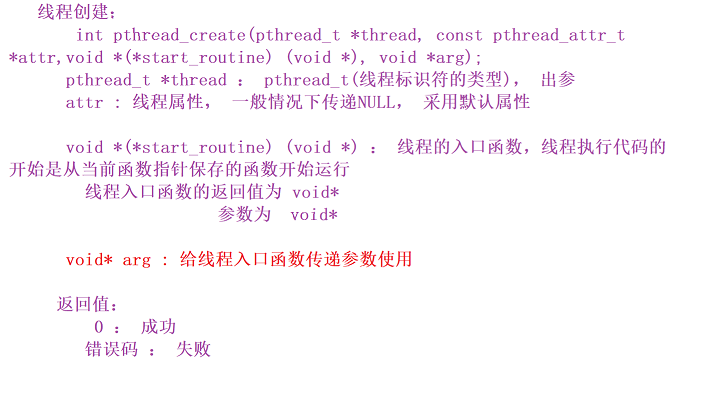

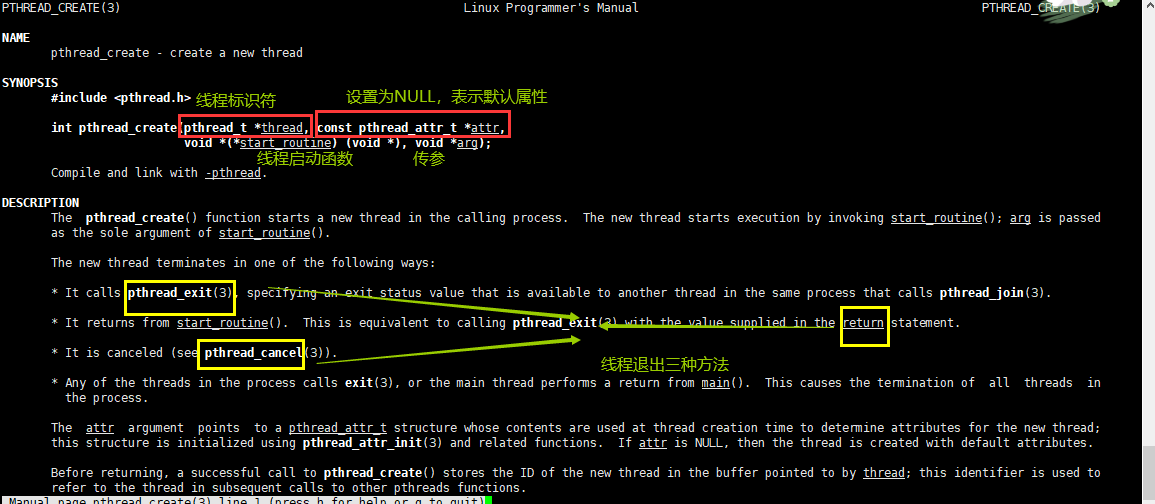

2. Create thread

- Prototype: int pthread_create(pthread_t *thread, const pthread_attr_t *attr, void *(*start_routine) (void *), void *arg);

- thread (pthread_t *): an output parameter in which pthread_create stores the new thread's ID. In the Linux NPTL implementation, a pthread_t value is essentially the start address of the thread's own control block, which lives in the shared (mmap) region of the process address space.

- attr (const pthread_attr_t *): the attributes of the new thread. It is usually NULL, which selects the default attributes.

- start_routine (void *(*)(void *)): a pointer to the thread entry function, which takes a void * parameter and returns void *.

- arg (void *): the argument passed to the entry function. Both the parameter and the return type are void *, so almost anything can be passed through it (char *, int *, a structure pointer, this).

- Return value:

Success: 0

Failure: a nonzero error number (for example EAGAIN or EINVAL). The error is reported through the return value rather than through errno.

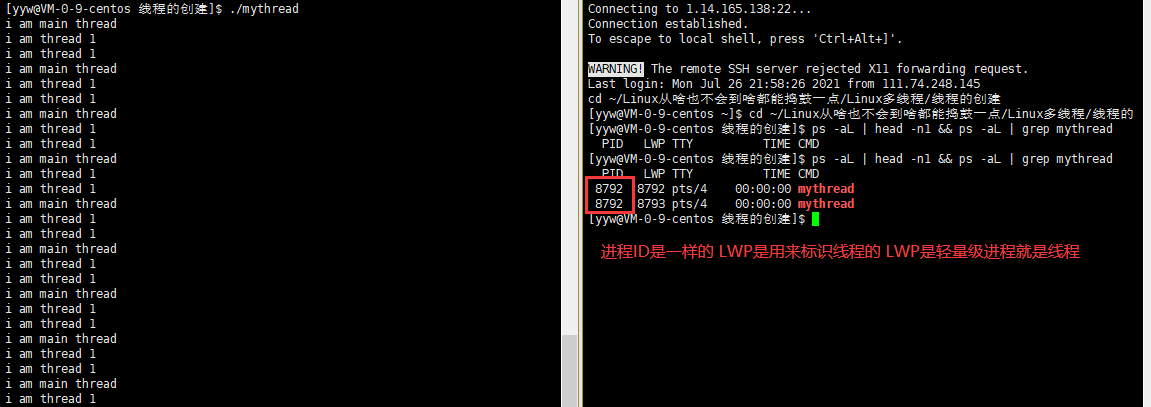

Create a worker thread in the main thread. Neither the main thread nor the worker thread exits.

mythread.cpp

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
using namespace std;

void* thread_run(void* arg)
{
    while (1)
    {
        cout << "i am " << (char*)arg << endl;
        sleep(1);
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }
    while (1)
    {
        cout << "i am main thread" << endl;
        sleep(2);
    }
    return 0;
}
```

makefile

```makefile
mythread:mythread.cpp
	g++ $^ -o $@ -lpthread
.PHONY:clean
clean:
	rm -f mythread
```

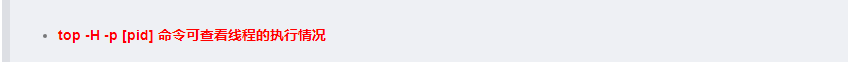

Create a worker thread in the main thread, then let the main thread exit while the worker thread does not exit.

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
using namespace std;

void* thread_run(void* arg)
{
    while (1)
    {
        cout << "i am " << (char*)arg << endl;
        sleep(1);
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }
    // Returning from main calls exit(), which terminates every thread in the process.
    return 0;
}
```

As the figures below show, when the main thread returns from main, the whole process exits, and the worker thread is terminated along with it.

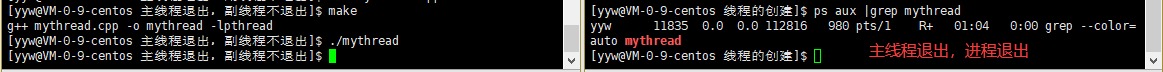

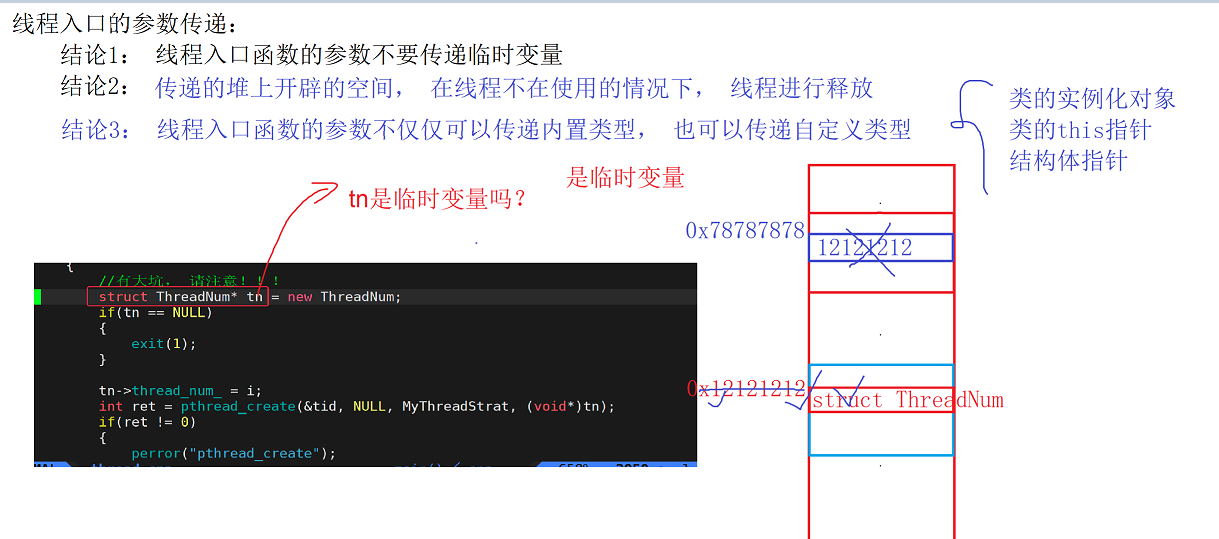

- Verifying how arguments are passed:

- Suppose you want to pass the value 1 into the worker thread. Take the address of the variable, convert it to (void *), and pass it in. Inside the worker thread, convert the (void *) back to (int *) and dereference it.

```cpp
#include <iostream>
#include <unistd.h>
#include <pthread.h>
using namespace std;

void* MyThreadStrat(void* arg)
{
    int* i = (int*)arg;
    while (1)
    {
        cout << "MyThreadStrat:" << *i << endl;
        sleep(1);
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int i = 1;
    int ret = pthread_create(&tid, NULL, MyThreadStrat, (void*)&i);
    if (ret != 0)
    {
        cout << "Thread creation failed!" << endl;
        return 0;
    }
    while (1)
    {
        sleep(1);
        cout << "i am main thread" << endl;
    }
    return 0;
}
```

The argument arrives correctly here, but the pattern is risky because the thread only holds the address of main's local variable i. It works in this program because main never returns, so i stays alive. Suppose the variable went out of scope first, for example a local in a function that returns right after pthread_create. Its stack space would then be released while the worker thread is still reading that address, which is an illegal access.

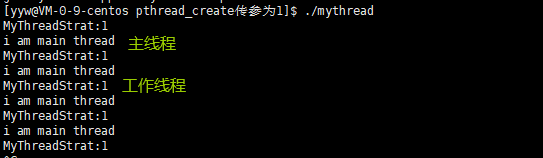

Now create the threads in a loop:

```cpp
#include <iostream>
#include <unistd.h>
#include <pthread.h>
using namespace std;

void* MyThreadStrat(void* arg)
{
    int* i = (int*)arg;   // points at main's single loop variable, shared by all threads
    while (1)
    {
        cout << "MyThreadStrat:" << *i << endl;
        sleep(1);
    }
    return NULL;
}

int main()
{
    pthread_t tid;   // reused for every thread, so only the last ID is kept
    int i = 0;
    for (i = 0; i < 4; i++)
    {
        // Deliberately flawed: every thread receives the same address &i,
        // and main keeps changing the value stored there.
        int ret = pthread_create(&tid, NULL, MyThreadStrat, (void*)&i);
        if (ret != 0)
        {
            cout << "Thread creation failed!" << endl;
            return 0;
        }
    }
    while (1)
    {
        sleep(1);
        cout << "i am main thread" << endl;
    }
    return 0;
}
```

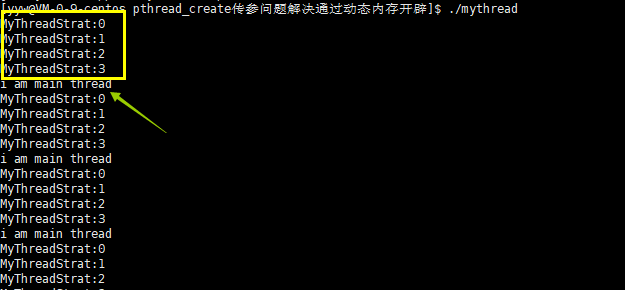

The for loop creates 4 worker threads, and each one receives the address of the same variable i. The loop increments i through 0, 1, 2, 3 and exits when i reaches 4. By the time the worker threads read through the pointer, the value stored at that address is usually already 4, so they print 4.

Solution: allocate the argument dynamically, so that each thread gets its own object.

Passing the this pointer:

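```cpp
#include <cstdio>
#include <pthread.h>

class MyThread
{
public:
    MyThread(const char* name) : name_(name)
    {
    }

    ~MyThread()
    {
    }

    int Start()
    {
        // Pass "this" so the static entry function can reach the object's members.
        int ret = pthread_create(&tid_, NULL, MyThreadStart, (void*)this);
        if (ret != 0)   // pthread_create returns an error number, never a negative value
        {
            return -1;
        }
        return 0;
    }

    int Join()
    {
        return pthread_join(tid_, NULL);
    }

    // Must be static (or a free function): a non-static member function has an
    // implicit "this" parameter and does not match void* (*)(void*).
    static void* MyThreadStart(void* arg)
    {
        MyThread* mt = (MyThread*)arg;
        // On Linux/NPTL pthread_t is an unsigned long, so print it as one.
        printf("%s: %lu\n", mt->name_, (unsigned long)pthread_self());
        return NULL;
    }

private:
    pthread_t tid_;
    const char* name_;
};

int main()
{
    MyThread mt("thread 1");
    if (mt.Start() != 0)
    {
        return -1;
    }
    mt.Join();   // keep mt alive until the thread has finished using it
    return 0;
}
```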

Passing a heap-allocated structure pointer:

```cpp
#include <iostream>
#include <unistd.h>
#include <pthread.h>
using namespace std;

struct ThreadId
{
    int thread_id;
};

void* MyThreadStrat(void* arg)
{
    // Each thread owns the heap object it was given.
    struct ThreadId* tid = (struct ThreadId*)arg;
    for (int n = 0; n < 5; ++n)   // finite loop, so the delete below is actually reached
    {
        cout << "MyThreadStrat:" << tid->thread_id << endl;
        sleep(1);
    }
    delete tid;   // the receiving thread frees its argument when done with it
    return NULL;
}

int main()
{
    pthread_t tid[4];
    for (int i = 0; i < 4; i++)
    {
        // A fresh heap object per thread: its value no longer depends on the loop variable.
        struct ThreadId* id = new ThreadId();
        id->thread_id = i;
        int ret = pthread_create(&tid[i], NULL, MyThreadStrat, (void*)id);
        if (ret != 0)
        {
            cout << "Thread creation failed!" << endl;
            delete id;
            return 0;
        }
    }
    for (int i = 0; i < 4; i++)
    {
        pthread_join(tid[i], NULL);
    }
    cout << "i am main thread" << endl;
    return 0;
}
```

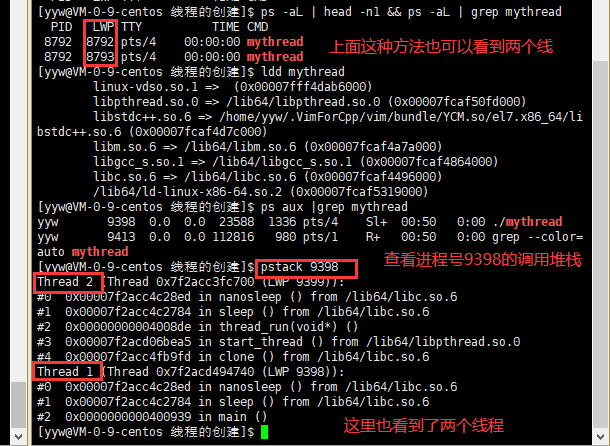

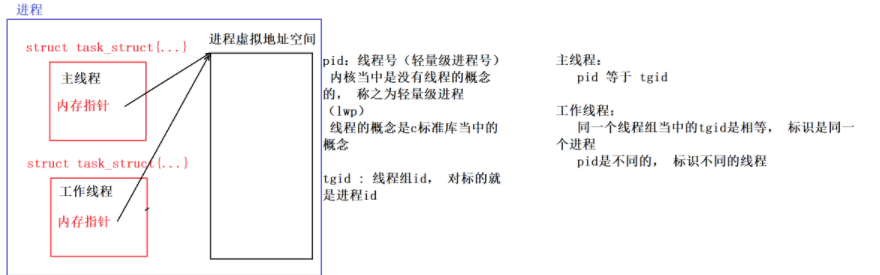

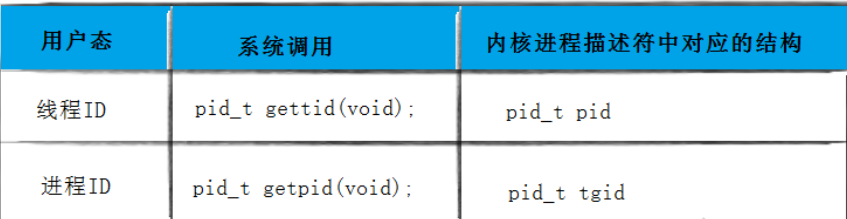

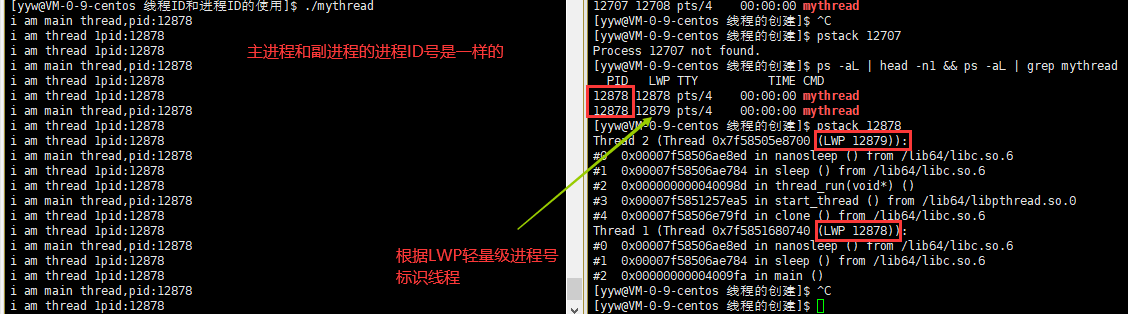

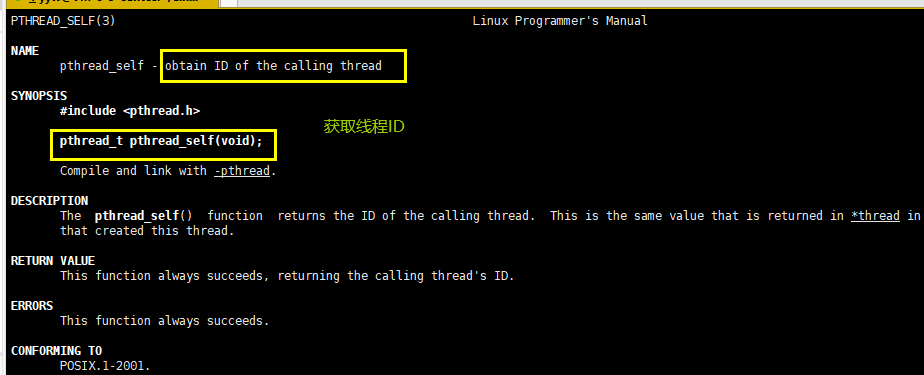

3. Process ID and thread ID

- Linux currently implements threads with the Native POSIX Thread Library, NPTL for short. In this implementation, threads are also called lightweight processes (LWPs). Each user thread corresponds to a scheduling entity in the kernel and has its own process descriptor (a task_struct).

- Before threads existed, a process corresponded to one process descriptor in the kernel and one process ID. Threads change this. One user process contains N user threads, and each thread is an independent scheduling entity with its own process descriptor in the kernel. The relationship between a process and its kernel descriptors suddenly becomes 1:N. Yet the POSIX standard requires every thread in a process to get the same process ID from getpid(). How is this resolved?

- A multithreaded process is also called a thread group, and each thread in the group has its own task_struct in the kernel. The pid field of the process descriptor looks like a process ID, but it actually holds the thread ID. The tgid field (Thread Group ID) holds the user-level process ID, which is what getpid() returns.

```cpp
#include <iostream>
#include <pthread.h>
#include <sys/types.h>
#include <unistd.h>
using namespace std;

void* thread_run(void* arg)
{
    while (1)
    {
        cout << "i am " << (char*)arg << " pid:" << getpid() << endl;
        sleep(1);
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }
    while (1)
    {
        cout << "i am main thread,pid:" << getpid() << endl;
        sleep(2);
    }
    return 0;
}
```

- The -L option of the ps command shows the following extra columns:

- LWP: the thread ID, i.e. the value returned by the gettid() system call.

- NLWP: the number of threads in the thread group.

- The output shows that the process ID is 12878 and that one of the threads also has ID 12878. This is no coincidence. The first thread of a thread group is called the main thread in user space and the group leader in the kernel. When the first thread is created, the kernel sets the thread group ID to that thread's ID. It also makes the group_leader pointer point to the thread itself, that is, to the main thread's process descriptor. So every thread group contains one thread whose ID equals the process ID, and that thread is the group's main thread.

- The kernel allocates the IDs of the other threads in the group. Their thread group ID always equals the main thread's, whether a thread was created directly by the main thread or by another created thread.

- Note that threads differ from processes here. A process has a parent process, but within a thread group all threads are peers.

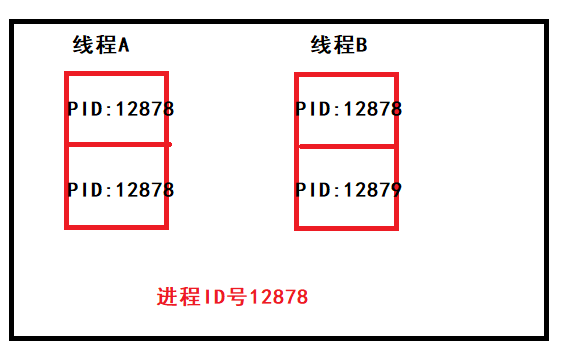

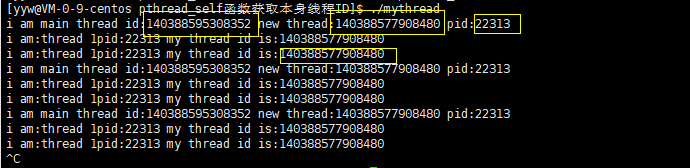

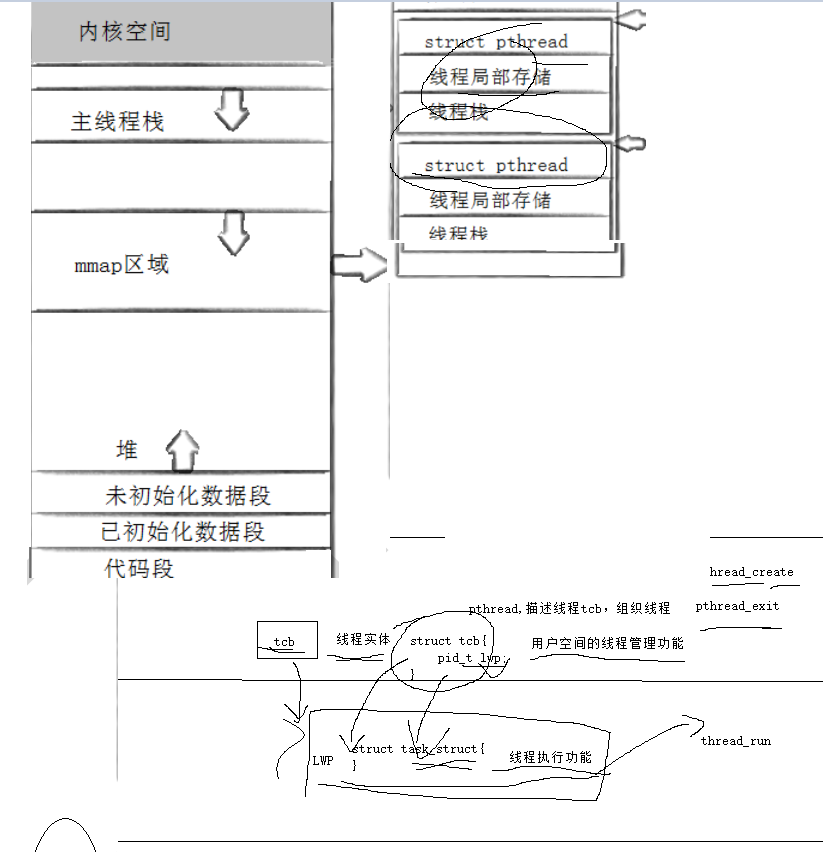

4. Thread ID and process address space layout

- pthread_create generates a thread ID and stores it at the address given by its first parameter. This thread ID is not the same as the thread ID discussed above.

- The thread ID discussed above belongs to process scheduling. A thread is a lightweight process and the smallest unit the OS scheduler handles, so it needs a value that uniquely identifies it.

- The value that pthread_create stores through its first parameter is the address of a block of virtual memory, namely the new thread's control structure in the process address space. That address is the thread ID at the level of the NPTL thread library. All later thread-library operations locate the thread by this ID.

- NPTL provides the pthread_self function, which returns the calling thread's own ID.

```cpp
#include <iostream>
#include <pthread.h>
#include <sys/types.h>
#include <unistd.h>
using namespace std;

void* thread_run(void* arg)
{
    while (1)
    {
        cout << "i am:" << (char*)arg << " pid:" << getpid() << " " << "my thread id is:" << pthread_self() << endl;
        sleep(1);
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }
    while (1)
    {
        cout << "i am main thread id:" << pthread_self() << " " << "new thread:" << tid << " " << "pid:" << getpid() << endl;
        sleep(2);
    }
    return 0;
}
```

What type is pthread_t? That depends on the implementation. In the NPTL implementation currently used on Linux, a thread ID of type pthread_t is essentially an address in the process's address space.

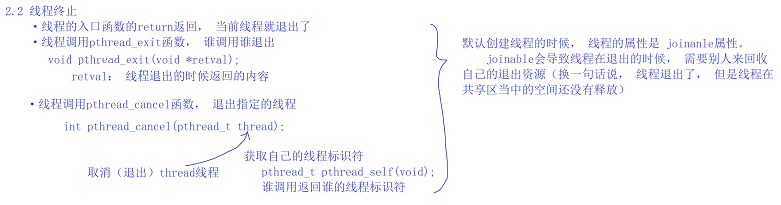

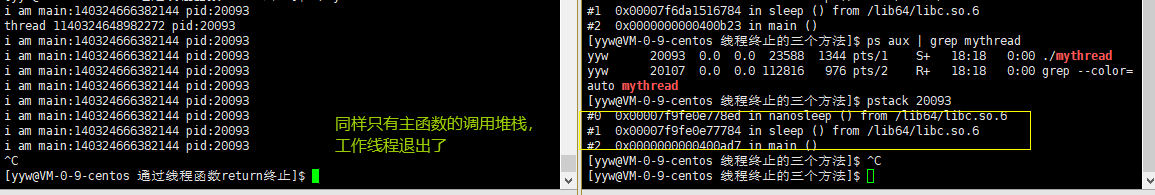

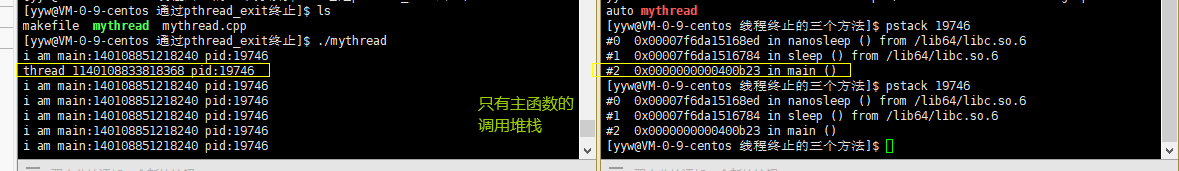

5. Thread termination

- To terminate only one thread without terminating the whole process, there are three methods:

- Return from the thread function. This does not work for the main thread, because returning from main is equivalent to calling exit, which terminates the whole process.

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
    sleep(1);
    return (void*)10;   // the exit code travels back as a pointer value
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    for (int n = 0; n < 3; n++)   // finite, so that pthread_join below is reached
    {
        cout << "i am main:" << pthread_self() << " pid:" << getpid() << endl;
        sleep(2);
    }
    void* tmp = NULL;
    pthread_join(tid, &tmp);
    cout << "thread exit code:" << (long long)tmp << endl;   // prints 10
    return 0;
}
```

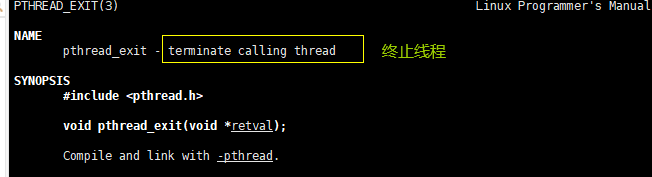

- A thread can call pthread_exit to terminate itself. Whichever thread calls it is the one that exits.

Function: terminate the calling thread

prototype

void pthread_exit(void *value_ptr);

parameter

value_ptr: the thread's exit value. It must not point to a local variable of the exiting thread, because that variable no longer exists once the thread has exited.

Return value: none. Like a process that exits, a thread cannot return to its caller (itself).

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
    sleep(1);
    pthread_exit((void*)10);   // same effect as "return (void*)10;" here
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    for (int n = 0; n < 3; n++)   // finite, so that pthread_join below is reached
    {
        cout << "i am main:" << pthread_self() << " pid:" << getpid() << endl;
        sleep(2);
    }
    void* tmp = NULL;
    pthread_join(tid, &tmp);
    cout << "thread quit code:" << (long long)tmp << endl;   // the value passed to pthread_exit
    return 0;
}
```

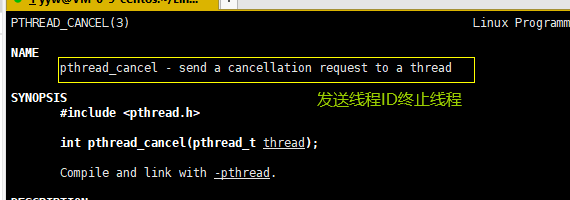

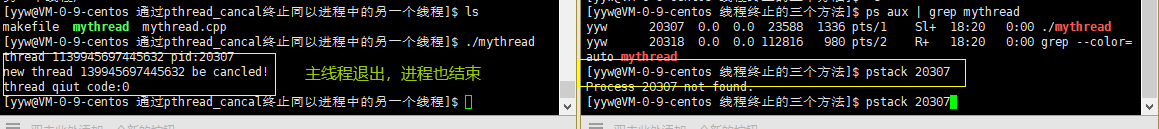

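- A thread can call pthread_cancel to terminate another thread in the same process.

Function: cancel an executing thread. It sends the thread a cancellation request; with the default settings, the target exits when it reaches a cancellation point such as sleep.

prototype

int pthread_cancel(pthread_t thread);

parameter

thread: ID of the thread to cancel

Return value: 0 on success; an error code on failure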

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    while (1)
    {
        cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
        sleep(10);   // sleep is a cancellation point: the cancel request takes effect here
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    sleep(10);
    pthread_cancel(tid);
    cout << "new thread " << tid << " be canceled!" << endl;
    void* tmp = NULL;
    pthread_join(tid, &tmp);
    // A canceled thread's exit value is PTHREAD_CANCELED, i.e. ((void*)-1), so this prints -1.
    cout << "thread quit code:" << (long long)tmp << endl;
    return 0;
}
```

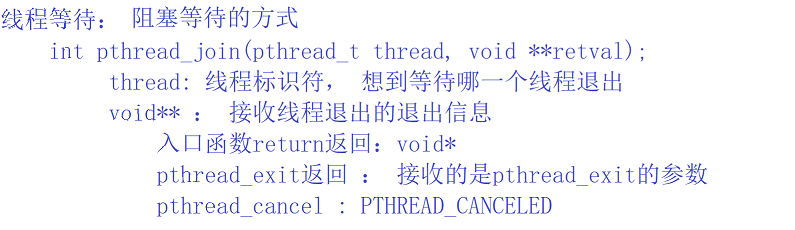

6. Thread waiting

- Why do we need to wait for threads?

- The space of a thread that has exited is not released. It remains in the process's address space.

- A newly created thread does not reuse the space of a thread that has exited but has not been joined.

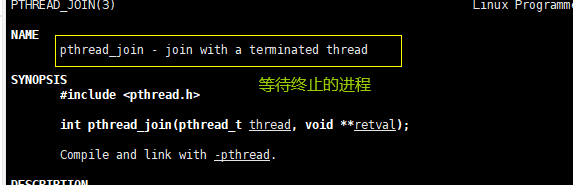

Function: wait for the thread to end

prototype

int pthread_join(pthread_t thread, void **value_ptr);

parameter

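thread: ID of the thread to wait for

value_ptr: points to a void * that receives the thread's exit value. This is the value returned from the entry function, the value passed to pthread_exit, or PTHREAD_CANCELED if the thread was canceled with pthread_cancel. Pass NULL if the exit value is not needed.

Return value: 0 on success; an error code on failure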

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    while (1)
    {
        cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
        sleep(10);
    }
    return NULL;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    cout << "i am main:" << pthread_self() << " pid:" << getpid() << endl;
    sleep(2);
    void* tmp = NULL;
    // The new thread never exits, so main blocks here indefinitely:
    // pthread_join waits until the target thread has terminated.
    pthread_join(tid, &tmp);
    return 0;
}
```

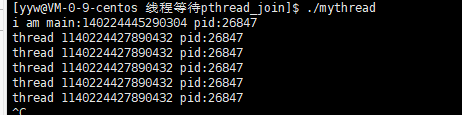

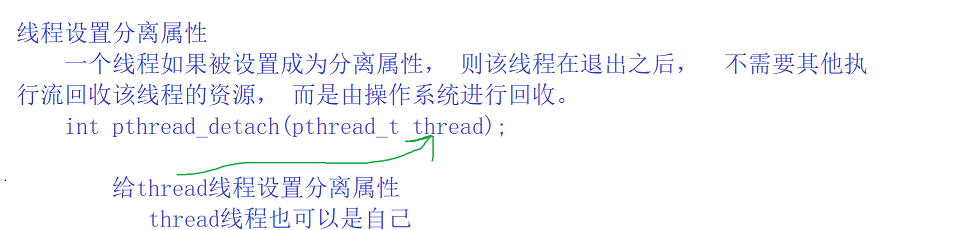

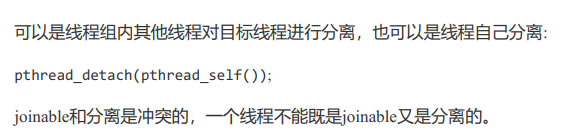

7. Thread separation

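- By default, a newly created thread is joinable. After it exits, pthread_join must be called on it. Otherwise its resources cannot be released, which leaks system resources.

- If we do not care about a thread's return value, joining is a burden. Instead, we can tell the system to release the thread's resources automatically when it exits. To do this, detach the thread with int pthread_detach(pthread_t thread); a thread can also detach itself with pthread_detach(pthread_self()). A detached thread can no longer be joined.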

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    pthread_detach(pthread_self());   // from now on the system reclaims this thread's resources itself
    cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
    sleep(1);
    return (void*)10;   // nobody can collect this value once the thread is detached
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    sleep(2);   // give the thread time to detach itself and finish
    // Do not call pthread_join or pthread_cancel on tid here: the thread is
    // detached and has already exited, so tid is no longer a valid thread ID.
    cout << "new thread " << tid << " was detached; main does not join it" << endl;
    return 0;
}
```

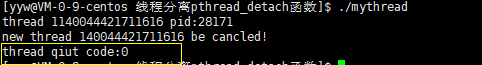

A detached thread still lives in the same process address space. Detaching only means the threads no longer wait for each other. If the detached thread crashes, the whole process still crashes.

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

void* thread_run(void* arg)
{
    pthread_detach(pthread_self());
    cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
    sleep(1);
    // Detached or not, the division by zero raises SIGFPE (on x86 Linux),
    // which terminates the whole process.
    volatile int zero = 0;   // volatile keeps the compiler from folding the division
    int a = 10;
    a = a / zero;
    return (void*)(long)a;
}

int main()
{
    pthread_t tid;
    int ret = pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    if (ret != 0)
    {
        return -1;
    }

    sleep(10);   // the process is killed about 1 second in
    cout << "main thread is still running" << endl;   // never printed
    return 0;
}
```

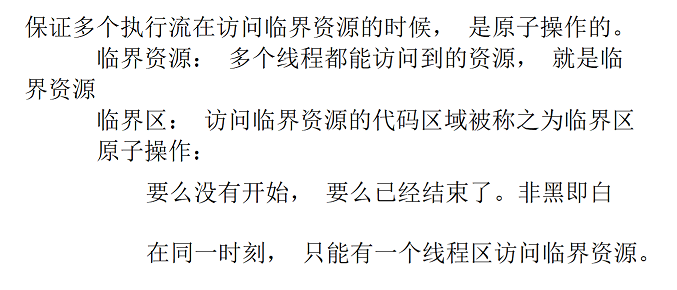

4, Thread mutual exclusion

1. Concepts related to mutual exclusion between processes and threads

- Critical resource: a resource shared by multiple threads (execution flows).

- Critical area: the code inside each thread that accesses a critical resource.

- Mutual exclusion: at any moment, mutual exclusion ensures that at most one execution flow enters the critical area and accesses the critical resource. It is the usual way to protect critical resources.

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
#include <sys/types.h>
using namespace std;

int a = 10;   // global variable: a critical resource shared by all threads

void* thread_run(void* arg)
{
    while (1)
    {
        cout << (char*)arg << " " << pthread_self() << " pid:" << getpid() << endl;
        cout << (char*)arg << " global-variable:" << a << " &a:" << &a << endl;
        sleep(1);
    }
    return (void*)10;
}

int main()
{
    pthread_t tid;
    pthread_t tid1;
    pthread_create(&tid, NULL, thread_run, (void*)"thread 1");
    pthread_create(&tid1, NULL, thread_run, (void*)"thread 2");
    cout << "main:" << pthread_self() << " pid:" << getpid() << endl;

    cout << "before: main global-variable:" << a << " &a:" << &a << endl;
    sleep(5);
    a = 100;   // unsynchronized write that the other threads read: a data race, shown on purpose
    cout << "after: main global-variable:" << a << " &a:" << &a << endl;
    sleep(3);  // let the worker threads print the new value

    pthread_cancel(tid);
    pthread_cancel(tid1);
    void* tmp = NULL;
    pthread_join(tid, &tmp);
    cout << "thread quit code:" << (long long)tmp << endl;   // -1, i.e. PTHREAD_CANCELED
    pthread_join(tid1, NULL);
    return 0;
}
```

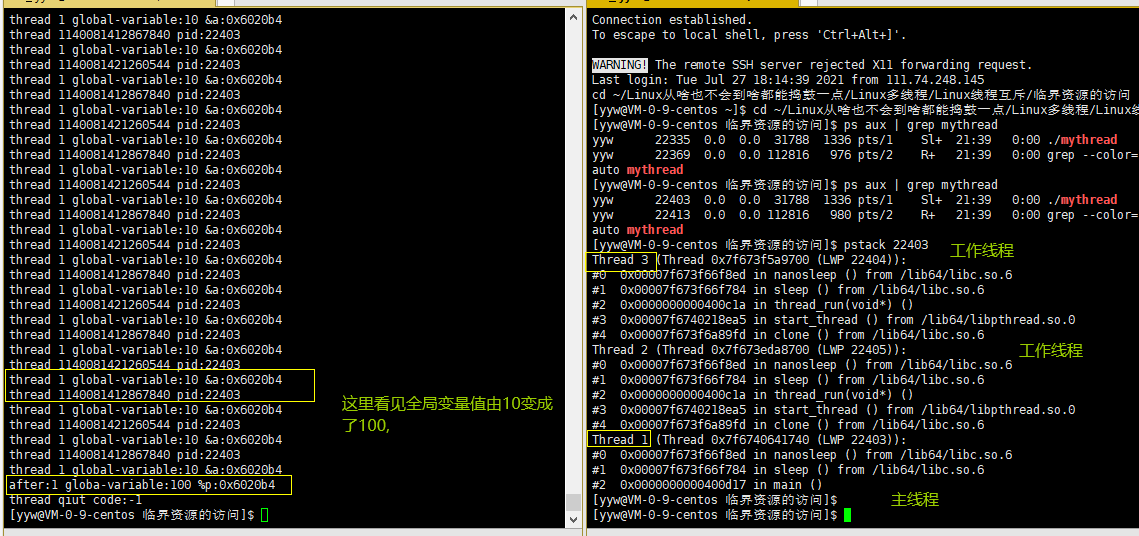

Here the main thread modifies the critical resource (the global variable a), and the other threads see the new value at the same address.

- Atomicity (its implementation is discussed later): an operation that cannot be interrupted by any scheduling mechanism. It has only two states: completed or not yet performed.

2. Thread safety

Thread safety means that multiple threads executing the same piece of code concurrently do not produce different results. In other words, multiple execution flows accessing critical resources do not make the program's result ambiguous.

- Execution flow: think of it as a thread

- Access: an operation on a critical resource

- Critical resource: a resource that can be accessed by multiple threads

- e.g. a global variable, a structure (not defined inside a thread entry function), or a pointer to an instance of a class

- Critical area: the region of code that operates on critical resources

- Ambiguity: the program can produce more than one result

3. Thread unsafe

1. The ++ operation in the normal case

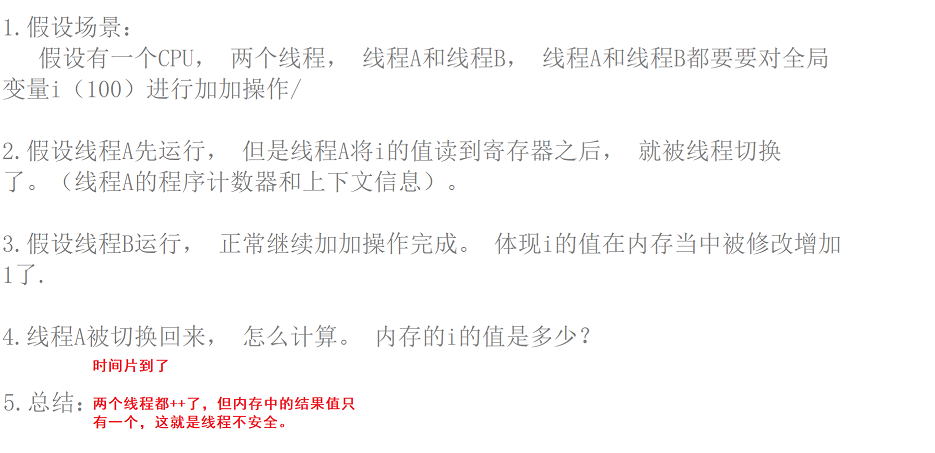

Normally, suppose we define a variable i that is stored in memory. Computation on i is done by the CPU, whose two core functions are arithmetic and logic operations. To add 1 to i = 10, the value 10 is first loaded from memory into a register, so the register now holds 10. The CPU performs the +1 on the register, which leaves 11 in the register. Finally, the register value is written back to memory, and i becomes 11.

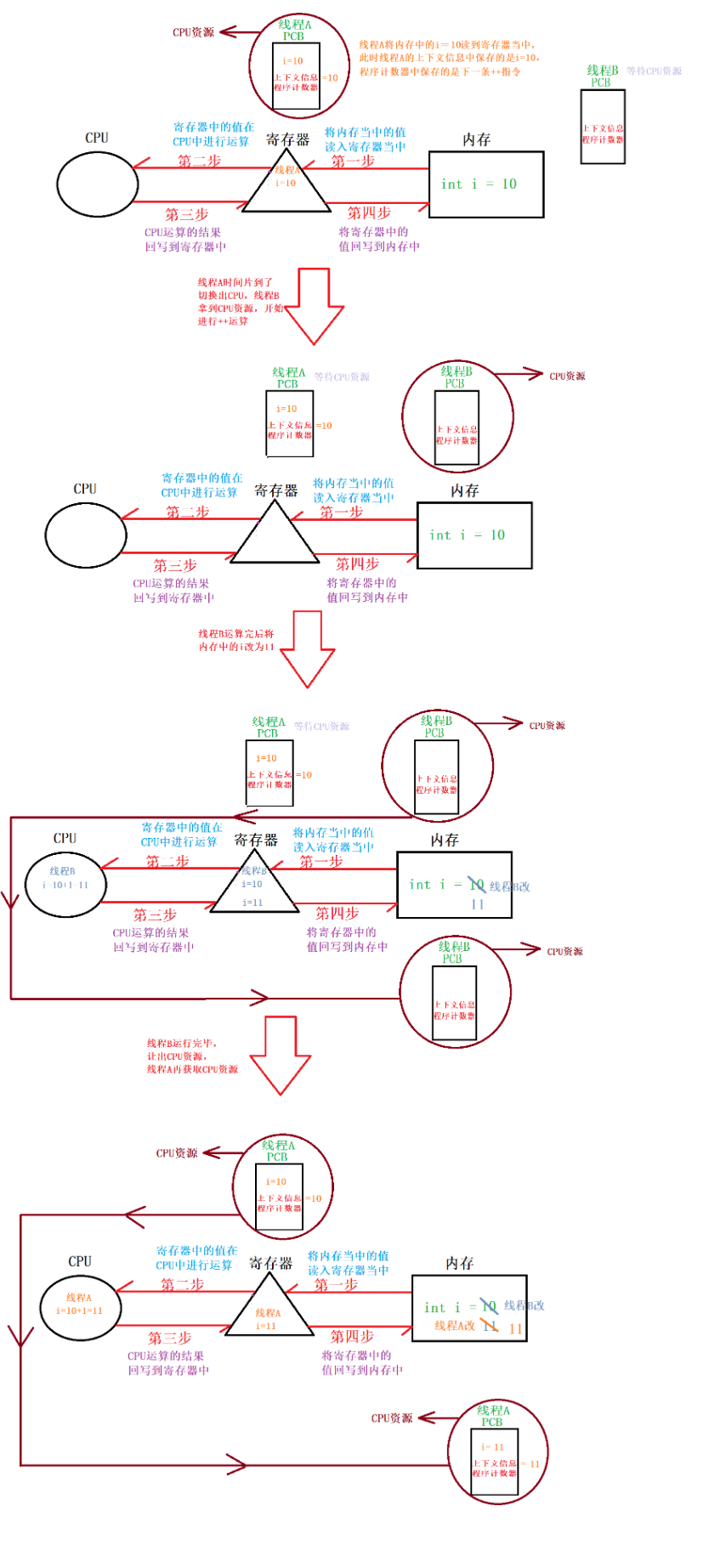

2. The thread-unsafe ++ operation

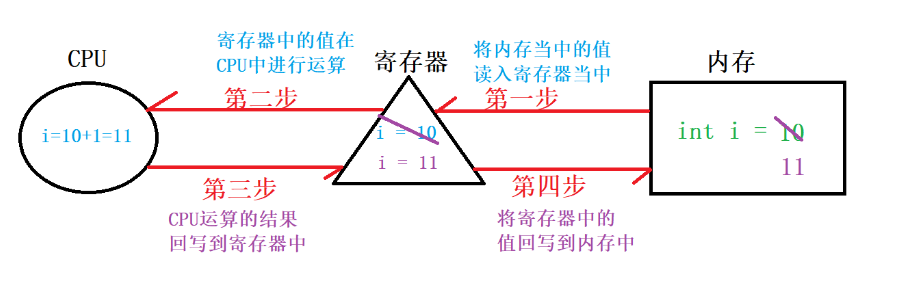

- Based on the normal ++ operation described above, the steps are as follows.

- Suppose there are two threads in the scenario, and each performs a ++ operation.

Call them thread A and thread B. Both want to increment the global variable i.

- The description involves thread switching, context information, and the program counter.

1. Assume the global variable i is 10. Thread A loads i = 10 from memory into a register.
2. At that moment A's time slice runs out and A is switched out. Its context saves the register value i = 10, and its program counter records that the next instruction to execute is the ++.
3. Thread B now gets the CPU and also wants to increment i. A has not written any result back, so B reads i = 10 from memory into a register, performs the ++ in the CPU to get 11, and writes 11 back to memory.
4. Memory now holds i = 11, and B gives up the CPU.
5. A is switched back in. It restores its context (register value 10) and resumes at the ++ instruction recorded in its program counter.
6. A computes 11 and writes it back to memory, overwriting B's result with the same value.

Each thread incremented i once, so i should be 12, but it is 11.

- summary

Thread A increments the global variable i once and thread B increments it once, yet i ends up as 11 instead of 12. When multiple threads operate on a critical resource at the same time, the result can be ambiguous. This is what thread-unsafe means.

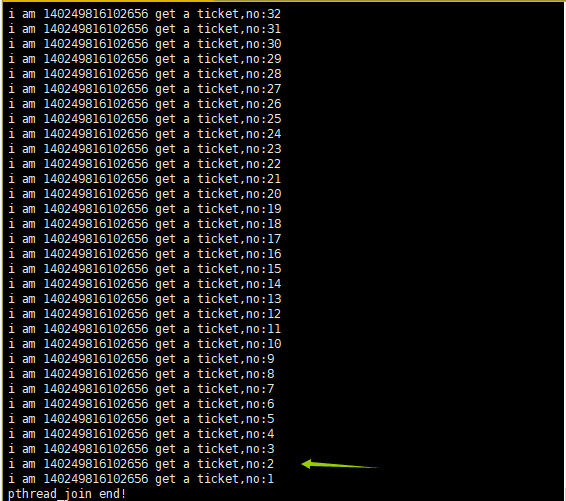

3. Implementation of thread-unsafe code (scalpers grabbing tickets)

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
using namespace std;
int ticket = 1000;

void* get_ticket(void* arg)
{
    while (1)
    {
        if (ticket > 0)
        {
            cout << "i am " << pthread_self() << " get a ticket,no:" << ticket << endl;
            ticket--;
        }
        else
        {
            break;
        }
    }
    return NULL;
}

int main()
{
    pthread_t tid[4];
    for (int i = 0; i < 4; i++)
    {
        int ret = pthread_create(&tid[i], NULL, get_ticket, NULL);
        if (ret != 0)
        {
            cout << "Thread creation failed!" << endl;
        }
    }
    for (int i = 0; i < 4; i++)
    {
        pthread_join(tid[i], NULL);
    }

    cout << "pthread_join end!" << endl;
    return 0;
}
```

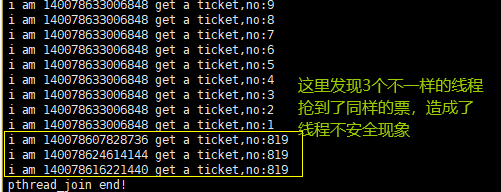

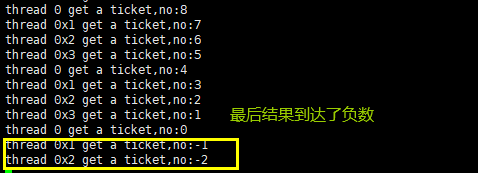

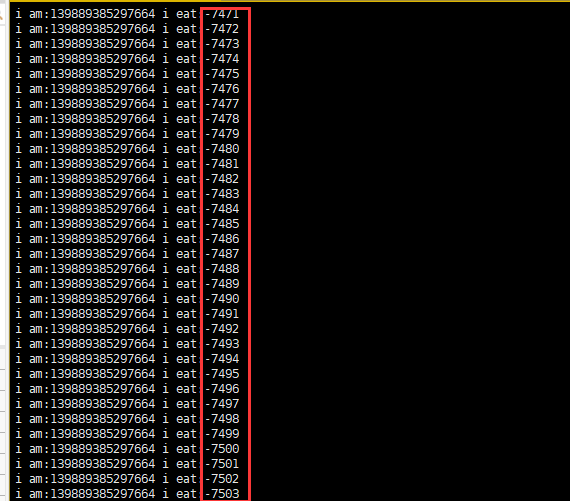

As the figures above show, two threads both got ticket number 819. That is ambiguity, in other words, thread unsafety.

- There are two problems:

- Several threads may get the same ticket, and the ticket count may even go negative when several threads pass the ticket > 0 check before any of them decrements (a mutex solves this).

- Tickets are distributed unfairly: thread A may take almost all of them while thread B gets only one.

4. Mutex

- In most cases, the data a thread uses lives in local variables, which are stored on the thread's own stack. Such variables belong to a single thread, and other threads cannot access them.

- Sometimes, however, variables must be shared between threads. Threads use these shared variables to interact through shared data.

- Concurrent operations on shared variables by multiple threads cause problems.

```cpp
#include <iostream>
#include <pthread.h>
#include <unistd.h>
using namespace std;
int ticket = 100;

void* get_ticket(void* arg)
{
    long num = (long)arg;   // the thread number was passed by value, not as an address
    while (1)
    {
        if (ticket > 0)
        {
            sleep(1);   // simulate a long piece of business logic inside the critical area
            cout << "thread " << num << " get a ticket,no:" << ticket << endl;
            ticket--;
        }
        else
        {
            break;
        }
    }
    return NULL;
}

int main()
{
    pthread_t tid[4];
    for (long i = 0; i < 4; i++)
    {
        pthread_create(tid + i, NULL, get_ticket, (void*)i);   // tid + i, not tid + 1
    }
    for (int i = 0; i < 4; i++)
    {
        pthread_join(tid[i], NULL);
    }
    return 0;
}
```

- Why might the result be wrong?

- After the if statement finds the condition true, execution can switch to another thread.

- sleep simulates a long piece of business logic, and during that time many threads may enter the same code section.

- The --ticket operation itself is not atomic.

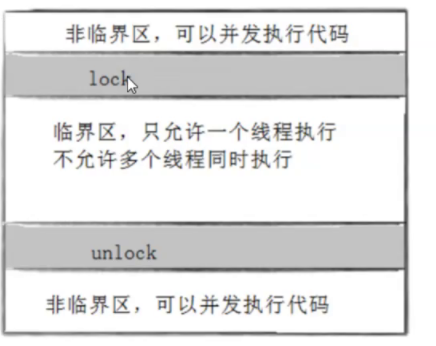

- To solve the problems above, three things are needed:

- The code must be mutually exclusive. While one thread is executing inside the critical area, no other thread may enter it.

- Several threads may request to execute the critical-area code at the same time while no thread is inside. In that case, only one of them may be allowed in.

- A thread that is not executing inside the critical area must not prevent other threads from entering it.

Meeting these three requirements essentially calls for a lock. The lock provided on Linux is called a mutex.

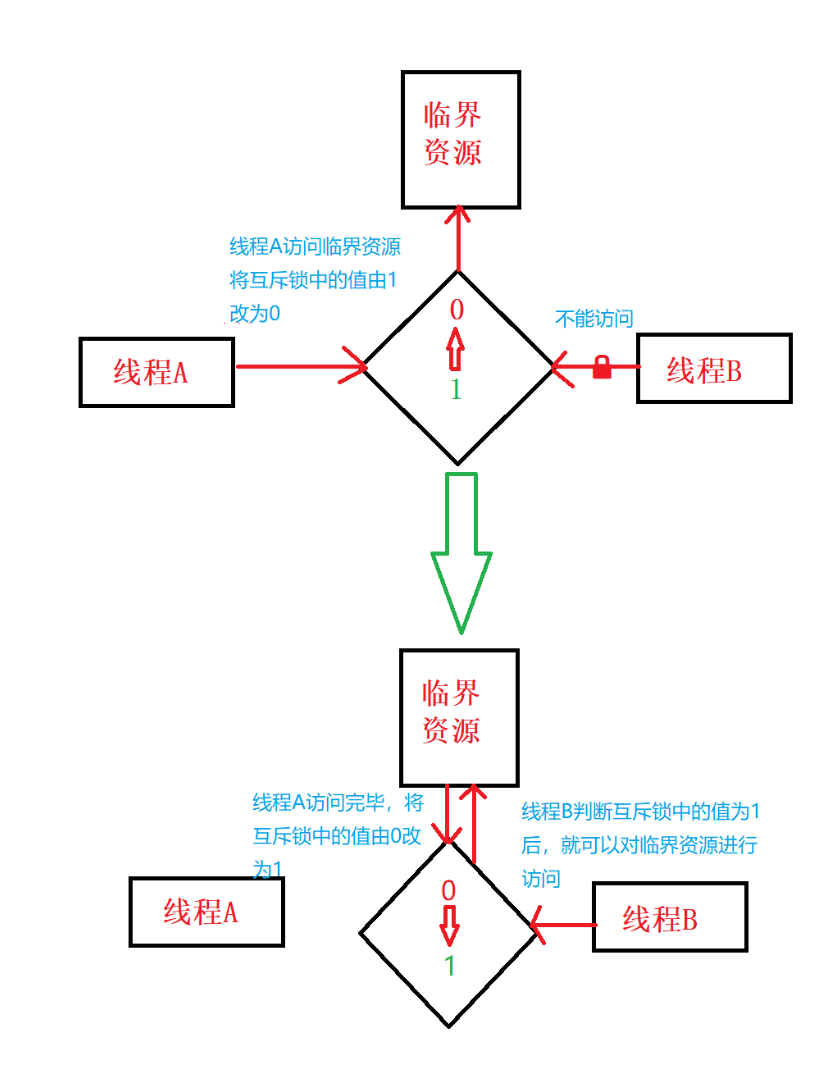

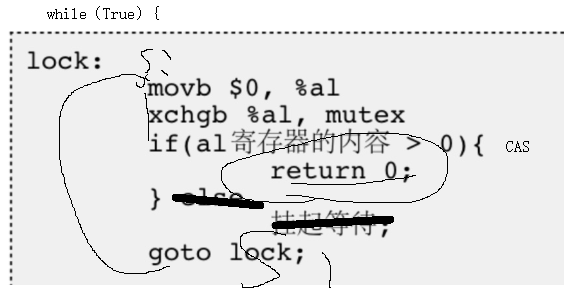

5. Principle of mutex

1. What is a mutex

- At the bottom layer, a mutex lock is essentially a counter that can take only two values, 1 and 0:

- 1: the critical resource can currently be accessed.

- 0: the critical resource cannot currently be accessed.

2. Mutex logic

- Call the locking interface, and check whether the value of the internal counter of the locking interface is 1. If it is 1, it can be accessed; When the yoke is locked successfully, the value of the counter will be changed from 1 to 0; If 0, it cannot be accessed.

- -Call the unlocking logic, and the value of the counter changes from 0 to 1, indicating that the resource can be used.

Suppose there is A critical resource, A thread A and A thread B. according to the previous idea of scalpers grabbing tickets, as long as the thread has A time slice, it can access this critical resource. Now we add A mutex to thread A and thread B. suppose that thread A wants to access the critical resource, it must first obtain the mutex, and the value in the mutex is 1, It means that thread A can access the critical resource at present, and then change 1 in the mutex to 0. At this time, if thread B wants to access the critical resource, it needs to obtain the mutex first, and the value in the mutex is 0, so thread B cannot access the critical resource at this time. After thread A accesses it, the lock will be released, and the value in the mutex will change from 0 to 1, At this time, thread B judges that the value in the mutex becomes 1 and can access the critical resources; Mutex ensures that the current critical resource can only be accessed by one execution flow at the same time.

Note: if several threads access a critical resource that requires mutual exclusion, all of them must lock the same mutex. Every thread must then acquire that lock before touching the resource (counter 1: may access; counter 0: may not). Suppose only thread A locks and thread B does not. While A holds the lock (counter 0) and is using the resource, B can still access the resource directly, because B never checks the lock at all. The result is not thread-safe.

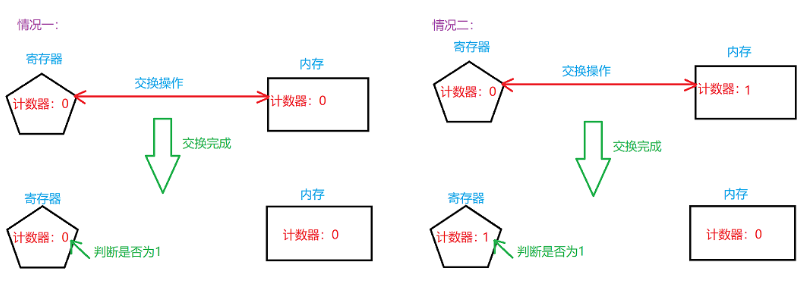
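Here is a minimal sketch of this rule. It assumes a shared counter stands in for the critical resource; `run_counter_demo` and `add_many` are hypothetical names. Every thread locks the same `g_counter_lock` before touching the counter, so the total comes out exact. A thread that skipped the lock could interleave its `++` with another thread's and lose updates.

```cpp
#include <pthread.h>

// Shared resource and the ONE mutex every thread must use for it.
static long g_counter = 0;
static pthread_mutex_t g_counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void* add_many(void* arg)
{
    const long times = *static_cast<const long*>(arg);
    for (long i = 0; i < times; ++i) {
        pthread_mutex_lock(&g_counter_lock);    // counter 1 -> 0: this thread owns the lock
        ++g_counter;                            // critical section: ++ alone is not atomic
        pthread_mutex_unlock(&g_counter_lock);  // counter 0 -> 1: another thread may enter
    }
    return nullptr;
}

// Starts up to 16 threads that each add `times` to g_counter; returns the final value.
long run_counter_demo(int nthreads, long times)
{
    g_counter = 0;
    pthread_t tids[16];
    int created = 0;
    for (int i = 0; i < nthreads && i < 16; ++i)
        if (pthread_create(&tids[created], nullptr, add_many, &times) == 0)
            ++created;
    for (int i = 0; i < created; ++i)
        pthread_join(tids[i], nullptr);
    return g_counter;
}
```

Called from `main`, `run_counter_demo(4, 100000)` returns 400000 whenever all four threads start.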

3. Locking logic

Locking works in three steps:

1. The value 0 is first loaded into a register.
2. Whatever value the counter in memory currently holds, the register value and the memory value are swapped. Memory therefore always ends up holding 0.
3. The thread checks the value now in the register. If it is 1, the counter was 1 before the swap, so the lock has been acquired. If it is 0, the lock was already held, so the lock cannot be acquired and the thread must wait.

- As the earlier examples showed, a plain i++ or ++i is not atomic, so it can cause data consistency problems.

- To implement a mutex, most architectures provide a swap or exchange instruction that exchanges the contents of a register and a memory location. Because it is a single instruction, it is atomic. Even on multiprocessor platforms, bus cycles for memory access are serialized: while the exchange instruction on one processor is executing, an exchange instruction on another processor must wait for the bus cycle. With this instruction, the lock and unlock pseudocode can be rewritten as follows:

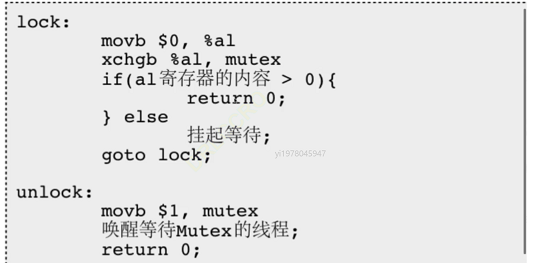

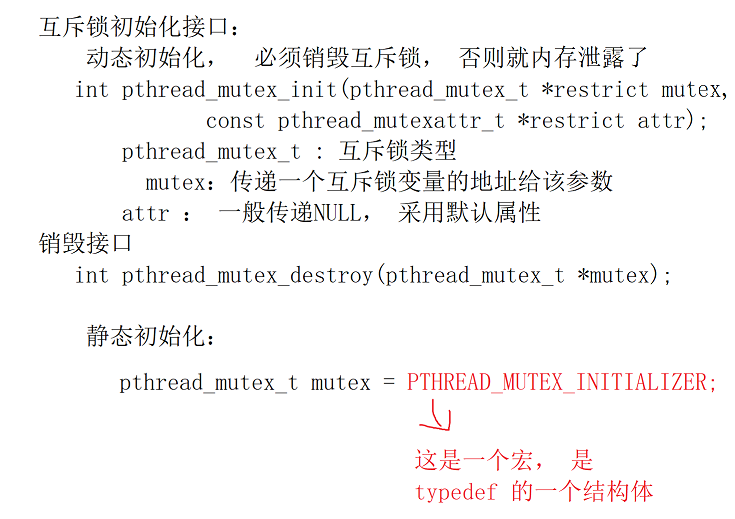

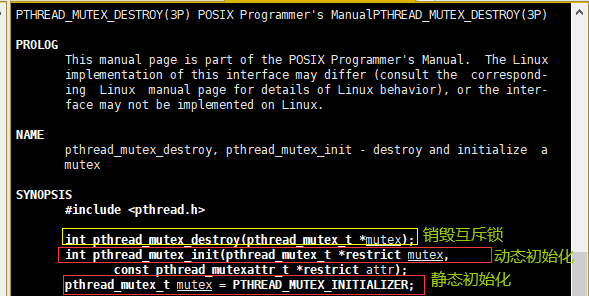
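The exchange-based pseudocode can be approximated in C++ with `std::atomic<int>::exchange`, which performs the register/memory swap as one atomic operation. On x86, compilers typically emit an `xchg` instruction for it. This is a spinning sketch for illustration only. It is not how `pthread_mutex_lock` is implemented: a real pthread mutex typically puts a waiting thread to sleep rather than retrying. `XchgLock` and `run_xchg_demo` are hypothetical names.

```cpp
#include <atomic>
#include <pthread.h>
#include <sched.h>

// Exchange-based lock. Convention from the text: 1 = lock free, 0 = lock held.
class XchgLock {
public:
    void lock()
    {
        for (;;) {
            // Put 0 into a "register" and swap it with the memory value in ONE
            // atomic step; afterwards memory holds 0 and `reg` holds the old value.
            int reg = mutex_.exchange(0, std::memory_order_acquire);
            if (reg == 1)
                return;          // old value was 1: the lock was free and is now ours
            sched_yield();       // old value was 0: someone holds it, yield and retry
        }
    }
    void unlock()
    {
        mutex_.store(1, std::memory_order_release);  // put the 1 back into memory
    }
private:
    std::atomic<int> mutex_{1};
};

static XchgLock g_xlock;
static long g_xcount = 0;

static void* xchg_worker(void*)
{
    for (int i = 0; i < 100000; ++i) {
        g_xlock.lock();
        ++g_xcount;              // protected by g_xlock
        g_xlock.unlock();
    }
    return nullptr;
}

// Runs four workers and returns the final count.
long run_xchg_demo()
{
    g_xcount = 0;
    pthread_t t[4];
    int created = 0;
    for (int i = 0; i < 4; ++i)
        if (pthread_create(&t[created], nullptr, xchg_worker, nullptr) == 0)
            ++created;
    for (int i = 0; i < created; ++i)
        pthread_join(t[i], nullptr);
    return g_xcount;
}
```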

6. Interface of mutex

1. Initialize mutex

- There are two ways to initialize mutexes:

- Method 1: static initialization.

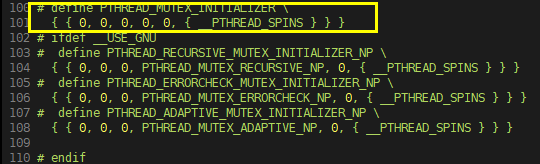

pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;

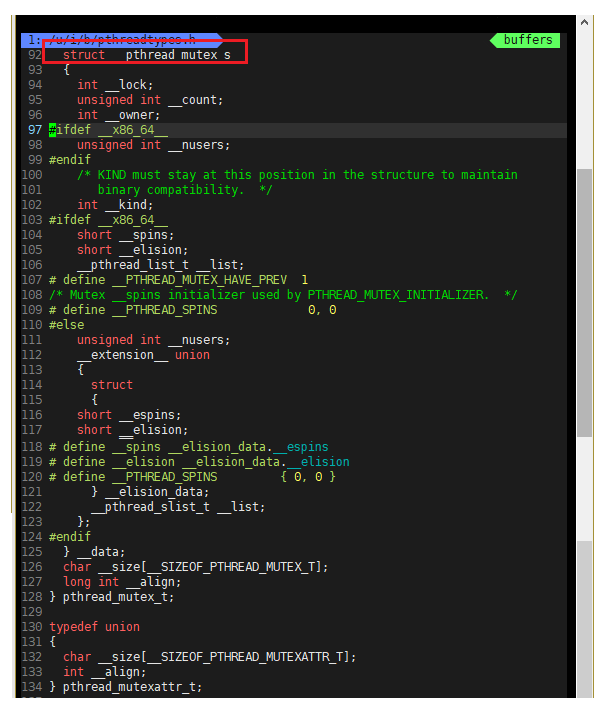

The macro definition can be viewed in /usr/include/pthread.h (for example, with vim). PTHREAD_MUTEX_INITIALIZER is the initializer for a pthread_mutex_t variable, as shown in the following figure:

pthread_mutex_t is actually a union, defined in /usr/include/bits/pthreadtypes.h (also viewable with vim). Static initialization simply initializes this union with the macro above, as shown in the figure below.

- Method 2: dynamic initialization:

int pthread_mutex_init(pthread_mutex_t *restrict mutex, const pthread_mutexattr_t *restrict attr);

Parameters:

- mutex: the mutex to initialize

- attr: mutex attributes; pass NULL to use the default attributes

2. Destroy the mutex

- A mutex initialized with PTHREAD_MUTEX_INITIALIZER does not need to be destroyed.

- Do not destroy a mutex that has been locked.

- For the mutex that has been destroyed, ensure that no thread will try to lock it later.

int pthread_mutex_destroy(pthread_mutex_t *mutex);

A mutex initialized dynamically with pthread_mutex_init() should be destroyed with this function once it is no longer needed.

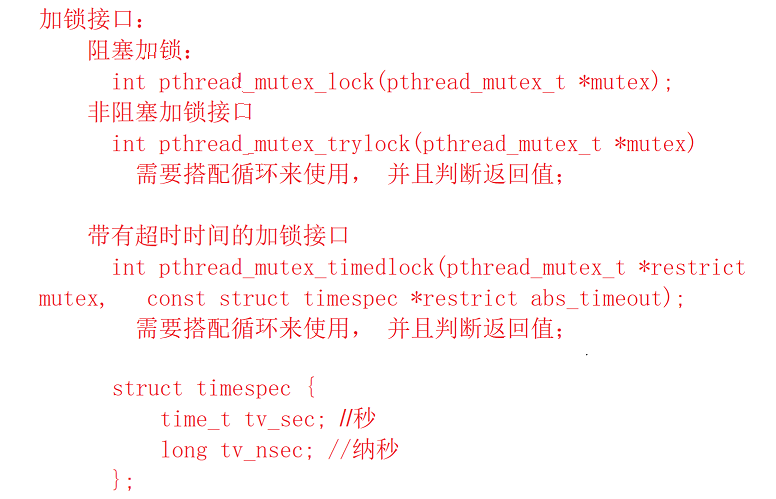

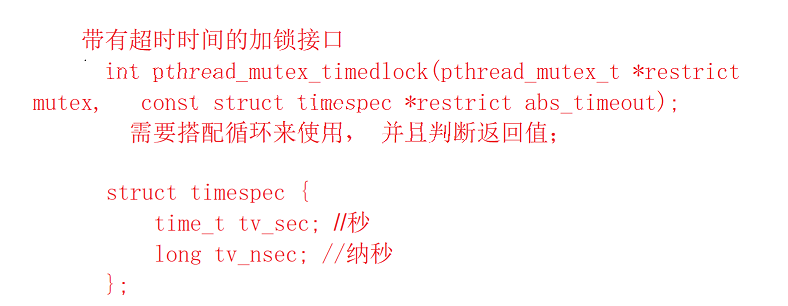
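This minimal sketch shows both initialization methods side by side; `make_dynamic_mutex`, `free_dynamic_mutex` and `mutex_lifecycle_demo` are hypothetical helpers. The statically initialized mutex is never destroyed. The heap-allocated one is initialized with `pthread_mutex_init()`, unlocked, and only then destroyed, following the rules above.

```cpp
#include <pthread.h>

// Method 1: static initialization. No pthread_mutex_destroy() needed.
static pthread_mutex_t g_static_lock = PTHREAD_MUTEX_INITIALIZER;

// Method 2: dynamic initialization, here for a mutex living on the heap.
// Returns nullptr if pthread_mutex_init() fails.
pthread_mutex_t* make_dynamic_mutex()
{
    pthread_mutex_t* m = new pthread_mutex_t;
    if (pthread_mutex_init(m, nullptr) != 0) {   // nullptr attr: default attributes
        delete m;
        return nullptr;
    }
    return m;
}

// Destroy only an unlocked mutex that no thread will use again.
// The memory is freed only if pthread_mutex_destroy() succeeds.
int free_dynamic_mutex(pthread_mutex_t* m)
{
    int rc = pthread_mutex_destroy(m);   // 0 on success, an error number otherwise
    if (rc == 0)
        delete m;
    return rc;
}

// Exercises both kinds once; returns true if every step succeeded.
bool mutex_lifecycle_demo()
{
    pthread_mutex_lock(&g_static_lock);
    pthread_mutex_unlock(&g_static_lock);

    pthread_mutex_t* m = make_dynamic_mutex();
    if (m == nullptr)
        return false;
    pthread_mutex_lock(m);
    pthread_mutex_unlock(m);             // unlock first: never destroy a locked mutex
    return free_dynamic_mutex(m) == 0;
}
```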

3. Locking and unlocking of mutex lock

1. Blocking lock interface

int pthread_mutex_lock(pthread_mutex_t *mutex);

- If the mutex counter is 1 when this interface is called, the lock is acquired (the counter becomes 0) and the function returns.

- If the mutex counter is 0 when this interface is called, the calling execution flow blocks inside the function until the lock can be acquired.

2. Non-blocking lock interface

int pthread_mutex_trylock(pthread_mutex_t *mutex);

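- This call returns immediately whether or not the lock is acquired: it returns 0 on success and EBUSY if the mutex is already locked. The caller must therefore check the return value. On success it may operate on the critical resource; otherwise it usually retries in a loop until it obtains the mutex.

3. Lock interface with timeout

int pthread_mutex_timedlock(pthread_mutex_t *restrict mutex, const struct timespec *restrict abstime);

- Works like pthread_mutex_lock(), but if the mutex cannot be acquired before the absolute time abstime, it gives up and returns ETIMEDOUT.

4. Unlock interface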

int pthread_mutex_unlock(pthread_mutex_t *mutex);

- Whether the mutex was acquired with the blocking, the non-blocking or the timed interface, it is released with this function.
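This sketch covers the non-blocking and timed interfaces. The helper names are hypothetical, and the 1 ms back-off between retries is an arbitrary choice. Note that `pthread_mutex_timedlock()` takes an absolute deadline measured against `CLOCK_REALTIME`, not a relative duration, so the helper converts "wait at most `ms` milliseconds" into a deadline first.

```cpp
#include <cerrno>
#include <ctime>
#include <pthread.h>
#include <unistd.h>

// Non-blocking style: retry pthread_mutex_trylock() until it stops returning EBUSY.
// Returns 0 once the lock is held (or another error code if trylock fails differently).
int lock_with_trylock(pthread_mutex_t* m)
{
    int rc;
    while ((rc = pthread_mutex_trylock(m)) == EBUSY)
        usleep(1000);   // held elsewhere: back off 1 ms (or do other useful work) and retry
    return rc;
}

// Timed style: wait at most `ms` milliseconds for the lock.
// pthread_mutex_timedlock() wants an ABSOLUTE CLOCK_REALTIME deadline.
int lock_with_timeout(pthread_mutex_t* m, long ms)
{
    timespec deadline;
    clock_gettime(CLOCK_REALTIME, &deadline);
    deadline.tv_sec += ms / 1000;
    deadline.tv_nsec += (ms % 1000) * 1000000L;
    if (deadline.tv_nsec >= 1000000000L) {   // carry whole seconds out of tv_nsec
        deadline.tv_sec += 1;
        deadline.tv_nsec -= 1000000000L;
    }
    return pthread_mutex_timedlock(m, &deadline);   // 0, or ETIMEDOUT if the deadline passes
}
```

Whichever helper returns 0, the caller then holds the mutex and must release it with `pthread_mutex_unlock()` as usual.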

4. Use of mutex

1. When to initialize the mutex

Initialize the mutex first, then create the threads that use it.

2. When to destroy the mutex

Destroy the mutex only after all threads that use it have exited.

3. When to lock

Lock before a thread accesses the critical resource.

4. When to unlock

Unlock on every path by which the thread can leave the critical section, including early returns and break statements.

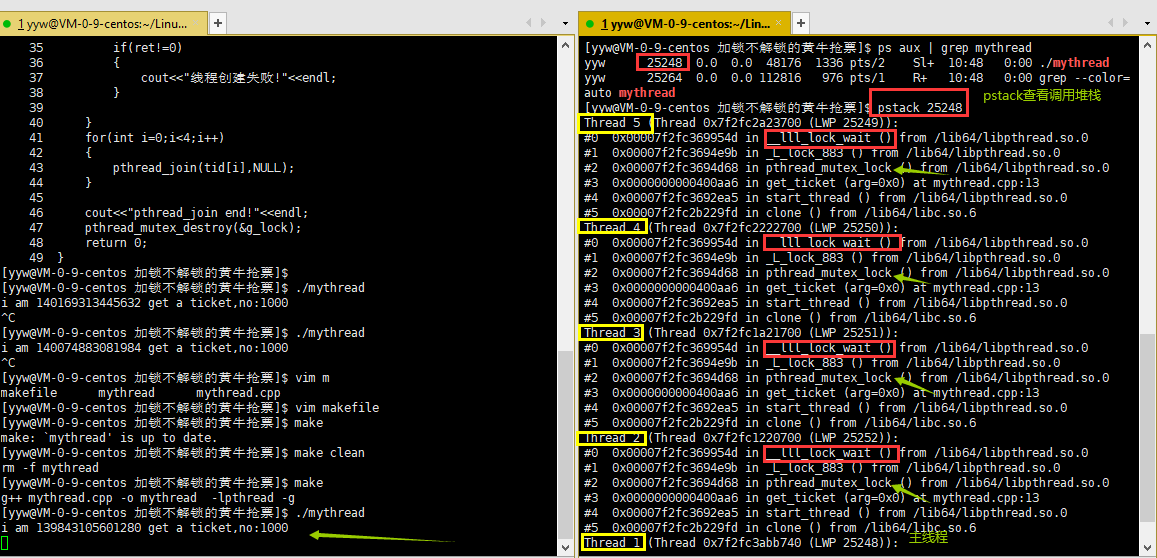

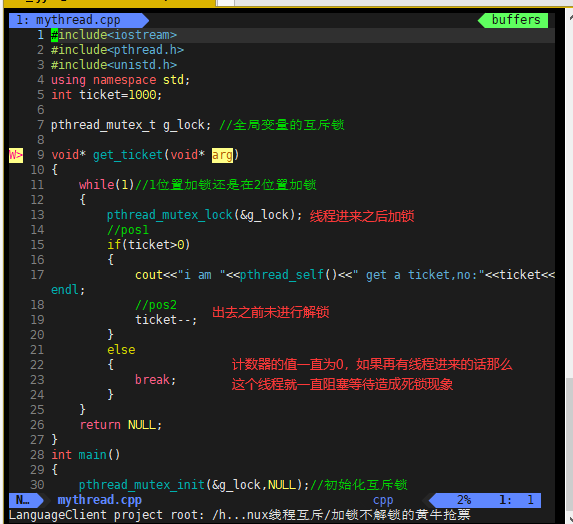
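One way to guarantee an unlock on every exit path in C++ is RAII: lock in a constructor and unlock in the destructor. `MutexGuard` below is a hypothetical helper written for this sketch; the standard library's `std::lock_guard` does the same job for `std::mutex`. The ticket functions are also illustrative.

```cpp
#include <pthread.h>

// Hypothetical RAII guard: locks in the constructor, unlocks in the destructor.
class MutexGuard {
public:
    explicit MutexGuard(pthread_mutex_t* m) : m_(m) { pthread_mutex_lock(m_); }
    ~MutexGuard() { pthread_mutex_unlock(m_); }
    MutexGuard(const MutexGuard&) = delete;
    MutexGuard& operator=(const MutexGuard&) = delete;
private:
    pthread_mutex_t* m_;
};

static pthread_mutex_t g_ticket_lock = PTHREAD_MUTEX_INITIALIZER;
static int g_tickets = 0;

// Returns the number of the ticket taken, or -1 when none are left.
// Both return statements release the lock through the guard's destructor.
int take_ticket()
{
    MutexGuard guard(&g_ticket_lock);
    if (g_tickets <= 0)
        return -1;
    return g_tickets--;
}

static void* seller(void* arg)
{
    long* sold = static_cast<long*>(arg);
    while (take_ticket() != -1)
        ++*sold;
    return nullptr;
}

// Four threads sell `total` tickets; returns how many were sold altogether.
long sell_all(int total)
{
    {
        MutexGuard guard(&g_ticket_lock);
        g_tickets = total;
    }
    pthread_t t[4];
    long sold[4] = {0, 0, 0, 0};
    int created = 0;
    for (int i = 0; i < 4; ++i)
        if (pthread_create(&t[created], nullptr, seller, &sold[created]) == 0)
            ++created;
    for (int i = 0; i < created; ++i)
        pthread_join(t[i], nullptr);
    return sold[0] + sold[1] + sold[2] + sold[3];
}
```

Because the guard's destructor runs on every return, `take_ticket()` cannot leave the mutex locked the way `get_ticket()` does in the example that follows.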

5. Locking without unlocking

What happens if a thread locks before accessing the critical resource and then never unlocks?

```cpp
#include<iostream>
#include<pthread.h>
#include<unistd.h>
using namespace std;

int ticket=1000;

pthread_mutex_t g_lock; //Mutex of global variables

void* get_ticket(void* arg)
{
    while(1)//Lock in position 1 or lock in position 2
    {
        pthread_mutex_lock(&g_lock);
        //pos1
        if(ticket>0)
        {
            cout<<"i am "<<pthread_self()<<" get a ticket,no:"<<ticket<<endl;
            //pos2
            ticket--;
            //BUG (intentional): the lock is never released, so the next
            //pthread_mutex_lock() call in any thread blocks forever
        }
        else
        {
            break; //also leaves without unlocking
        }
    }
    return NULL;
}
int main()
{
    pthread_mutex_init(&g_lock,NULL);//Initialize mutex
    pthread_t tid[4];
    for(int i=0;i<4;i++)
    {
        int ret=pthread_create(&tid[i],NULL,get_ticket,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }
    }
    for(int i=0;i<4;i++)
    {
        pthread_join(tid[i],NULL);
    }

    cout<<"pthread_join end!"<<endl;
    pthread_mutex_destroy(&g_lock);
    return 0;
}
```

When this program runs, only one ticket is sold and then it stops making progress.

As the figure above shows, all four worker threads are blocked. The cause is that one worker thread locked the mutex and never unlocked it. When that thread, or any other worker, tries to acquire the lock again, the mutex counter is still 0, so every thread blocks and waits. Always unlock after locking; otherwise the threads deadlock.

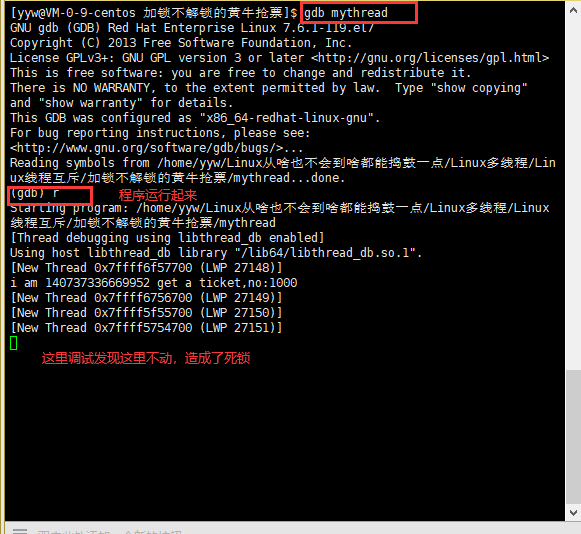

6. Using gdb to find which worker thread locked without unlocking

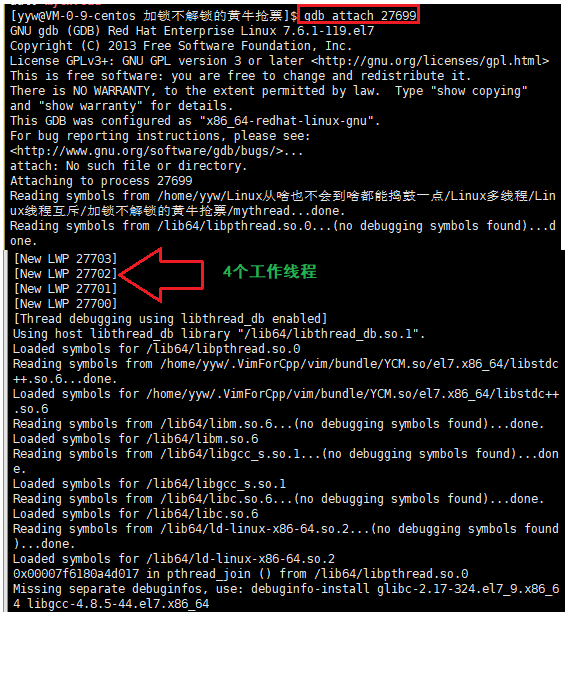

While the program (mythread) is still running, use the gdb attach [pid] command to attach gdb to the running process and enter the debugging interface, as shown in the following figure:

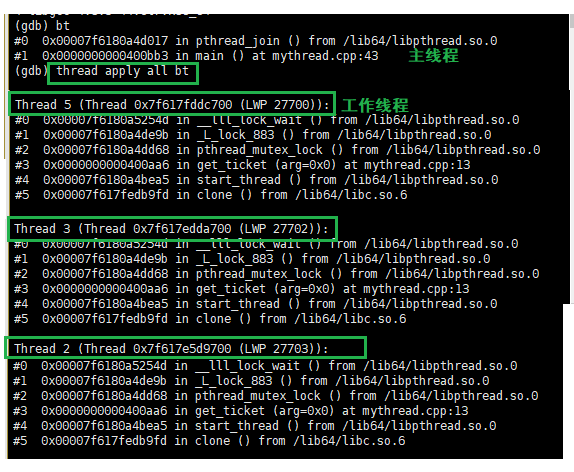

Use bt to view the current call stack, and thread apply all bt to view the call stacks of all threads, as shown in the following figure:

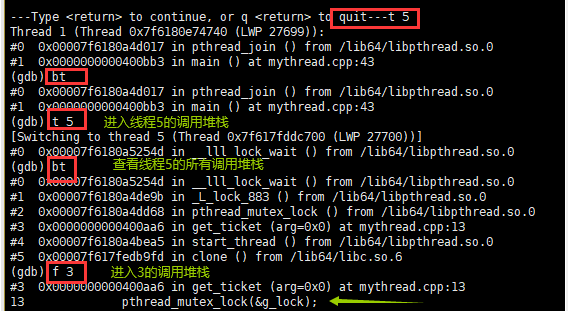

Then switch to a particular thread with t [thread number], view that thread's call stack with bt, and select a specific frame with f [frame number], as shown in the figure above.

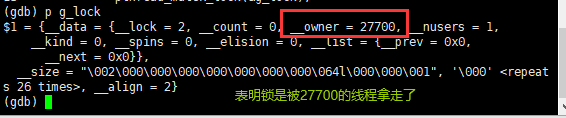

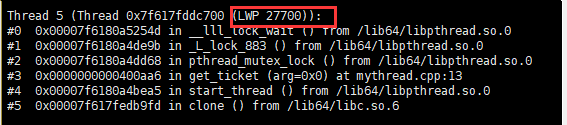

Then print the mutex variable My_lock. The mutex contains a field __owner = 27700, and 27700 is the LWP id of thread 5. So thread 5 holds the lock: it locked successfully the first time, never unlocked, and is now blocked in the locking logic trying to acquire the same lock a second time.

7. Correct use of the mutex

```cpp
#include<iostream>
#include<pthread.h>
#include<unistd.h>
using namespace std;

int ticket=1000;

pthread_mutex_t g_lock; //Mutex of global variables

void* get_ticket(void* arg)
{
    while(1)//Lock in position 1 or lock in position 2
    {
        pthread_mutex_lock(&g_lock);
        //pos1
        if(ticket>0)
        {
            usleep(1);
            cout<<"i am "<<pthread_self()<<" get a ticket,no:"<<ticket<<endl;
            //pos2
            ticket--;
            pthread_mutex_unlock(&g_lock);
        }
        else
        {
            pthread_mutex_unlock(&g_lock);
            break;
        }
    }
    return NULL;
}
int main()
{
    pthread_mutex_init(&g_lock,NULL);//Initialize mutex
    pthread_t tid[4];
    for(int i=0;i<4;i++)
    {
        int ret=pthread_create(&tid[i],NULL,get_ticket,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }
    }
    for(int i=0;i<4;i++)
    {
        pthread_join(tid[i],NULL);
    }

    cout<<"pthread_join end!"<<endl;
    pthread_mutex_destroy(&g_lock);
    return 0;
}
```

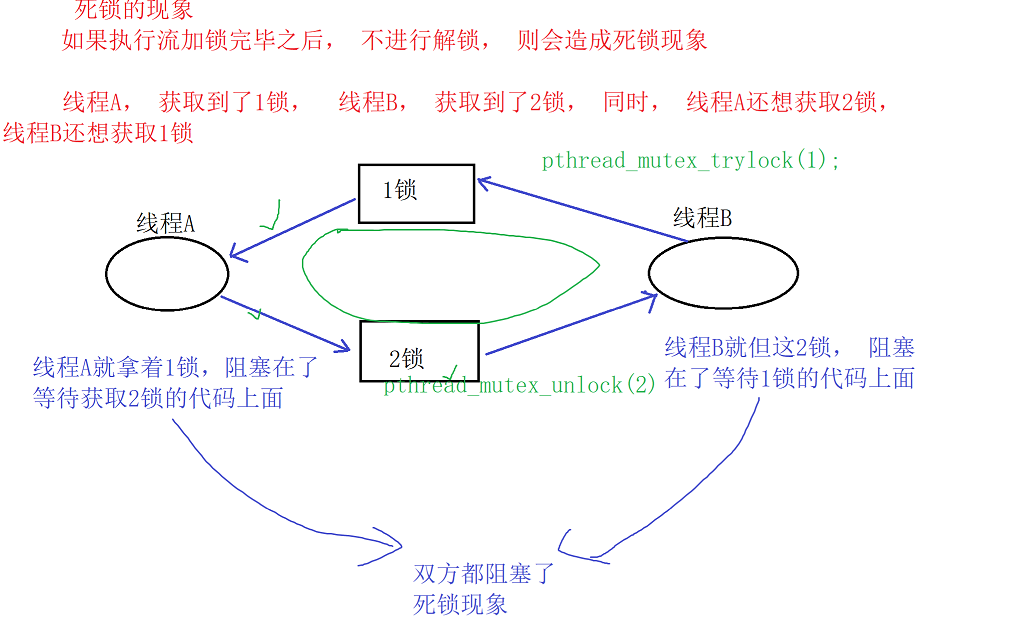

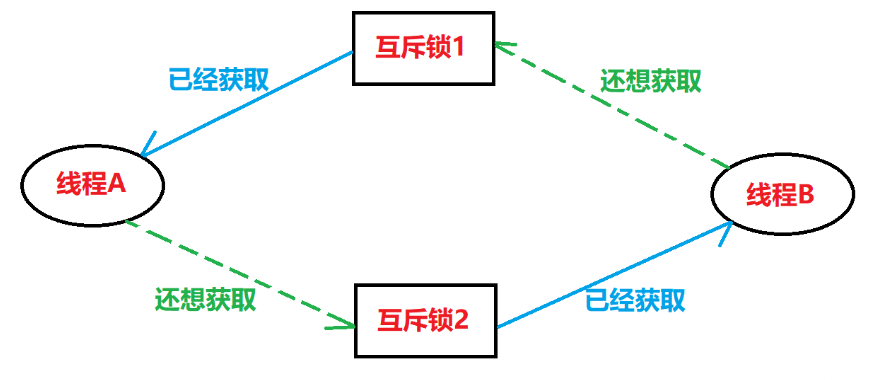

7. Deadlock

1. Definition of deadlock

Deadlock is a state in which each process (or thread) in a group holds resources that it will not release, while waiting for resources held by other members of the group. Because no one releases anything, all of them wait forever.

For example, thread A holds mutex 1 and thread B holds mutex 2. If A now also wants mutex 2 and B also wants mutex 1, each waits for the lock held by the other, and a deadlock results.

2. Simulation of deadlock

```cpp
#include<iostream>
#include<unistd.h>
#include<pthread.h>
using namespace std;

pthread_mutex_t g_lock1;
pthread_mutex_t g_lock2;
void* ThreadStart1(void* args)
{
    (void)args;
    pthread_mutex_lock(&g_lock1);
    sleep(5);                      //give ThreadStart2 time to take g_lock2
    pthread_mutex_lock(&g_lock2);  //blocks forever: g_lock2 is held by ThreadStart2
    return NULL;
}
void* ThreadStart2(void* args)
{
    (void)args;
    pthread_mutex_lock(&g_lock2);
    sleep(5);
    pthread_mutex_lock(&g_lock1);  //blocks forever: g_lock1 is held by ThreadStart1
    return NULL;
}
int main()
{
    pthread_mutex_init(&g_lock1,NULL);
    pthread_mutex_init(&g_lock2,NULL);

    pthread_t tid;
    int ret=pthread_create(&tid,NULL,ThreadStart1,NULL);
    if(ret!=0) //pthread_create returns an error number (never negative) on failure
    {
        cout<<"Thread creation failed!"<<endl;
        return 0;
    }

    ret=pthread_create(&tid,NULL,ThreadStart2,NULL);
    if(ret!=0)
    {
        cout<<"Thread creation failed!"<<endl;
        return 0;
    }

    while(1) //keep the process alive so it can be inspected with gdb
    {
        ;
    }
    pthread_mutex_destroy(&g_lock1);
    pthread_mutex_destroy(&g_lock2);
    return 0;
}
```

The results are as follows:

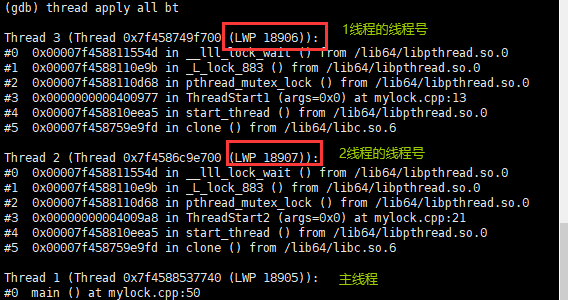

Debugging the running process with gdb gives the results shown in the figures below:

View call stacks for all threads:

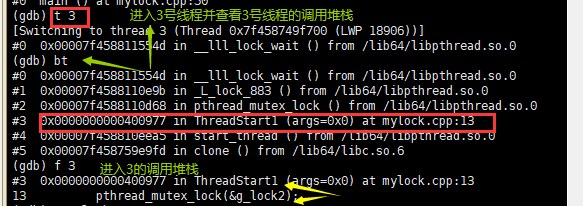

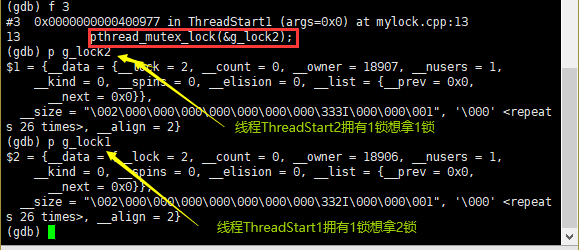

Switch to thread 3, view its call stack, and select one of its frames.

The thread running ThreadStart1 holds g_lock1 and waits for g_lock2, while the thread running ThreadStart2 holds g_lock2 and waits for g_lock1. The two threads are therefore deadlocked.

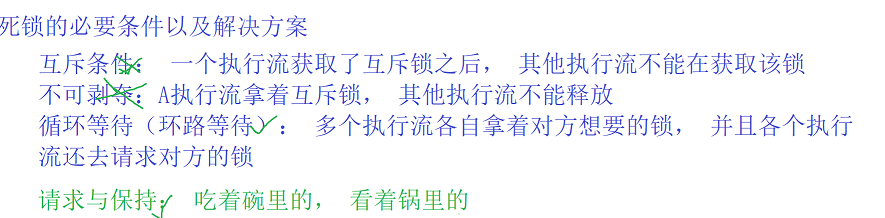

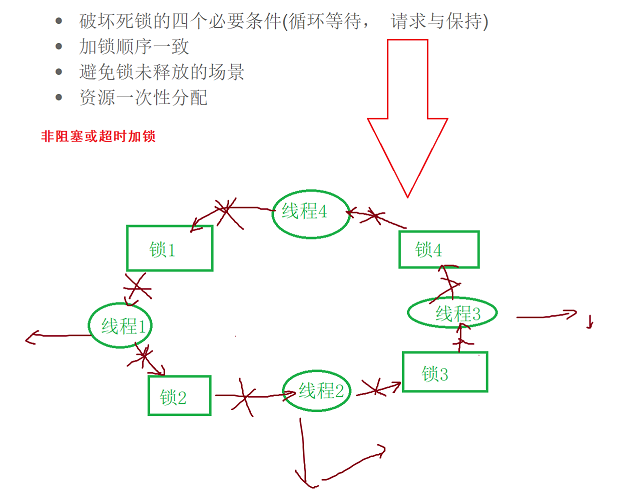

3. Four necessary conditions for deadlock

- Mutual exclusion: a resource can be used by only one execution flow at a time (a mutex can be owned by only one execution flow at a time).

- Hold and wait: an execution flow that is blocked while requesting a resource keeps holding the resources it has already obtained (thread A holds lock 1 while requesting lock 2, and thread B holds lock 2 while requesting lock 1).

- No preemption: resources obtained by an execution flow cannot be forcibly taken away before it has finished using them.

- Circular wait: several execution flows form a cycle in which each waits for a resource held by the next (thread A waits for the lock held by thread B, and thread B waits for the lock held by thread A).

4. Avoiding deadlock (breaking any one of the four conditions is enough)

- Acquire locks in a consistent order.

- Avoid code paths where a lock is never released.

- Allocate all required resources at once.
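This sketch applies the "consistent locking order" rule to the two-lock deadlock simulated earlier. `lock_both()` and `unlock_both()` are hypothetical helpers. Whichever order a thread names the two mutexes in, `lock_both()` always takes the one with the lower address first, so the circular-wait condition cannot form. `start1` names the locks as (1, 2) and `start2` as (2, 1), mirroring `ThreadStart1` and `ThreadStart2`, yet both threads finish.

```cpp
#include <functional>
#include <pthread.h>
#include <utility>

// Always lock the two mutexes in one global order (by address).
void lock_both(pthread_mutex_t* x, pthread_mutex_t* y)
{
    if (x == y) { pthread_mutex_lock(x); return; }
    if (std::less<pthread_mutex_t*>()(y, x))
        std::swap(x, y);         // x is now the lower address
    pthread_mutex_lock(x);
    pthread_mutex_lock(y);
}

void unlock_both(pthread_mutex_t* x, pthread_mutex_t* y)
{
    pthread_mutex_unlock(x);
    if (x != y)
        pthread_mutex_unlock(y);
}

static pthread_mutex_t g_lock1 = PTHREAD_MUTEX_INITIALIZER;
static pthread_mutex_t g_lock2 = PTHREAD_MUTEX_INITIALIZER;
static long g_work = 0;          // protected by holding both locks

static void* start1(void*)       // names the locks as (1, 2)
{
    for (int i = 0; i < 50000; ++i) {
        lock_both(&g_lock1, &g_lock2);
        ++g_work;
        unlock_both(&g_lock1, &g_lock2);
    }
    return nullptr;
}

static void* start2(void*)       // names them as (2, 1), as ThreadStart2 did
{
    for (int i = 0; i < 50000; ++i) {
        lock_both(&g_lock2, &g_lock1);
        ++g_work;
        unlock_both(&g_lock2, &g_lock1);
    }
    return nullptr;
}

// Returns the total work done, or -1 if a thread could not be created.
long run_ordered_demo()
{
    g_work = 0;
    pthread_t t1, t2;
    if (pthread_create(&t1, nullptr, start1, nullptr) != 0)
        return -1;
    if (pthread_create(&t2, nullptr, start2, nullptr) != 0) {
        pthread_join(t1, nullptr);
        return -1;
    }
    pthread_join(t1, nullptr);
    pthread_join(t2, nullptr);
    return g_work;
}
```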

5. Deadlock avoidance algorithm

- Deadlock detection algorithm.

- Banker's algorithm.
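The core of the banker's algorithm is a safety check. Given what each process holds and the most it may ever request, is there some order in which every process can obtain its remaining need, finish, and release what it holds? Below is a minimal sketch of that check; `is_safe_state` is a hypothetical name. A full banker's algorithm would also tentatively grant each request and refuse it if the resulting state is unsafe.

```cpp
#include <cstddef>
#include <vector>

// available[j]   : free units of resource j
// max_need[i][j] : most units of resource j that process i may ever request
// alloc[i][j]    : units of resource j currently held by process i
// Returns true if some order exists in which every process can finish.
bool is_safe_state(std::vector<int> available,
                   const std::vector<std::vector<int>>& max_need,
                   const std::vector<std::vector<int>>& alloc)
{
    const std::size_t n = alloc.size();
    const std::size_t m = available.size();
    std::vector<bool> finished(n, false);
    std::size_t done = 0;
    bool progress = true;
    while (progress) {
        progress = false;
        for (std::size_t i = 0; i < n; ++i) {
            if (finished[i])
                continue;
            bool can_run = true;   // can process i get its remaining need right now?
            for (std::size_t j = 0; j < m; ++j)
                if (max_need[i][j] - alloc[i][j] > available[j]) {
                    can_run = false;
                    break;
                }
            if (can_run) {         // i runs to completion and releases everything it holds
                for (std::size_t j = 0; j < m; ++j)
                    available[j] += alloc[i][j];
                finished[i] = true;
                ++done;
                progress = true;
            }
        }
    }
    return done == n;
}
```

The classic textbook example uses these inputs:

- available = {3,3,2}
- max_need = {{7,5,3},{3,2,2},{9,0,2},{2,2,2},{4,3,3}}
- alloc = {{0,1,0},{2,0,0},{3,0,2},{2,1,1},{0,0,2}}

With them the check returns true, and this loop finds the order P1, P3, P4, P0, P2. With available = {0,0,0} and the same matrices it returns false.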

5, Thread synchronization

Purpose: let multiple threads access critical resources correctly and in a reasonable order.

1. Simulating one eater and one cook with a mutex but no condition variable

The following program protects the bowl of noodles with a mutex only:

```cpp
#include<iostream>
#include<pthread.h>
#include<unistd.h>
using namespace std;

int g_bowl=1;
pthread_mutex_t g_lock;

void* EatStart(void* arg)
{
    (void)arg;

    while(1)
    {
        pthread_mutex_lock(&g_lock);
        g_bowl--;
        cout<<"i am:"<<pthread_self()<<" i eat:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);
    }
    return NULL;
}
void *MakeStart(void* arg)
{
    (void)arg;
    while(1)
    {
        pthread_mutex_lock(&g_lock);
        g_bowl++;
        cout<<"i am:"<<pthread_self()<<" i make:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);
    }
    return NULL;
}
int main()
{
    pthread_mutex_init(&g_lock,NULL);

    pthread_t tid_eat;
    pthread_t tid_make;
    int ret=pthread_create(&tid_eat,NULL,EatStart,NULL);
    if(ret!=0) //pthread_create returns an error number (never negative) on failure
    {
        cout<<"Thread creation failed!"<<endl;
    }

    ret=pthread_create(&tid_make,NULL,MakeStart,NULL);
    if(ret!=0)
    {
        cout<<"Thread creation failed!"<<endl;
    }

    while(1)
    {
        sleep(1);
    }

    pthread_mutex_destroy(&g_lock);
    return 0;
}
```

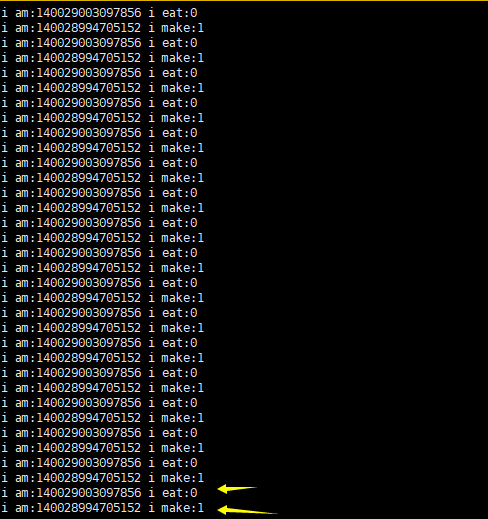

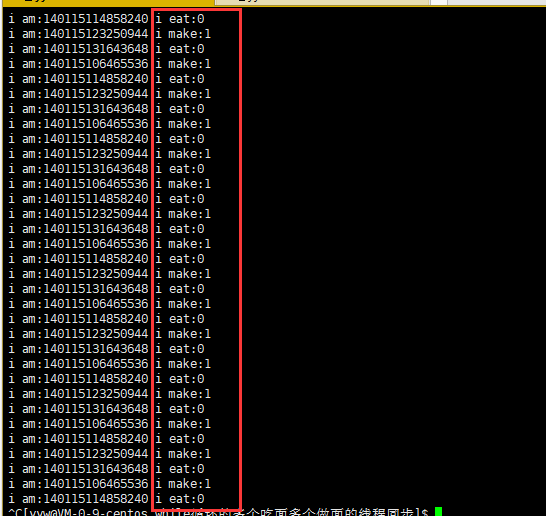

The bowl count drops below zero, and it can also climb above 1. Why? EatStart eats noodles and MakeStart makes them, but the mutex only stops the two threads from touching the bowl at the same moment; it does nothing to order them. Whenever MakeStart gets the CPU, it may keep adding noodles and push the bowl above 1. Whenever EatStart gets the CPU, it may keep eating and drive the bowl to 0 and then negative.

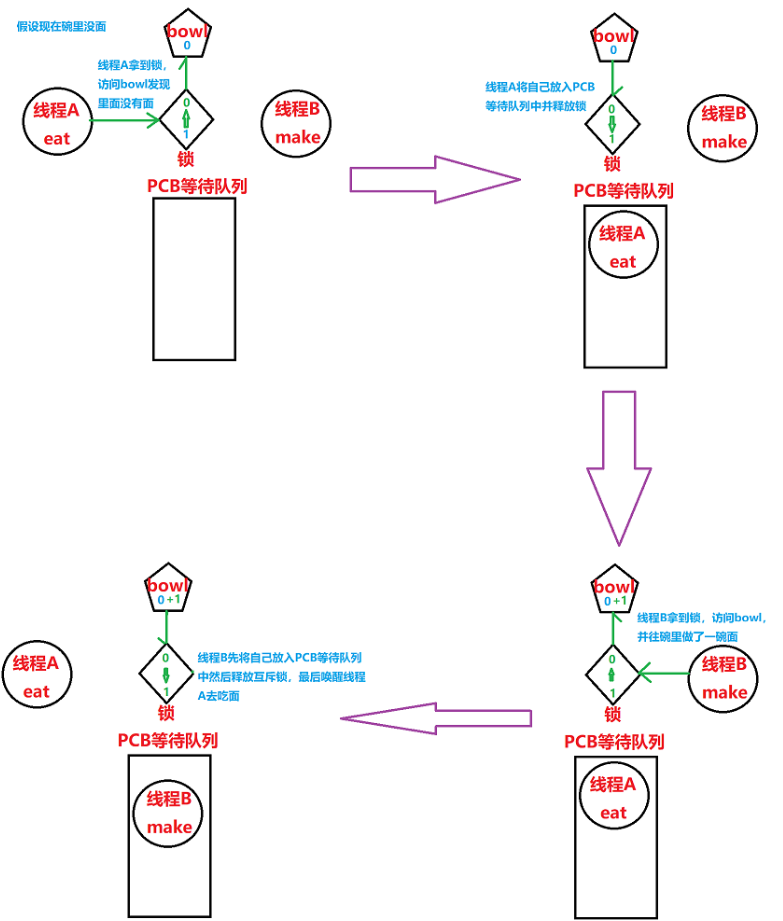

2. Condition variables

- When a thread accesses a variable under mutual exclusion, it may find that it cannot make progress until another thread changes some state.

- For example, a thread that finds a queue empty can only wait until another thread adds a node to the queue. Condition variables are used in exactly this situation.

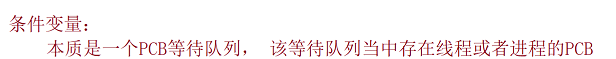

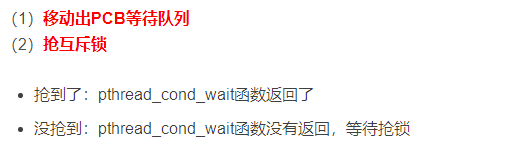

1. PCB waiting queue

3. Condition variable functions

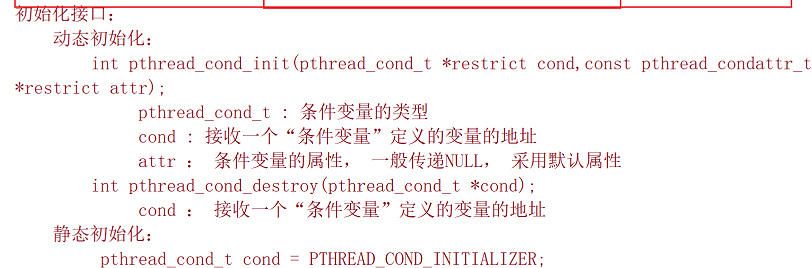

1. Condition variable initialization

Static initialization:

pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

Dynamic initialization:

int pthread_cond_init(pthread_cond_t *restrict cond, const pthread_condattr_t *restrict attr);

- cond: the condition variable to initialize; usually the address of a pthread_cond_t variable is passed

- attr: usually NULL, meaning the default attributes are used

2. Condition variable destruction

int pthread_cond_destroy(pthread_cond_t *cond);

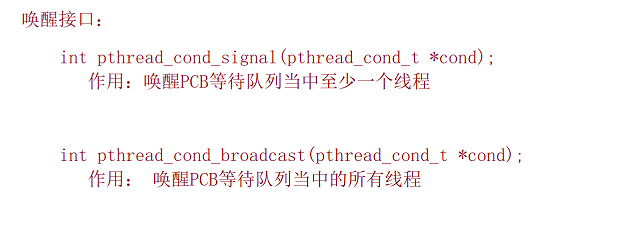

3. Condition variable wake-up

int pthread_cond_signal(pthread_cond_t *cond);

- Function: notifies the threads in the PCB waiting queue; a thread that receives the notification leaves the queue.

- Wakes up at least one thread in the PCB waiting queue.

int pthread_cond_broadcast(pthread_cond_t *cond);

- Wakes up all threads in the PCB waiting queue.

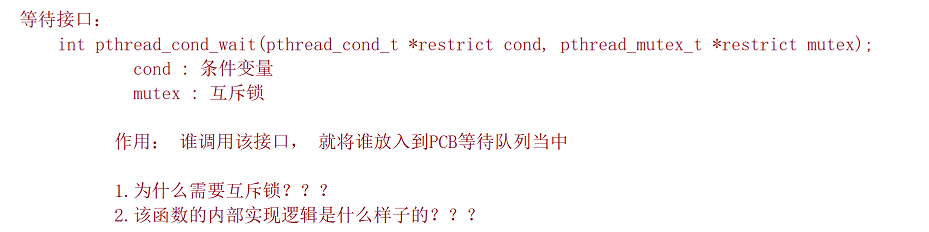

4. Condition variable wait

int pthread_cond_wait(pthread_cond_t *restrict cond, pthread_mutex_t *restrict mutex);

Parameters:

- cond: the condition variable to wait on

- mutex: the mutex that protects the condition, explained in detail later
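Here is a minimal sketch of these interfaces working together; `broadcast_demo` and `waiter` are hypothetical names. Three threads wait on a condition variable until a flag is set. The main thread sets the flag under the mutex and wakes all of them with `pthread_cond_broadcast()`. Each waiter re-checks the flag in a while loop, for the reason discussed later in this section.

```cpp
#include <pthread.h>

static pthread_mutex_t g_m = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t g_cv = PTHREAD_COND_INITIALIZER;
static bool g_ready = false;   // the condition, protected by g_m
static int g_value = 0;

static void* waiter(void* arg)
{
    int* out = static_cast<int*>(arg);
    pthread_mutex_lock(&g_m);
    while (!g_ready)                      // re-check after every wake-up
        pthread_cond_wait(&g_cv, &g_m);   // unlocks g_m while asleep, relocks before returning
    *out = g_value;                       // g_m is held again here
    pthread_mutex_unlock(&g_m);
    return nullptr;
}

// Starts three waiters, publishes `value`, wakes them all, returns the sum they read.
int broadcast_demo(int value)
{
    pthread_t t[3];
    int seen[3] = {0, 0, 0};
    int created = 0;

    pthread_mutex_lock(&g_m);
    g_ready = false;
    pthread_mutex_unlock(&g_m);

    for (int i = 0; i < 3; ++i)
        if (pthread_create(&t[created], nullptr, waiter, &seen[created]) == 0)
            ++created;

    pthread_mutex_lock(&g_m);
    g_value = value;
    g_ready = true;                       // change the condition while holding the mutex
    pthread_mutex_unlock(&g_m);
    pthread_cond_broadcast(&g_cv);        // wake every thread waiting on g_cv

    for (int i = 0; i < created; ++i)
        pthread_join(t[i], nullptr);
    return seen[0] + seen[1] + seen[2];
}
```

When all three waiters start, `broadcast_demo(7)` returns 21.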

4. One eater and one cook with a mutex and condition variables

The eating thread (EatStart) waits on g_eat_cond while bowl <= 0, because it cannot eat from an empty bowl. The making thread (MakeStart) waits on g_make_cond while bowl > 0, because the bowl already has noodles in it.

```cpp
#include<iostream>
#include<pthread.h>
#include<unistd.h>
using namespace std;

int g_bowl=1;
pthread_mutex_t g_lock;

pthread_cond_t g_eat_cond;
pthread_cond_t g_make_cond;

//Eat noodles
void* EatStart(void* arg)
{
    (void)arg;

    while(1)
    {
        pthread_mutex_lock(&g_lock);
        if(g_bowl<=0)
        {
            pthread_cond_wait(&g_eat_cond,&g_lock);//releases g_lock while waiting, re-acquires it before returning
        }
        g_bowl--;
        cout<<"i am:"<<pthread_self()<<" i eat:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);

        pthread_cond_signal(&g_make_cond);//Inform the noodle maker to make noodles
    }
    return NULL;
}

//Make noodles
void *MakeStart(void* arg)
{
    (void)arg;
    while(1)
    {
        pthread_mutex_lock(&g_lock);
        if(g_bowl>0)
        {
            pthread_cond_wait(&g_make_cond,&g_lock);
        }
        g_bowl++;

        cout<<"i am:"<<pthread_self()<<" i make:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);

        pthread_cond_signal(&g_eat_cond);//Inform the noodle eater to eat noodles
    }
    return NULL;
}
int main()
{
    //Initialize mutex
    pthread_mutex_init(&g_lock,NULL);

    //Initialize condition variables
    pthread_cond_init(&g_eat_cond,NULL);
    pthread_cond_init(&g_make_cond,NULL);

    int i=0;
    for(i=0;i<1;i++) //one eater and one maker
    {
        pthread_t tid;
        int ret=pthread_create(&tid,NULL,EatStart,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }

        ret=pthread_create(&tid,NULL,MakeStart,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }
    }

    while(1)
    {
        sleep(1);
    }

    //Destroy mutex
    pthread_mutex_destroy(&g_lock);

    //Destroy condition variables
    pthread_cond_destroy(&g_eat_cond);
    pthread_cond_destroy(&g_make_cond);
    return 0;
}
```

5. Several eaters and cooks with a mutex and condition variables

```cpp
#include<iostream>
#include<pthread.h>
#include<unistd.h>
using namespace std;

int g_bowl=1;
pthread_mutex_t g_lock;

pthread_cond_t g_eat_cond;
pthread_cond_t g_make_cond;

//Eat noodles
void* EatStart(void* arg)
{
    (void)arg;

    while(1)
    {
        pthread_mutex_lock(&g_lock);
        if(g_bowl<=0)
        {
            pthread_cond_wait(&g_eat_cond,&g_lock);//releases g_lock while waiting, re-acquires it before returning
        }
        g_bowl--;
        cout<<"i am:"<<pthread_self()<<" i eat:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);

        pthread_cond_signal(&g_make_cond);//Inform the noodle maker to make noodles
    }
    return NULL;
}

//Make noodles
void *MakeStart(void* arg)
{
    (void)arg;
    while(1)
    {
        pthread_mutex_lock(&g_lock);
        if(g_bowl>0)
        {
            pthread_cond_wait(&g_make_cond,&g_lock);
        }
        g_bowl++;

        cout<<"i am:"<<pthread_self()<<" i make:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);

        pthread_cond_signal(&g_eat_cond);//Inform the noodle eater to eat noodles
    }
    return NULL;
}
int main()
{
    //Initialize mutex
    pthread_mutex_init(&g_lock,NULL);

    //Initialize condition variables
    pthread_cond_init(&g_eat_cond,NULL);
    pthread_cond_init(&g_make_cond,NULL);

    int i=0;
    for(i=0;i<2;i++) //two eaters and two makers
    {
        pthread_t tid;
        int ret=pthread_create(&tid,NULL,EatStart,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }

        ret=pthread_create(&tid,NULL,MakeStart,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }
    }

    while(1)
    {
        sleep(1);
    }

    //Destroy mutex
    pthread_mutex_destroy(&g_lock);

    //Destroy condition variables
    pthread_cond_destroy(&g_eat_cond);
    pthread_cond_destroy(&g_make_cond);
    return 0;
}
```

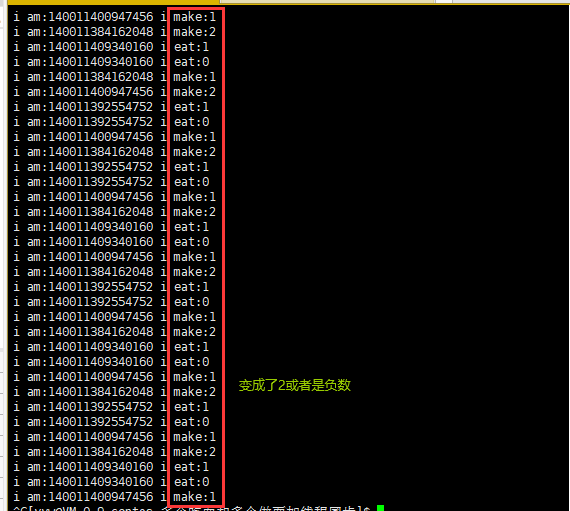

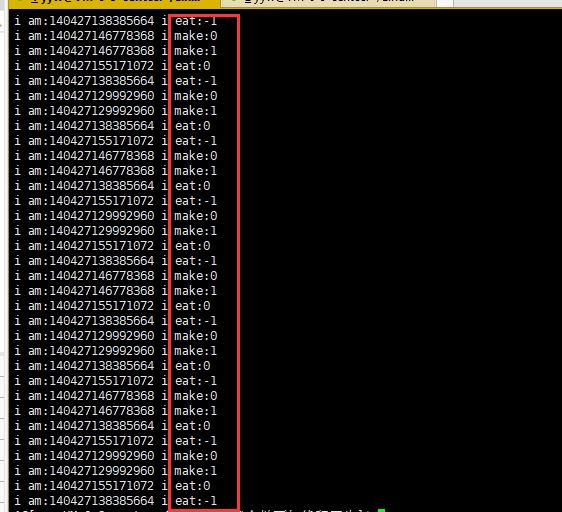

Suppose the bowl has noodles and make1 gets the lock. The sequence is:

1. make1 sees that the bowl is not empty, puts itself into the PCB waiting queue, and the mutex is released.
2. eat1 gets the mutex, eats the noodles, releases the lock, and signals the waiting queue, so make1 leaves the queue.
3. Before make1 can reacquire the lock, make2 gets it, makes a bowl of noodles, and releases it.
4. make1 finally reacquires the lock and returns from pthread_cond_wait. Because the check was an if, make1 does not re-check whether the bowl already has noodles. It makes noodles directly, and the bowl goes from 1 to 2.

Similarly, suppose the bowl is empty and eat1 gets the lock:

1. eat1 sees that there are no noodles, puts itself into the PCB waiting queue, and the mutex is released.
2. make1 gets the mutex, makes noodles, releases the lock, and signals the waiting queue, so eat1 leaves the queue.
3. Before eat1 can reacquire the lock, eat2 gets it, eats the noodles, and releases it.
4. eat1 reacquires the lock and returns from pthread_cond_wait. It skips the check for noodles and eats directly, so the bowl goes from 0 to -1.

To solve this problem, change the if check into a while loop, so that a thread re-checks the condition every time it returns from pthread_cond_wait.

```cpp
#include<iostream>
#include<pthread.h>
#include<unistd.h>
using namespace std;

int g_bowl=1;
pthread_mutex_t g_lock;

pthread_cond_t g_eat_cond;
pthread_cond_t g_make_cond;

//Eat noodles
void* EatStart(void* arg)
{
    (void)arg;
    while(1)
    {
        pthread_mutex_lock(&g_lock);
        while(g_bowl<=0) //re-check after every wake-up
        {
            pthread_cond_wait(&g_eat_cond,&g_lock);//releases g_lock while waiting, re-acquires it before returning
        }
        g_bowl--;
        cout<<"i am:"<<pthread_self()<<" i eat:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);
        pthread_cond_signal(&g_make_cond);//Inform the noodle maker to make noodles
    }
    return NULL;
}

//Make noodles
void *MakeStart(void* arg)
{
    (void)arg;
    while(1)
    {
        pthread_mutex_lock(&g_lock);
        while(g_bowl>0) //re-check after every wake-up
        {
            pthread_cond_wait(&g_make_cond,&g_lock);
        }
        g_bowl++;
        cout<<"i am:"<<pthread_self()<<" i make:"<<g_bowl<<endl;
        pthread_mutex_unlock(&g_lock);
        pthread_cond_signal(&g_eat_cond);//Inform the noodle eater to eat noodles
    }
    return NULL;
}

int main()
{
    //Initialize mutex
    pthread_mutex_init(&g_lock,NULL);
    //Initialize condition variables
    pthread_cond_init(&g_eat_cond,NULL);
    pthread_cond_init(&g_make_cond,NULL);

    int i=0;
    for(i=0;i<2;i++)
    {
        pthread_t tid;
        int ret=pthread_create(&tid,NULL,EatStart,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }
        ret=pthread_create(&tid,NULL,MakeStart,NULL);
        if(ret!=0)
        {
            cout<<"Thread creation failed!"<<endl;
        }
    }

    while(1)
    {
        sleep(1);
    }

    //Destroy mutex
    pthread_mutex_destroy(&g_lock);
    //Destroy condition variables
    pthread_cond_destroy(&g_eat_cond);
    pthread_cond_destroy(&g_make_cond);
    return 0;
}
```

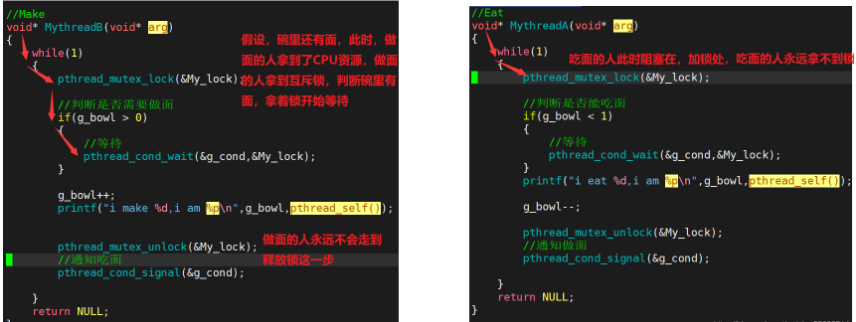

6. Several problems of conditional variable on waiting interface

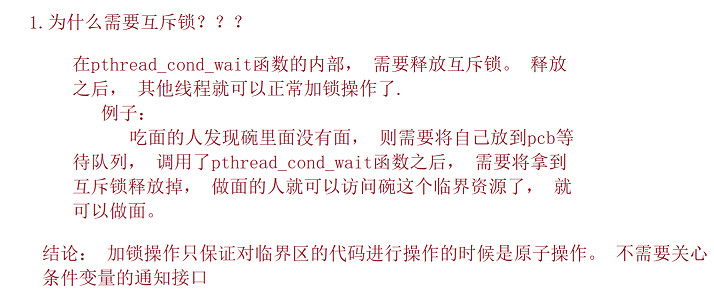

1. Why do the wait interface parameters of condition variables need mutual exclusion?

Because the need is in pthread_ cond_ The wait function unlocks the lock internally. When a thread enters, it needs to release the lock before others can use it. After unlocking, other execution streams can obtain the mutex. Therefore, the mutex needs to be passed in. Otherwise, if pthread is called_ cond_ If the wait thread does not release the mutex while waiting, other threads cannot unlock it.

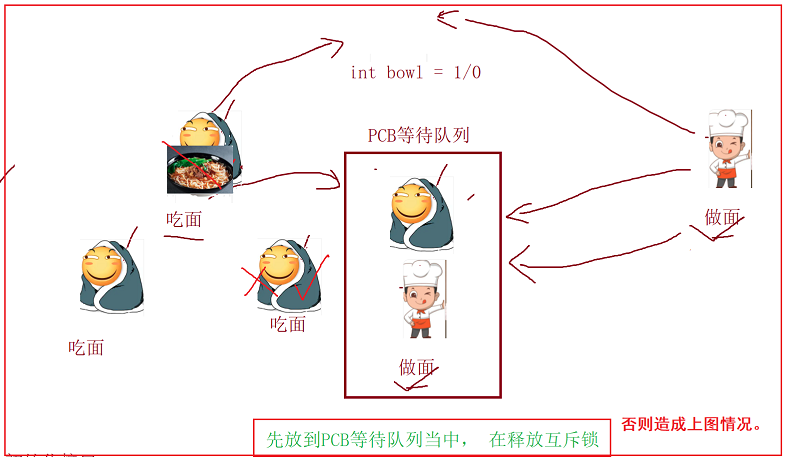

2.pthread_ cond_ Implementation principle of wait function

Suppose that the Noodle Eating thread judges that there is no noodles in the bowl and needs to wait. If it releases the mutex first, before it releases the mutex and enters the waiting queue, the noodle making thread may get the mutex and finish the noodles and notify the PCB waiting queue. At this time, the Noodle Eating thread has not entered the PCB waiting queue. The PCB waiting queue is empty, The noodle making thread gets the mutex to make noodles. At this time, the noodle making thread judges that there is noodles in the bowl, and puts itself into the PCB waiting queue for waiting. At this time, the Noodle Eating thread also waits in the PCB waiting queue, so it cannot release the mutex first.

3. When the thread is waiting, what needs to be done after it is awakened

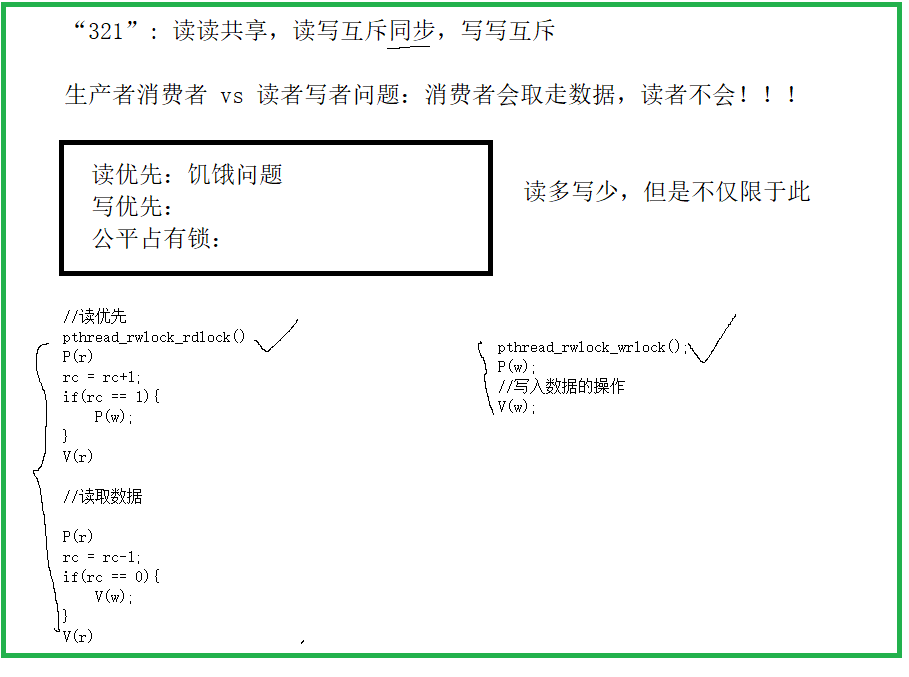

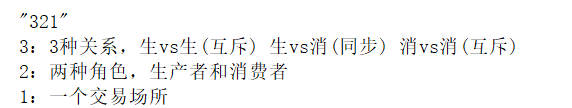

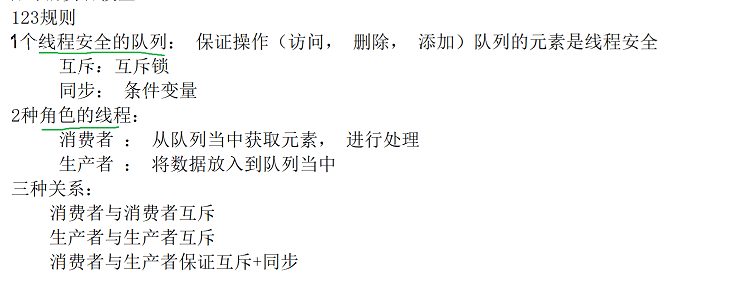

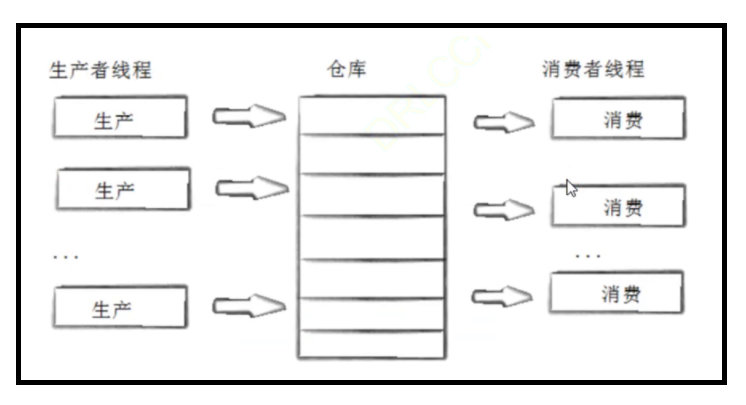

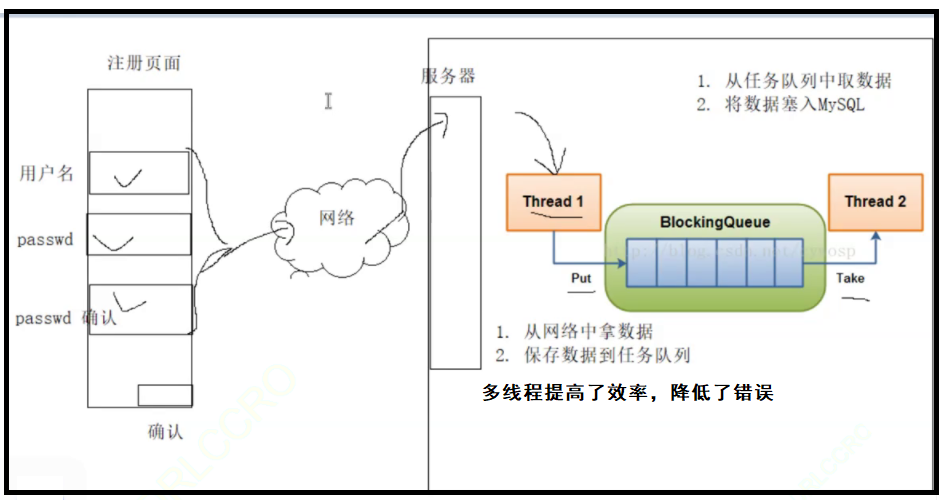

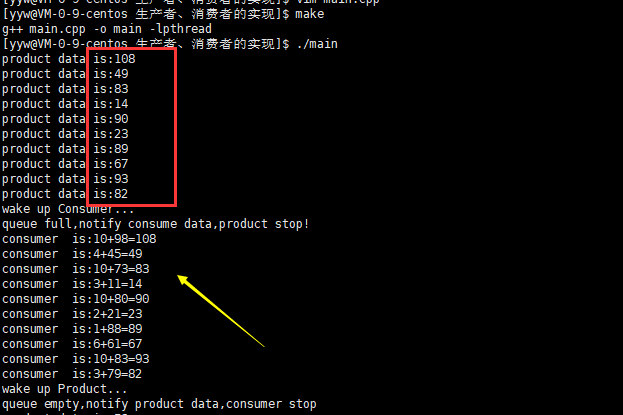

6, Producer-consumer model

1. Principles followed by producers and consumers

- The 321 principle (easy to remember):
  - 3 relationships: producer and producer (mutual exclusion); consumer and consumer (mutual exclusion); producer and consumer (mutual exclusion and synchronization)
  - 2 roles: producer and consumer
  - 1 trading place: the buffer, such as a blocking queue, through which data is exchanged

2. What the producer-consumer model solves

The producer-consumer model uses a container to remove the strong coupling between producers and consumers. Producers and consumers do not communicate with each other directly; they communicate through a blocking queue. After producing data, a producer does not wait for a consumer to process it but puts it straight into the blocking queue. A consumer does not ask producers for data but takes it straight from the blocking queue. The blocking queue acts as a buffer that balances the processing capacity of producers and consumers, and it is what decouples them.

3. Advantages of producer consumer model

- Decoupling.

- Support for concurrency.

- Tolerance of uneven load (producers and consumers can be busy or idle at different times).

4. Producer-consumer model based on a BlockingQueue

In multithreaded programming, a blocking queue is a data structure commonly used to implement the producer-consumer model. It differs from an ordinary queue in two ways. When the queue is empty, an attempt to take an element blocks until an element is put in. When the queue is full, an attempt to store an element blocks until an element is taken out. These operations are performed by different threads, and a thread that operates on the queue in one of these states blocks.

5. Implementation of producer consumer code based on BlockingQueue

BlockQueue.cpp

```cpp
#pragma once // prevent the file from being included more than once

#include<iostream>
#include<pthread.h>
#include<unistd.h>
#include<queue>

class Task
{
public:
    int _x;
    int _y;
public:
    Task()
        :_x(0)
        ,_y(0)
    {}
    Task(int x,int y)
        :_x(x)
        ,_y(y)
    {}
    int run()
    {
        return _x+_y;
    }
    ~Task()
    {}
};

class BlockQueue
{
private:
    std::queue<Task> q;    //the queue shared by producers and consumers
    size_t _cap;           //capacity
    pthread_mutex_t lock;  //mutex protecting q

    pthread_cond_t c_cond; //consumers wait here while the queue is empty
    pthread_cond_t p_cond; //producers wait here while the queue is full

private: //Encapsulate
    void LockQueue() //Lock
    {
        pthread_mutex_lock(&lock);
    }
    void UnLockQueue() //Unlock
    {
        pthread_mutex_unlock(&lock);
    }

    bool IsEmpty() //Determine whether the queue is empty
    {
        return q.size()==0;
    }
    bool IsFull() //Determine whether the queue is full
    {
        return q.size()==_cap;
    }

    void ProductWait() //Producer waiting
    {
        pthread_cond_wait(&p_cond,&lock);
    }
    void ConsumerWait() //Consumer waiting
    {
        pthread_cond_wait(&c_cond,&lock);
    }

    void WakeUpProduct() //Wake up the producer
    {
        std::cout<<"wake up Product..."<<std::endl;
        pthread_cond_signal(&p_cond);
    }
    void WakeUpConsumer() //Wake up the consumer
    {
        std::cout<<"wake up Consumer..."<<std::endl;
        pthread_cond_signal(&c_cond);
    }

public:
    BlockQueue(int cap) //Constructor: initialize
        :_cap(cap)
    {
        pthread_mutex_init(&lock,NULL);
        pthread_cond_init(&c_cond,NULL);
        pthread_cond_init(&p_cond,NULL);
    }
    ~BlockQueue() //Destructor: destroy
    {
        pthread_mutex_destroy(&lock);
        pthread_cond_destroy(&c_cond);
        pthread_cond_destroy(&p_cond);
    }

    void put(Task in)
    {
        //The queue is a critical resource: lock it, then check whether it is full
        LockQueue();
        while(IsFull())
        {
            WakeUpConsumer(); //full: let a consumer drain the queue
            std::cout<<"queue full,notify consume data,product stop!"<<std::endl;
            ProductWait();    //Producer thread waits
        }
        q.push(in);

        UnLockQueue();
    }
    void Get(Task& out)
    {
        LockQueue();
        while(IsEmpty())
        {
            WakeUpProduct();  //empty: let a producer refill the queue
            std::cout<<"queue empty,notify product data,consumer stop"<<std::endl;
            ConsumerWait();
        }
        out=q.front();
        q.pop();

        UnLockQueue();
    }
};
```

main.cpp

```cpp
#include"BlockQueue.cpp"
#include<cstdlib>
#include<ctime>
using namespace std;

//Serialize producers among themselves and consumers among themselves
//(this only matters once there is more than one of each)
pthread_mutex_t p_lock=PTHREAD_MUTEX_INITIALIZER;
pthread_mutex_t c_lock=PTHREAD_MUTEX_INITIALIZER;

void* Product_Run(void* arg)
{
    BlockQueue* bq=(BlockQueue*)arg;

    srand((unsigned int)time(NULL));
    while(true)
    {
        pthread_mutex_lock(&p_lock);
        int x=rand()%10+1;
        int y=rand()%100+1;
        Task t(x,y);
        bq->put(t);
        pthread_mutex_unlock(&p_lock);
        cout<<"product data is:"<<t.run()<<endl;
    }
    return NULL;
}
void* Consumer_Run(void* arg)
{
    BlockQueue* bq=(BlockQueue*)arg;
    while(true)
    {
        pthread_mutex_lock(&c_lock);
        Task t;
        bq->Get(t);
        pthread_mutex_unlock(&c_lock);
        cout<<"consumer is:"<<t._x<<"+"<<t._y<<"="<<t.run()<<endl;
        sleep(1);
    }
    return NULL;
}
int main()
{
    BlockQueue* bq=new BlockQueue(10);
    pthread_t p,c;

    pthread_create(&p,NULL,Product_Run,(void*)bq);  //producer
    pthread_create(&c,NULL,Consumer_Run,(void*)bq); //consumer

    pthread_join(p,NULL);
    pthread_join(c,NULL);

    delete bq;
    return 0;
}
```

makefile

```makefile
main:main.cpp
	g++ $^ -o $@ -lpthread
.PHONY:clean
clean:
	rm -f main
```

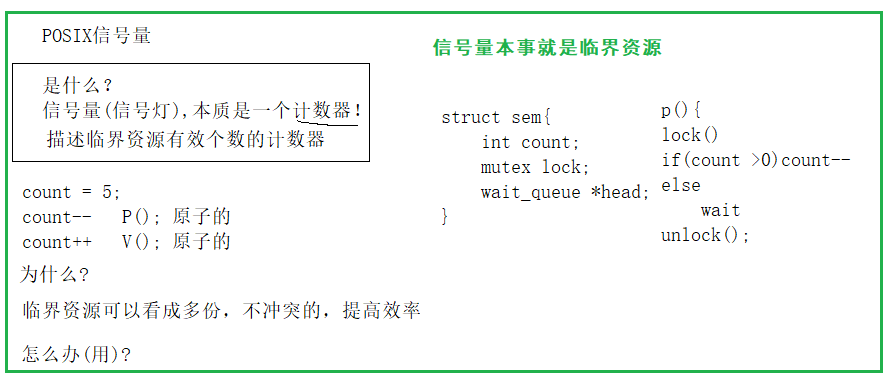

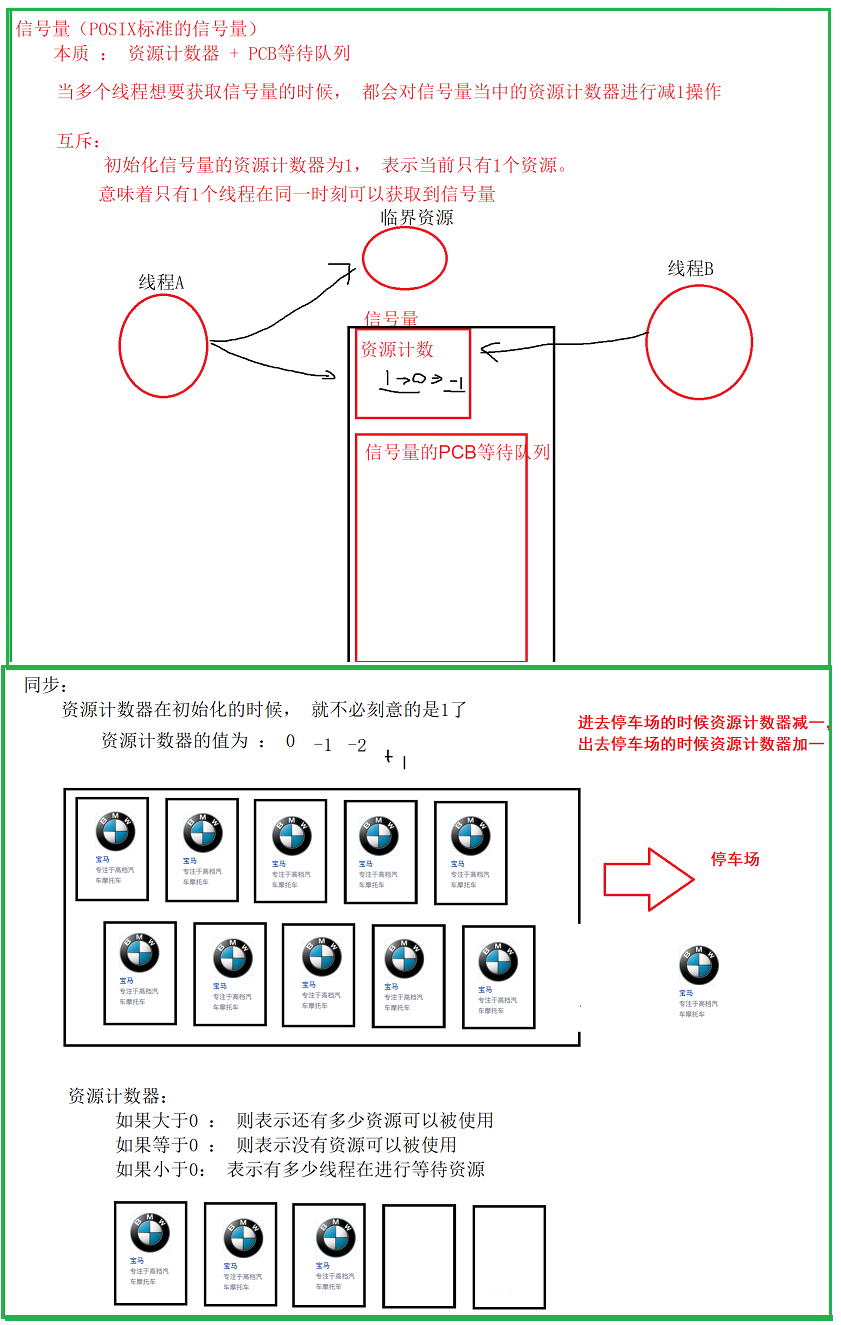
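The BlockQueue above wakes the other side only when the queue becomes full or empty, so work moves through it in batches. A common alternative signals after every put and every get. The sketch below shows that variant; `BoundedQueue`, `produce_ints` and `pipeline_sum` are hypothetical names, and this is not the code used above. Each side still waits in a while loop, and the signal is sent after the mutex is released.

```cpp
#include <cstddef>
#include <pthread.h>
#include <queue>

// Variant sketch: signal the other side after EVERY put/get.
// Requires cap >= 1 (a zero-capacity queue would block forever).
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t cap) : cap_(cap)
    {
        pthread_mutex_init(&lock_, nullptr);
        pthread_cond_init(&not_full_, nullptr);
        pthread_cond_init(&not_empty_, nullptr);
    }
    ~BoundedQueue()
    {
        pthread_cond_destroy(&not_empty_);
        pthread_cond_destroy(&not_full_);
        pthread_mutex_destroy(&lock_);
    }
    BoundedQueue(const BoundedQueue&) = delete;
    BoundedQueue& operator=(const BoundedQueue&) = delete;

    void put(const T& item)
    {
        pthread_mutex_lock(&lock_);
        while (q_.size() >= cap_)                    // full: producer waits
            pthread_cond_wait(&not_full_, &lock_);
        q_.push(item);
        pthread_mutex_unlock(&lock_);
        pthread_cond_signal(&not_empty_);            // an item is now available
    }

    T get()
    {
        pthread_mutex_lock(&lock_);
        while (q_.empty())                           // empty: consumer waits
            pthread_cond_wait(&not_empty_, &lock_);
        T item = q_.front();
        q_.pop();
        pthread_mutex_unlock(&lock_);
        pthread_cond_signal(&not_full_);             // a slot is now free
        return item;
    }

private:
    std::queue<T> q_;
    std::size_t cap_;
    pthread_mutex_t lock_;
    pthread_cond_t not_full_;
    pthread_cond_t not_empty_;
};

static void* produce_ints(void* arg)   // pushes 1..1000
{
    BoundedQueue<int>* q = static_cast<BoundedQueue<int>*>(arg);
    for (int i = 1; i <= 1000; ++i)
        q->put(i);
    return nullptr;
}

// One producer thread feeds a 4-slot queue; the calling thread consumes and sums.
long pipeline_sum()
{
    BoundedQueue<int> q(4);
    pthread_t p;
    if (pthread_create(&p, nullptr, produce_ints, &q) != 0)
        return -1;
    long sum = 0;
    for (int i = 0; i < 1000; ++i)
        sum += q.get();
    pthread_join(p, nullptr);
    return sum;
}
```

`pipeline_sum()` returns 500500, the sum of 1..1000, even though at most four items are ever in the queue at once.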

7, POSIX semaphore

1. Concept of POSIX semaphore

POSIX semaphores and System V semaphores serve the same purpose: both are used for synchronization, so that shared resources can be accessed without conflict. POSIX semaphores, however, can also be used for synchronization between threads.

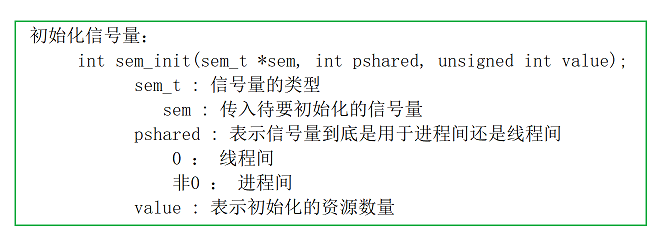

2. POSIX semaphore initialization

#include <semaphore.h>

int sem_init(sem_t *sem, int pshared, unsigned int value);

Parameters:

- sem: the semaphore to initialize

- pshared: 0 means the semaphore is shared between the threads of a process; non-zero means it is shared between processes

- value: the initial value of the semaphore

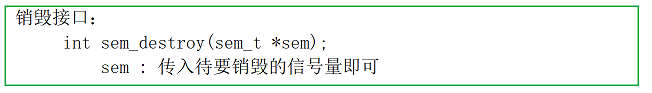

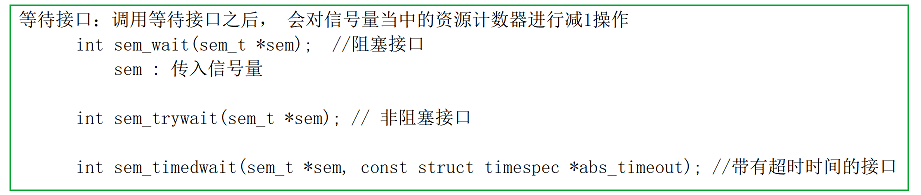

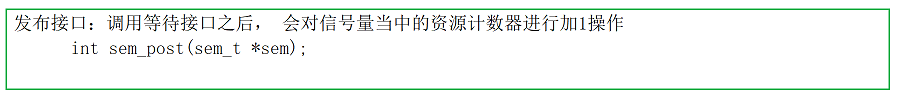

3. POSIX semaphore destruction

int sem_destroy(sem_t *sem);

4. POSIX semaphore wait

Function: waits on the semaphore. If its value is greater than 0, the value is decremented by 1 and the call returns; otherwise the caller blocks until it can be decremented.

int sem_wait(sem_t *sem);

5. POSIX semaphore post

Function: releases the semaphore, indicating that a resource has been used and returned. The semaphore value is increased by 1.

int sem_post(sem_t *sem);

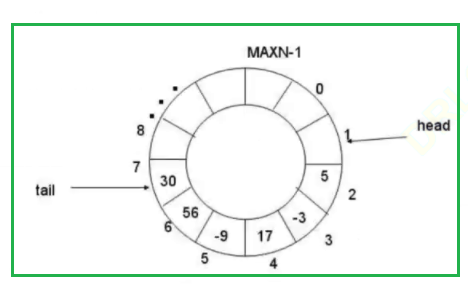

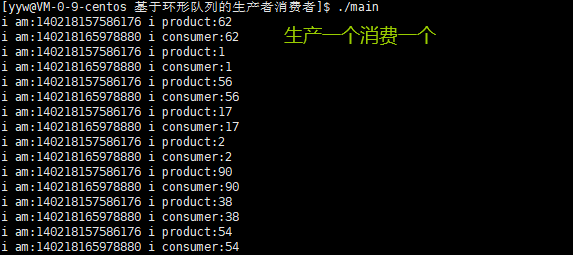
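This single-threaded walkthrough of the semaphore interfaces reads the count with `sem_getvalue()` after each step. `semaphore_walkthrough` and `SemTrace` are hypothetical names. Unnamed semaphores created with `sem_init()` are supported on Linux but not on every platform (macOS, for example). `sem_trywait()` is the non-blocking form of `sem_wait()`: where `sem_wait()` would block on a zero count, it fails with `errno` set to `EAGAIN`.

```cpp
#include <cerrno>
#include <semaphore.h>

// Values observed at each step of the walkthrough.
struct SemTrace {
    int after_init = -1;
    int after_two_waits = -1;
    int trywait_errno = 0;   // errno from sem_trywait() on a zero count
    int after_post = -1;
};

SemTrace semaphore_walkthrough()
{
    SemTrace t;
    sem_t sem;
    if (sem_init(&sem, 0, 2) != 0)           // pshared = 0: shared between threads; value = 2
        return t;
    sem_getvalue(&sem, &t.after_init);       // 2
    sem_wait(&sem);                          // 2 -> 1
    sem_wait(&sem);                          // 1 -> 0
    sem_getvalue(&sem, &t.after_two_waits);  // 0
    if (sem_trywait(&sem) == -1)             // sem_wait() would block here; trywait fails instead
        t.trywait_errno = errno;             // EAGAIN
    sem_post(&sem);                          // 0 -> 1
    sem_getvalue(&sem, &t.after_post);       // 1
    sem_destroy(&sem);
    return t;
}
```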

6. Producer-consumer model based on a ring queue

- The earlier producer-consumer example was based on std::queue, whose storage can grow dynamically. Now the program is rewritten with POSIX semaphores on top of a fixed-size ring queue.

- The ring queue is simulated with an array, and the modulo operation makes the indices wrap around to form a ring.

- In a ring structure the empty state and the full state look the same (the read and write positions coincide), so they are hard to tell apart. One option is to add a counter or a flag; another is to keep one slot permanently empty and treat that as the full state.

- With semaphores, however, the counters are already built in, which makes synchronizing multiple threads simple.
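Here is a minimal sketch of the "keep one slot empty" option. `RingBuffer` is a hypothetical name, and the class is not thread-safe by itself. Indices wrap with the modulo operation. The buffer is empty when head == tail and full when advancing tail would make it equal head, so a buffer with N slots holds at most N - 1 items.

```cpp
#include <vector>

// Fixed-size ring buffer that reserves one slot to tell "full" from "empty".
// `slots` must be at least 2.
class RingBuffer {
public:
    explicit RingBuffer(int slots) : buf_(slots), head_(0), tail_(0) {}

    bool empty() const { return head_ == tail_; }
    bool full() const { return (tail_ + 1) % size() == head_; }

    bool push(int x)                   // returns false if the buffer is full
    {
        if (full())
            return false;
        buf_[tail_] = x;
        tail_ = (tail_ + 1) % size();  // modulo wraps the index: the "ring"
        return true;
    }

    bool pop(int& out)                 // returns false if the buffer is empty
    {
        if (empty())
            return false;
        out = buf_[head_];
        head_ = (head_ + 1) % size();
        return true;
    }

private:
    int size() const { return static_cast<int>(buf_.size()); }
    std::vector<int> buf_;
    int head_;   // next slot to read
    int tail_;   // next slot to write
};
```

With 4 slots, three pushes succeed and the fourth is refused until a pop frees a slot.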

7. Code implementation of production and consumption model based on ring queue

RingQueue.cpp

```cpp
#pragma once

#include<iostream>
#include<unistd.h>
#include<vector>
#include<semaphore.h>
#include<stdlib.h>

#define NUM 10

//Ring queue for ONE producer and ONE consumer: the semaphores do all the
//synchronization, because each index is touched by only one thread
class RingQueue
{
private:
    std::vector<int> v;
    int _cap;          //capacity
    sem_t sem_blank;   //free slots: the producer waits on it
    sem_t sem_data;    //filled slots: the consumer waits on it

    int c_index;       //Consumer index
    int p_index;       //Producer index

public:
    RingQueue(int cap=NUM)
        :v(cap)        //members are initialized in declaration order: v, then _cap
        ,_cap(cap)
    {
        sem_init(&sem_blank,0,cap);
        sem_init(&sem_data,0,0);
        c_index=0;
        p_index=0;
    }
    ~RingQueue()
    {
        sem_destroy(&sem_blank);
        sem_destroy(&sem_data);
    }

    void Get(int& out)
    {
        sem_wait(&sem_data);   //wait for a filled slot
        //consumption
        out=v[c_index];
        c_index++;
        c_index=c_index%_cap;  //wrap around to form a ring (use _cap, not NUM)
        sem_post(&sem_blank);  //one more free slot
    }
    void Put(const int& in)
    {
        sem_wait(&sem_blank);  //wait for a free slot
        //production
        v[p_index]=in;
        p_index++;
        p_index=p_index%_cap;
        sem_post(&sem_data);   //one more filled slot
    }
};
```

main.cpp

```cpp
#include "RingQueue.h"
#include <pthread.h>
#include <unistd.h>
#include <cstdlib>
#include <ctime>
using namespace std;

// Consumer thread: repeatedly takes one item out of the ring queue.
void* Consumer(void* arg)
{
    RingQueue* bq = (RingQueue*)arg;
    int data;
    while (1)
    {
        bq->Get(data);
        cout << "i am:" << pthread_self() << " i consumer:" << data << endl;
    }
    return NULL;
}
// Producer thread: puts a random number into the ring queue once per second.
void* Product(void* arg)
{
    RingQueue* bq = (RingQueue*)arg;
    srand((unsigned int)time(NULL));
    while (1)
    {
        int data = rand() % 100;
        bq->Put(data);
        cout << "i am:" << pthread_self() << " i product:" << data << endl;
        sleep(1);
    }
    return NULL;
}
int main()
{
    RingQueue* pq = new RingQueue();
    pthread_t c;
    pthread_t p;
    pthread_create(&c, NULL, Consumer, (void*)pq);
    pthread_create(&p, NULL, Product, (void*)pq);

    pthread_join(c, NULL);
    pthread_join(p, NULL);
    delete pq;
    return 0;
}
```

Makefile

```makefile
main:main.cpp
	g++ $^ -o $@ -lpthread
.PHONY:clean
clean:
	rm -f main
```

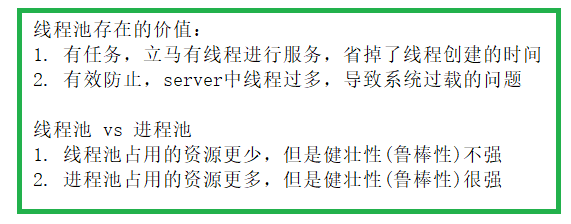
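The RingQueue above is safe with exactly one producer and one consumer, as in main.cpp. With several producers, two of them could pass sem_wait together and write the same slot, since nothing protects p_index (and likewise c_index for consumers). A minimal sketch of one common fix, assuming the same two-semaphore design, adds one mutex for producers and one for consumers. MpmcRingQueue, ConsumerArg, produce, consume and run_mpmc_demo are hypothetical names:

```cpp
#include <cassert>
#include <pthread.h>
#include <semaphore.h>
#include <vector>

// Hypothetical multi-producer/multi-consumer ring queue.
class MpmcRingQueue
{
private:
    std::vector<int> v;
    int cap;
    sem_t sem_blank;            // free slots
    sem_t sem_data;             // filled slots
    pthread_mutex_t p_lock;     // serializes producers (guards p_index)
    pthread_mutex_t c_lock;     // serializes consumers (guards c_index)
    int p_index = 0;
    int c_index = 0;

public:
    explicit MpmcRingQueue(int c) : v(c), cap(c)
    {
        assert(c > 0);
        sem_init(&sem_blank, 0, c);
        sem_init(&sem_data, 0, 0);
        pthread_mutex_init(&p_lock, nullptr);
        pthread_mutex_init(&c_lock, nullptr);
    }
    ~MpmcRingQueue()
    {
        sem_destroy(&sem_blank);
        sem_destroy(&sem_data);
        pthread_mutex_destroy(&p_lock);
        pthread_mutex_destroy(&c_lock);
    }
    void Put(int in)
    {
        sem_wait(&sem_blank);           // first reserve a free slot...
        pthread_mutex_lock(&p_lock);    // ...then claim its index
        v[p_index] = in;
        p_index = (p_index + 1) % cap;
        pthread_mutex_unlock(&p_lock);
        sem_post(&sem_data);
    }
    int Get()
    {
        sem_wait(&sem_data);
        pthread_mutex_lock(&c_lock);
        int out = v[c_index];
        c_index = (c_index + 1) % cap;
        pthread_mutex_unlock(&c_lock);
        sem_post(&sem_blank);
        return out;
    }
};

// Demo: two producers each put 1..1000, two consumers each take 1000 items.
struct ConsumerArg { MpmcRingQueue* q; long sum; };

static void* produce(void* arg)
{
    MpmcRingQueue* q = (MpmcRingQueue*)arg;
    for (int i = 1; i <= 1000; i++)
        q->Put(i);
    return nullptr;
}
static void* consume(void* arg)
{
    ConsumerArg* a = (ConsumerArg*)arg;
    for (int i = 0; i < 1000; i++)
        a->sum += a->q->Get();
    return nullptr;
}
long run_mpmc_demo()
{
    MpmcRingQueue q(4);
    ConsumerArg a1{&q, 0}, a2{&q, 0};
    pthread_t p1, p2, c1, c2;
    pthread_create(&p1, nullptr, produce, &q);
    pthread_create(&p2, nullptr, produce, &q);
    pthread_create(&c1, nullptr, consume, &a1);
    pthread_create(&c2, nullptr, consume, &a2);
    pthread_join(p1, nullptr);
    pthread_join(p2, nullptr);
    pthread_join(c1, nullptr);
    pthread_join(c2, nullptr);
    return a1.sum + a2.sum;   // every item is consumed exactly once
}
```

run_mpmc_demo() returns 1001000 (twice 1 + 2 + ... + 1000). The order "sem_wait, then lock" matters: a producer that locked first and then blocked on a full queue would sleep while holding the mutex, stalling the other producers (and with a single shared mutex, the consumers too).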

8, Thread pool

1. Thread pool concept

What is a thread pool? Simply put, a thread pool is a set of threads created in advance. Initially they are all idle, waiting. When a new task needs to be processed, an idle thread is taken from the pool to handle it; when the task is finished, the thread returns to the pool and waits for the next task. If every thread in the pool is busy, no idle thread is available. The pool can then either create new threads and add them as needed, or report that it is busy so that the caller tries again later.

2. Role of the thread pool

A thread pool is a pattern for using threads. Too many threads bring scheduling overhead, which hurts cache locality and overall performance. A thread pool instead maintains a number of threads that wait for a supervisor to assign them tasks to execute concurrently. This avoids the cost of creating and destroying a thread for every short-lived task. A thread pool keeps the CPU cores fully used while also preventing over-scheduling. The number of threads should depend on the number of available concurrent processors, processor cores, memory, network sockets, and so on.
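As a rough illustration of the last point, a pool size can be derived from the number of online cores. This is a minimal sketch, assuming Linux/glibc, where sysconf(_SC_NPROCESSORS_ONLN) is available; default_pool_size and its io_factor parameter are hypothetical:

```cpp
#include <cassert>
#include <unistd.h>

// Hypothetical helper: size a pool from the number of online CPU cores.
// io_factor is an assumed tuning knob: 1 for CPU-bound tasks, larger when
// tasks spend most of their time blocked on I/O.
int default_pool_size(int io_factor = 1)
{
    assert(io_factor >= 1);
    long cores = sysconf(_SC_NPROCESSORS_ONLN);   // cores currently online
    if (cores < 1)
        cores = 1;                                // fall back if the query fails
    return (int)cores * io_factor;
}
```

One thread per core is a common starting point for CPU-bound work, since extra threads only add scheduling overhead; the right multiplier for I/O-bound work has to be found by measurement.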

3. Thread pool application scenarios

- Tasks that need many threads to complete, where each task finishes quickly. A web server handling web page requests is a very good fit for a thread pool: each task is small and the number of tasks is huge (think of the number of clicks on a popular website). For long-running tasks, such as a Telnet connection, the advantage of a thread pool is much less obvious, because a Telnet session lasts far longer than it takes to create a thread.

- Applications with strict performance requirements, such as servers that must respond to client requests quickly.

- Applications that must accept a large burst of requests without making the server create a large number of threads. Without a thread pool, a sudden burst of client requests would spawn a large number of threads. Although in theory most operating systems can support a large maximum number of threads, creating a great many threads in a short time may push memory to its limit and cause errors.

4. Thread pool example

- Create a pool with a fixed number of threads; each thread loops, taking task objects from the task queue.

- After a thread obtains a task object, it calls the task's execution interface (Run() in the code below).

5. Queue based thread pool implementation code

Thread_Pool.h:

```cpp
#pragma once

#include <iostream>
#include <cmath>
#include <unistd.h>
#include <stdlib.h>
#include <pthread.h>
#include <queue>

#define NUM 5

// A task: prints the square of its base value.
class Task
{
private:
    int _b;
public:
    Task()
        :_b(0)
    {}
    Task(int b)
        :_b(b)
    {}
    ~Task()
    {}
    void Run()
    {
        std::cout << "i am:" << pthread_self() << " Task run.... :base# " << _b << " pow is " << std::pow(_b, 2) << std::endl;
    }
};

class ThreadPool
{
private:
    std::queue<Task> q;    // pending tasks, stored by value so no pointer can dangle
    int _max_num;          // total number of worker threads

    pthread_mutex_t lock;  // protects q
    pthread_cond_t cond;   // workers wait here while q is empty

private:
    void LockQueue()
    {
        pthread_mutex_lock(&lock);
    }
    void UnLockQueue()
    {
        pthread_mutex_unlock(&lock);
    }

    bool IsEmpty()
    {
        return q.empty();
    }

    // Must be called with the lock held: releases it while waiting and
    // re-acquires it before returning.
    void ThreadWait()
    {
        pthread_cond_wait(&cond, &lock);
    }

    void ThreadWakeUp()
    {
        pthread_cond_signal(&cond);
    }
public:
    ThreadPool(int max_num = NUM)
        :_max_num(max_num)
    {
        pthread_mutex_init(&lock, NULL);
        pthread_cond_init(&cond, NULL);
    }

    // pthread_create needs a function without a this pointer, so Routine is
    // static and receives the pool through arg ((void*)this).
    static void* Routine(void* arg)
    {
        pthread_detach(pthread_self());   // never joined, so release resources on exit
        ThreadPool* tp = (ThreadPool*)arg;
        while (1)
        {
            tp->LockQueue();
            while (tp->IsEmpty())          // re-check after every wakeup
            {
                tp->ThreadWait();
            }
            Task t;
            tp->Get(t);                    // take the task while holding the lock
            tp->UnLockQueue();
            t.Run();                       // run it after releasing the lock
        }
        return NULL;
    }

    void ThreadPoolInit()
    {
        pthread_t t;
        for (int i = 0; i < _max_num; i++)
        {
            pthread_create(&t, NULL, Routine, (void*)this);
        }
    }
    ~ThreadPool()
    {
        pthread_mutex_destroy(&lock);
        pthread_cond_destroy(&cond);
    }

    // Called by the server (main thread): add a task and wake one worker
    void Put(const Task& in)
    {
        LockQueue();
        q.push(in);
        UnLockQueue();
        ThreadWakeUp();
    }
    // Called by a worker that already holds the lock
    void Get(Task& out)
    {
        out = q.front();
        q.pop();
    }
};
```

132

main.cpp

```cpp
#include "Thread_Pool.h"
#include <cstdlib>
#include <ctime>
#include <unistd.h>
using namespace std;

int main()
{
    ThreadPool* tp = new ThreadPool();

    tp->ThreadPoolInit();            // start the worker threads

    srand((unsigned int)time(NULL));
    while (true)
    {
        int x = rand() % 10 + 1;
        Task t(x);
        tp->Put(t);                  // hand the task to the pool
        sleep(1);
    }
    return 0;
}
```

makefile

```makefile
main:main.cpp
	g++ $^ -o $@ -lpthread
.PHONY:clean
clean:
	rm -f main
```

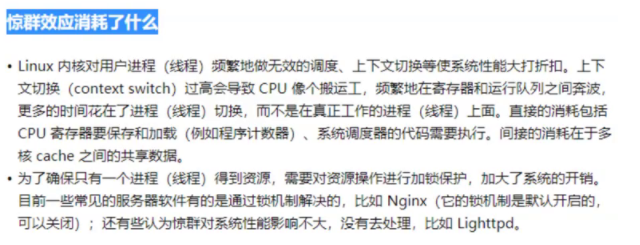

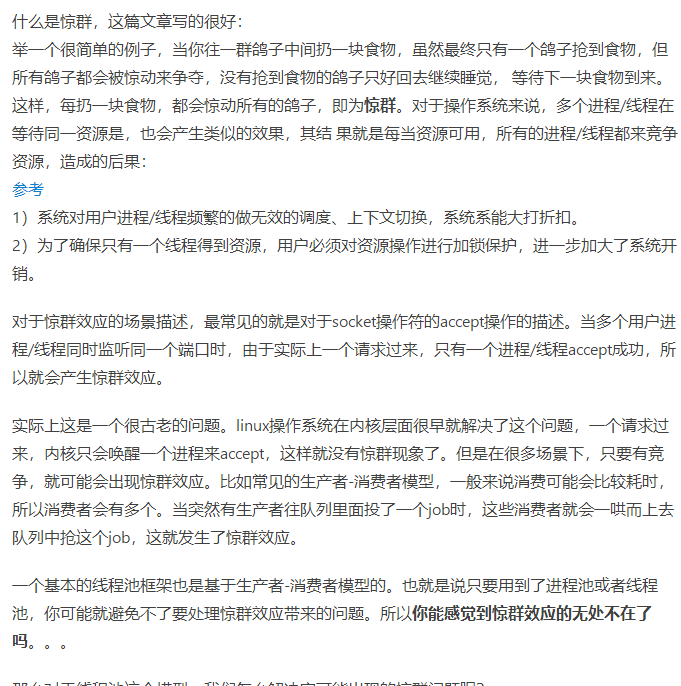
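The pool above has no way to stop: its workers loop forever and are never joined. One way to add an orderly shutdown is sketched below. This is a hypothetical variant (StoppableThreadPool, Stop), not the code above: it stores tasks as std::function, and Stop() sets a flag, wakes every worker with pthread_cond_broadcast, and joins them after the queued tasks have been drained.

```cpp
#include <cassert>
#include <functional>
#include <pthread.h>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical variant of the thread pool with an orderly shutdown.
class StoppableThreadPool
{
private:
    std::queue<std::function<void()>> q;   // pending tasks
    std::vector<pthread_t> threads;        // workers, joined in Stop()
    pthread_mutex_t lock;                  // protects q and stopping
    pthread_cond_t cond;                   // workers wait here for work
    bool stopping = false;

    static void* Routine(void* arg)
    {
        StoppableThreadPool* tp = (StoppableThreadPool*)arg;
        while (true)
        {
            pthread_mutex_lock(&tp->lock);
            while (tp->q.empty() && !tp->stopping)
                pthread_cond_wait(&tp->cond, &tp->lock);
            if (tp->q.empty())             // stopping and nothing left: exit
            {
                pthread_mutex_unlock(&tp->lock);
                return nullptr;
            }
            std::function<void()> task = std::move(tp->q.front());
            tp->q.pop();
            pthread_mutex_unlock(&tp->lock);
            task();                        // run outside the lock
        }
    }

public:
    explicit StoppableThreadPool(int n) : threads(n)
    {
        assert(n > 0);
        pthread_mutex_init(&lock, nullptr);
        pthread_cond_init(&cond, nullptr);
        for (pthread_t& t : threads)
            pthread_create(&t, nullptr, Routine, this);
    }
    ~StoppableThreadPool()
    {
        Stop();
        pthread_mutex_destroy(&lock);
        pthread_cond_destroy(&cond);
    }

    void Put(std::function<void()> task)
    {
        pthread_mutex_lock(&lock);
        q.push(std::move(task));
        pthread_mutex_unlock(&lock);
        pthread_cond_signal(&cond);        // one new task: wake one worker
    }

    // Finishes the queued tasks, then stops and joins every worker.
    void Stop()
    {
        pthread_mutex_lock(&lock);
        bool already = stopping;
        stopping = true;
        pthread_mutex_unlock(&lock);
        if (already)
            return;
        pthread_cond_broadcast(&cond);     // every worker must see the flag
        for (pthread_t t : threads)
            pthread_join(t, nullptr);
    }
};
```

Put() uses pthread_cond_signal because one task needs only one worker; broadcasting there would wake every idle worker just for all but one to go back to sleep. Stop() is the case where every worker really does need to wake up.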

6. Thundering herd problem in thread pools

9, Thread-safe singleton pattern

1. Singleton pattern concept

The singleton pattern is a creational design pattern that restricts an application to creating only a single instance of a particular class type. For example, a website needs a database connection object, but there should be one and only one, so we enforce this restriction with the singleton pattern. A static attribute can be used to guarantee that a class has only a single instance.
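A minimal sketch of a thread-safe, lazily created singleton that keeps the instance in a static attribute, as described above. DbConnection is a hypothetical class standing in for the database connection example; pthread_once guarantees that Create() runs exactly once even when several threads call GetInstance() at the same moment:

```cpp
#include <cassert>
#include <pthread.h>

// Hypothetical lazily created singleton. pthread_once runs Create()
// exactly once, no matter how many threads race into GetInstance().
class DbConnection
{
private:
    static DbConnection* instance;   // the single instance (static attribute)
    static pthread_once_t once;      // records whether Create() has run

    DbConnection() {}                // private: no construction from outside
    static void Create() { instance = new DbConnection(); }

public:
    DbConnection(const DbConnection&) = delete;
    DbConnection& operator=(const DbConnection&) = delete;

    static DbConnection* GetInstance()
    {
        pthread_once(&once, Create);
        assert(instance != nullptr);
        return instance;
    }
};

DbConnection* DbConnection::instance = nullptr;
pthread_once_t DbConnection::once = PTHREAD_ONCE_INIT;
```

Every call to DbConnection::GetInstance() returns the same pointer. Since C++11, a function-local static (`static DbConnection inst; return inst;`) is also initialized in a thread-safe way and is a common alternative to pthread_once.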

2. Features of the singleton pattern