**jsoup crawler in practice: a detailed walkthrough**

Today I'd like to share a summary of what I learned from using jsoup to crawl Sina's web pages over a period of time.

Before implementing this, there are two key points to understand:

1. How jsoup itself works. You need to know the selectors jsoup uses to match tags when crawling; if you are interested, you can find detailed tutorials on Baidu.

2. The page structure of the target website. Pages generally fall into two kinds: those whose content is present directly in the HTML, and those whose content is loaded asynchronously by JavaScript. The asynchronous kind is more complex to crawl, so today we focus on using jsoup on content that is present in the HTML, taking Sina as the example.

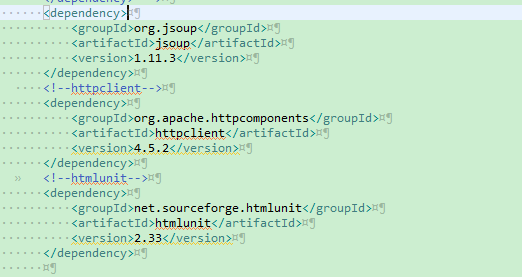

Step 1: add the corresponding dependencies to the pom file (jsoup, plus HtmlUnit if you use the JavaScript-rendering branch shown later).
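As a rough sketch, the dependency section could look like the fragment below. The coordinates are the published Maven coordinates for jsoup and for the HtmlUnit 2.x line (an assumption based on the HtmlUnit calls used later in this post). The two version properties are placeholders, not values from the original project; define them in your own `<properties>` section.

```xml
<dependencies>
    <!-- HTML fetching and parsing -->
    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>${jsoup.version}</version>
    </dependency>
    <!-- Only needed for the HtmlUnit (JavaScript-rendering) branch -->
    <dependency>
        <groupId>net.sourceforge.htmlunit</groupId>
        <artifactId>htmlunit</artifactId>
        <version>${htmlunit.version}</version>
    </dependency>
</dependencies>
```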

Step 2: understand the basic structure of Sina's web pages

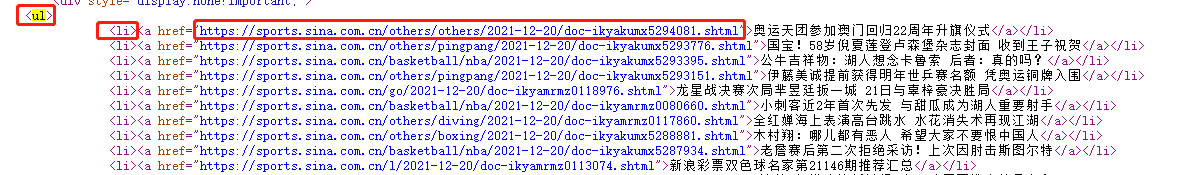

Open the Sina site and click into a category, for example sports. On the sports category page, right-click and view the page source.

The list there is basically structured as `ul > li > a`, so we can take the link from each title and follow it to the article's detail page.
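Links collected from such a list are not guaranteed to be absolute. The crawling code later in this post prepends `http:` to links that lack a scheme. As a more general sketch, the standard `java.net.URI.resolve` can turn protocol-relative and root-relative hrefs into absolute URLs. The class name `LinkResolver`, the method `toAbsolute`, and the sample paths are illustrative assumptions, not part of the original code.

```java
import java.net.URI;

// Hypothetical helper (not from the original post): resolves an href found on a
// list page against the URL of that page.
class LinkResolver {

    static String toAbsolute(String pageUrl, String href) {
        // URI.resolve applies the standard relative-reference rules:
        // "//host/path" inherits only the scheme, "/path" inherits scheme and host,
        // and an already absolute href is returned unchanged.
        return URI.create(pageUrl).resolve(href.trim()).toString();
    }

    public static void main(String[] args) {
        String page = "http://sports.sina.com.cn/";
        // The paths below are made-up examples.
        System.out.println(toAbsolute(page, "//sports.sina.com.cn/g/example.shtml"));
        System.out.println(toAbsolute(page, "/g/example.shtml"));
        System.out.println(toAbsolute(page, "https://finance.sina.com.cn/stock/example.shtml"));
    }
}
```

`URI.create` throws `IllegalArgumentException` for hrefs containing illegal characters such as spaces, so real crawler code would want to catch that. When you already hold a jsoup `Element`, `a.absUrl("href")` gives a similar result based on the document's base URI.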

That gives us the list of articles in a category. Clicking any entry opens its detail page, where we again look at the page structure:

The detail page is organized mainly around an element with the class `article`. Today we mainly cover capturing the title and the body content.

That is the relevant page structure of Sina. Let's go straight to the business code:

1. Obtain the page's `Document` while presenting ourselves as a browser. The code is as follows.

`url` is the address of the page to crawl, and `useHtmlUnit` selects whether to load the page through HtmlUnit, which simulates Chrome and executes JavaScript. Here we can pass `false`, in which case jsoup fetches the page directly with a browser User-Agent.

```java
// Imports assume jsoup and HtmlUnit 2.x (com.gargoylesoftware.htmlunit packages).
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import com.gargoylesoftware.htmlunit.BrowserVersion;
import com.gargoylesoftware.htmlunit.CookieManager;
import com.gargoylesoftware.htmlunit.NicelyResynchronizingAjaxController;
import com.gargoylesoftware.htmlunit.WebClient;
import com.gargoylesoftware.htmlunit.html.HtmlPage;

public static Document getHtmlFromUrl(String url, boolean useHtmlUnit) {
    Document document = null;
    if (!useHtmlUnit) {
        try {
            document = Jsoup.connect(url)
                    // Send a browser-like User-Agent (this string identifies as IE 9)
                    .userAgent("Mozilla/4.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)")
                    .get();
        } catch (Exception e) {
            // Report the failure instead of swallowing it; the method then returns null
            e.printStackTrace();
        }
    } else {
        // Simulate the Chrome browser with HtmlUnit
        WebClient webClient = new WebClient(BrowserVersion.CHROME);
        webClient.getOptions().setJavaScriptEnabled(true); // 1. enable JS
        webClient.getOptions().setCssEnabled(false); // 2. disable CSS to avoid extra requests for CSS rendering
        webClient.getOptions().setActiveXNative(false);
        webClient.getOptions().setRedirectEnabled(true); // 3. follow redirects
        webClient.setCookieManager(new CookieManager()); // 4. enable cookie management
        webClient.setAjaxController(new NicelyResynchronizingAjaxController()); // 5. resynchronize AJAX calls
        webClient.getOptions().setThrowExceptionOnScriptError(false); // 6. do not throw when a script fails
        webClient.getOptions().setThrowExceptionOnFailingStatusCode(false);
        webClient.getOptions().setUseInsecureSSL(true); // skip SSL certificate validation
        webClient.getOptions().setTimeout(10000); // 7. connection timeout in ms
        webClient.setJavaScriptTimeout(10000); // JS execution timeout in ms, set before the page is loaded
        /*
         * Optional proxy:
         * ProxyConfig proxyConfig = webClient.getOptions().getProxyConfig();
         * proxyConfig.setProxyHost(listserver.get(0));
         * proxyConfig.setProxyPort(Integer.parseInt(listserver.get(1)));
         */
        try {
            HtmlPage htmlPage = webClient.getPage(url);
            // Give background JavaScript up to 10 s to finish before reading the DOM
            webClient.waitForBackgroundJavaScript(10000);
            return Jsoup.parse(htmlPage.asXml());
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            webClient.close();
        }
    }
    return document;
}
```

2. Core code: crawl the list page, then each article's detail page

```java
// Utils, BaseJsoupUtil, FileConst and HtmlCompressor are helper classes from the author's project (not shown here).
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

/**
 * Crawls a list page and the article pages it links to.
 *
 * @param tagUrl URL of the list page to crawl, e.g. Sina's sports page http://sports.sina.com.cn/
 * @param tag    our own tag id for the source (here it stands for Sina)
 * @param num    upper limit on the number of articles to collect; crawling ends when it reaches 0
 * @param regex  jsoup selector for the title links on the list page; here we assume "ul > li > a"
 * @return one map per collected article
 */
private static List<Map<String, String>> pullNews(String tagUrl, Integer tag, Integer num, String regex) {
    List<Map<String, String>> returnList = new ArrayList<>(); // the list to return

    // Get the list page; getHtmlFromUrl returns null if the request failed
    Document html = BaseJsoupUtil.getHtmlFromUrl(tagUrl, false);
    if (html == null) {
        System.out.println(tagUrl + ": page could not be fetched");
        return returnList;
    }
    // Different sites need different selectors; for the sports list page above it is "ul > li > a"
    if (Utils.isBlank(regex)) {
        System.out.println(tagUrl + ": collection rule not set");
        return returnList;
    }
    Elements newsATags = html.select(regex);
    if (newsATags.isEmpty()) { // the rule does not match this page
        System.out.println(tagUrl + ": rule not applicable");
        return returnList;
    }

    // Extract the title and the detail-page url from each <a> tag
    List<Map<String, String>> list = new ArrayList<>();
    for (Element a : newsATags) {
        String url = a.attr("href");
        if (!url.contains("html")) {
            continue; // not an article link
        }
        if (!url.startsWith("http")) {
            url = "http:" + url; // protocol-relative link such as //sports.sina.com.cn/...
        }
        Map<String, String> map = new HashMap<>();
        map.put("url", url);
        map.put("title", a.text());
        list.add(map);
    }

    // Visit each detail page and extract the title, date, content and images
    for (Map<String, String> m : list) {
        try {
            Document newsHtml = BaseJsoupUtil.getHtmlFromUrl(m.get("url"), false);
            if (newsHtml == null) {
                continue;
            }
            // The article body is the element with class "article", as described above
            String content = newsHtml.select(".article").toString();
            if (content.length() < 200) {
                continue; // skip articles that are too short
            }

            // Pick the element holding the publication time; some channels use their own class
            String dateSelector = Utils.isBlank(newsHtml.select(".date").text()) ? ".time" : ".date";
            boolean blogStyle = tagUrl.equals("https://finance.sina.com.cn/stock/")
                    || tagUrl.equals("http://blog.sina.com.cn/lm/history/");
            if (tagUrl.equals("http://collection.sina.com.cn/")) {
                dateSelector = ".titer";
            } else if (blogStyle) {
                dateSelector = ".time.SG_txtc"; // one element carrying both classes
            }
            // Turn the Chinese year/month/day markers into a dash-separated date
            String data = newsHtml.select(dateSelector).text()
                    .replace("年", "-").replace("月", "-").replace("日", "");
            if (blogStyle) {
                // replace() takes literal text; replaceAll("(", "") would fail as an invalid regex
                data = data.replace("(", "").replace(")", "");
            }

            // Only articles that contain at least one image are collected
            List<String> imgSrcList = BaseJsoupUtil.getImgSrcList(content);
            if (imgSrcList.isEmpty()) {
                continue;
            }
            if (num == 0) {
                break; // the upper limit of the collection quantity has been reached
            }
            num--;

            // Download each image, point the content at our copy, and use the first one as the cover
            String coverImg = "";
            for (String imgSrc : imgSrcList) {
                String imgUrl = BaseJsoupUtil.downloadUrl(imgSrc.startsWith("http") ? imgSrc : "http:" + imgSrc);
                content = Utils.isBlankStr(content) ? content : content.replace(imgSrc, imgUrl);
                if (Utils.isBlank(coverImg)) {
                    coverImg = imgUrl.replace(FileConst.QINIU_FILE_HOST + "/", "");
                }
            }

            // Package what we want into a map and add it to the result
            Map<String, String> map = new HashMap<>();
            map.put("title", m.get("title")); // title
            map.put("date", data); // article creation time
            map.put("content", HtmlCompressor.compress(content)); // article content
            map.put("tag", tag.toString()); // tag id
            map.put("coverImg", coverImg); // article cover
            map.put("url", m.get("url")); // url
            returnList.add(map);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
    return returnList;
}
```
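The date handling above removes the year, month and day markers (年, 月, 日 on the actual pages) with chained string replacements. As an alternative sketch, `java.time` can parse such a string and reject unexpected input instead of passing it through. The input layout (four-digit year, two-digit month and day, then `HH:mm`) is an assumption about what the page shows, and `SinaDateParser` and `normalize` are hypothetical names. The Chinese markers are written as Unicode escapes so the source stays ASCII-only.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.Optional;

// Hypothetical helper (not from the original post).
class SinaDateParser {

    // \u5e74, \u6708 and \u65e5 are the Chinese year, month and day markers,
    // quoted so the formatter treats them as literal text.
    private static final DateTimeFormatter INPUT =
            DateTimeFormatter.ofPattern("uuuu'\u5e74'MM'\u6708'dd'\u65e5' HH:mm");
    private static final DateTimeFormatter OUTPUT =
            DateTimeFormatter.ofPattern("uuuu-MM-dd HH:mm");

    // Returns the normalized date, or an empty Optional if the text does not match.
    static Optional<String> normalize(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        try {
            return Optional.of(LocalDateTime.parse(raw.trim(), INPUT).format(OUTPUT));
        } catch (DateTimeParseException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        // prints 2021-03-05 10:30
        System.out.println(normalize("2021\u5e7403\u670805\u65e5 10:30").orElse("unparsed"));
    }
}
```

Returning an `Optional` lets the caller decide whether to skip an article with an unreadable date or store it without one.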

That is the basic logic of crawling with jsoup. To sum up, the key is to be familiar with jsoup's selectors and with the structure of the pages you crawl. Once you know these basics, you can extract information according to your own business logic. This post covers crawling Sina purely as a technical exchange; please do not use it for commercial purposes. That's all for today. I'll keep sharing notes on the pitfalls I run into, and if you have any questions, feel free to leave a comment.