Basic concepts

Multithreading

Multithreading means that a program (one process) runs more than one thread at the same time.
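Here is a minimal sketch of one process running several threads, assuming Java 9+. The class `MultithreadingDemo` and its method `runWorkers` are hypothetical names used only for illustration. Each worker records its own thread name, and the caller waits for all of them with `join()`:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class MultithreadingDemo {
    // Starts `count` threads inside this one process, waits for all of them,
    // and returns the thread names they recorded (sorted, because run order is not fixed)
    public static List<String> runWorkers(int count) throws InterruptedException {
        List<String> names = Collections.synchronizedList(new ArrayList<>());
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            Thread t = new Thread(() -> names.add(Thread.currentThread().getName()), "worker-" + i);
            threads.add(t);
            t.start(); // start() runs the lambda on a new thread; calling run() directly would not
        }
        for (Thread t : threads) {
            t.join(); // block until this worker has finished
        }
        List<String> sorted = new ArrayList<>(names);
        Collections.sort(sorted);
        return sorted;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runWorkers(3)); // [worker-0, worker-1, worker-2]
    }
}
```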

Parallel

Multiple CPUs (or cores) or multiple machines genuinely execute pieces of processing logic at the same time.

Concurrent

The CPU scheduling algorithm makes tasks appear to the user to run at the same time. At the CPU level they do not actually run simultaneously, because a single core interleaves them. Concurrent scenarios usually involve shared resources, and these shared resources tend to become bottlenecks. The processing capacity of such a system is typically measured in TPS or QPS.

Thread safety

Code is thread-safe if the scheduling order of the threads cannot affect the result when multiple threads use it concurrently. In that case, the only concern when adding threads is whether the system has enough memory and CPU. Conversely, code is thread-unsafe if the scheduling order of the threads can change the final result.

Synchronization

Synchronization means explicitly controlling and scheduling how multiple threads access shared resources. This makes the access thread-safe and keeps the results correct.
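The following sketch shows thread-unsafe versus synchronized access to a shared counter. `CounterDemo` is a hypothetical class for illustration. Two threads each increment both counters. The unsynchronized `count++` can lose updates depending on scheduling. The synchronized version always ends at exactly twice the per-thread count:

```java
public class CounterDemo {
    private int unsafeCount = 0; // updated without any lock
    private int safeCount = 0;   // updated only while holding the monitor of `this`

    // count++ is read-modify-write: two threads can read the same old value and one update is lost
    void unsafeIncrement() {
        unsafeCount++;
    }

    // synchronized makes the read-modify-write atomic with respect to other synchronized callers
    synchronized void safeIncrement() {
        safeCount++;
    }

    // Two threads each increment both counters `perThread` times; returns {unsafe, safe}
    public static int[] run(int perThread) throws InterruptedException {
        CounterDemo demo = new CounterDemo();
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) demo.unsafeIncrement();
            for (int i = 0; i < perThread; i++) demo.safeIncrement();
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join(); // join() also guarantees the threads' writes are visible here
        b.join();
        return new int[] {demo.unsafeCount, demo.safeCount};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run(100_000);
        // safe is always 200000; unsafe can come out smaller, depending on scheduling
        System.out.println("unsafe = " + r[0] + ", safe = " + r[1]);
    }
}
```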

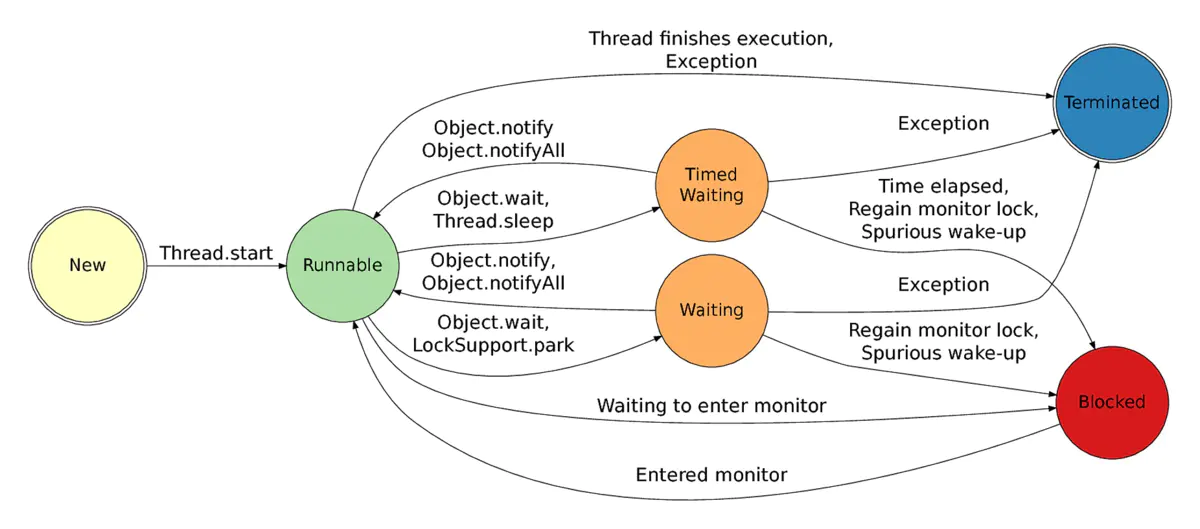

Thread states

New (NEW)

A newly created thread is in the NEW state. It leaves this state when its start() method is called. It then becomes RUNNABLE, and it may later become BLOCKED if it has to wait for a lock.

Blocked (lock pool)

A thread enters the BLOCKED state when it tries to enter a synchronized block or method whose monitor is held by another thread. It then waits in the lock pool and competes for the lock. Even after it obtains the lock, it may not run immediately. A single CPU core can execute only one thread at a time, so the thread still needs a time slice allocated by the operating system.

Runnable (RUNNABLE: ready or running)

A thread is in the RUNNABLE state when it is executing, or when it is ready to execute as soon as the operating system gives it a CPU time slice. Java does not distinguish between "ready" and "running".

Waiting and timed waiting (WAITING, TIMED_WAITING)

A running thread may call wait() on an object whose lock it holds. The thread then releases that object's lock and enters the object's waiting queue. Calling wait() without arguments puts it in the WAITING state. Calling wait(long timeout) puts it in the TIMED_WAITING state.

Terminated

A thread enters the TERMINATED (dead) state when its run() method finishes, either normally or because of an uncaught exception. The system can then reclaim the resources the thread occupied.

Notes:

1. A thread that calls Thread.sleep(millis), or calls join(millis) on another thread, enters the TIMED_WAITING state

2. A thread that calls join() without a timeout on another thread enters the WAITING state until that thread terminates

3. A thread blocked on I/O is still reported as RUNNABLE

4. A thread suspended with the deprecated suspend() method is still reported as RUNNABLE
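The states above can be observed with `Thread.getState()`. The sketch below uses the hypothetical class `ThreadStateDemo`. It drives one thread through each state and records what it sees. State changes happen asynchronously, so the helper `awaitState` (also hypothetical) polls until the expected state appears or about five seconds pass:

```java
import java.util.ArrayList;
import java.util.List;

public class ThreadStateDemo {
    // Polls until t reaches the expected state, giving up after about 5 seconds
    static Thread.State awaitState(Thread t, Thread.State expected) throws InterruptedException {
        for (int i = 0; i < 500 && t.getState() != expected; i++) {
            Thread.sleep(10);
        }
        return t.getState();
    }

    public static List<Thread.State> observe() throws InterruptedException {
        List<Thread.State> seen = new ArrayList<>();
        Object lock = new Object();
        boolean[] released = {false}; // guarded by lock

        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(10_000); // sleep(millis) -> TIMED_WAITING
            } catch (InterruptedException e) {
                // interrupted by the main thread below: just finish
            }
        });
        seen.add(sleeper.getState()); // NEW: start() not called yet
        sleeper.start();
        seen.add(awaitState(sleeper, Thread.State.TIMED_WAITING));

        Thread contender = new Thread(() -> {
            synchronized (lock) { } // needs the monitor the main thread holds
        });
        synchronized (lock) {
            contender.start();
            seen.add(awaitState(contender, Thread.State.BLOCKED));
        }
        contender.join();

        Thread waiter = new Thread(() -> {
            synchronized (lock) {
                while (!released[0]) {
                    try {
                        lock.wait(); // wait() without timeout -> WAITING, releases lock
                    } catch (InterruptedException e) {
                        return;
                    }
                }
            }
        });
        waiter.start();
        seen.add(awaitState(waiter, Thread.State.WAITING));
        synchronized (lock) {
            released[0] = true;
            lock.notifyAll();
        }
        waiter.join();

        sleeper.interrupt(); // end the 10 s sleep early
        sleeper.join();
        seen.add(sleeper.getState()); // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(observe()); // [NEW, TIMED_WAITING, BLOCKED, WAITING, TERMINATED]
    }
}
```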

synchronized, Lock

Both are tools for explicitly controlling thread scheduling to solve synchronization problems. wait() and notify() must be called inside a synchronized block; otherwise IllegalMonitorStateException is thrown. synchronized, wait, and notify all operate on the same monitor, namely the monitor of one object. Because wait() releases that monitor, other threads can enter the synchronized block while the first thread is waiting.
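A minimal sketch of wait/notify on one shared monitor is shown below. It uses a hypothetical one-slot `Mailbox` class. `put` and `take` are synchronized on `this`, and they call `wait()` and `notifyAll()` on that same monitor. The helper `waitWithoutLockFails` (also hypothetical) shows that calling `wait()` without holding the monitor throws at run time:

```java
public class Mailbox {
    private String message; // guarded by the monitor of `this`

    // wait()/notifyAll() are called on `this`, the same monitor the synchronized methods lock
    public synchronized void put(String m) throws InterruptedException {
        while (message != null) {
            wait(); // releases the monitor so a taker can enter take()
        }
        message = m;
        notifyAll(); // wake a thread waiting in take()
    }

    public synchronized String take() throws InterruptedException {
        while (message == null) {
            wait();
        }
        String m = message;
        message = null;
        notifyAll(); // wake a thread waiting in put()
        return m;
    }

    // A producer thread hands each item to the calling thread through one Mailbox
    public static String relay(String... items) throws InterruptedException {
        Mailbox box = new Mailbox();
        Thread producer = new Thread(() -> {
            try {
                for (String s : items) {
                    box.put(s);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        StringBuilder received = new StringBuilder();
        for (int i = 0; i < items.length; i++) {
            received.append(box.take());
        }
        producer.join();
        return received.toString();
    }

    // Calling wait() without holding the object's monitor fails at run time
    public static boolean waitWithoutLockFails() {
        try {
            new Object().wait();
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;
        } catch (InterruptedException e) {
            return false;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(relay("a", "b", "c"));   // abc
        System.out.println(waitWithoutLockFails()); // true
    }
}
```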

Synchronization principle

The JVM specification states that the JVM implements both method synchronization and code block synchronization by entering and exiting a monitor object. The two are implemented differently. Code block synchronization uses the explicit monitorenter and monitorexit instructions. Method synchronization is implicit: the compiler sets the ACC_SYNCHRONIZED flag on the method. The JVM then enters the monitor when the method is invoked and exits it when the method returns or throws.

After compilation, a monitorenter instruction is inserted at the start of the synchronized block. Matching monitorexit instructions are inserted at the end of the block and on the exception path. The JVM must ensure that every monitorenter is paired with a corresponding monitorexit. Every object has a monitor associated with it, and the object is locked while its monitor is held. When a thread executes monitorenter, it tries to take ownership of the monitor associated with the object; in other words, it tries to acquire the object's lock.
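To see the two mechanisms in compiled code, compile the class below and inspect it with `javap`. `SyncBytecode` is a hypothetical class for illustration. In `javap -c` output, the block version should show `monitorenter` and two `monitorexit` instructions (normal path and exception handler). In `javap -v` output, the synchronized method should carry `ACC_SYNCHRONIZED` in its flags instead:

```java
// Compile with `javac SyncBytecode.java`, then inspect with `javap -c -v SyncBytecode`
public class SyncBytecode {
    private int value;

    // Block synchronization: the bytecode contains monitorenter, a monitorexit on the
    // normal path, and another monitorexit in the exception handler that rethrows
    public void blockSync() {
        synchronized (this) {
            value++;
        }
    }

    // Method synchronization: the body has no monitorenter/monitorexit;
    // javap -v lists ACC_SYNCHRONIZED among the method's flags instead
    public synchronized void methodSync() {
        value++;
    }

    // Both methods above lock the same monitor (`this`), so this read is consistent
    public synchronized int value() {
        return value;
    }

    public static void main(String[] args) {
        SyncBytecode s = new SyncBytecode();
        s.blockSync();
        s.methodSync();
        System.out.println(s.value()); // 2
    }
}
```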

Use of synchronized

A lock is always an object instance. For non-static code, synchronized comes in two forms: a synchronized method and a synchronized statement block. In both forms, the lock is an object instance.

Lock instance

```java
public class SynchronizeDemo {

    public void doSth1(SynchronizeDemo synchronizeDemo) {
        /**
         * Locks the monitor of the object instance synchronizeDemo
         */
        synchronized (synchronizeDemo) {
            try {
                System.out.println("Executing method");
                Thread.sleep(10000);
                System.out.println("Exiting method");
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }

    public void doSth2() {
        /**
         * Locks the object instance this; equivalent to calling doSth1(this)
         */
        synchronized (this) {
            try {
                System.out.println("Executing method");
                Thread.sleep(10000);
                System.out.println("Exiting method");
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }
}
```

A thread entering the synchronized block acquires the monitor of the lock instance. If several threads lock the same instance, only one of them at a time can execute the contents of the block.

Synchronized method

```java
// A method of SynchronizeDemo
public synchronized void doSth3() {
    /**
     * Looks like it locks the method body, but it is actually equivalent to synchronized(this) above
     */
    try {
        System.out.println("Executing method");
        Thread.sleep(10000);
        System.out.println("Exiting method");
    } catch (InterruptedException e) {
        e.printStackTrace();
    }
}
```

This has the same locking effect as the code above. The monitor acquired is that of the current instance (this), not that of the Thread class. If synchronized modifies a static method, the lock is the Class object of the class (for example SynchronizeDemo.class).
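Which monitor a synchronized method holds can be checked directly with `Thread.holdsLock(Object)`. `MonitorCheck` is a hypothetical class used for this sketch. An instance method holds `this`, a static method holds the `Class` object, and neither holds the `Thread` class:

```java
public class MonitorCheck {
    // Instance synchronized method: the monitor is `this`
    public synchronized boolean instanceMethodHoldsThis() {
        return Thread.holdsLock(this);
    }

    // An instance synchronized method does not lock the Class object
    public synchronized boolean instanceMethodHoldsClass() {
        return Thread.holdsLock(MonitorCheck.class);
    }

    // Static synchronized method: the monitor is the Class object MonitorCheck.class
    public static synchronized boolean staticMethodHoldsClass() {
        return Thread.holdsLock(MonitorCheck.class);
    }

    // Neither form locks the Thread class
    public synchronized boolean instanceMethodHoldsThreadClass() {
        return Thread.holdsLock(Thread.class);
    }

    public static void main(String[] args) {
        MonitorCheck m = new MonitorCheck();
        System.out.println(m.instanceMethodHoldsThis());        // true
        System.out.println(m.instanceMethodHoldsClass());       // false
        System.out.println(staticMethodHoldsClass());           // true
        System.out.println(m.instanceMethodHoldsThreadClass()); // false
    }
}
```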

Use of Lock

The java.util.concurrent.locks package provides three implementations of the Lock interface:

- ReentrantLock

- ReentrantReadWriteLock.ReadLock

- ReentrantReadWriteLock.WriteLock

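Lock serves the same main purpose as synchronized, but it is more flexible. It supports non-blocking and timed acquisition (tryLock), interruptible acquisition (lockInterruptibly), fairness, and multiple conditions. In exchange, the lock must be released explicitly, normally by calling unlock() in a finally block.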

With a fair lock, threads waiting for the same lock obtain it in the order in which they requested it. A non-fair lock does not guarantee this: when the lock is released, any waiting thread may get it. synchronized locks are non-fair. ReentrantLock is also non-fair by default, but a fair lock can be requested through the ReentrantLock(boolean fair) constructor.
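The following sketch shows two of these options on `ReentrantLock`: choosing fairness in the constructor and acquiring with a timeout via `tryLock(long, TimeUnit)`. The class `LockOptionsDemo` and its helper `tryFromOtherThread` are hypothetical. While the main thread holds the lock, another thread's timed `tryLock` gives up. After the lock is released, the same call succeeds:

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

public class LockOptionsDemo {
    // Runs tryLock(timeout) on a separate thread and reports whether it got the lock
    public static boolean tryFromOtherThread(ReentrantLock lock, long millis) throws InterruptedException {
        boolean[] acquired = {false};
        Thread other = new Thread(() -> {
            try {
                if (lock.tryLock(millis, TimeUnit.MILLISECONDS)) { // gives up after the timeout
                    try {
                        acquired[0] = true;
                    } finally {
                        lock.unlock();
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        other.start();
        other.join(); // makes acquired[0] visible to the caller
        return acquired[0];
    }

    public static void main(String[] args) throws InterruptedException {
        ReentrantLock unfair = new ReentrantLock();   // default constructor: non-fair
        ReentrantLock fair = new ReentrantLock(true); // fair: favors the longest-waiting thread
        System.out.println(unfair.isFair() + " " + fair.isFair()); // false true

        fair.lock();
        try {
            // The main thread holds the lock, so the other thread times out
            System.out.println(tryFromOtherThread(fair, 100)); // false
        } finally {
            fair.unlock(); // unlike synchronized, release is never automatic
        }
        System.out.println(tryFromOtherThread(fair, 100)); // true
    }
}
```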

Binding multiple conditions means that one ReentrantLock object can be bound to several Condition objects at the same time. With synchronized, the wait(), notify(), and notifyAll() methods of the lock object implement a single implicit condition. If more than one condition is needed, an additional lock has to be added. ReentrantLock avoids this: you simply call newCondition() several times.

ReentrantLock

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

/**
 * Bounded blocking queue
 * When the queue is empty, threads calling remove() block until a new element is added
 * When the queue is full, threads calling add() block until a slot becomes free
 */
public class BoundedQueue<T> {

    private Object[] items;

    // Index of the next add, index of the next remove, and current number of elements
    private int addIndex, removeIndex, count;

    private final Lock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Condition notFull = lock.newCondition();

    public BoundedQueue() {
        items = new Object[5];
    }

    public BoundedQueue(int size) {
        items = new Object[size];
    }

    /**
     * Adds an element. When the queue is full, the adding thread waits on notFull
     */
    public void add(T t) throws InterruptedException {
        lock.lock();
        try {
            while (items.length == count) {
                System.out.println("Add: queue full, waiting");
                notFull.await();
            }
            items[addIndex] = t;
            addIndex = ++addIndex == items.length ? 0 : addIndex; // wrap around
            count++;
            notEmpty.signal();
        } finally {
            lock.unlock();
        }
    }

    /**
     * Removes and returns the oldest element. When the queue is empty, the removing thread waits on notEmpty
     */
    @SuppressWarnings("unchecked")
    public T remove() throws InterruptedException {
        lock.lock();
        try {
            while (count == 0) {
                System.out.println("Remove: queue empty, waiting");
                notEmpty.await();
            }
            Object tmp = items[removeIndex];
            items[removeIndex] = null;
            removeIndex = ++removeIndex == items.length ? 0 : removeIndex; // wrap around
            count--;
            notFull.signal();
            return (T) tmp;
        } finally {
            lock.unlock();
        }
    }

    // The accessors below are not guarded by the lock
    public Object[] getItems() { return items; }

    public void setItems(Object[] items) { this.items = items; }

    public int getAddIndex() { return addIndex; }

    public void setAddIndex(int addIndex) { this.addIndex = addIndex; }

    public int getRemoveIndex() { return removeIndex; }

    public void setRemoveIndex(int removeIndex) { this.removeIndex = removeIndex; }

    public int getCount() { return count; }

    public void setCount(int count) { this.count = count; }
}
```

ReentrantReadWriteLock

Multiple reader threads may access the data at the same time. A writer, however, cannot access it at the same time as readers or other writers. Compared with an exclusive lock, this improves concurrency. In practice, most accesses to shared data (such as a cache) are reads, which far outnumber writes. In that case, ReentrantReadWriteLock can provide better concurrency and throughput than an exclusive lock.
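Below is a minimal read-mostly cache guarded by a `ReentrantReadWriteLock`, assuming a plain `HashMap` as the backing store. `RwCache` and `probe` are hypothetical names for illustration. `probe` shows the sharing rule directly: while one thread holds the read lock, another thread can also take the read lock, but it cannot take the write lock:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class RwCache<K, V> {
    private final Map<K, V> map = new HashMap<>();
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();

    public V get(K key) {
        rw.readLock().lock(); // shared: many readers can hold it at once
        try {
            return map.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    public void put(K key, V value) {
        rw.writeLock().lock(); // exclusive: waits for all readers and writers to leave
        try {
            map.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }

    // While this thread holds the read lock, another thread tries both locks without blocking
    public static String probe() throws InterruptedException {
        ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        String[] result = new String[1];
        lock.readLock().lock();
        try {
            Thread other = new Thread(() -> {
                boolean read = lock.readLock().tryLock();
                if (read) {
                    lock.readLock().unlock();
                }
                boolean write = lock.writeLock().tryLock();
                if (write) {
                    lock.writeLock().unlock();
                }
                result[0] = "read=" + read + ", write=" + write;
            });
            other.start();
            other.join();
        } finally {
            lock.readLock().unlock();
        }
        return result[0];
    }

    public static void main(String[] args) throws InterruptedException {
        RwCache<String, Integer> cache = new RwCache<>();
        cache.put("answer", 42);
        System.out.println(cache.get("answer")); // 42
        System.out.println(probe());             // read=true, write=false
    }
}
```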

System overhead caused by thread switching

On mainstream JVMs such as HotSpot, each Java (platform) thread maps directly to an operating-system thread. Suspending, blocking, and waking a thread therefore require the operating system's involvement, so thread switching carries a certain system overhead. Calling a synchronized method in a multithreaded environment may require several thread state switches. For this reason, synchronized was traditionally considered a heavyweight operation in Java.

Since JDK 1.6, however, synchronized has been heavily optimized. Features such as lock spinning were added to reduce the overhead of OS-level thread switching, and its performance is now roughly on par with ReentrantLock. It is therefore recommended to prefer synchronized whenever it meets the business requirements.

Differences between volatile and synchronized

1. volatile tells the JVM that a thread's working-memory copy of the variable (in registers or caches) may be stale. Every read must therefore come from main memory, and every write is immediately visible to other threads. synchronized locks an object's monitor, so only the thread holding the lock can execute the protected code, and other threads are blocked

2. volatile can only be applied to fields; synchronized can be applied to instance methods, static methods, and code blocks

3. volatile only guarantees the visibility of modifications, not atomicity (count++ on a volatile field is still a race); synchronized guarantees both visibility and atomicity

4. volatile never causes thread blocking; synchronized may cause thread blocking

5. The compiler may not apply certain optimizations to accesses of a volatile variable, such as caching the value in a register or reordering it with surrounding memory operations. Code inside a synchronized block can still be optimized and reordered within the block
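Points 1 and 3 can be seen side by side in the sketch below. The class `VolatileDemo` and its method names are hypothetical. `spinFor` relies on a volatile flag to stop a worker thread (visibility). `racyCount` shows that `++` on a volatile field can still lose updates (no atomicity), so its result is at most, and often less than, twice the per-thread count:

```java
public class VolatileDemo {
    private volatile boolean running = true; // volatile: writes are visible to other threads
    private volatile int volatileCount = 0;  // volatile, but count++ is still not atomic

    // Visibility: the worker's loop sees running = false written by the calling thread
    public long spinFor(long millis) throws InterruptedException {
        long[] loops = {0};
        Thread worker = new Thread(() -> {
            while (running) { // without volatile, the JIT might hoist this read and loop forever
                loops[0]++;
            }
        });
        worker.start();
        Thread.sleep(millis);
        running = false;
        worker.join(); // also makes loops[0] visible here
        return loops[0];
    }

    // No atomicity: two threads doing volatileCount++ can still lose updates
    public int racyCount(int perThread) throws InterruptedException {
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                volatileCount++; // read, add, write: three steps that can interleave
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return volatileCount; // at most 2 * perThread, can be less
    }

    public static void main(String[] args) throws InterruptedException {
        VolatileDemo d = new VolatileDemo();
        System.out.println("worker stopped after " + d.spinFor(50) + " loops");
        System.out.println("racy count = " + d.racyCount(100_000) + " (expected 200000)");
    }
}
```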

Common methods

yield()

Hints to the scheduler that the current thread is willing to give up the CPU so that other runnable threads can run. It is only a hint: the scheduler may ignore it, and the current thread may be scheduled again immediately.

sleep()

Pauses the current thread for the given time. The thread does not release any monitors it holds while sleeping.

join()

Calling other.join() in a thread makes that thread wait until other has finished executing before it continues. It is like letting other cut in line.
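Here is a small sketch of the "cut in line" behavior, using a hypothetical `JoinDemo` class. `other` sleeps briefly before logging. Because the calling thread blocks in `join()`, its own log entry always comes second:

```java
public class JoinDemo {
    public static String order() throws InterruptedException {
        StringBuffer log = new StringBuffer(); // StringBuffer methods are synchronized
        Thread other = new Thread(() -> {
            try {
                Thread.sleep(100); // simulate work
            } catch (InterruptedException ignored) {
            }
            log.append("other-done;");
        });
        other.start();
        other.join(); // the calling thread waits here until `other` terminates
        log.append("main-continues");
        return log.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(order()); // other-done;main-continues
    }
}
```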

interrupt()

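Interrupts a thread.

Interruption is a flag. interrupt() only sets the target thread's interrupt status to true. A thread that is running normally does not stop unless its code checks this flag, for example with Thread.currentThread().isInterrupted(). Blocking methods such as wait(), sleep(), and join() do check it: they throw InterruptedException and clear the flag.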
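Both reactions to interrupt() are shown in the sketch below, which uses a hypothetical `InterruptDemo` class. A busy loop stops only because it polls `isInterrupted()`. A sleeping thread is woken with `InterruptedException`, and by the time the exception is caught the flag has already been cleared:

```java
public class InterruptDemo {
    // A busy thread keeps running until its own code checks the interrupt flag
    public static long busyUntilInterrupted() throws InterruptedException {
        long[] loops = {0};
        Thread busy = new Thread(() -> {
            while (!Thread.currentThread().isInterrupted()) {
                loops[0]++;
            }
        });
        busy.start();
        Thread.sleep(20);
        busy.interrupt(); // only sets the flag; the loop above notices it
        busy.join();
        return loops[0];
    }

    // A sleeping thread is woken with InterruptedException, and the flag is cleared
    public static String sleeperReaction() throws InterruptedException {
        String[] result = new String[1];
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(10_000);
                result[0] = "slept";
            } catch (InterruptedException e) {
                result[0] = "interrupted, flag=" + Thread.currentThread().isInterrupted();
            }
        });
        sleeper.start();
        sleeper.interrupt();
        sleeper.join();
        return result[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("busy loop ran " + busyUntilInterrupted() + " times");
        System.out.println(sleeperReaction()); // interrupted, flag=false
    }
}
```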