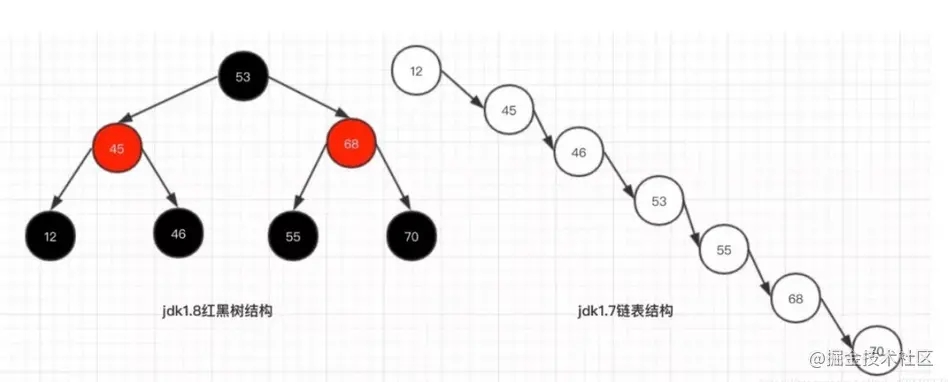

HashMap in Java uses the linked list method to solve hash conflicts. The HashMap principle is that key value pairs with the same bucket subscript are stored in a linked list. When the linked list becomes longer, searching and adding (you need to determine whether the key already exists) need to traverse the linked list, and the speed will become slower. JDK 1.8 adds the mechanism of converting the linked list into a red black tree, but the conversion of the red black tree is not a cheap operation. Only when the length of the linked list is greater than or equal to tree_threshold can it be treeify.

/**

* The bin count threshold for using a tree rather than list for a

* bin. Bins are converted to trees when adding an element to a

* bin with at least this many nodes. The value must be greater

* than 2 and should be at least 8 to mesh with assumptions in

* tree removal about conversion back to plain bins upon

* shrinkage.

*/

static final int TREEIFY_THRESHOLD = 8;

TREEIFY_ The default value of threshold is 8. The source code of putVal() is as follows:

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

In fact, not as long as the length of the linked list is greater than 8. When table When the length (number of buckets) is less than min_tree_capacity, the capacity will be expanded preferentially rather than converted to red black tree.

Jdk1 8 as an example, open the source code of HashMap and analyze the TreeNode attribute of the internal class of red black tree:

static final class TreeNode<K,V> extends LinkedHashMap.Entry<K,V> {

//Pointer to parent node

TreeNode<K,V> parent;

//Pointer to left child

TreeNode<K,V> left;

//Pointer to right child

TreeNode<K,V> right;

//The precursor pointer, which is the opposite of the next property

TreeNode<K,V> prev;

//Is it a red node

boolean red;

......

}

The left rotation method rotateLeft is as follows:

/*

* Left-handed logic

*/

static <K,V> TreeNode<K,V> rotateLeft(TreeNode<K,V> root,

TreeNode<K,V> p) {

//Root: indicates the root node

//p: Represents the node to be adjusted

//r: Represents the right node of p

//pp: represents the parent node of p

//rl: represents the left child node of the right child of p

TreeNode<K,V> r, pp, rl;

//r determines that if r is empty, the rotation is meaningless

if (p != null && (r = p.right) != null) {

//For the connection operation of multiple equal signs, look from right to left and set the father of rl to p

if ((rl = p.right = r.left) != null)

rl.parent = p;

//Judge whether the parent of p is empty and is the root node. If the root node is set to black

if ((pp = r.parent = p.parent) == null)

(root = r).red = false;

//Determine whether the p node is a left son or a right son

else if (pp.left == p)

pp.left = r;

else

pp.right = r;

r.left = p;

p.parent = r;

}

return root;

}

Similarly, the right-hand rotation method rotateRight is as follows:

/*

* Dextral logic

*/

static <K,V> TreeNode<K,V> rotateRight(TreeNode<K,V> root,

TreeNode<K,V> p) {

//Root: indicates the root node

//p: Represents the node to be adjusted

//l: Represents the left node of p

//pp: represents the parent node of p

//lr: represents the right child node of the left child of p

TreeNode<K,V> l, pp, lr;

//L judgment, if l is empty, the rotation is meaningless

if (p != null && (l = p.left) != null) {

//For the connection operation of multiple equal signs, look from right to left, and set the father of lr to p

if ((lr = p.left = l.right) != null)

lr.parent = p;

//Judge whether the parent of p is empty and is the root node. If the root node is set to black

if ((pp = l.parent = p.parent) == null)

(root = l).red = false;

//Judge whether the p node is a right son or a left son

else if (pp.right == p)

pp.right = l;

else

pp.left = l;

l.right = p;

p.parent = l;

}

return root;

}

Capacity expansion mechanism

HashMap is implemented based on array + linked list and red black tree, but the length of the array bucket used to store key values is fixed, which is determined by initialization.

Then, with the increase of the number of data inserts and the effect of load factor, it is necessary to expand the capacity to store more data. A very important point in capacity expansion is jdk1 8, there is no need to recalculate the hash value of each element.

Here, we mainly look at the code for capacity expansion (comments);

final Node<K,V>[] resize() {

Node<K,V>[] oldTab = table;

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

int newCap, newThr = 0;

// Cap acity is the abbreviation of capacity. If the capacity is not empty, it indicates that it has been initialized.

if (oldCap > 0) {

// If the capacity reaches the maximum of 1 < < 30, it will not be expanded

if (oldCap >= MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return oldTab;

}

// Calculate the new capacity and threshold by twice the old capacity and threshold

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0) // initial capacity was placed in threshold

// initial capacity was placed in threshold translates as follows:;

// During initialization, assign the value of threshold to newCap,

// HashMap uses the threshold variable to temporarily save the value of the initialCapacity parameter

newCap = oldThr;

else { // zero initial threshold signifies using defaults

// This part is also, and there are corresponding English comments in the source code

// When the parameterless construction method is called, the array bucket array capacity is the default capacity of 1 < < 4; aka 16

// Threshold value; Is the product of the default capacity and the load factor, 0.75

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

// If newThr is 0, the capacity is calculated using the threshold formula

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

// Initialize the array bucket for storing key s

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

if (oldTab != null) {

// If the old array bucket and oldCap have values, the traversal will map the key values to the new array bucket

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

else if (e instanceof TreeNode)

// split here is the red black tree splitting operation. Operation at remapping time.

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

// Here is the linked list. If it is currently stored according to the linked list, the linked list nodes are grouped according to the original order {here is a special article on how to split the HashMap core knowledge, disturbance function, load factor, capacity expansion linked list splitting and deep learning without recalculating the hash value}

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

// Map the grouped linked list to the bucket

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

Mainly including;

- During Capacity expansion, calculate new newCap and new Threshold, which are abbreviations of two words, one is Capacity and the other is valve Threshold

- newCap array bucket for Innovation: new Node[newCap];

- After capacity expansion, the original elements stored in linked list and red black tree due to hash collision need to be split and stored in a new location.