What are the general ways to implement distributed locks? How do you design a distributed lock with Redis? Can ZooKeeper (zk) be used to build a distributed lock? Which of the two implementations is more efficient?

(1) redis distributed lock

The approach officially recommended by Redis for distributed locking is called the RedLock algorithm.

A distributed lock of this kind has three important properties: mutual exclusion (only one client can hold the lock at a time), freedom from deadlock, and fault tolerance (as long as a majority of the Redis nodes are up, the lock can be acquired and released).

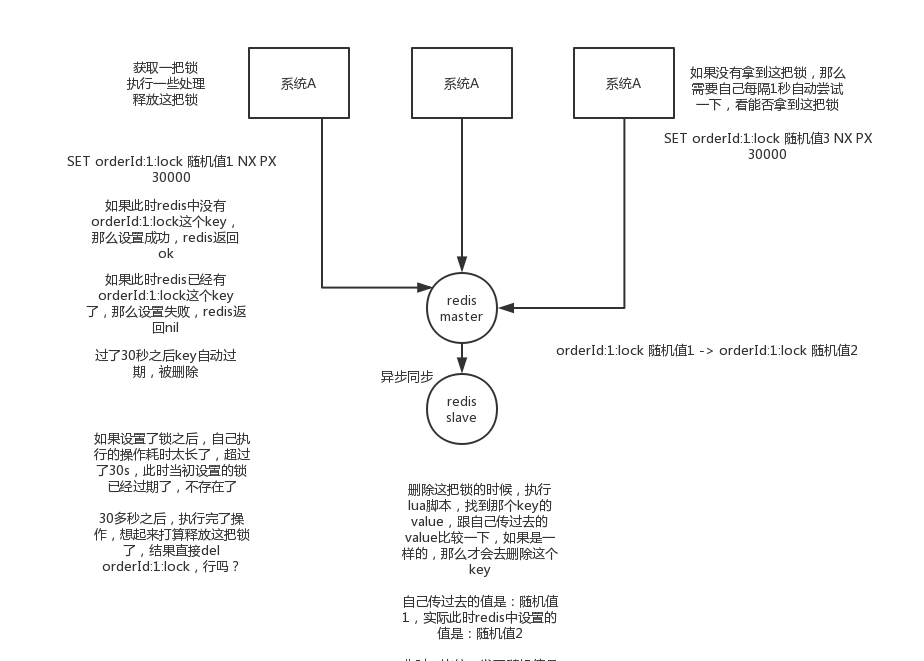

The first and most common implementation is to create a key in Redis that serves as the lock.

SET my:lock random_value NX PX 30000 is all it takes, where random_value is a value unique to the client. NX means the key is set only if it does not already exist, and PX 30000 means the key expires after 30 seconds, which releases the lock automatically. If another client tries to create the key while it still exists, the command fails and that client cannot take the lock.
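The semantics of that command can be modeled in a few lines. The sketch below is an in-memory stand-in written for illustration, not a Redis client: the class InMemoryLockStore and its method names are hypothetical, and the current time is passed in explicitly so the expiry behavior is easy to follow.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical in-memory model of `SET key value NX PX ttl`; it is not a Redis client.
class InMemoryLockStore {
    private static final class Entry {
        final String value;
        final long expiresAtMillis;

        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> data = new HashMap<>();

    // Models NX (set only if the key is absent) plus PX (expire after ttlMillis).
    synchronized boolean setIfAbsent(String key, String value, long ttlMillis, long nowMillis) {
        Entry current = data.get(key);
        if (current != null && current.expiresAtMillis > nowMillis) {
            return false; // the key still exists, so someone else holds the lock
        }
        data.put(key, new Entry(value, nowMillis + ttlMillis));
        return true;
    }

    // Returns the live value for the key, or null if it is absent or expired.
    synchronized String get(String key, long nowMillis) {
        Entry current = data.get(key);
        return (current != null && current.expiresAtMillis > nowMillis) ? current.value : null;
    }

    // Each client generates its own random value to identify itself as the holder.
    static String newToken() {
        return UUID.randomUUID().toString();
    }
}
```

A second caller is refused until the 30 seconds have passed, after which the key counts as absent and the next caller wins.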

Releasing the lock means deleting the key, but this is generally done with a Lua script that deletes the key only if its value still matches (search for how Redis executes Lua scripts if this is unfamiliar):

if redis.call("get",KEYS[1]) == ARGV[1] then

return redis.call("del",KEYS[1])

else

return 0

end

Why use a random value? Because a client may acquire the lock and then block for a long time before finishing its work, by which point the lock may already have expired and been acquired by someone else. If the client then deleted the key unconditionally, it would release the other client's lock. That is why the lock is released with the random value plus a Lua script.
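The effect of the value check can be illustrated without Redis. The following is a minimal sketch in which a ConcurrentMap stands in for the key space; the class TokenCheckedRelease and the token strings are hypothetical names chosen for the example.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical model of the release script: delete the key only if it still holds our value.
class TokenCheckedRelease {
    // Mirrors the script's return value: 1 if the key was deleted, 0 otherwise.
    static int release(ConcurrentMap<String, String> store, String key, String token) {
        // remove(key, value) atomically deletes only when the current value equals token,
        // which plays the role of the atomic get-compare-del in the Lua script.
        return store.remove(key, token) ? 1 : 0;
    }

    public static void main(String[] args) {
        ConcurrentMap<String, String> store = new ConcurrentHashMap<>();
        store.put("my:lock", "token-A");  // client A acquires the lock
        store.remove("my:lock");          // the lock expires while A is still working
        store.put("my:lock", "token-B");  // client B acquires the lock
        System.out.println(release(store, "my:lock", "token-A")); // A's late release is rejected
        System.out.println(store.get("my:lock"));                 // B still holds the lock
    }
}
```

Without the token comparison, client A's late release would have deleted client B's key.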

But this approach on its own is definitely not enough. An ordinary single Redis instance is a single point of failure. With Redis master-slave, replication is asynchronous: if the master goes down before the key has been replicated to the slave, the slave is promoted to master and someone else can then acquire the same lock.
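The failover problem can be reproduced with two maps. This is a toy simulation under the assumption that replication is a separate, later step; AsyncReplicationDemo and its methods are hypothetical and do not talk to Redis.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical simulation of asynchronous master-to-slave replication; not a Redis client.
class AsyncReplicationDemo {
    final Map<String, String> master = new HashMap<>();
    final Map<String, String> slave = new HashMap<>();

    // The write is acknowledged by the master before the slave has seen it.
    boolean acquireOnMaster(String key, String token) {
        return master.putIfAbsent(key, token) == null;
    }

    // Replication happens later; if the master dies first, this never runs.
    void replicate() {
        slave.putAll(master);
    }

    // After failover the slave is promoted and serves lock requests itself.
    boolean acquireOnPromotedSlave(String key, String token) {
        return slave.putIfAbsent(key, token) == null;
    }
}
```

If acquireOnMaster succeeds for one client and the master fails before replicate() runs, acquireOnPromotedSlave succeeds for a second client, so two clients believe they hold the same lock.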

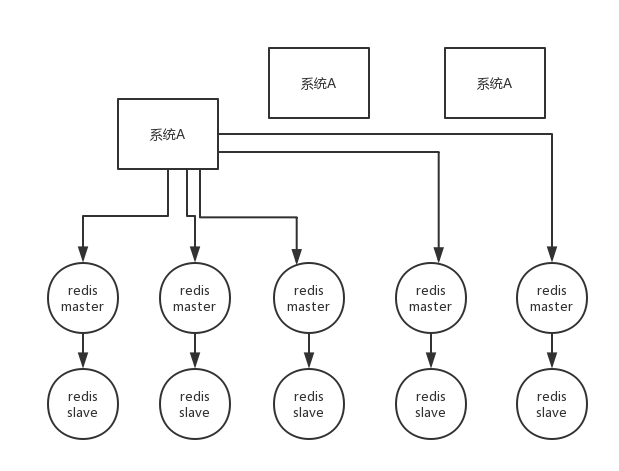

The second approach is the RedLock algorithm

This scenario assumes a Redis cluster with five master instances. Acquiring a lock then takes the following steps:

1) Get the current timestamp in milliseconds

2) As above, try to create the lock on each master node in turn, using a short timeout for each attempt, typically tens of milliseconds

3) Try to establish the lock on a majority of the nodes; with 5 nodes, 3 are required (n / 2 + 1)

4) The client computes how long establishing the lock took; if that time is less than the lock's expiry time, the lock is considered established

5) If establishing the lock fails, delete the lock on each node in turn

6) As long as someone else holds the distributed lock, you have to keep polling to try to acquire it
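The steps above can be sketched as follows. This is a simplified outline under stated assumptions, not the reference algorithm: LockNode is a hypothetical abstraction over one Redis master, InMemoryNode is a stand-in used only to exercise the code, and the per-node attempt timeout from step 2 is left out.

```java
import java.util.List;

// Hypothetical abstraction over one Redis master; a real client would issue
// SET key token NX PX ttl in tryLock and the compare-and-delete script in unlock.
interface LockNode {
    boolean tryLock(String key, String token, long ttlMillis);
    void unlock(String key, String token);
}

// In-memory stand-in for one master; it tracks a single lock and ignores key and ttl.
class InMemoryNode implements LockNode {
    private final boolean reachable;
    private String holder;

    InMemoryNode(boolean reachable) {
        this.reachable = reachable;
    }

    public boolean tryLock(String key, String token, long ttlMillis) {
        if (!reachable) throw new IllegalStateException("node down");
        if (holder != null) return false;
        holder = token;
        return true;
    }

    public void unlock(String key, String token) {
        if (reachable && token.equals(holder)) holder = null;
    }
}

class RedLockSketch {
    // Majority needed out of n nodes, e.g. 3 out of 5.
    static int quorum(int n) {
        return n / 2 + 1;
    }

    // Returns true if the lock was set on a majority before the expiry time elapsed.
    static boolean acquire(List<LockNode> nodes, String key, String token, long ttlMillis) {
        long start = System.currentTimeMillis();            // step 1
        int locked = 0;
        for (LockNode node : nodes) {                        // step 2
            try {
                if (node.tryLock(key, token, ttlMillis)) locked++;
            } catch (RuntimeException nodeFailure) {
                // An unreachable node counts as a failed attempt.
            }
        }
        long elapsed = System.currentTimeMillis() - start;   // step 4
        if (locked >= quorum(nodes.size()) && elapsed < ttlMillis) {
            return true;                                     // steps 3 and 4 both satisfied
        }
        for (LockNode node : nodes) {                        // step 5
            try {
                node.unlock(key, token);
            } catch (RuntimeException ignored) {
                // Best effort: the key expires on its own anyway.
            }
        }
        return false;
    }
}
```

With three reachable nodes out of five, the first caller reaches the quorum of 3; a second caller with a different token locks nothing and cleans up after itself.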

(2) zk distributed lock

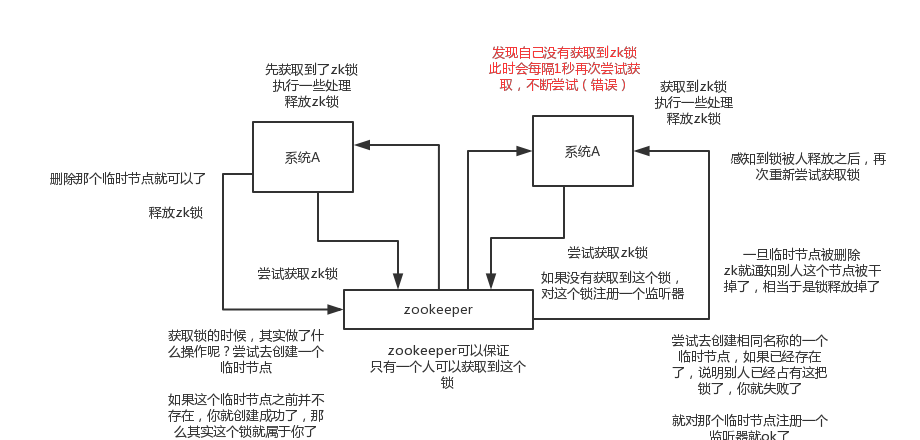

A zk distributed lock can be implemented in a simple way: a client tries to create an ephemeral znode, and whichever client creates it successfully holds the lock. The other clients fail to create the node and can only register a listener (watcher) on it. Releasing the lock means deleting the znode; ZooKeeper notifies the waiting clients when that happens, and a waiting client can then try to acquire the lock again.

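import java.util.concurrent.CountDownLatch;

import java.util.concurrent.TimeUnit;

import org.apache.zookeeper.CreateMode;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.Watcher;

import org.apache.zookeeper.Watcher.Event.KeeperState;

import org.apache.zookeeper.ZooDefs.Ids;

import org.apache.zookeeper.ZooKeeper;

import org.apache.zookeeper.data.Stat;

public class ZooKeeperSession {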

private static CountDownLatch connectedSemaphore = new CountDownLatch(1);

private ZooKeeper zookeeper;

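// Written by the acquiring thread and read by the watcher thread, hence volatile

private volatile CountDownLatch latch;

// Maximum wait for a notification before retrying; the original does not define waitTime, so this value is an assumption

private static final long waitTime = 1000L;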

public ZooKeeperSession() {

try {

this.zookeeper = new ZooKeeper(

"192.168.31.187:2181,192.168.31.19:2181,192.168.31.227:2181",

50000,

new ZooKeeperWatcher());

try {

connectedSemaphore.await();

} catch(InterruptedException e) {

e.printStackTrace();

}

System.out.println("ZooKeeper session established......");

} catch (Exception e) {

e.printStackTrace();

}

}

/**

* Acquiring distributed locks

* @param productId

*/

public Boolean acquireDistributedLock(Long productId) {

String path = "/product-lock-" + productId;

try {

zookeeper.create(path, "".getBytes(),

Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL);

return true;

} catch (Exception e) {

while(true) {

try {

Stat stat = zookeeper.exists(path, true); // Checks whether the node exists and registers the session watcher on it

if(stat != null) {

this.latch = new CountDownLatch(1);

this.latch.await(waitTime, TimeUnit.MILLISECONDS);

this.latch = null;

}

zookeeper.create(path, "".getBytes(),

Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL);

return true;

} catch(Exception retryException) { // renamed: e is already declared by the enclosing catch

continue;

}

}

// This is not very elegant; it is only meant to demonstrate the idea

// A more general approach: at our company we wrap our own ZooKeeper-based distributed lock, implemented on ZooKeeper's ephemeral sequential nodes, which is more elegant

}

// No return is needed here: both the try block and the retry loop exit only by returning true

}

/**

* Release a distributed lock

* @param productId

*/

public void releaseDistributedLock(Long productId) {

String path = "/product-lock-" + productId;

try {

zookeeper.delete(path, -1);

System.out.println("release the lock for product[id=" + productId + "]......");

} catch (Exception e) {

e.printStackTrace();

}

}

/**

* Set up a watcher for zk session

* @author Administrator

*

*/

private class ZooKeeperWatcher implements Watcher {

public void process(WatchedEvent event) {

System.out.println("Receive watched event: " + event.getState());

if(KeeperState.SyncConnected == event.getState()) {

connectedSemaphore.countDown();

}

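// The latch belongs to the enclosing session, not to the watcher, so this.latch would not compile

CountDownLatch waiting = ZooKeeperSession.this.latch;

if(waiting != null) {

waiting.countDown();

}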

}

}

/**

* Static inner class encapsulating a singleton

* @author Administrator

*

*/

private static class Singleton {

private static ZooKeeperSession instance;

static {

instance = new ZooKeeperSession();

}

public static ZooKeeperSession getInstance() {

return instance;

}

}

/**

* Get singletons

* @return

*/

public static ZooKeeperSession getInstance() {

return Singleton.getInstance();

}

/**

* A convenient way to initialize a singleton

*/

public static void init() {

getInstance();

}

}

// When many clients compete for one lock, they queue up. The first client in line gets the lock, executes, and then releases it. Each client behind it listens on the node created by the client directly in front of it. When a client releases the lock, ZooKeeper notifies the client right behind it, which then holds the lock and can run its code.
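The queueing rule described above comes down to finding the node immediately ahead of your own. The sketch below shows only that step as a pure function; LockQueue and the node names are hypothetical, and it relies on ZooKeeper's sequential nodes ending in a fixed-width, zero-padded counter so that names under a common prefix sort in creation order.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for the sequential-node queue; names such as
// "lock-0000000003" are an assumed naming scheme with a common prefix.
class LockQueue {
    // Returns the node this client should watch, or null if it already holds the lock.
    static String nodeToWatch(List<String> children, String ownNode) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted); // fixed-width suffixes sort in creation order
        int index = sorted.indexOf(ownNode);
        if (index < 0) {
            throw new IllegalArgumentException("own node is not among the children: " + ownNode);
        }
        // The lowest node holds the lock; everyone else watches its direct predecessor.
        return index == 0 ? null : sorted.get(index - 1);
    }
}
```

Watching only the predecessor means a release wakes up one waiter instead of all of them.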

(3) Comparison of redis and zk distributed locks

With a Redis distributed lock, a client that cannot get the lock has to keep retrying on its own, which costs performance
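The retrying looks roughly like the loop below. It is a generic sketch: PollingAcquire is a hypothetical helper, and the attempt argument stands in for one lock attempt against Redis.

```java
import java.util.function.BooleanSupplier;

// Hypothetical polling helper; `attempt` stands in for one lock attempt against Redis.
class PollingAcquire {
    // Retries until the attempt succeeds or maxAttempts is reached, sleeping in between.
    static boolean acquireWithRetry(BooleanSupplier attempt, int maxAttempts, long sleepMillis) {
        for (int i = 0; i < maxAttempts; i++) {
            if (attempt.getAsBoolean()) {
                return true;
            }
            if (i < maxAttempts - 1) {
                try {
                    Thread.sleep(sleepMillis); // every retry costs a round trip plus this delay
                } catch (InterruptedException interrupted) {
                    Thread.currentThread().interrupt(); // preserve the interrupt and give up
                    return false;
                }
            }
        }
        return false;
    }
}
```

Every waiting client runs this loop independently, so the number of requests grows with the number of waiters and with how long the lock is held.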

With a zk distributed lock, a client that cannot get the lock just registers a listener; it does not need to keep actively trying, so the performance cost is small

Another difference: if the client holding a Redis lock hits a bug or crashes, the lock is released only after the timeout. With zk, because the lock is an ephemeral znode, the znode disappears once the client goes down and its session ends, so the lock is released automatically

Doesn't the Redis distributed lock also seem like a lot of trouble? You have to iterate over the nodes to lock, compute elapsed time, and so on. The semantics of the zk distributed lock are clear and it is simple to implement

There is more that could be said, but these two points are enough for now. Personally, I consider zk's distributed lock more reliable than Redis's, and its model is simple and easy to use