In practice, a single Docker node is rarely enough for a production environment, so building a Docker cluster becomes necessary. But with several container cluster systems to choose from, such as Kubernetes, Mesos, and Swarm, which one should we pick? Swarm is Docker's native option. It is also the simplest, the easiest to learn, and the lightest on resources, which makes it a good fit for small and medium-sized companies.

Introduction to Docker Swarm

Before Docker 1.12, Swarm was a separate project. With the release of Docker 1.12, it was merged into Docker and became a Docker subcommand. Swarm is currently the only native tool the Docker community provides for Docker cluster management. It turns a system made up of multiple Docker hosts into a single virtual Docker host, so that containers can share a network across hosts.

Docker Swarm is an orchestration tool that gives IT operations teams clustering and scheduling capabilities. Users can pool all the Docker Engines in a cluster into one "virtual Engine" and issue commands to a single Swarm manager instead of communicating with each Docker Engine separately. With flexible scheduling strategies, IT teams can make better use of the available host resources and keep application containers running efficiently.

Docker Swarm's Advantages

High performance at any scale

For enterprise-grade Docker Engine clustering and container scheduling, scalability is key. Companies of any size, whether they have five servers or thousands, can use Swarm effectively in their environments.

In scale testing, Swarm ran 50,000 deployed containers on 1,000 nodes, with sub-second start-up time per container and no performance degradation.

Flexible container scheduling

Swarm helps IT operations teams optimize performance and resource utilization under limited resources. Swarm's built-in scheduler supports a variety of filters, including node tags and affinity, as well as container placement strategies such as binpack, spread, and random.
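To make the three strategy names concrete, here is a minimal Python sketch of how spread, binpack, and random could pick a node. It is an illustration under simplifying assumptions, not Swarm's actual scheduler: nodes are ranked only by how many containers they already run, filters and resource limits are ignored, and the names `pick_node` and `counts` are hypothetical.

```python
import random


def pick_node(counts, strategy="spread", rng=None):
    """Choose a node name from a {node: running_container_count} mapping.

    Simplified illustration only: filters and resource limits are ignored.
    """
    if not counts:
        raise ValueError("no candidate nodes")
    names = sorted(counts)  # sorted so that ties are broken by name
    if strategy == "spread":
        # Prefer the node running the fewest containers.
        return min(names, key=lambda name: counts[name])
    if strategy == "binpack":
        # Prefer the node that is already the most loaded, to fill it first.
        return max(names, key=lambda name: counts[name])
    if strategy == "random":
        return (rng or random).choice(names)
    raise ValueError(f"unknown strategy: {strategy}")


counts = {"node-a": 3, "node-b": 1, "node-c": 2}
print(pick_node(counts, "spread"))   # node-b
print(pick_node(counts, "binpack"))  # node-a
```

Spread favors even distribution, so losing one node affects fewer containers, while binpack concentrates load and leaves other nodes free for large workloads.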

Continuous availability of services

Swarm Manager provides high availability for Docker Swarm: you can create multiple Swarm master nodes and define a failover strategy for when the primary master goes down. If the master fails, a replica is promoted to master until the original master returns to normal.

In addition, if a node is unable to join the cluster, Swarm keeps retrying and provides error alerts and logs. When a node fails, Swarm can now try to reschedule its containers onto a healthy node.

Compatibility with Docker API and integration support

Swarm fully supports the Docker API, which means users get a seamless experience across the different Docker tools (such as the Docker CLI, Compose, Trusted Registry, Hub, and UCP).

Docker Swarm provides native support for core features of Docker-based applications, such as multi-host networking and volume management. Existing Compose files can easily be deployed to test servers or Swarm clusters (via docker-compose up). Docker Swarm can also pull and run images from Docker Trusted Registry or Hub.

In summary, Docker Swarm provides a high-availability solution for Docker cluster management, fully supports the standard Docker API, facilitates the management and scheduling of cluster Docker containers, and makes reasonable use of cluster host resources.

*Not all services should be deployed in a Swarm cluster. Databases and other stateful services are not well suited to it.*

Related concepts

Nodes

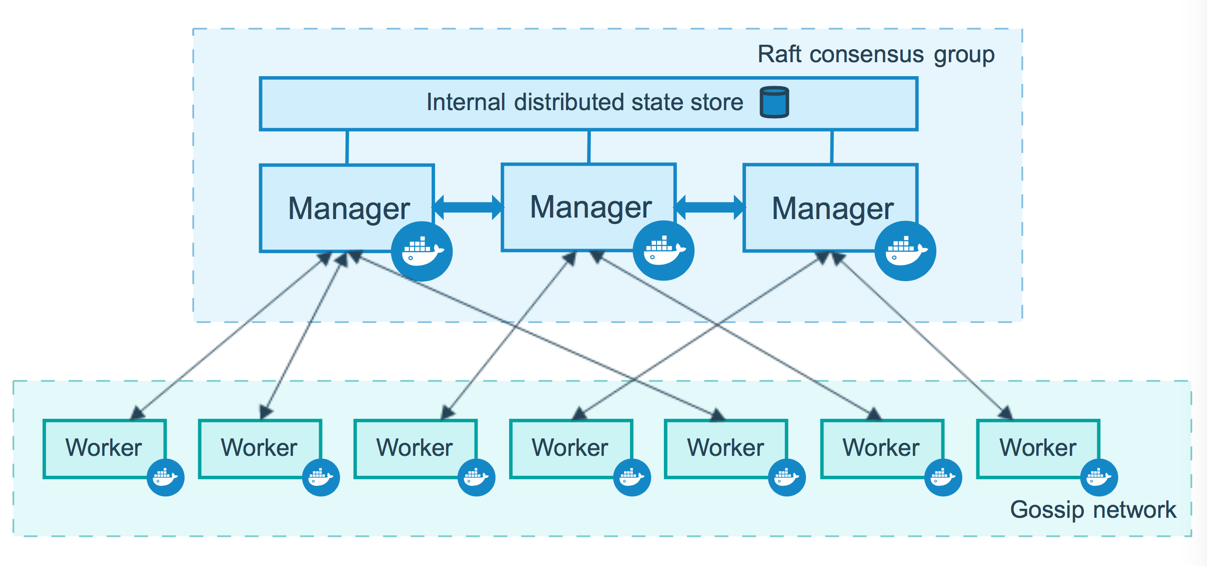

A host running Docker can initialize a new Swarm cluster or join an existing one, which makes that host a node of the Swarm cluster. Nodes are either manager nodes or worker nodes.

Manager nodes handle Swarm cluster management. Most docker swarm subcommands can only be run on a manager node (docker swarm leave, which removes a node from the cluster, can also be run on a worker node). A Swarm cluster can have more than one manager node, but only one of them can be the leader, which is elected through the Raft protocol.

Worker nodes execute tasks: manager nodes dispatch services to worker nodes for execution. By default, a manager node also acts as a worker node. You can also configure a service to run only on manager nodes. The figure above shows the relationship between manager nodes and worker nodes in the cluster.
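Because Raft elects the leader by majority vote, the number of manager nodes determines how many manager failures a cluster can survive. The short Python sketch below works through that arithmetic. The function names are illustrative only and are not part of any Docker API.

```python
def quorum(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can fail while a majority still remains."""
    return managers - quorum(managers)


for n in (1, 3, 5):
    print(n, quorum(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 5 3 2
```

This is why the single-manager cluster built later in this article is fine for experiments but has no tolerance for a manager failure, and why an even number of managers adds no extra tolerance over the odd number below it.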

Services and tasks

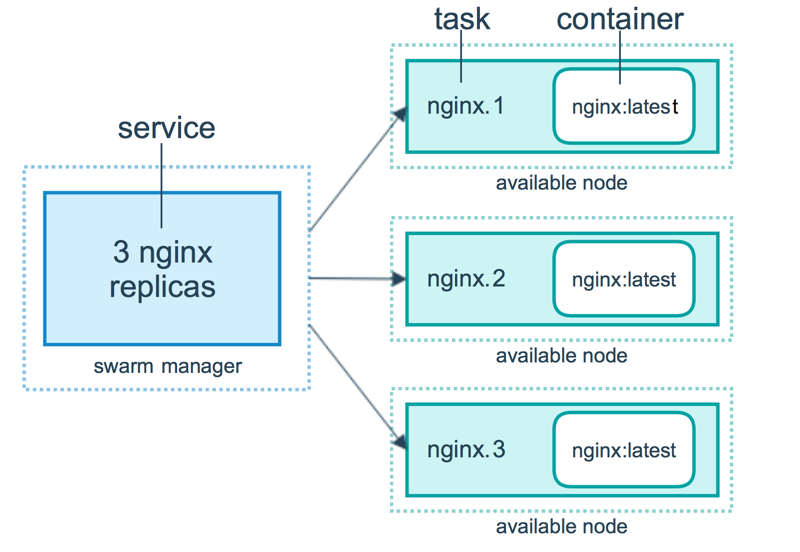

A task is the smallest scheduling unit in Swarm; currently a task is a single container.

A service is a set of tasks, and the service defines the attributes of its tasks. A service runs in one of two modes:

- Replicated services run a specified number of tasks, distributed across the worker nodes according to certain rules.

- Global services run one task on every worker node.

The mode is selected with the --mode parameter of docker service create. The figure above shows the relationships among containers, tasks, and services.
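The difference between the two modes is easiest to see by counting tasks. The following Python sketch maps each node to the tasks it would run in each mode. It rests on two assumptions made purely for illustration: replicated tasks are dealt out round-robin, and global tasks are named after their node. Swarm's real placement and task naming may differ, and `place_tasks` is a hypothetical helper.

```python
def place_tasks(service, mode, nodes, replicas=1):
    """Map each node to the task names it would run.

    Illustration only: round-robin placement and the global task names
    are assumptions, not Swarm's actual behavior.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    placement = {node: [] for node in nodes}
    if mode == "replicated":
        # A fixed total number of tasks, regardless of cluster size.
        for slot in range(1, replicas + 1):
            node = nodes[(slot - 1) % len(nodes)]
            placement[node].append(f"{service}.{slot}")
    elif mode == "global":
        # Exactly one task per node; the total follows the cluster size.
        for node in nodes:
            placement[node].append(f"{service}.{node}")
    else:
        raise ValueError(f"unknown mode: {mode}")
    return placement


nodes = ["manager1", "worker1", "worker2"]
print(place_tasks("helloworld", "replicated", nodes, replicas=2))
print(place_tasks("helloworld", "global", nodes))
```

With replicated mode the task count stays at 2 when a node is added; with global mode it grows to match the number of nodes.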

Create Swarm Cluster

A Swarm cluster consists of manager nodes and worker nodes. Let's create a minimal Swarm cluster with one manager node and two worker nodes.

Initialize the cluster

List the virtual hosts; none exist yet:

docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS

Use VirtualBox to create the manager node:

docker-machine create --driver virtualbox manager1

# Enter the manager node

docker-machine ssh manager1

Running sudo -i gives you root privileges.

We use docker swarm init to initialize a Swarm cluster on manager1.

docker@manager1:~$ docker swarm init --advertise-addr 192.168.99.100

Swarm initialized: current node (j0o7sykkvi86xpc00w71ew5b6) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-47z6jld2o465z30dl7pie2kqe4oyug4fxdtbgkfjqgybsy4esl-8r55lxhxs7ozfil45gedd5b8a 192.168.99.100:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

If your Docker host has multiple network cards or multiple IP addresses, you must specify which IP to use with --advertise-addr.

The node that runs docker swarm init automatically becomes a manager node.

Run docker info to view the swarm cluster status:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

...snip...

Swarm: active

NodeID: dxn1zf6l61qsb1josjja83ngz

Is Manager: true

Managers: 1

Nodes: 1

...snip...

Run docker node ls to view the cluster's nodes:

docker@manager1:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

1ipck4z2uuwf11f4b9mnon2ul * manager1 Ready Active Leader

Exit the virtual host:

docker@manager1:~$ exit

Add worker nodes

The previous step initialized a Swarm cluster with one manager node. In the Docker Machine section, we learned that Docker Machine can create a virtual Docker host in seconds. Now we use it to create two Docker hosts and join them to the cluster.

Create virtual host worker1

Create the host:

$ docker-machine create -d virtualbox worker1

Enter virtual host worker1:

$ docker-machine ssh worker1

Join the swarm cluster:

docker@worker1:~$ docker swarm join \

--token SWMTKN-1-47z6jld2o465z30dl7pie2kqe4oyug4fxdtbgkfjqgybsy4esl-8r55lxhxs7ozfil45gedd5b8a \

192.168.99.100:2377

This node joined a swarm as a worker.

Exit the virtual host:

docker@worker1:~$ exit

Create virtual host worker2

Create the host:

$ docker-machine create -d virtualbox worker2

Enter virtual host worker2:

$ docker-machine ssh worker2

Join the swarm cluster:

docker@worker2:~$ docker swarm join \

--token SWMTKN-1-47z6jld2o465z30dl7pie2kqe4oyug4fxdtbgkfjqgybsy4esl-8r55lxhxs7ozfil45gedd5b8a \

192.168.99.100:2377

This node joined a swarm as a worker.

Exit the virtual host:

docker@worker2:~$ exit

Both worker nodes have now been added.

View the cluster

View the virtual hosts from the host machine:

$ docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS

manager1 * virtualbox Running tcp://192.168.99.100:2376 v17.12.1-ce

worker1 - virtualbox Running tcp://192.168.99.101:2376 v17.12.1-ce

worker2 - virtualbox Running tcp://192.168.99.102:2376 v17.12.1-ce

Enter the manager node:

docker-machine ssh manager1

Run docker node ls on the manager node to list the cluster's hosts:

docker@manager1:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

1ipck4z2uuwf11f4b9mnon2ul * manager1 Ready Active Leader

rtcpqgcn2gytnvufwfveukgrv worker1 Ready Active

te2e9tr0qzbetjju5gyahg6f7 worker2 Ready Active

This gives us a minimal Swarm cluster with one manager node and two worker nodes.

Deploy services

We use the docker service command to manage services in the Swarm cluster. It can only be run on a manager node.

Create a service

Enter the cluster's manager node:

docker-machine ssh manager1

Pull the image through the Docker China registry mirror:

docker search alpine

docker pull registry.docker-cn.com/library/alpine

Now let's run a service called helloworld in the Swarm cluster created in the previous section.

docker@manager1:~$ docker service create --replicas 1 --name helloworld alpine ping ityouknow.com

rwpw7eij4v6h6716jvqvpxbyv

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

Command breakdown:

- docker service create creates a service.

- --name sets the service name, here helloworld.

- --replicas sets the number of instances to start.

- alpine is the image to use, and ping ityouknow.com is the command the container runs.
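The breakdown above can also be expressed as a small Python helper that assembles the same argument list piece by piece. This is only a sketch for scripting purposes: `service_create_command` is a hypothetical name, and it uses just the options already shown in this article.

```python
import shlex


def service_create_command(name, image, replicas=1, container_args=()):
    """Assemble the argument list for `docker service create`."""
    argv = [
        "docker", "service", "create",
        "--replicas", str(replicas),  # number of instances to start
        "--name", name,               # service name
        image,                        # image the tasks are created from
    ]
    argv.extend(container_args)       # command run inside each container
    return argv


argv = service_create_command("helloworld", "alpine", 1, ["ping", "ityouknow.com"])
print(shlex.join(argv))
# docker service create --replicas 1 --name helloworld alpine ping ityouknow.com
```

Keeping the command as a list rather than a single string means it can be passed to subprocess.run on a manager node without any shell quoting concerns.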

Use docker service ps rwpw7eij4v6h6716jvqvpxbyv to check the service's progress:

docker@manager1:~$ docker service ps rwpw7eij4v6h6716jvqvpxbyv

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

rgroe3s9qa53 helloworld.1 alpine:latest worker1 Running Running about a minute ago

Use docker service ls to view the services currently running in the Swarm cluster:

docker@manager1:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

yzfmyggfky8c helloworld replicated 0/1 alpine:latest

Monitor cluster status

Log in to the manager node manager1:

docker-machine ssh manager1

Run docker service inspect --pretty <SERVICE-ID> to show a readable summary of the service. Taking the helloworld service as an example:

docker@manager1:~$ docker service inspect --pretty helloworld

ID: rwpw7eij4v6h6716jvqvpxbyv

Name: helloworld

Service Mode: Replicated

Replicas: 1

Placement:

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

...

Rollback order: stop-first

ContainerSpec:

Image: alpine:latest@sha256:7b848083f93822dd21b0a2f14a110bd99f6efb4b838d499df6d04a49d0debf8b

Args: ping ityouknow.com

Resources:

Endpoint Mode: vip

Run docker service inspect helloworld (without --pretty) to query the full service details.

Run docker service ps <SERVICE-ID> to see which node is running the service:

docker@manager1:~$ docker service ps helloworld

NAME IMAGE NODE DESIRED STATE LAST STATE

helloworld.1.8p1vev3fq5zm0mi8g0as41w35 alpine worker1 Running Running 3 minutes

View the task on the worker node:

docker-machine ssh worker1

Run docker ps on the node to see the running status of the container:

docker@worker1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

96bf5b1d8010 alpine:latest "ping ityouknow.com" 4 minutes ago Up 4 minutes helloworld.1.rgroe3s9qa53lf4u4ky0tzcb8

We have now successfully run a helloworld service in the Swarm cluster, and the commands above show that it is running on the worker1 node.

Elastic scaling experiments

Let's run a few experiments to get a feel for Swarm's dynamic horizontal scaling. First, we adjust the number of service instances on the fly.

Adjust the number of instances

Increase or decrease the number of service instances.

Set the number of helloworld instances to 2:

docker service update --replicas 2 helloworld

See which nodes are running the service:

docker service ps helloworld

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

rgroe3s9qa53 helloworld.1 alpine:latest manager1 Running Running 8 minutes ago

a61nqrmfhyrl helloworld.2 alpine:latest worker2 Running Running 9 seconds ago

Set the number of helloworld instances back to 1:

docker service update --replicas 1 helloworld

Check the nodes again:

docker service ps helloworld

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

a61nqrmfhyrl helloworld.2 alpine:latest worker2 Running Running about a minute ago

Now set the number of helloworld instances to 3:

docker service update --replicas 3 helloworld

helloworld

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

Check which nodes are running the service:

docker@manager1:~$ docker service ps helloworld

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

mh7ipjn74o0d helloworld.1 alpine:latest worker2 Running Running 40 seconds ago

1w4p9okvz0xw helloworld.2 alpine:latest manager1 Running Running 2 minutes ago

snqrbnh4k94y helloworld.3 alpine:latest worker1 Running Running 32 seconds ago

Delete the service from the cluster:

docker service rm helloworld

Adjust cluster size

Worker nodes can be added to or removed from the Swarm cluster dynamically.

Add a node to the cluster

Create virtual host worker3:

$ docker-machine create -d virtualbox worker3

Enter virtual host worker3:

$ docker-machine ssh worker3

Join the swarm cluster:

docker@worker3:~$ docker swarm join \

--token SWMTKN-1-47z6jld2o465z30dl7pie2kqe4oyug4fxdtbgkfjqgybsy4esl-8r55lxhxs7ozfil45gedd5b8a \

192.168.99.100:2377

This node joined a swarm as a worker.

Exit the virtual host:

docker@worker3:~$ exit

Run docker node ls on the manager node to list the cluster's hosts.

Log in to the manager node:

docker-machine ssh manager1

View the cluster nodes:

docker@manager1:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

j0o7sykkvi86xpc00w71ew5b6 * manager1 Ready Active Leader

xwv8aixasqraxwwpox0d0bp2i worker1 Ready Active

ij3z1edgj7nsqvl8jgqelrfvy worker2 Ready Active

i31yuluyqdboyl6aq8h9nk2t5 worker3 Ready Active

You can see that the cluster now includes the worker3 node.

Leave the Swarm cluster

If a manager needs to leave the Swarm cluster, run the following command on the manager node:

docker swarm leave

This removes the node from the cluster. If the cluster still contains other worker nodes and you want the manager to leave anyway, add the --force option:

docker swarm leave --force

To test leaving the cluster from worker2, log in to the worker2 node:

docker-machine ssh worker2

Run the leave command:

docker swarm leave

View cluster nodes:

docker@manager1:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

j0o7sykkvi86xpc00w71ew5b6 * manager1 Ready Active Leader

xwv8aixasqraxwwpox0d0bp2i worker1 Ready Active

ij3z1edgj7nsqvl8jgqelrfvy worker2 Down Active

i31yuluyqdboyl6aq8h9nk2t5 worker3 Ready Active

You can see that the status of cluster node worker2 is now Down.

The node can join the cluster again:

docker@worker2:~$ docker swarm join \

> --token SWMTKN-1-47z6jld2o465z30dl7pie2kqe4oyug4fxdtbgkfjqgybsy4esl-8r55lxhxs7ozfil45gedd5b8a \

> 192.168.99.100:2377

This node joined a swarm as a worker.

Check the cluster nodes again:

docker@manager1:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

j0o7sykkvi86xpc00w71ew5b6 * manager1 Ready Active Leader

xwv8aixasqraxwwpox0d0bp2i worker1 Ready Active

0agpph1vtylm421rhnx555kkc worker2 Ready Active

ij3z1edgj7nsqvl8jgqelrfvy worker2 Down Active

i31yuluyqdboyl6aq8h9nk2t5 worker3 Ready Active

You can see that worker2 has rejoined the cluster. It rejoined under a new node ID, and the old entry is still listed with status Down.
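The last listing contains two rows for worker2, one Ready and one Down. A script can pick out such leftover rows automatically. The Python sketch below parses the listing exactly as printed above and returns the IDs of Down entries whose hostname also has a Ready entry. The helper names are hypothetical, and the parsing assumes whitespace-separated columns with no spaces inside a hostname.

```python
LISTING = """
ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS
j0o7sykkvi86xpc00w71ew5b6 * manager1 Ready Active Leader
xwv8aixasqraxwwpox0d0bp2i worker1 Ready Active
0agpph1vtylm421rhnx555kkc worker2 Ready Active
ij3z1edgj7nsqvl8jgqelrfvy worker2 Down Active
i31yuluyqdboyl6aq8h9nk2t5 worker3 Ready Active
"""


def parse_node_rows(text):
    """Parse rows of ID, HOSTNAME, STATUS, AVAILABILITY into dictionaries."""
    rows = []
    for line in text.strip().splitlines():
        # "*" only marks the node the command was run on; drop it.
        fields = [field for field in line.split() if field != "*"]
        if len(fields) < 4 or fields[0] == "ID":
            continue  # skip the header row and blank lines
        node_id, hostname, status, availability = fields[:4]
        rows.append({"id": node_id, "hostname": hostname,
                     "status": status, "availability": availability})
    return rows


def stale_entries(rows):
    """IDs of Down entries whose hostname also appears as Ready."""
    ready_hosts = {row["hostname"] for row in rows if row["status"] == "Ready"}
    return [row["id"] for row in rows
            if row["status"] == "Down" and row["hostname"] in ready_hosts]


print(stale_entries(parse_node_rows(LISTING)))
# ['ij3z1edgj7nsqvl8jgqelrfvy']
```

An ID found this way can then be cleaned up on the manager node with docker node rm followed by the node ID.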

Rebuild commands

When testing with VirtualBox, you can repeat the experiment by deleting the experimental nodes and creating them again.

// Stop virtual machines

docker-machine stop [arg...] // one or more virtual machine names

docker-machine stop manager1 worker1 worker2

// Remove virtual machines

docker-machine rm [OPTIONS] [arg...]

docker-machine rm manager1 worker1 worker2

Stop and remove the virtual hosts, then recreate them.

Summary

Learning Swarm gave me a real appreciation for automated horizontal scaling: when the company's traffic surges, a single command is enough to bring new instances online. If we drive that command automatically from the company's business traffic, we get fully automatic dynamic scaling.

For example, a script can monitor the company's business traffic. When traffic reaches a certain level, it starts the corresponding number of instances; when traffic drops, it reduces the number of service instances again. This saves the company's resources without the worry of the business being overwhelmed by a traffic spike. Docker's success is no accident. Containerization is the most important part of DevOps, container technology will keep growing richer and more mature, and intelligent operations is something to look forward to.
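The monitoring script described above could be built around a decision function like the following Python sketch. Everything numeric here is an assumption for illustration: the per-instance capacity of 100 requests per second, the bounds of 1 and 10 instances, and the function names are all hypothetical. How traffic is measured is left out entirely.

```python
import math


def desired_replicas(requests_per_second, capacity_per_replica,
                     minimum=1, maximum=10):
    """Replica count needed for the traffic, clamped to [minimum, maximum]."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return max(minimum, min(maximum, needed))


def scale_command(service, replicas):
    """Argument list for the `docker service update --replicas` call used earlier."""
    return ["docker", "service", "update", "--replicas", str(replicas), service]


# 450 requests/s at an assumed 100 requests/s per instance needs 5 instances.
print(scale_command("helloworld", desired_replicas(450, 100)))
# ['docker', 'service', 'update', '--replicas', '5', 'helloworld']
```

The lower bound keeps at least one instance running when traffic drops to zero, and the upper bound stops a traffic spike or a faulty measurement from requesting more instances than the cluster can host.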
