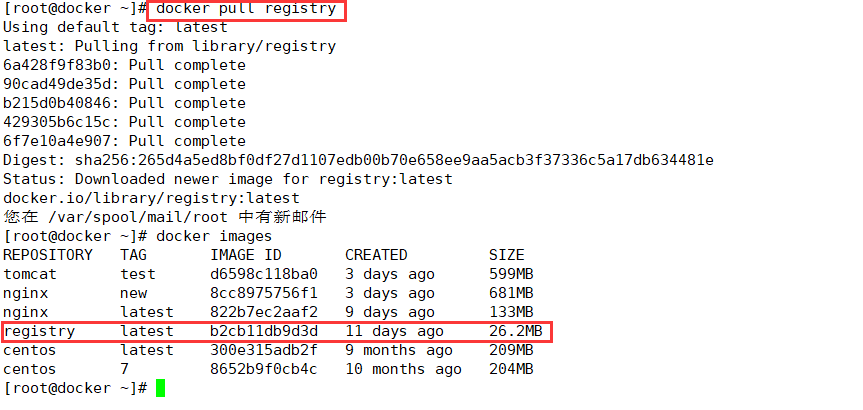

1. Setting up a private registry

[root@docker ~]# docker pull registry

Using default tag: latest

latest: Pulling from library/registry

6a428f9f83b0: Pull complete

90cad49de35d: Pull complete

b215d0b40846: Pull complete

429305b6c15c: Pull complete

6f7e10a4e907: Pull complete

Digest: sha256:265d4a5ed8bf0df27d1107edb00b70e658ee9aa5acb3f37336c5a17db634481e

Status: Downloaded newer image for registry:latest

docker.io/library/registry:latest

[root@docker ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat test d6598c118ba0 3 days ago 599MB

nginx new 8cc8975756f1 3 days ago 681MB

nginx latest 822b7ec2aaf2 9 days ago 133MB

registry latest b2cb11db9d3d 11 days ago 26.2MB

centos latest 300e315adb2f 9 months ago 209MB

centos 7 8652b9f0cb4c 10 months ago 204MB

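Configure the Docker daemon

[root@docker ~]# vim /etc/docker/daemon.json

Add the local registry address and port (192.168.206.188:5000) to "insecure-registries". JSON does not allow comments, so keep annotations out of the file, and restart the Docker service after saving so that the change takes effect.

{

"insecure-registries": ["192.168.206.188:5000"],

"registry-mirrors": ["https://3zj1eww1.mirror.aliyuncs.com"]

}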

[root@docker ~]# docker create -it registry /bin/bash

6df1f2798d6941741ccf87a2180334298914136fa00f88787df0753b4a011094

[root@docker ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6df1f2798d69 registry "/entrypoint.sh /bin..." 8 seconds ago Created stoic_feynman

[root@docker ~]# docker start 6df1f2798d69

6df1f2798d69

[root@docker ~]# docker ps -a # the container has exited abnormally

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6df1f2798d69 registry "/entrypoint.sh /bin..." 42 seconds ago Exited (127) 3 seconds ago stoic_feynman

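Exit status 127 generally means that the command was not found, which suggests the registry image does not include /bin/bash. Instead, run the registry with its default command and mount the host's /data/registry at /tmp/registry in the container; Docker creates the directories automatically if they do not exist. (The registry 2.x image keeps its data under /var/lib/registry by default, so mount the host directory there if pushed images need to persist.)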

[root@docker ~]# docker run -d -p 5000:5000 -v /data/registry:/tmp/registry registry

a5db7ad91a83e1fa117fdd422f46fcfb74296e1c9e2687197880d40200679c4b

[root@docker ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a5db7ad91a83 registry "/entrypoint.sh /etc..." 13 seconds ago Up 11 seconds 0.0.0.0:5000->5000/tcp, :::5000->5000/tcp interesting_archimedes

6df1f2798d69 registry "/entrypoint.sh /bin..." 3 minutes ago Exited (127) 2 minutes ago stoic_feynman

Tag the nginx image for the private registry

[root@docker ~]# docker tag nginx:latest 192.168.206.188:5000/nginx

[root@docker ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat test d6598c118ba0 5 days ago 599MB

nginx new 8cc8975756f1 5 days ago 681MB

192.168.206.188:5000/nginx latest 822b7ec2aaf2 11 days ago 133MB

nginx latest 822b7ec2aaf2 11 days ago 133MB

registry latest b2cb11db9d3d 13 days ago 26.2MB

centos latest 300e315adb2f 9 months ago 209MB

centos 7 8652b9f0cb4c 10 months ago 204MB

Upload nginx image

[root@docker ~]# docker push 192.168.206.188:5000/nginx

Using default tag: latest

The push refers to repository [192.168.206.188:5000/nginx]

d47e4d19ddec: Pushed

8e58314e4a4f: Pushed

ed94af62a494: Pushed

875b5b50454b: Pushed

63b5f2c0d071: Pushed

d000633a5681: Pushed

latest: digest: sha256:6bf47794f923462389f5a2cda49cf5777f736db8563edc3ff78fb9d87e6e22ec size: 1570

List the repositories in the private registry

[root@docker ~]# curl -XGET http://192.168.206.188:5000/v2/_catalog

{"repositories":["nginx"]}
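Besides the catalog, the registry's HTTP API v2 also exposes the tags of each repository under /v2/<name>/tags/list. The following is a minimal sketch, assuming the registry address used in this article and plain HTTP; the helper names registry_url and list_tags are illustrative and not part of Docker.

```shell
#!/bin/sh
# Hypothetical helpers for querying a private registry over the HTTP API v2.
# REGISTRY is an assumption taken from the example address in this article.
REGISTRY="${REGISTRY:-192.168.206.188:5000}"

# Build the URL for a registry API path, e.g. "_catalog" or "nginx/tags/list".
registry_url() {
  printf 'http://%s/v2/%s\n' "$REGISTRY" "$1"
}

# List the tags of one repository (needs network access to the registry).
list_tags() {
  curl -s "$(registry_url "$1/tags/list")"
}

# Print the two URLs without contacting the registry.
registry_url _catalog
registry_url nginx/tags/list
```

Running list_tags nginx against a reachable registry returns a JSON document naming the repository and its tags.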


Test pulling from the private registry

Delete the local image first

[root@docker ~]# docker rmi 192.168.206.188:5000/nginx

Untagged: 192.168.206.188:5000/nginx:latest

Untagged: 192.168.206.188:5000/nginx@sha256:6bf47794f923462389f5a2cda49cf5777f736db8563edc3ff78fb9d87e6e22ec

Pull it again

[root@docker ~]# docker pull 192.168.206.188:5000/nginx

Using default tag: latest

latest: Pulling from nginx

Digest: sha256:6bf47794f923462389f5a2cda49cf5777f736db8563edc3ff78fb9d87e6e22ec

Status: Downloaded newer image for 192.168.206.188:5000/nginx:latest

192.168.206.188:5000/nginx:latest

2. cgroup resource constraints

Docker uses cgroups to control the resource quotas of containers, covering CPU, memory, and disk. It supports the common forms of resource quota and usage control.

cgroup is short for control groups, a mechanism provided by the Linux kernel to limit, account for, and isolate the physical resources (CPU, memory, disk I/O, and so on) used by groups of processes.

1. CPU usage control

CPU period: CPU time is handed out in scheduling periods measured in microseconds. The default period is 100000 µs (0.1 s), and it can be raised to at most 1 s.

To give a container 20% of one CPU with the default period, set its quota to 20000, which grants the container 0.02 s of CPU time in every 0.1 s period.

A CPU core can be occupied by only one process at any given moment.

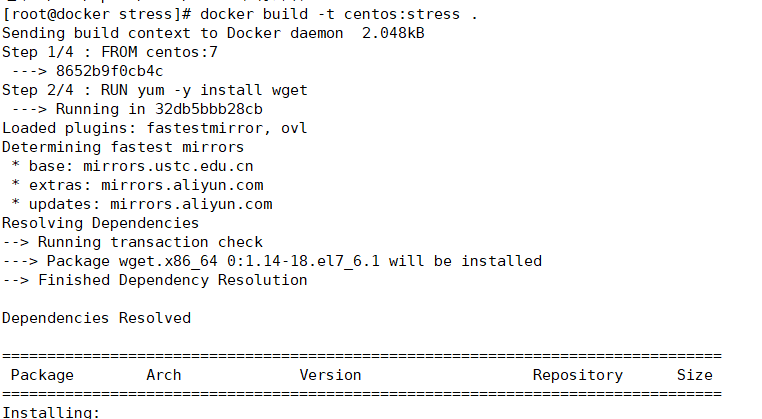

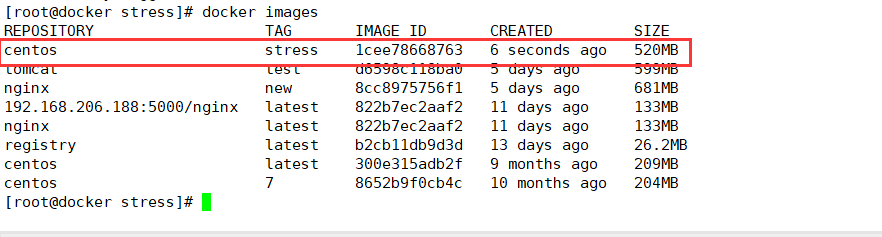

(1) Use the stress tool to test CPU and memory usage

Use a Dockerfile to build a CentOS-based image with the stress tool installed

[root@docker ~]# mkdir /opt/stress

[root@docker ~]# cd /opt/stress/

[root@docker stress]# vim Dockerfile

FROM centos:7

RUN yum -y install wget

RUN wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

RUN yum -y install stress

[root@docker stress]# docker build -t centos:stress .

(2) Create a container with the following command. The --cpu-shares value does not guarantee the container one vCPU or any particular number of GHz; it is only an elastic weight.

[root@docker stress]# docker run -itd --cpu-shares 100 centos:stress

1abb75ec906f522222e32163b05c8a5bca3151809f80298a0f044972ae355e07

By default, every Docker container has a CPU share of 1024. The share of a single container means nothing on its own; the weighting shows its effect only when several containers run at the same time.

Example:

Suppose containers A and B have CPU shares of 1000 and 500. When time slices are allocated, container A has twice the chance of container B of getting one. The outcome still depends on the state of the host and of the other containers at that moment, so there is no guarantee that container A actually gets the time slices. If container A's processes are always idle, for instance, container B can obtain more CPU time slices than container A. In the extreme case where only one container is running on the host, it can monopolize the CPU resources of the entire host even if its CPU share is only 50.

2. cgroup priority weight limits

The cgroup weight takes effect only when the resources being allocated are scarce, that is, when containers actually compete and their usage has to be restricted. It is therefore impossible to tell how much CPU a container will get from its CPU share alone. The result depends on the CPU shares of the other containers running at the same time and on what the processes inside each container are doing.
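When every container is busy and competing for the same cores, the expected split is simply each container's share divided by the sum of all shares. The sketch below works through that arithmetic for shares of 1000 and 500; share_percent is a hypothetical helper name, and the result is only the theoretical split under full contention, not a measurement.

```shell
#!/bin/sh
# Expected CPU split under full contention: one container's share divided
# by the sum of all shares. share_percent is a hypothetical helper name.
# usage: share_percent <this-container-shares> <shares of every container...>
share_percent() {
  mine=$1
  shift
  total=0
  for s in "$@"; do
    total=$((total + s))
  done
  awk -v m="$mine" -v t="$total" 'BEGIN { printf "%.1f\n", m * 100 / t }'
}

share_percent 1000 1000 500   # container A: prints 66.7
share_percent 500 1000 500    # container B: prints 33.3
```

If a container is idle or runs alone, the weights no longer decide the outcome, which is why the last case in the example above (a lone container with a share of 50) still gets the whole host.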

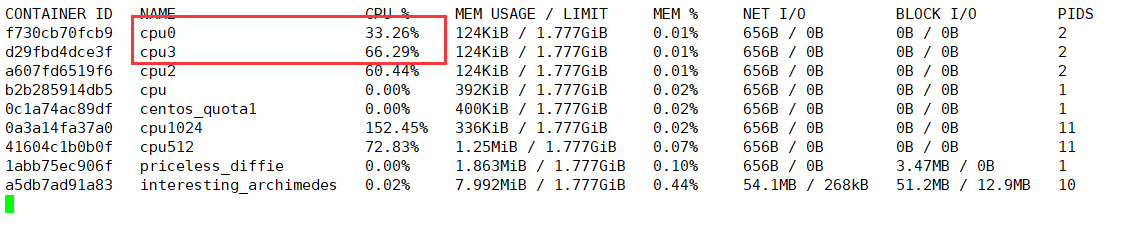

You can set a container's priority for CPU use with --cpu-shares. For example, start two containers, put them under load, and compare their CPU usage percentages.

[root@docker stress]# docker run -tid --name cpu512 --cpu-shares 512 centos:stress stress -c 10 # run 10 CPU worker processes inside the container

41604c1b0b0fcf1810c79d40df0028479da5cbb48a21c3a4424bf1035bb4e769

[root@docker stress]# docker run -tid --name cpu1024 --cpu-shares 1024 centos:stress stress -c 10 # start a second container for comparison

0a3a14fa37a05d9a286701903ea34cf848e34e1cf8b9b1efbffd166320c45d91

[root@docker stress]# docker stats # view resource usage

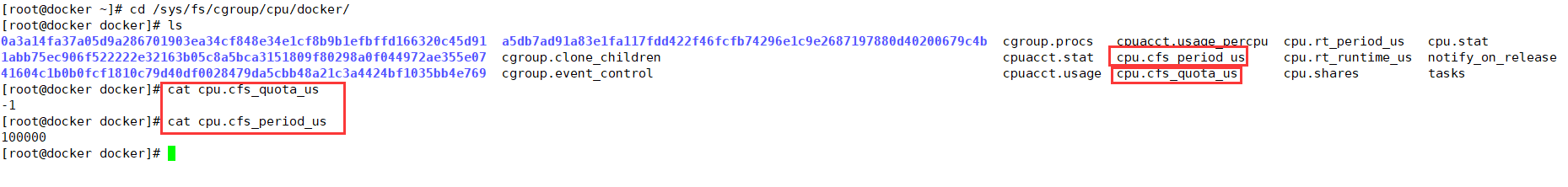

3. CPU period limit

Docker provides two parameters, **--cpu-period** and **--cpu-quota**, that control the CPU clock time a container can be allocated. The two are generally used together.

- --cpu-period: specifies the interval after which the container's CPU usage is reallocated

- --cpu-quota: specifies the maximum amount of CPU time the container may run during each period. Unlike --cpu-shares, this is an absolute limit: the container never uses more CPU than configured.

Units of the parameters:

Both --cpu-period and --cpu-quota are given in microseconds (µs).

--cpu-period has a minimum of 1000 µs, a maximum of 1 second (10^6 µs), and a default of 0.1 second (100000 µs).

--cpu-quota defaults to -1, which means no limit.

For example:

If the container's processes need 0.2 seconds of a single CPU every second, set --cpu-period to 1000000 (1 second) and --cpu-quota to 200000 (0.2 seconds).

On a multi-core host, to let the container's processes fully occupy two CPUs, set --cpu-period to 100000 (0.1 seconds) and --cpu-quota to 200000 (0.2 seconds).
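The quota is the number of CPUs the container should be allowed to use multiplied by the period, with both values in microseconds. The sketch below works through the two cases: 0.2 CPUs with a 1 s period and 2 CPUs with the default 0.1 s period both come out at a quota of 200000. cpu_quota_us is a hypothetical helper name used only for illustration.

```shell
#!/bin/sh
# cpu_quota_us is a hypothetical helper: quota = CPUs wanted x period.
# usage: cpu_quota_us <cpus, e.g. 0.2 or 2> <period in microseconds>
cpu_quota_us() {
  awk -v c="$1" -v p="$2" 'BEGIN { printf "%.0f\n", c * p }'
}

cpu_quota_us 0.2 1000000   # 20% of one CPU with a 1 s period: prints 200000
cpu_quota_us 2 100000      # two full CPUs with a 0.1 s period: prints 200000
```

The result can be passed straight to docker run, for example as --cpu-period 100000 --cpu-quota "$(cpu_quota_us 2 100000)".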

There are two ways to query resource limits:

(1) Query the container's cgroup files

[root@docker ~]# cd /sys/fs/cgroup/cpu/docker/

[root@docker docker]# ls

0a3a14fa37a05d9a286701903ea34cf848e34e1cf8b9b1efbffd166320c45d91 a5db7ad91a83e1fa117fdd422f46fcfb74296e1c9e2687197880d40200679c4b cgroup.procs cpuacct.usage_percpu cpu.rt_period_us cpu.stat

1abb75ec906f522222e32163b05c8a5bca3151809f80298a0f044972ae355e07 cgroup.clone_children cpuacct.stat cpu.cfs_period_us cpu.rt_runtime_us notify_on_release

41604c1b0b0fcf1810c79d40df0028479da5cbb48a21c3a4424bf1035bb4e769 cgroup.event_control cpuacct.usage cpu.cfs_quota_us cpu.shares tasks

[root@docker docker]# cat cpu.cfs_quota_us

-1

[root@docker docker]# cat cpu.cfs_period_us

100000

For example:

[root@docker docker]# docker run -itd --name centos_quota1 --cpu-period 100000 --cpu-quota 200000 centos:stress

0c1a74ac89dfec0583d8b63792bb0ad623b73e0ca6bc779db74fad7be45f7bf9

[root@docker docker]# cd 0c1a74ac89dfec0583d8b63792bb0ad623b73e0ca6bc779db74fad7be45f7bf9

[root@docker 0c1a74ac89dfec0583d8b63792bb0ad623b73e0ca6bc779db74fad7be45f7bf9]# ls

cgroup.clone_children cgroup.procs cpuacct.usage cpu.cfs_period_us cpu.rt_period_us cpu.shares notify_on_release

cgroup.event_control cpuacct.stat cpuacct.usage_percpu cpu.cfs_quota_us cpu.rt_runtime_us cpu.stat tasks

[root@docker 0c1a74ac89dfec0583d8b63792bb0ad623b73e0ca6bc779db74fad7be45f7bf9]# cat cpu.cfs_period_us

100000

[root@docker 0c1a74ac89dfec0583d8b63792bb0ad623b73e0ca6bc779db74fad7be45f7bf9]# cat cpu.cfs_quota_us

200000

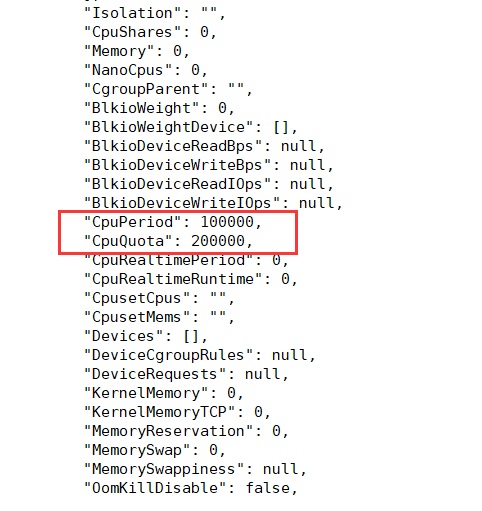

(2) Query resource limits with docker inspect

[root@docker ~]# docker inspect centos_quota1
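The full docker inspect output is long; the CPU limits sit under HostConfig as CpuQuota and CpuPeriod and can be picked out with --format. The sketch below turns such a quota/period pair into the number of CPUs the container may use. effective_cpus is a hypothetical helper, and treating a period of 0 as the 100000 µs default is an assumption about how unset values are reported.

```shell
#!/bin/sh
# effective_cpus is a hypothetical helper: it turns a quota/period pair into
# the number of CPUs the container may use.
# usage: effective_cpus <quota-us> <period-us>
effective_cpus() {
  quota=$1
  period=$2
  # A quota of -1 (cgroup file) or 0 (docker inspect, when unset) means no limit.
  if [ "$quota" -le 0 ]; then
    echo unlimited
    return 0
  fi
  # Assumption: a period of 0 means "not set", so fall back to the
  # default of 100000 microseconds.
  if [ "$period" -le 0 ]; then
    period=100000
  fi
  awk -v q="$quota" -v p="$period" 'BEGIN { printf "%.2f\n", q / p }'
}

effective_cpus 200000 100000   # values of centos_quota1: prints 2.00
effective_cpus -1 100000       # defaults in the docker cgroup directory: prints unlimited

# With Docker available, the two values can be read in one call:
#   docker inspect --format '{{.HostConfig.CpuQuota}} {{.HostConfig.CpuPeriod}}' centos_quota1
```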

4. CPU core control

On servers with multi-core CPUs, Docker can also control which CPU cores a container runs on, using the --cpuset-cpus parameter. This is particularly useful on servers with multiple CPUs, where containers that need high-performance computing can be given an optimal configuration.

Two ways to control which cores are used:

(1) Specify the limit directly with a parameter when creating the container

[root@docker ~]# docker run -itd --name cpu --cpuset-cpus 0-1 centos:stress

b2b285914db54cb281e4e0bf0369282083e3a59b6103e6f537e1a8a7afccaaba

The command above requires the host to have at least two cores. The container it creates can use only cores 0 and 1, and the resulting cgroup CPU core configuration is as follows

[root@docker ~]# cd /sys/fs/cgroup/cpuset/docker/

[root@docker docker]# cd b2b285914db54cb281e4e0bf0369282083e3a59b6103e6f537e1a8a7afccaaba/

[root@docker b2b285914db54cb281e4e0bf0369282083e3a59b6103e6f537e1a8a7afccaaba]# cat cpuset.cpus

0-1

(2) Change the allocation after the container has been created

Modify the host files that control the container's resources: they are located under the /sys/fs/cgroup/ directory, where the corresponding file parameters can be changed.
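As a rough illustration of this second method, the sketch below writes a new value into a container's cgroup v1 control file. set_cgroup_value and the CGROUP_ROOT override are hypothetical names introduced here so that the function can be tried against a scratch directory before touching /sys/fs/cgroup; on a real host it has to run as root with the container's full-length ID.

```shell
#!/bin/sh
# set_cgroup_value is a hypothetical helper that writes a new value into a
# container's cgroup v1 control file. CGROUP_ROOT is an assumption added here
# so the function can be tried against a scratch directory first.
# usage: set_cgroup_value <controller> <full-container-id> <file> <value>
set_cgroup_value() {
  target="${CGROUP_ROOT:-/sys/fs/cgroup}/$1/docker/$2/$3"
  if [ ! -e "$target" ]; then
    echo "no such cgroup file: $target" >&2
    return 1
  fi
  printf '%s\n' "$4" > "$target"
}

# Dry run against a temporary tree that mimics the cpuset layout.
CGROUP_ROOT=$(mktemp -d)
mkdir -p "$CGROUP_ROOT/cpuset/docker/demo"
echo 0-1 > "$CGROUP_ROOT/cpuset/docker/demo/cpuset.cpus"
set_cgroup_value cpuset demo cpuset.cpus 0
cat "$CGROUP_ROOT/cpuset/docker/demo/cpuset.cpus"   # prints 0
```

The docker update command offers the same kind of change through Docker itself, for example docker update --cpuset-cpus 0 cpu, which avoids editing the files by hand.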

5. Mixed use of CPU quota control parameters

Use the --cpuset-cpus parameter to pin containers to specific cores, for example so that container A uses only CPU core 0 and container B uses only CPU core 1. Here the first container is pinned to core 1.

[root@docker ~]# docker run -tid --name cpu2 --cpuset-cpus 1 --cpu-shares 512 centos:stress stress -c 1

a607fd6519f6831d4ada12b9c6fcac3b9daed2ec65a42bbf3c5349a2be77a441

[root@docker ~]# top # press 1 to show the usage of each core; the results show CPU 1 being occupied

top - 00:26:55 up 1 day, 9:35, 1 user, load average: 21.00, 20.45, 20.35

Tasks: 227 total, 22 running, 205 sleeping, 0 stopped, 0 zombie

%Cpu0 :100.0 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu1 : 99.7 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.3 si, 0.0 st

%Cpu2 : 99.7 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.3 si, 0.0 st

%Cpu3 : 99.3 us, 0.7 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 1863248 total, 173904 free, 526716 used, 1162628 buff/cache

KiB Swap: 2097148 total, 2066940 free, 30208 used. 916000 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

115802 root 20 0 7312 100 0 R 98.3 0.0 1:19.82 stress

110952 root 20 0 7312 96 0 R 24.5 0.0 28:49.24 stress

110954 root 20 0 7312 96 0 R 22.2 0.0 28:41.85 stress

110953 root 20 0 7312 96 0 R 21.9 0.0 28:45.36 stress

110955 root 20 0 7312 96 0 R 21.5 0.0 28:37.50 stress

110960 root 20 0 7312 96 0 R 20.2 0.0 28:23.86 stress

[root@docker ~]# docker run -tid --name cpu3 --cpuset-cpus 3 --cpu-shares 1024 centos:stress stress -c 1

d29fbd4dce3f265288e6f7b239783dbeed527dde7857533d2f660342a9339c58

[root@docker ~]# top # press 1 to show the usage of each core; this container is pinned to CPU 3

top - 00:28:09 up 1 day, 9:36, 1 user, load average: 21.72, 20.83, 20.49

Tasks: 239 total, 25 running, 214 sleeping, 0 stopped, 0 zombie

%Cpu0 :100.0 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu1 : 98.9 us, 1.1 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu2 : 98.9 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 1.1 si, 0.0 st

%Cpu3 :100.0 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 1863248 total, 157524 free, 540004 used, 1165720 buff/cache

KiB Swap: 2097148 total, 2066940 free, 30208 used. 901428 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

115952 root 20 0 7312 100 0 R 98.9 0.0 0:25.67 stress

115802 root 20 0 7312 100 0 R 69.9 0.0 2:24.27 stress

110961 root 20 0 7312 96 0 R 19.4 0.0 28:50.42 stress

110953 root 20 0 7312 96 0 R 18.3 0.0 29:00.07 stress

110960 root 20 0 7312 96 0 R 18.3 0.0 28:38.13 stress

110956 root 20 0 7312 96 0 R 17.2 0.0 28:55.38 stress

The containers above run on different CPU cores.

The next container is pinned to core 3 as well, so it shares that core with cpu3; check the resource usage with docker stats

[root@docker ~]# docker run -tid --name cpu0 --cpuset-cpus 3 --cpu-shares 512 centos:stress stress -c 1

f730cb70fcb93e2b8d94564cc4f517ea8417e6d8ff6e5cc8a2143f9725a0687a

[root@docker ~]# docker stats

6. Memory limit

As in an operating system, the memory available to a container consists of two parts: physical memory and swap.

Docker controls container memory usage through the following two parameters.

- -m or --memory: sets the memory usage limit, for example 100M or 1024M

- --memory-swap: sets the limit on memory plus swap

Run the following command to allow the container to use up to 200M of memory and 300M of memory plus swap in total, that is, up to 100M of swap

[root@docker ~]# docker run -it -m 200M --memory-swap=300M centos:stress

[root@22133b8ffdd2 /]#

By default, the container can use all the free memory on the host.

As with the CPU cgroup configuration, Docker automatically creates the corresponding cgroup configuration files for the container in the directory /sys/fs/cgroup/memory/docker/<container's full-length ID>
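On a cgroup v1 host the limits in that directory are stored in bytes, in memory.limit_in_bytes for memory and memory.memsw.limit_in_bytes for memory plus swap. The sketch below converts the values from the -m 200M --memory-swap=300M example into bytes and derives the swap that remains; to_bytes is a hypothetical helper that handles only k, m, and g suffixes.

```shell
#!/bin/sh
# to_bytes is a hypothetical helper converting a size such as 200M into bytes,
# the unit used by the cgroup v1 memory files. Only k, m and g suffixes
# (either case) are handled in this sketch.
to_bytes() {
  num=${1%[kKmMgG]}
  case $1 in
    *[kK]) echo $((num * 1024)) ;;
    *[mM]) echo $((num * 1024 * 1024)) ;;
    *[gG]) echo $((num * 1024 * 1024 * 1024)) ;;
    *)     echo "$num" ;;
  esac
}

mem=$(to_bytes 200M)     # -m 200M
total=$(to_bytes 300M)   # --memory-swap=300M (memory + swap)
echo "memory limit: $mem bytes"
echo "memory+swap limit: $total bytes"
echo "swap available: $(( (total - mem) / 1024 / 1024 ))M"
```

The last line prints 100M, since --memory-swap counts memory and swap together.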

[root@docker ~]# docker stats

7. Block IO limits

By default, all containers read from and write to disk with equal priority. A container's block IO priority can be changed with the **--blkio-weight** parameter.

--blkio-weight is similar to --cpu-shares: it sets a relative weight, and the default is 500.

The goal of the following example is for container A to get twice the disk IO bandwidth of container B, which requires container A's weight to be twice container B's (for example 600 against 300). Note that the first command captured below sets only memory limits and no --blkio-weight, so that container keeps the default weight.

Open a separate terminal for each container

[root@docker ~]# docker run -it -m 200M --memory-swap=300M centos:stress

[root@c188853ba01b /]#

[root@docker ~]# docker run -it --name container_02 --blkio-weight 300 centos:stress

[root@74b5ce8fbaf9 /]#

8. BPS and IOPS limits

These control the actual disk IO.

bps is bytes per second, the amount of data read or written per second.

iops is IO per second, the number of IO operations per second.

The bps and iops of a container can be controlled with the following parameters:

- --device-read-bps: limits the rate (bps) at which a device is read

- --device-write-bps: limits the rate (bps) at which a device is written

- --device-read-iops: limits the read IOPS of a device

- --device-write-iops: limits the write IOPS of a device

(1) Limit the rate at which the container writes to /dev/sda to 5 MB/s

[root@docker ~]# docker run -it --device-write-bps /dev/sda:5MB centos:stress

[root@eeb1c7e144df /]# dd if=/dev/zero of=test bs=1M count=10 oflag=direct

10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 2.02323 s, 5.2 MB/s

(2) Limit the rate at which the container writes to /dev/sda to 10 MB/s

[root@docker ~]# docker run -it --device-write-bps /dev/sda:10MB centos:stress

[root@1215f1f87ddf /]# dd if=/dev/zero of=test bs=1M count=100 oflag=direct

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 9.96511 s, 10.5 MB/s

(3) Without a limit, writing to disk is significantly faster

[root@docker ~]# docker run -it centos:stress

[root@a238a2d8fe76 /]# dd if=/dev/zero of=test bs=1M count=100 oflag=direct

100+0 records in

100+0 records out

104857600 bytes (105 MB) copied, 0.586663 s, 179 MB/s
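The throttled runs can be sanity-checked with simple arithmetic: the expected duration is the amount of data divided by the write limit, with both taken in the same unit. The sketch below computes it for the two limited runs; expected_seconds is a hypothetical helper, and the measured times (about 2.02 s and 9.97 s) come from the dd output above.

```shell
#!/bin/sh
# expected_seconds is a hypothetical helper: time = data size / rate limit.
# usage: expected_seconds <size in MB> <limit in MB/s>
expected_seconds() {
  awk -v s="$1" -v r="$2" 'BEGIN { printf "%.1f\n", s / r }'
}

expected_seconds 10 5     # 10 x 1M blocks at 5 MB/s: prints 2.0
expected_seconds 100 10   # 100 x 1M blocks at 10 MB/s: prints 10.0
```

Both measured durations land within a few percent of these values, which indicates that the write limit is being enforced.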

9. Specifying resource limits when building an image

All of the limits above were set on the command line when creating containers. docker build also accepts resource options, which apply while the image is being built. Its options are:

- --build-arg=[]: set variables at image build time

- --cpu-shares: set the CPU usage weight

- --cpu-period: limit the CPU CFS period

- --cpu-quota: limit the CPU CFS quota

- --cpuset-cpus: specify the IDs of the CPUs to use

- --cpuset-mems: specify the IDs of the memory nodes to use

- --disable-content-trust: skip image verification; enabled by default

- -f: specify the path of the Dockerfile to use

- --force-rm: always remove intermediate containers during the build

- --isolation: specify the container isolation technology

- --label=[]: set metadata for the image

- -m: set the maximum memory

- --memory-swap: set the maximum of memory plus swap; "-1" means unlimited swap

- --no-cache: do not use the cache when building the image

- --pull: always try to pull a newer version of the base image

- --quiet, -q: quiet mode; print only the image ID on success

- --rm: remove intermediate containers after a successful build

- --shm-size: set the size of /dev/shm; the default is 64M

- --ulimit: ulimit options

- --squash: squash all layers produced by the Dockerfile into a single layer

- --tag, -t: the name and tag of the image, usually in name:tag or name format; several tags can be set for one image in a single build

- --network: default is "default"; sets the network mode for RUN instructions during the build

3. Summary

This article mainly covers setting up a private registry and cgroup resource limits.

Summary:

1. Main types of resource limits

(1) CPU: weight (shares), quota, cpuset

(2) Disk: BPS and IOPS limits, specifying which disk or partition they apply to

(3) Memory: -m for memory, --memory-swap for memory plus swap

2. Ways to apply resource limits

(1) When building an image, resource limits can be specified with docker build options

(2) When running an image as a container with docker run, the container's resource limits can be specified

(3) After the container has started, go to the container's directory under /sys/fs/cgroup/ on the host, modify the resource limits there, and reload