Brief introduction

pyspider is a powerful web crawler framework written in Python that supports a distributed architecture.

Why use Docker to build pyspider

When installing pyspider directly, you are likely to run into a few pitfalls. For example, pip install pyspider requires Python 3.6 or earlier, because async became a reserved keyword in Python 3.7.

If you instead clone the repository with git and install it with python3 setup.py install, you may hit SSL certificate problems at runtime. In short, version compatibility problems are likely either way.
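The keyword conflict can be checked from the interpreter itself. The following is only a small sketch (the helper name can_use_async_as_name is hypothetical and not part of pyspider): it reports whether the running Python still accepts async as an ordinary identifier, which code written before 3.7 could rely on.

```python
import keyword
import sys


def can_use_async_as_name() -> bool:
    """Return True if this interpreter still accepts `async` as an identifier.

    Hypothetical helper for illustration only; it is not part of pyspider.
    """
    try:
        # Compile (without running) code that uses `async` as a parameter name.
        compile("def fetch(url, async=True): pass", "<check>", "exec")
    except SyntaxError:
        # Python 3.7+ rejects the snippet because `async` is reserved.
        return False
    return True


if __name__ == "__main__":
    version = ".".join(str(part) for part in sys.version_info[:3])
    print(f"Python {version}")
    print(f"async is a reserved keyword: {keyword.iskeyword('async')}")
    print(f"async usable as a name: {can_use_async_as_name()}")
```

If the second line printed is True, a pip install of an affected pyspider release is likely to fail at import time on that interpreter, which is one reason to use the prebuilt Docker image instead.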

Using Docker to deploy pyspider

- Installing Docker itself is not covered here;

- Let's go straight to the point.

docker network create --driver bridge pyspider

mkdir -p /volume1/docker/Pyspider/mysql/{conf,logs,data}/ /volume1/docker/Pyspider/redis/

docker run --network=pyspider --name redis -d -v /volume1/docker/Pyspider/redis:/data -p 6379:6379 redis

docker run --network pyspider -p 33060:3306 --name pymysql -v /volume1/docker/Pyspider/mysql/conf/my.cnf:/etc/mysql/my.cnf -v /volume1/docker/Pyspider/mysql/logs:/logs -v /volume1/docker/Pyspider/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root123 -d mysql

docker run --network=pyspider --name scheduler -d -p 23333:23333 --restart=always binux/pyspider --taskdb "mysql+taskdb://pyspider:py1234@192.168.2.4:33060/taskdb" --resultdb "mysql+resultdb://pyspider:py1234@192.168.2.4:33060/resultdb" --projectdb "mysql+projectdb://pyspider:py1234@192.168.2.4:33060/projectdb" --message-queue "redis://redis:6379/0" scheduler --inqueue-limit 10000 --delete-time 3600
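The three database options follow one URL pattern, engine+type://user:password@host:port/database, where the type after the plus sign should match the option it is passed to. Because these long strings are easy to mistype, here is a minimal sketch that generates them. The function names build_db_url and db_options are hypothetical helpers, and the sketch assumes each database is named after its type, as in this article.

```python
from typing import List

# The three pyspider databases used in this article. Assumption: each database
# is named after its type (taskdb, projectdb, resultdb).
DB_TYPES = ("taskdb", "projectdb", "resultdb")


def build_db_url(db_type: str, user: str, password: str,
                 host: str, port: int, engine: str = "mysql") -> str:
    """Build one connection URL in the engine+type://... form."""
    if db_type not in DB_TYPES:
        raise ValueError(f"unknown database type: {db_type!r}")
    return f"{engine}+{db_type}://{user}:{password}@{host}:{port}/{db_type}"


def db_options(user: str, password: str, host: str, port: int) -> List[str]:
    """Return the --taskdb/--projectdb/--resultdb options as an argument list."""
    options: List[str] = []
    for db_type in DB_TYPES:
        options += [f"--{db_type}",
                    build_db_url(db_type, user, password, host, port)]
    return options


if __name__ == "__main__":
    # Values taken from the commands in this article.
    for item in db_options("pyspider", "py1234", "192.168.2.4", 33060):
        print(item)
```

Generating the options from one place keeps the host, port, and credentials identical across the scheduler, processor, result worker, and web UI commands.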

Deploying with docker-compose

- docker-compose.yml

version: '2'
services:
  phantomjs:
    image: 'binux/pyspider:latest'
    command: phantomjs
    cpu_shares: 256
    environment:
      - 'EXCLUDE_PORTS=5000,23333,24444'
    expose:
      - '25555' # Expose port 25555 to the containers linked to this service
    mem_limit: 256m
    restart: always

  phantomjs-lb:
    image: 'dockercloud/haproxy:latest' # haproxy provides load balancing
    links:
      - phantomjs
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock # With Compose file format v2, haproxy needs the Docker socket mounted (on macOS)
    restart: always

  fetcher:
    image: 'binux/pyspider:latest'
    command: '--message-queue "redis://redis:6379/0" --phantomjs-proxy "phantomjs:80" fetcher --xmlrpc' # fetcher starts in RPC mode
    cpu_shares: 256
    environment:
      - 'EXCLUDE_PORTS=5000,25555,23333'
    links:
      - 'phantomjs-lb:phantomjs'
    mem_limit: 256m
    restart: always

  fetcher-lb:
    image: 'dockercloud/haproxy:latest' # haproxy provides load balancing
    links:
      - fetcher
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock # With Compose file format v2, haproxy needs the Docker socket mounted (on macOS)
    restart: always

  processor:
    image: 'binux/pyspider:latest'
    command: '--projectdb "mysql+projectdb://pyspider:py1234@192.168.2.4:33060/projectdb" --message-queue "redis://redis:6379/0" processor'
    cpu_shares: 256
    mem_limit: 256m
    restart: always

  result-worker:
    image: 'binux/pyspider:latest'
    command: '--taskdb "mysql+taskdb://pyspider:py1234@192.168.2.4:33060/taskdb" --projectdb "mysql+projectdb://pyspider:py1234@192.168.2.4:33060/projectdb" --resultdb "mysql+resultdb://pyspider:py1234@192.168.2.4:33060/resultdb" --message-queue "redis://redis:6379/0" result_worker'
    cpu_shares: 256
    mem_limit: 256m
    restart: always

  webui:
    image: 'binux/pyspider:latest'
    command: '--taskdb "mysql+taskdb://pyspider:py1234@192.168.2.4:33060/taskdb" --projectdb "mysql+projectdb://pyspider:py1234@192.168.2.4:33060/projectdb" --resultdb "mysql+resultdb://pyspider:py1234@192.168.2.4:33060/resultdb" --message-queue "redis://redis:6379/0" webui --max-rate 3 --max-burst 6 --scheduler-rpc "http://scheduler:23333/" --fetcher-rpc "http://fetcher/"'
    cpu_shares: 256
    environment:
      - 'EXCLUDE_PORTS=24444,25555,23333'
    ports:
      - '15000:5000' # webui listens on 5000 inside the container and is published on host port 15000, so it is reachable at http://localhost:15000
    links:
      - 'fetcher-lb:fetcher' # Link to the haproxy load balancer in front of the fetchers
    mem_limit: 256m
    restart: always

  webui-lb:
    image: 'dockercloud/haproxy:latest'
    links:
      - webui
    restart: always

  nginx:
    image: 'nginx'
    links:
      - 'webui-lb:HAPROXY'
    ports:
      - '5080:80'
    volumes:
      - /volume1/docker/Pyspider/nginx/nginx.conf:/etc/nginx/nginx.conf
      - /volume1/docker/Pyspider/nginx/conf.d/:/etc/nginx/conf.d/
      - /volume1/docker/Pyspider/nginx/sites-enabled/:/etc/nginx/sites-enabled/
    restart: always

networks:
  default:
    external:
      name: pyspider # Use the existing pyspider network as the default network, so these services can reach the containers created with docker run

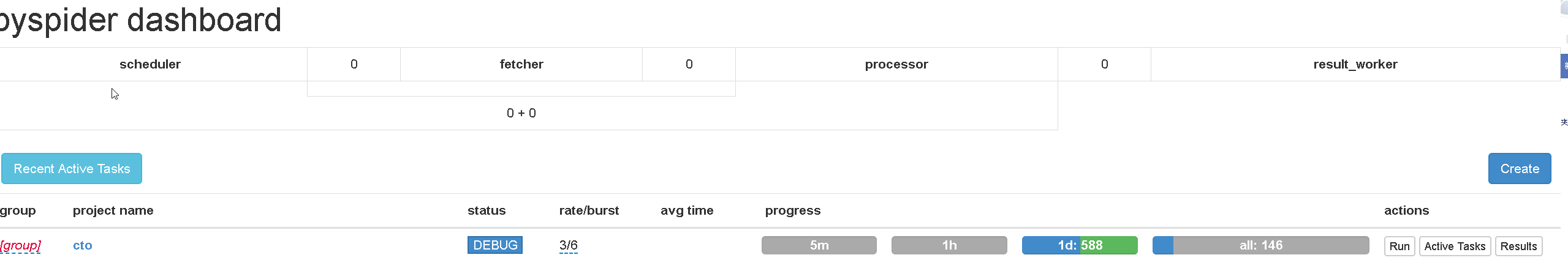

Access URL:

http://ip:15000 - web UI

If you want more phantomjs, processor, or result-worker container instances, you can run: docker-compose scale phantomjs=2 processor=4 result-worker=2. docker-compose will then create 2 phantomjs containers, 4 processor containers, and 2 result-worker containers for you, and haproxy will automatically load-balance across the instances of the services it fronts.
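If you change the instance counts often, the scale command can be generated from a small mapping instead of typed by hand. This is only a sketch: build_scale_command is a hypothetical helper, and the service names are assumed to match the keys in docker-compose.yml.

```python
from typing import Dict, List


def build_scale_command(replicas: Dict[str, int]) -> List[str]:
    """Build a `docker-compose scale` argument list from service -> count.

    Hypothetical helper for illustration; service names must match the
    service keys defined in docker-compose.yml.
    """
    if not replicas:
        raise ValueError("at least one service is required")
    command = ["docker-compose", "scale"]
    for service, count in replicas.items():
        if count < 0:
            raise ValueError(f"negative instance count for {service!r}")
        command.append(f"{service}={count}")
    return command


if __name__ == "__main__":
    command = build_scale_command(
        {"phantomjs": 2, "processor": 4, "result-worker": 2}
    )
    # Print the command; to actually run it, pass the list to
    # subprocess.run(command, check=True) from the directory that
    # contains docker-compose.yml.
    print(" ".join(command))
```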