# Docker networking: communication between containers on one host and across hosts

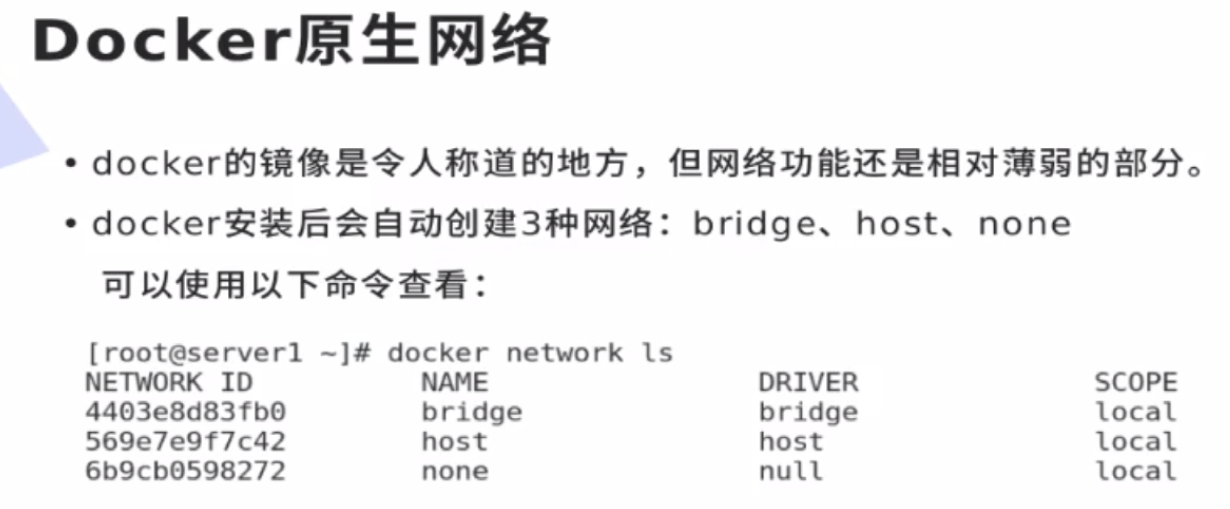

## Docker native networks

Delete unused networks. A `prune` subcommand is available for most Docker object types (containers, images, volumes and networks).

[root@docker1 harbor]# docker network prune

Are you sure you want to continue? [y/N] y

Docker's native networks are bridge, host and none (driver `null`). Containers use bridge by default.

[root@docker1 harbor]# docker network ls

NETWORK ID NAME DRIVER SCOPE

8db80f123dd8 bridge bridge local

b51b022f8430 host host local

c386dbae12f0 none null local

### How containers communicate

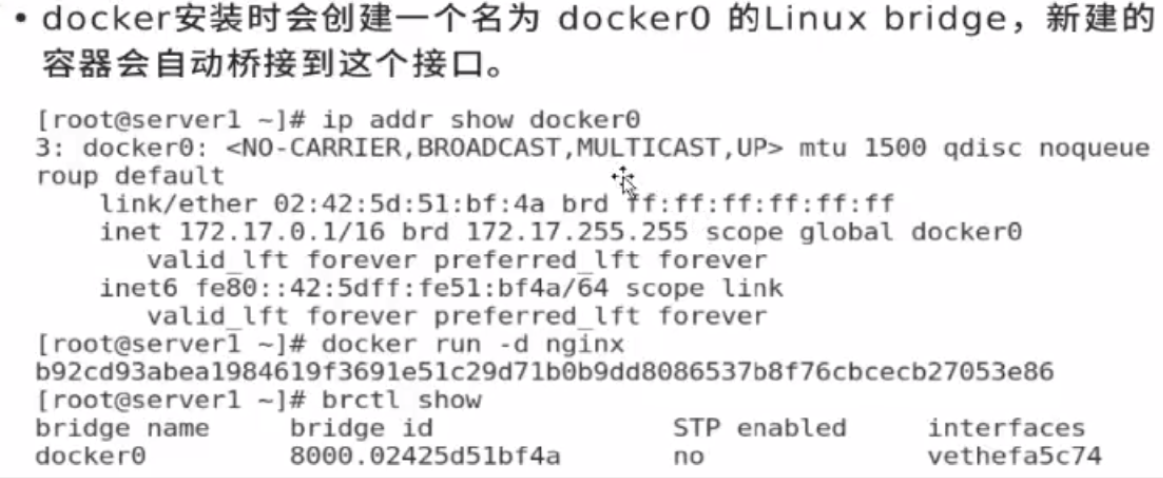

### Bridge mode

docker0 is the gateway for all containers on this host's default bridge. By default, addresses are allocated in increasing order: the first container gets 172.17.0.2 and the second gets 172.17.0.3.

When a container stops, its IP address is released and can be given to another container. A container's IP address is therefore dynamic and may change after a restart.
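The allocation order described above can be modelled with a little IPv4 arithmetic. The following POSIX shell sketch assumes strictly sequential allocation after the gateway. The helper names (`ip_to_int`, `int_to_ip`, `container_ips`) are hypothetical and not part of Docker. Docker's real allocator must also skip addresses that are still in use.

```sh
#!/bin/sh
# Simplified model (assumption): the gateway (docker0) takes network+1 and
# containers take network+2, network+3, ... in start order.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "172.17.0.0" into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the first $2 container addresses of the network whose address is $1.
container_ips() {
  local base i
  base=$(ip_to_int "$1")
  i=0
  while [ "$i" -lt "$2" ]; do
    int_to_ip $(( base + 2 + i ))   # +1 is the gateway, containers start at +2
    i=$(( i + 1 ))
  done
}

container_ips 172.17.0.0 2
```

For 172.17.0.0 this yields 172.17.0.2 and 172.17.0.3, matching the two nginx containers below.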

First, install bridge-utils, which provides `brctl` for managing bridge interfaces. If a bridge interface is lost, you can re-add it with this tool.

[root@docker1 harbor]# yum install bridge-utils.x86_64 -y

[root@docker1 harbor]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242b9a15b22 no

Start a container. Its virtual interface, veth7c468c6, appears on docker0. The container is bridged: one end of the veth pair is inside the container's network namespace and the other end is attached to docker0.

[root@docker1 harbor]# docker run -d --name nginx nginx

[root@docker1 harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f28a0d105547 nginx "/docker-entrypoint...." 3 seconds ago Up 2 seconds 80/tcp nginx

[root@docker1 harbor]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242b9a15b22 no veth7c468c6

On the host, the veth end of the pair has interface index 40. The suffix `@if39` names its peer, interface 39, which is the container's eth0. Together the two ends form one virtual network cable:

[root@docker1 harbor]# ip addr show

40: veth7c468c6@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether aa:23:2b:fe:e9:94 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::a823:2bff:fefe:e994/64 scope link

valid_lft forever preferred_lft forever
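The `NAME@ifN` notation can be read mechanically. This hypothetical helper splits such a header line into three parts: the interface name, its own index and its peer's index. Inside the container, the same pair should appear the other way round, as `39: eth0@if40`.

```sh
#!/bin/sh
# Split an `ip addr` header line such as
#   40: veth7c468c6@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 ...
# into: interface name, its own index, and the peer index after "@if".
parse_veth_line() {
  local idx rest
  idx=${1%%:*}          # text before the first colon: own index ("40")
  rest=${1#*: }         # drop the leading "40: "
  rest=${rest%%:*}      # keep "veth7c468c6@if39"
  echo "${rest%@if*} $idx ${rest#*@if}"
}

parse_veth_line '40: veth7c468c6@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500'
```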

[root@docker1 harbor]# docker run -d --name nginx2 nginx

f640de1d58fe3628540618fc03aa8f4fe4259c55fec6d22eb41b741b94b6ae24

[root@docker1 harbor]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242b9a15b22 no veth5af9c0d

veth7c468c6

Addresses are taken in increasing order from docker0's subnet:

[root@docker1 harbor]# ip addr show docker0

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

Both containers can therefore be reached from the host:

[root@docker1 harbor]# curl 172.17.0.2

<title>Welcome to nginx!</title>

[root@docker1 harbor]# curl 172.17.0.3

<h1>Welcome to nginx!</h1>

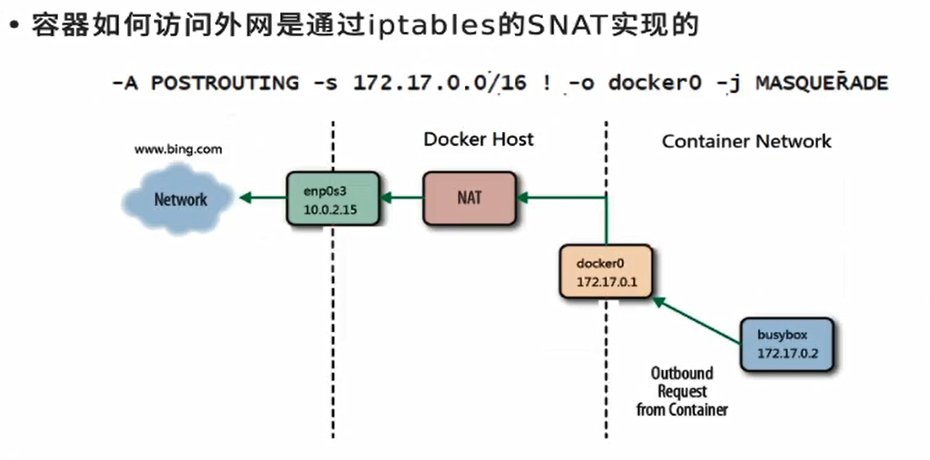

Containers reach the external network through NAT: Docker enables MASQUERADE for the bridge subnet.

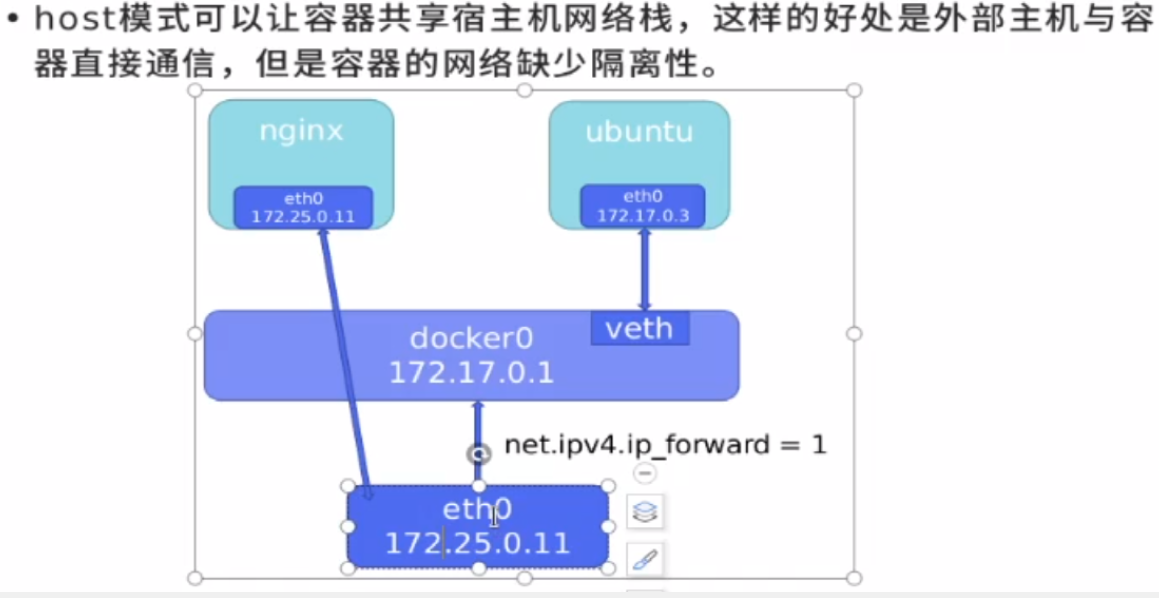

The container is bridged to docker0 through its veth pair, and docker0 traffic leaves through eth0. This requires kernel IP forwarding (net.ipv4.ip_forward = 1).

[root@docker1 harbor]# iptables -t nat -nL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0
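The MASQUERADE rule above applies to packets whose source address lies in 172.17.0.0/16. Below is a minimal sketch of that membership test; the helper names are hypothetical. The real rule also carries an output-interface condition, which `-nL` does not display without `-v`.

```sh
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit status 0) when IPv4 address $1 lies inside CIDR block $2.
in_cidr() {
  local addr net bits mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  # Prefix length -> netmask, e.g. 16 -> 0xFFFF0000
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

for src in 172.17.0.2 172.25.254.1; do
  if in_cidr "$src" 172.17.0.0/16; then
    echo "$src: masqueraded"
  else
    echo "$src: not matched by this rule"
  fi
done
```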

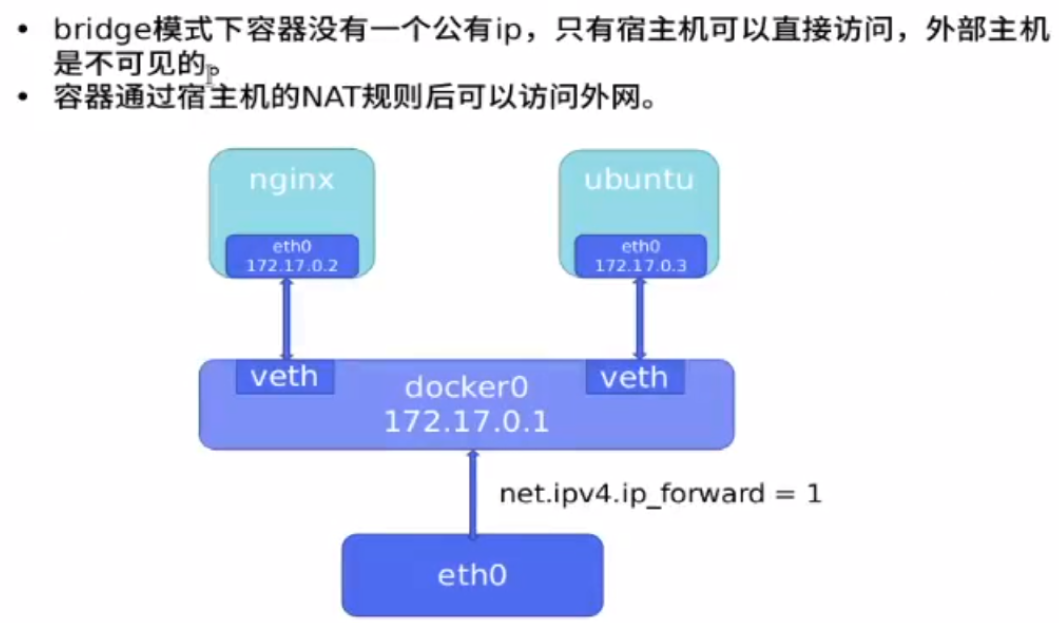

### Host mode: sharing the host's network

Select it with the `--network host` option:

[root@docker1 harbor]# docker run -d --name demo --network host nginx

No veth pair is created for a host-mode container: `brctl show` below still lists only the veth of the earlier nginx container. The container uses the host's network stack directly. It therefore shares the host's IP address (which may be public) and can be reached from outside without port mapping. The catch is port conflicts, both between containers and between a container and the host.

[root@docker1 harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1b9e64aaa49a nginx "/docker-entrypoint...." About a minute ago Up About a minute demo

f28a0d105547 nginx "/docker-entrypoint...." 14 minutes ago Up 14 minutes 80/tcp nginx

[root@docker1 harbor]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242b9a15b22 no veth7c468c6

With host networking, nginx answers on the host's own address:

[root@docker1 harbor]# curl 172.25.254.1

<title>Welcome to nginx!</title>

A second host-mode nginx cannot start, because port 80 is already in use:

[root@docker1 harbor]# docker run -d --name demo2 --network host nginx

[root@docker1 harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1b9e64aaa49a nginx "/docker-entrypoint...." 9 minutes ago Up 9 minutes demo

f28a0d105547 nginx "/docker-entrypoint...." 21 minutes ago Up 21 minutes 80/tcp nginx

[root@docker1 harbor]# docker logs demo2

nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)
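A host-mode container binds directly to the host's ports, so it can help to check whether a port is already taken before starting one. This sketch assumes `netstat -antl`-style input (the format shown later in this article); `port_listening` is a hypothetical name.

```sh
#!/bin/sh
# Succeed if `netstat -antl`-style lines on stdin contain a LISTEN socket
# whose local address ends in ":$1" (matches both 0.0.0.0:80 and :::80).
port_listening() {
  awk -v p="$1" '
    $6 == "LISTEN" {
      n = split($4, part, ":")      # last ":"-separated field is the port
      if (part[n] == p) found = 1
    }
    END { exit (found ? 0 : 1) }'
}

# On a real host: netstat -antl | port_listening 80 && echo "port 80 is taken"
printf 'tcp6 0 0 :::80 :::* LISTEN 7569/docker-proxy\n' | port_listening 80 && echo "port 80 is taken"
```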

Host mode needs no NAT, but port conflicts must be avoided.

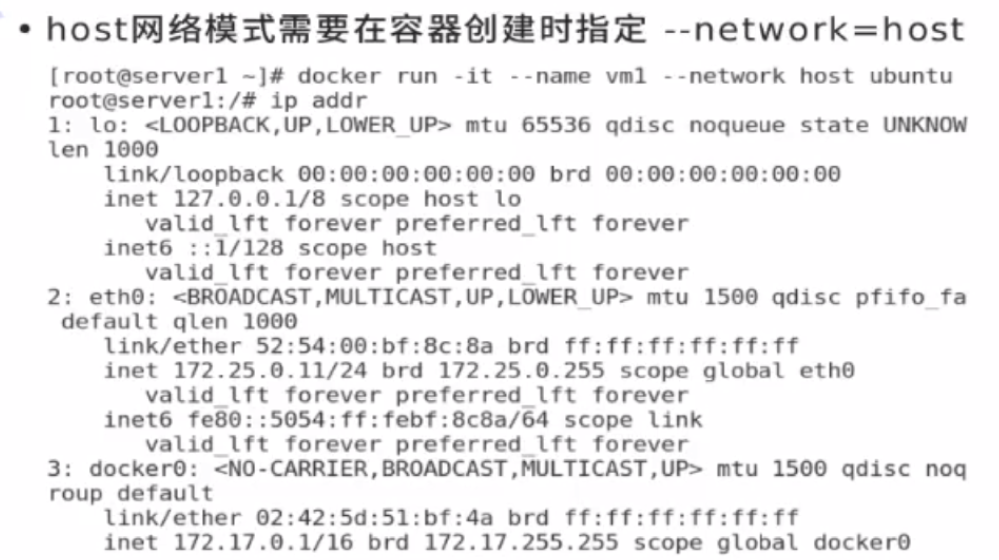

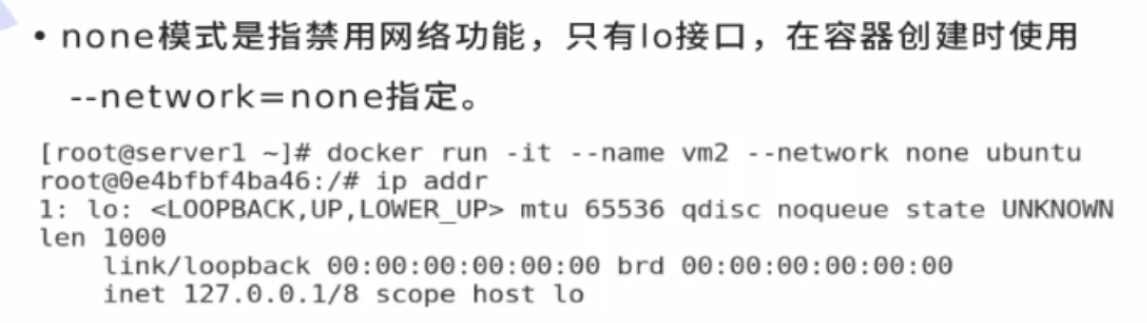

### None mode

This mode disables networking: the container gets no IP address, only the loopback interface.

[root@docker1 harbor]# docker run -d --name demo2 --network none nginx

[root@docker1 harbor]# docker run -it --rm --network none busybox

/ # ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

Some containers hold sensitive data, such as password hashes, and need no network at all.

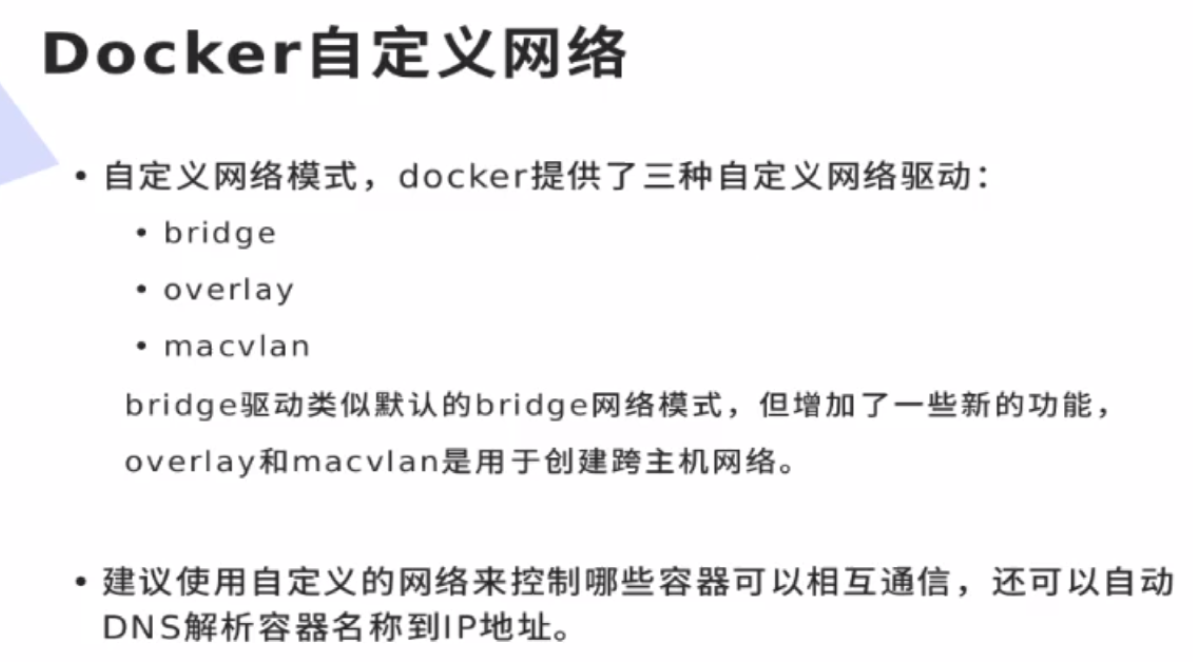

## Docker custom networks

Among the custom network drivers, overlay is common at Internet companies. The three major telecom carriers often use macvlan, which handles cross-host communication at the underlying hardware level.

On a custom bridge you can give a container a fixed IP yourself. Otherwise the bridge assigns one automatically, and it may differ after a restart: a container that had IP 1 might come back with IP 2.

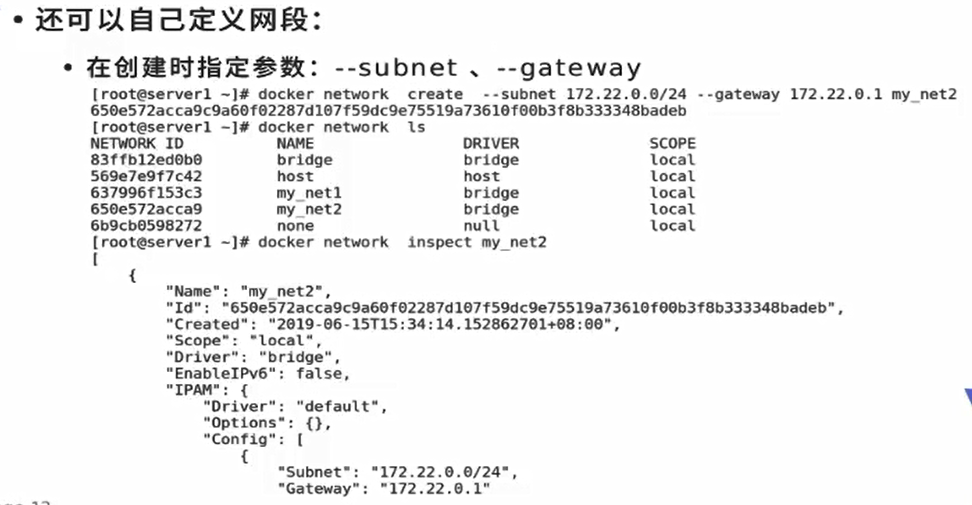

A custom bridge also lets you choose the network segment (subnet) and the gateway address.

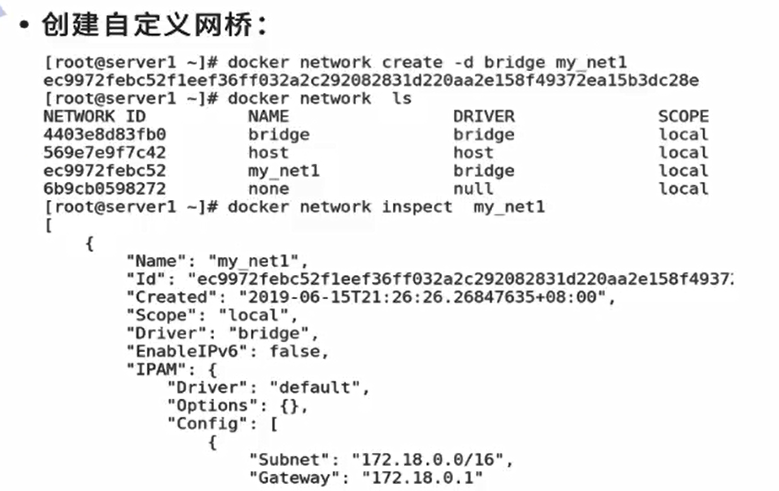

Create a custom network. Unless you specify a driver, a custom network uses bridge:

[root@docker1 harbor]# docker network --help

[root@docker1 harbor]# docker network create my_net1

[root@docker1 harbor]# docker network ls

NETWORK ID NAME DRIVER SCOPE

8db80f123dd8 bridge bridge local

b51b022f8430 host host local

18fb73b3c2f5 my_net1 bridge local

c386dbae12f0 none null local

Harbor (the registry set up earlier) also runs on networks it creates for itself. User-defined networks automatically resolve container names to IP addresses through DNS, so prefer a self-created network over the default bridge.

Without a network option, a container attaches to the default bridge, docker0:

[root@docker1 harbor]# docker run -d --name web1 nginx

[root@docker1 harbor]# brctl show

bridge name bridge id STP enabled interfaces

br-18fb73b3c2f5 8000.024231c9d512 no

docker0 8000.0242b9a15b22 no veth2e47b03

[root@docker1 harbor]# docker run -d --name web2 --network my_net1 nginx

[root@docker1 harbor]# brctl show

bridge name bridge id STP enabled interfaces

br-18fb73b3c2f5 8000.024231c9d512 no veth231ba38

docker0 8000.0242b9a15b22 no veth2e47b03

A container on the default bridge gets no name resolution; only IP addresses work:

[root@docker1 harbor]# docker run -it --rm busybox

/ # ping web1

ping: bad address 'web1'

/ # ping 172.17.0.2

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.059 ms

/ # ping web2

ping: bad address 'web2'

Features of a custom bridge:

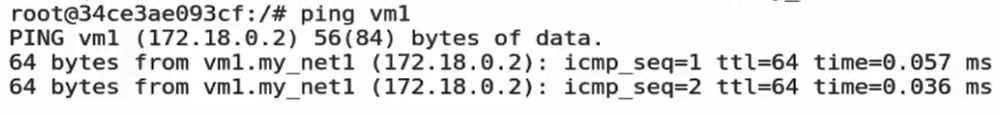

Containers on the same custom bridge communicate by name through DNS resolution:

[root@docker1 harbor]# docker run -it --rm --network my_net1 busybox

/ # ping web2

64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.120 ms

/ # ping web1

ping: bad address 'web1'

`ping web1` fails because web1 is attached to docker0, whose subnet (172.17.0.0/16) differs from my_net1's (172.18.0.0/16):

[root@docker1 harbor]# ip addr show

43: br-18fb73b3c2f5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:31:c9:d5:12 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-18fb73b3c2f5

valid_lft forever preferred_lft forever

inet6 fe80::42:31ff:fec9:d512/64 scope link

valid_lft forever preferred_lft forever

5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

Container IP addresses are dynamic, so communicating by IP is unreliable; use container names instead. In the session below, web2 is stopped, web3 is started, and web2 is started again. web2 now has a different IP (172.18.0.3), yet `ping web2` still resolves correctly:

[root@docker1 harbor]# docker stop web2

[root@docker1 harbor]# docker run -d --name web3 --network my_net1 nginx

[root@docker1 harbor]# docker start web2

[root@docker1 harbor]# docker run -it --rm --network my_net1 busybox

/ # ping web2

64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.051 ms

[root@docker1 harbor]# docker network create --help

[root@docker1 harbor]# docker network create --subnet 172.20.0.0/24 --gateway 172.20.0.1 my_net2

3043c2a41e35d3a32a7a649fcaa3b4b0b2d3700121751b9a63415b6d31308bae

[root@docker1 harbor]# docker network ls

NETWORK ID NAME DRIVER SCOPE

8db80f123dd8 bridge bridge local

b51b022f8430 host host local

18fb73b3c2f5 my_net1 bridge local

3043c2a41e35 my_net2 bridge local

c386dbae12f0 none null local

A bridge interface is created for the new network:

[root@docker1 harbor]# ip addr show

58: br-3043c2a41e35: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:4f:90:95:90 brd ff:ff:ff:ff:ff:ff

inet 172.20.0.1/24 brd 172.20.0.255 scope global br-3043c2a41e35

valid_lft forever preferred_lft forever
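A network's `brd` value and capacity follow from its prefix length. This small sketch (hypothetical helper names) reproduces the figures above. For my_net2, 172.20.0.0/24 has 254 usable addresses; the gateway takes 172.20.0.1, which leaves 253 for containers. The sketch does not handle /31 or /32 prefixes.

```sh
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Summarise a CIDR block: network address, broadcast address and the number
# of usable host addresses (block size minus network and broadcast).
subnet_info() {
  local net bits size
  net=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  net=$(( net & ~(size - 1) & 0xFFFFFFFF ))   # clear the host bits
  echo "network=$(int_to_ip "$net") broadcast=$(int_to_ip $(( net + size - 1 ))) hosts=$(( size - 2 ))"
}

subnet_info 172.20.0.0/24
subnet_info 172.17.0.0/16
```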

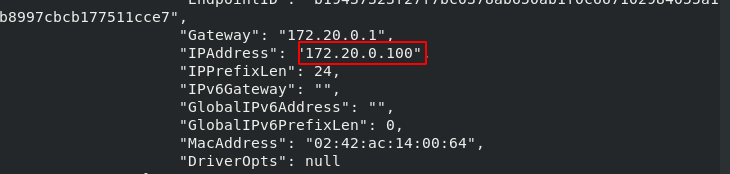

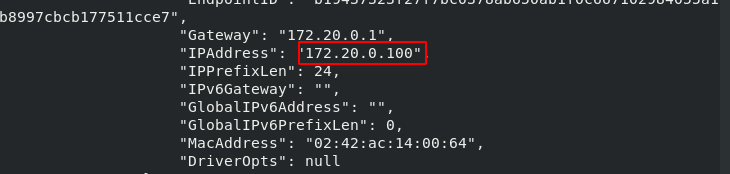

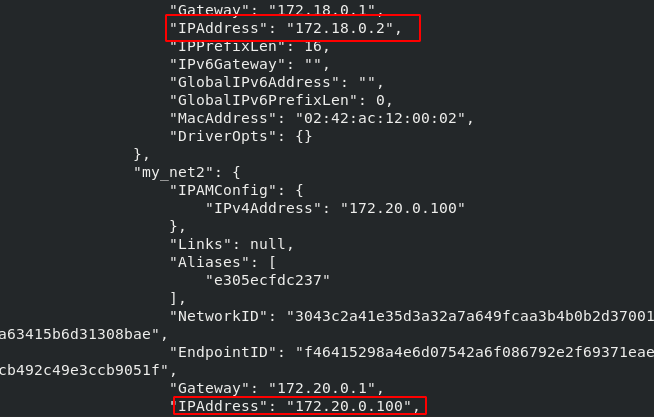

[root@docker1 harbor]# docker run -d --name web4 --network my_net2 --ip 172.20.0.100 nginx

[root@docker1 harbor]# docker inspect web4

[root@docker1 harbor]# docker stop web4

[root@docker1 harbor]# docker start web4

[root@docker1 harbor]# docker inspect web4

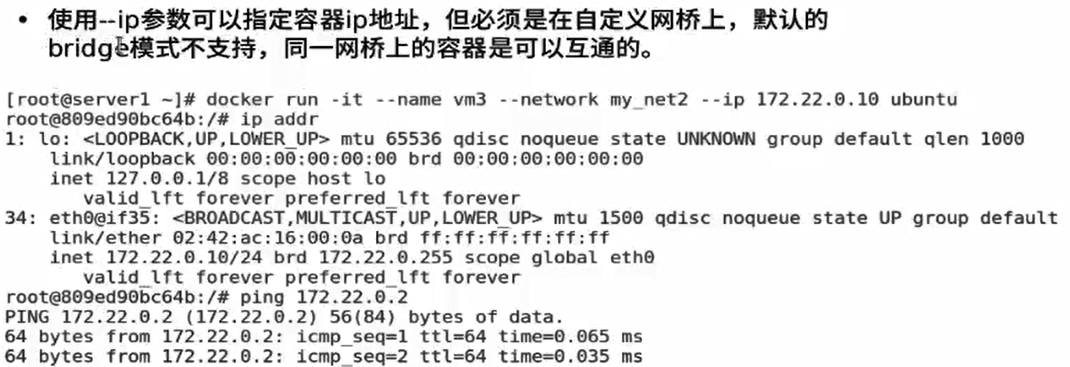

`--ip` can be used only on a network whose subnet was specified by the user.

my_net1 was created without a custom subnet, so the request is rejected:

[root@docker1 harbor]# docker run -d --name web5 --network my_net1 --ip 172.18.0.100 nginx

docker: Error response from daemon: user specified IP address is supported only when connecting to networks with user configured subnets.
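When picking an address for `--ip`, a few sanity checks follow from the subnet definition. This sketch encodes them under our own assumptions. It is not Docker's validation code, and it does not know which addresses other containers already hold. `valid_static_ip` is a hypothetical name.

```sh
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if $1 is usable as a fixed container IP in subnet $2 with gateway $3:
# inside the subnet, not the network or broadcast address, not the gateway.
valid_static_ip() {
  local ip bits mask net bcast
  ip=$(ip_to_int "$1")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  net=$(( $(ip_to_int "${2%/*}") & mask ))
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))
  [ $(( ip & mask )) -eq "$net" ] || return 1   # outside the subnet
  [ "$ip" -ne "$net" ] || return 1              # network address
  [ "$ip" -ne "$bcast" ] || return 1            # broadcast address
  [ "$1" != "$3" ]                              # the bridge owns the gateway
}

valid_static_ip 172.20.0.100 172.20.0.0/24 172.20.0.1 && echo "172.20.0.100 is usable on my_net2"
```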

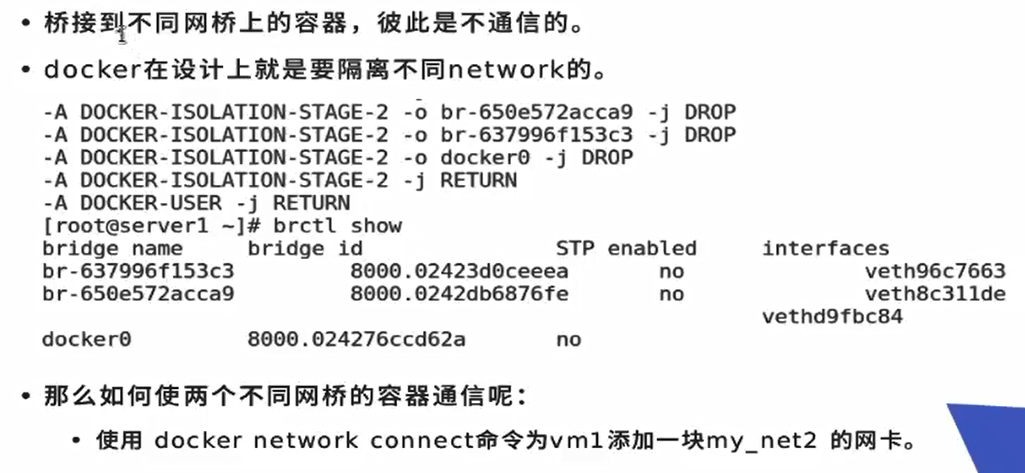

For security, Docker isolates different networks from one another: its iptables rules DROP traffic between them.

Do not edit these rules to let containers on different bridges communicate; doing so breaks the isolation.

[root@docker1 ~]# iptables -L

Chain DOCKER-ISOLATION-STAGE-2 (3 references)

target prot opt source destination

DROP all -- anywhere anywhere

DROP all -- anywhere anywhere

DROP all -- anywhere anywhere

RETURN all -- anywhere anywhere

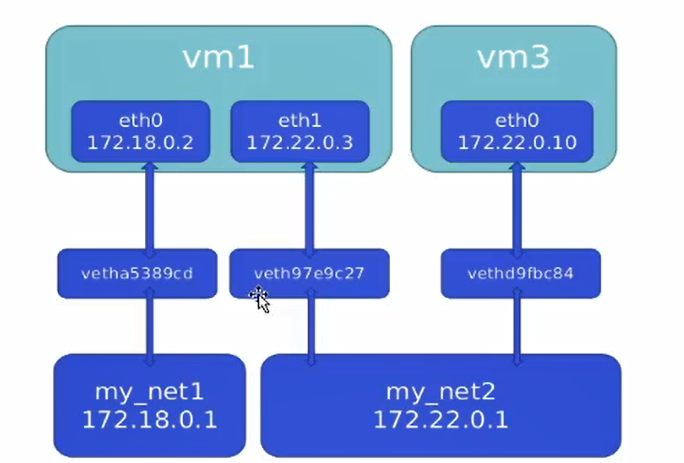

web3 is on my_net1 and web4 is on my_net2. To let them communicate, attach web4 to my_net1 as well; it gains an additional interface on that network:

[root@docker1 ~]# docker network connect my_net1 web4

[root@docker1 ~]# docker inspect web3
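Put simply, two containers can exchange traffic directly only if they share at least one network; the DROP rules above block the rest. Below is a minimal sketch of that rule with a hypothetical function name. It ignores published ports and host-mode containers.

```sh
#!/bin/sh
# Succeed if two space-separated lists of network names have one in common.
share_network() {
  local a b
  for a in $1; do
    for b in $2; do
      [ "$a" = "$b" ] && return 0
    done
  done
  return 1
}

# Real network lists could be collected with, for example:
#   docker inspect -f '{{range $k, $v := .NetworkSettings.Networks}}{{$k}} {{end}}' web4
share_network "my_net1" "my_net2" || echo "before connect: isolated"
share_network "my_net1" "my_net2 my_net1" && echo "after connect: shared network my_net1"
```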

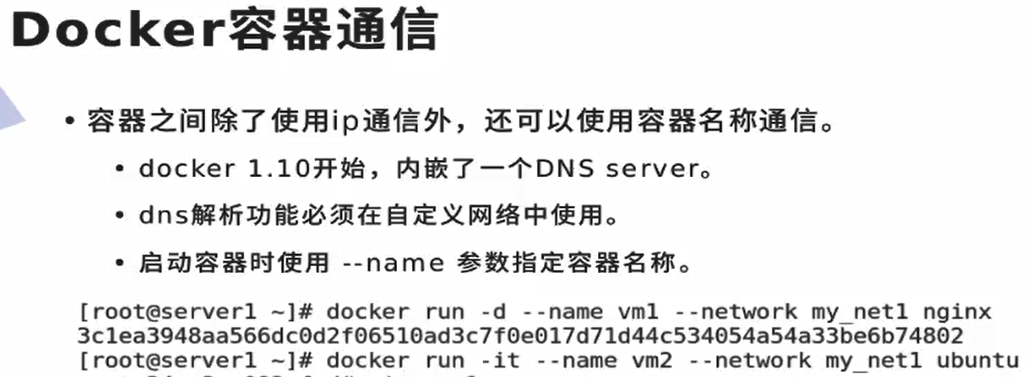

## Docker container communication

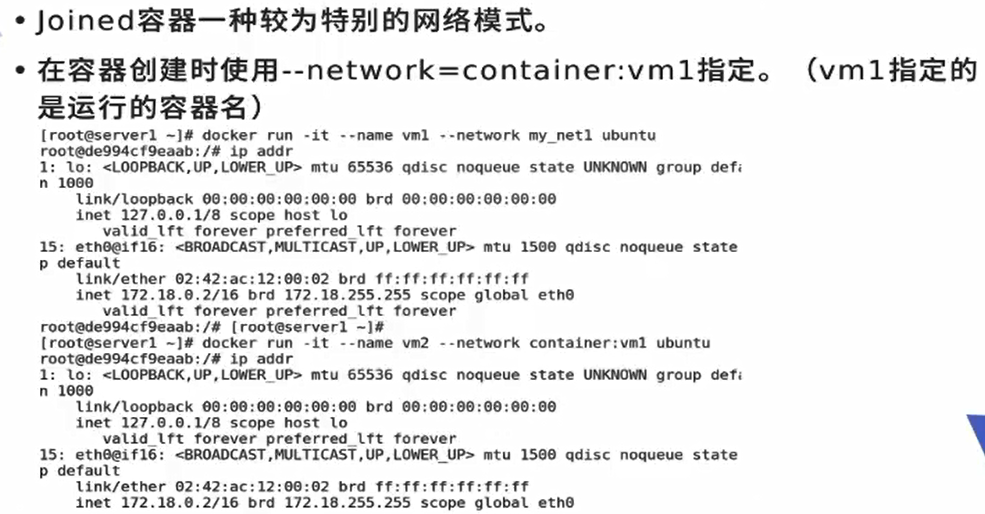

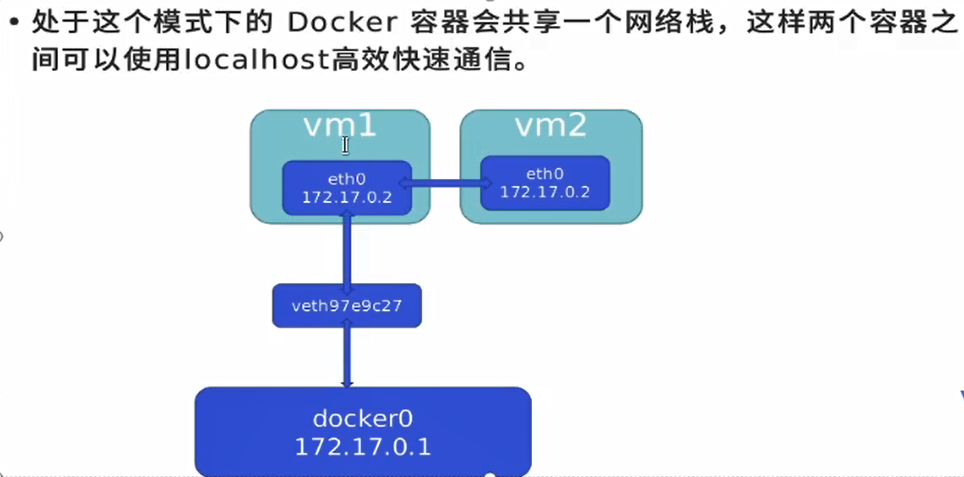

### Joined container

A joined container shares the network namespace of another container, so the two can communicate quickly over localhost.

For example, in a LAMP-style stack, nginx, MySQL and PHP can run in separate containers yet reach each other via localhost on different ports: MySQL on 3306, PHP on 9000 and nginx on 80.

web3 is on my_net1:

[root@docker1 ~]# docker inspect web3

"Gateway": "172.18.0.1",

Start a container that shares web3's network, and therefore its IP address:

[root@docker1 ~]# docker pull radial/busyboxplus

[root@docker1 ~]# docker tag radial/busyboxplus:latest busyboxplus:latest

[root@docker1 ~]# docker run -it --rm --network container:web3 busyboxplus

/ # ip addr

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

/ # curl localhost

<title>Welcome to nginx!</title>

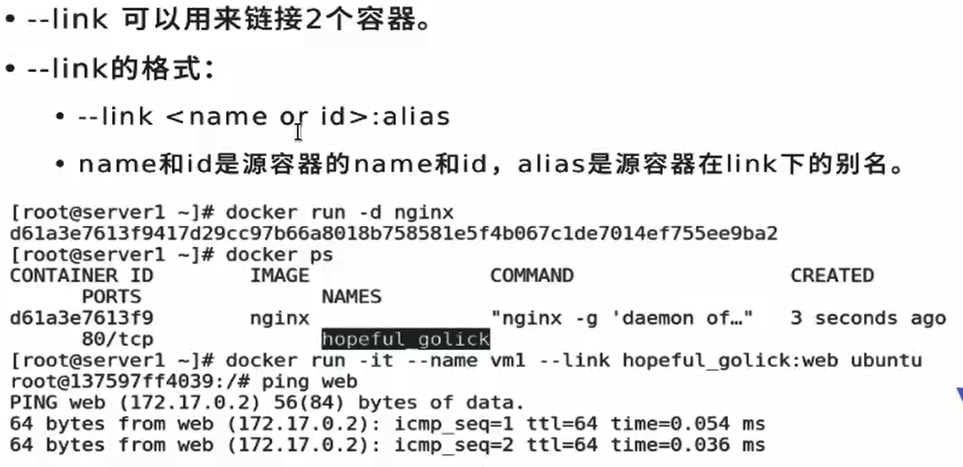

The default bridge has no name resolution. `--link` adds it, and when the linked container restarts and its IP changes, the resolution is updated automatically.

The syntax is `--link <name>:<alias>`. The linked container can then be reached by pinging either its name or its alias.

This is still inferior to simply using the built-in DNS of a user-defined bridge.

[root@docker1 ~]# docker run -it --rm --link web3:nginx --network my_net1 busyboxplus

/ # ip addr

inet 172.18.0.4/16 brd 172.18.255.255 scope global eth0

/ # ping web3

64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.057 ms

/ # env

HOSTNAME=ca85afe9651d

SHLVL=1

HOME=/

TERM=xterm

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

PWD=/

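On my_net1 the link added no environment variables. web1, by contrast, is on the default bridge (docker0), and linking to it adds both environment variables and name resolution: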

[root@docker1 ~]# docker inspect web1

"IPAddress": "172.17.0.2",

[root@docker1 ~]# docker run -it --rm --link web1:nginx busyboxplus

/ # ping web1

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.060 ms

/ # env

HOSTNAME=01ba3b877905

SHLVL=1

HOME=/

NGINX_ENV_PKG_RELEASE=1~bullseye

NGINX_PORT_80_TCP=tcp://172.17.0.2:80

NGINX_ENV_NGINX_VERSION=1.21.5

NGINX_ENV_NJS_VERSION=0.7.1

TERM=xterm

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

NGINX_PORT=tcp://172.17.0.2:80

NGINX_NAME=/sad_benz/nginx

PWD=/

NGINX_PORT_80_TCP_ADDR=172.17.0.2

NGINX_PORT_80_TCP_PORT=80

NGINX_PORT_80_TCP_PROTO=tcp

/ # ping nginx

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.052 ms

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 nginx 2fc55fe8c1fd web1

172.17.0.3 01ba3b877905

The link also added a hosts entry for web1 and its alias.

On a user-defined network, therefore, `--link` only provides an alias and injects no variables. Its environment variables and hosts entries matter only on the default network.
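The variable names in the `env` output above follow a fixed pattern derived from the alias, port and protocol. This sketch reproduces the port-related ones for a single exposed port. Uppercasing the alias and replacing `-` with `_` reflects our understanding of Docker's legacy link behaviour, and `link_env_vars` is a hypothetical name.

```sh
#!/bin/sh
# Print the port variables a legacy --link NAME:ALIAS injects for one port.
# $1 = alias, $2 = linked container IP, $3 = port, $4 = protocol
link_env_vars() {
  local prefix proto_uc url
  prefix=$(printf '%s' "$1" | tr 'a-z-' 'A-Z_')   # nginx -> NGINX
  proto_uc=$(printf '%s' "$4" | tr 'a-z' 'A-Z')   # tcp -> TCP
  url="$4://$2:$3"
  echo "${prefix}_PORT=$url"
  echo "${prefix}_PORT_${3}_${proto_uc}=$url"
  echo "${prefix}_PORT_${3}_${proto_uc}_ADDR=$2"
  echo "${prefix}_PORT_${3}_${proto_uc}_PORT=$3"
  echo "${prefix}_PORT_${3}_${proto_uc}_PROTO=$4"
}

link_env_vars nginx 172.17.0.2 80 tcp
```

The output corresponds to the `NGINX_PORT*` lines in the session above.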

Without `--link`, names do not resolve on the default network:

/ # ping web1

ping: bad address 'web1'

The hosts entry follows changes to the linked container's IP, but the environment variables written at start-up do not.

Stop and start web1 so that its IP changes; the hosts entry changes with it:

/ # cat /etc/hosts

172.17.0.2 nginx 2fc55fe8c1fd web1

/ # cat /etc/hosts

172.17.0.4 nginx 2fc55fe8c1fd web1
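Name lookup through /etc/hosts happens at query time. Rewriting the line is therefore enough for `ping web1` to follow the new address, while variables fixed at start-up go stale. Below is a rough sketch of the lookup; `hosts_lookup` is hypothetical and ignores resolver details such as nsswitch ordering.

```sh
#!/bin/sh
# Print the address of the first /etc/hosts-style line (read from stdin)
# that lists name $1 among its host names.
hosts_lookup() {
  awk -v n="$1" '
    $1 !~ /^#/ {
      for (i = 2; i <= NF; i++)
        if ($i == n) { print $1; exit }
    }'
}

hosts='127.0.0.1 localhost
172.17.0.4 nginx 2fc55fe8c1fd web1'
printf '%s\n' "$hosts" | hosts_lookup nginx
```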

## How data comes in and goes out

Each additional bridge network gets its own MASQUERADE rule:

[root@docker1 ~]# iptables -t nat -nL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.18.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.20.0.0/24 0.0.0.0/0
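As far as we understand, Docker installs one rule of the form below per bridge network. The `! -o <bridge>` condition exempts traffic that stays on the same bridge, and `iptables -t nat -S POSTROUTING` shows the exact rules on a given host. This dry-run sketch only prints the commands. The bridge names are taken from earlier output, and `masq_rules` is hypothetical.

```sh
#!/bin/sh
# Dry run: read "SUBNET BRIDGE" pairs on stdin and print (not run) one
# MASQUERADE rule per bridge network.
masq_rules() {
  while read -r subnet bridge; do
    [ -n "$subnet" ] || continue          # skip blank lines
    echo "iptables -t nat -A POSTROUTING -s $subnet ! -o $bridge -j MASQUERADE"
  done
}

printf '%s\n' '172.17.0.0/16 docker0' \
              '172.18.0.0/16 br-18fb73b3c2f5' \
              '172.20.0.0/24 br-3043c2a41e35' | masq_rules
```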

Packets are routed between docker0 and the physical network card by the Linux kernel.

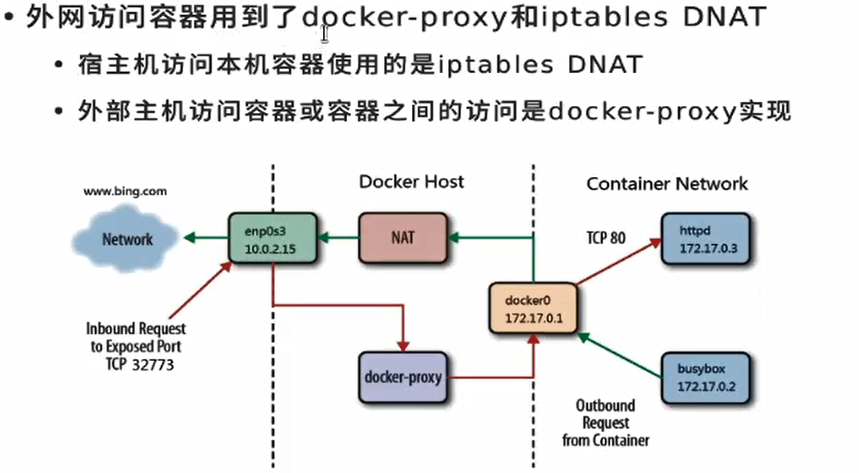

Inbound access from the external network to a container is doubly redundant. Traffic is forwarded either by an iptables DNAT rule or by a user-space proxy process, docker-proxy, and either one alone is enough.

docker-proxy cannot fully replace the iptables DNAT rules, because iptables is also used for forwarding.

Remove all containers:

[root@docker1 ~]# docker ps -aq

81cd079f1e08

f7d480524524

e305ecfdc237

7b5f010de330

ddcac54b82da

2fc55fe8c1fd

[root@docker1 ~]# docker rm -f `docker ps -aq`

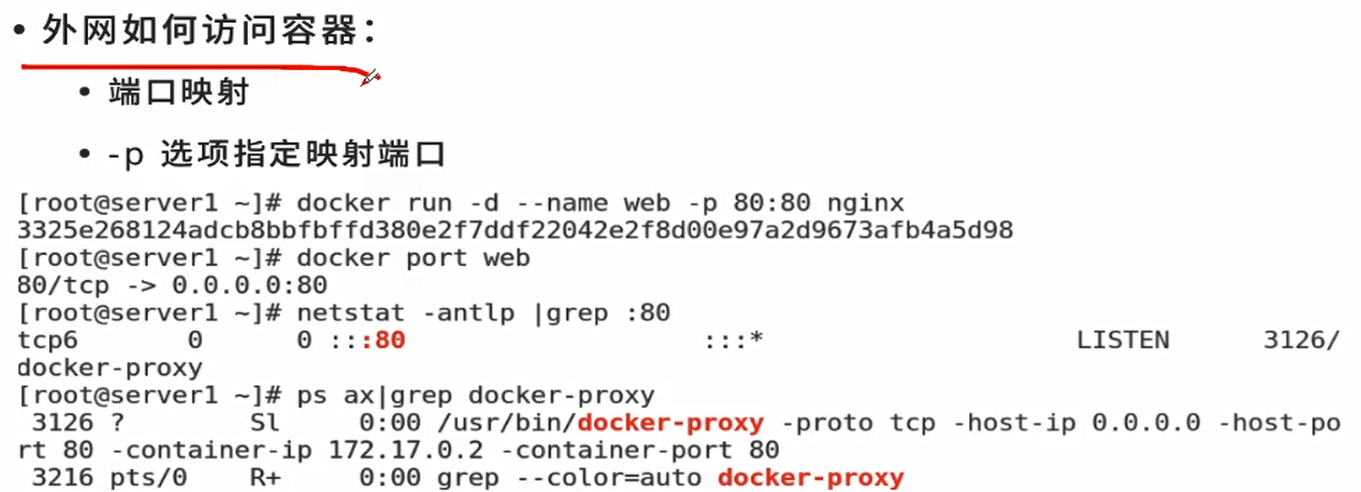

Publish port 80 so that requests to port 80 on the host are redirected to port 80 in the container:

[root@docker1 ~]# docker run -d --name demo -p 80:80 nginx

[root@docker1 ~]# iptables -t nat -nL

Chain DOCKER (2 references)

DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:80 to:172.17.0.2:80

[root@docker1 ~]# curl 172.25.254.1

<title>Welcome to nginx!</title>
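The DNAT rule is derived from the `-p` value and the container's address. This dry-run sketch prints a rule of the kind listed above, in `iptables -S` syntax, and assumes the default bridge `docker0`. It handles `[HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTO]` with IPv4 host addresses, but not the single-port form. `dnat_for_publish` is hypothetical.

```sh
#!/bin/sh
# Dry run: turn a -p spec and a container IP ($2) into a DNAT rule string.
dnat_for_publish() {
  local spec proto cport hport dst
  proto=tcp
  case $1 in */*) proto=${1#*/} ;; esac   # optional "/udp" or "/tcp"
  spec=${1%/*}                            # strip "/proto" if present
  cport=${spec##*:}                       # last field: container port
  spec=${spec%:*}
  hport=${spec##*:}                       # next field: host port
  dst=
  case $spec in *:*) dst="-d ${spec%:*}/32 " ;; esac   # optional host IP
  echo "iptables -t nat -A DOCKER ${dst}! -i docker0 -p $proto -m $proto --dport $hport -j DNAT --to-destination $2:$cport"
}

dnat_for_publish 80:80 172.17.0.2
```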

A dedicated process, docker-proxy, also listens on port 80. This is the second, redundant path:

[root@docker1 ~]# netstat -antlp

tcp6 0 0 :::80 :::* LISTEN 7569/docker-proxy

To show that external access is doubly redundant, first flush the nat table:

[root@docker1 ~]# iptables -t nat -F

[root@docker1 ~]# iptables -t nat -nL

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

Chain DOCKER (0 references)

target prot opt source destination

Traffic that stays on docker0 needs neither the firewall rules nor the proxy process. After the flush, and even after docker-proxy is killed, the container is still reachable at 172.17.0.2 over the bridge:

/ # ping 172.17.0.2

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.079 ms

[root@docker1 ~]# ps ax

7569 ? Sl 0:00 /usr/bin/docker-proxy -proto tcp -host-ip 0.0.0.0 -host-port 80 -contai

[root@docker1 ~]# kill 7569

/ # ping 172.17.0.2

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.065 ms

From outside, however, nothing can get in once both the DNAT rules and the proxy are gone:

[kiosk@foundation38 Desktop]$ curl 172.25.254.1

curl: (7) Failed to connect to 172.25.254.1 port 80: Connection refused

Restart Docker to restore everything, then flush the nat table again. With the firewall rules gone, external requests still reach the container through docker-proxy:

[root@docker1 ~]# systemctl restart docker

[root@docker1 ~]# iptables -t nat -nL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.18.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.20.0.0/24 0.0.0.0/0

[root@docker1 ~]# docker start demo

[root@docker1 ~]# iptables -t nat -nL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.18.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.20.0.0/24 0.0.0.0/0

MASQUERADE tcp -- 172.17.0.2 172.17.0.2 tcp dpt:80

DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:80 to:172.17.0.2:80

[kiosk@foundation38 Desktop]$ curl 172.25.254.1

<title>Welcome to nginx!</title>

[root@docker1 ~]# ps ax | grep proxy

8496 ? Sl 0:00 /usr/bin/docker-proxy -proto tcp -host-ip 0.0.0.0 -host-port 80 -container-ip 172.17.0.2 -container-port 80

8572 pts/1 S+ 0:00 grep --color=auto proxy

[root@docker1 ~]# iptables -t nat -F

[root@docker1 ~]# iptables -t nat -nL

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

Chain DOCKER (0 references)

target prot opt source destination

[kiosk@foundation38 Desktop]$ curl 172.25.254.1

<title>Welcome to nginx!</title>

Conversely, restore the rules and kill the proxy process instead. External requests still arrive, now through the iptables DNAT rule:

[root@docker1 ~]# systemctl restart docker

[root@docker1 ~]# docker start demo

[root@docker1 ~]# ps ax | grep proxy

8896 ? Sl 0:00 /usr/bin/docker-proxy -proto tcp -host-ip 0.0.0.0 -host-port 80 -container-ip 172.17.0.2 -container-port 80

8971 pts/1 R+ 0:00 grep --color=auto proxy

[root@docker1 ~]# kill 8896

[kiosk@foundation38 Desktop]$ curl 172.25.254.1

<title>Welcome to nginx!</title>

External access to a container is therefore doubly redundant.

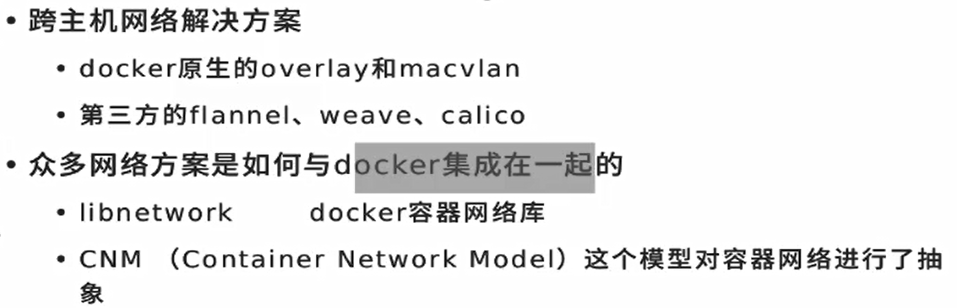

## Cross-host container networking

Clean up the environment:

[root@docker1 ~]# docker network prune

WARNING! This will remove all custom networks not used by at least one container.

Are you sure you want to continue? [y/N] y

Deleted Networks:

my_net1

my_net2

[root@docker1 ~]# docker rm -f demo

[root@docker1 ~]# docker volume prune

Are you sure you want to continue? [y/N] y

[root@docker1 ~]# docker image prune

`docker image prune` deletes only dangling images, whose name and tag both show as `<none>`. These unusable images are effectively leftover image cache.

Starting state of the experimental environment on docker1 and docker2:

[root@docker1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

3811ae4f8bf9 bridge bridge local

b51b022f8430 host host local

c386dbae12f0 none null local

[root@docker2 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

568aa16db709 bridge bridge local

20d505b4b669 host host local

09ed8d8227c2 none null local

Overlay is used by Docker Swarm; this time we use macvlan.

Third-party network solutions are what Kubernetes uses.

Docker officially provides the networking library and model (libnetwork, which implements the Container Network Model), so you can develop your own network driver to suit your needs.

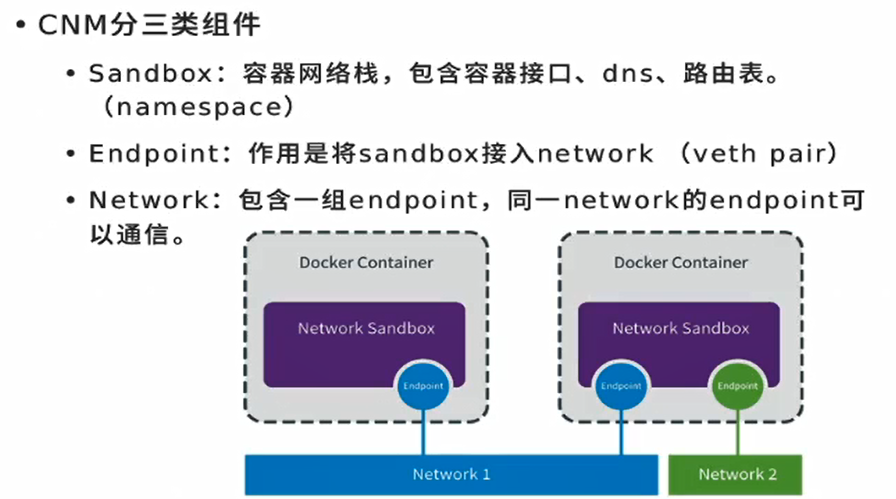

An endpoint is roughly equivalent to a virtual network interface.

Macvlan is an underlay solution: cross-host traffic is handled by the underlying hardware.

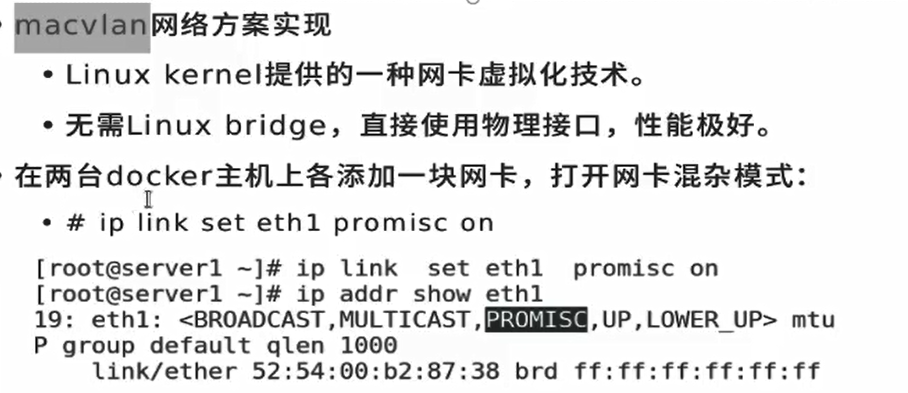

Add a new network card to docker1 and to docker2, each attached to the same bridged network as eth0.

Enable promiscuous mode on the newly added interface (enp7s0) and bring it up on both hosts:

[root@docker1 ~]# ip addr

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

46: enp7s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

[root@docker1 ~]# ip link set enp7s0 promisc on

[root@docker1 ~]# ip addr

46: enp7s0: <BROADCAST,MULTICAST,PROMISC> mtu 1500 qdisc noop state DOWN group default qlen 1000

[root@docker1 ~]# ip link set up enp7s0

[root@docker1 ~]# ip addr

46: enp7s0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

[root@docker2 ~]# ip link set enp7s0 promisc on

[root@docker2 ~]# ip link set up enp7s0

[root@docker2 ~]# ip addr

4: enp7s0: <NO-CARRIER,BROADCAST,MULTICAST,PROMISC,UP> mtu 1500 qdisc pfifo_fast state DOWN group default qlen 1000

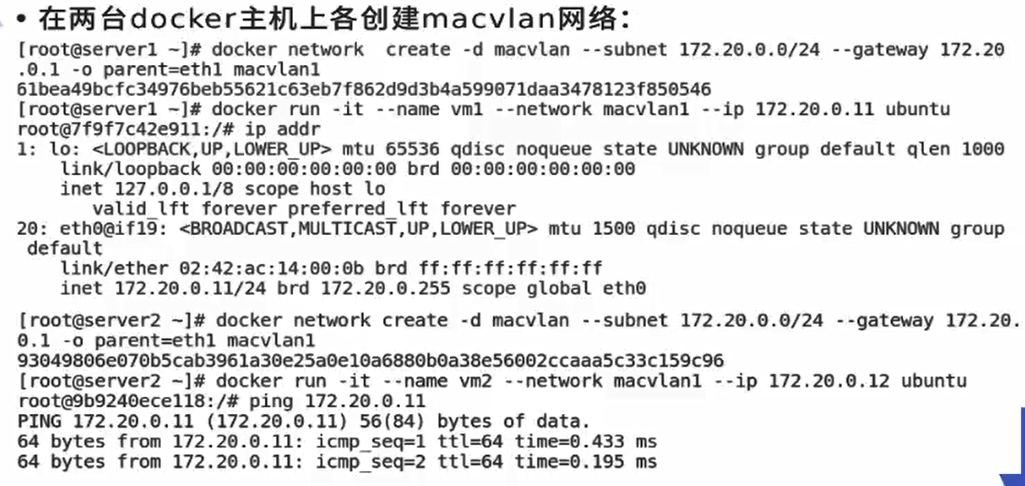

Container addresses must not conflict. The two networks can share the gateway address 10.0.0.1, but the containers on both hosts sit in the same layer-2 segment, so each container needs a distinct IP.
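Each host's mynet1 is a local network with its own address management, so neither daemon knows which addresses the other has assigned. This is why the containers below are given fixed `--ip` addresses. The following hypothetical helper checks an address plan across hosts before it is applied:

```sh
#!/bin/sh
# Read "HOST IP" lines describing planned macvlan containers on all hosts
# and print every IP that is assigned more than once.
find_ip_conflicts() {
  awk '{ count[$2]++ } END { for (ip in count) if (count[ip] > 1) print ip }'
}

printf '%s\n' 'docker1 10.0.0.10' 'docker2 10.0.0.11' 'docker2 10.0.0.10' | find_ip_conflicts
```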

[root@docker1 ~]# docker network create -d macvlan --subnet 10.0.0.0/24 --gateway 10.0.0.1 -o parent=enp7s0 mynet1

[root@docker1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

3811ae4f8bf9 bridge bridge local

b51b022f8430 host host local

9e1d71bae46c mynet1 macvlan local

c386dbae12f0 none null local

[root@docker1 ~]# docker run -it --rm --network mynet1 --ip 10.0.0.10 busyboxplus

/ # ip addr

inet 10.0.0.10/24 brd 10.0.0.255 scope global eth0

[root@docker2 ~]# docker network create -d macvlan --subnet 10.0.0.0/24 --gateway 10.0.0.1 -o parent=enp7s0 mynet1

[root@docker2 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

568aa16db709 bridge bridge local

20d505b4b669 host host local

dd46171171cb mynet1 macvlan local

09ed8d8227c2 none null local

[root@docker2 ~]# docker run -it --rm --network mynet1 --ip 10.0.0.11 busybox

/ # ip addr

inet 10.0.0.11/24 brd 10.0.0.255 scope global eth0

/ # ping 10.0.0.10

64 bytes from 10.0.0.10: seq=0 ttl=64 time=0.814 ms

/ # ping 10.0.0.11

64 bytes from 10.0.0.11: seq=0 ttl=64 time=0.284 ms

Handling cross-host communication in the underlying hardware has a limit. With thousands of containers you may need many subnets, and therefore many network cards, yet a host can hold only so many.

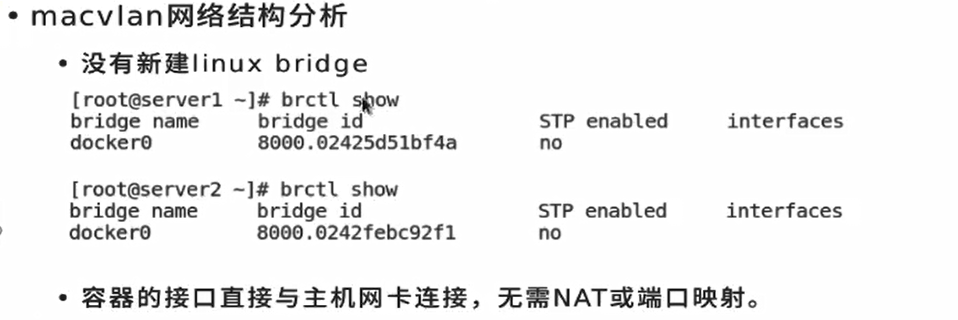

No new Linux bridge or veth interface is created:

[root@docker1 ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242c27a3cac no

[root@docker1 ~]# ip addr

46: enp7s0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

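Taking the parent interface down on docker1 breaks connectivity. The ping from the container on docker2 resumes once the interface is up again:

[root@docker1 ~]# ip link set enp7s0 down

/ # ping 10.0.0.10

(no replies)

[root@docker1 ~]# ip link set enp7s0 up

64 bytes from 10.0.0.10: seq=29 ttl=64 time=1002.343 ms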

No NAT or port mapping is needed. Macvlan only requires the underlying physical link to be connected, and Docker is not involved in anything else:

[root@docker2 ~]# docker run -d --name demo --network mynet1 --ip 10.0.0.11 webserver:v3

/ # curl 10.0.0.11

<title>Welcome to nginx!</title>

[root@docker2 ~]# netstat -antlp

[root@docker2 ~]# iptables -nL -t nat

Neither output shows a DNAT rule or a port mapping.

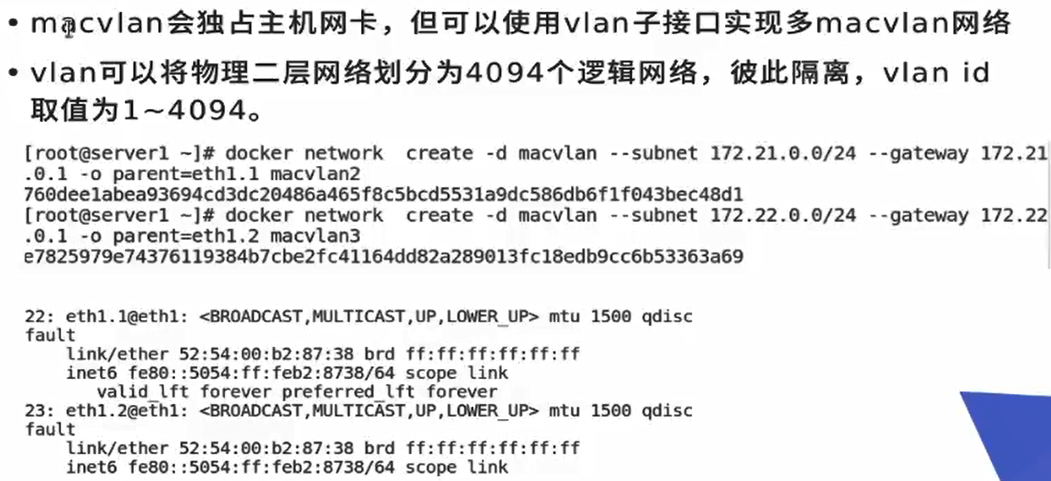

A host cannot hold an unlimited number of network cards, so you can instead create VLAN sub-interfaces (enp7s0.1, enp7s0.2, enp7s0.3, ...) on one card.
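According to Docker's macvlan documentation, a parent name containing a dot, such as `enp7s0.1`, is treated as an 802.1Q sub-interface (base `enp7s0`, VLAN ID 1), which Docker creates if it does not exist. This hypothetical helper shows how such a name splits. The commented `ip link` command is the manual equivalent.

```sh
#!/bin/sh
# Split a macvlan parent name into base interface and 802.1Q VLAN ID.
split_parent() {
  case $1 in
    *.*) echo "${1%.*} ${1##*.}" ;;   # enp7s0.1 -> "enp7s0 1"
    *)   echo "$1 untagged" ;;         # plain interface, no VLAN tag
  esac
}

for p in enp7s0 enp7s0.1 enp7s0.2; do
  split_parent "$p"
done
# Creating such a sub-interface by hand would look like:
#   ip link add link enp7s0 name enp7s0.1 type vlan id 1
```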

Each sub-interface carries a separate VLAN, isolated at layer 2:

[root@docker2 ~]# docker network create -d macvlan --subnet 20.0.0.0/24 --gateway 20.0.0.1 -o parent=enp7s0.1 mynet2

[root@docker1 ~]# docker network create -d macvlan --subnet 20.0.0.0/24 --gateway 20.0.0.1 -o parent=enp7s0.1 mynet2

[root@docker1 ~]# docker run -d --network mynet2 nginx

[root@docker1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e91e508fdd2b nginx "/docker-entrypoint...." 28 seconds ago Up 26 seconds practical_chandrasekhar

[root@docker1 ~]# docker inspect e91e508fdd2b

"IPAddress": "20.0.0.2",

[root@docker1 ~]# docker network create -d macvlan --subnet 30.0.0.0/24 --gateway 30.0.0.1 -o parent=enp7s0.2 mynet3

Macvlan networks on different sub-interfaces are isolated at layer 2. To let them communicate, use a layer-3 device as a routing gateway between the macvlan networks.
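The subnet mask determines whether two addresses can talk directly or need that router. Below is a sketch with a hypothetical helper. 20.0.0.2 is the mynet2 container shown above, while 30.0.0.2 stands for an assumed container on mynet3. Traffic between them would go through the gateways 20.0.0.1 and 30.0.0.1 on the router.

```sh
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if addresses $1 and $2 fall in the same subnet of prefix length $3.
same_subnet() {
  local mask
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

if same_subnet 20.0.0.2 30.0.0.2 24; then
  echo "same subnet: direct"
else
  echo "different subnets: route via a layer-3 gateway"
fi
```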

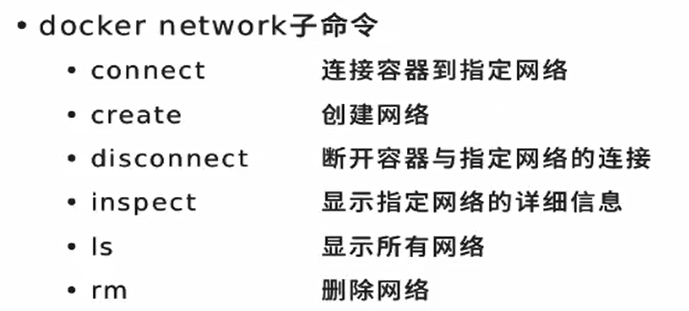

`inspect` shows detailed information and works for networks, volumes, containers and images alike:

[root@docker1 ~]# docker network inspect mynet2

[root@docker1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

3811ae4f8bf9 bridge bridge local

b51b022f8430 host host local

9e1d71bae46c mynet1 macvlan local

7450c8721d84 mynet2 macvlan local

2642e999f12a mynet3 macvlan local

c386dbae12f0 none null local