This is a short note for my own reference.

This article covers Docker's single-host network types: none, host, container, and bridge.

The first three are simple; the bridge network is covered in detail at the end.

When Docker is installed, it creates three networks by default:

```
[^_^] kfk ~# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
86c72e7bd780   bridge    bridge    local
753952a6771e   host      host      local
73bd69e2e4f3   none      null      local
```

none network

A container on the none network has no network interface other than loopback. It can only reach itself and cannot be reached by other containers. This mode is rarely used; it is mainly suited to workloads with strict security requirements.

Create a container on the none network:

docker run -itd --name none_test1 --network none busybox

View the container's information with docker network inspect none:

```
"Containers": {
    "2edae895834c595e8e5177b9b4641e9054f319398bffb082bb5cb73f8e937911": {
        "Name": "none_test1",
        "EndpointID": "eabb52395e918fb74c0cdf07956e713c475e77423704029c2e1821678d8e270a",
        "MacAddress": "",
        "IPv4Address": "",
        "IPv6Address": ""
    }
```

As you can see, the container has no MAC address and no IP address. Enter the container and take a look: there is only the lo loopback interface and no other network.

```
[^_^] kfk ~# docker exec -it none_test1 sh
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
```

host network

The host network shares the physical machine's network protocol stack, so the container sees exactly the same network as the host and connects to it directly. Performance is good, but some port flexibility is sacrificed.

A container in host mode uses the host's IP address directly to communicate with the outside world. If the host has a public IP, the container has that public IP too. Service ports in the container are opened directly on the host, with no extra NAT translation. And because packets do not have to be forwarded or unpacked through a Linux bridge, this mode has a significant performance advantage. It also has drawbacks, mainly the following:

1. Most obviously, the container no longer has an isolated, independent network stack. It competes with the host for the network stack, and a failure in the container may take the host down with it. This may not be acceptable in a production environment.

2. The container can no longer use every port, because some ports are already occupied by other services, such as services on the host and ports bound by bridge-mode containers.
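Point 2 follows from the fact that a host-mode container lives in the host's network namespace, and one namespace has one port space. The Python sketch below mimics this with two sockets in a single process rather than a real container. The second bind to a port that is already in use fails with EADDRINUSE, which is what a host-mode container would see if a host service already held that port.

```python
import errno
import socket


def second_bind_conflicts() -> bool:
    """Bind two TCP sockets to the same loopback port in one network namespace.

    Returns True when the second bind fails with EADDRINUSE, the same error a
    host-mode container gets when a host service already owns the port.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind(("127.0.0.1", 0))  # port 0: let the kernel pick a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind(("127.0.0.1", port))  # same namespace, same port
        except OSError as exc:
            return exc.errno == errno.EADDRINUSE
        return False
    finally:
        second.close()
        first.close()


if __name__ == "__main__":
    print("port conflict:", second_bind_conflicts())
```

A bridge-mode container would not hit this conflict inside its own namespace, because it has a separate port space.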

Create a container on the host network:

docker run -itd --name host_test1 --network host busybox

View the container information:

```
"Containers": {
    "969e44eb8f62c87ab29f8f34a04c1d26433f50b13f7fc3dd6ba23182351921e6": {
        "Name": "host_test1",
        "EndpointID": "046bd2985d4586ca584cbee37b855087c676159f136a448fcf96c244e3e5c80e",
        "MacAddress": "",
        "IPv4Address": "",
        "IPv6Address": ""
    }
```

The output of docker network inspect host looks the same as for the none network: no MAC or IP address is listed.

Enter the container and view its network information. It shows the same interfaces as the host:

```
[^_^] kfk ~# docker exec -it host_test1 sh
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000
    link/ether 00:0c:29:6f:5e:ed brd ff:ff:ff:ff:ff:ff
    inet 192.168.237.128/24 brd 192.168.237.255 scope global dynamic ens33
       valid_lft 1741sec preferred_lft 1741sec
    inet6 fe80::20c:29ff:fe6f:5eed/64 scope link
       valid_lft forever preferred_lft forever

docker0   Link encap:Ethernet  HWaddr 02:42:C4:59:A6:16
          inet addr:172.17.0.1  Bcast:172.17.255.255  Mask:255.255.0.0
          inet6 addr: fe80::42:c4ff:fe59:a616/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:5 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:438 (438.0 B)
```

container network

Container mode, also called join mode, is similar to host mode. The difference is that the new container shares the network namespace of a specified existing container instead of the host's, so it uses that container's IP address and ports. Only the network is shared; everything else, such as the file system and processes, stays isolated.

A pure web service image that lacks a full base environment can only run its own software, and it has no tools such as ip. With container mode, you can attach another container to its network and inspect the network from there.

Create a web server container (httpd), which has no full base environment:

[^_^] kfk ~# docker run -d --name join_web httpd

Enter the container and try to view its network information:

```
[T_T] kfk ~# docker exec -it join_web sh
# ls
bin  build  cgi-bin  conf  error  htdocs  icons  include  logs  modules
# ip a
sh: 2: ip: not found
#
```

🍙 Note: without a full base image, an application image provides only the minimum needed to run the software itself, not a complete system environment.

Attach a busybox container to the web container's network with container mode, and you can use its sh to view the network information:

```
[^_^] kfk ~# docker run -it --network container:web --rm busybox sh
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
343: eth0@if344: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
345: eth1@if346: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:58:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.88.0.3/24 brd 172.88.0.255 scope global eth1
       valid_lft forever preferred_lft forever
```
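Container mode works because both containers run inside a single network namespace. On Linux, every process's network namespace is exposed as the link /proc/<pid>/ns/net, so comparing those links shows whether two processes share a network. This is a minimal sketch. It assumes it runs as root on the Docker host and is given the containers' host PIDs, which docker inspect -f '{{.State.Pid}}' <container> prints. The helper names are hypothetical.

```python
import os


def net_namespace(pid: int) -> str:
    """Return the network-namespace identifier of a process, e.g. 'net:[4026531992]'."""
    return os.readlink(f"/proc/{pid}/ns/net")


def share_network(pid_a: int, pid_b: int) -> bool:
    """True if both processes live in the same network namespace."""
    return net_namespace(pid_a) == net_namespace(pid_b)


if __name__ == "__main__":
    me = os.getpid()
    print(net_namespace(me))
    print(share_network(me, me))
```

Given the PIDs of web and of a busybox started with --network container:web, share_network should return True. For a busybox on its own bridge network, it should return False.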

bridge network

Bridge mode is Docker's default, and installing Docker creates the docker0 bridge. In this mode, Docker creates an independent network stack for each container, so the processes in a container use their own network environment. This isolates the network stack between containers, and between containers and the host. At the same time, the docker0 bridge on the host lets the container communicate with the host and even the outside world.

On a single host, containers are connected to the docker0 bridge, which acts as a virtual switch so containers can communicate with each other. However, the container addresses (on the veth pairs) are in a different subnet from the host's IP address. Veth pairs and namespaces alone therefore do not let machines outside the host discover and reach the container. To make processes in the container reachable from outside, Docker uses port binding: traffic arriving at a host port is forwarded to a port in the container through iptables NAT.

When a bridge-mode container communicates with the outside world, it occupies a port on the host and competes with the host for port resources, which makes host port management a real problem. In addition, because this traffic goes through iptables NAT at layer 3, some loss of performance and efficiency is to be expected. (This explanation is taken from the Internet because it explains things better than I could.)
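To make the port-binding idea concrete, here is a small Python model of what a DNAT rule does. It is not Docker's implementation. It reuses the host IP and docker0 subnet from this article. The 8080 to 80 binding is hypothetical, standing in for what a container started with docker run -p 8080:80 would get.

```python
import ipaddress

# Host address and docker0 subnet from this article's examples.
HOST_IP = ipaddress.ip_address("192.168.237.128")
DOCKER0_SUBNET = ipaddress.ip_network("172.17.0.0/16")

# Hypothetical binding, as if created by `docker run -p 8080:80 ...`:
# host port -> (container IP, container port)
PORT_BINDINGS = {8080: (ipaddress.ip_address("172.17.0.2"), 80)}


def dnat(dst_ip: str, dst_port: int):
    """Mimic a DNAT rule: map host_ip:port to container_ip:port, or None if unbound."""
    if ipaddress.ip_address(dst_ip) != HOST_IP:
        return None
    return PORT_BINDINGS.get(dst_port)


if __name__ == "__main__":
    print(HOST_IP in DOCKER0_SUBNET)  # False: host and containers are in different segments
    print(dnat("192.168.237.128", 8080))
```

The first check shows why outside machines cannot reach 172.17.0.x directly. The dnat lookup shows how the host port becomes the only entry point, and why every published port uses up a host port.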

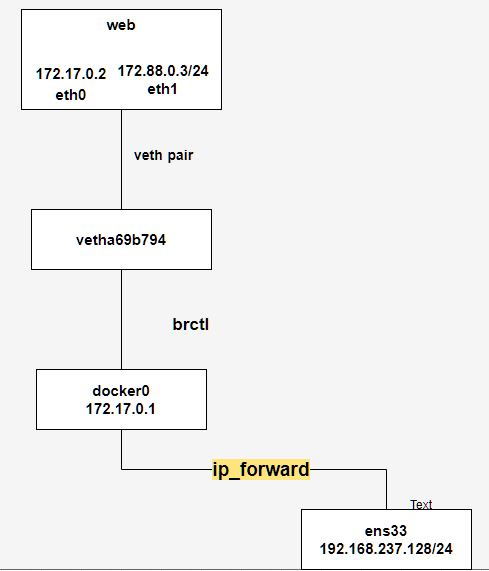

As shown in the figure above, the web container is connected through a veth pair: one end sits inside the container and the other end is attached to the docker0 bridge. docker0 and ens33 are on the same host, and traffic reaches the Internet through routing and forwarding between them.

Create a bridge network named my_net (Docker chooses the subnet automatically):

[^_^] kfk ~# docker network create --driver bridge my_net

```
[^_^] kfk ~# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
86c72e7bd780   bridge    bridge    local
753952a6771e   host      host      local
d3e083fe5bee   my_net    bridge    local
73bd69e2e4f3   none      null      local
```

SCOPE is the range in which the network is effective; local means this host only.

View the network details:

[^_^] kfk ~# docker network inspect my_net

```
[
    {
        "Name": "my_net",
        "Id": "d3e083fe5bee04d6e9589374add2cff90f8ebae4e9ff5a63b9d99a9abdc6cfa5",
        "Created": "2021-11-30T20:37:59.732821062-05:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "172.18.0.0/16",
                    "Gateway": "172.18.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {},
        "Options": {},
        "Labels": {}
    }
]
```

You can see its subnet, 172.18.0.0/16. Docker assigned it automatically, picking the next range after the existing bridge network (docker0 uses 172.17.0.0/16).
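Automatic subnet assignment can be pictured as "take the first candidate block that overlaps nothing already in use". The sketch below is a simplified, assumed model that only considers 172.17.0.0/16 through 172.31.0.0/16. Docker's real pool list is configurable (default-address-pools in the daemon configuration) and is not reproduced exactly here.

```python
import ipaddress


def next_free_subnet(in_use):
    """Return the first 172.<17..31>.0.0/16 block that overlaps none of the networks in use.

    Simplified model of automatic bridge subnet allocation, not Docker's exact algorithm.
    """
    used = [ipaddress.ip_network(n) for n in in_use]
    for second_octet in range(17, 32):
        candidate = ipaddress.ip_network(f"172.{second_octet}.0.0/16")
        if not any(candidate.overlaps(u) for u in used):
            return candidate
    return None  # pool exhausted


if __name__ == "__main__":
    # Only docker0 existed when my_net was created.
    print(next_free_subnet(["172.17.0.0/16"]))
```

With only docker0's 172.17.0.0/16 in use, the model returns 172.18.0.0/16, which matches the subnet Docker gave my_net above.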

Containers use the docker0 bridge by default. If you accidentally delete docker0, restarting Docker recreates it automatically.

[^_^] kfk ~# docker run -itd --name 0_test busybox

View the network information inside the container:

```
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
349: eth0@if350: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.4/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
```

In 349: eth0@if350, 349 is the index of the container's eth0 interface. The @if350 suffix means eth0 is one end of a veth pair whose other end is interface 350 on the host. Interface 350 is not visible inside the container; run ip a on the host to see it:

```
350: veth1df0c5f@if349: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 2a:7b:22:78:de:15 brd ff:ff:ff:ff:ff:ff link-netnsid 4
    inet6 fe80::287b:22ff:fe78:de15/64 scope link
       valid_lft forever preferred_lft forever
```
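The name@ifN convention makes it possible to pair the container end with the host end mechanically: each side's header line carries its own index and its peer's index. The Python sketch below parses those header lines from ip a output. The function names are hypothetical; it only reads text and does not query the kernel.

```python
import re

# Matches header lines such as "349: eth0@if350:" printed by `ip a` / `ip link`.
IFACE_HEADER = re.compile(r"^(\d+):\s+([^@:\s]+)@if(\d+):", re.MULTILINE)


def veth_links(ip_a_output: str):
    """Return {interface name: (own ifindex, peer ifindex)} for every name@ifN interface."""
    return {
        name: (int(index), int(peer))
        for index, name, peer in IFACE_HEADER.findall(ip_a_output)
    }


def find_host_peer(container_iface, host_ifaces):
    """Find the host interface whose index is the container side's peer index
    and whose own peer index points back at the container side."""
    own_index, peer_index = container_iface
    for name, (index, peer) in host_ifaces.items():
        if index == peer_index and peer == own_index:
            return name
    return None


if __name__ == "__main__":
    container = "349: eth0@if350: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500"
    host = "350: veth1df0c5f@if349: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master docker0"
    print(find_host_peer(veth_links(container)["eth0"], veth_links(host)))
```

Fed the two lines shown above, it pairs the container's eth0 with the host's veth1df0c5f.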

The host end of the pair, veth1df0c5f, is now attached to the docker0 bridge, as brctl show reports:

```
docker0         8000.0242c459a616       no              veth1df0c5f
```

Inspecting the bridge network (docker network inspect bridge) now also shows container information:

```
{
    "Name": "bridge",
    "Id": "86c72e7bd78059192fccac08c830d93051ce0f23bcf53732143d5d818466fb2e",
    "Created": "2021-11-30T21:17:41.366025036-05:00",
    "Scope": "local",
    "Driver": "bridge",
    "EnableIPv6": false,
    "IPAM": {
        "Driver": "default",
        "Options": null,
        "Config": [
            {
                "Subnet": "172.17.0.0/16",
                "Gateway": "172.17.0.1"
            }
        ]
    },
    "b97896268be5ff18c2ed2143ae387904fc6d8014b1cdb9caef4765695a2ebe88": {
        "Name": "web",
        "EndpointID": "02b864684ec05ceaf6addc851c972c2155ff5cc32930c55d88810adc10062ddd",
        "MacAddress": "02:42:ac:11:00:02",
        "IPv4Address": "172.17.0.2/16",
        "IPv6Address": ""
    }
```

You can see the network's subnet and gateway, as well as the MAC address and IP address of each attached container.

Create a custom bridge network (specifying the subnet and gateway manually):

[^_^] kfk ~# docker network create --driver bridge --subnet 172.88.0.0/24 --gateway 172.88.0.1 my_net2

This creates a network named my_net2 with the subnet 172.88.0.0/24 (a 24-bit mask) and the gateway 172.88.0.1.

View the details of this network:
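The address arithmetic behind --subnet 172.88.0.0/24 --gateway 172.88.0.1 can be checked with Python's standard ipaddress module. The sketch shows that the gateway is the first usable address in the subnet and that a /24 leaves 254 usable addresses. It also shows that 172.88.0.255 is the broadcast address, which appears as brd in the container's ip a output.

```python
import ipaddress

net = ipaddress.ip_network("172.88.0.0/24")     # --subnet
gateway = ipaddress.ip_address("172.88.0.1")    # --gateway

hosts = list(net.hosts())  # usable addresses: excludes the network and broadcast addresses

print(net.netmask)                          # 255.255.255.0
print(net.broadcast_address)                # 172.88.0.255
print(len(hosts))                           # 254
print(gateway in net, gateway == hosts[0])  # True True
```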

```
{
    "Name": "my_net2",
    "Id": "1c8654d79d7f499a75cb6138fcdcf94092c0aee14c05a3a651629fc3c7ec39ce",
    "Created": "2021-12-01T01:46:49.892944972-05:00",
    "Scope": "local",
    "Driver": "bridge",
    "EnableIPv6": false,
    "IPAM": {
        "Driver": "default",
        "Options": {},
        "Config": [
            {
                "Subnet": "172.88.0.0/24",
                "Gateway": "172.88.0.1"
            }
        ]
    },
```

Create a container attached to the my_net2 bridge:

[^_^] kfk ~# docker run -itd --name test2 --network my_net2 busybox

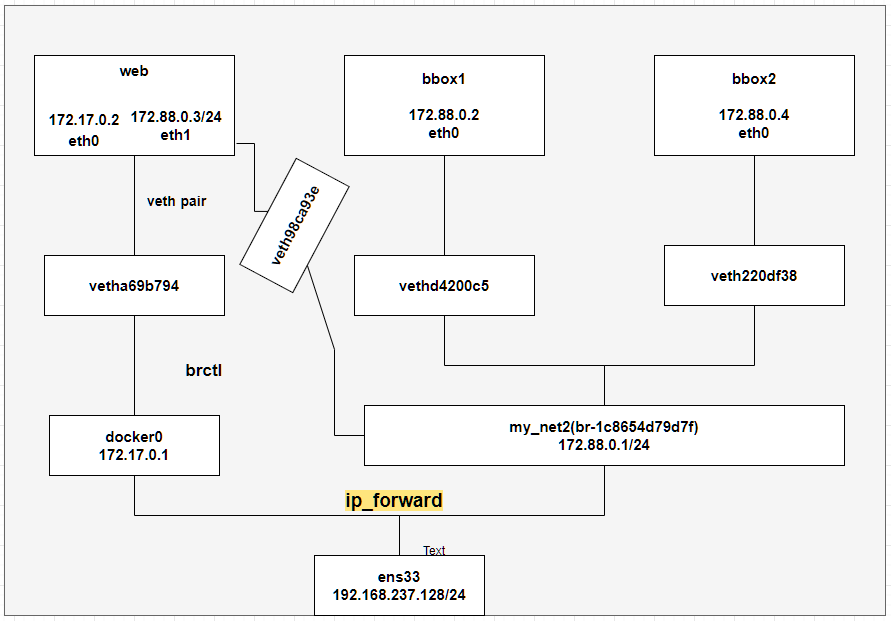

The figure is explained below.

A container on docker0 can ping the IP address of the my_net2 bridge, and conversely a container on my_net2 can reach the docker0 bridge. This works through routing and forwarding on the host (ip_forward). You can check it with ip r, short for ip route show, which lists the routing table and default gateway and shows the path a packet takes to its destination.

The routing table shows that these subnets can be forwarded to each other, so routing is not the problem. The reason containers on different bridges cannot communicate is the firewall.
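Routing decisions like these follow longest-prefix match: the most specific route containing the destination wins. The Python sketch below uses an assumed routing table modeled on this host's interfaces (compare it with your own ip r). It ignores metrics and policy routing, so it is a simplification of what the kernel does.

```python
import ipaddress

# Assumed routing table modeled on this host's interfaces; compare with `ip r`.
ROUTES = {
    "0.0.0.0/0": "ens33",                # default route
    "192.168.237.0/24": "ens33",
    "172.17.0.0/16": "docker0",
    "172.88.0.0/24": "br-1c8654d79d7f",  # bridge for my_net2
}


def lookup(destination: str) -> str:
    """Return the outgoing interface using longest-prefix match (simplified)."""
    addr = ipaddress.ip_address(destination)
    matches = [
        ipaddress.ip_network(prefix)
        for prefix in ROUTES
        if addr in ipaddress.ip_network(prefix)
    ]
    best = max(matches, key=lambda net: net.prefixlen)  # most specific route wins
    return ROUTES[str(best)]


if __name__ == "__main__":
    for dst in ("172.88.0.3", "172.17.0.2", "8.8.8.8"):
        print(dst, "->", lookup(dst))
```

A packet from a docker0 container to 172.88.0.3 is therefore routed out through the my_net2 bridge. If it is dropped, the route is not the cause.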

Running iptables-save shows several DOCKER-ISOLATION chains, which keep the bridge networks isolated from each other like islands.

The relevant rules are:

```
# DOCKER-ISOLATION-STAGE-1: a packet that enters from docker0 and leaves through
# an interface other than docker0 jumps to DOCKER-ISOLATION-STAGE-2
-A DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2

# DOCKER-ISOLATION-STAGE-2: a packet leaving through br-1c8654d79d7f
# (the bridge of my_net2) is dropped
-A DOCKER-ISOLATION-STAGE-2 -o br-1c8654d79d7f -j DROP
```
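The two-stage logic can be walked through in a few lines of Python. This is a sketch, not iptables itself. It assumes that, as is typical, Docker adds one STAGE-1 rule and one STAGE-2 DROP rule per bridge network, so the model treats every bridge in DOCKER_BRIDGES alike.

```python
# Bridges on this host: docker0 and the bridge of my_net2.
DOCKER_BRIDGES = {"docker0", "br-1c8654d79d7f"}


def isolation_verdict(in_iface: str, out_iface: str) -> str:
    """Walk simplified DOCKER-ISOLATION chains for a forwarded packet.

    STAGE-1: a packet entering from a Docker bridge and leaving through a
    different interface jumps to STAGE-2.
    STAGE-2: if it leaves through any Docker bridge, it is dropped.
    Otherwise it returns, i.e. these chains do not block it.
    """
    if in_iface in DOCKER_BRIDGES and out_iface != in_iface:  # STAGE-1 match
        if out_iface in DOCKER_BRIDGES:                        # STAGE-2 match
            return "DROP"
    return "RETURN"


if __name__ == "__main__":
    print(isolation_verdict("docker0", "br-1c8654d79d7f"))  # container to container across bridges
    print(isolation_verdict("docker0", "ens33"))            # container to the outside world
```

Cross-bridge traffic is dropped in both directions, while traffic from a bridge out to ens33 passes. That is why containers can reach the Internet but not each other.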

So docker0 is not cut off by routing: packets do reach the other bridge, but the firewall then drops them. To let the containers communicate, attach an additional network interface to a container:

[T_T] kfk ~# docker network connect my_net2 web

Join the container's network and view its interfaces:

```
[T_T] kfk ~# docker run -it --network container:web --rm busybox sh
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
343: eth0@if344: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
345: eth1@if346: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:58:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.88.0.3/24 brd 172.88.0.255 scope global eth1
       valid_lft forever preferred_lft forever
```

The container now has a new interface, eth1, with an IP address in the 172.88.0.0/24 subnet. This container on docker0 can therefore communicate with the containers on my_net2.
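The extra interface works because the destination is now on a directly connected subnet, so traffic stays on one bridge instead of crossing between bridges, and the DOCKER-ISOLATION rules do not drop it. The Python sketch below picks the container interface whose subnet contains a destination. It uses web's addresses from the output above. The destination 172.88.0.2 in the usage line is a hypothetical my_net2 container.

```python
import ipaddress

# web's interfaces after `docker network connect my_net2 web` (from the ip a output above).
WEB_IFACES = {
    "eth0": ipaddress.ip_interface("172.17.0.2/16"),
    "eth1": ipaddress.ip_interface("172.88.0.3/24"),
}


def direct_interface(destination: str):
    """Return the interface whose subnet contains the destination, or None.

    A directly connected destination is reached over that interface's own
    bridge, so no cross-bridge forwarding (and no isolation DROP) is involved.
    """
    addr = ipaddress.ip_address(destination)
    for name, iface in WEB_IFACES.items():
        if addr in iface.network:
            return name
    return None


if __name__ == "__main__":
    print(direct_interface("172.88.0.2"))  # hypothetical container on my_net2
```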

If you found this article helpful, feel free to follow my official account, coo.
