Dubbo version

- Dubbo version 2.6 seven

load balancing

-

When the service provider is a cluster, in order to avoid a large number of requests being concentrated on one or several service provider machines, a load balancing strategy needs to be made. Dubbo provides a variety of balancing strategies. The default is random, that is, the services of one service provider are called randomly at a time

-

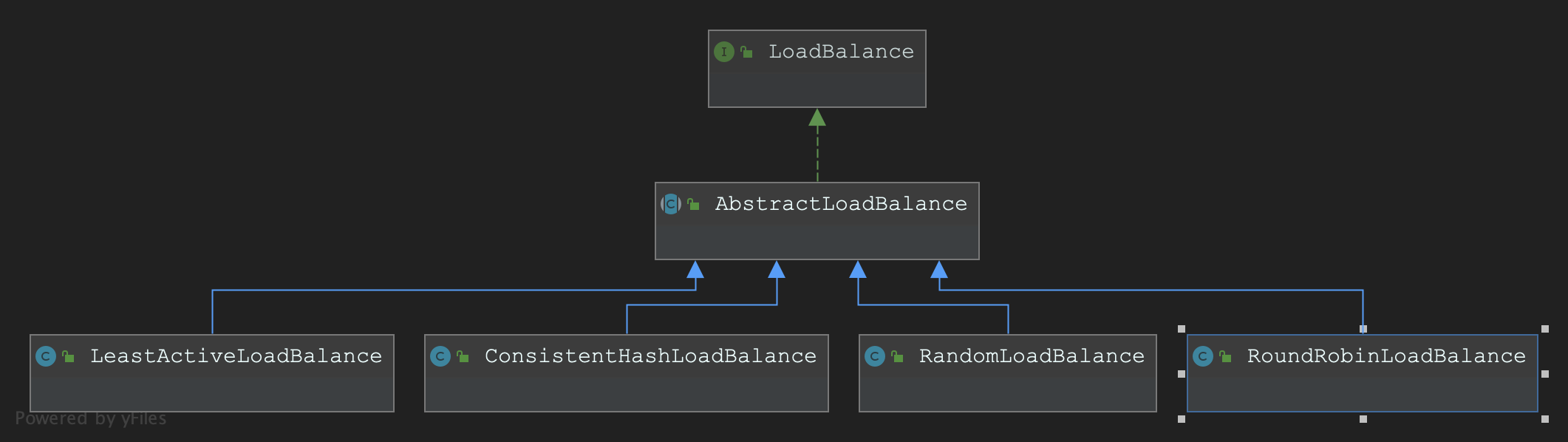

Dubbo provides four load balancing implementations

- RandomLoadBalance based on weight random algorithm: the weight is set according to probability, which is more uniform, and the weight of the provider can be adjusted dynamically

- Leadactiveloadbalance based on the minimum number of active calls algorithm: if the number of active calls of each provider is the same, select one at random. An active counter is maintained in each service provider to record the current number of simultaneous requests, that is, the number of concurrent tasks. The smaller the value, the faster the current service provider processes or the lower the load of the current machine. Therefore, the machine with the lowest activity is selected for routing. If a service provider processes slowly, it will process more requests at the same time due to accumulation, that is, the number of active calls is large (high activity). At this time, the provider with slow processing speed will receive fewer requests.

- ConsistentHashLoadBalance based on consistency hash: consistency hash can ensure that requests with the same parameters are always sent to the same provider. When a provider's machine goes down, the requests originally sent to the provider will be shared equally among other providers based on virtual nodes, so as not to cause drastic changes.

- Roundrobin loadbalance based on weighted polling algorithm: Round Robin, which sets the round robin ratio according to the weight after the Convention. There will be cases where service providers that execute slowly accumulate requests. For example, a machine executes very slowly, However, the machine is not down (if it is down, the current machine will be deleted from the service list of ZooKeeper). When many new requests arrive at the machine, new requests will be stacked because the previous requests have not been processed. Over time, all requests of consumers calling this machine will be blocked.

-

In Dubbo, all load balancing implementation classes inherit from AbstractLoadBalance, which implements the LoadBalance interface and encapsulates some common logic

AbstractLoadBalance

-

AbstractLoadBalance is a public abstract base class. The core method AbstractLoadBalance#getWeight is the method of obtaining weight

- Get the weight=xxx configuration value. The default value is 100

- Obtain the service provider startup timestamp and calculate the service provider runtime

- Get the service warm-up time. The default is 10 * 60 * 1000, that is, 10 minutes.

- If the service running time is less than the preheating time, the service weight is recalculated, that is, the weight is reduced. The purpose of preheating is to prevent the service from being in a high load state at the beginning of startup. It is an optimization to make the service run at "low power" for a period of time after startup

protected int getWeight(Invoker<?> invoker, Invocation invocation) { // Get the weight configuration value from the url. The default value is 100 int weight = invoker.getUrl().getMethodParameter(invocation.getMethodName(), Constants.WEIGHT_KEY, Constants.DEFAULT_WEIGHT); if (weight > 0) { // Get service provider startup timestamp long timestamp = invoker.getUrl().getParameter(Constants.REMOTE_TIMESTAMP_KEY, 0L); if (timestamp > 0L) { //Calculate service provider runtime int uptime = (int) (System.currentTimeMillis() - timestamp); // Get the service warm-up time. The default is 10 * 60 * 1000, that is, 10 minutes int warmup = invoker.getUrl().getParameter(Constants.WARMUP_KEY, Constants.DEFAULT_WARMUP); //If the service running time is less than the preheating time, the service weight is recalculated, that is, the weight is reduced //The purpose of preheating is to prevent the service from being in a high load state at the beginning of startup. It is an optimization to make the service run at "low power" for a period of time after startup if (uptime > 0 && uptime < warmup) { weight = calculateWarmupWeight(uptime, warmup, weight); } } } return weight; } static int calculateWarmupWeight(int uptime, int warmup, int weight) { // Calculate the weight. The following code is equivalent to (uptime / warmup) * weight. // As the uptime of the service increases, the weight calculation value ww will gradually approach the configured value weight int ww = (int) ((float) uptime / ((float) warmup / (float) weight)); return ww < 1 ? 1 : (ww > weight ? weight : ww); }

RandomLoadBalance

-

RandomLoadBalance is A concrete implementation of weighted random algorithm. Suppose there is A group of servers server={(A,5),(B,3),(C,2)}, with the server in front and the corresponding weight of the server behind. Put the weight value on the one-dimensional coordinate axis. Then [0,5) interval belongs to server A, [5,8) interval belongs to server B, [8,10) interval belongs to server C

-

Generate A range through the random number generator [0, 10), and then calculate which interval the random number will fall into. The number 3 will fall into the corresponding interval of server A, and then return to server A. the larger the weight of the machine, the larger the range of the corresponding interval on the coordinate axis, so the number generated by the random number generator will have A greater probability of falling into this interval

-

When the number of calls is relatively small, the Random number generated by Random may be relatively concentrated. At this time, most requests will fall on the same server. This shortcoming is not very serious and can be ignored in most cases.

-

RandomLoadBalance is a simple and efficient load balancing implementation, so Dubbo chose it as the default implementation.

-

RandomLoadBalance#doSelect core source code

@Override protected <T> Invoker<T> doSelect(List<Invoker<T>> invokers, URL url, Invocation invocation) { //Number of service providers int length = invokers.size(); // Number of invokers int totalWeight = 0; // The sum of weights //Does each invoker have the same weight boolean sameWeight = true; // Every invoker has the same weight? //The first is to calculate the total weight, //The second is to check whether the weight of each service provider is the same for (int i = 0; i < length; i++) { int weight = getWeight(invokers.get(i), invocation); //Cumulative weight totalWeight += weight; // Sum //Check whether the weight of the current service provider is the same as that of the previous service provider. If not, set sameWeight = false //Wouldn't it be better to create a previousWeight here to save the previous one?? Avoid double counting if (sameWeight && i > 0 && weight != getWeight(invokers.get(i - 1), invocation)) { sameWeight = false; } } //Obtain the random number and calculate the interval on which the random number falls if (totalWeight > 0 && !sameWeight) { // If (not every invoker has the same weight & at least one invoker's weight>0), select randomly based on totalWeight. // Randomly obtain the number in a [0, totalWeight) interval int offset = random.nextInt(totalWeight); // Return a invoker based on the random value. // Loop to subtract the service provider weight value from the offset number. When the offset is less than 0, the corresponding Invoker is returned. // For example, we have servers = [A, B, C], weights = [5, 3, 2], offset = 7. // For the first cycle, offset - 5 = 2 > 0, i.e. offset > 5, // Indicates that it will not fall on the interval corresponding to server A. // Second cycle, offset - 3 = - 1 < 0, i.e. 5 < offset < 8, // Indicates that it will fall on the interval corresponding to server B for (int i = 0; i < length; i++) { // Let the random value offset subtract the weight value offset -= getWeight(invokers.get(i), invocation); if (offset < 0) { return invokers.get(i); } } } // If all invokers have the same weight value or totalWeight=0, return evenly. // If the weight values of all service providers are the same, just return one at random return invokers.get(random.nextInt(length)); }

RoundRobinLoadBalance

- Polling refers to assigning requests to each server in turn. For example, we have three servers A, B and C. We assign the first request to server A, the second request to server B, the third request to server C, and the fourth request to server A again. This process is called polling. Polling is A stateless load balancing algorithm, which is simple to implement and suitable for scenarios where the performance of each server is similar

- But in reality, we cannot guarantee that the performance of each server is similar. It is obviously unreasonable if we allocate the same number of requests to servers with poor performance. Therefore, at this time, we need to weight the polling process to regulate the load of each server. After weighting, the proportion of requests that each server can get is close to or equal to their weight ratio. For example, the weight ratio of servers A, B and C is 5:2:1. Then, among the eight requests, server A will receive five of them, server B will receive two of them, and server C will receive one of them.

LeastActiveLoadBalance

-

Weighted minimum active load balancing leadactiveloadbalance. The smaller the number of active calls, it indicates that the service provider is more efficient and can process more requests per unit time. At this point, priority should be given to assigning the request to the service provider

-

Each service provider corresponds to an active number active. Initially, the active number of all service providers is 0. Each time a request is received, the active number is increased by 1. After the request is completed, the active number is reduced by 1. After the service runs for a period of time, the service provider with good performance processes the request faster, so the active number decreases faster. At this time, such service provider can give priority to obtaining new service requests, which is the basic idea of the minimum active number load balancing algorithm. In addition to the minimum active number, leadactiveloadbalance also introduces weight values in its implementation

-

Implementation logic

- Traverse the invokers list to find the Invoker with the smallest number of active

- If multiple invokers have the same minimum active number, record the subscripts of these invokers in the invokers set, accumulate their weights, and compare whether their weight values are equal

- If only one Invoker has the minimum number of active, you can directly return the Invoker at this time

- If there are multiple invokers with the minimum active number and their weights are not equal, the processing method is the same as RandomLoadBalance

- If there are multiple invokers with the minimum active number, but their weights are equal, one can be returned randomly

-

Implementation code

@Override protected <T> Invoker<T> doSelect(List<Invoker<T>> invokers, URL url, Invocation invocation) { int length = invokers.size(); // Number of invokers //Minimum active number int leastActive = -1; // The least active value of all invokers //Number of service provider providers (hereinafter referred to as Invoker) with the same "minimum number of activities" int leastCount = 0; // The number of invokers having the same least active value (leastActive) //leastIndexs is used to record the subscript information of invokers with the same "minimum active number" in the invokers list int[] leastIndexs = new int[length]; // The index of invokers having the same least active value (leastActive) //Record the weight sum of the service provider whose number of calls is equal to the minimum number of calls int totalWeight = 0; // The sum of with warmup weights // The Invoker weight value of the first minimum active number is used to compare with the weights of other invokers with the same minimum active number, // To check whether the weights of all invokers with the same minimum number of activities are equal int firstWeight = 0; // Initial value, used for comparision //Is the weight of all service providers whose call times are equal to the minimum call times the same boolean sameWeight = true; // Every invoker has the same weight value? // Traverse the invokers list and filter out all service providers whose call times are equal to the minimum call times for (int i = 0; i < length; i++) { Invoker<T> invoker = invokers.get(i); // Get the active number corresponding to the Invoker int active = RpcStatus.getStatus(invoker.getUrl(), invocation.getMethodName()).getActive(); // Active number int afterWarmup = getWeight(invoker, invocation); // Weight // Find a smaller active number and start over if (leastActive == -1 || active < leastActive) { // Restart, when find a invoker having smaller least active value. // Update the minimum active number leastActive with the current active number active leastActive = active; // Record the current least active value // Update leastCount to 1 leastCount = 1; // Reset leastCount, count again based on current leastCount // Record the current subscript value in leastIndexs leastIndexs[0] = i; // Reset totalWeight = afterWarmup; // Reset firstWeight = afterWarmup; // Record the weight the first invoker sameWeight = true; // Reset, every invoker has the same weight value? } else if (active == leastActive) { // If current invoker's active value equals with leaseActive, then accumulating. // The active number of the current Invoker is the same as the minimum active number leastActive leastIndexs[leastCount++] = i; // Record index number of this invoker // Cumulative weight totalWeight += afterWarmup; // Add this invoker's weight to totalWeight. // If every invoker has the same weight? // Check whether the weight of the current Invoker is equal to the firstWeight, // If not, set sameWeight to false if (sameWeight && i > 0 && afterWarmup != firstWeight) { sameWeight = false; } } } // assert(leastCount > 0) // When only one Invoker has the minimum active number, you can directly return the Invoker if (leastCount == 1) { // If we got exactly one invoker having the least active value, return this invoker directly. return invokers.get(leastIndexs[0]); } // There are multiple invokers with the same minimum active number, but their weights are different if (!sameWeight && totalWeight > 0) { // Randomly generate a number between [0, totalweight] // If (not every invoker has the same weight & at least one invoker's weight>0), select randomly based on totalWeight. int offsetWeight = random.nextInt(totalWeight) + 1; // Return a invoker based on the random value. // Loop lets the random number subtract the weight value of the Invoker with the minimum active number, // When offset is less than or equal to 0, the corresponding Invoker is returned for (int i = 0; i < leastCount; i++) { int leastIndex = leastIndexs[i]; // Get the weight value and let the random number subtract the weight value- ⭐ ️ offsetWeight -= getWeight(invokers.get(leastIndex), invocation); if (offsetWeight <= 0) return invokers.get(leastIndex); } } // If all invokers have the same weight value or totalWeight=0, return evenly. // If the weight is the same or the weight is 0, an Invoker is returned randomly return invokers.get(leastIndexs[random.nextInt(leastCount)]); }

ConsistentHashLoadBalance

-

By default, only the first parameter hash can be modified through configuration

<dubbo:parameter key="hash.arguments" value="0.1" />

-

160 virtual nodes are used by default, which can be modified through configuration

<dubbo:parameter key="hash.nodes" value="320">

-

Implement ConsistentHashLoadBalance#doSelect

@Override protected <T> Invoker<T> doSelect(List<Invoker<T>> invokers, URL url, Invocation invocation) { String methodName = RpcUtils.getMethodName(invocation); String key = invokers.get(0).getUrl().getServiceKey() + "." + methodName; // Get the original hashcode of invokers int identityHashCode = System.identityHashCode(invokers); ConsistentHashSelector<T> selector = (ConsistentHashSelector<T>) selectors.get(key); // If invokers is a new List object, it means that the number of service providers has changed, which may be added or reduced. // The selector identityHashCode != Identityhashcode condition holds if (selector == null || selector.identityHashCode != identityHashCode) { selectors.put(key, new ConsistentHashSelector<T>(invokers, methodName, identityHashCode)); selector = (ConsistentHashSelector<T>) selectors.get(key); } return selector.select(invocation); } -

ConsistentHashSelector

private static final class ConsistentHashSelector<T> { // Using TreeMap to store Invoker virtual nodes private final TreeMap<Long, Invoker<T>> virtualInvokers; private final int replicaNumber; private final int identityHashCode; private final int[] argumentIndex; ConsistentHashSelector(List<Invoker<T>> invokers, String methodName, int identityHashCode) { this.virtualInvokers = new TreeMap<Long, Invoker<T>>(); this.identityHashCode = identityHashCode; URL url = invokers.get(0).getUrl(); // Get the number of virtual nodes. The default is 160 this.replicaNumber = url.getMethodParameter(methodName, "hash.nodes", 160); // Get the parameter subscript value involved in hash calculation. By default, hash operation is performed on the first parameter String[] index = Constants.COMMA_SPLIT_PATTERN.split(url.getMethodParameter(methodName, "hash.arguments", "0")); argumentIndex = new int[index.length]; for (int i = 0; i < index.length; i++) { argumentIndex[i] = Integer.parseInt(index[i]); } for (Invoker<T> invoker : invokers) { String address = invoker.getUrl().getAddress(); for (int i = 0; i < replicaNumber / 4; i++) { // Perform md5 operation on address + i to obtain a 16 byte array byte[] digest = md5(address + i); // Four hash operations are performed on some bytes of digest to obtain four different long positive integers for (int h = 0; h < 4; h++) { // When h = 0, take 4 bytes with subscript 0 ~ 3 in digest for bit operation // When h = 1, take 4 bytes with subscripts 4 ~ 7 in digest for bit operation // The process is the same as above when h = 2 and H = 3 long m = hash(digest, h); // Store the mapping relationship from hash to invoker in virtualInvokers, // Virtual invokers needs to provide efficient query operations, so TreeMap is selected as the storage structure virtualInvokers.put(m, invoker); } } } } public Invoker<T> select(Invocation invocation) { // Change parameter to key String key = toKey(invocation.getArguments()); // md5 operation on parameter key byte[] digest = md5(key); // Take the first four bytes of the digest array for hash operation, and then pass the hash value to the selectForKey method, // Find the right Invoker return selectForKey(hash(digest, 0)); } private String toKey(Object[] args) { StringBuilder buf = new StringBuilder(); for (int i : argumentIndex) { if (i >= 0 && i < args.length) { buf.append(args[i]); } } return buf.toString(); } private Invoker<T> selectForKey(long hash) { // Find the Invoker whose first node value is greater than or equal to the current hash in the TreeMap Map.Entry<Long, Invoker<T>> entry = virtualInvokers.tailMap(hash, true).firstEntry(); // If the hash is greater than the largest position of the Invoker on the ring, then entry = null, // The header node of TreeMap needs to be assigned to entry if (entry == null) { entry = virtualInvokers.firstEntry(); } // Return to Invoker return entry.getValue(); } ...ellipsis... }

Custom load balancing

-

Implement LoadBalance interface

public class MyLoadBalance implements LoadBalance { @Override public <T> Invoker<T> select(List<Invoker<T>> invokers, URL url, Invocation invocation) throws RpcException { return invokers.get(0); } } -

Create / SRC / main / resources / meta-inf / Dubbo / com alibaba. dubbo. rpc. cluster. Loadbalance file

myLoadBalance=com.alibaba.dubbo.spi.MyLoadBalance

-

to configure

<dubbo:reference id="demoService" check="false" interface="com.alibaba.dubbo.demo.DemoService"loadbalance="myLoadBalance"/>