First, the network model is introduced; then the experimental results on SL1 and SP5 are presented.

1 Introduction to the shallow autoencoder used

1.1 Shallow autoencoder model (AE)

The encoder uses three convolution layers with stride 2, and its number of feature channels changes as 3-64-128-256. The decoder uses transposed convolution (deconvolution) and is symmetrical to the encoder, with feature channels changing as 256-128-64-3. The L2 loss function is used. The total number of parameters is 1.32M, the optimal number of training iterations is 8000, the learning rate is 0.0001, and training a single pattern takes 18 minutes and 32 seconds. Testing a single image takes 0.02 s (3090Ti). The SSIM residual used here shortens the test time; with the L1 residual, the test time is about 0.05 s (3090Ti). GPU memory usage is 1.7G.
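As a quick sanity check on the 1.32M figure, the parameter count and the feature-map sizes can be worked out by hand from the layer description. The sketch below assumes 4x4 kernels with padding 1 and no bias (matching the model code in this section), batch normalization after every layer except the last, and a 256x256 input. The helper names are illustrative only.

```python
def conv_params(c_in, c_out, k=4):
    """Weight count of a k x k (transposed) convolution without bias."""
    return c_in * c_out * k * k


def bn_params(c):
    """Learnable scale and shift of a batch-normalization layer."""
    return 2 * c


def conv_out(size, k=4, s=2, p=1):
    """Spatial output size of a strided convolution."""
    return (size + 2 * p - k) // s + 1


def deconv_out(size, k=4, s=2, p=1):
    """Spatial output size of a transposed convolution (no output padding)."""
    return (size - 1) * s - 2 * p + k


enc_channels = [3, 64, 128, 256]
dec_channels = [256, 128, 64, 3]

total = 0
# Encoder: every convolution is followed by batch normalization.
for c_in, c_out in zip(enc_channels[:-1], enc_channels[1:]):
    total += conv_params(c_in, c_out) + bn_params(c_out)
# Decoder: batch normalization after every layer except the last one.
for i, (c_in, c_out) in enumerate(zip(dec_channels[:-1], dec_channels[1:])):
    total += conv_params(c_in, c_out)
    if i < len(dec_channels) - 2:
        total += bn_params(c_out)

# Track the spatial size through three downsampling and three upsampling layers.
size = 256
sizes = [size]
for _ in range(3):
    size = conv_out(size)
    sizes.append(size)
for _ in range(3):
    size = deconv_out(size)
    sizes.append(size)

print(total)  # 1318144, i.e. about 1.32M
print(sizes)  # [256, 128, 64, 32, 64, 128, 256]
```

Under these assumptions the count comes to 1,318,144 parameters, which agrees with the reported 1.32M.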

The code of the AE model is as follows:

    import torch.nn as nn


    class SDAE_group(nn.Module):
        """Encoder-Decoder architecture for MemAE."""

        def __init__(self, nc=3):
            super(SDAE_group, self).__init__()
            self.nc = nc
            # Encoder: three stride-2 convolutions, channels nc-64-128-256.
            # Shape comments assume an input of size (B, nc, 256, 256).
            self.encoder = nn.Sequential(
                nn.Conv2d(nc, 64, 4, 2, 1, bias=False),  # B, 64, 128, 128
                nn.BatchNorm2d(64),
                nn.ReLU(True),
                nn.Conv2d(64, 128, 4, 2, 1, bias=False),  # B, 128, 64, 64
                nn.BatchNorm2d(128),
                nn.ReLU(True),
                nn.Conv2d(128, 256, 4, 2, 1, bias=False),  # B, 256, 32, 32
                nn.BatchNorm2d(256),
                nn.ReLU(True),
            )
            # Decoder: mirrors the encoder with transposed convolutions.
            self.decoder = nn.Sequential(
                # Added
                nn.ConvTranspose2d(256, 128, 4, 2, 1, bias=False),  # B, 128, 64, 64
                nn.BatchNorm2d(128),
                nn.ReLU(True),
                nn.ConvTranspose2d(128, 64, 4, 2, 1, bias=False),  # B, 64, 128, 128
                nn.BatchNorm2d(64),
                nn.ReLU(True),
                # Output channels follow nc (3 by default) so input and output match.
                nn.ConvTranspose2d(64, nc, 4, 2, 1, bias=False),  # B, nc, 256, 256
            )

        def forward(self, x):
            z = self._encode(x)
            x_recon = self._decode(z)
            return x_recon

        def _encode(self, x):
            return self.encoder(x)

        def _decode(self, z):
            return self.decoder(z)

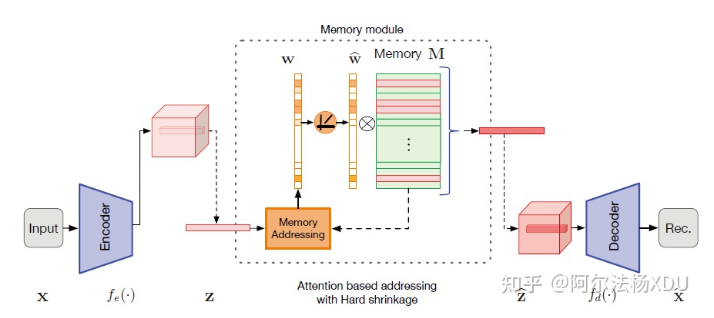

1.2 MemAE module (no increase in the number of parameters)

The model in the figure is divided into three parts: encoding, the MemAE module, and decoding. MemAE records only normal patterns. After the MemAE module is added to the AE, the parameter count is still 1.32M. The basic idea is that the encoder produces the latent code z, z is used as a query to search the memory and obtain ẑ, and ẑ is then decoded. The entries of the memory M are learnable and are updated during training.
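The memory lookup described above can be sketched as follows. This is a minimal illustration, not the code used in these experiments. It assumes the read is a softmax over the cosine similarities between z and each memory item, followed by a weighted sum of the items, and it omits any sparsification of the weights. The function names and the toy two-dimensional memory are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)


def memory_read(z, memory):
    """Return (z_hat, weights) for query z and a list of memory items."""
    sims = [cosine(z, m) for m in memory]
    # Softmax over the similarities (shifted by the maximum for stability).
    peak = max(sims)
    exps = [math.exp(s - peak) for s in sims]
    norm = sum(exps)
    weights = [e / norm for e in exps]
    # z_hat is a convex combination of the memory items.
    z_hat = [
        sum(w * m[d] for w, m in zip(weights, memory))
        for d in range(len(z))
    ]
    return z_hat, weights


# Toy memory with N = 3 items of dimension C = 2 (made-up values).
memory = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
z = [0.9, 0.1]
z_hat, weights = memory_read(z, memory)
print(weights)
print(z_hat)
```

Because the retrieved code (z_hat here) is built only from memory items, and the memory is meant to hold normal patterns, the decoder receives a code that is pulled toward normal appearance even when the input contains a defect.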

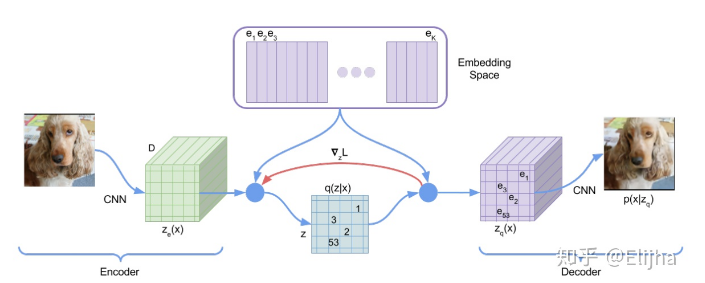

I think it is similar to the codebook (embedding space) of VQVAE: the dimension of the embedding space (N*C) is basically the same, but the mathematical formulation differs. VQVAE uses a nearest-neighbor search to find the closest entry in the embedding space, whereas MemAE uses cosine similarity.
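To make the contrast concrete, a hard nearest-neighbor lookup of the kind VQVAE uses can be sketched as follows. The codebook values and the function name are made up for illustration, and squared Euclidean distance is assumed as the nearest-neighbor metric.

```python
def nearest_code(z, codebook):
    """Return (index, entry) of the codebook entry closest to z."""

    def sq_dist(a, b):
        # Squared Euclidean distance between two vectors.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    idx = min(range(len(codebook)), key=lambda i: sq_dist(z, codebook[i]))
    return idx, codebook[idx]


# Toy codebook with N = 3 entries of dimension C = 2 (made-up values).
codebook = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
idx, z_q = nearest_code([0.9, 0.1], codebook)
print(idx, z_q)  # 0 [1.0, 0.0]
```

The lookup returns exactly one codebook entry, whereas addressing by cosine similarity, as commonly described for MemAE, weights several memory items and mixes them.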

VQVAE model

1.3 Experimental results

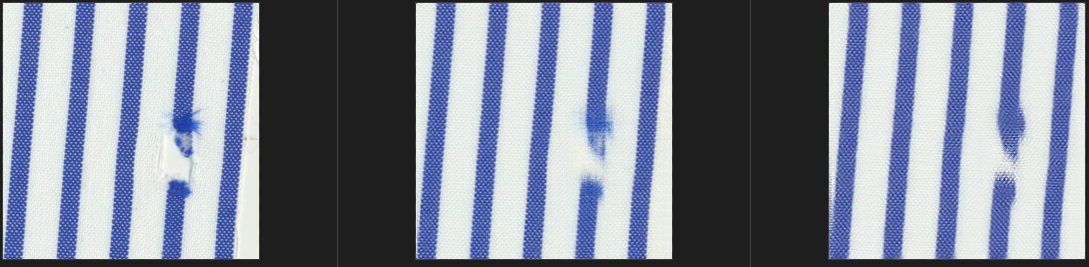

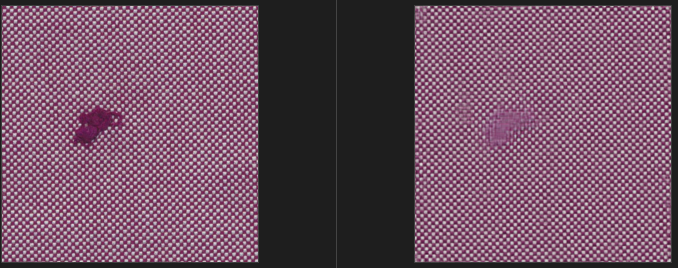

Two representative patterns, SL1 and SP5, are tested, using challenging images with large defects.

AE cannot repair the defects; it only lightens them. After MemAE is added, defect repair improves markedly.
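Why a lightened but unrepaired defect matters for detection can be seen from the residual between the input and its reconstruction. The sketch below is illustrative only: the pixel values, the 0.3 threshold, and the function names are assumptions, and it uses a plain per-pixel L1 residual rather than the SSIM residual.

```python
def l1_residual(image, recon):
    """Per-pixel absolute difference between an image and its reconstruction."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(image, recon)]


def defect_mask(residual, threshold):
    """Mark pixels whose residual exceeds the threshold as defective."""
    return [[r > threshold for r in row] for row in residual]


# 3x3 toy image: uniform background 0.5 with a dark defect in the center.
image = [[0.5, 0.5, 0.5],
         [0.5, 0.0, 0.5],
         [0.5, 0.5, 0.5]]

# Reconstruction that only lightens the defect (center moves from 0.0 to 0.2).
recon_lightened = [[0.5, 0.5, 0.5],
                   [0.5, 0.2, 0.5],
                   [0.5, 0.5, 0.5]]

# Reconstruction that repairs the defect (center restored to the background).
recon_repaired = [[0.5, 0.5, 0.5],
                  [0.5, 0.5, 0.5],
                  [0.5, 0.5, 0.5]]

mask_lightened = defect_mask(l1_residual(image, recon_lightened), 0.3)
mask_repaired = defect_mask(l1_residual(image, recon_repaired), 0.3)

print(any(any(row) for row in mask_lightened))  # False: residual 0.2 is below the threshold
print(mask_repaired[1][1])                      # True: residual 0.5 is above the threshold
```

In this toy case the repaired reconstruction leaves a residual of 0.5 at the defect, while the lightened one leaves only 0.2, so the better the repair, the stronger the detection signal.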

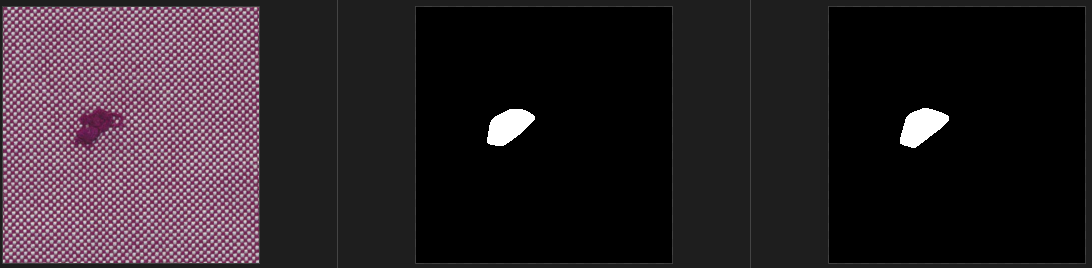

1.3.1 Removal of defects by AE and MemAE on the SL1 data set

Original image (left), AE reconstruction and detection results (middle), MemAE reconstruction and detection results (right)

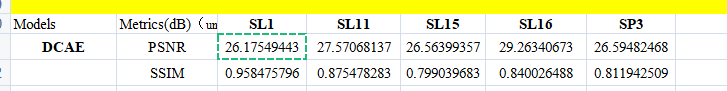

- Quantitative evaluation metrics of AE (reconstruction stage)

PSNR: 31.0736

SSIM: 0.9886

- Quantitative evaluation metrics of MemAE (reconstruction stage)

PSNR: 26.3052

SSIM: 0.9688

- In addition, compare with the metrics of DCAE

- Conclusion

PSNR and SSIM are calculated on the training data set. Higher values are usually taken to indicate stronger reconstruction and repair ability in the detection stage. The values for AE are very high, yet it cannot repair the defects. After MemAE is added, PSNR and SSIM become lower, but the defects are repaired relatively well. DCAE uses pooling in its reconstruction, so the surrounding non-defective areas are also blurred (compared with strided convolution).
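For reference, PSNR follows directly from the mean squared error between an image and its reconstruction. The following is a minimal sketch, assuming pixel values scaled to [0, 1] (so the peak value is 1.0). The scaling and implementation behind the numbers above are not stated, so the function and toy values here are illustrative only.

```python
import math


def psnr(image, recon, peak=1.0):
    """Peak signal-to-noise ratio in dB between two images (lists of rows)."""
    flat_a = [p for row in image for p in row]
    flat_b = [p for row in recon for p in row]
    mse = sum((a - b) ** 2 for a, b in zip(flat_a, flat_b)) / len(flat_a)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# 2x2 toy example with two pixels off by 0.1 (made-up values).
image = [[0.5, 0.5], [0.5, 0.5]]
recon = [[0.6, 0.5], [0.5, 0.4]]
value = psnr(image, recon)
print(round(value, 2))  # 23.01
```

Since PSNR measured on defect-free training images rewards copying the input faithfully, a high score does not by itself show that defects will be removed at test time, which is consistent with the AE result above.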

The results of DCAE reconstruction are attached:

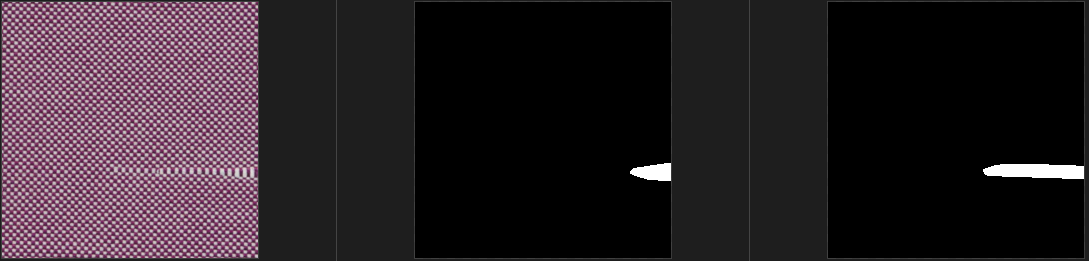

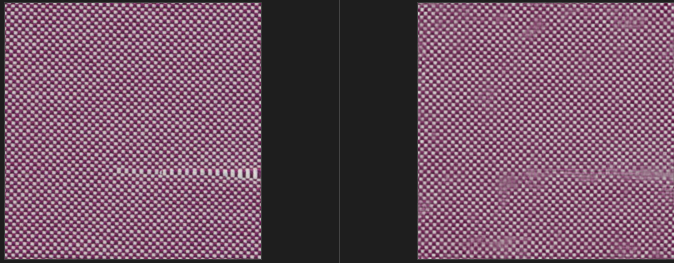

1.3.2 Removal of defects by AE and MemAE on the SP5 data set

Original image (left), AE reconstruction result (middle), MemAE reconstruction result (right)