Hello, I'm Liang Xu.

wget is a free utility for downloading files from the network. It retrieves data from the Internet and either saves it to a local file or prints it to your terminal.

Browsers such as Firefox and Chrome do essentially the same thing when they fetch a page, using their own built-in download code rather than wget. The difference is that a browser renders what it retrieves, whereas wget saves it.

This article introduces eight common uses of the wget command, and I hope you find it helpful.

1. Download a file with the wget command

You can use the wget command to download the file at a given link. By default, the download is saved under the same name in the current working directory.

$ wget http://www.lxlinux.net
--2021-09-20 17:23:47-- http://www.lxlinux.net/
Resolving www.lxlinux.net... 93.184.216.34, 2606:2800:220:1:248:1893:25c8:1946
Connecting to www.lxlinux.net|93.184.216.34|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 1256 (1.2K) [text/html]
Saving to: 'index.html'

If you don't want to save the download locally and only want to print it to standard output (stdout), use the --output-document option followed by a dash (-).

$ wget http://www.lxlinux.net --output-document - | head -n4
<!doctype html>
<html>
<head>
<title>Example Domain</title>

If you want to save the download under a different name, use the --output-document option (or its short form, -O) followed by the file name:

$ wget http://www.lxlinux.net --output-document newfile.html
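Because --output-document - sends the page to stdout, the download can be piped straight into other tools. The following is a minimal sketch of that idea, not a definitive implementation: page_title is a hypothetical helper name (not part of wget) that prints the text of a page's title element. It assumes the title opens and closes on a single line, and it adds --quiet so that wget's own log stays out of the way.

```shell
# page_title is a hypothetical helper name, not part of wget.
# Assumption: the <title> element opens and closes on the same line.
page_title() {
    # --quiet suppresses wget's log; --output-document - writes the page to stdout
    wget --quiet --output-document - "$1" |
        sed -n 's/.*<title>\(.*\)<\/title>.*/\1/p'
}

# Usage (needs network access):
# page_title http://www.lxlinux.net
```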

2. Resume an interrupted download

If the file you want is very large, a network problem may interrupt the download before it finishes. Starting over from scratch each time could take a very long time.

In this case, use the --continue option (or -c) to resume the download. If the transfer is interrupted for any reason, wget picks up from where the partial file ends instead of downloading everything again.

$ wget --continue https://www.lxlinux.net/linux-distro.iso
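If the connection is unreliable, the command can be wrapped in a small retry loop. The sketch below is one possible way to do that, under stated assumptions: download_with_resume and the RETRY_DELAY variable are hypothetical names introduced here, and the loop simply relies on wget returning a non-zero exit status when a download fails.

```shell
# download_with_resume and RETRY_DELAY are hypothetical names, not part of wget.
download_with_resume() {
    url=$1
    max_attempts=${2:-5}
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # --continue resumes from the partial file left by an earlier attempt
        if wget --continue "$url"; then
            return 0
        fi
        echo "attempt $attempt of $max_attempts failed" >&2
        attempt=$((attempt + 1))
        # Pause after each failed attempt (5 seconds unless RETRY_DELAY is set)
        sleep "${RETRY_DELAY:-5}"
    done
    return 1
}

# Usage (needs network access):
# download_with_resume https://www.lxlinux.net/linux-distro.iso 10
```

The second argument caps the number of attempts, so the loop cannot run forever if the server is permanently unavailable.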

3. Download a series of files

If you need many small files rather than one large file, wget can handle that just as easily.

You do need a little bash syntax, though. The file names usually follow a pattern, such as file_1.txt, file_2.txt, file_3.txt, and so on, in which case bash brace expansion generates one URL per file:

$ wget http://www.lxlinux.net/file_{1..4}.txt

4. Mirror the entire site

If you want to download a whole website, including its directory structure, use the --mirror option.

This option is equivalent to --recursive --level inf --timestamping --no-remove-listing. The recursion depth is unlimited, so wget downloads everything it can reach on the specified domain.

If you are using wget to archive a site, the options --no-cookies --page-requisites --convert-links are also useful for making sure that each page is fresh and complete.
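Put together, the options named in this section might be combined as in the sketch below. archive_site is a hypothetical helper name used only for illustration; the flags themselves are the ones discussed above.

```shell
# archive_site is a hypothetical helper name, not part of wget.
archive_site() {
    # --mirror           recursive download with timestamping and unlimited depth
    # --no-cookies       do not use cookies
    # --page-requisites  also fetch the images, CSS, and other files each page needs
    # --convert-links    rewrite links so the local copy can be browsed offline
    wget --mirror --no-cookies --page-requisites --convert-links "$1"
}

# Usage (needs network access and may download a lot of data):
# archive_site http://www.lxlinux.net
```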

5. Modify the HTTP request header

Anyone who has studied network communication knows that an HTTP message carries many elements, and the headers are an integral part of the initial request.

When you browse a web page, your browser sends HTTP request headers to the server. What exactly gets sent? With the --debug option you can see the header information that wget sends with each request:

$ wget --debug www.lxlinux.net
---request begin---
GET / HTTP/1.1
User-Agent: Wget/1.19.5 (linux-gnu)
Accept: */*
Accept-Encoding: identity
Host: www.lxlinux.net
Connection: Keep-Alive
---request end---

You can use the --header option to modify the request header. Why would you do that? There are many use cases. For example, testing sometimes requires simulating a request from a specific browser.

For example, if you want to simulate the request sent by the Edge browser, you can do the following:

$ wget --debug --header="User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36 Edg/91.0.864.59" http://www.lxlinux.net

You can also pretend to be a specific mobile device, such as an iPhone:

$ wget --debug \
--header="User-Agent: Mozilla/5.0 (iPhone; CPU iPhone OS 13_5_1 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.1.1 Mobile/15E148 Safari/604.1" \
http://www.lxlinux.net
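Typing these long strings by hand is error-prone, so they can be kept in a small wrapper. The sketch below reuses the two User-Agent strings from this section. fetch_as and the preset names edge and iphone are hypothetical, invented here purely for illustration.

```shell
# fetch_as and the preset names "edge" and "iphone" are hypothetical.
fetch_as() {
    case $1 in
        edge)
            ua='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36 Edg/91.0.864.59' ;;
        iphone)
            ua='Mozilla/5.0 (iPhone; CPU iPhone OS 13_5_1 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.1.1 Mobile/15E148 Safari/604.1' ;;
        *)
            echo "unknown preset: $1" >&2
            return 2 ;;
    esac
    # Quoting the whole option keeps the header, spaces included, in one argument
    wget --header="User-Agent: $ua" "$2"
}

# Usage (needs network access):
# fetch_as iphone http://www.lxlinux.net
```

Note that there is no space on either side of the equals sign in --header="...", because the shell would otherwise split the option into separate arguments.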

6. View the response header

Just as the browser sends header information with its request, the server includes header information in its response. You can use the --debug option again to view the response header:

$ wget --debug www.lxlinux.net
[...]
---response begin---
HTTP/1.1 200 OK
Accept-Ranges: bytes
Age: 188102
Cache-Control: max-age=604800
Content-Type: text/html; charset=UTF-8
Etag: "3147526947"
Server: ECS (sab/574F)
Vary: Accept-Encoding
X-Cache: HIT
Content-Length: 1256
---response end---
200 OK
Registered socket 3 for persistent reuse.
URI content encoding = 'UTF-8'
Length: 1256 (1.2K) [text/html]
Saving to: 'index.html'
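The debug log is long, so it can help to cut out just the response block. The sketch below is one way to do that, assuming the block is delimited by the ---response begin--- and ---response end--- marker lines shown above. show_response_headers is a hypothetical helper name. It relies on two facts about wget: the log goes to stderr, and --debug only works when wget was compiled with debug support.

```shell
# show_response_headers is a hypothetical helper name, not part of wget.
show_response_headers() {
    # wget writes its log to stderr, so 2>&1 merges it into the pipe;
    # --output-document /dev/null throws the downloaded page away.
    wget --debug --output-document /dev/null "$1" 2>&1 |
        sed -n '/---response begin---/,/---response end---/p'
}

# Usage (needs network access):
# show_response_headers www.lxlinux.net
```

If only the headers are of interest, wget's --server-response option prints them without the rest of the debug output.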

7. Handle a 301 response

Anyone familiar with network protocols knows that a 200 response code means everything went as expected. A 301 response means that the URL has moved permanently to a different location.

By default, wget follows redirects (up to 20 of them), which is usually what you want when downloading files. To control what wget does when it encounters a 301 response, use the --max-redirect option.

If you don't want wget to follow redirects at all, set --max-redirect to 0.

$ wget --max-redirect 0 http://www.lxlinux.net
--2021-09-21 11:01:35-- http://www.lxlinux.net/
Resolving www.lxlinux.net... 192.0.43.8, 2001:500:88:200::8
Connecting to www.lxlinux.net|192.0.43.8|:80... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://www.www.lxlinux.net/ [following]
0 redirections exceeded.

Alternatively, you can set it to another number to control the number of redirects that wget follows.

8. Expand short links

Sometimes we need to turn a long link into a short one. For example, a text box may limit the number of characters you can enter, and a short link greatly reduces the character count.

To see where a short link really leads, you can use a third-party service, or you can expand it directly with the wget command. The --max-redirect option does the job again:

$ wget --max-redirect 0 "https://bit.ly/2yDyS4T"
--2021-09-21 11:32:04-- https://bit.ly/2yDyS4T
Resolving bit.ly... 67.199.248.10, 67.199.248.11
Connecting to bit.ly|67.199.248.10|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: http://www.lxlinux.net/ [following]
0 redirections exceeded.

On the second-to-last line of the output, the Location field shows the full URL that the short link expands to.
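To print only the expanded URL, the Location line can be filtered out of the log. The sketch below assumes the log format shown in the output above, that is, a line of the form "Location: URL [following]". expand_short_link is a hypothetical helper name. LC_ALL=C is set as a precaution so that the log is in English regardless of the system locale.

```shell
# expand_short_link is a hypothetical helper name, not part of wget.
expand_short_link() {
    # --max-redirect 0 makes wget report the redirect target without following it;
    # the log goes to stderr, so 2>&1 merges it into the pipe for sed.
    LC_ALL=C wget --max-redirect 0 "$1" 2>&1 |
        sed -n 's/^Location: \(.*\) \[following\]$/\1/p'
}

# Usage (needs network access):
# expand_short_link "https://bit.ly/2yDyS4T"
```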

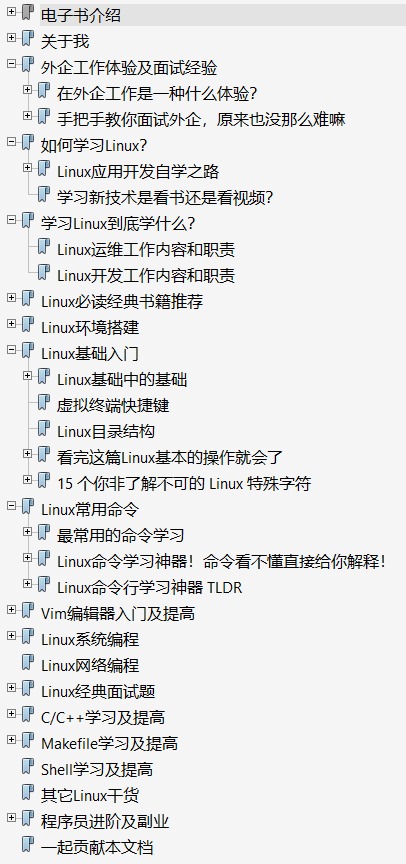

Finally, many readers have recently asked me for a Linux learning roadmap, so I spent a month of late nights in my spare time compiling an e-book based on my own experience. Whether you are preparing for interviews or working on self-improvement, I believe it will help you!

It is free for everyone. All I ask is that you give this article a like!

E-book | Learning roadmap for Linux development

I also hope some of you will join me in making this e-book even better!

Did you learn something? If so, I hope you will like, share, and bookmark this article so that more people can see it.
