General introduction

This project is based on a GitHub project and introduces how to connect a model to TIPC and how to add Serving support. For more information about the original project, see its README.md. You can also refer to the official TIPC and Serving documentation to connect your own model to TIPC and add Serving support.

TIPC basic chain

♣ Brief introduction

What we want is a shell script that reads txt files and uses them to run training (train), evaluation (eval), dynamic-to-static conversion, and inference (infer). Ideally, users can complete one or more of these steps by running a single shell script.

♠ Specific process

Because we want to run training, evaluation, dynamic-to-static conversion, and inference, we first need a Python script for each step. These are usually train.py, eval.py, export_model.py, and infer.py. The first two are normally written together with the algorithm, so the following sections introduce the last two.

Converting the model from dynamic to static graph

This step converts the model from a dynamic graph to a static graph for later inference and deployment. For the detailed code, see around line 531 of ppdet/engine/trainer.py and modify it along the following lines:

```python
import os

import paddle
from paddle.static import InputSpec


def export(model, model_name, output_dir):
    # Switch the model to eval mode
    model.eval()

    # Directory where the static graph model is saved
    save_dir = os.path.join(output_dir, model_name)
    if not os.path.exists(save_dir):
        os.makedirs(save_dir)

    # Use [3, -1, -1] as the image shape so that H and W stay dynamic
    image_shape = [3, -1, -1]

    # Specs for all inputs the model needs
    input_spec = [{
        "image": InputSpec(
            shape=[None] + image_shape, name='image'),
        "im_shape": InputSpec(
            shape=[None, 2], name='im_shape'),
        "scale_factor": InputSpec(
            shape=[None, 2], name='scale_factor')
    }]

    # Use the `model` argument (there is no `self` outside a method)
    static_model = paddle.jit.to_static(model, input_spec=input_spec)

    # NOTE: dy2st does not prune the program, but jit.save prunes the program
    # input spec, so prune the input spec here and save with the pruned spec
    pruned_input_spec = _prune_input_spec(
        input_spec,
        static_model.forward.main_program,
        static_model.forward.outputs
    )

    # Save the model
    paddle.jit.save(
        static_model,
        os.path.join(save_dir, 'model'),
        input_spec=pruned_input_spec
    )


def _prune_input_spec(input_spec, program, targets):
    # Try to prune the static program to figure out the pruned input spec,
    # so the following operations are performed in static mode
    paddle.enable_static()
    pruned_input_spec = [{}]
    program = program.clone()
    program = program._prune(targets=targets)
    global_block = program.global_block()
    for name, spec in input_spec[0].items():
        try:
            # Keep the spec only if the input still exists after pruning
            v = global_block.var(name)
            pruned_input_spec[0][name] = spec
        except Exception:
            pass
    paddle.disable_static()
    return pruned_input_spec
```

After the code is written, run tools/export_model.py. The static graph model is saved to output_inference/retinanet_r50_fpn_1x_coco (the exact location depends on your own code).

```
# Install required dependencies
!pip install -r requirements.txt

!python tools/export_model.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o weights=/home/aistudio/data/data104154/best_model.pdparams
```
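You can check that the export succeeded before moving on. The sketch below assumes that `paddle.jit.save(..., os.path.join(save_dir, 'model'))` wrote `model.pdmodel` and `model.pdiparams`, the two files that `load_predictor` reads later. `check_exported_model` is a hypothetical helper and is not part of the project.

```python
from pathlib import Path


def check_exported_model(model_dir):
    """Return the expected inference files that are missing from model_dir."""
    # Assumption: these two files are what load_predictor() opens later
    expected = ["model.pdmodel", "model.pdiparams"]
    root = Path(model_dir)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    missing = check_exported_model("output_inference/retinanet_r50_fpn_1x_coco")
    print("missing files:", missing or "none")
```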

Model inference

Here, argparse can receive the parameters passed on the command line. For this part, and for more on visualization, video inference, and writing preprocessing operators, refer to deploy/python/infer.py and deploy/python/preprocess.py.

```python
import os
import math
from functools import reduce  # used in predict()

import yaml
import numpy as np
import paddle
from paddle.inference import Config
from paddle.inference import create_predictor

# RetinaNet-Based-on-PPdet-main/deploy/python/utils.py
# Import argument parsing, a timing class, and a memory-usage helper
from utils import argsparser, Timer, get_current_memory_mb
# Assumed to come from deploy/python/preprocess.py: the preprocess() helper
# and the operator classes named in the config
from preprocess import preprocess, Resize, NormalizeImage, Permute, PadStride


class Detector(object):
    def __init__(self,
                 pred_config,
                 model_dir,
                 device='CPU',
                 run_mode='fluid',
                 batch_size=1,
                 trt_min_shape=1,
                 trt_max_shape=1280,
                 trt_opt_shape=640,
                 trt_calib_mode=False,
                 cpu_threads=1,
                 enable_mkldnn=False):
        self.pred_config = pred_config
        # Initialize the predictor: mainly CPU/GPU, MKLDNN/TensorRT and related settings
        self.predictor, self.config = load_predictor(
            model_dir,
            run_mode=run_mode,
            batch_size=batch_size,
            min_subgraph_size=self.pred_config.min_subgraph_size,
            device=device,
            use_dynamic_shape=self.pred_config.use_dynamic_shape,
            trt_min_shape=trt_min_shape,
            trt_max_shape=trt_max_shape,
            trt_opt_shape=trt_opt_shape,
            trt_calib_mode=trt_calib_mode,
            cpu_threads=cpu_threads,
            enable_mkldnn=enable_mkldnn)
        # Used to record time and memory usage
        self.det_times = Timer()
        self.cpu_mem, self.gpu_mem, self.gpu_util = 0, 0, 0

    # Preprocessing
    def preprocess(self, image_list):
        # Build the preprocessing operators from the config.
        # If the preprocessing is fixed, you can also Compose the operators directly, see:
        # https://github.com/littletomatodonkey/AlexNet-Prod/blob/tipc/pipeline/Step5/AlexNet_paddle/deploy/inference_python/infer.py#L48
        preprocess_ops = []
        for op_info in self.pred_config.preprocess_infos:
            new_op_info = op_info.copy()
            op_type = new_op_info.pop('type')
            preprocess_ops.append(eval(op_type)(**new_op_info))

        input_im_lst = []
        input_im_info_lst = []
        for im_path in image_list:
            im, im_info = preprocess(im_path, preprocess_ops)
            input_im_lst.append(im)
            input_im_info_lst.append(im_info)
        # Convert the preprocessed data into the format the model expects
        # (depending on your model, this step may not be needed)
        inputs = create_inputs(input_im_lst, input_im_info_lst)
        return inputs

    # Post-processing
    def postprocess(self, np_boxes, np_masks, inputs, np_boxes_num, threshold=0.5):
        # postprocess output of predictor
        results = {}
        results['boxes'] = np_boxes
        results['boxes_num'] = np_boxes_num
        if np_masks is not None:
            results['masks'] = np_masks
        return results

    def predict(self, image_list, threshold=0.5, warmup=0, repeats=1):
        # Preprocess to obtain the model inputs
        self.det_times.preprocess_time_s.start()
        inputs = self.preprocess(image_list)
        self.det_times.preprocess_time_s.end()
        np_boxes, np_masks = None, None

        # Feed each input tensor by name
        input_names = self.predictor.get_input_names()
        for i in range(len(input_names)):
            input_tensor = self.predictor.get_input_handle(input_names[i])
            input_tensor.copy_from_cpu(inputs[input_names[i]])

        # Warm-up runs (not timed)
        for i in range(warmup):
            self.predictor.run()
            output_names = self.predictor.get_output_names()
            boxes_tensor = self.predictor.get_output_handle(output_names[0])
            np_boxes = boxes_tensor.copy_to_cpu()
            if self.pred_config.mask:
                masks_tensor = self.predictor.get_output_handle(output_names[2])
                np_masks = masks_tensor.copy_to_cpu()

        # Timed runs
        self.det_times.inference_time_s.start()
        for i in range(repeats):
            self.predictor.run()
            output_names = self.predictor.get_output_names()
            boxes_tensor = self.predictor.get_output_handle(output_names[0])
            np_boxes = boxes_tensor.copy_to_cpu()
            boxes_num = self.predictor.get_output_handle(output_names[1])
            np_boxes_num = boxes_num.copy_to_cpu()
            if self.pred_config.mask:
                masks_tensor = self.predictor.get_output_handle(output_names[2])
                np_masks = masks_tensor.copy_to_cpu()
        self.det_times.inference_time_s.end(repeats=repeats)

        self.det_times.postprocess_time_s.start()
        results = []
        # Return results after post-processing
        if reduce(lambda x, y: x * y, np_boxes.shape) < 6:
            print('[WARNING] No object detected.')
            results = {'boxes': np.array([[]]), 'boxes_num': [0]}
        else:
            results = self.postprocess(
                np_boxes, np_masks, inputs, np_boxes_num, threshold=threshold)
        self.det_times.postprocess_time_s.end()
        self.det_times.img_num += len(image_list)
        return results

    def get_timer(self):
        return self.det_times
```
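The `preprocess` method above builds operators by calling `eval` on the `type` field of each config entry. The sketch below shows the same config-to-operator step with an explicit name-to-class registry, which accepts only known operator names. `Resize` and `Permute` here are hypothetical stand-ins, not the real classes from preprocess.py. `OP_REGISTRY` and `build_ops` are illustrative names.

```python
class Resize:
    """Hypothetical stand-in for the Resize operator in preprocess.py."""
    def __init__(self, target_size, keep_ratio=True):
        self.target_size = target_size
        self.keep_ratio = keep_ratio


class Permute:
    """Hypothetical stand-in for the Permute operator in preprocess.py."""
    def __init__(self):
        pass


# Explicit name -> class mapping used instead of eval(op_type)
OP_REGISTRY = {"Resize": Resize, "Permute": Permute}


def build_ops(preprocess_infos):
    ops = []
    for op_info in preprocess_infos:
        kwargs = dict(op_info)          # copy so the original config is not mutated
        op_type = kwargs.pop("type")
        if op_type not in OP_REGISTRY:
            raise KeyError(f"unknown preprocess op: {op_type}")
        ops.append(OP_REGISTRY[op_type](**kwargs))
    return ops
```

To support a new operator, you register it in `OP_REGISTRY` instead of making it reachable by name in the module namespace.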

```python
def load_predictor(model_dir,
                   run_mode='fluid',
                   batch_size=1,
                   device='CPU',
                   min_subgraph_size=3,
                   use_dynamic_shape=False,
                   trt_min_shape=1,
                   trt_max_shape=1280,
                   trt_opt_shape=640,
                   trt_calib_mode=False,
                   cpu_threads=1,
                   enable_mkldnn=False):
    if device != 'GPU' and run_mode != 'fluid':
        raise ValueError(
            "Predict by TensorRT mode: {}, expect device=='GPU', but device == {}"
            .format(run_mode, device))
    # Load the exported model with the Paddle Inference API
    config = Config(
        os.path.join(model_dir, 'model.pdmodel'),
        os.path.join(model_dir, 'model.pdiparams')
    )
    if device == 'GPU':
        # initial GPU memory(M), device ID
        config.enable_use_gpu(200, 0)
        # optimize graph and fuse op
        config.switch_ir_optim(True)
    elif device == 'XPU':
        config.enable_xpu(10 * 1024 * 1024)
    else:
        config.disable_gpu()
        config.set_cpu_math_library_num_threads(cpu_threads)
        if enable_mkldnn:
            try:
                # cache 10 different shapes for mkldnn to avoid memory leak
                config.set_mkldnn_cache_capacity(10)
                config.enable_mkldnn()
            except Exception as e:
                print(
                    "The current environment does not support `mkldnn`, so disable mkldnn."
                )
                pass

    precision_map = {
        'trt_int8': Config.Precision.Int8,
        'trt_fp32': Config.Precision.Float32,
        'trt_fp16': Config.Precision.Half
    }
    if run_mode in precision_map.keys():
        config.enable_tensorrt_engine(
            workspace_size=1 << 10,
            max_batch_size=batch_size,
            min_subgraph_size=min_subgraph_size,
            precision_mode=precision_map[run_mode],
            use_static=False,
            use_calib_mode=trt_calib_mode)

        if use_dynamic_shape:
            min_input_shape = {
                'image': [batch_size, 3, trt_min_shape, trt_min_shape]
            }
            max_input_shape = {
                'image': [batch_size, 3, trt_max_shape, trt_max_shape]
            }
            opt_input_shape = {
                'image': [batch_size, 3, trt_opt_shape, trt_opt_shape]
            }
            config.set_trt_dynamic_shape_info(min_input_shape, max_input_shape,
                                              opt_input_shape)
            print('trt set dynamic shape done!')

    # disable print log when predict
    config.disable_glog_info()
    # enable shared memory
    config.enable_memory_optim()
    # disable feed, fetch OP, needed by zero_copy_run
    config.switch_use_feed_fetch_ops(False)
    predictor = create_predictor(config)
    return predictor, config
```

```python
def create_inputs(imgs, im_info):
    """Build the model input dict from preprocessed images and their info.

    batch_size == 1 and batch_size > 1 are handled separately, because a
    larger batch may need padding. The required fields are collected into
    a dictionary and returned.
    """
    inputs = {}
    im_shape = []
    scale_factor = []
    if len(imgs) == 1:
        inputs['image'] = np.array((imgs[0], )).astype('float32')
        inputs['im_shape'] = np.array(
            (im_info[0]['im_shape'], )).astype('float32')
        inputs['scale_factor'] = np.array(
            (im_info[0]['scale_factor'], )).astype('float32')
        return inputs

    for e in im_info:
        im_shape.append(np.array((e['im_shape'], )).astype('float32'))
        scale_factor.append(np.array((e['scale_factor'], )).astype('float32'))
    inputs['im_shape'] = np.concatenate(im_shape, axis=0)
    inputs['scale_factor'] = np.concatenate(scale_factor, axis=0)

    # Pad every image to the largest height and width in the batch
    imgs_shape = [[e.shape[1], e.shape[2]] for e in imgs]
    max_shape_h = max([e[0] for e in imgs_shape])
    max_shape_w = max([e[1] for e in imgs_shape])
    padding_imgs = []
    for img in imgs:
        im_c, im_h, im_w = img.shape[:]
        padding_im = np.zeros(
            (im_c, max_shape_h, max_shape_w), dtype=np.float32)
        padding_im[:, :im_h, :im_w] = img
        padding_imgs.append(padding_im)
    inputs['image'] = np.stack(padding_imgs, axis=0)
    return inputs
```
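Here is a standalone sketch of the zero-padding that the batch_size > 1 branch of `create_inputs` performs. It uses a hypothetical `pad_to_batch` helper. Images of different sizes are padded at the bottom and right to the largest height and width, then stacked into one NCHW array. Because the real pixels stay in the top-left corner, `im_shape` and `scale_factor` still describe the valid image region.

```python
import numpy as np


def pad_to_batch(imgs):
    """Zero-pad CHW images to the largest H and W, then stack them into NCHW."""
    max_h = max(img.shape[1] for img in imgs)
    max_w = max(img.shape[2] for img in imgs)
    batch = np.zeros((len(imgs), imgs[0].shape[0], max_h, max_w), dtype=np.float32)
    for i, img in enumerate(imgs):
        _, h, w = img.shape
        batch[i, :, :h, :w] = img  # original pixels stay in the top-left corner
    return batch


a = np.ones((3, 4, 5), dtype=np.float32)
b = np.full((3, 6, 2), 2.0, dtype=np.float32)
print(pad_to_batch([a, b]).shape)  # (2, 3, 6, 5)
```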

You can use the inference code above to load the static graph model you just exported and test it on images:

```
!python ./deploy/python/infer.py --device=gpu --model_dir=output_inference/retinanet_r50_fpn_1x_coco --batch_size=1 --image_dir=demo
```

The visualized results are saved to `output/`:

Writing the txt configuration file and the shell script

- The txt configuration file

The shell script reads the txt file by line number, so each setting must stay on its expected line. For example, suppose the shell script reads line 4 (counting from 0) of the txt file to decide whether to use the GPU. If you write use_gpu:True on line 6 instead, the shell script reads the wrong information. Therefore, do not add or delete lines arbitrarily. If you must add or delete lines, update the shell script accordingly at the same time.

The comments below should be deleted before actual use (but do not delete the `##` lines).
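The following minimal Python sketch shows why line positions matter. The parsing is done by line index, and `parse_key`/`parse_value` are hypothetical Python counterparts of what `func_parser_key`/`func_parser_value` are used for in the shell script. Unlike the shell version, which splits on every `:`, this sketch splits only on the first colon.

```python
def parse_key(line):
    # Text before the first ':' (the role func_parser_key plays in the shell script)
    return line.split(":", 1)[0]


def parse_value(line):
    # Text after the first ':'; empty when the line has no ':' (e.g. '##')
    return line.split(":", 1)[1] if ":" in line else ""


sample = "\n".join([
    "===========================train_params===========================",
    "model_name:retinanet_r50_fpn_1x_coco",
    "python:python3.7",
    "gpu_list:0",
    "use_gpu:True",
])
lines = sample.splitlines()  # lines are addressed purely by position
print(parse_key(lines[4]), parse_value(lines[4]))  # use_gpu True

# Inserting one extra line before gpu_list pushes use_gpu to index 5,
# so a script that still reads lines[4] now gets gpu_list instead.
shifted = lines[:3] + ["extra_key:extra_value"] + lines[3:]
print(parse_key(shifted[4]))  # gpu_list
```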

```
===========================train_params===========================
model_name:retinanet_r50_fpn_1x_coco # Name of the output folder used to store the model
python:python3.7
gpu_list:0 # Generally set to 0; multi-GPU is not supported for now
use_gpu:True # Whether to use GPU. To test CPU in addition to GPU, write True|False
auto_cast:null|amp # Whether to test mixed (half) precision
epoch:lite_train_lite_infer=2|lite_train_whole_infer=1|whole_train_whole_infer=12
save_dir:tipc/train_infer_python/output/retinanet_r50 # Where outputs are saved
TrainReader.batch_size:lite_train_lite_infer=2|lite_train_whole_infer=2|whole_train_whole_infer=1
pretrain_weights:/home/aistudio/data/data104154/best_model.pdparams # Pretrained weights; the best weights can also be used here
train_model_name:model_final.pdparams # Name of the weights saved by training
train_infer_img_dir:./dataset/coco/test2017/ # Dataset location
filename:retinanet_r50_fpn_1x_coco
##
trainer:norm_train # Only the norm_train mode is verified here
norm_train:tools/train.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o # Command line for training; usually only the config file after -c needs to change
pact_train:null
fpgm_train:null
distill_train:null
null:null
null:null
##
===========================eval_params===========================
eval:tools/eval.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o # Command line for evaluation
null:null
##
===========================infer_params===========================
--output_dir:./output_infer/python/retinanet_r50 # Output location for inference
weights:/home/aistudio/data/data104154/best_model.pdparams # Best weights loaded for inference
norm_export:tools/export_model.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o # Command line for exporting the static graph model
pact_export:null
fpgm_export:null
distill_export:null
export1:null
export2:null
kl_quant_export:null
##
infer_mode:norm
infer_quant:False
inference:./deploy/python/infer.py # Location of the infer script
--device:gpu|cpu # Test both GPU and CPU
--enable_mkldnn:True|False # Whether MKLDNN is used in the CPU test
--cpu_threads:1|4 # Test different thread counts
--batch_size:1
--use_tensorrt:null # Whether TensorRT is used in the GPU test
--run_mode:fluid # TensorRT precision can be set here; not needed this time
--model_dir:tipc/train_infer_python/output/retinanet_r50/norm_train_gpus_0_autocast_null/retinanet_r50_fpn_1x_coco # Weights loaded for inference
--image_dir:./dataset/coco/test2017/ # Images used for inference
--save_log_path:null
--run_benchmark:False
--trt_max_shape:1600
```
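Some values in this file encode more than one setting. `epoch:lite_train_lite_infer=2|...` holds one value per run mode (the mode is passed to the scripts, e.g. `'lite_train_lite_infer'`). `--device:gpu|cpu` lists variants that are tested in turn. The TIPC shell scripts parse these formats themselves. The sketch below only illustrates the formats, and `value_for_mode` and `alternatives` are hypothetical names.

```python
def value_for_mode(value, mode):
    """Pick the entry for `mode` from 'modeA=1|modeB=2'; plain values pass through."""
    if "=" not in value:
        return value
    for item in value.split("|"):
        key, _, val = item.partition("=")
        if key == mode:
            return val
    return None  # mode not listed in the value


def alternatives(value):
    """Split 'True|False' or 'gpu|cpu' into the variants that get tested."""
    return value.split("|")


epoch = "lite_train_lite_infer=2|lite_train_whole_infer=1|whole_train_whole_infer=12"
print(value_for_mode(epoch, "lite_train_lite_infer"))  # 2
print(alternatives("gpu|cpu"))  # ['gpu', 'cpu']
```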

For inference, the other parameters configured for infer.py, such as device and enable_mkldnn, are passed to the load_predictor function from the earlier code to initialize the predictor.

- About the shell script: few places need to change. You mainly need to pass in the parameters required by your own txt file and Python scripts.

- If you add lines to the txt file, parse them following the first two lines of the example below. Also update the line indices of the other parameters: because you added a line, the index i in `$(func_parser_key "${lines[i]}")` must be increased by 1 for every parameter parsed after it.

- Add or remove arguments according to the parameters your Python script needs, as in `"${set_use_gpu} ${set_save_model} ${set_epoch} ${set_pretrain} ${set_batchsize} ${set_filename} ${set_autocast}"` below.

```shell
your_params_key=$(func_parser_key "${lines[4]}")
your_params_value=$(func_parser_value "${lines[4]}")

cmd="${python} ${run_train} ${set_use_gpu} ${set_save_model} ${set_epoch} ${set_pretrain} ${set_batchsize} ${set_filename} ${set_autocast}"
```
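Each `${set_*}` variable in the `cmd` line is assumed to expand to a `key=value` override, or to nothing when the key or value is `null`. The successful-run log later in this article shows overrides such as `use_gpu=True` and `epoch=2` appended after `-o`. The sketch below is a rough Python equivalent of that assembly. `set_param` and `build_train_cmd` are hypothetical helpers, not functions from the TIPC scripts.

```python
def set_param(key, value):
    """Build a 'key=value' override, or '' when either side is null or empty."""
    if not key or not value or key == "null" or value == "null":
        return ""
    return f"{key}={value}"


def build_train_cmd(python, run_train, overrides):
    """Join the script with every non-empty override; dropped ones leave no gap."""
    parts = [python, run_train] + [set_param(k, v) for k, v in overrides]
    return " ".join(p for p in parts if p)


cmd = build_train_cmd(
    "python3.7",
    "tools/train.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o",
    [("use_gpu", "True"), ("epoch", "2"), ("pretrain_weights", "null")],
)
print(cmd)
```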

Lightweight verification of model

Once the original model passes TIPC verification, TIPC for the lightweight model is easy. Taking MobileNetV1 as an example, first replace the backbone of the original model with MobileNetV1. You need to decide which layers' features to return. To understand the network structure, read the source code in RetinaNet-Based-on-PPdet-main/ppdet/modeling/backbones/mobilenet_v1.py. Here we return the features of layers 4, 6, and 13, whose strides are 8, 16, and 32. The pretrained weights must also be MobileNetV1 weights. The optimizer may also need to change, and everything else can stay as in the original config:

```yaml
_BASE_: [
  '../datasets/coco_detection.yml',
  '../runtime.yml',
  '_base_/retinanet_r50_fpn.yml',
  '_base_/optimizer_1x.yml',
  '_base_/retinanet_reader.yml',
]

pretrain_weights: https://paddledet.bj.bcebos.com/models/pretrained/MobileNetV1_pretrained.pdparams

RetinaNet:
  backbone: MobileNet

MobileNet:
  scale: 1
  feature_maps: [4, 6, 13]
  with_extra_blocks: false
  extra_block_filters: []
```
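You can check the claim that layers 4, 6, and 13 have strides 8, 16, and 32 by multiplying strides along the network. The sketch assumes the standard MobileNetV1 layout: a stride-2 stem convolution followed by 13 depthwise-separable blocks, counted from 1 as in `feature_maps`. Confirm the exact indexing against mobilenet_v1.py in your copy of the code.

```python
# Assumed standard MobileNetV1 block strides (after a stride-2 stem conv)
BLOCK_STRIDES = [1, 2, 1, 2, 1, 2, 1, 1, 1, 1, 1, 2, 1]


def cumulative_strides(stem_stride=2, block_strides=BLOCK_STRIDES):
    """Map each 1-based block index to its total stride relative to the input image."""
    strides, total = {}, stem_stride
    for idx, s in enumerate(block_strides, start=1):
        total *= s
        strides[idx] = total
    return strides


strides = cumulative_strides()
print({i: strides[i] for i in (4, 6, 13)})  # {4: 8, 6: 16, 13: 32}
```

With a 640-pixel input, for example, these layers would produce feature maps of 80, 40, and 20 pixels per side.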

Then, after training to obtain the best weights, change the relevant parts of the original txt file to their MobileNetV1 counterparts (for example, replace the path of the best weights with that of the MobileNetV1 model). The two files to compare are:

tipc/train_infer_python/configs/retinanet/retinanet_mobilenet_v1_fpn_1x_coco.txt

tipc/train_infer_python/configs/retinanet/retinanet_r50_fpn_1x_coco.txt

♦ Trying it out

For more details, please refer to the file tipc/train_infer_python/README.md

- Installing various dependencies and preparing datasets

```
!pip install -r requirements.txt
!bash tipc/train_infer_python/prepare.sh tipc/train_infer_python/configs/retinanet/retinanet_r50_fpn_1x_coco.txt 'lite_train_lite_infer'
```

- Train and run inference for retinanet_r50_fpn_1x_coco on a small amount of data

```
!bash tipc/train_infer_python/test_train_inference_python.sh tipc/train_infer_python/configs/retinanet/retinanet_r50_fpn_1x_coco.txt 'lite_train_lite_infer'
```

If it runs successfully, the output is as follows:

```
Run successfully with command - python3.7 tools/train.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o use_gpu=True save_dir=tipc/train_infer_python/output/retinanet_r50/norm_train_gpus_0_autocast_null epoch=2 pretrain_weights=/home/aistudio/data/data104154/best_model.pdparams TrainReader.batch_size=2 filename=retinanet_r50_fpn_1x_coco !
Run successfully with command - python3.7 tools/eval.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o weights=tipc/train_infer_python/output/retinanet_r50/norm_train_gpus_0_autocast_null/retinanet_r50_fpn_1x_coco/model_final.pdparams use_gpu=True !
Run successfully with command - python3.7 tools/export_model.py -c configs/retinanet/retinanet_r50_fpn_1x_coco.yml -o weights=tipc/train_infer_python/output/retinanet_r50/norm_train_gpus_0_autocast_null/retinanet_r50_fpn_1x_coco/model_final.pdparams filename=retinanet_r50_fpn_1x_coco --output_dir=./output_infer/python/retinanet_r50 !
Run successfully with command - python3.7 ./deploy/python/infer.py --device=gpu --run_mode=fluid --model_dir=./output_infer/python/retinanet_r50/retinanet_r50_fpn_1x_coco --batch_size=1 --image_dir=./dataset/coco/test2017/ --run_benchmark=False --trt_max_shape=1600 --output_dir=./output_infer/python/retinanet_r50 > tipc/train_infer_python/output/retinanet_r50/python_infer_gpu_precision_fluid_batchsize_1.log 2>&1 !
```

- Train and run inference for retinanet_mobilenet_v1_fpn_1x_coco on a small amount of data

```
!bash tipc/train_infer_python/test_train_inference_python.sh tipc/train_infer_python/configs/retinanet/retinanet_mobilenet_v1_fpn_1x_coco.txt 'lite_train_lite_infer'
```

If it runs successfully, the same kind of output is printed. The outputs and log files are saved to tipc/train_infer_python/output. The visualized output is as follows:

Serving support

♣ Brief introduction

Service deployment means loading the model on the server side, then sending requests from the client side to run inference. The model loaded here is converted from the static graph model, so you need to export the static graph model as before.

♠ Specific process

If TIPC is already done, we already have the model's static graph model. After converting it into the model format Serving requires, the main work is the server side, the client side, and the related preprocessing and post-processing. Finally, we connect Serving to TIPC, which, as before, means writing a shell script and a corresponding txt file.

Environment preparation

First, install the packages required for Serving. Check your environment and choose the matching versions:

```
!nvidia-smi
```

```
Tue Jan 25 09:56:06 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.67       Driver Version: 418.67       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla V100-SXM2...  On   | 00000000:05:00.0 Off |                    0 |
| N/A   34C    P0    40W / 300W |      0MiB / 32480MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

```
# Download the packages
!wget https://paddle-serving.bj.bcebos.com/test-dev/whl/paddle_serving_server_gpu-0.7.0.post101-py3-none-any.whl
!wget https://paddle-serving.bj.bcebos.com/test-dev/whl/paddle_serving_client-0.7.0-cp37-none-any.whl
!wget https://paddle-serving.bj.bcebos.com/test-dev/whl/paddle_serving_app-0.7.0-py3-none-any.whl

# Install the packages
!pip install paddle_serving_server_gpu-0.7.0.post101-py3-none-any.whl
!pip install paddle_serving_client-0.7.0-cp37-none-any.whl
!pip install paddle_serving_app-0.7.0-py3-none-any.whl
```

Use the following command to convert the static graph model into the model format required by Serving:

```
# --dirname: directory where the static graph model is saved
# --model_filename: file name of the static graph model
# --params_filename: file name of the static graph parameters (model.pdiparams)
# --serving_server: directory where the server-side files are saved (deploy/serving/serving_server)
# --serving_client: directory where the client-side files are saved (deploy/serving/serving_client)
!python3 -m paddle_serving_client.convert --dirname output_inference/retinanet_r50_fpn_1x_coco --model_filename model.pdmodel --params_filename model.pdiparams --serving_server deploy/serving/serving_server --serving_client deploy/serving/serving_client
```

The output model can be found in the directory deploy/serving.

Server and its configuration file

The server mainly needs input preprocessing and result post-processing. You can refer to the following code:

```python
import base64

import cv2
import numpy as np
# Paddle Serving pipeline base classes
from paddle_serving_server.web_service import WebService, Op
# Preprocessing operators, assumed to be defined in deploy/serving/preprocess_ops.py
from preprocess_ops import Compose, Resize, NormalizeImage, Permute, PadStride


class RetinaNetOp(Op):
    def init_op(self):
        # Preprocessing operations combined with Compose
        self.eval_transforms = Compose([
            Resize(target_size=[800, 1333]),
            NormalizeImage(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
            Permute(),
            PadStride(32)
        ])

    def preprocess(self, input_dicts, data_id, log_id):
        # Mainly written for the batch_size == 1 case
        (_, input_dict), = input_dicts.items()
        batch_size = len(input_dict.keys())
        imgs = []
        imgs_info = {'im_shape': [], 'scale_factor': []}
        for key in input_dict.keys():
            # Decode the incoming base64 data into an RGB image
            data = base64.b64decode(input_dict[key].encode('utf8'))
            img = cv2.imdecode(np.frombuffer(data, np.uint8), cv2.IMREAD_COLOR)
            img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
            # Add the fields required by the model
            im_info = {
                'scale_factor': np.array([1., 1.], dtype=np.float32),
                'im_shape': img.shape[:2],
            }
            # Data preprocessing
            img, im_info = self.eval_transforms(img, im_info)
            imgs.append(img[np.newaxis, :].copy())
            imgs_info["im_shape"].append(im_info["im_shape"][np.newaxis, :].copy())
            imgs_info["scale_factor"].append(im_info["scale_factor"][np.newaxis, :].copy())

        input_imgs = np.concatenate(imgs, axis=0)
        input_im_shape = np.concatenate(imgs_info["im_shape"], axis=0)
        input_scale_factor = np.concatenate(imgs_info["scale_factor"], axis=0)
        # Only the first return value matters here: the inputs for model inference
        return {"image": input_imgs, "im_shape": input_im_shape, "scale_factor": input_scale_factor}, False, None, ""

    def postprocess(self, input_dicts, fetch_dict, data_id, log_id):
        # The fetch variables can be checked in
        # deploy/serving/serving_server/serving_server_conf.prototxt,
        # which is generated when converting the static graph model
        np_boxes = list(fetch_dict.values())[0]
        # This is the output after NMS; each row is [class_id, score, xmin, ymin, xmax, ymax]
        keep = (np_boxes[:, 1] > 0.5) & (np_boxes[:, 0] > -1)
        np_boxes = np_boxes[keep, :]
        result = {"class_id": [], "confidence": [], "left_top": [], "right_bottom": []}
        for dt in np_boxes:
            clsid, bbox, score = int(dt[0]), dt[2:], dt[1]
            xmin, ymin, xmax, ymax = bbox
            result["class_id"].append(clsid)
            result["confidence"].append(score)
            result["left_top"].append([xmin, ymin])
            result["right_bottom"].append([xmax, ymax])
        result["class_id"] = str(result["class_id"])
        result["confidence"] = str(result["confidence"])
        result["left_top"] = str(result["left_top"])
        result["right_bottom"] = str(result["right_bottom"])
        return result, None, ""


# The rest is fixed boilerplate
class RetinaNetService(WebService):
    def get_pipeline_response(self, read_op):
        retinanet_op = RetinaNetOp(name="retinanet", input_ops=[read_op])
        return retinanet_op


# define the service class
uci_service = RetinaNetService(name="retinanet")
# load config and prepare the service
uci_service.prepare_pipeline_config("config.yml")
# start the service
uci_service.run_service()
```
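The `postprocess` step above can be tested without a running server. The sketch below reproduces its filtering on a plain NumPy array. It assumes each row of the NMS output is `[class_id, score, xmin, ymin, xmax, ymax]`, which is the layout the `dt[0]`, `dt[1]`, `dt[2:]` indexing implies. `filter_boxes` is a hypothetical helper. It returns native Python lists, whereas the server code converts each list to `str` for the pipeline response.

```python
import numpy as np


def filter_boxes(np_boxes, score_threshold=0.5):
    """Keep rows with a valid class and a score above the threshold."""
    keep = (np_boxes[:, 1] > score_threshold) & (np_boxes[:, 0] > -1)
    result = {"class_id": [], "confidence": [], "left_top": [], "right_bottom": []}
    for row in np_boxes[keep]:
        result["class_id"].append(int(row[0]))
        result["confidence"].append(float(row[1]))
        result["left_top"].append([float(row[2]), float(row[3])])
        result["right_bottom"].append([float(row[4]), float(row[5])])
    return result


boxes = np.array([
    [0, 0.93, 288.0, 9.0, 638.0, 390.0],   # kept
    [27, 0.40, 10.0, 10.0, 20.0, 20.0],    # dropped: score below 0.5
    [-1, 0.99, 0.0, 0.0, 0.0, 0.0],        # dropped: class_id of -1 marks padding
], dtype=np.float32)
print(filter_boxes(boxes)["class_id"])  # [0]
```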

The server-side configuration file does not need much. The relevant part is:

```yaml
op:
    # op name; consistent with the name parameter used to initialize the Service class in web_service.py
    retinanet:
        # Concurrency: thread concurrency when is_thread_op=True, otherwise process concurrency
        concurrency: 1

        # When the op config has no server_endpoints, the local service config is read from local_service_conf
        local_service_conf:
            # Location of the exported serving model
            model_config: "./serving_server"
```

For details, please refer to:

- deploy/serving/web_service.py
- deploy/serving/config.yml

For writing preprocessing operators, refer to deploy/serving/preprocess_ops.py, and add operators as needed.

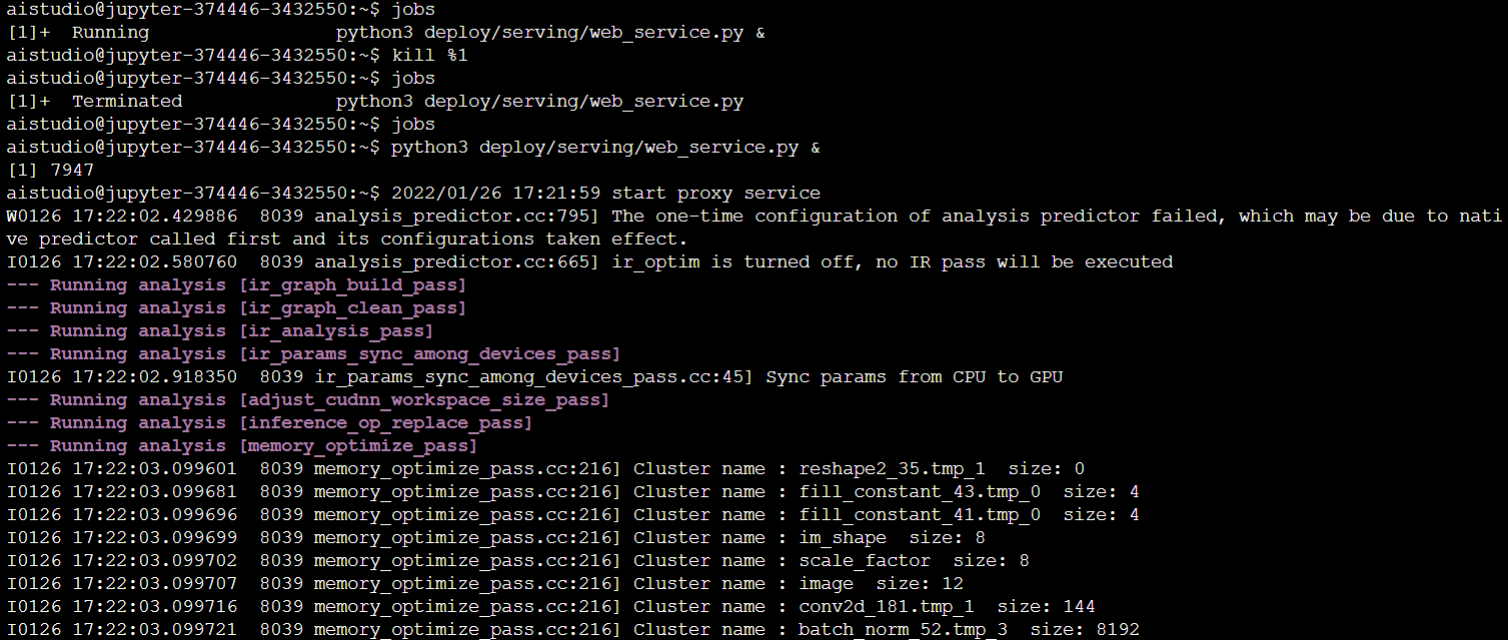

Here, open a terminal and run `python3 deploy/serving/web_service.py &`. If you have started the service before, you need to kill the previous process first, as shown in the figure. At this stage all paths must be absolute. The later TIPC integration uses relative paths.

Writing the client

The client is simpler. It mainly specifies which model service to access and which image to test:

```python
import base64
import json

import requests


def get_args(add_help=True):
    import argparse
    parser = argparse.ArgumentParser(
        description='Paddle Serving', add_help=add_help)
    # Image to be tested
    parser.add_argument('--img_path', default="dataset/coco/test2017/000000575930.jpg")
    args = parser.parse_args()
    return args


# Encode the input as base64 text
def cv2_to_base64(image):
    return base64.b64encode(image).decode('utf8')


def main(args):
    # URL of your model service; replace "retinanet" with your model name
    url = "http://127.0.0.1:18080/retinanet/prediction"
    logid = 10000
    img_path = args.img_path
    with open(img_path, 'rb') as file:
        image_data1 = file.read()
    # data should be transformed to the base64 format
    image = cv2_to_base64(image_data1)
    data = {"key": ["image"], "value": [image], "logid": logid}
    # send requests
    r = requests.post(url=url, data=json.dumps(data))
    print(r.json())


if __name__ == "__main__":
    args = get_args()
    main(args)
```
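The client and server must agree on how the image travels. The client base64-encodes the raw file bytes so they fit in JSON, and the server's `preprocess` decodes them back before `cv2.imdecode`. The sketch below shows this round trip with only the standard library. `encode_image_bytes`/`decode_image_bytes` are hypothetical names, and the bytes are a fake stand-in for a real image file.

```python
import base64
import json


def encode_image_bytes(raw: bytes) -> str:
    # Client side: raw file bytes -> base64 text that can travel inside JSON
    return base64.b64encode(raw).decode("utf8")


def decode_image_bytes(text: str) -> bytes:
    # Server side: base64 text -> the original bytes, ready for cv2.imdecode
    return base64.b64decode(text.encode("utf8"))


# Hypothetical stand-in bytes; the real client reads them with open(img_path, 'rb')
raw = b"\xff\xd8\xff\xe0 not a real jpeg"
payload = {"key": ["image"], "value": [encode_image_bytes(raw)], "logid": 10000}
body = json.dumps(payload)  # what requests.post(url=url, data=body) would send

# The server recovers exactly the bytes the client read
print(decode_image_bytes(json.loads(body)["value"][0]) == raw)  # True
```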

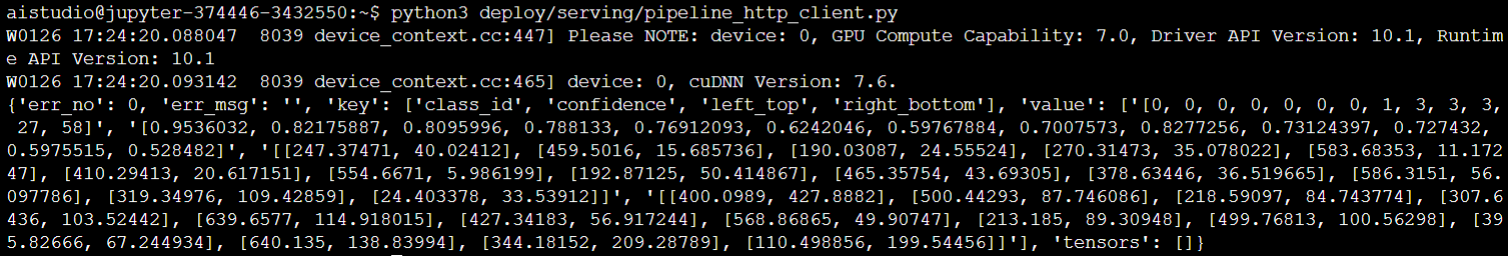

Similarly, to test the client you wrote, start the server as described above, press Ctrl+C to return to the prompt, and then run the client in the terminal: `python3 deploy/serving/pipeline_http_client.py`

Connecting to TIPC

Connecting Serving to TIPC is simpler than before, and only a few things need to change. The points to watch are the same as before. For example, the txt file is written as follows:

```
===========================serving_params===========================
model_name:RetinaNet
python:python3.7
trans_model:-m paddle_serving_client.convert
--dirname:output_infer/python/retinanet_r50/retinanet_r50_fpn_1x_coco # Location of the static graph model, used to export the serving model
--model_filename:model.pdmodel
--params_filename:model.pdiparams
--serving_server:deploy/serving/serving_server # Where the server-side model is exported
--serving_client:deploy/serving/serving_client # Where the client-side model is exported
serving_dir:./deploy/serving
web_service:web_service.py
op.alexnet.local_service_conf.devices:0
null:null
null:null
null:null
null:null
pipline:pipeline_http_client.py
--img_path:../../dataset/coco/test2017/000000575930.jpg # Image to be tested
```

♦ Trying it out

For a more detailed introduction and the environment configuration, refer to tipc/serving/README.md. First, install the dependencies:

```
!pip install -r requirements.txt
```

Test

```
!bash tipc/serving/test_serving.sh tipc/serving/configs/retinanet_r50_fpn_1x_coco.txt
```

################### run test ###################

/home/aistudio/deploy/serving

2022/01/25 11:23:14 start proxy service

W0125 11:23:18.311997 6867 analysis_predictor.cc:795] The one-time configuration of analysis predictor failed, which may be due to native predictor called first and its configurations taken effect.

I0125 11:23:18.441354 6867 analysis_predictor.cc:665] ir_optim is turned off, no IR pass will be executed

[1m[35m--- Running analysis [ir_graph_build_pass][0m

[1m[35m--- Running analysis [ir_graph_clean_pass][0m

[1m[35m--- Running analysis [ir_analysis_pass][0m

[1m[35m--- Running analysis [ir_params_sync_among_devices_pass][0m

I0125 11:23:18.746263 6867 ir_params_sync_among_devices_pass.cc:45] Sync params from CPU to GPU

[1m[35m--- Running analysis [adjust_cudnn_workspace_size_pass][0m

[1m[35m--- Running analysis [inference_op_replace_pass][0m

[1m[35m--- Running analysis [memory_optimize_pass][0m

I0125 11:23:18.907073 6867 memory_optimize_pass.cc:216] Cluster name : reshape2_35.tmp_1 size: 0

I0125 11:23:18.907119 6867 memory_optimize_pass.cc:216] Cluster name : fill_constant_43.tmp_0 size: 4

I0125 11:23:18.907122 6867 memory_optimize_pass.cc:216] Cluster name : fill_constant_41.tmp_0 size: 4

I0125 11:23:18.907131 6867 memory_optimize_pass.cc:216] Cluster name : im_shape size: 8

I0125 11:23:18.907136 6867 memory_optimize_pass.cc:216] Cluster name : scale_factor size: 8

I0125 11:23:18.907138 6867 memory_optimize_pass.cc:216] Cluster name : image size: 12

I0125 11:23:18.907143 6867 memory_optimize_pass.cc:216] Cluster name : conv2d_181.tmp_1 size: 144

I0125 11:23:18.907147 6867 memory_optimize_pass.cc:216] Cluster name : batch_norm_52.tmp_3 size: 8192

I0125 11:23:18.907155 6867 memory_optimize_pass.cc:216] Cluster name : relu_39.tmp_0 size: 4096

I0125 11:23:18.907160 6867 memory_optimize_pass.cc:216] Cluster name : conv2d_123.tmp_0 size: 8192

I0125 11:23:18.907163 6867 memory_optimize_pass.cc:216] Cluster name : batch_norm_49.tmp_1 size: 8192

I0125 11:23:18.907166 6867 memory_optimize_pass.cc:216] Cluster name : conv2d_161.tmp_1 size: 144

I0125 11:23:18.907169 6867 memory_optimize_pass.cc:216] Cluster name : conv2d_171.tmp_1 size: 144

I0125 11:23:18.907172 6867 memory_optimize_pass.cc:216] Cluster name : relu_45.tmp_0 size: 8192

I0125 11:23:18.907176 6867 memory_optimize_pass.cc:216] Cluster name : elementwise_add_15 size: 8192

I0125 11:23:18.907179 6867 memory_optimize_pass.cc:216] Cluster name : reshape2_28.tmp_0 size: 320

I0125 11:23:18.907183 6867 memory_optimize_pass.cc:216] Cluster name : conv2d_141.tmp_1 size: 144

I0125 11:23:18.907186 6867 memory_optimize_pass.cc:216] Cluster name : relu_21.tmp_0 size: 2048

I0125 11:23:18.907189 6867 memory_optimize_pass.cc:216] Cluster name : relu_88.tmp_0 size: 1024

I0125 11:23:18.907197 6867 memory_optimize_pass.cc:216] Cluster name : conv2d_151.tmp_1 size: 144

--- Running analysis [ir_graph_to_program_pass]

I0125 11:23:19.583788 6867 analysis_predictor.cc:714] ======= optimize end =======

I0125 11:23:19.620709 6867 naive_executor.cc:98] --- skip [feed], feed -> scale_factor

I0125 11:23:19.620766 6867 naive_executor.cc:98] --- skip [feed], feed -> image

I0125 11:23:19.620771 6867 naive_executor.cc:98] --- skip [feed], feed -> im_shape

I0125 11:23:19.632345 6867 naive_executor.cc:98] --- skip [_generated_var_22], fetch -> fetch

I0125 11:23:19.632387 6867 naive_executor.cc:98] --- skip [_generated_var_23], fetch -> fetch

W0125 11:23:19.708725 6867 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1

W0125 11:23:19.712651 6867 device_context.cc:465] device: 0, cuDNN Version: 7.6.

{'err_no': 0, 'err_msg': '', 'key': ['class_id', 'confidence', 'left_top', 'right_bottom'], 'value': ['[0, 27, 39, 39, 39, 39, 48, 48]', '[0.9298271, 0.78884697, 0.609955, 0.56487834, 0.56370527, 0.5328276, 0.6830632, 0.67401433]', '[[288.6603, 9.321735], [412.90067, 172.55153], [539.206, 3.6034787], [557.5477, 4.5205536], [521.5307, 4.789155], [571.5571, 0.0], [15.654112, 242.51068], [202.25995, 197.21396]]', '[[638.92633, 390.8219], [477.95944, 296.9499], [559.34314, 64.882324], [572.8147, 40.891556], [538.74994, 67.22812], [583.3348, 42.266556], [213.04216, 322.14337], [368.59772, 320.33978]]'], 'tensors': []}

Run successfully with command - python3.7 pipeline_http_client.py --img_path=../../dataset/coco/test2017/000000575930.jpg> ../../tipc/serving/output/server_infer_gpu_pipeline_http_usetrt_null_precision_null_batchsize_1.log 2>&1!
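The log shows the test driving pipeline_http_client.py, whose source is not reproduced in this project walkthrough. As a rough sketch of what such a client does, assuming the Paddle Serving pipeline convention of sending parallel "key"/"value" lists (mirroring the response format printed above) with the image base64-encoded: the URL, port and service name below are hypothetical placeholders, and the real ones come from the serving config.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint: the real host, port and service name are set in the
# serving config used by pipeline_http_client.py, which is not shown here.
URL = "http://127.0.0.1:18093/detection/prediction"


def build_payload(image_bytes):
    """Pack raw image bytes into an (assumed) key/value request body."""
    image_b64 = base64.b64encode(image_bytes).decode("utf8")
    return {"key": ["image"], "value": [image_b64]}


def send_request(image_path, url=URL):
    """POST the encoded image as JSON and return the decoded JSON response."""
    with open(image_path, "rb") as f:
        payload = build_payload(f.read())
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf8"))
```

With the service running, `send_request("../../dataset/coco/test2017/000000575930.jpg")` would be expected to return a dict shaped like the one in the log, with `err_no` equal to 0 on success.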

The detailed output is saved in tipc/serving/output. The serving result is:

{'err_no': 0, 'err_msg': '', 'key': ['class_id', 'confidence', 'left_top', 'right_bottom'], 'value': ['[0, 27, 39, 39, 39, 39, 48, 48]', '[0.9298271, 0.78884697, 0.609955, 0.56487834, 0.56370527, 0.5328276, 0.6830632, 0.67401433]', '[[288.6603, 9.321735], [412.90067, 172.55153], [539.206, 3.6034787], [557.5477, 4.5205536], [521.5307, 4.789155], [571.5571, 0.0], [15.654112, 242.51068], [202.25995, 197.21396]]', '[[638.92633, 390.8219], [477.95944, 296.9499], [559.34314, 64.882324], [572.8147, 40.891556], [538.74994, 67.22812], [583.3348, 42.266556], [213.04216, 322.14337], [368.59772, 320.33978]]'], 'tensors': []}
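Because each client run writes its own log under tipc/serving/output, the response can also be pulled out of those files instead of copied from the console. A minimal sketch, assuming each log contains the response printed as a Python dict on a line starting with `{'err_no'` (as in the console output above); the helper names are hypothetical.

```python
import ast
from pathlib import Path


def extract_result(log_text):
    """Return the first response dict printed in a client log, or None.

    Assumes the response appears as a Python dict literal on a line that
    starts with "{'err_no'"; ast.literal_eval parses it without running code.
    """
    for line in log_text.splitlines():
        line = line.strip()
        if line.startswith("{'err_no'"):
            return ast.literal_eval(line)
    return None


def load_serving_results(output_dir="tipc/serving/output"):
    """Map each *.log file name in output_dir to the response it contains."""
    results = {}
    for log_file in sorted(Path(output_dir).glob("*.log")):
        text = log_file.read_text(encoding="utf8", errors="ignore")
        result = extract_result(text)
        if result is not None:
            results[log_file.name] = result
    return results
```

For example, `load_serving_results()` run from the repository root would map server_infer_gpu_pipeline_http_usetrt_null_precision_null_batchsize_1.log to its parsed response, if the log follows the assumed format.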

Compare it with the inference output:

class_id:0, confidence:0.9298, left_top:[288.66,9.32],right_bottom:[638.93,390.82]
class_id:27, confidence:0.7888, left_top:[412.90,172.55],right_bottom:[477.96,296.95]
class_id:39, confidence:0.6100, left_top:[539.21,3.60],right_bottom:[559.34,64.88]
class_id:39, confidence:0.5649, left_top:[557.55,4.52],right_bottom:[572.81,40.89]
class_id:39, confidence:0.5637, left_top:[521.53,4.79],right_bottom:[538.75,67.23]
class_id:39, confidence:0.5328, left_top:[571.56,0.00],right_bottom:[583.33,42.27]
class_id:48, confidence:0.6831, left_top:[15.65,242.51],right_bottom:[213.04,322.14]
class_id:48, confidence:0.6740, left_top:[202.26,197.21],right_bottom:[368.60,320.34]
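The comparison here is done by eye. A minimal sketch of doing it programmatically: the serving response stores each field as the string form of a Python list, so `ast.literal_eval` can recover the values, which are then printed in the same style as the inference output (four decimals for confidence, two for coordinates). The helper names are hypothetical and not part of the project.

```python
import ast


def parse_serving_result(result):
    """Convert a serving response dict into a list of detection dicts.

    Each entry of result["value"] is the string form of a Python list, so
    ast.literal_eval recovers it safely (literals only, no code execution).
    """
    fields = {k: ast.literal_eval(v) for k, v in zip(result["key"], result["value"])}
    return [
        {"class_id": c, "confidence": s, "left_top": lt, "right_bottom": rb}
        for c, s, lt, rb in zip(fields["class_id"], fields["confidence"],
                                fields["left_top"], fields["right_bottom"])
    ]


def format_detection(det):
    """Format one detection in the same style as the inference output."""
    lt, rb = det["left_top"], det["right_bottom"]
    return (f"class_id:{det['class_id']}, confidence:{det['confidence']:.4f}, "
            f"left_top:[{lt[0]:.2f},{lt[1]:.2f}],"
            f"right_bottom:[{rb[0]:.2f},{rb[1]:.2f}]")


def matches_inference(result, inference_lines):
    """True if the serving detections print identically to the inference ones."""
    served = [format_detection(d) for d in parse_serving_result(result)]
    return served == inference_lines
```

Comparing at the printed precision mirrors the visual check. For a stricter test, compare the raw floats with `math.isclose` and an explicit tolerance instead.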

The two results are completely consistent 🚀🚀🚀. This is the end 🌸🌸🌸