Explore YOLO v3 source code - conclusion prediction

Updated:

Part 1 training:

https://mp.weixin.qq.com/s/T9LshbXoervdJDBuP564dQ

Part 2 model:

https://mp.weixin.qq.com/s/N79S9Qf1OgKsQ0VU5QvuHg

Part 3 network:

https://mp.weixin.qq.com/s/hC4P7iRGv5JSvvPe-ri_8g

Chapter 4 truth value:

https://mp.weixin.qq.com/s/5Sj7QadfVvx-5W9Cr4d3Yw

Part 5 Loss:

https://mp.weixin.qq.com/s/4L9E4WGSh0hzlD303036bQ

1. Detection function

Use the trained YOLO v3 model to detect the objects in the picture, where:

- Create an instance Yolo of the Yolo class;

- Use Image.open() to load the image;

- Call yolo.detect_image() detects an image;

- Close yolo's session;

- Display the detected image r_image;

realization:

def detect_img_for_test():

yolo = YOLO()

img_path = './dataset/img.jpg'

image = Image.open(img_path)

r_image = yolo.detect_image(image)

yolo.close_session()

r_image.show()

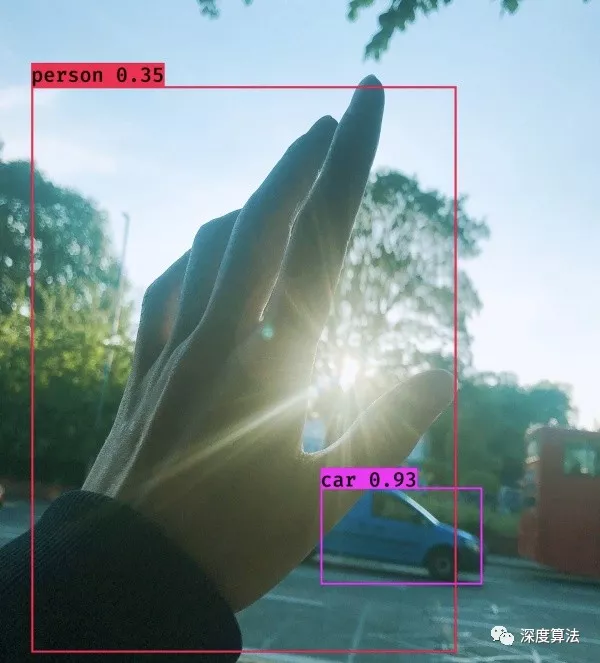

Output:

2. YOLO parameters

Initialization parameters of YOLO class:

- anchors_path: configuration file of anchor box, 9 width height combinations;

- model_path: the model that has been trained and supports retraining;

- classes_path: category file, matching with model file;

- score: the threshold of confidence, and delete the candidate box less than the threshold;

- IOU: IOU threshold of candidate box, and delete candidate boxes in the same category that are greater than the threshold;

- class_names: category list, read classes_path;

- Anchors: anchor box list, read anchors_path;

- model_image_size: the size of the image detected by the model. All input images need to be filled according to this;

- colors: generate random color sets through HSV color gamut, and the number is equal to the number of categories_ names;

- boxes, scores, classes: the core output of the detection, generated by the function generate(), is the output package of the model.

realization:

self.anchors_path = 'configs/yolo_anchors.txt' # Anchors self.model_path = 'model_data/yolo_weights.h5' # Model file self.classes_path = 'configs/coco_classes.txt' # Category file self.score = 0.20 self.iou = 0.20 self.class_names = self._get_class() # Get category self.anchors = self._get_anchors() # Get anchor self.sess = K.get_session() self.model_image_size = (416, 416) # fixed size or (None, None), hw self.colors = self.__get_colors(self.class_names) self.boxes, self.scores, self.classes = self.generate()

In__ get_ In colors():

- Divide the H value of bit 0 of HSV by 1, and the other SV values are 1 to generate a group of HSV list;

- Call hsv_to_rgb, convert HSV color gamut to RGB color gamut;

- The RGB value of 01 is multiplied by 255 to convert to a complete color value, (0255);

- Random shuffle color list;

realization:

@staticmethod def __get_colors(names):

# Different boxes, different colors

hsv_tuples = [(float(x) / len(names), 1., 1.)

for x in range(len(names))] # Different colors

colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))

colors = list(map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)), colors)) # RGB

np.random.seed(10101)

np.random.shuffle(colors)

np.random.seed(None)

return colors

The reason why HSV division is selected instead of RGB is that the color value offset of HSV is better, and the color of the drawn box is easier to distinguish.

3. Output package

boxes, scores and classes continue to be encapsulated on the basis of the model and are generated by the function generate(), where:

- boxes: coordinates of the four points of the box (top, left, bottom, right);

- scores: category confidence of box, fusion box confidence and category confidence;

- classes: the category of the box;

In the function generate(), set the parameters:

- num_anchors: the total number of anchor box es, usually 9;

- num_classes: the total number of classes. For example, COCO is 80 classes;

- yolo_model: by Yolo_ For the model created by body, call load_weights loading parameters;

realization:

num_anchors = len(self.anchors) # Number of anchors num_classes = len(self.class_names) # Number of categories self.yolo_model = yolo_body(Input(shape=(416, 416, 3)), 3, num_classes) self.yolo_model.load_weights(model_path) # Load model parameters

Next, set input_image_shape is placeholder, that is, the parameter variable in TF. In Yolo_ In Eval:

- Continue to encapsulate Yolo_ output of model;

- anchors, anchor box list;

- Category class_ Total number of names len();

- Enter the optional size of the picture, input_image_shape, i.e. (416, 416);

- score_threshold, the overall confidence threshold score of the box;

- iou_threshold, IOU threshold IOU of the same category box;

- Returns the coordinate boxes of the box, the category confidence scores of the box, and the category classes of the box;

realization:

self.input_image_shape = K.placeholder(shape=(2,))

boxes, scores, classes = yolo_eval(

self.yolo_model.output, self.anchors, len(self.class_names),

self.input_image_shape, score_threshold=self.score, iou_threshold=self.iou)

return boxes, scores, classes

The output score value will be greater than score_threshold, less than yolo_eval() has been deleted.

4. YOLO assessment

In function yolo_eval(), complete the encapsulation of prediction logic, where the input is:

- yolo_outputs: the output of YOLO model, a list of three scales, i.e. 13-26-52. The last dimension is the predicted value, which is composed of 255=3x(5+80). 3 is the anchor number of each layer, 5 is the four box values xywh and the confidence of objects contained in one box, and 80 is the number of categories of COCO;

- anchors: the value of 9 anchor box es;

- num_classes: number of categories; COCO is 80 categories;

- image_shape: TF parameter of placeholder type, default (416, 416);

- max_boxes: the maximum number of detection frames in the figure, 20;

- score_threshold: the confidence threshold of the box. If the box is smaller than the threshold, it will be deleted. If more boxes are needed, the threshold will be lowered. If fewer boxes are needed, the threshold will be raised;

- iou_threshold: the IoU threshold of the same category box. The overlapping box greater than the threshold will be deleted. If there are many overlapping objects, the threshold will be increased. If there are few overlapping objects, the threshold will be reduced;

Among them, Yolo_ The format of outputs is as follows:

[(?, 13, 13, 255), (?, 26, 26, 255), (?, 52, 52, 255)]

The anchors list is as follows:

[(10,13), (16,30), (33,23), (30,61), (62,45), (59,119), (116,90), (156,198), (373,326)]

realization:

boxes, scores, classes = yolo_eval(

self.yolo_model.output, self.anchors, len(self.class_names),

self.input_image_shape, score_threshold=self.score, iou_threshold=self.iou)

def yolo_eval(yolo_outputs, anchors, num_classes, image_shape,

max_boxes=20, score_threshold=.6, iou_threshold=.5):

Next, process parameters:

- num_layers, the number of layers of the output feature map, 3 layers;

- anchor_mask, which divides anchors into three layers, the first layer 13x13 is 678, the second layer 26x26 is 345, and the third layer 52x52 is 012;

- input_shape: the size of the input image, that is, the size of the 0th feature image multiplied by 32, that is, 13x32=416, which is related to the network structure of Darknet.

num_layers = len(yolo_outputs) anchor_mask = [[6, 7, 8], [3, 4, 5], [0, 1, 2]] if num_layers == 3 else [[3, 4, 5], [1, 2, 3]] # default setting input_shape = K.shape(yolo_outputs[0])[1:3] * 32

The larger the feature map, 13 - > 52, the smaller the detected object and the smaller the anchors required, so the anchors list is assigned in reverse order.

Next, YOLO is output at layer l of YOLO_ In outputs, call YOLO_. boxes_ and_ Scores(), extract box_ Boxes and confidence_ box_scores, put the box data of three layers into the list boxes and boxes_ Score, then splice concatenate and flatten, and the output data is all boxes and confidence.

Where, the output boxes and boxes_ The format of scores is as follows:

boxes: (?, 4) # ? Is the number of frames box_scores: (?, 80)

realization:

boxes = []

box_scores = []

for l in range(num_layers):

_boxes, _box_scores = yolo_boxes_and_scores(

yolo_outputs[l], anchors[anchor_mask[l]], num_classes, input_shape, image_shape)

boxes.append(_boxes)

box_scores.append(_box_scores)

boxes = K.concatenate(boxes, axis=0)

box_scores = K.concatenate(box_scores, axis=0)

concatenate is used to flatten the data of multiple layers, because the box has been restored to real coordinates, and there is no difference in different scales.

In function Yolo_ boxes_ and_ In scores():

- yolo_ Output of head: box_xy is the central coordinate of box, (01) relative position; box_wh is the width and height of box, (01) relative value; box_confidence is the confidence of the object in the box; box_class_probs is category confidence;

- yolo_correct_boxes, box_xy and box_ The (0 ~ 1) relative value of wh is converted into real coordinates, and the output boxes are the values of (y_min,x_min,y_max,x_max);

- reshape, flatten the values of different meshes into a list of boxes, that is (?, 13,13,3,4) - > (?, 4);

- box_scores is the product of frame confidence and category confidence, and then reshape flattens, (?, 80);

- Return box boxes and box confidence box_scores.

realization:

def yolo_boxes_and_scores(feats, anchors, num_classes, input_shape, image_shape):

'''Process Conv layer output'''

box_xy, box_wh, box_confidence, box_class_probs = yolo_head(

feats, anchors, num_classes, input_shape)

boxes = yolo_correct_boxes(box_xy, box_wh, input_shape, image_shape)

boxes = K.reshape(boxes, [-1, 4])

box_scores = box_confidence * box_class_probs

box_scores = K.reshape(box_scores, [-1, num_classes])

return boxes, box_scores

next:

- Mask, filter the boxes less than the confidence threshold, and only keep the boxes greater than the confidence, mask;

- max_boxes_tensor, maximum number of detection frames per picture, max_boxes is 20;

realization:

mask = box_scores >= score_threshold max_boxes_tensor = K.constant(max_boxes, dtype='int32')

next:

- Filter the box class by mask and category c_ Boxes and confidence class_box_scores;

- Through NMS and non maximum suppression, the NMS index NMS of box boxes is filtered out_ index;

- According to the index, select the box of gather output class_boxes and classes_ box_ Score, and then generate category information classes;

- Combine the data of multiple categories to generate the final detection data frame and return it.

realization:

boxes_ = []

scores_ = []

classes_ = []

for c in range(num_classes):

class_boxes = tf.boolean_mask(boxes, mask[:, c])

class_box_scores = tf.boolean_mask(box_scores[:, c], mask[:, c])

nms_index = tf.image.non_max_suppression(

class_boxes, class_box_scores, max_boxes_tensor, iou_threshold=iou_threshold)

class_boxes = K.gather(class_boxes, nms_index)

class_box_scores = K.gather(class_box_scores, nms_index)

classes = K.ones_like(class_box_scores, 'int32') * c

boxes_.append(class_boxes)

scores_.append(class_box_scores)

classes_.append(classes)

boxes_ = K.concatenate(boxes_, axis=0)

scores_ = K.concatenate(scores_, axis=0)

classes_ = K.concatenate(classes_, axis=0)

Output format:

boxes_: (?, 4) scores_: (?,) classes_: (?,)

5. Test method

Step 1, image processing:

- Convert the equal proportion of the image into the detection size, the detection size needs to be a multiple of 32, and the surrounding is filled;

- Add 1 dimension to the picture to conform to the input parameter format;

if self.model_image_size != (None, None): # 416x416, 416=32*13, which must be a multiple of 32. The minimum scale is divided by 32

assert self.model_image_size[0] % 32 == 0, 'Multiples of 32 required'

assert self.model_image_size[1] % 32 == 0, 'Multiples of 32 required'

boxed_image = letterbox_image(image, tuple(reversed(self.model_image_size))) # Fill image

else:

new_image_size = (image.width - (image.width % 32), image.height - (image.height % 32))

boxed_image = letterbox_image(image, new_image_size)

image_data = np.array(boxed_image, dtype='float32')

print('detector size {}'.format(image_data.shape))

image_data /= 255. # Convert 0 ~ 1

image_data = np.expand_dims(image_data, 0) # Add batch dimension and add 1 dimension to the picture

Step 2, feed data, image, image size;

out_boxes, out_scores, out_classes = self.sess.run(

[self.boxes, self.scores, self.classes],

feed_dict={

self.yolo_model.input: image_data,

self.input_image_shape: [image.size[1], image.size[0]],

K.learning_phase(): 0

})

Step 3, draw the border, automatically set the border width, draw the border and category text, and use the pilot drawing library.

font = ImageFont.truetype(font='font/FiraMono-Medium.otf',

size=np.floor(3e-2 * image.size[1] + 0.5).astype('int32')) # typeface

thickness = (image.size[0] + image.size[1]) // 512 # THK

for i, c in reversed(list(enumerate(out_classes))):

predicted_class = self.class_names[c] # category

box = out_boxes[i] # frame

score = out_scores[i] # Degree of implementation

label = '{} {:.2f}'.format(predicted_class, score) # label

draw = ImageDraw.Draw(image) # Drawing

label_size = draw.textsize(label, font) # Label text

top, left, bottom, right = box

top = max(0, np.floor(top + 0.5).astype('int32'))

left = max(0, np.floor(left + 0.5).astype('int32'))

bottom = min(image.size[1], np.floor(bottom + 0.5).astype('int32'))

right = min(image.size[0], np.floor(right + 0.5).astype('int32'))

print(label, (left, top), (right, bottom)) # frame

if top - label_size[1] >= 0: # Label text

text_origin = np.array([left, top - label_size[1]])

else:

text_origin = np.array([left, top + 1])

# My kingdom for a good redistributable image drawing library.

for i in range(thickness): # Picture frame

draw.rectangle(

[left + i, top + i, right - i, bottom - i],

outline=self.colors[c])

draw.rectangle( # Text background

[tuple(text_origin), tuple(text_origin + label_size)],

fill=self.colors[c])

draw.text(text_origin, label, fill=(0, 0, 0), font=font) # Copywriting

del draw

supplement

1. concatenate

concatenate connects data elements of the same dimension.

realization:

from keras import backend as K sess = K.get_session() a = K.constant([[2, 4], [1, 2]]) b = K.constant([[3, 2], [5, 6]]) c = [a, b] c = K.concatenate(c, axis=0) print(sess.run(c)) """ [[2. 4.] [1. 2.] [3. 2.] [5. 6.]] """

2. gather

gather selects list elements by index.

realization:

from keras import backend as K sess = K.get_session() a = K.constant([[2, 4], [1, 2], [5, 6]]) b = K.gather(a, [1, 2]) print(sess.run(b)) """ [[1. 2.] [5. 6.]] """

reference

@online{SpikeKing2021Nov,

author = {SpikeKing},

title = {{explore YOLO v3 source code - conclusion forecast}},

organization = {wechat public platform},

year = {2021},

month = {11},

date = {2021-11-22},

urldate = {2021-11-22},

note = {[Online; accessed 22. Nov. 2021]},

url = {https://mp.weixin.qq.com/s/J1ddmUvT_F2HcljLtg_uWQ},

abstract = {{this article mainly shares the details of how to implement the algorithm of YOLO v3, the Keras framework. This is the sixth article, detecting objects in pictures, using the trained model, and screening the optimal detection frame through the product of frame confidence and category confidence.}}

}