Technical sharing is welcome. If you reprint or copy this article, please credit the source. Author: Jack__0023

1. Background

My scenario is different from last time, and the Android built-in face detection I covered then no longer meets my needs: when it stays on for a long time, various small problems show up. (Face recognition itself is not covered in this post. I will publish it separately once I have organized the data and confirmed it in testing.) So I turned to OpenCV.

Note: face detection is not face recognition. Detection answers whether there is a face in the image; recognition answers whose face it is. Age and gender recognition requires OpenCV 3.3 or later, because 3.3 is the first release whose main package includes the DNN module that runs CNN models.

2. OpenCV Environment Setup (read this if you have not set it up yet)

2-1. Preparation

2-1-1. The OpenCV SDK package, which you can download from the official OpenCV website. I use version 3.4.0 because I built this project in early 2019 and am only publishing it now. You have better options, such as version 4.0 or later, which reportedly improves memory usage.

2-2. Importing OpenCV into Android

2-2-1. The download is a compressed archive; extract it.

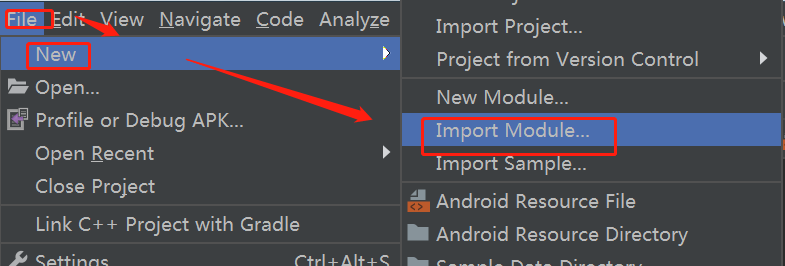

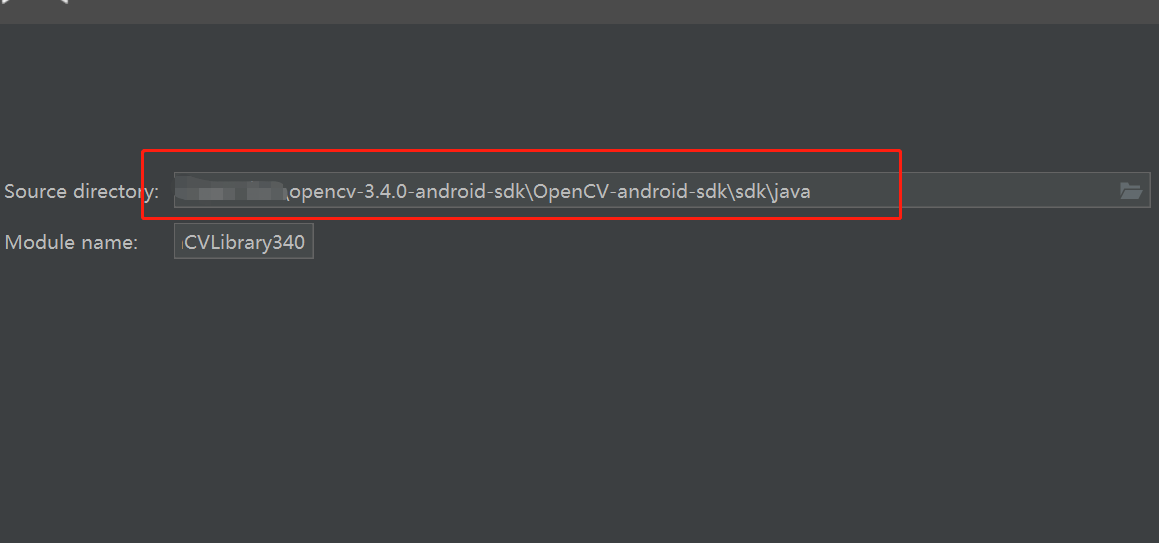

2-2-2. In Android Studio, import the `opencv-3.4.0-android-sdk\OpenCV-android-sdk\sdk\java` folder from the extracted archive as a module (File > New > Import Module), then click Next through the wizard, as shown in the figures.

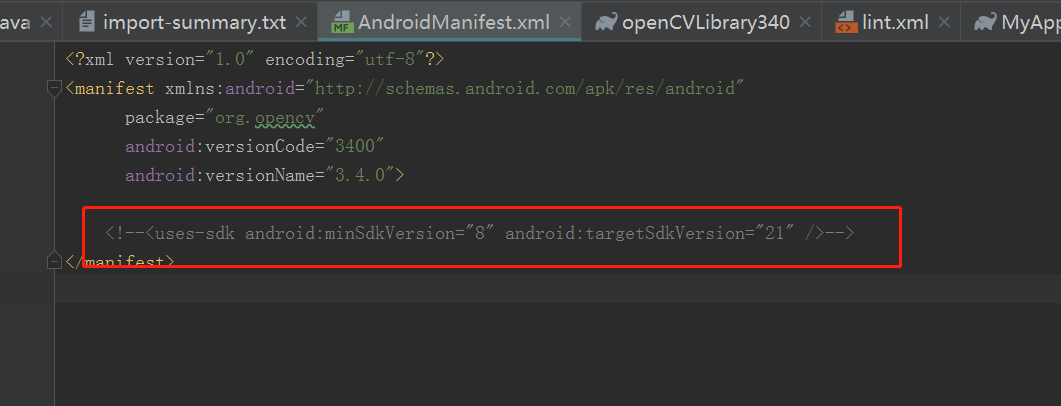

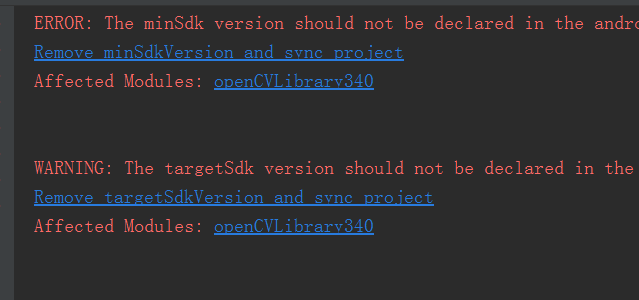

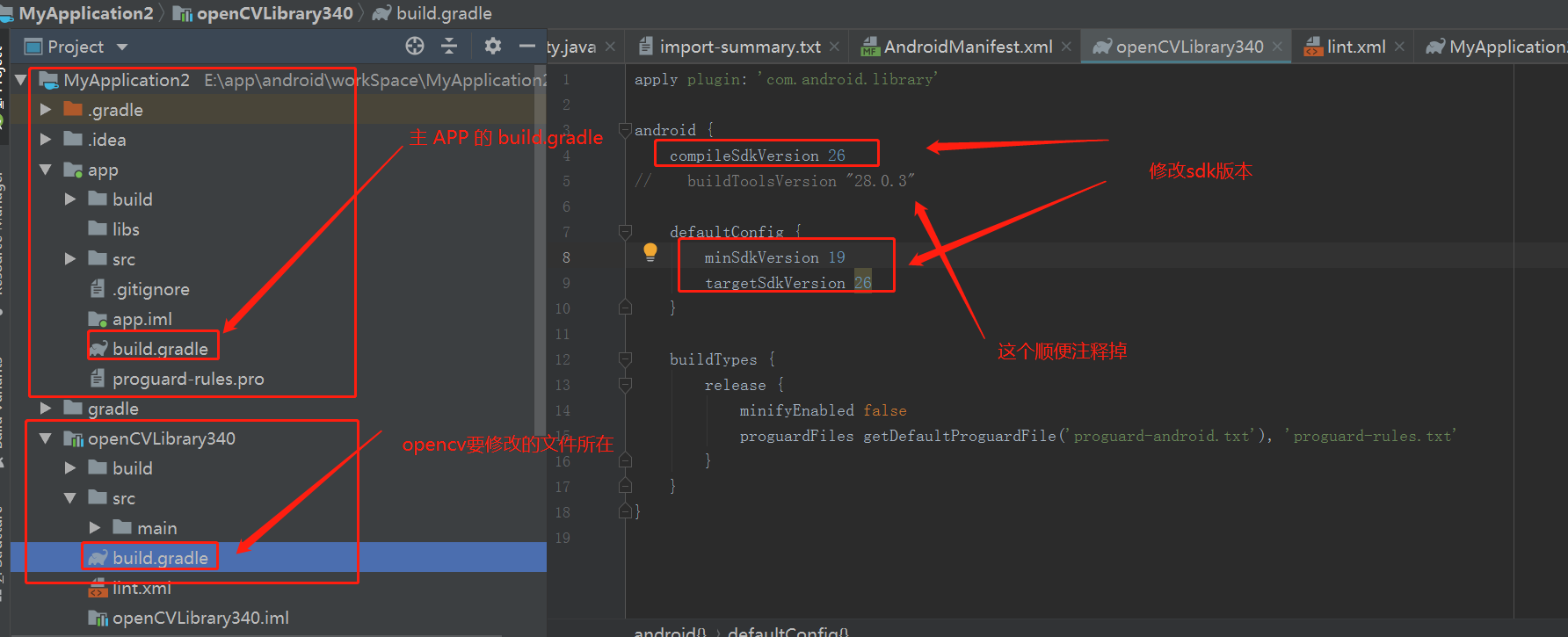

2-2-3. If you get an exception during the import, open the AndroidManifest.xml and build.gradle of the imported OpenCV module.

In AndroidManifest.xml, comment out the SDK version declaration (the `<uses-sdk>` element).

In build.gradle, change the SDK version settings to match the build.gradle of your main app module.

Then sync again, and the problem should be solved. If you run into other problems, feel free to message me.

3. Code (split into two parts: face detection, and gender and age recognition)

3-1. Face Detection Code

3-1-1. Choosing the Detection Models

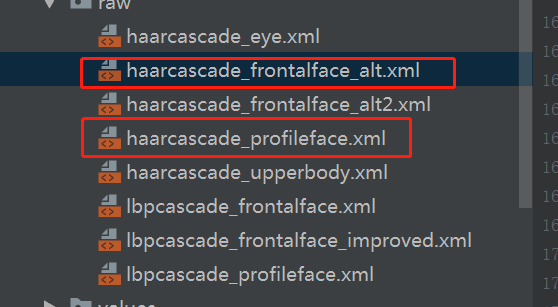

I chose frontal-face and profile-face detection; choose according to your own business needs. OpenCV is quite thoughtful here and also ships cascades for eyes and many other targets. I picked the two OpenCV-trained cascades shown in the figure.

I chose these two Haar cascades because, although Haar is not the fastest option (LBP, for example, is faster), it is relatively accurate. Haar cascades are trained with the AdaBoost algorithm on Haar-like features, which classify image regions by their light and dark intensity differences. You can look up the differences between Haar, LBP, and HOG yourself; many experts have explained them. In my opinion, the two cascades in the figure fit my needs best.
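To make "light and dark intensity differences" concrete: a Haar-like feature is the difference between the pixel sums of adjacent rectangles, and cascades get those sums in constant time from an integral image. The sketch below shows that idea in plain Java on a tiny array. It is an illustration only, not OpenCV's implementation, and the class and method names are made up for this example.

```java
/** Illustrative sketch: integral image plus a two-rectangle Haar-like feature. */
final class HaarFeatureSketch {

    /** Integral image with an extra zero row and column: ii[y][x] = sum of img[0..y-1][0..x-1]. */
    static long[][] integralImage(int[][] img) {
        int h = img.length, w = img[0].length;
        long[][] ii = new long[h + 1][w + 1];
        for (int y = 1; y <= h; y++) {
            long rowSum = 0;
            for (int x = 1; x <= w; x++) {
                rowSum += img[y - 1][x - 1];
                ii[y][x] = ii[y - 1][x] + rowSum;
            }
        }
        return ii;
    }

    /** Pixel sum of the rectangle at (x, y) with width w and height h, using four lookups. */
    static long rectSum(long[][] ii, int x, int y, int w, int h) {
        return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x];
    }

    /** Vertical edge feature: sum of the left half minus sum of the right half. */
    static long edgeFeature(long[][] ii, int x, int y, int w, int h) {
        int half = w / 2;
        return rectSum(ii, x, y, half, h) - rectSum(ii, x + half, y, half, h);
    }

    public static void main(String[] args) {
        // 4x4 image: bright left half (200), dark right half (50)
        int[][] img = new int[4][4];
        for (int y = 0; y < 4; y++) {
            for (int x = 0; x < 4; x++) {
                img[y][x] = x < 2 ? 200 : 50;
            }
        }
        long[][] ii = integralImage(img);
        System.out.println(rectSum(ii, 0, 0, 4, 4));     // 2000
        System.out.println(edgeFeature(ii, 0, 0, 4, 4)); // 1200: strong light/dark edge
    }
}
```

A uniform patch would give a feature value near 0; the cascade's AdaBoost stages learn thresholds on thousands of such values.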

3-1-2. Detection Code

Note: I added throttling in the onCameraFrame method. When nobody is in view, detection runs twice a second; once someone is detected, it runs once a second.

Reason: this avoids unnecessary computation. Without throttling, detection runs five to twenty times a second (depending on the camera and the frame rate it is configured with). With throttling, I ran the app for several days without it exiting due to overheating. Details are in the code comments; if you have questions, message me.
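The throttling rule can be separated from Android and tested on its own. Below is a hedged sketch in plain Java. `DetectionThrottle` is a hypothetical helper, not part of OpenCV or of the activity code. It captures the intent of the rule (at most one check per 500 ms while idle, at most one per second once a face has been seen) using elapsed intervals rather than the activity's whole-second boundaries.

```java
/** Hypothetical helper: decides whether a camera frame should run face detection. */
final class DetectionThrottle {
    private final long idleIntervalMs; // interval while nobody is in view
    private final long busyIntervalMs; // interval while at least one face is in view
    private long lastRunMs = Long.MIN_VALUE;
    private boolean faceSeen = false;

    DetectionThrottle(long idleIntervalMs, long busyIntervalMs) {
        this.idleIntervalMs = idleIntervalMs;
        this.busyIntervalMs = busyIntervalMs;
    }

    /** Returns true if detection should run for a frame captured at nowMs. */
    boolean shouldDetect(long nowMs) {
        long interval = faceSeen ? busyIntervalMs : idleIntervalMs;
        // The MIN_VALUE guard avoids overflow in nowMs - lastRunMs on the first frame
        if (lastRunMs != Long.MIN_VALUE && nowMs - lastRunMs < interval) {
            return false;
        }
        lastRunMs = nowMs;
        return true;
    }

    /** Reports the last detection result so the next interval can be chosen. */
    void onResult(int faceCount) {
        faceSeen = faceCount > 0;
    }

    public static void main(String[] args) {
        DetectionThrottle throttle = new DetectionThrottle(500, 1000);
        int runs = 0;
        // Simulate 2 seconds of frames at 20 fps with nobody in view
        for (long now = 0; now < 2000; now += 50) {
            if (throttle.shouldDetect(now)) {
                runs++;
                throttle.onResult(0);
            }
        }
        System.out.println("idle runs in 2 s: " + runs); // 4
    }
}
```

Passing the timestamp in, instead of calling `System.currentTimeMillis()` inside, is what makes the rule testable without a camera.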

```java
package com.yxm.opencvface.activity;

import android.app.Activity;
import android.content.Context;
import android.graphics.Bitmap;
import android.hardware.Camera;
import android.os.Bundle;
import android.os.Handler;
import android.util.Log;
import android.widget.TextView;

import com.yxm.opencvface.R;

import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;
import org.opencv.core.Rect;
import org.opencv.core.Size;
import org.opencv.objdetect.CascadeClassifier;

import java.io.File;
import java.io.FileOutputStream;
import java.io.InputStream;

/** Face detection demo. @author Yao Xumin (2019/7/24) */
public class TestActivity extends Activity implements CameraBridgeViewBase.CvCameraViewListener2 {

    protected static int LIMIT_TIME = 3; // Threshold: save a picture after someone has watched for 3 ticks (about 3 s)
    protected static Bitmap mFaceBitmap; // Image containing faces
    private CameraBridgeViewBase openCvCameraView;
    private static final String TAG = "yaoxuminTest";
    private Handler mHandler;

    private CascadeClassifier mFrontalFaceClassifier = null; // Frontal face cascade classifier
    private CascadeClassifier mProfileFaceClassifier = null; // Profile (side) face cascade classifier

    private Rect[] mFrontalFacesArray;
    private Rect[] mProfileFacesArray;
    private Rect[] mTempFrontalFacesArray; // Face data kept for gender and age analysis

    private Mat mRgba; // Image container
    private Mat mGray;

    private TextView mFrontalFaceNumber;
    private TextView mProfileFaceNumber;
    private TextView mCurrentNumber;
    private TextView mWaitTime;

    private int mFrontFaces = 0;
    private int mProfileFaces = 0;
    private int mRecodeFaceSize;        // Number of viewers recorded previously
    private int mCurrentFaceSize;       // Number of current viewers
    private int mMaxFaceSize;           // Peak number of viewers
    private long mRecodeTime;           // Time of the last full detection, in seconds
    private long mRecodeFreeTime;       // Time of the last check, in units of 100 ms (drives the 0.5 s check)
    private long mCurrentTime = 0;      // Current time, in seconds
    private long mMilliCurrentTime = 0; // Current time, in units of 100 ms
    private int mWatchTheTime = 0;      // Viewing time, in detection ticks
    private int mLeftFaces = 0;         // Number of people who left
    // Minimum face sizes for detection (smaller = faces farther away can be detected)
    private Size m55Size = new Size(55, 55);
    private Size m65Size = new Size(65, 65);
    private Size mDefault = new Size(); // Empty size = no maximum

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_opencv);
        mHandler = new Handler();
        initComponent(); // Initialize views
        initCamera();    // Initialize the camera
        // OpenCV resources are initialized in onResume
    }

    @Override
    public void onResume() {
        super.onResume();
        if (!OpenCVLoader.initDebug()) {
            Log.e(TAG, "OpenCV init error");
            return; // Classifiers and the camera view cannot work without the native library
        }
        initOpencv(); // Initialize OpenCV resources
    }

    @Override
    public void onPause() {
        super.onPause();
        // Release the camera while the activity is in the background
        if (openCvCameraView != null) {
            openCvCameraView.disableView();
        }
    }

    /** Initialize views */
    protected void initComponent() {
        openCvCameraView = findViewById(R.id.javaCameraView);
        mFrontalFaceNumber = findViewById(R.id.tv_frontal_face_number);
        mProfileFaceNumber = findViewById(R.id.tv_profile_face_number);
        mCurrentNumber = findViewById(R.id.tv_current_number);
        mWaitTime = findViewById(R.id.tv_wait_time);
    }

    /** Initialize the camera */
    protected void initCamera() {
        int cameraId = 0;
        Camera.CameraInfo info = new Camera.CameraInfo();
        int numCameras = Camera.getNumberOfCameras();
        for (int i = 0; i < numCameras; i++) {
            Camera.getCameraInfo(i, info);
            Log.v(TAG, "Using camera, cameraId: " + i);
            cameraId = i;
            break; // Use the first camera found
        }
        openCvCameraView.setCameraIndex(cameraId);      // Camera index: usually 0 = back, 1 = front
        openCvCameraView.enableFpsMeter();              // Show FPS
        openCvCameraView.setCvCameraViewListener(this); // Receive frames in onCameraFrame
        openCvCameraView.setMaxFrameSize(640, 480);     // Maximum frame size
    }

    /** Initialize OpenCV resources */
    protected void initOpencv() {
        initFrontalFace();
        initProfileFace();
        openCvCameraView.enableView(); // Start the preview
    }

    /** Frontal face classifier (works relatively well for me; alternative: lbpcascade_frontalface_improved) */
    public void initFrontalFace() {
        mFrontalFaceClassifier = loadCascade(R.raw.haarcascade_frontalface_alt, "haarcascade_frontalface_alt.xml");
    }

    /** Profile (side) face classifier */
    public void initProfileFace() {
        mProfileFaceClassifier = loadCascade(R.raw.haarcascade_profileface, "haarcascade_profileface.xml");
    }

    /** Copies a cascade from res/raw to app storage (CascadeClassifier needs a file path) and loads it */
    private CascadeClassifier loadCascade(int rawResId, String fileName) {
        File cascadeFile = new File(getDir("cascade", Context.MODE_PRIVATE), fileName);
        try {
            InputStream is = getResources().openRawResource(rawResId);
            FileOutputStream os = new FileOutputStream(cascadeFile);
            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
            is.close();
            os.close();
        } catch (Exception e) {
            Log.e(TAG, e.toString());
            return null;
        }
        CascadeClassifier classifier = new CascadeClassifier(cascadeFile.getAbsolutePath());
        if (classifier.empty()) {
            Log.e(TAG, "Failed to load cascade: " + fileName);
            return null;
        }
        return classifier;
    }

    @Override
    public void onCameraViewStarted(int width, int height) {
        mRgba = new Mat();
        mGray = new Mat();
    }

    @Override
    public void onCameraViewStopped() {
        mRgba.release();
        mGray.release();
    }

    /** Face detection */
    @Override
    public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {
        mRgba = inputFrame.rgba(); // RGBA
        mGray = inputFrame.gray(); // Single-channel grayscale image
        // Mirror horizontally so the front-camera preview is not reversed. mGray is flipped too,
        // so the rectangles detected on it line up with mRgba.
        Core.flip(mRgba, mRgba, 1);
        Core.flip(mGray, mGray, 1);

        mMilliCurrentTime = System.currentTimeMillis() / 100; // Current time, in units of 100 ms
        mCurrentTime = mMilliCurrentTime / 10;                // Current time, in seconds
        // Check every 0.5 s. Without this filter, detection would run at least five times a second,
        // which loads the device, and that many checks are not needed.
        // Nobody detected: check twice a second.
        // Someone detected: check once a second, so the app does not overheat and quit
        // after running for a day or several days.
        if (mRecodeFreeTime + 5 <= mMilliCurrentTime) {
            mRecodeFreeTime = mMilliCurrentTime;
            // While someone is in view, run a full detection at most once per second
            if (mRecodeTime == 0 || mCurrentFaceSize == 0 || mRecodeTime < mCurrentTime) {
                mRecodeTime = mCurrentTime; // Record the current time
                MatOfRect frontalFaces = new MatOfRect();
                MatOfRect profileFaces = new MatOfRect();
                mCurrentFaceSize = 0;
                if (mFrontalFaceClassifier != null) {
                    // scaleFactor 1.1; minNeighbors 5 (a candidate needs 5 overlapping hits to be kept);
                    // flags 2 (CASCADE_SCALE_IMAGE); minSize m65Size; no maxSize.
                    // The smaller the minimum size, the farther away a face can be detected.
                    mFrontalFaceClassifier.detectMultiScale(mGray, frontalFaces, 1.1, 5, 2, m65Size, mDefault);
                    mFrontalFacesArray = frontalFaces.toArray();
                    if (mFrontalFacesArray.length > 0) {
                        Log.i(TAG, "The number of faces is : " + mFrontalFacesArray.length);
                    }
                    mCurrentFaceSize = mFrontFaces = mFrontalFacesArray.length;
                }
                if (mProfileFaceClassifier != null) {
                    // Same idea: minNeighbors 6, minimum size m55Size
                    mProfileFaceClassifier.detectMultiScale(mGray, profileFaces, 1.1, 6, 0, m55Size, mDefault);
                    mProfileFacesArray = profileFaces.toArray();
                    if (mProfileFacesArray.length > 0) {
                        Log.i(TAG, "Side Face Number : " + mProfileFacesArray.length);
                    }
                    mProfileFaces = mProfileFacesArray.length;
                }
                frontalFaces.release();
                profileFaces.release();
                mCurrentFaceSize += mProfileFaces;
                /* Optional: mark each profile face with a box (needs Imgproc and Scalar imports)
                for (Rect face : mProfileFacesArray) {
                    Imgproc.rectangle(mRgba, face.tl(), face.br(), new Scalar(0, 255, 0, 255), 3);
                } */
                if (mCurrentFaceSize > 0) { // Someone was detected
                    mRecodeFaceSize = mCurrentFaceSize; // Record the number of viewers
                    if (mRecodeFaceSize > mMaxFaceSize) { // Record the peak number of viewers
                        mMaxFaceSize = mRecodeFaceSize;
                    }
                    mWatchTheTime++; // Accumulate viewing time
                    if (mWatchTheTime == LIMIT_TIME) { // Threshold reached: save the picture
                        Log.v(TAG, "The number of faces currently recognized is:" + mCurrentFaceSize);
                        /* Bitmap bitmap = Bitmap.createBitmap(mRgba.width(), mRgba.height(), Bitmap.Config.RGB_565);
                        Utils.matToBitmap(mRgba, bitmap);
                        mFaceBitmap = bitmap; */
                    }
                } else if (mWatchTheTime > 0) { // Nobody is watching now, but someone was before
                    if (mWatchTheTime < LIMIT_TIME) {
                        // Below the threshold: no data is reported
                        Log.v(TAG, "Below the threshold, viewers: " + mRecodeFaceSize + ", poster viewing time: " + mWatchTheTime);
                    } else {
                        // At or above the threshold: the picture is sent
                        Log.v(TAG, "Over the threshold, viewers: " + mRecodeFaceSize + ", poster viewing time: " + mWatchTheTime);
                    }
                    Log.i(TAG, "mTempFrontalFacesArray : " + mTempFrontalFacesArray);
                    // Reset the session data so the next viewer starts from zero
                    mWatchTheTime = mMaxFaceSize = mRecodeFaceSize = 0;
                }
                // Show the detected counts (the Handler posts to the UI thread)
                mHandler.post(new Runnable() {
                    @Override
                    public void run() {
                        mFrontalFaceNumber.setText(String.valueOf(mFrontFaces));
                        mProfileFaceNumber.setText(String.valueOf(mProfileFaces));
                        mCurrentNumber.setText(String.valueOf(mCurrentFaceSize));
                        mWaitTime.setText(String.valueOf(mWatchTheTime));
                    }
                });
            }
        }
        return mRgba;
    }
}
```
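The comment on `detectMultiScale` says a smaller minimum size detects faces farther away; it also costs more work. Below is a simplified model of how the detector walks its image pyramid. The window starts at the cascade's training size and grows by `scaleFactor` each step; sizes below `minSize` are skipped, and the loop stops once the window no longer fits the frame. It assumes a 20x20 base window (the size recorded in the stock Haar face cascades, as far as I know; check the `<size>` entry in your XML) and mirrors only the loop structure, not OpenCV's exact code.

```java
/** Simplified model: how many window sizes a cascade evaluates for one frame. */
final class PyramidScales {

    static int countScales(int imageW, int imageH, int baseWindow, double scaleFactor, int minSize) {
        int count = 0;
        for (double factor = 1.0; ; factor *= scaleFactor) {
            double window = baseWindow * factor;
            if (window > imageW || window > imageH) {
                break;    // window no longer fits in the frame
            }
            if (window < minSize) {
                continue; // smaller than minSize: this scale is skipped entirely
            }
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        // 640x480 frame (setMaxFrameSize), assumed 20x20 base window, scaleFactor 1.1
        System.out.println(countScales(640, 480, 20, 1.1, 0));  // 34: no minimum size
        System.out.println(countScales(640, 480, 20, 1.1, 55)); // 23: profile settings (m55Size)
        System.out.println(countScales(640, 480, 20, 1.1, 65)); // 21: frontal settings (m65Size)
    }
}
```

The scales removed by `minSize` are the smallest windows, which are also the ones with the most positions to test per frame, so the savings are larger than the drop in scale count suggests.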

That completes face detection. Next comes age and gender recognition.

3-2. Age and Gender Recognition

3-2-1. Introduction

Recognition simply continues from face detection, but you need to pay attention to when you run it. I have not fine-tuned the recognition models, so the error is still fairly large; you can look up how to fine-tune them.

Since this is only a test, I run gender and age recognition when a previously detected viewer is no longer detected, that is, right after they leave. I'll adjust this later; message me if you need to.
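The timing described above (count viewing ticks while faces are present, then act when the viewers leave) is a small state machine. Here is a hedged, Android-free sketch of it. `ViewingSession` is a hypothetical class: `limitTicks` plays the role of `LIMIT_TIME`, and `onTick` would be called once per detection tick with the number of faces found.

```java
/** Hypothetical sketch of the viewing-session logic behind LIMIT_TIME. */
final class ViewingSession {

    enum Event { NONE, THRESHOLD_REACHED, LEFT_BELOW_THRESHOLD, LEFT_OVER_THRESHOLD }

    private final int limitTicks; // plays the role of LIMIT_TIME
    private int watchTicks = 0;   // plays the role of mWatchTheTime

    ViewingSession(int limitTicks) {
        this.limitTicks = limitTicks;
    }

    /** Call once per detection tick with the number of faces found. */
    Event onTick(int faceCount) {
        if (faceCount > 0) {
            watchTicks++;
            return watchTicks == limitTicks ? Event.THRESHOLD_REACHED : Event.NONE;
        }
        if (watchTicks == 0) {
            return Event.NONE; // nobody was watching before either
        }
        // The viewers just left: this is where age/gender recognition would run
        Event event = watchTicks < limitTicks ? Event.LEFT_BELOW_THRESHOLD : Event.LEFT_OVER_THRESHOLD;
        watchTicks = 0; // reset for the next session
        return event;
    }

    public static void main(String[] args) {
        ViewingSession session = new ViewingSession(3);
        int[] facesPerTick = {0, 1, 2, 1, 0, 0};
        for (int faces : facesPerTick) {
            System.out.println(faces + " -> " + session.onTick(faces));
        }
    }
}
```

Keeping the rule in one place makes it easy to check that the "left" event fires exactly once per session, which is the moment the article uses for recognition.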

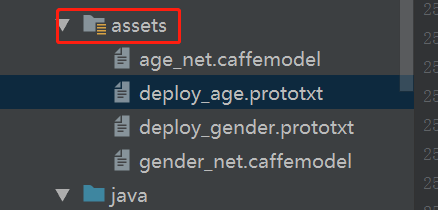

Model resources: I found these two models after a lot of searching. You can also download them from foreign websites, which may require a VPN from mainland China (if you do not know the address, I'll share it at the end). Age and gender recognition are based on CNN models; if you want to know what a CNN is, you can look it up. There is also a paper on this by researchers abroad, but I only skimmed it, so I won't try to explain it. The model files are shown in the figure.

I will share the download for the model files.
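Both networks end in a softmax layer, so the prediction is simply the index of the largest probability; the code later obtains it with `Core.minMaxLoc(probs).maxLoc.x`. Here is the same step in plain Java without OpenCV, assuming the probabilities have already been copied into a `float[]` (for example with `Mat.get(0, 0, float[])`). The label arrays are the ones used in the code; the class and helper names are illustrative.

```java
/** Illustrative sketch: map a softmax output to its label via argmax. */
final class SoftmaxLabel {

    static final String[] AGES = {"0-2", "4-6", "8-13", "15-20", "25-32", "38-43", "48-53", "60+"};
    static final String[] GENDERS = {"male", "female"};

    /** Index of the largest value (the first one wins on ties). */
    static int argMax(float[] probs) {
        int best = 0;
        for (int i = 1; i < probs.length; i++) {
            if (probs[i] > probs[best]) {
                best = i;
            }
        }
        return best;
    }

    /** Returns the label for the most probable class; the lengths must match. */
    static String label(float[] probs, String[] labels) {
        if (probs.length != labels.length) {
            throw new IllegalArgumentException("expected " + labels.length + " outputs, got " + probs.length);
        }
        return labels[argMax(probs)];
    }

    public static void main(String[] args) {
        float[] ageProbs = {0.01f, 0.02f, 0.05f, 0.12f, 0.55f, 0.15f, 0.06f, 0.04f};
        float[] genderProbs = {0.3f, 0.7f};
        System.out.println(label(ageProbs, AGES));       // 25-32
        System.out.println(label(genderProbs, GENDERS)); // female
    }
}
```

The length check is a cheap guard against pairing a label array with the wrong model (8 age classes versus 2 gender classes).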

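3-2-2. Code

3-2-2-1. Resource loading: change the onCameraViewStarted method to the following. It expects the four model files in app/src/main/assets, plus the `Net` fields mAgeNet and mGenderNet and the getPath helper, all of which are defined in the complete code further down.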

```java
@Override
public void onCameraViewStarted(int width, int height) {
    mRgba = new Mat();
    mGray = new Mat();
    // Load the age model (getPath copies the file out of app/src/main/assets)
    String proto = getPath("deploy_age.prototxt");
    String weights = getPath("age_net.caffemodel");
    Log.i(TAG, "onCameraViewStarted| ageProto : " + proto + ",ageWeights : " + weights);
    mAgeNet = Dnn.readNetFromCaffe(proto, weights);
    // Load the gender model
    proto = getPath("deploy_gender.prototxt");
    weights = getPath("gender_net.caffemodel");
    Log.i(TAG, "onCameraViewStarted| genderProto : " + proto + ",genderWeights : " + weights);
    mGenderNet = Dnn.readNetFromCaffe(proto, weights);
    if (mAgeNet.empty() || mGenderNet.empty()) {
        Log.e(TAG, "Network loading failed");
    } else {
        Log.i(TAG, "Network loading success");
    }
}
```
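`Dnn.readNetFromCaffe` needs real file paths, which is why getPath first copies each model out of the APK's assets. The copy itself is ordinary Java stream handling. Below is a hedged sketch of a chunked copy (the class and method names are illustrative): it reads until end of stream instead of relying on `available()`, which is only an estimate, and it never holds a whole model file in one array.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

/** Illustrative sketch: copy a stream to a file in fixed-size chunks. */
final class StreamCopy {

    /** Copies the stream to target in 8 KB chunks and returns the number of bytes written. */
    static long copyToFile(InputStream in, File target) throws IOException {
        long total = 0;
        // try-with-resources closes both streams even if a read or write fails
        try (InputStream src = in; OutputStream out = new FileOutputStream(target)) {
            byte[] buffer = new byte[8192];
            int n;
            while ((n = src.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[100_000]; // stands in for a model file
        File tmp = File.createTempFile("model", ".bin");
        tmp.deleteOnExit();
        long written = copyToFile(new ByteArrayInputStream(data), tmp);
        System.out.println(written + " bytes, file length " + tmp.length());
    }
}
```

On Android you would pass `getAssets().open(name)` as the stream and `new File(getFilesDir(), name)` as the target, then hand `target.getAbsolutePath()` to `readNetFromCaffe`.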

The complete code for face detection plus age and gender recognition is as follows:

```java
package com.yxm.opencvface.activity;

import android.app.Activity;
import android.content.Context;
import android.content.res.AssetManager;
import android.graphics.Bitmap;
import android.hardware.Camera;
import android.os.Bundle;
import android.os.Handler;
import android.util.Log;
import android.widget.TextView;

import com.yxm.opencvface.R;

import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.dnn.Dnn;
import org.opencv.dnn.Net;
import org.opencv.imgproc.Imgproc;
import org.opencv.objdetect.CascadeClassifier;

import java.io.BufferedInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

/** Face detection with gender and age recognition. @author Yao Xumin (2019/7/24) */
public class TestActivity extends Activity implements CameraBridgeViewBase.CvCameraViewListener2 {

    protected static int LIMIT_TIME = 3; // Threshold: save a picture after someone has watched for 3 ticks (about 3 s)
    protected static Bitmap mFaceBitmap; // Image containing faces
    private CameraBridgeViewBase openCvCameraView;
    private static final String TAG = "yaoxuminTest";
    private Handler mHandler;

    private CascadeClassifier mFrontalFaceClassifier = null; // Frontal face cascade classifier
    private CascadeClassifier mProfileFaceClassifier = null; // Profile (side) face cascade classifier

    private Rect[] mFrontalFacesArray;
    private Rect[] mProfileFacesArray;

    private Mat mRgba; // Image container
    private Mat mGray;

    private TextView mFrontalFaceNumber;
    private TextView mProfileFaceNumber;
    private TextView mCurrentNumber;
    private TextView mWaitTime;

    private int mFrontFaces = 0;
    private int mProfileFaces = 0;
    private int mRecodeFaceSize;        // Number of viewers recorded previously
    private int mCurrentFaceSize;       // Number of current viewers
    private int mMaxFaceSize;           // Peak number of viewers
    private long mRecodeTime;           // Time of the last full detection, in seconds
    private long mRecodeFreeTime;       // Time of the last check, in units of 100 ms (drives the 0.5 s check)
    private long mCurrentTime = 0;      // Current time, in seconds
    private long mMilliCurrentTime = 0; // Current time, in units of 100 ms
    private int mWatchTheTime = 0;      // Viewing time, in detection ticks
    private int mLeftFaces = 0;         // Number of people who left
    // Minimum face sizes for detection (smaller = faces farther away can be detected)
    private Size m55Size = new Size(55, 55);
    private Size m65Size = new Size(65, 65);
    private Size mDefault = new Size(); // Empty size = no maximum

    // Gender and age recognition
    private Net mAgeNet;
    private static final String[] AGES = new String[]{"0-2", "4-6", "8-13", "15-20", "25-32", "38-43", "48-53", "60+"};
    private Net mGenderNet;
    private static final String[] GENDERS = new String[]{"male", "female"};
    // Faces kept for gender and age analysis, plus a copy of the frame they were found in
    private Rect[] mTempFrontalFacesArray;
    private Mat mTempFaceFrame;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_opencv);
        mHandler = new Handler();
        initComponent(); // Initialize views
        initCamera();    // Initialize the camera
        // OpenCV resources are initialized in onResume
    }

    @Override
    public void onResume() {
        super.onResume();
        if (!OpenCVLoader.initDebug()) {
            Log.e(TAG, "OpenCV init error");
            return; // Classifiers, networks and the camera view cannot work without the native library
        }
        initOpencv(); // Initialize OpenCV resources
    }

    @Override
    public void onPause() {
        super.onPause();
        // Release the camera while the activity is in the background
        if (openCvCameraView != null) {
            openCvCameraView.disableView();
        }
    }

    /** Initialize views */
    protected void initComponent() {
        openCvCameraView = findViewById(R.id.javaCameraView);
        mFrontalFaceNumber = findViewById(R.id.tv_frontal_face_number);
        mProfileFaceNumber = findViewById(R.id.tv_profile_face_number);
        mCurrentNumber = findViewById(R.id.tv_current_number);
        mWaitTime = findViewById(R.id.tv_wait_time);
    }

    /** Initialize the camera */
    protected void initCamera() {
        int cameraId = 0;
        Camera.CameraInfo info = new Camera.CameraInfo();
        int numCameras = Camera.getNumberOfCameras();
        for (int i = 0; i < numCameras; i++) {
            Camera.getCameraInfo(i, info);
            Log.v(TAG, "Using camera, cameraId: " + i);
            cameraId = i;
            break; // Use the first camera found
        }
        openCvCameraView.setCameraIndex(cameraId);      // Camera index: usually 0 = back, 1 = front
        openCvCameraView.enableFpsMeter();              // Show FPS
        openCvCameraView.setCvCameraViewListener(this); // Receive frames in onCameraFrame
        openCvCameraView.setMaxFrameSize(640, 480);     // Maximum frame size
    }

    /** Initialize OpenCV resources */
    protected void initOpencv() {
        initFrontalFace();
        initProfileFace();
        openCvCameraView.enableView(); // Start the preview
    }

    /** Frontal face classifier (works relatively well for me; alternative: lbpcascade_frontalface_improved) */
    public void initFrontalFace() {
        mFrontalFaceClassifier = loadCascade(R.raw.haarcascade_frontalface_alt, "haarcascade_frontalface_alt.xml");
    }

    /** Profile (side) face classifier */
    public void initProfileFace() {
        mProfileFaceClassifier = loadCascade(R.raw.haarcascade_profileface, "haarcascade_profileface.xml");
    }

    /** Copies a cascade from res/raw to app storage (CascadeClassifier needs a file path) and loads it */
    private CascadeClassifier loadCascade(int rawResId, String fileName) {
        File cascadeFile = new File(getDir("cascade", Context.MODE_PRIVATE), fileName);
        try {
            InputStream is = getResources().openRawResource(rawResId);
            FileOutputStream os = new FileOutputStream(cascadeFile);
            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
            is.close();
            os.close();
        } catch (Exception e) {
            Log.e(TAG, e.toString());
            return null;
        }
        CascadeClassifier classifier = new CascadeClassifier(cascadeFile.getAbsolutePath());
        if (classifier.empty()) {
            Log.e(TAG, "Failed to load cascade: " + fileName);
            return null;
        }
        return classifier;
    }

    @Override
    public void onCameraViewStarted(int width, int height) {
        mRgba = new Mat();
        mGray = new Mat();
        // Load the age model
        String proto = getPath("deploy_age.prototxt");
        String weights = getPath("age_net.caffemodel");
        Log.i(TAG, "onCameraViewStarted| ageProto : " + proto + ",ageWeights : " + weights);
        mAgeNet = Dnn.readNetFromCaffe(proto, weights);
        // Load the gender model
        proto = getPath("deploy_gender.prototxt");
        weights = getPath("gender_net.caffemodel");
        Log.i(TAG, "onCameraViewStarted| genderProto : " + proto + ",genderWeights : " + weights);
        mGenderNet = Dnn.readNetFromCaffe(proto, weights);
        if (mAgeNet.empty() || mGenderNet.empty()) {
            Log.e(TAG, "Network loading failed");
        } else {
            Log.i(TAG, "Network loading success");
        }
    }

    @Override
    public void onCameraViewStopped() {
        mRgba.release();
        mGray.release();
        if (mTempFaceFrame != null) {
            mTempFaceFrame.release();
            mTempFaceFrame = null;
        }
        mTempFrontalFacesArray = null;
    }

    /** Face detection plus gender and age recognition */
    @Override
    public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {
        mRgba = inputFrame.rgba(); // RGBA
        mGray = inputFrame.gray(); // Single-channel grayscale image
        // Mirror horizontally so the front-camera preview is not reversed. mGray is flipped too,
        // so the face rectangles detected on it can be cropped from mRgba.
        Core.flip(mRgba, mRgba, 1);
        Core.flip(mGray, mGray, 1);

        mMilliCurrentTime = System.currentTimeMillis() / 100; // Current time, in units of 100 ms
        mCurrentTime = mMilliCurrentTime / 10;                // Current time, in seconds
        // Check every 0.5 s (see the throttling note above): twice a second while nobody is in view,
        // once a second while someone is, so the app does not overheat during long runs.
        if (mRecodeFreeTime + 5 <= mMilliCurrentTime) {
            mRecodeFreeTime = mMilliCurrentTime;
            // While someone is in view, run a full detection at most once per second
            if (mRecodeTime == 0 || mCurrentFaceSize == 0 || mRecodeTime < mCurrentTime) {
                mRecodeTime = mCurrentTime; // Record the current time
                MatOfRect frontalFaces = new MatOfRect();
                MatOfRect profileFaces = new MatOfRect();
                mCurrentFaceSize = 0;
                if (mFrontalFaceClassifier != null) {
                    // scaleFactor 1.1; minNeighbors 5 (a candidate needs 5 overlapping hits to be kept);
                    // flags 2 (CASCADE_SCALE_IMAGE); minSize m65Size; no maxSize.
                    // The smaller the minimum size, the farther away a face can be detected.
                    mFrontalFaceClassifier.detectMultiScale(mGray, frontalFaces, 1.1, 5, 2, m65Size, mDefault);
                    mFrontalFacesArray = frontalFaces.toArray();
                    if (mFrontalFacesArray.length > 0) {
                        Log.i(TAG, "The number of faces is : " + mFrontalFacesArray.length);
                    }
                    mCurrentFaceSize = mFrontFaces = mFrontalFacesArray.length;
                }
                if (mProfileFaceClassifier != null) {
                    // Same idea: minNeighbors 6, minimum size m55Size
                    mProfileFaceClassifier.detectMultiScale(mGray, profileFaces, 1.1, 6, 0, m55Size, mDefault);
                    mProfileFacesArray = profileFaces.toArray();
                    if (mProfileFacesArray.length > 0) {
                        Log.i(TAG, "Side Face Number : " + mProfileFacesArray.length);
                    }
                    mProfileFaces = mProfileFacesArray.length;
                }
                frontalFaces.release();
                profileFaces.release();
                mCurrentFaceSize += mProfileFaces;
                /* Optional: mark each profile face with a box
                for (Rect face : mProfileFacesArray) {
                    Imgproc.rectangle(mRgba, face.tl(), face.br(), new Scalar(0, 255, 0, 255), 3);
                } */
                if (mCurrentFaceSize > 0) { // Someone was detected
                    mRecodeFaceSize = mCurrentFaceSize; // Record the number of viewers
                    if (mRecodeFaceSize > mMaxFaceSize) { // Record the peak number of viewers
                        mMaxFaceSize = mRecodeFaceSize;
                    }
                    mWatchTheTime++; // Accumulate viewing time
                    // For gender/age recognition: keep the latest frontal faces and a copy of the frame
                    // they came from, because recognition runs after the viewers have left
                    if (mFrontalFacesArray != null && mFrontalFacesArray.length > 0) {
                        mTempFrontalFacesArray = mFrontalFacesArray;
                        if (mTempFaceFrame != null) {
                            mTempFaceFrame.release();
                        }
                        mTempFaceFrame = mRgba.clone();
                    }
                    if (mWatchTheTime == LIMIT_TIME) { // Threshold reached: save the picture
                        Log.v(TAG, "The number of faces currently recognized is:" + mCurrentFaceSize);
                        /* Bitmap bitmap = Bitmap.createBitmap(mRgba.width(), mRgba.height(), Bitmap.Config.RGB_565);
                        Utils.matToBitmap(mRgba, bitmap);
                        mFaceBitmap = bitmap; */
                    }
                } else if (mWatchTheTime > 0) { // Nobody is watching now, but someone was before
                    if (mWatchTheTime < LIMIT_TIME) {
                        // Below the threshold: no data is reported
                        Log.v(TAG, "Below the threshold, viewers: " + mRecodeFaceSize + ", poster viewing time: " + mWatchTheTime);
                    } else {
                        // At or above the threshold: the picture is sent
                        Log.v(TAG, "Over the threshold, viewers: " + mRecodeFaceSize + ", poster viewing time: " + mWatchTheTime);
                    }
                    mWatchTheTime = mMaxFaceSize = mRecodeFaceSize = 0; // Reset the session data
                    Log.i(TAG, "mTempFrontalFacesArray : " + mTempFrontalFacesArray);
                    if (mTempFrontalFacesArray != null && mTempFaceFrame != null) {
                        Log.i(TAG, "mTempFrontalFacesArray.length : " + mTempFrontalFacesArray.length);
                        for (Rect face : mTempFrontalFacesArray) {
                            Log.i(TAG, "Age : " + analyseAge(mTempFaceFrame, face));
                            Log.i(TAG, "Gender : " + analyseGender(mTempFaceFrame, face));
                        }
                        mTempFrontalFacesArray = null;
                        mTempFaceFrame.release();
                        mTempFaceFrame = null;
                    }
                }
                // Show the detected counts (the Handler posts to the UI thread)
                mHandler.post(new Runnable() {
                    @Override
                    public void run() {
                        mFrontalFaceNumber.setText(String.valueOf(mFrontFaces));
                        mProfileFaceNumber.setText(String.valueOf(mProfileFaces));
                        mCurrentNumber.setText(String.valueOf(mCurrentFaceSize));
                        mWaitTime.setText(String.valueOf(mWatchTheTime));
                    }
                });
            }
        }
        return mRgba;
    }

    /** Age analysis: returns the predicted age range, or null on error */
    private String analyseAge(Mat frame, Rect face) {
        return classify(mAgeNet, frame, face, AGES, "age");
    }

    /** Gender analysis: index 0 = male, 1 = female; returns null on error */
    private String analyseGender(Mat frame, Rect face) {
        return classify(mGenderNet, frame, face, GENDERS, "gender");
    }

    /** Crops the face, runs it through the Caffe network and returns the most probable label */
    private String classify(Net net, Mat frame, Rect face, String[] labels, String what) {
        Mat capturedFace = null;
        try {
            capturedFace = new Mat(frame, face);
            // Resize the face to the input resolution of the Caffe model
            Imgproc.resize(capturedFace, capturedFace, new Size(227, 227));
            // Convert RGBA to BGR
            Imgproc.cvtColor(capturedFace, capturedFace, Imgproc.COLOR_RGBA2BGR);
            // Forward the picture through the network
            Mat inputBlob = Dnn.blobFromImage(capturedFace, 1.0f, new Size(227, 227),
                    new Scalar(78.4263377603, 87.7689143744, 114.895847746), false, false);
            net.setInput(inputBlob, "data");
            Mat probs = net.forward("prob").reshape(1, 1);
            // Index of the largest softmax output
            Core.MinMaxLocResult mm = Core.minMaxLoc(probs);
            double result = mm.maxLoc.x;
            Log.i(TAG, "Result is: " + result);
            inputBlob.release();
            probs.release();
            return labels[(int) result];
        } catch (Exception e) {
            Log.e(TAG, "Error processing " + what, e);
            return null;
        } finally {
            if (capturedFace != null) {
                capturedFace.release();
            }
        }
    }

    /** Copies an asset (app/src/main/assets) to internal storage and returns its absolute path */
    private String getPath(String file) {
        AssetManager assetManager = getApplicationContext().getAssets();
        File outputFile = new File(getApplicationContext().getFilesDir(), file);
        try {
            InputStream inputStream = new BufferedInputStream(assetManager.open(file));
            FileOutputStream fileOutputStream = new FileOutputStream(outputFile);
            // Copy in chunks: available() is only an estimate, so one read of available() bytes
            // is not guaranteed to return a whole (large) model file
            byte[] buffer = new byte[8192];
            int bytesRead;
            while ((bytesRead = inputStream.read(buffer)) != -1) {
                fileOutputStream.write(buffer, 0, bytesRead);
            }
            inputStream.close();
            fileOutputStream.close();
            return outputFile.getAbsolutePath();
        } catch (IOException ex) {
            Log.e(TAG, ex.toString());
        }
        return "";
    }
}
```
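For readers wondering what `Dnn.blobFromImage(capturedFace, 1.0f, new Size(227, 227), new Scalar(78.4263377603, 87.7689143744, 114.895847746), false, false)` does to the 227x227 BGR crop: it subtracts the per-channel mean, multiplies by the scale factor (1.0 here), and reorders the interleaved pixels into an NCHW float tensor of shape 1x3x227x227. The plain-Java sketch below shows that transformation on a tiny image. It assumes the pixels are already resized and in BGR order (it does no resizing, cropping or channel swapping), and the names are illustrative.

```java
import java.util.Arrays;

/** Illustrative sketch of mean subtraction plus HWC-to-NCHW reordering. */
final class BlobSketch {

    /**
     * Converts an interleaved 3-channel image (height x width x 3, bytes 0..255) into a
     * 1 x 3 x height x width float array holding (pixel - mean[c]) * scale.
     */
    static float[] toNchwBlob(byte[] interleaved, int height, int width, double[] mean, double scale) {
        int plane = height * width;
        float[] blob = new float[3 * plane];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                for (int c = 0; c < 3; c++) {
                    int value = interleaved[(y * width + x) * 3 + c] & 0xFF; // unsigned byte
                    blob[c * plane + y * width + x] = (float) ((value - mean[c]) * scale);
                }
            }
        }
        return blob;
    }

    public static void main(String[] args) {
        // 1x2 BGR image: pixel0 = (100, 90, 120), pixel1 = (78, 88, 115)
        byte[] img = {(byte) 100, (byte) 90, (byte) 120, (byte) 78, (byte) 88, (byte) 115};
        double[] mean = {78.4263377603, 87.7689143744, 114.895847746}; // the mean used in the code above
        float[] blob = toNchwBlob(img, 1, 2, mean, 1.0);
        // Layout: [B0, B1, G0, G1, R0, R1]
        System.out.println(Arrays.toString(blob));
    }
}
```

Because the model was trained on mean-subtracted BGR input, passing `swapRB=false` here is only correct because the code converts RGBA to BGR first.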

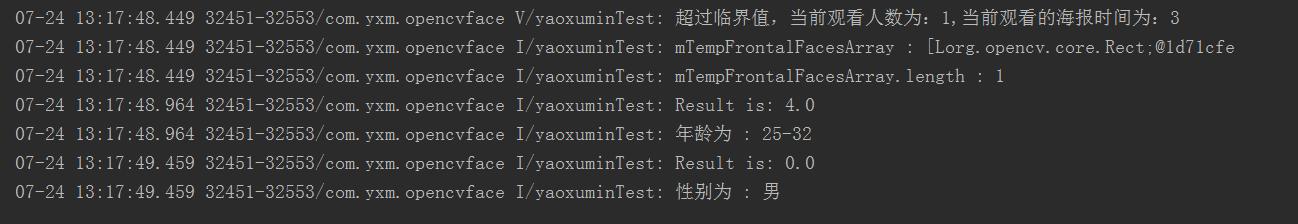

The recognition results are as follows