Principle

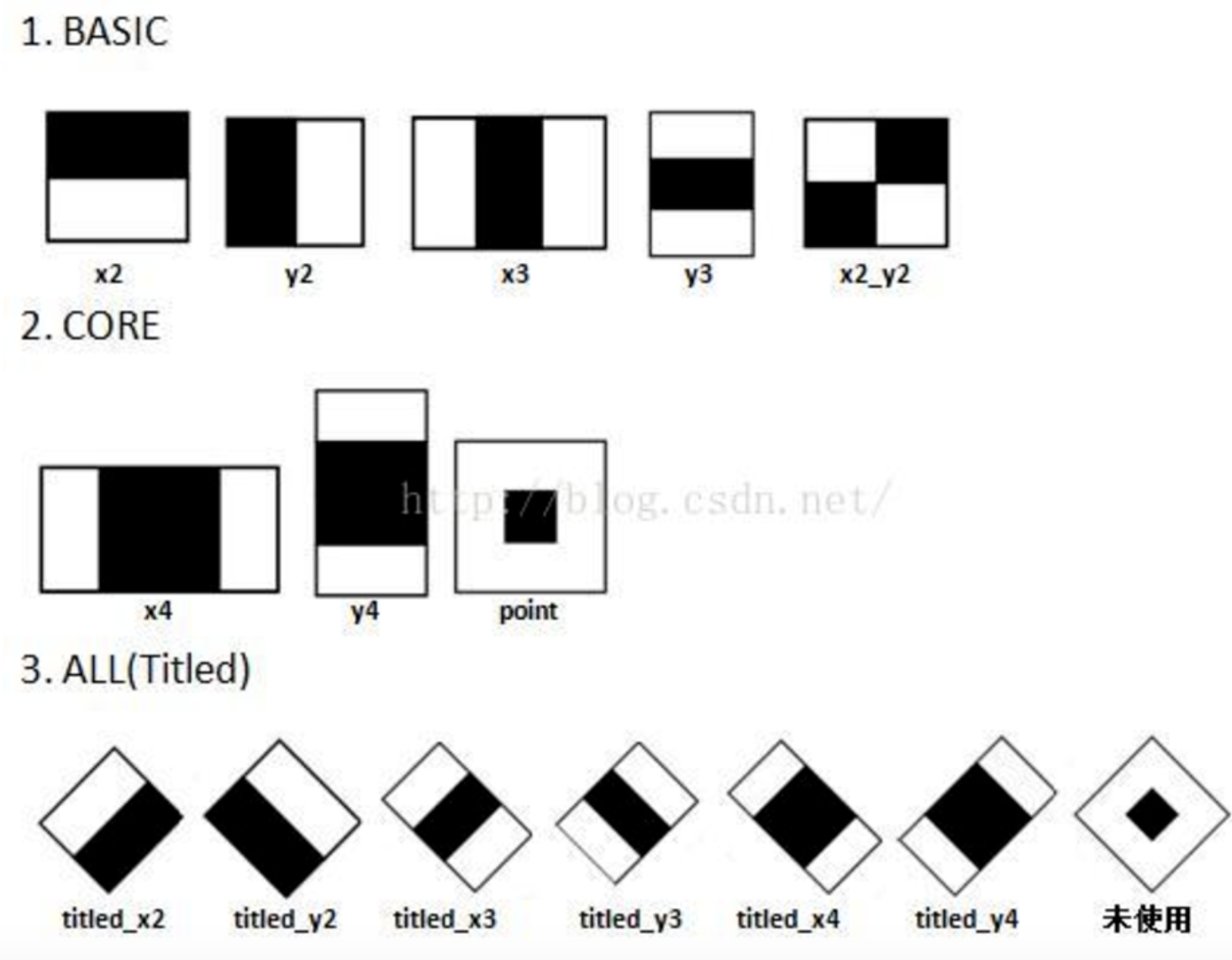

We use machine learning to perform face detection. First, a classifier is trained on a large number of positive samples (images containing faces) and negative samples (images without faces), which requires extracting features from these images. We use the Haar features shown in the figure below, which work much like convolution kernels: each feature is a single value, computed as the sum of the pixel values under the black rectangle minus the sum of the pixel values under the white rectangle.

Haar feature values reflect changes in gray level across the image. Some facial characteristics can be described simply with rectangular features: the eyes are darker than the cheeks, the two sides of the nose bridge are darker than the bridge itself, and the mouth is darker than its surroundings.
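To make the definition concrete, here is a minimal NumPy sketch of one two-rectangle feature evaluated on a synthetic 8×8 patch. The function names and the pixel values 50 and 200 are made up for illustration, and the black rectangle is assumed to be the upper half. A dark band above a bright band (like the eyes above the cheeks) gives a large-magnitude value, while a flat patch gives 0. With the black-minus-white convention the value is negative here because the black rectangle covers the darker pixels; what matters to the classifier is the size of the contrast, which it later compares with a learned threshold.

```python
import numpy as np


def rect_sum(img, x, y, w, h):
    """Sum of pixel values in the rectangle with top-left corner (x, y), width w, height h."""
    # Convert to a Python int so later subtraction cannot wrap around like unsigned NumPy ints
    return int(img[y:y + h, x:x + w].sum())


def two_rect_vertical_feature(img, x, y, w, h):
    """Two-rectangle Haar feature: black upper half, white lower half (h assumed even).

    Value = sum(black rectangle) - sum(white rectangle).
    """
    half = h // 2
    black = rect_sum(img, x, y, w, half)         # upper half, e.g. the eye band
    white = rect_sum(img, x, y + half, w, half)  # lower half, e.g. the cheeks
    return black - white


patch = np.full((8, 8), 200, dtype=np.uint8)  # bright "cheek" pixels
patch[:4, :] = 50                             # darker "eye band" on top
print(two_rect_vertical_feature(patch, 0, 0, 8, 8))                                 # -4800
print(two_rect_vertical_feature(np.full((8, 8), 100, dtype=np.uint8), 0, 0, 8, 8))  # 0
```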

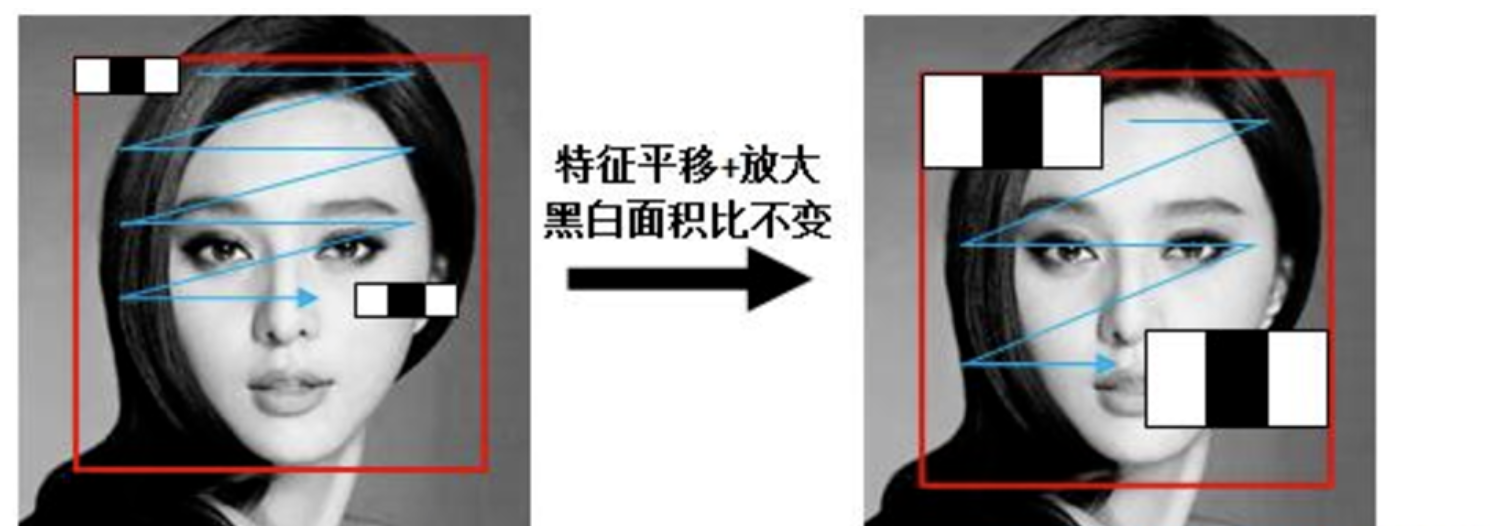

A Haar feature can be placed anywhere in the image and its size can be changed freely, so a rectangular feature value depends on three factors: the template type, its position, and its size. Varying these three factors produces a very large number of rectangular features even within a small detection window.
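The growth in the number of features can be checked by counting placements directly. This sketch assumes a 24×24 detection window and five basic templates, written as (width, height) in cells: two-rectangle (2×1, 1×2), three-rectangle (3×1, 1×3) and four-rectangle (2×2). Each template is scaled to every integer multiple of its base size that fits, then slid to every position. The template list and function name are illustrative assumptions, not taken from OpenCV.

```python
def count_features(window_w, window_h, base_w, base_h):
    """Count all sizes and positions of one Haar template inside a window."""
    total = 0
    for w in range(base_w, window_w + 1, base_w):      # widths that keep the template shape
        for h in range(base_h, window_h + 1, base_h):  # heights that keep the template shape
            total += (window_w - w + 1) * (window_h - h + 1)  # positions for this size
    return total


# Assumed set of basic templates as (width, height) in cells
TEMPLATES = [(2, 1), (1, 2), (3, 1), (1, 3), (2, 2)]

total = sum(count_features(24, 24, bw, bh) for bw, bh in TEMPLATES)
print(total)  # 162336
```

Even a 24×24 window yields over 160,000 candidate features, which is why a learning algorithm is needed to pick the few that are actually useful.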

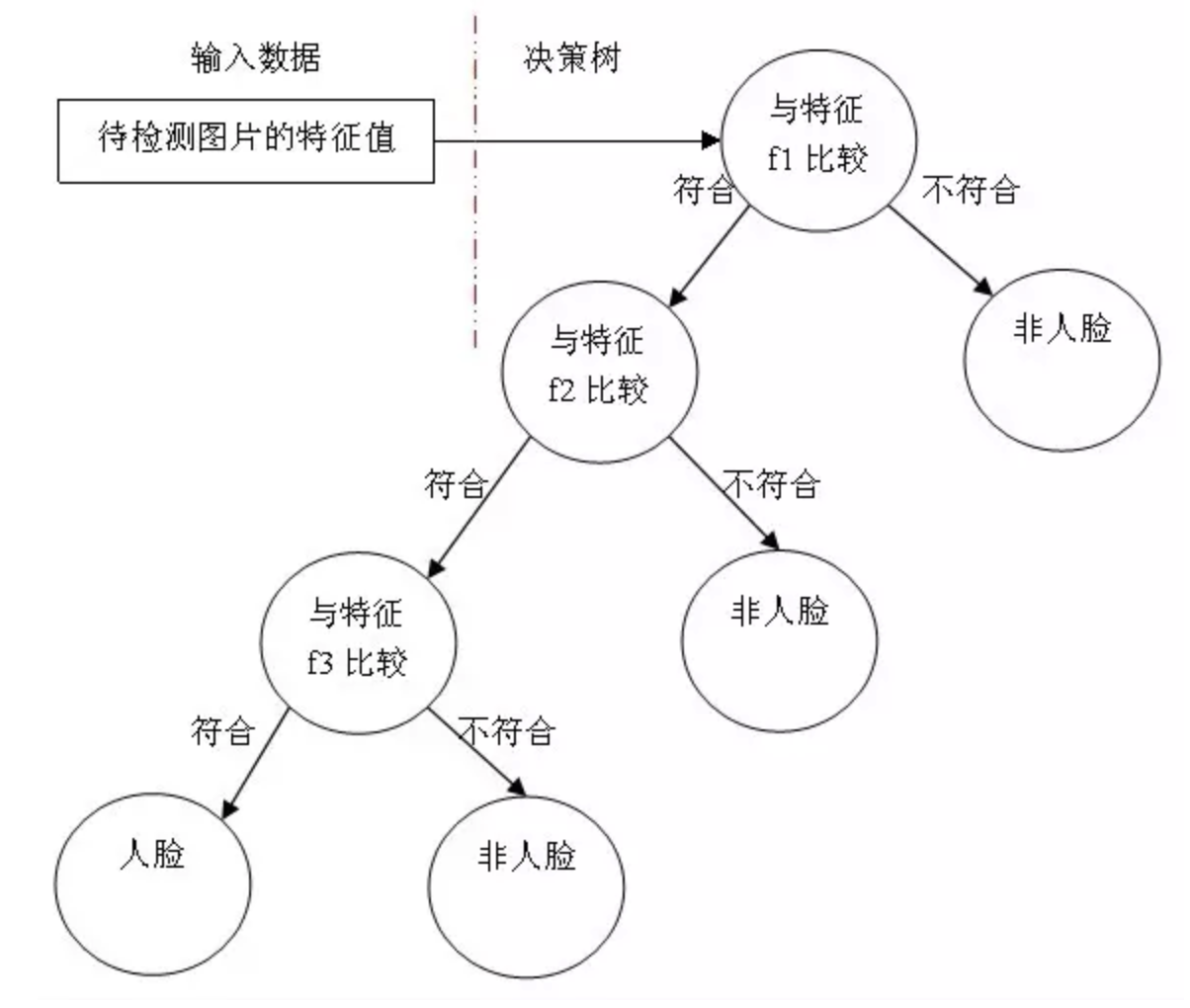

Once the features are computed, a cascade of AdaBoost classifiers built from decision trees is trained to decide whether a detection window contains a face.
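The cascade idea can be sketched in plain Python. This is a simplified model, not OpenCV's implementation or its XML format: each stage sums the AdaBoost weights of the decision stumps (one-split trees) that vote "face" and compares the total with a stage threshold, and a window is rejected as soon as any stage fails. Since most windows in an image contain no face, most of them are discarded after the first few stages. The class names, fields, and thresholds below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Stump:
    """One-split decision tree (weak classifier) on a single Haar feature."""
    feature_index: int  # which feature value to look at
    threshold: float
    polarity: int       # +1 or -1: selects the direction of the inequality
    alpha: float        # weight given to this weak classifier by AdaBoost

    def predict(self, features: Sequence[float]) -> int:
        # Outputs 1 ("face-like") when polarity * value < polarity * threshold, else 0
        value = features[self.feature_index]
        return 1 if self.polarity * value < self.polarity * self.threshold else 0


@dataclass
class Stage:
    """A boosted stage: weighted vote of stumps compared against a stage threshold."""
    stumps: List[Stump]
    threshold: float

    def passes(self, features: Sequence[float]) -> bool:
        score = sum(s.alpha * s.predict(features) for s in self.stumps)
        return score >= self.threshold


def cascade_predict(stages: Sequence[Stage], features: Sequence[float]) -> bool:
    """A window is classified as a face only if it passes every stage, in order."""
    for stage in stages:
        if not stage.passes(features):
            return False  # early rejection: later stages are never evaluated
    return True


# Toy two-stage cascade with made-up thresholds, for illustration only
stages = [
    Stage([Stump(feature_index=0, threshold=0.0, polarity=1, alpha=1.0)], threshold=0.5),
    Stage([Stump(feature_index=1, threshold=10.0, polarity=-1, alpha=1.0)], threshold=0.5),
]
print(cascade_predict(stages, [-5.0, 20.0]))  # True: passes both stages
print(cascade_predict(stages, [5.0, 20.0]))   # False: rejected by the first stage
```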

Implementation

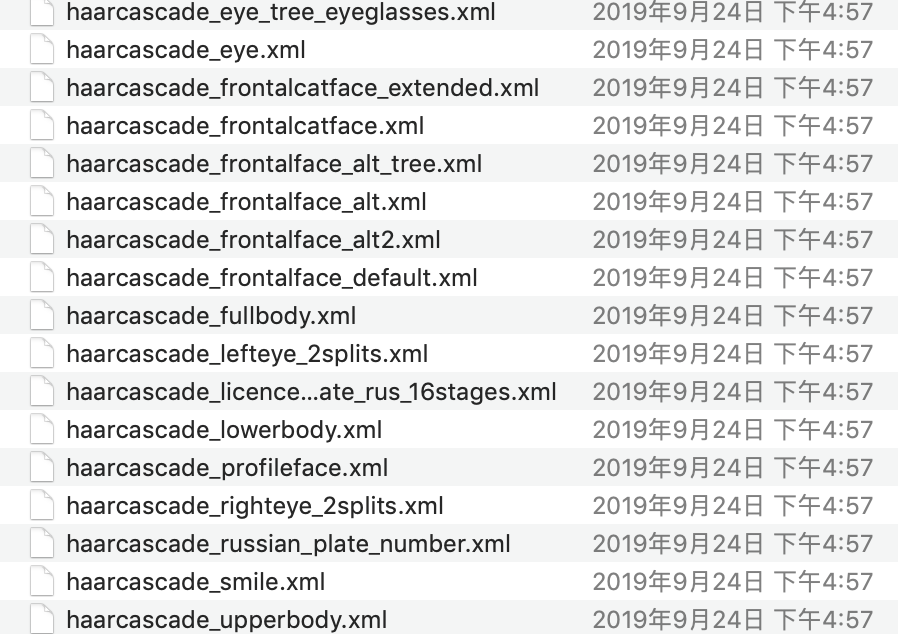

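The trained detectors shipped with OpenCV (for faces, eyes, cat faces, and so on) are stored as XML files. To find where they are installed, print the location of the OpenCV package:

```python
import cv2 as cv
print(cv.__file__)
```

In the pip-installed `opencv-python` package, the XML files are in the `data` folder next to the printed file.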
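A small sketch for listing the bundled cascades. It assumes the pip `opencv-python` package, which exposes the cascade folder as `cv2.data.haarcascades`; other builds may not provide `cv2.data`, in which case the helper returns `None` and the folder has to be found manually. `find_cascade_dir` and `list_cascades` are hypothetical helper names.

```python
import os


def find_cascade_dir():
    """Return OpenCV's bundled Haar cascade folder, or None if it cannot be determined.

    Assumes the pip opencv-python package, which provides cv2.data.haarcascades.
    """
    try:
        import cv2
    except ImportError:
        return None
    data = getattr(cv2, "data", None)
    return getattr(data, "haarcascades", None)


def list_cascades(directory):
    """Return the sorted names of the .xml files in a directory."""
    return sorted(name for name in os.listdir(directory) if name.endswith(".xml"))


if __name__ == "__main__":
    folder = find_cascade_dir()
    if folder is None:
        print("cv2.data.haarcascades is not available; look next to cv2.__file__ instead")
    else:
        print(folder)
        for name in list_cascades(folder):
            print(name)
```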

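We then use these files to detect faces, eyes, and so on. The detection process is as follows:

- Read the image and convert it to grayscale.

- Instantiate the classifier objects for face and eye detection:

```python
# Create an empty cascade classifier
classifier = cv.CascadeClassifier()
# Load the trained face model (the XML file is assumed to be in the working
# directory; otherwise give its full path). load() returns False on failure.
if not classifier.load("haarcascade_frontalface_default.xml"):
    raise IOError("cannot load haarcascade_frontalface_default.xml")
```

Passing the file name directly to `cv.CascadeClassifier(...)` loads the model in one step, so a separate `load()` call is then unnecessary.

- Detect faces and eyes:

```python
# scale_factor, min_neighbors, min_size and max_size are placeholders for your own values
rects = classifier.detectMultiScale(gray, scaleFactor=scale_factor, minNeighbors=min_neighbors,
                                    minSize=min_size, maxSize=max_size)
```

The call returns one `(x, y, w, h)` rectangle per detected object. Parameters:

- `gray`: the grayscale image to search

- `scaleFactor`: how much the image is shrunk at each scale step (for example, 1.1 means 10% per step); smaller values search more scales, which is slower but less likely to miss a face

- `minNeighbors`: how many overlapping candidate detections a region needs before it is accepted as a target; higher values give fewer false positives but may miss faces

- `minSize` and `maxSize`: the minimum and maximum object size as `(width, height)`; objects outside this range are ignored

Pass these arguments by keyword: in the Python binding a `flags` argument sits between `minNeighbors` and `minSize`, so positional arguments would be assigned to the wrong parameters.

Finally, draw the detection results on the image.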
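The effect of `scaleFactor`, `minSize` and `maxSize` can be illustrated by listing which window sizes get scanned. This is a simplified model, not OpenCV's exact loop: it assumes square windows, a 24×24 trained window (the size commonly used for frontal-face cascades), and a window that grows by `scale_factor` per step (equivalent to shrinking the image) until it no longer fits or exceeds the maximum size. It does not model `minNeighbors`. The function name is hypothetical.

```python
def scanned_window_sizes(image_size, base_size=24, scale_factor=1.1, min_size=0, max_size=None):
    """Side lengths (in original-image pixels) of the windows a cascade would scan.

    Simplified model: square image of side image_size, square windows grown by
    scale_factor per step; sizes below min_size are skipped, and scanning stops
    once the window is larger than the image or max_size.
    """
    if scale_factor <= 1:
        raise ValueError("scale_factor must be greater than 1")
    limit = image_size if max_size is None else min(image_size, max_size)
    sizes = []
    factor = 1.0
    while True:
        size = round(base_size * factor)
        if size > limit:
            break
        if size >= min_size:
            sizes.append(size)
        factor *= scale_factor
    return sizes


print(scanned_window_sizes(100, scale_factor=2.0))  # [24, 48, 96]
# A smaller scale factor scans more sizes: slower, but less likely to miss a face
print(len(scanned_window_sizes(100, scale_factor=1.2)), len(scanned_window_sizes(100, scale_factor=1.1)))
```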

Example:

```python
import cv2 as cv
import matplotlib.pyplot as plt

# Folder of the bundled cascade XML files (cv.data exists in the pip opencv-python
# package; with other builds, use the folder located earlier instead)
cascade_dir = cv.data.haarcascades

# 1. Read the image and convert it to grayscale
img = cv.imread("16.jpg")
if img is None:
    raise FileNotFoundError("cannot read 16.jpg")
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

# 2. Instantiate the OpenCV face and eye classifiers (the constructor loads the XML)
face_cas = cv.CascadeClassifier(cascade_dir + "haarcascade_frontalface_default.xml")
eyes_cas = cv.CascadeClassifier(cascade_dir + "haarcascade_eye.xml")

# 3. Detect faces
faceRects = face_cas.detectMultiScale(gray, scaleFactor=1.2, minNeighbors=3, minSize=(32, 32))
for (x, y, w, h) in faceRects:
    # Draw the face box: the opposite corner is (x + w, y + h)
    cv.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 3)
    # 4. Detect eyes only inside the detected face
    roi_color = img[y:y + h, x:x + w]
    roi_gray = gray[y:y + h, x:x + w]
    eyes = eyes_cas.detectMultiScale(roi_gray)
    for (ex, ey, ew, eh) in eyes:
        cv.rectangle(roi_color, (ex, ey), (ex + ew, ey + eh), (0, 255, 0), 2)

# 5. Show the result (OpenCV stores BGR, Matplotlib expects RGB, hence [:, :, ::-1])
plt.figure(figsize=(8, 6), dpi=100)
plt.imshow(img[:, :, ::-1])
plt.title("Detection result")
plt.xticks([]), plt.yticks([])
plt.show()
```
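The eye boxes land in the right place on `img` even though they are drawn on `roi_color`, for two reasons: NumPy slicing returns a view that shares memory with the full image, and the eye coordinates are relative to the face box. The sketch below shows both points with a plain NumPy array standing in for the image, so no OpenCV is needed; the face box values and `to_image_coords` are hypothetical.

```python
import numpy as np

img = np.zeros((10, 10), dtype=np.uint8)  # stand-in for the image
x, y, w, h = 2, 3, 5, 4                   # a hypothetical face box
roi = img[y:y + h, x:x + w]               # slicing returns a view, not a copy
roi[0, 0] = 255                           # writing into the ROI ...
print(img[y, x])                          # ... changes the full image: 255
print(np.shares_memory(roi, img))         # True


def to_image_coords(face_rect, eye_rect):
    """Convert an eye box found inside a face ROI to full-image coordinates."""
    fx, fy, _, _ = face_rect
    ex, ey, ew, eh = eye_rect
    return (fx + ex, fy + ey, ew, eh)


print(to_image_coords((x, y, w, h), (1, 1, 2, 1)))  # (3, 4, 2, 1)
```

If the ROI were taken with `.copy()`, drawings on it would not appear in `img`, and the eye boxes would have to be converted with a helper like `to_image_coords` before drawing.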

Face detection in video:

```python
import cv2 as cv

# 1. Open the video file
cap = cv.VideoCapture("movie.mp4")

# 2. Load the face classifier once, outside the loop (reloading it per frame is wasted work)
face_cas = cv.CascadeClassifier(cv.data.haarcascades + "haarcascade_frontalface_default.xml")

# 3. Run face detection on every frame
while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        # End of the video or a read error: stop instead of looping forever
        break
    gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)
    faceRects = face_cas.detectMultiScale(gray, scaleFactor=1.2, minNeighbors=3, minSize=(32, 32))
    for (x, y, w, h) in faceRects:
        # Draw the face box: the opposite corner is (x + w, y + h)
        cv.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 3)
    cv.imshow("frame", frame)
    # Press q to quit
    if cv.waitKey(1) & 0xFF == ord('q'):
        break

# 4. Release resources
cap.release()
cv.destroyAllWindows()
```