Requirement

Recently I needed to build a custom filter display on top of ffplay. The filter is described by a binary .bin file: the player takes a segment of that byte stream and blacks out parts of the video according to it. Each bit corresponds to one 16x16 pixel block of the video, and when the bit is 1, that block is blacked out.
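To make the bit-to-block mapping concrete, here is a minimal sketch. It assumes that bits are consumed most-significant-bit first within each byte and that blocks are numbered row by row (left to right, top to bottom). The original does not specify either detail, and the helper names bit_is_set() and block_of_bit() are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define BLOCK_SIZE 16  /* each bit covers a 16x16 pixel block */

/* Assumption: bit 0 is the most significant bit of byte 0 (MSB-first). */
static int bit_is_set(const uint8_t *bits, size_t index)
{
    return (bits[index / 8] >> (7 - index % 8)) & 1;
}

/* Map bit number `index` to the top-left pixel of its block, assuming
 * blocks are numbered row by row across a frame `frame_width` pixels wide. */
static void block_of_bit(size_t index, int frame_width, int *x, int *y)
{
    /* round up so a partial block at the right edge still gets a bit */
    int blocks_per_row = (frame_width + BLOCK_SIZE - 1) / BLOCK_SIZE;
    *x = (int)(index % blocks_per_row) * BLOCK_SIZE;
    *y = (int)(index / blocks_per_row) * BLOCK_SIZE;
}
```

For a 480-pixel-wide frame there are 30 blocks per row, so under these assumptions bit 31 covers the block whose top-left corner is (16, 16).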

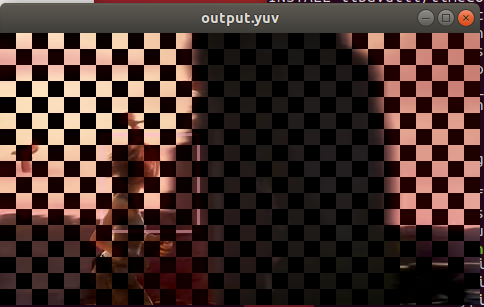

The figure above shows a finished example, which makes my intent easier to understand.

Approach

Because this tool needs to play local videos, pull network streams and so on, I modified the ffplay source code directly to build a customized version of ffplay.

I originally meant to write this up as a fully organized article, but there was too much material, so I eventually gave up on that. If you just want to see how the effect is achieved, skip straight to the code at the bottom.

Overall structure diagram

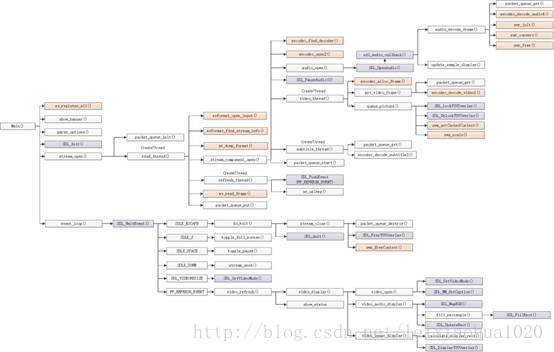

The diagram above shows the overall structure of ffplay; it was drawn by Thor (Lei Xiaohua) in one of his articles.

Thor's article is very helpful. I am using FFmpeg 4.3.1, so the code differs slightly from his article, but the principles are the same.

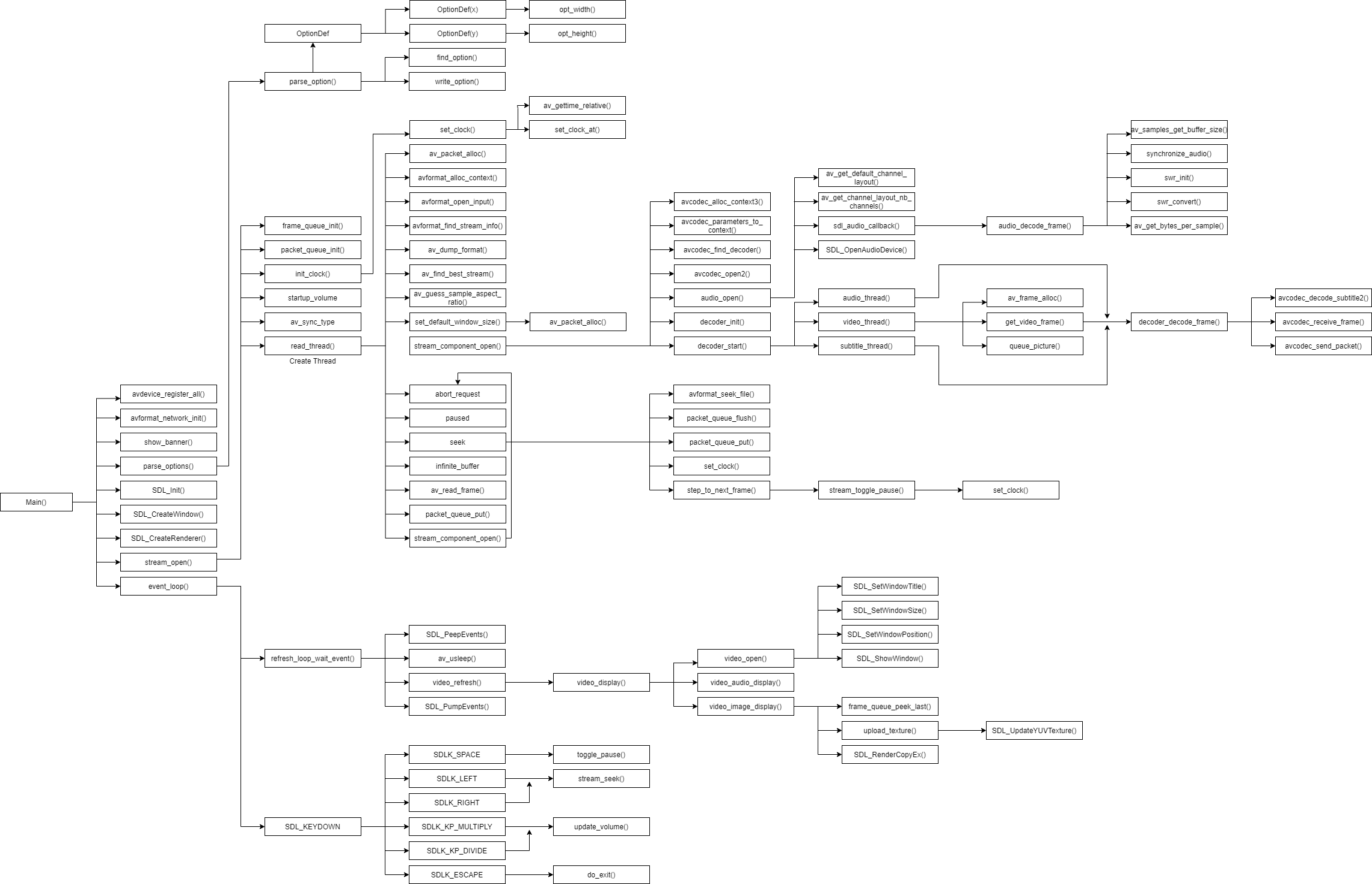

The flow chart in another article ("ffplay flow chart") is more detailed and is based on FFmpeg 4.0, which is closer to the current code; it is also worth a look.

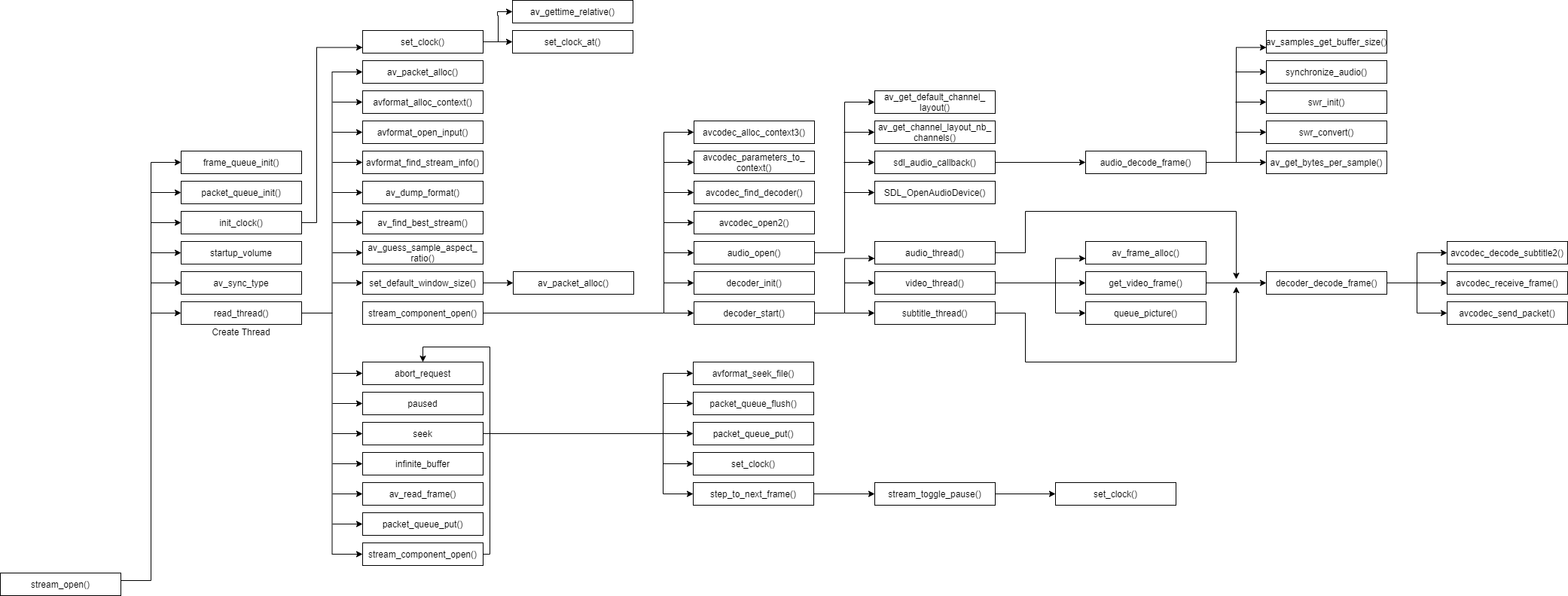

To get familiar with the overall process, I also drew my own ffplay flow chart.

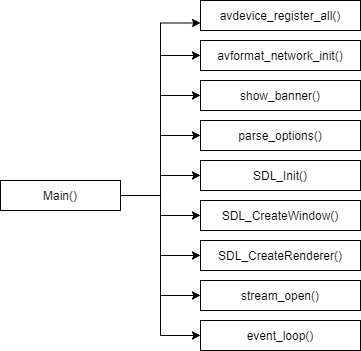

main() function

ffplay is one of the command-line tools shipped with FFmpeg; after compilation it is placed in FFmpeg's bin directory. It is a complete, standalone player.

The following functions are called in the main() function:

avdevice_register_all(): register all input and output devices provided by libavdevice.

avformat_network_init(): initialize the network libraries. The official documentation states that this call is optional; ffplay makes it anyway because it supports playing network streams. The function is part of libavformat (see the libavformat documentation).

show_banner(): print FFmpeg version information (build time, configure options, library versions, etc.).

parse_options(): parse the command-line options.

SDL_Init(): initialize SDL. ffplay uses SDL for both video display and audio output.

SDL_CreateWindow(): create an SDL window (see its page on the SDL Wiki).

SDL_CreateRenderer(): create a rendering context for the SDL window (see the SDL_CreateRenderer and SDL_RendererFlags pages on the SDL Wiki).

stream_open(): open the input media.

event_loop(): handle keyboard events and video refresh. In my view, it is effectively the main thread's loop.

avdevice_register_all()

This function belongs to the libavdevice library. Reading data through libavdevice works much like opening a video file, because FFmpeg treats a system device as just another input format (an AVInputFormat).

When using libavdevice, include its header file:

```c
#include "libavdevice/avdevice.h"
```

Then register libavdevice in the program:

```c
avdevice_register_all();
```

For a concrete application of avdevice, see Thor's article "The simplest example of AVDevice based on FFmpeg (reading camera)".

Note that much older material performs initialization with av_register_all(). That API has been deprecated for a long time and ffplay no longer calls it; see the following entry in the API changelog:

```
2018-02-06 - 0694d87024 - lavf 58.9.100 - avformat.h
  Deprecate use of av_register_input_format(), av_register_output_format(),
  av_register_all(), av_iformat_next(), av_oformat_next().
  Add av_demuxer_iterate(), and av_muxer_iterate().
```

This entry is in:

ffmpeg/doc/APIchanges

avformat_network_init()

When developing with the FFmpeg libraries, streaming media (or a local file) is opened with avformat_open_input().

When opening a network stream, call avformat_network_init() first.

So if you want to pull a stream over RTSP or otherwise use the network, run the network initialization function avformat_network_init() before pulling the stream.

Thor's article "Method of opening streaming media in the FFMPEG class library" explains this and includes a small RTSP pull example, which is worth reading.

show_banner()

Prints FFmpeg's version information (build time, configure options, library versions, etc.) when the program starts.

parse_options()

Reference: Thor's article "Simple analysis of the function structure of ffplay.c".

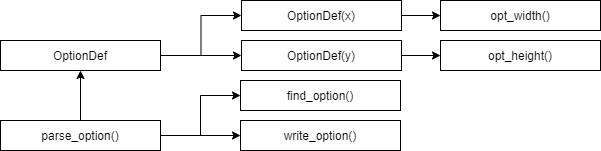

parse_options() parses all command-line options; for example, the "-f" option in the command "ffplay -f h264 test.264" is parsed here. Its call structure is shown in the figure above. Note that parse_options() in ffplay (ffplay.c) and parse_options() in ffmpeg (ffmpeg.c) are actually the same.

parse_options() will loop through parse_option() until all options are resolved.

parse_option()

Parses a single option. I won't go through the detailed steps here.

OptionDef structure

Each option of ffmpeg and ffplay is described by an OptionDef structure, defined as follows:

```c
typedef struct OptionDef {
    const char *name;
    int flags;
#define HAS_ARG      0x0001
#define OPT_BOOL     0x0002
#define OPT_EXPERT   0x0004
#define OPT_STRING   0x0008
#define OPT_VIDEO    0x0010
#define OPT_AUDIO    0x0020
#define OPT_INT      0x0080
#define OPT_FLOAT    0x0100
#define OPT_SUBTITLE 0x0200
#define OPT_INT64    0x0400
#define OPT_EXIT     0x0800
#define OPT_DATA     0x1000
#define OPT_PERFILE  0x2000     /* the option is per-file (currently ffmpeg-only).
                                   implied by OPT_OFFSET or OPT_SPEC */
#define OPT_OFFSET   0x4000     /* option is specified as an offset in a passed optctx */
#define OPT_SPEC     0x8000     /* option is to be stored in an array of SpecifierOpt.
                                   Implies OPT_OFFSET. Next element after the offset is
                                   an int containing element count in the array. */
#define OPT_TIME     0x10000
#define OPT_DOUBLE   0x20000
#define OPT_INPUT    0x40000
#define OPT_OUTPUT   0x80000
    union {
        void *dst_ptr;
        int (*func_arg)(void *, const char *, const char *);
        size_t off;
    } u;
    const char *help;
    const char *argname;
} OptionDef;
```

Important fields:

name: the option name, for example "i", "f", "codec" and so on.

flags: the option's type flags, for example HAS_ARG (the option takes a value), OPT_STRING (the value is a string), OPT_TIME (the value is a time).

u: where the option's value is stored, or the handler function called for it.

help: a description of the option.
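The flags decide how parse_option() stores a value: either through u.dst_ptr or by calling u.func_arg. Below is a simplified, self-contained sketch of that dispatch and of the parse_options() loop; it is not the real cmdutils.c code. It keeps only four flags and ignores details such as the "-no" prefix for boolean options and OPT_OFFSET/OPT_SPEC. The option table and globals are demo stand-ins.

```c
#include <stdlib.h>
#include <string.h>

/* A few of the flag bits from cmdutils.h */
#define HAS_ARG    0x0001
#define OPT_BOOL   0x0002
#define OPT_STRING 0x0008
#define OPT_INT    0x0080

typedef struct OptionDef {
    const char *name;
    int flags;
    union {
        void *dst_ptr;                                       /* where to store the value */
        int (*func_arg)(void *, const char *, const char *); /* or a handler to call */
    } u;
    const char *help;
} OptionDef;

/* Demo targets, standing in for ffplay's globals */
static int audio_disable;
static int startup_volume = 100;
static const char *window_title;
static int screen_width;
static const char *input_filename;

static int opt_width(void *optctx, const char *opt, const char *arg)
{
    (void)optctx; (void)opt;
    screen_width = atoi(arg);
    return screen_width > 0 ? 0 : -1;
}

static const OptionDef options[] = {
    { "an",           OPT_BOOL,             { &audio_disable },        "disable audio" },
    { "volume",       OPT_INT | HAS_ARG,    { &startup_volume },       "set startup volume" },
    { "window_title", OPT_STRING | HAS_ARG, { &window_title },         "set window title" },
    { "x",            HAS_ARG,              { .func_arg = opt_width }, "force displayed width" },
    { NULL },
};

/* Handle one option; returns how many argv entries it consumed, or -1. */
static int parse_option(const char *opt, const char *arg)
{
    const OptionDef *po = options;
    while (po->name && strcmp(po->name, opt))
        po++;
    if (!po->name || ((po->flags & HAS_ARG) && !arg))
        return -1;                          /* unknown option or missing value */
    if (po->flags & OPT_BOOL)
        *(int *)po->u.dst_ptr = 1;
    else if (po->flags & OPT_STRING)
        *(const char **)po->u.dst_ptr = arg;
    else if (po->flags & OPT_INT)
        *(int *)po->u.dst_ptr = atoi(arg);
    else if (po->u.func_arg(NULL, opt, arg) < 0)
        return -1;
    return (po->flags & HAS_ARG) ? 2 : 1;
}

/* Loop over argv the way parse_options() loops over parse_option(). */
static int parse_options(int argc, char **argv)
{
    int i = 1;
    while (i < argc) {
        if (argv[i][0] == '-' && argv[i][1]) {
            int used = parse_option(argv[i] + 1, i + 1 < argc ? argv[i + 1] : NULL);
            if (used < 0)
                return -1;
            i += used;
        } else {
            input_filename = argv[i++];     /* non-option argument: the input */
        }
    }
    return 0;
}
```

For example, "ffplay -an -volume 30 -x 640 test.264" sets audio_disable, startup_volume and screen_width, and leaves test.264 as the input file.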

Each tool stores all of its options in an array of OptionDef named options. Options shared by ffmpeg, ffplay and ffprobe are collected in the CMDUTILS_COMMON_OPTIONS macro (older versions kept them in cmdutils_common_opts.h).

In fftools/cmdutils.h the macro is defined as follows (excerpt):

```c
#define CMDUTILS_COMMON_OPTIONS \
    { "L",           OPT_EXIT,             { .func_arg = show_license },     "show license" },                          \
    { "h",           OPT_EXIT,             { .func_arg = show_help },        "show help", "topic" },                    \
    { "?",           OPT_EXIT,             { .func_arg = show_help },        "show help", "topic" },                    \
    { "help",        OPT_EXIT,             { .func_arg = show_help },        "show help", "topic" },                    \
    { "-help",       OPT_EXIT,             { .func_arg = show_help },        "show help", "topic" },                    \
    { "version",     OPT_EXIT,             { .func_arg = show_version },     "show version" },                          \
    { "buildconf",   OPT_EXIT,             { .func_arg = show_buildconf },   "show build configuration" },              \
    { "formats",     OPT_EXIT,             { .func_arg = show_formats },     "show available formats" },                \
    { "muxers",      OPT_EXIT,             { .func_arg = show_muxers },      "show available muxers" },                 \
    { "demuxers",    OPT_EXIT,             { .func_arg = show_demuxers },    "show available demuxers" },               \
    { "devices",     OPT_EXIT,             { .func_arg = show_devices },     "show available devices" },                \
    { "codecs",      OPT_EXIT,             { .func_arg = show_codecs },      "show available codecs" },                 \
    { "decoders",    OPT_EXIT,             { .func_arg = show_decoders },    "show available decoders" },               \
    { "encoders",    OPT_EXIT,             { .func_arg = show_encoders },    "show available encoders" },               \
    { "bsfs",        OPT_EXIT,             { .func_arg = show_bsfs },        "show available bit stream filters" },     \
    { "protocols",   OPT_EXIT,             { .func_arg = show_protocols },   "show available protocols" },              \
    { "filters",     OPT_EXIT,             { .func_arg = show_filters },     "show available filters" },                \
    { "pix_fmts",    OPT_EXIT,             { .func_arg = show_pix_fmts },    "show available pixel formats" },          \
    { "layouts",     OPT_EXIT,             { .func_arg = show_layouts },     "show standard channel layouts" },         \
    { "sample_fmts", OPT_EXIT,             { .func_arg = show_sample_fmts }, "show available audio sample formats" },   \
    { "colors",      OPT_EXIT,             { .func_arg = show_colors },      "show available color names" },            \
    { "loglevel",    HAS_ARG,              { .func_arg = opt_loglevel },     "set logging level", "loglevel" },         \
    { "v",           HAS_ARG,              { .func_arg = opt_loglevel },     "set logging level", "loglevel" },         \
    { "report",      0,                    { .func_arg = opt_report },       "generate a report" },                     \
    { "max_alloc",   HAS_ARG,              { .func_arg = opt_max_alloc },    "set maximum size of a single allocated block", "bytes" }, \
    { "cpuflags",    HAS_ARG | OPT_EXPERT, { .func_arg = opt_cpuflags },     "force specific cpu flags", "flags" },     \
    { "hide_banner", OPT_BOOL | OPT_EXPERT, {&hide_banner},                  "do not show program banner", "hide_banner" },
```

ffplay.c defines its options array as follows:

```c
static const OptionDef options[] = {
    CMDUTILS_COMMON_OPTIONS
    { "x", HAS_ARG, { .func_arg = opt_width }, "force displayed width", "width" },
    { "y", HAS_ARG, { .func_arg = opt_height }, "force displayed height", "height" },
    { "s", HAS_ARG | OPT_VIDEO, { .func_arg = opt_frame_size }, "set frame size (WxH or abbreviation)", "size" },
    { "fs", OPT_BOOL, { &is_full_screen }, "force full screen" },
    { "an", OPT_BOOL, { &audio_disable }, "disable audio" },
    { "vn", OPT_BOOL, { &video_disable }, "disable video" },
    { "sn", OPT_BOOL, { &subtitle_disable }, "disable subtitling" },
    { "ast", OPT_STRING | HAS_ARG | OPT_EXPERT, { &wanted_stream_spec[AVMEDIA_TYPE_AUDIO] }, "select desired audio stream", "stream_specifier" },
    { "vst", OPT_STRING | HAS_ARG | OPT_EXPERT, { &wanted_stream_spec[AVMEDIA_TYPE_VIDEO] }, "select desired video stream", "stream_specifier" },
    { "sst", OPT_STRING | HAS_ARG | OPT_EXPERT, { &wanted_stream_spec[AVMEDIA_TYPE_SUBTITLE] }, "select desired subtitle stream", "stream_specifier" },
    { "ss", HAS_ARG, { .func_arg = opt_seek }, "seek to a given position in seconds", "pos" },
    { "t", HAS_ARG, { .func_arg = opt_duration }, "play \"duration\" seconds of audio/video", "duration" },
    { "bytes", OPT_INT | HAS_ARG, { &seek_by_bytes }, "seek by bytes 0=off 1=on -1=auto", "val" },
    { "seek_interval", OPT_FLOAT | HAS_ARG, { &seek_interval }, "set seek interval for left/right keys, in seconds", "seconds" },
    { "nodisp", OPT_BOOL, { &display_disable }, "disable graphical display" },
    { "noborder", OPT_BOOL, { &borderless }, "borderless window" },
    { "alwaysontop", OPT_BOOL, { &alwaysontop }, "window always on top" },
    { "volume", OPT_INT | HAS_ARG, { &startup_volume}, "set startup volume 0=min 100=max", "volume" },
    { "f", HAS_ARG, { .func_arg = opt_format }, "force format", "fmt" },
    { "pix_fmt", HAS_ARG | OPT_EXPERT | OPT_VIDEO, { .func_arg = opt_frame_pix_fmt }, "set pixel format", "format" },
    { "stats", OPT_BOOL | OPT_EXPERT, { &show_status }, "show status", "" },
    { "fast", OPT_BOOL | OPT_EXPERT, { &fast }, "non spec compliant optimizations", "" },
    { "genpts", OPT_BOOL | OPT_EXPERT, { &genpts }, "generate pts", "" },
    { "drp", OPT_INT | HAS_ARG | OPT_EXPERT, { &decoder_reorder_pts }, "let decoder reorder pts 0=off 1=on -1=auto", ""},
    { "lowres", OPT_INT | HAS_ARG | OPT_EXPERT, { &lowres }, "", "" },
    { "sync", HAS_ARG | OPT_EXPERT, { .func_arg = opt_sync }, "set audio-video sync. type (type=audio/video/ext)", "type" },
    { "autoexit", OPT_BOOL | OPT_EXPERT, { &autoexit }, "exit at the end", "" },
    { "exitonkeydown", OPT_BOOL | OPT_EXPERT, { &exit_on_keydown }, "exit on key down", "" },
    { "exitonmousedown", OPT_BOOL | OPT_EXPERT, { &exit_on_mousedown }, "exit on mouse down", "" },
    { "loop", OPT_INT | HAS_ARG | OPT_EXPERT, { &loop }, "set number of times the playback shall be looped", "loop count" },
    { "framedrop", OPT_BOOL | OPT_EXPERT, { &framedrop }, "drop frames when cpu is too slow", "" },
    { "infbuf", OPT_BOOL | OPT_EXPERT, { &infinite_buffer }, "don't limit the input buffer size (useful with realtime streams)", "" },
    { "window_title", OPT_STRING | HAS_ARG, { &window_title }, "set window title", "window title" },
    { "left", OPT_INT | HAS_ARG | OPT_EXPERT, { &screen_left }, "set the x position for the left of the window", "x pos" },
    { "top", OPT_INT | HAS_ARG | OPT_EXPERT, { &screen_top }, "set the y position for the top of the window", "y pos" },
#if CONFIG_AVFILTER
    { "vf", OPT_EXPERT | HAS_ARG, { .func_arg = opt_add_vfilter }, "set video filters", "filter_graph" },
    { "af", OPT_STRING | HAS_ARG, { &afilters }, "set audio filters", "filter_graph" },
#endif
    { "rdftspeed", OPT_INT | HAS_ARG| OPT_AUDIO | OPT_EXPERT, { &rdftspeed }, "rdft speed", "msecs" },
    { "showmode", HAS_ARG, { .func_arg = opt_show_mode}, "select show mode (0 = video, 1 = waves, 2 = RDFT)", "mode" },
    { "default", HAS_ARG | OPT_AUDIO | OPT_VIDEO | OPT_EXPERT, { .func_arg = opt_default }, "generic catch all option", "" },
    { "i", OPT_BOOL, { &dummy}, "read specified file", "input_file"},
    { "codec", HAS_ARG, { .func_arg = opt_codec}, "force decoder", "decoder_name" },
    { "acodec", HAS_ARG | OPT_STRING | OPT_EXPERT, { &audio_codec_name }, "force audio decoder", "decoder_name" },
    { "scodec", HAS_ARG | OPT_STRING | OPT_EXPERT, { &subtitle_codec_name }, "force subtitle decoder", "decoder_name" },
    { "vcodec", HAS_ARG | OPT_STRING | OPT_EXPERT, { &video_codec_name }, "force video decoder", "decoder_name" },
    { "autorotate", OPT_BOOL, { &autorotate }, "automatically rotate video", "" },
    { "find_stream_info", OPT_BOOL | OPT_INPUT | OPT_EXPERT, { &find_stream_info },
        "read and decode the streams to fill missing information with heuristics" },
    { "filter_threads", HAS_ARG | OPT_INT | OPT_EXPERT, { &filter_nbthreads }, "number of filter threads per graph" },
    { NULL, },
};
```

So when customizing ffplay or ffmpeg, this array is where to add your own command-line options.
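A minimal sketch of how such a table is composed: shared entries are spliced in by a macro (mirroring CMDUTILS_COMMON_OPTIONS), and a custom option is just one more entry before the terminating { NULL }. The -filterbin option and its filter_bin_path variable are hypothetical examples, and this OptionDef is cut down to a plain dst_ptr instead of ffplay's union.

```c
#include <stddef.h>
#include <string.h>

#define HAS_ARG    0x0001
#define OPT_BOOL   0x0002
#define OPT_STRING 0x0008

typedef struct OptionDef {
    const char *name;
    int flags;
    void *dst_ptr;      /* simplified: ffplay uses a union here */
    const char *help;
} OptionDef;

static int hide_banner;
static int audio_disable;
static const char *filter_bin_path;   /* hypothetical custom option target */

/* Shared entries spliced into every tool's table, like CMDUTILS_COMMON_OPTIONS */
#define COMMON_OPTIONS \
    { "hide_banner", OPT_BOOL, &hide_banner, "do not show program banner" },

static const OptionDef options[] = {
    COMMON_OPTIONS
    { "an",        OPT_BOOL,             &audio_disable,   "disable audio" },
    /* Custom entry: adding it here is enough for the parser to recognize -filterbin */
    { "filterbin", OPT_STRING | HAS_ARG, &filter_bin_path, "bin file describing blocks to black out" },
    { NULL },
};

/* Linear lookup by name, the way the option parser searches the table */
static const OptionDef *find_option(const char *name)
{
    const OptionDef *po;
    for (po = options; po->name; po++)
        if (!strcmp(po->name, name))
            return po;
    return NULL;
}
```

Because the parser only walks this array, no other parsing code has to change; the player code then reads filter_bin_path after parse_options() returns.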

SDL_Init()

SDL_Init() is used to initialize SDL. SDL is used for video display and sound playback in FFplay.

SDL_CreateWindow()

Create an SDL window.

Function prototype:

```c
SDL_Window * SDL_CreateWindow(const char *title, int x, int y, int w, int h, Uint32 flags);
```

Parameters:

| Parameter | Description |
|---|---|
| title | Window title, UTF-8 encoded |
| x | x coordinate of the window's top-left corner; often SDL_WINDOWPOS_CENTERED or SDL_WINDOWPOS_UNDEFINED |
| y | y coordinate of the window's top-left corner; often SDL_WINDOWPOS_CENTERED or SDL_WINDOWPOS_UNDEFINED |
| w | Width of the window |
| h | Height of the window |
| flags | 0, or one or more SDL_WindowFlags OR'd together |

Returns the created window, or NULL on failure (call SDL_GetError() for details). See the SDL_CreateWindow() page on the SDL Wiki.

SDL_CreateRenderer()

Create SDL rendering context for SDL window

Function prototype:

```c
SDL_Renderer * SDL_CreateRenderer(SDL_Window * window, int index, Uint32 flags);
```

Parameters:

| Parameter | Description |
|---|---|
| window | The window where rendering is displayed, e.g. one created by SDL_CreateWindow() |
| index | Index of the rendering driver to initialize, or -1 to initialize the first driver supporting the requested flags |
| flags | 0, or one or more SDL_RendererFlags OR'd together |

stream_open()

stream_open() opens the input media. This function is relatively complex: it creates the various threads that ffplay uses. Its call structure is outlined in the following subsections.

frame_queue_init()

The initialization function for the FrameQueue structure.

See these two blog posts: "ffplay frame queue analysis" and "ffplay packet queue analysis".

ffplay uses a frame queue to store decoded data.

First, a Frame structure is defined to hold one decoded video picture, audio frame or subtitle:

```c
typedef struct Frame {
    AVFrame *frame;     // decoded video or audio data
    AVSubtitle sub;     // decoded subtitle data
    int serial;
    double pts;         /* timestamp of the frame */
    double duration;    /* estimated duration of the frame */
    int64_t pos;        /* byte position of the frame in the input file */
    int width;
    int height;
    int format;
    AVRational sar;
    int uploaded;
    int flip_v;
} Frame;
```

Frame is designed to "fuse" three kinds of data (video, audio and subtitles) into one structure. AVFrame can represent both video and audio, but subtitles need an AVSubtitle plus extra fields such as width/height to supplement it, so the whole structure looks a bit "patchwork" (it even has flip_v, which only applies to video). For now, just focus on the frame and sub fields.

Then a FrameQueue is designed to represent the whole frame queue:

```c
typedef struct FrameQueue {
    Frame queue[FRAME_QUEUE_SIZE]; // queue storage: an array used as a queue
    int rindex;                    // read index, i.e. the head of the queue
    int windex;                    // write index, i.e. the tail of the queue
    int size;                      // number of frames currently stored
    int max_size;                  // maximum number of frames allowed
    int keep_last;                 // whether to keep the last-read frame
    int rindex_shown;              // whether the frame at rindex has already been shown
    SDL_mutex *mutex;
    SDL_cond *cond;
    PacketQueue *pktq;             // the associated PacketQueue
} FrameQueue;
```

FrameQueue implements its queue with a fixed-size array used as a ring buffer.

The field definitions already show that FrameQueue is more complex than PacketQueue. Before going into the code, here are its design ideas:

- An efficient read/write model (by contrast, PacketQueue locks the whole queue on every access, a coarse-grained lock)

- An efficient memory model (node memory is pre-allocated as an array, with no dynamic allocation)

- A ring buffer design in which the last-read node can still be accessed

The following are the open external interfaces:

- frame_queue_init: initialization

- frame_queue_peek_last: get the frame currently being displayed

- frame_queue_peek: get the first frame waiting to be displayed

- frame_queue_peek_next: get the second frame waiting to be displayed

- frame_queue_peek_writable: get a writable Frame slot in the queue (waits while the queue is full)

- frame_queue_peek_readable: like frame_queue_peek, returns the first frame waiting to be displayed, but first waits until one is available

- frame_queue_push: push a Frame. The data has already been written into the slot before this call; push only advances the write index (the tail of the queue) and increments the frame count.

- frame_queue_next: advance the read index (the head of the queue) by one and decrement the frame count

- frame_queue_nb_remaining: return the number of frames waiting to be displayed

- frame_queue_last_pos: return the byte position of the frame being displayed

- frame_queue_destory (sic, spelled this way in ffplay.c): release all frames, then destroy the mutex and the condition variable

- frame_queue_unref_item: unreference all buffers held by the frame, reset its fields, and free any data allocated in the subtitle structure
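The subtle part of FrameQueue is its index arithmetic, especially how keep_last and rindex_shown keep the frame on screen readable. Below is a single-threaded sketch that follows that arithmetic. It assumes an int payload instead of Frame and drops the mutex, condition variable and blocking waits of the real implementation; the SimpleFrameQueue type and fq_* names are hypothetical.

```c
#include <stddef.h>

#define FRAME_QUEUE_SIZE 16

/* Payload reduced to an int; in ffplay each slot holds a Frame. */
typedef struct SimpleFrameQueue {
    int queue[FRAME_QUEUE_SIZE];
    int rindex;        /* read index: head of the queue */
    int windex;        /* write index: tail of the queue */
    int size;          /* slots in use, including a kept "last" frame */
    int max_size;      /* capacity actually used, <= FRAME_QUEUE_SIZE */
    int keep_last;     /* keep the last displayed frame readable */
    int rindex_shown;  /* 1 once the frame at rindex has been shown */
} SimpleFrameQueue;

static void fq_init(SimpleFrameQueue *f, int max_size, int keep_last)
{
    f->rindex = f->windex = f->size = f->rindex_shown = 0;
    f->max_size = max_size < FRAME_QUEUE_SIZE ? max_size : FRAME_QUEUE_SIZE;
    f->keep_last = !!keep_last;
}

/* Writer side: slot to fill, or NULL when full (the real one blocks instead). */
static int *fq_peek_writable(SimpleFrameQueue *f)
{
    return f->size >= f->max_size ? NULL : &f->queue[f->windex];
}

static void fq_push(SimpleFrameQueue *f)        /* commit the filled slot */
{
    if (++f->windex == f->max_size)
        f->windex = 0;
    f->size++;
}

static int *fq_peek(SimpleFrameQueue *f)        /* next frame to display */
{
    return &f->queue[(f->rindex + f->rindex_shown) % f->max_size];
}

static int *fq_peek_last(SimpleFrameQueue *f)   /* frame currently displayed */
{
    return &f->queue[f->rindex];
}

static void fq_next(SimpleFrameQueue *f)        /* advance the read side */
{
    if (f->keep_last && !f->rindex_shown) {
        f->rindex_shown = 1;   /* first call only marks the head as shown */
        return;
    }
    if (++f->rindex == f->max_size)
        f->rindex = 0;
    f->size--;
}

static int fq_nb_remaining(const SimpleFrameQueue *f)
{
    return f->size - f->rindex_shown;
}
```

With keep_last set, the first fq_next() only marks the head as shown, so fq_peek_last() still returns the frame on screen while fq_peek() already points at the next one.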

packet_queue_init()

PacketQueue provides the following operations:

- packet_queue_init: initialize the queue

- packet_queue_destroy: destroy the queue

- packet_queue_start: start the queue: set abort_request to 0, then put a flush_pkt first

- packet_queue_abort: abort the queue

- packet_queue_get: take a packet from the queue

- packet_queue_put: put a packet into the queue

- packet_queue_put_nullpacket: put an empty packet (used to signal end of stream to the decoder)

- packet_queue_flush: remove all packets from the queue

ffplay uses AVPacket to hold demuxed data that is still compressed, and AVFrame to hold the decoded audio or video data.

The packet queue is built on AVFifoBuffer, a FIFO structure from libavutil (libavutil/fifo.h):

```c
typedef struct AVFifoBuffer {
    uint8_t *buffer;
    uint8_t *rptr, *wptr, *end;
    uint32_t rndx, wndx;
} AVFifoBuffer;
```

- buffer: the buffer

- rptr: read pointer

- wptr: write pointer

- end: end pointer

- rndx: read index

- wndx: write index

PacketQueue itself is defined as follows:

```c
/* Holds demuxed (still compressed) data, i.e. AVPackets */
typedef struct PacketQueue {
    AVFifoBuffer *pkt_list;   ///< FIFO buffer holding the queued packets
    int nb_packets;           ///< number of packets in the queue
    int size;                 ///< total bytes of all packets in the queue, used to compute the cache size
    int64_t duration;         ///< total duration of all packets in the queue
    int abort_request;        ///< set to abort queue operations, for a safe and quick exit from playback
    int serial;               ///< serial number; same role as in MyAVPacketList, but changes at slightly different times
    SDL_mutex *mutex;         ///< keeps PacketQueue safe across threads (SDL_mutex is comparable to pthread_mutex_t)
    SDL_cond *cond;           ///< lets the reader and writer threads notify each other (SDL_cond is comparable to pthread_cond_t)
} PacketQueue;
```
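The serial field is what lets ffplay discard stale data after a seek: a flush bumps the queue's serial, each queued packet remembers the serial it was queued under, and the decoder skips packets whose serial no longer matches. The sketch below models only that bookkeeping. It is single-threaded, uses a fixed array instead of AVFifoBuffer, and the Simple*/spq_* names are hypothetical. Which call increments serial differs between ffplay versions; here it is the flush.

```c
#define MAX_PKTS 64

typedef struct SimplePacket {
    int size;    /* payload size in bytes */
    int serial;  /* queue serial at the time the packet was queued */
} SimplePacket;

typedef struct SimplePacketQueue {
    SimplePacket pkts[MAX_PKTS];  /* ring buffer standing in for AVFifoBuffer */
    int rindex, windex;
    int nb_packets;
    int size;                     /* total bytes queued */
    int serial;                   /* bumped on every flush (e.g. after a seek) */
} SimplePacketQueue;

static int spq_put(SimplePacketQueue *q, int size)
{
    if (q->nb_packets == MAX_PKTS)
        return -1;
    q->pkts[q->windex].size = size;
    q->pkts[q->windex].serial = q->serial;  /* tag with the current serial */
    q->windex = (q->windex + 1) % MAX_PKTS;
    q->nb_packets++;
    q->size += size;
    return 0;
}

/* Drop everything queued so far and start a new "generation". */
static void spq_flush(SimplePacketQueue *q)
{
    q->rindex = q->windex = 0;
    q->nb_packets = 0;
    q->size = 0;
    q->serial++;
}

/* Returns 1 and fills *out if a packet was available, 0 if the queue is empty. */
static int spq_get(SimplePacketQueue *q, SimplePacket *out)
{
    if (q->nb_packets == 0)
        return 0;
    *out = q->pkts[q->rindex];
    q->rindex = (q->rindex + 1) % MAX_PKTS;
    q->nb_packets--;
    q->size -= out->size;
    return 1;
}
```

A decoder that remembers the serial of the last packet it used can compare it with q->serial to notice that a flush (seek) happened in between.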

read_thread()

read_thread() calls the following functions:

avformat_open_input(): open the media.

avformat_find_stream_info(): get media information.

av_dump_format(): output media information to the console.

stream_component_open(): open video / audio / subtitle decoding threads respectively.

refresh_thread(): video refresh thread.

av_read_frame(): obtain a frame of compressed encoded data (i.e. an AVPacket).

packet_queue_put(): put the packet into the matching PacketQueue according to the type of compressed data (video / audio / subtitles).
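The routing step at the end of read_thread() can be sketched as follows. This is a simplified model under stated assumptions: packets are represented only by their stream index, each queue is reduced to a counter, and the Router type and route_packet() are hypothetical names. The real loop also checks the playback range and throttles reading when the queues are full.

```c
typedef struct Router {
    int video_stream, audio_stream, subtitle_stream; /* selected stream indices, -1 if none */
    int videoq, audioq, subtitleq;                   /* packets routed to each queue */
    int dropped;                                     /* packets from unselected streams */
} Router;

/* Route one demuxed packet by its stream index, as read_thread() does after av_read_frame(). */
static void route_packet(Router *r, int stream_index)
{
    if (stream_index == r->audio_stream)
        r->audioq++;
    else if (stream_index == r->video_stream)
        r->videoq++;
    else if (stream_index == r->subtitle_stream)
        r->subtitleq++;
    else
        r->dropped++;   /* ffplay unrefs packets of streams it does not play */
}
```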

refresh_thread()

refresh_thread() calls the following functions:

SDL_PushEvent(FF_REFRESH_EVENT): push an SDL_Event of type FF_REFRESH_EVENT.

av_usleep(): sleep for a while between two pushes.

stream_component_open()

stream_component_open() opens the decoding thread for video, audio or subtitles. It calls the following functions:

avcodec_find_decoder(): get the decoder.

avcodec_open2(): open the decoder.

audio_open(): open the audio output device.

SDL_PauseAudio(0): start audio playback in SDL (unpause the audio device).

video_thread(): create a video decoding thread.

subtitle_thread(): create a subtitle decoding thread.

packet_queue_start(): initialize PacketQueue.

audio_open() calls the following functions

SDL_OpenAudio(): the SDL function that opens an audio device, configured by an SDL_AudioSpec parameter. The callback field of SDL_AudioSpec specifies the playback callback, sdl_audio_callback(). SDL calls it whenever the audio device needs more data, so it is called repeatedly during playback.

Let's look at sdl_audio_callback(), the callback specified in SDL_AudioSpec.

sdl_audio_callback() calls the following functions

audio_decode_frame(): decodes audio data.

update_sample_display(): this function is called when audio waveform is displayed instead of video image.
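The callback is a pull model: SDL asks for len bytes, and the callback keeps decoding until it has filled them. Below is a simplified sketch of that loop. The decoder is replaced by a hypothetical decode_chunk() that copies from an in-memory buffer, and unlike ffplay the sketch does no volume mixing or clock bookkeeping; on decode failure it simply writes silence for the rest of the request.

```c
#include <stdint.h>
#include <string.h>

/* Demo state: a hypothetical source of "decoded" audio handed out in small chunks. */
typedef struct AudioState {
    const uint8_t *pcm;       /* all "decoded" samples, for the demo */
    int pcm_len, pcm_pos;
    uint8_t buf[4];           /* current decoded chunk (audio_buf in ffplay) */
    int buf_size, buf_index;  /* bytes in buf / bytes already consumed */
} AudioState;

/* Stand-in for audio_decode_frame(): bytes decoded into s->buf, or -1 at the end. */
static int decode_chunk(AudioState *s)
{
    int n = s->pcm_len - s->pcm_pos;
    if (n <= 0)
        return -1;
    if (n > (int)sizeof(s->buf))
        n = (int)sizeof(s->buf);
    memcpy(s->buf, s->pcm + s->pcm_pos, n);
    s->pcm_pos += n;
    return n;
}

/* Same shape as an SDL audio callback: fill exactly len bytes of stream. */
static void audio_callback(void *opaque, uint8_t *stream, int len)
{
    AudioState *s = opaque;
    while (len > 0) {
        if (s->buf_index >= s->buf_size) {        /* current chunk used up */
            int n = decode_chunk(s);
            if (n < 0) {                          /* nothing decoded: output silence */
                memset(stream, 0, len);
                return;
            }
            s->buf_size = n;
            s->buf_index = 0;
        }
        int chunk = s->buf_size - s->buf_index;
        if (chunk > len)
            chunk = len;
        memcpy(stream, s->buf + s->buf_index, chunk);
        stream += chunk;
        len -= chunk;
        s->buf_index += chunk;
    }
}
```

Leftover bytes of a decoded chunk stay in buf and are used first on the next call, which is why the callback keeps buf_index between invocations.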

audio_decode_frame() calls the following functions

packet_queue_get(): get audio compression encoded data (an AVPacket).

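avcodec_decode_audio4(): decode compressed audio data (get an AVFrame). This API is deprecated; the FFmpeg 4.x ffplay decodes with avcodec_send_packet()/avcodec_receive_frame() instead.

swr_init(): initialize the SwrContext of libswresample, the library used to convert audio sample data (PCM).

swr_convert(): convert the audio samples (sample rate, sample format, channel layout) into a format suitable for playback on the system.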

swr_free(): release SwrContext.

video_thread() calls the following functions

avcodec_alloc_frame(): allocate an AVFrame. This old API has since been replaced by av_frame_alloc(), which the video_thread() code below uses.

get_video_frame(): get an AVFrame that stores decoded data.

queue_picture(): put the decoded picture into the picture queue (a FrameQueue).

get_video_frame() calls the following functions

packet_queue_get(): obtain video compression encoded data (an AVPacket).

avcodec_decode_video2(): decode compressed video data (get an AVFrame). This API is also deprecated in favor of avcodec_send_packet()/avcodec_receive_frame().

queue_picture() calls the following functions

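SDL_LockYUVOverlay(): lock an SDL_Overlay. (SDL_Overlay is an SDL 1.2 API; the SDL2-based ffplay uploads frames into an SDL_Texture instead, see upload_texture() in the video_image_display() code below.)

sws_getCachedContext(): initialize the SwsContext of libswscale, the library that converts between raw image formats (YUV, RGB). sws_getCachedContext() behaves like sws_getContext(), except that it reuses the existing context when the parameters have not changed.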

sws_scale(): convert image data to a format suitable for system playback.

SDL_UnlockYUVOverlay(): unlocks an SDL_Overlay.

subtitle_thread() calls the following functions

packet_queue_get(): get compressed subtitle data (an AVPacket).

avcodec_decode_subtitle2(): decode the compressed subtitle data.

event_loop()

After opening the media, ffplay enters event_loop() and keeps looping there. This function receives and handles all kinds of messages, somewhat like the Windows message loop.

Depending on the type of SDL_Event that event_loop() receives through SDL_WaitEvent(), a different function is called to handle it (in programming terms, it is a switch statement). Only a few examples are listed here:

SDLK_ESCAPE: do_exit(). Exit the program.

SDLK_f (press the "f" key): toggle_full_screen(). Toggle full screen display.

SDLK_SPACE: toggle_pause(). Toggle pause.

SDLK_DOWN: stream_seek(). Jump to the specified point in time.

SDL_VIDEORESIZE: SDL_SetVideoMode(). Reset the width and height.

FF_REFRESH_EVENT: video_refresh(). Refresh the video.

Solution

Since the goal this time is to black out specified blocks, it is enough to clear the Y (luma) data of the target blocks.

So the image can be processed directly inside video_image_display(), just before it is displayed:
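A minimal sketch of that processing on the Y plane, assuming a planar 8-bit YUV frame (such as AV_PIX_FMT_YUV420P), bits read MSB-first, and blocks numbered row by row; the original does not specify the bit layout, and blacken_blocks() is a hypothetical name. In ffplay you would pass frame->data[0] and frame->linesize[0], after making sure the frame is writable (for example with av_frame_make_writable()). Writing 0 follows the "clear the Y data" approach; for limited-range video, luma black is 16, which you may prefer.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLOCK_SIZE 16

/*
 * Black out every 16x16 block whose bit is 1 by clearing its luma (Y) samples.
 * y/linesize describe the Y plane; bits are read MSB-first and blocks are
 * numbered row by row. Blocks at the right/bottom edges are clipped.
 */
static void blacken_blocks(uint8_t *y, int linesize, int width, int height,
                           const uint8_t *bits, size_t nbits)
{
    int blocks_per_row = (width + BLOCK_SIZE - 1) / BLOCK_SIZE;
    for (size_t i = 0; i < nbits; i++) {
        if (!((bits[i / 8] >> (7 - i % 8)) & 1))
            continue;                                /* bit is 0: leave block alone */
        int bx = (int)(i % blocks_per_row) * BLOCK_SIZE;
        int by = (int)(i / blocks_per_row) * BLOCK_SIZE;
        if (by >= height)
            break;                                   /* bits beyond the last block row */
        int w = width - bx < BLOCK_SIZE ? width - bx : BLOCK_SIZE;
        int h = height - by < BLOCK_SIZE ? height - by : BLOCK_SIZE;
        for (int row = 0; row < h; row++)
            memset(y + (ptrdiff_t)(by + row) * linesize + bx, 0, w);
    }
}
```

Only the luma plane is touched, which matches clearing the Y data; the chroma planes keep their values.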

```c
static void video_image_display(VideoState *is)
{
    Frame *vp;
    Frame *sp = NULL;
    SDL_Rect rect;

    vp = frame_queue_peek_last(&is->pictq); // the frame to display: the last-read frame of pictq
    if (is->subtitle_st) { // subtitle display logic
        if (frame_queue_nb_remaining(&is->subpq) > 0) {
            sp = frame_queue_peek(&is->subpq);

            if (vp->pts >= sp->pts + ((float) sp->sub.start_display_time / 1000)) {
                if (!sp->uploaded) {
                    uint8_t* pixels[4];
                    int pitch[4];
                    int i;
                    if (!sp->width || !sp->height) {
                        sp->width = vp->width;
                        sp->height = vp->height;
                    }
                    if (realloc_texture(&is->sub_texture, SDL_PIXELFORMAT_ARGB8888, sp->width, sp->height, SDL_BLENDMODE_BLEND, 1) < 0)
                        return;

                    for (i = 0; i < sp->sub.num_rects; i++) {
                        AVSubtitleRect *sub_rect = sp->sub.rects[i];

                        sub_rect->x = av_clip(sub_rect->x, 0, sp->width );
                        sub_rect->y = av_clip(sub_rect->y, 0, sp->height);
                        sub_rect->w = av_clip(sub_rect->w, 0, sp->width  - sub_rect->x);
                        sub_rect->h = av_clip(sub_rect->h, 0, sp->height - sub_rect->y);

                        is->sub_convert_ctx = sws_getCachedContext(is->sub_convert_ctx,
                            sub_rect->w, sub_rect->h, AV_PIX_FMT_PAL8,
                            sub_rect->w, sub_rect->h, AV_PIX_FMT_BGRA,
                            0, NULL, NULL, NULL);
                        if (!is->sub_convert_ctx) {
                            av_log(NULL, AV_LOG_FATAL, "Cannot initialize the conversion context\n");
                            return;
                        }
                        if (!SDL_LockTexture(is->sub_texture, (SDL_Rect *)sub_rect, (void **)pixels, pitch)) {
                            sws_scale(is->sub_convert_ctx, (const uint8_t * const *)sub_rect->data, sub_rect->linesize,
                                      0, sub_rect->h, pixels, pitch);
                            SDL_UnlockTexture(is->sub_texture);
                        }
                    }
                    sp->uploaded = 1;
                }
            } else
                sp = NULL;
        }
    }

    // Fit the frame into the window as large as possible, keeping the aspect ratio given by sar
    calculate_display_rect(&rect, is->xleft, is->ytop, is->width, is->height, vp->width, vp->height, vp->sar);

    if (!vp->uploaded) { // uploaded is already 1 when the same frame is displayed again
        // if (upload_texture(&is->vid_texture, add_filter_deal(&is->add_filter, vp->frame), &is->img_convert_ctx) < 0)
        if (upload_texture(&is->vid_texture, vp->frame, &is->img_convert_ctx) < 0)
            return;
        vp->uploaded = 1;
        vp->flip_v = vp->frame->linesize[0] < 0;
    }

    set_sdl_yuv_conversion_mode(vp->frame);
    SDL_RenderCopyEx(renderer, is->vid_texture, NULL, &rect, 0, NULL, vp->flip_v ? SDL_FLIP_VERTICAL : 0);
    set_sdl_yuv_conversion_mode(NULL);
    if (sp) {
#if USE_ONEPASS_SUBTITLE_RENDER
        SDL_RenderCopy(renderer, is->sub_texture, NULL, &rect);
#else
        int i;
        double xratio = (double)rect.w / (double)sp->width;
        double yratio = (double)rect.h / (double)sp->height;
        for (i = 0; i < sp->sub.num_rects; i++) {
            SDL_Rect *sub_rect = (SDL_Rect*)sp->sub.rects[i];
            SDL_Rect target = {.x = rect.x + sub_rect->x * xratio,
                               .y = rect.y + sub_rect->y * yratio,
                               .w = sub_rect->w * xratio,
                               .h = sub_rect->h * yratio};
            SDL_RenderCopy(renderer, is->sub_texture, sub_rect, &target);
        }
#endif
    }
}
```

Look closely and you will find a commented-out line:

```c
// if (upload_texture(&is->vid_texture, add_filter_deal(&is->add_filter, vp->frame), &is->img_convert_ctx) < 0)
```

The processing can be done at this point: add_filter_deal() is a processing function I wrote myself, and it returns the processed video frame.

That approach modifies the frame right before display. Is there another way? Of course, in video_thread():

```c
static int video_thread(void *arg)
{
    VideoState *is = arg;
    AVFrame *frame = av_frame_alloc();
    double pts;
    double duration;
    int ret;
    AVRational tb = is->video_st->time_base;
    AVRational frame_rate = av_guess_frame_rate(is->ic, is->video_st, NULL);

#if CONFIG_AVFILTER
    AVFilterGraph *graph = NULL;
    AVFilterContext *filt_out = NULL, *filt_in = NULL;
    int last_w = 0;
    int last_h = 0;
    enum AVPixelFormat last_format = -2;
    int last_serial = -1;
    int last_vfilter_idx = 0;
#endif

    if (!frame)
        return AVERROR(ENOMEM);

    for (;;) {
        ret = get_video_frame(is, frame);
        if (ret < 0)
            goto the_end;
        if (!ret)
            continue;

#if CONFIG_AVFILTER
        if (   last_w != frame->width
            || last_h != frame->height
            || last_format != frame->format
            || last_serial != is->viddec.pkt_serial
            || last_vfilter_idx != is->vfilter_idx) {
            av_log(NULL, AV_LOG_DEBUG,
                   "Video frame changed from size:%dx%d format:%s serial:%d to size:%dx%d format:%s serial:%d\n",
                   last_w, last_h,
                   (const char *)av_x_if_null(av_get_pix_fmt_name(last_format), "none"), last_serial,
                   frame->width, frame->height,
                   (const char *)av_x_if_null(av_get_pix_fmt_name(frame->format), "none"), is->viddec.pkt_serial);
            avfilter_graph_free(&graph);
            graph = avfilter_graph_alloc();
            if (!graph) {
                ret = AVERROR(ENOMEM);
                goto the_end;
            }
            graph->nb_threads = filter_nbthreads;
            if ((ret = configure_video_filters(graph, is, vfilters_list ? vfilters_list[is->vfilter_idx] : NULL, frame)) < 0) {
                SDL_Event event;
                event.type = FF_QUIT_EVENT;
                event.user.data1 = is;
                SDL_PushEvent(&event);
                goto the_end;
            }
            filt_in  = is->in_video_filter;
            filt_out = is->out_video_filter;
            last_w = frame->width;
            last_h = frame->height;
            last_format = frame->format;
            last_serial = is->viddec.pkt_serial;
            last_vfilter_idx = is->vfilter_idx;
            frame_rate = av_buffersink_get_frame_rate(filt_out);
        }

        frame = add_filter_deal(&is->add_filter, frame); // custom processing of the decoded frame
        ret = av_buffersrc_add_frame(filt_in, frame); //TODO
        if (ret < 0)
            goto the_end;

        while (ret >= 0) {
            is->frame_last_returned_time = av_gettime_relative() / 1000000.0;

            ret = av_buffersink_get_frame_flags(filt_out, frame, 0);
            if (ret < 0) {
                if (ret == AVERROR_EOF)
                    is->viddec.finished = is->viddec.pkt_serial;
                ret = 0;
                break;
            }

            is->frame_last_filter_delay = av_gettime_relative() / 1000000.0 - is->frame_last_returned_time;
            if (fabs(is->frame_last_filter_delay) > AV_NOSYNC_THRESHOLD / 10.0)
                is->frame_last_filter_delay = 0;
            tb = av_buffersink_get_time_base(filt_out);
#endif
            duration = (frame_rate.num && frame_rate.den ? av_q2d((AVRational){frame_rate.den, frame_rate.num}) : 0);
            pts = (frame->pts == AV_NOPTS_VALUE) ? NAN : frame->pts * av_q2d(tb);
            ret = queue_picture(is, frame, pts, duration, frame->pkt_pos, is->viddec.pkt_serial);
            av_frame_unref(frame);
#if CONFIG_AVFILTER
            if (is->videoq.serial != is->viddec.pkt_serial)
                break;
        }
#endif

        if (ret < 0)
            goto the_end;
    }
 the_end:
#if CONFIG_AVFILTER
    avfilter_graph_free(&graph);
#endif
    av_frame_free(&frame);
    return 0;
}
```

Note this line:

frame = add_filter_deal(&is->add_filter, frame);

This call is the hook for our requirement: add_filter_deal modifies the YUV data of each video frame directly, right after the frame has been read and decoded and before it is handed to the filter graph with av_buffersrc_add_frame.

In general, when processing video data, first work out what data you need to process and where in the pipeline that data appears; once that is clear, the processing itself is the easy part.
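To make "where the data appears" concrete: for planar YUV 4:2:0 (AV_PIX_FMT_YUV420P), data[0] of an AVFrame is the Y (luma) plane, and the sample of pixel (x, y) is data[0][y * linesize[0] + x]. linesize[0] is the row stride in bytes and may be larger than the width because of alignment padding, so the offset has to use it rather than the width. Here is a minimal sketch of that addressing; the LumaPlane struct and luma_at function are hypothetical stand-ins so the snippet compiles without FFmpeg.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the luma plane of an AVFrame (data[0], linesize[0]). */
typedef struct LumaPlane {
    uint8_t *data;   /* plays the role of frame->data[0] */
    int linesize;    /* plays the role of frame->linesize[0]: bytes per row, >= width */
    int width;
    int height;
} LumaPlane;

/* Return a pointer to the Y sample of pixel (x, y).
 * Rows are linesize bytes apart, not width bytes, because of padding. */
static uint8_t *luma_at(const LumaPlane *p, int x, int y)
{
    assert(p->linesize >= p->width);
    assert(x >= 0 && x < p->width && y >= 0 && y < p->height);
    return &p->data[(size_t)y * (size_t)p->linesize + (size_t)x];
}
```

For example, with a 4*2 plane padded to 8 bytes per row, pixel (1, 1) is at offset 1 * 8 + 1 = 9, not 1 * 4 + 1 = 5.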

Here is the source code for the requirement:

typedef struct AddImgFilter {

int array_width; // Expected width of the video frames to be processed (read from the bin file)

int array_height; // Expected height of the video frames to be processed (read from the bin file)

int slide_count_width; // Current x offset (in pixels) of the 16*16 block while scanning the Y plane

int slide_count_height; // Current y offset (in pixels) of the 16*16 block while scanning the Y plane

int array_len; // Length of the filter bit array, in bytes

uint8_t *array; // Points to the filter bit array inside buf

uint8_t buf[1024]; // Raw contents of the bin file

uint8_t enable_fileter; // 1 = filter enabled, 0 = filter disabled

AVFrame *frame; // Frame currently being processed by add_filter_deal

} AddImgFilter;

// Writes a sample filterbin.bin for testing

static void get_bin_file(void)

{

uint8_t buf[1024];

buf[0] = 0x7e; // byte 0: frame header

buf[1] = 0x00; // bytes 1-4: data length, big-endian (0x64 = 100 = 4 + 4 + 4 + 88)

buf[2] = 0x00;

buf[3] = 0x00;

buf[4] = 0x64;

buf[5] = 0x00; // bytes 5-8: filter width, big-endian (0x01e0 = 480)

buf[6] = 0x00;

buf[7] = 0x01;

buf[8] = 0xe0;

buf[9] = 0x00; // bytes 9-12: filter height, big-endian (0x0110 = 272)

buf[10] = 0x00;

buf[11] = 0x01;

buf[12] = 0x10;

buf[13] = 0x00; // bytes 13-16: filter array length in bytes, big-endian (0x58 = 88)

buf[14] = 0x00;

buf[15] = 0x00;

buf[16] = 0x58;

memset(&buf[17], 0xaa, 88); // bytes 17-104: filter array data (0xaa = 10101010, alternating blocks)

buf[105] = 0x7e; // byte 105: frame tail

FILE *bin_file;

if((bin_file=fopen("filterbin.bin","wb")) != NULL)

{

fwrite(buf, 1, 106, bin_file);

fclose(bin_file);

}

else

printf("\nCannot open the path: %s\n", "filterbin.bin");

}
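The file written above has a simple layout: a 0x7e header byte, four big-endian 32-bit fields (data length, width, height, array length), the filter bit array, and a 0x7e tail byte, with data length = 12 + array length (100 = 12 + 88 in the sample). As a sketch of how such a file could be produced for arbitrary sizes, here is a hypothetical build_filter_bin that writes the same layout into a caller-supplied buffer instead of a file; the function names are illustrative and not part of ffplay or FFmpeg.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Store v as 4 big-endian bytes at p. */
static void put_be32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

/* Hypothetical helper: serialise a filter into out using the layout of
 * get_bin_file. Returns the number of bytes written, or 0 if out is too small. */
static size_t build_filter_bin(uint8_t *out, size_t cap, uint32_t width,
                               uint32_t height, const uint8_t *bits, uint32_t bits_len)
{
    assert(out != NULL && bits != NULL);
    size_t total = 1 + 4 + 12 + (size_t)bits_len + 1; /* header + length + fields + array + tail */
    if (cap < total)
        return 0;
    out[0] = 0x7e;                      /* frame header */
    put_be32(&out[1], 12 + bits_len);   /* data length: width + height + array length + array */
    put_be32(&out[5], width);
    put_be32(&out[9], height);
    put_be32(&out[13], bits_len);
    memcpy(&out[17], bits, bits_len);   /* filter bit array */
    out[17 + bits_len] = 0x7e;          /* frame tail */
    return total;
}
```

Calling it with 480, 272 and 88 bytes of 0xaa produces the same 106 bytes as get_bin_file, which could then be written out with fwrite.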

// Read a 4-byte big-endian unsigned integer starting at p

static uint32_t add_filter_read_be32(const uint8_t *p)

{

return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) | ((uint32_t)p[2] << 8) | (uint32_t)p[3];

}

// AddImgFilter constructor

static void add_filter_init(AddImgFilter *add_filter)

{

get_bin_file(); // Test only: regenerates the sample filterbin.bin, overwriting any existing file

memset(add_filter, 0, sizeof(*add_filter)); // Also zeroes slide_count_width and slide_count_height

add_filter->enable_fileter = 0; // Keep the filter off until the bin file has been parsed successfully

FILE *bin_file;

if((bin_file=fopen("filterbin.bin","rb")) != NULL)

{

size_t read_len = fread(add_filter->buf, sizeof(uint8_t), sizeof(add_filter->buf), bin_file);

fclose(bin_file);

if(read_len >= 18 && add_filter->buf[0] == 0x7e) // Frame header; a valid file holds at least the 17-byte header and the 1-byte tail

{

uint32_t data_len = add_filter_read_be32(&add_filter->buf[1]);

printf("data_len 4byte -> %u\n", (unsigned)data_len);

// data_len excludes the header byte, itself (4 bytes) and the frame tail (1 byte); check it before indexing buf

if(data_len >= 12 && data_len < read_len - 5 && add_filter->buf[data_len + 5] == 0x7e)

{

uint32_t array_len = add_filter_read_be32(&add_filter->buf[13]);

add_filter->array_width = (int)add_filter_read_be32(&add_filter->buf[5]);

add_filter->array_height = (int)add_filter_read_be32(&add_filter->buf[9]);

printf("array_width %d, array_height %d, array_len %u\n", add_filter->array_width, add_filter->array_height, (unsigned)array_len);

if(array_len <= data_len - 12) // The bit array must fit inside the data section

{

add_filter->array_len = (int)array_len;

add_filter->array = &add_filter->buf[17];

add_filter->enable_fileter = 1; // Filter information parsed correctly: turn the filter on

}

}

}

}

else

printf("\nCannot open the path: %s\n", "filterbin.bin");

}
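Because data_len, the width, the height and the array length all come from the file, each offset should be checked against the number of bytes actually read before it is used as an index. Below is a standalone sketch of that validation under the same layout; FilterInfo and parse_filter_bin are hypothetical names, and the function only validates and returns pointers into the input buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct FilterInfo {
    uint32_t width, height;   /* frame size the filter was made for */
    uint32_t array_len;       /* bit array length in bytes */
    const uint8_t *array;     /* points into the input buffer */
} FilterInfo;

static uint32_t get_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Hypothetical validator: returns 1 and fills *info if buf[0..len) holds a
 * well-formed filter frame, 0 otherwise. Every offset is checked against len. */
static int parse_filter_bin(const uint8_t *buf, size_t len, FilterInfo *info)
{
    assert(buf != NULL && info != NULL);
    if (len < 18 || buf[0] != 0x7e)             /* 17-byte header + 1-byte tail */
        return 0;
    uint32_t data_len = get_be32(&buf[1]);
    if (data_len < 12 || data_len >= len - 5)   /* tail index data_len + 5 must be < len */
        return 0;
    if (buf[(size_t)data_len + 5] != 0x7e)
        return 0;
    info->width = get_be32(&buf[5]);
    info->height = get_be32(&buf[9]);
    info->array_len = get_be32(&buf[13]);
    if (info->array_len > data_len - 12)        /* array must fit in the data section */
        return 0;
    info->array = &buf[17];
    return 1;
}
```

Keeping the parsing separate like this also makes it easy to feed it truncated or corrupted buffers in tests.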

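// AddImgFilter destructor

static void add_filter_uninit(AddImgFilter *add_filter)

{

// AddImgFilter is a member of VideoState and is freed together with it; add_filter->frame belongs to the caller, so only the fields are reset here

add_filter->frame = NULL;

add_filter->array = NULL;

add_filter->enable_fileter = 0;

}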

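// Blacken the 16*16 Y-plane block whose top-left corner is (tar_row, tar_col); note that tar_row is the horizontal (x) offset and tar_col the vertical (y) offset

static void add_group_filter(AddImgFilter *add_filter, int tar_row, int tar_col)

{

for(int col = 0; col < 16; col++) // Vertical offset inside the block: 0 ~ 15

{

if(tar_col + col < add_filter->array_height) // Clip at the bottom edge of the frame

{

for(int row = 0; row < 16; row++) // Horizontal offset inside the block: 0 ~ 15

{

if(tar_row + row < add_filter->array_width) // Clip at the right edge of the frame

{

add_filter->frame->data[0][(tar_col + col) * add_filter->frame->linesize[0] + (tar_row + row)] = 0; // Y = 0: black

}

}

}

}

}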
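add_group_filter clips each 16*16 block against the frame edges, which matters whenever the width or height is not a multiple of 16. Here is the same clipping on a plain luma buffer, as a sketch with a hypothetical blacken_block function and x/y naming for the horizontal/vertical offsets:

```c
#include <assert.h>
#include <stdint.h>

#define BLOCK 16

/* Set the Y samples of the 16*16 block whose top-left corner is (x0, y0) to 0,
 * skipping pixels that fall outside a width*height plane with the given stride. */
static void blacken_block(uint8_t *y_plane, int linesize, int width, int height,
                          int x0, int y0)
{
    assert(linesize >= width && x0 >= 0 && y0 >= 0);
    for (int y = y0; y < y0 + BLOCK && y < height; y++)
        for (int x = x0; x < x0 + BLOCK && x < width; x++)
            y_plane[y * linesize + x] = 0;
}
```

On a 20*20 plane, the block at (16, 16) only touches the 4*4 pixels that lie inside the frame. Like the original, this zeroes only Y; the U and V samples are left unchanged, so on colourful content the blocks can come out very dark but slightly tinted rather than pure black. Setting the matching 8*8 U and V samples (in 4:2:0) to 128 as well would give a neutral black.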

// AddImgFilter frame handler: blackens the 16*16 Y blocks whose bit is 1 and returns the same frame (assumes planar YUV such as AV_PIX_FMT_YUV420P, where data[0] is the Y plane)

static AVFrame *add_filter_deal(AddImgFilter *add_filter, AVFrame *frame)

{

add_filter->frame = frame; // Work on the caller's frame in place; the caller keeps ownership

add_filter->slide_count_width = 0;

add_filter->slide_count_height = 0;

if(add_filter->enable_fileter != 1) // Only process when the filter is on

return frame;

if(add_filter->array_width != frame->width || add_filter->array_height != frame->height)

{

add_filter->enable_fileter = 0;

printf("The width/height of the input video differs from the filter settings; filter processing is disabled\n");

return frame;

}

if(av_frame_make_writable(frame) < 0) // Copies the buffers only if they are shared (e.g. still referenced by the decoder)

return frame;

for(int i = 0; i < add_filter->array_len; i++)

{

for(int j = 7; j >= 0; j--) // One bit per 16*16 block, most significant bit first

{

if((add_filter->array[i] >> j) & 0x01)

{

add_group_filter(add_filter, add_filter->slide_count_width, add_filter->slide_count_height);

}

add_filter->slide_count_width += 16; // Move to the next block to the right

if(add_filter->slide_count_width >= add_filter->array_width) // Past the right edge: wrap to the next block row

{

add_filter->slide_count_width = 0;

add_filter->slide_count_height += 16;

}

}

}

return frame;

}
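The requirement is one bit per 16*16 block, scanned left to right and top to bottom, most significant bit of each byte first. Under that layout, bit k drives the block in column k % cols and row k / cols, where cols = ceil(width / 16). Here is a sketch of the mapping and of the mask size a full frame needs; the helper names are illustrative:

```c
#include <assert.h>

#define BLOCK 16

/* Number of 16-pixel blocks needed to cover n pixels (the last one may be partial). */
static int blocks_for(int n)
{
    assert(n > 0);
    return (n + BLOCK - 1) / BLOCK;
}

/* Top-left pixel of the block driven by bit k (k = 8 * byte_index + (7 - bit_in_byte)). */
static void block_origin(int k, int width, int *x, int *y)
{
    int cols = blocks_for(width);
    *x = (k % cols) * BLOCK;
    *y = (k / cols) * BLOCK;
}

/* Bytes needed so that every block of a width*height frame has its own bit. */
static int mask_bytes(int width, int height)
{
    int bits = blocks_for(width) * blocks_for(height);
    return (bits + 7) / 8;
}
```

For the 480*272 sample, that gives 30 columns and 17 rows, so 510 bits (64 bytes) cover the whole frame, and bit 30 is the first block of the second row, at (0, 16). The sample file's 88-byte array is longer than that, so its trailing bits fall below the frame and are skipped by the bounds checks in add_group_filter.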