Upgrade and rollback of Pod

When a service in the cluster needs to be upgraded, the naive approach is to stop all Pods belonging to the service, pull the new version of the image, and create new Pods. In a large cluster this becomes a challenge, and stopping everything first and then upgrading gradually leaves the service unavailable for a long time.

Kubernetes provides a rolling upgrade function to solve the above problems.

If Pods are created by a Deployment, the user can modify the Deployment's Pod definition (spec.template) or image name at runtime and apply the change to the Deployment object, and the system then updates the Deployment automatically. If an error occurs during the update, the Pods can be restored to a previous version with a rollback operation.

Deployment upgrade

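```yaml
# nginx-deployment.yaml
apiVersion: apps/v1          # apps/v1beta1 is no longer served since Kubernetes 1.16
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:                  # required in apps/v1; must match the template labels
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```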

```
[root@k8s-master01 pod]# kubectl apply -f nginx-deployment.yaml
deployment.apps/nginx-deployment created
[root@k8s-master01 pod]# kubectl get pods -o wide
NAME                                READY   STATUS    RESTARTS   AGE   IP            NODE         NOMINATED NODE   READINESS GATES
nginx-deployment-5bf87f5f59-h2llz   1/1     Running   0          3s    10.244.2.44   k8s-node01   <none>           <none>
nginx-deployment-5bf87f5f59-mlz84   1/1     Running   0          3s    10.244.2.45   k8s-node01   <none>           <none>
nginx-deployment-5bf87f5f59-qdjvm   1/1     Running   0          3s    10.244.1.25   k8s-node02   <none>           <none>
```

Now the Pod image needs to be updated to nginx:1.9.1. We can set a new image for the Deployment with the kubectl set image command:

```shell
kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1
```

Another way is to modify the Deployment's configuration with the kubectl edit command, changing spec.template.spec.containers[0].image from nginx:1.7.9 to nginx:1.9.1:

```shell
kubectl edit deployment/nginx-deployment
```

Once the image name (or Pod definition) is modified, the system automatically starts a rolling upgrade of all running Pods in the Deployment.

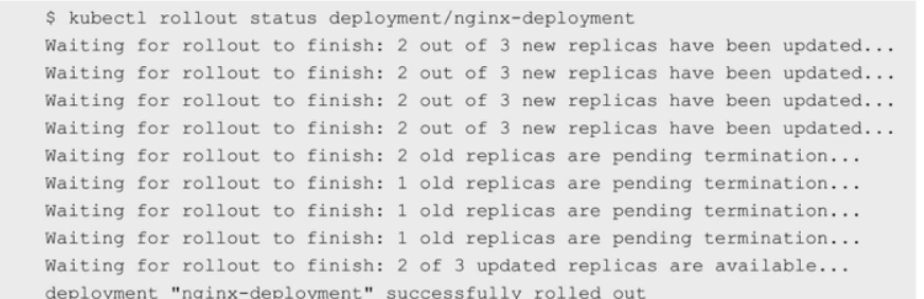

You can follow the progress of the update with the kubectl rollout status deployment/nginx-deployment command.

Check the image used by one of the new Pods; it has been updated to nginx:1.9.1:

```
[root@k8s-master01 pod]# kubectl describe pod nginx-deployment-678645bf77-4ltnc
    Image:          nginx:1.9.1
```

Use the kubectl describe deployments/nginx-deployment command to observe the update process of the Deployment in detail:

```
Events:
  Type    Reason             Age                   From                   Message
  ----    ------             ----                  ----                   -------
  Normal  ScalingReplicaSet  6m4s                  deployment-controller  Scaled down replica set nginx-deployment-5bf87f5f59 to 2
  Normal  ScalingReplicaSet  6m4s                  deployment-controller  Scaled up replica set nginx-deployment-678645bf77 to 2
  Normal  ScalingReplicaSet  6m3s                  deployment-controller  Scaled up replica set nginx-deployment-678645bf77 to 3
  Normal  ScalingReplicaSet  6m1s                  deployment-controller  Scaled down replica set nginx-deployment-5bf87f5f59 to 0
  Normal  ScalingReplicaSet  4m55s                 deployment-controller  Scaled up replica set nginx-deployment-5bf87f5f59 to 1
  Normal  ScalingReplicaSet  4m54s                 deployment-controller  Scaled down replica set nginx-deployment-678645bf77 to 2
  Normal  ScalingReplicaSet  4m53s (x2 over 8m4s)  deployment-controller  Scaled up replica set nginx-deployment-5bf87f5f59 to 3
  Normal  ScalingReplicaSet  103s (x2 over 6m5s)   deployment-controller  Scaled up replica set nginx-deployment-678645bf77 to 1
  Normal  ScalingReplicaSet  101s (x2 over 6m3s)   deployment-controller  Scaled down replica set nginx-deployment-5bf87f5f59 to 1
  Normal  ScalingReplicaSet  100s (x7 over 4m54s)  deployment-controller  (combined from similar events): Scaled down replica set nginx-deployment-5bf87f5f59 to 0
```

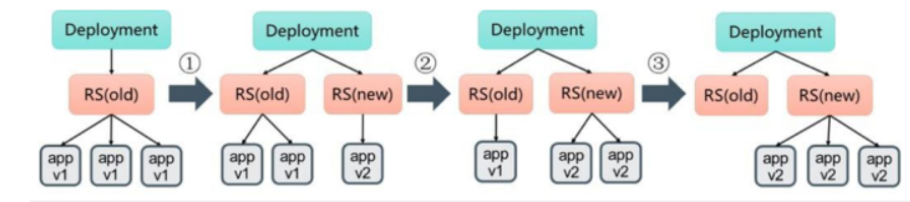

When the Deployment is first created, the system creates a ReplicaSet (nginx-deployment-5bf87f5f59) and, as requested, three Pod replicas. When the Deployment is updated, the system creates a new ReplicaSet (nginx-deployment-678645bf77), scales it up to 1 replica, and then scales the old ReplicaSet down to 2. The system then keeps adjusting the old and new ReplicaSets one Pod at a time under the same update strategy. Finally, the new ReplicaSet runs three Pods of the new version and the old ReplicaSet is scaled down to 0, as shown in the figure.

```
[root@k8s-master01 pod]# kubectl get rs
NAME                          DESIRED   CURRENT   READY   AGE
nginx-deployment-5bf87f5f59   0         0         0       17m
nginx-deployment-678645bf77   3         3         3       15m
```

During the whole upgrade, the Deployment keeps both the number of available Pods and the total number of Pods within bounds. First, only a limited number of Pods may be unavailable: with maxUnavailable=1, the number of available Pods is at most 1 less than the desired number of replicas. Second, the total number of Pods may exceed the desired number of replicas only by a limited amount: with maxSurge=1, there is at most 1 Pod more than desired. For the 3 replicas in this example, these values mean at least 2 Pods are available and at most 4 Pods run at the same time. Starting from Kubernetes 1.6, the default values of maxUnavailable and maxSurge changed from 1 and 1 to 25% and 25% of the desired number of replicas. For 3 replicas, 25% resolves to maxUnavailable=0 (rounded down) and maxSurge=1 (rounded up), so at least 3 Pods stay available and at most 4 run at once; the scaling events above follow this pattern.

In this way, the Deployment keeps the service available throughout the upgrade, and the number of Pods always stays close to the number of replicas specified by the user.
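The scale-up/scale-down pattern in the events above can be reproduced with a small simulation. This is a simplified sketch, not the Deployment controller's code: `simulate_rolling_update` is a hypothetical helper, it takes maxSurge and maxUnavailable as already-resolved integers, and it assumes every new Pod becomes ready immediately. Each round first scales the new ReplicaSet up as far as the surge limit allows, then scales the old ReplicaSet down as far as the availability limit allows.

```python
def simulate_rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list:
    """Simplified model of a Deployment rolling update (hypothetical helper).

    Assumes new Pods become ready instantly, so every running Pod counts as available.
    Returns the scaling events in the order they happen.
    """
    old, new = replicas, 0
    max_total = replicas + max_surge            # upper bound on old + new
    min_available = replicas - max_unavailable  # lower bound on available Pods
    events = []
    while old > 0 or new < replicas:
        progressed = False
        # Scale the new ReplicaSet up while old + new stays within max_total.
        target_new = min(replicas, max_total - old)
        if target_new > new:
            new = target_new
            events.append(f"Scaled up new replica set to {new}")
            progressed = True
        # Scale the old ReplicaSet down while old + new stays at or above min_available.
        target_old = max(0, min_available - new)
        if target_old < old:
            old = target_old
            events.append(f"Scaled down old replica set to {old}")
            progressed = True
        if not progressed:
            raise RuntimeError("no progress possible: maxSurge and maxUnavailable are both 0")
    return events


# 3 replicas with the 25% defaults resolve to maxSurge=1, maxUnavailable=0.
for event in simulate_rolling_update(replicas=3, max_surge=1, max_unavailable=0):
    print(event)
```

With these inputs the sketch prints six steps (new to 1, old to 2, new to 2, old to 1, new to 3, old to 0), the same order as the ScalingReplicaSet events for nginx-deployment-678645bf77 and nginx-deployment-5bf87f5f59.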

The update strategy is described below.

In the Deployment definition, the Pod update strategy is set with spec.strategy. Two strategies are supported, Recreate and RollingUpdate, and the default is RollingUpdate. The previous example used RollingUpdate.

- Recreate: set spec.strategy.type=Recreate. When the Deployment updates its Pods, it first kills all running Pods and then creates the new ones.

- RollingUpdate: set spec.strategy.type=RollingUpdate. The Deployment replaces Pods gradually, in a rolling fashion. Two parameters under spec.strategy.rollingUpdate, maxUnavailable and maxSurge, control how the rolling update proceeds.

The two rolling-update parameters are described below.

- spec.strategy.rollingUpdate.maxUnavailable: the maximum number of Pods that may be unavailable during the update. The value can be an absolute number (e.g. 5) or a percentage of the desired number of replicas (e.g. 10%); a percentage is converted to an absolute number by rounding down. maxUnavailable and maxSurge cannot both be 0, so when maxSurge is 0, maxUnavailable must be greater than 0. Starting from Kubernetes 1.6, the default value of maxUnavailable changed from 1 to 25%. For example, with maxUnavailable set to 30%, the old ReplicaSet can be scaled down to 70% of the desired number of replicas as soon as the rolling update starts. As new Pods become ready, the old ReplicaSet is scaled down further and the new ReplicaSet is scaled up further. Throughout the process, the number of available Pods is always at least 70% of the desired number of replicas.

- spec.strategy.rollingUpdate.maxSurge: the maximum number of Pods that may exist above the desired number of replicas during the update. The value can be an absolute number (e.g. 5) or a percentage of the desired number of replicas (e.g. 10%); a percentage is converted to an absolute number by rounding up. Starting from Kubernetes 1.6, the default value of maxSurge changed from 1 to 25%. For example, with maxSurge set to 30%, the new ReplicaSet can be scaled up as soon as the rolling update starts, as long as the total number of Pods in the old and new ReplicaSets does not exceed 130% of the desired number of replicas. As old Pods are killed, the new ReplicaSet is scaled up further. Throughout the process, the total number of Pods in the old and new ReplicaSets never exceeds 130% of the desired number of replicas.
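The rounding rules above can be written as a short function. This is a sketch under stated assumptions: `resolve_count` and `rolling_update_bounds` are hypothetical names, and the fallback used when both values resolve to 0 after rounding reflects our reading of the Deployment controller's source rather than anything stated in this section.

```python
def resolve_count(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute count (int) or a percentage string such as "25%" into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        numerator = int(value[:-1]) * replicas
        # Integer arithmetic avoids floating-point surprises when rounding.
        return -(-numerator // 100) if round_up else numerator // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> dict:
    """Resolve maxSurge/maxUnavailable and derive the Pod-count bounds of a rolling update."""
    if str(max_surge) in ("0", "0%") and str(max_unavailable) in ("0", "0%"):
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    surge = resolve_count(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = resolve_count(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        # Assumption: rounding down can still make both 0; we believe the controller then
        # allows one unavailable Pod so that the rollout can make progress.
        unavailable = 1
    return {
        "max_surge": surge,
        "max_unavailable": unavailable,
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


print(rolling_update_bounds(3))
# {'max_surge': 1, 'max_unavailable': 0, 'max_total_pods': 4, 'min_available_pods': 3}
print(rolling_update_bounds(10, "30%", "30%"))
# {'max_surge': 3, 'max_unavailable': 3, 'max_total_pods': 13, 'min_available_pods': 7}
```

The first call is the 3-replica default case; the second matches the 30% examples above (at least 70% available, at most 130% in total).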

Pay attention to the case of overlapping updates (rollover). If the user starts another update while a previous rollout of the Deployment is still in progress, the Deployment creates a new ReplicaSet for the new update and starts scaling it up. The ReplicaSet that was being scaled up before stops scaling up, is added to the list of old ReplicaSets, and is scaled down to 0.

For example, suppose we create a Deployment that should run five replicas of nginx:1.7.9. Before all of them have been created, we update the Deployment, changing the image in the Pod template to nginx:1.9.1 while keeping the number of replicas unchanged. If only three nginx:1.7.9 Pods have been created at that point, the Deployment immediately starts killing those three Pods and creating nginx:1.9.1 Pods. It does not wait for all five nginx:1.7.9 Pods to be created before changing course.

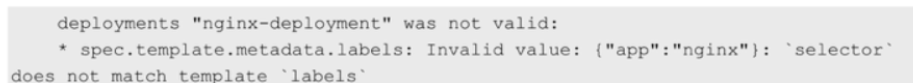
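The bookkeeping behind a rollover can be sketched as follows. The class and its fields are hypothetical, each ReplicaSet is identified only by its image for brevity, and the sketch tracks only the final replica target of each ReplicaSet, not the step-by-step scaling.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RolloverModel:
    """Hypothetical model of how a Deployment tracks ReplicaSets across overlapping updates."""

    replicas: int
    new_rs: Optional[str] = None                # ReplicaSet currently being scaled up
    old_rs: list = field(default_factory=list)  # ReplicaSets being scaled down to 0

    def apply_template(self, image: str) -> None:
        if self.new_rs is not None and self.new_rs != image:
            # The in-progress ReplicaSet stops scaling up and joins the old ones.
            self.old_rs.append(self.new_rs)
        if image in self.old_rs:
            # An old ReplicaSet with the same template is reused instead of creating another.
            self.old_rs.remove(image)
        self.new_rs = image

    def targets(self) -> dict:
        """Final replica target of every ReplicaSet."""
        result = {rs: 0 for rs in self.old_rs}
        if self.new_rs is not None:
            result[self.new_rs] = self.replicas
        return result


deployment = RolloverModel(replicas=5)
deployment.apply_template("nginx:1.7.9")  # rollout starts; suppose 3 of 5 Pods exist so far
deployment.apply_template("nginx:1.9.1")  # update arrives before the first rollout finishes
print(deployment.targets())  # {'nginx:1.7.9': 0, 'nginx:1.9.1': 5}
```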

A note on updating the label selector. Updating a Deployment's label selector is generally discouraged, because it changes the set of Pods the Deployment selects and may conflict with other controllers. If you must update it, proceed carefully and make sure nothing else breaks. In the apps/v1 API, a Deployment's label selector is immutable once created, so the following applies to older API versions. The notes below cover the three kinds of selector change.

(1) When adding a selector label, you must also add the new label to the Pod template of the Deployment; otherwise the update fails with a validation error.

Adding a selector label is not backward compatible: the new label selector does not match the ReplicaSets and Pods created with the old selector. As a result, all old ReplicaSets, and the Pods they created, are orphaned: they are neither deleted automatically by the system nor controlled by the new ReplicaSet.

After adding a new label to both the label selector and the Pod template (with the kubectl edit deployment command), the result is as follows:

```
# kubectl get rs
NAME                          DESIRED   CURRENT   READY     AGE
nginx-deployment-4087004473   0         0         0         52m
nginx-deployment-3599678771   3         3         3         1m
nginx-deployment-3661742516   3         3         3         2s
```

The new ReplicaSet (nginx-deployment-3661742516) creates three new Pods, while the three Pods of the old ReplicaSet keep running:

```
# kubectl get pods
NAME                                READY     STATUS    RESTARTS   AGE
nginx-deployment-3599678771-01h26   1/1       Running   0          2m
nginx-deployment-3599678771-57thr   1/1       Running   0          2m
nginx-deployment-3599678771-s8p21   1/1       Running   0          2m
nginx-deployment-3661742516-46djm   1/1       Running   0          52s
nginx-deployment-3661742516-kws84   1/1       Running   0          52s
nginx-deployment-3661742516-wq30s   1/1       Running   0          52s
```

(2) Updating the label selector, that is, changing the key or value of the label in the selector, also has the same effect as adding a selector label.

(3) Removing a selector label, that is, deleting one or more labels from the Deployment's label selector, does not affect the Deployment's ReplicaSets and Pods. Note, however, that the removed labels remain on the existing Pods and ReplicaSets.
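The three cases above follow from how equality-based label selectors match: a Pod is selected only if it carries every key/value pair in the selector. A minimal sketch, assuming matchLabels-style equality only (matchExpressions are not modeled); the `selector_matches` helper and the tier/track labels are hypothetical.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Return True if every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


old_pod = {"app": "nginx", "tier": "frontend"}  # labels on a Pod created by the old ReplicaSet

print(selector_matches({"app": "nginx", "tier": "frontend"}, old_pod))                     # True: original selector
print(selector_matches({"app": "nginx", "tier": "frontend", "track": "stable"}, old_pod))  # False: (1) label added
print(selector_matches({"app": "nginx", "tier": "backend"}, old_pod))                      # False: (2) value changed
print(selector_matches({"app": "nginx"}, old_pod))                                         # True: (3) label removed
```

In cases (1) and (2) the old Pods no longer match, which is why they are orphaned; in case (3) they still match, and the removed label simply stays on them.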

Rollback of Deployment

Sometimes, for example when the new version is unstable, we may need to roll the Deployment back to an earlier version. By default, the system keeps the rollout history of every Deployment so that we can roll back at any time (the number of history records kept is configurable through spec.revisionHistoryLimit).

Suppose that when updating the Deployment image, the image name is mistakenly set to nginx:1.91 (an image that does not exist):

```shell
kubectl set image deployment/nginx-deployment nginx=nginx:1.91
```

The rollout then gets stuck:

```
kubectl rollout status deployments nginx-deployment
Waiting for rollout to finish: 1 out of 3 new replicas have been updated...
```
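Why the rollout stops at "1 out of 3" can be seen with a rolling-update model in which the new Pods never become ready. This is a simplified sketch, not the controller's code: `stalled_rollout` is hypothetical, maxSurge and maxUnavailable are passed as already-resolved integers (for 3 replicas the 25% defaults resolve to 1 and 0), and only old Pods count as available.

```python
def stalled_rollout(replicas: int, max_surge: int, max_unavailable: int) -> tuple:
    """Return (old, new) replica counts once a rollout with never-ready new Pods stops."""
    old, new = replicas, 0
    max_total = replicas + max_surge
    min_available = replicas - max_unavailable
    while True:
        # The new ReplicaSet may grow only while old + new stays within max_total.
        target_new = max(new, min(replicas, max_total - old))
        # Only old Pods are available, so they can be removed only down to min_available.
        target_old = max(0, min(old, min_available))
        if (target_old, target_new) == (old, new):
            return old, new  # nothing more can change: the rollout is stuck
        old, new = target_old, target_new


print(stalled_rollout(replicas=3, max_surge=1, max_unavailable=0))  # (3, 1)
```

The result (3, 1) means one new replica exists and all three old Pods keep serving traffic, which is why kubectl reports 1 out of 3 new replicas and the service stays up while the rollout is stuck.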

Check the rollout history of the Deployment:

```
[root@k8s-master01 pod]# kubectl rollout history deployment/nginx-deployment
deployment.apps/nginx-deployment
REVISION  CHANGE-CAUSE
5         <none>
6         <none>
```

Note that if the --record flag is used when creating or updating a Deployment, the CHANGE-CAUSE column shows the command that produced each revision (newer kubectl versions have deprecated --record). Also note that a Deployment's rollout is triggered, and a new revision is created, if and only if the Deployment's Pod template (spec.template) changes, for example when the template labels or container images are updated. Other updates, such as scaling the number of replicas, do not trigger a rollout. This also means that rolling a Deployment back to an earlier revision restores only the Pod template part of the Deployment.
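The rule that only Pod template changes create revisions can be sketched in a few lines. `RevisionHistory` is a hypothetical class, and the Pod template is simplified to a dict holding only the image.

```python
import copy


class RevisionHistory:
    """Hypothetical model: only changes to spec.template create a new revision."""

    def __init__(self, spec: dict):
        self.spec = copy.deepcopy(spec)
        self.revisions = {1: copy.deepcopy(spec["template"])}

    def apply(self, spec: dict) -> bool:
        """Apply a new spec; return True if a new revision (rollout) was triggered."""
        triggered = spec["template"] != self.spec["template"]
        if triggered:
            self.revisions[max(self.revisions) + 1] = copy.deepcopy(spec["template"])
        self.spec = copy.deepcopy(spec)
        return triggered


history = RevisionHistory({"replicas": 3, "template": {"image": "nginx:1.7.9"}})
print(history.apply({"replicas": 5, "template": {"image": "nginx:1.7.9"}}))  # False: scaling only
print(history.apply({"replicas": 5, "template": {"image": "nginx:1.9.1"}}))  # True: template changed
print(sorted(history.revisions))  # [1, 2]
```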

To view the details of a specific revision, add the --revision=<n> parameter:

```
[root@k8s-master01 pod]# kubectl rollout history deployment/nginx-deployment --revision=5
deployment.apps/nginx-deployment with revision #5
Pod Template:
  Labels:       app=nginx
                pod-template-hash=5bf87f5f59
  Containers:
   nginx:
    Image:      nginx:1.7.9
    Port:       80/TCP
    Host Port:  0/TCP
    Environment:        <none>
    Mounts:     <none>
  Volumes:      <none>
```

Now we decide to undo this rollout and roll back to the previous revision:

```
[root@k8s-master01 pod]# kubectl rollout undo deployment/nginx-deployment
deployment.apps/nginx-deployment rolled back
```

You can also use the --to-revision parameter to specify the revision to roll back to:

```
[root@k8s-master01 pod]# kubectl rollout undo deployment/nginx-deployment --to-revision=6
deployment.apps/nginx-deployment rolled back
```
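The effect of a rollback on the revision numbers can be sketched as follows. `RolloutHistory` is a hypothetical class that models each revision's Pod template as an image string; it assumes, as Deployments behave, that rolling back reuses the old template, which then receives the next revision number so its old number disappears from the history. A `to_revision` of 0 means "the previous revision", mirroring the default of --to-revision.

```python
class RolloutHistory:
    """Hypothetical model of Deployment revisions and `kubectl rollout undo`."""

    def __init__(self, template: str):
        self.revisions = {1: template}  # revision number -> Pod template (image only here)

    @property
    def current(self) -> int:
        return max(self.revisions)

    def update(self, template: str) -> None:
        """Apply a Pod template, reusing a matching earlier revision if one exists."""
        if self.revisions[self.current] == template:
            return  # template unchanged: no new revision
        for revision, existing in list(self.revisions.items()):
            if existing == template:
                del self.revisions[revision]  # reused revision is renumbered below
                break
        self.revisions[self.current + 1] = template

    def undo(self, to_revision: int = 0) -> None:
        """Roll back to `to_revision`, or to the previous revision when it is 0."""
        if to_revision == 0:
            earlier = [r for r in self.revisions if r < self.current]
            if not earlier:
                raise ValueError("no previous revision to roll back to")
            to_revision = max(earlier)
        if to_revision not in self.revisions:
            raise ValueError(f"revision {to_revision} not found")
        self.update(self.revisions[to_revision])


history = RolloutHistory("nginx:1.7.9")
history.update("nginx:1.9.1")
history.update("nginx:1.91")  # the nonexistent image from the example above
history.undo()                # back to nginx:1.9.1
print(history.revisions)  # {1: 'nginx:1.7.9', 3: 'nginx:1.91', 4: 'nginx:1.9.1'}
```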

Pausing and resuming a Deployment rollout to make complex changes

For a complex configuration change, you can avoid triggering many rollouts by first pausing the Deployment's rollouts, then making all the changes, and finally resuming the Deployment. All the changes are then rolled out together in a single update, which avoids unnecessary intermediate rollouts.

Take the Nginx created earlier as an example:

```
[root@k8s-master01 pod]# kubectl get deployment
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   3/3     3            3           42m
[root@k8s-master01 pod]# kubectl get rs
NAME                          DESIRED   CURRENT   READY   AGE
nginx-deployment-5bf87f5f59   0         0         0       43m
nginx-deployment-678645bf77   3         3         3       41m
```

Pause the Deployment's rollouts with the kubectl rollout pause command:

```
[root@k8s-master01 pod]# kubectl rollout pause deployment/nginx-deployment
deployment.apps/nginx-deployment paused
```

Then modify the image information of the Deployment:

```shell
kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1
```

Check the rollout history: no new rollout has been triggered.

```
[root@k8s-master01 pod]# kubectl rollout history deployment/nginx-deployment
deployment.apps/nginx-deployment
REVISION  CHANGE-CAUSE
11        kubectl set image deployment/nginx-deployment nginx=nginx:1.7.9 --record=true
12        kubectl set image deployment/nginx-deployment nginx=nginx:1.9.1 --record=true
```

While the Deployment is paused, you can change its configuration as many times as needed. For example, also update the container's resource limits:

```
[root@k8s-master01 pod]# kubectl set resources deployment nginx-deployment -c=nginx --limits=cpu=200m,memory=512Mi
deployment.apps/nginx-deployment resource requirements updated
```

Finally, resume the Deployment's rollout:

```shell
kubectl rollout resume deploy nginx-deployment
```

You can see that a new ReplicaSet has been created:

```
[root@k8s-master01 pod]# kubectl get rs
NAME                          DESIRED   CURRENT   READY   AGE
nginx-deployment-56bbb744cc   3         3         3       12s
nginx-deployment-5bf87f5f59   0         0         0       50m
nginx-deployment-678645bf77   0         0         0       48m
```

Describe the Deployment to confirm that both changes have been applied in a single update:

```
[root@k8s-master01 pod]# kubectl describe deployment nginx-deployment
Pod Template:
  Labels:  app=nginx
  Containers:
   nginx:
    Image:      nginx:1.9.1
    Port:       80/TCP
    Host Port:  0/TCP
    Limits:
      cpu:     200m
      memory:  512Mi
```

Note that a suspended Deployment cannot be rolled back until it is resumed.
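The pause/resume behavior in the walkthrough above can be sketched as a small state machine. `PausableDeployment` is a hypothetical class with the Pod template simplified to a flat dict; it models only two rules from this section: edits made while paused are rolled out together on resume, and a paused Deployment cannot be rolled back.

```python
class PausableDeployment:
    """Hypothetical model: while paused, template edits pile up; resume triggers one rollout."""

    def __init__(self, template: dict):
        self.template = dict(template)
        self.history = [dict(template)]  # templates that have actually been rolled out
        self.paused = False

    @property
    def rollouts(self) -> int:
        return len(self.history) - 1  # rollouts after the initial creation

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False
        self._maybe_roll_out()

    def edit(self, **changes) -> None:
        self.template.update(changes)
        self._maybe_roll_out()

    def undo(self) -> None:
        if self.paused:
            raise RuntimeError("a paused Deployment cannot be rolled back; resume it first")
        if len(self.history) < 2:
            raise RuntimeError("no previous revision")
        self.template = dict(self.history[-2])
        self._maybe_roll_out()

    def _maybe_roll_out(self) -> None:
        # Roll out only when not paused and the template differs from the last one rolled out.
        if not self.paused and self.template != self.history[-1]:
            self.history.append(dict(self.template))


deployment = PausableDeployment({"image": "nginx:1.7.9"})
deployment.pause()
deployment.edit(image="nginx:1.9.1")
deployment.edit(limits="cpu=200m,memory=512Mi")
print(deployment.rollouts)  # 0: nothing is rolled out while paused
deployment.resume()
print(deployment.rollouts)  # 1: both changes are rolled out together
```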

Using the kubectl rolling-update command to perform a rolling upgrade of an RC

Kubernetes also provides the kubectl rolling-update command for rolling upgrades of a ReplicationController (RC). The command creates a new RC, then automatically scales the number of Pod replicas in the old RC down to 0 while scaling the new RC up from 0 to the target number one Pod at a time, completing the Pod upgrade. The new RC must be in the same namespace as the old RC. Note that kubectl rolling-update has since been deprecated and removed from kubectl (in v1.18); on current clusters, use a Deployment instead.

```yaml
# tomcat-controller-v1.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: tomcat
  labels:
    name: tomcat
    version: v1
spec:
  replicas: 2
  selector:
    name: tomcat
  template:
    metadata:
      labels:            # you can define your own key/value pairs
        name: tomcat
    spec:
      containers:
      - name: tomcat
        image: tomcat:6.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
        env:             # environment variables
        - name: GET_HOSTS_FROM
          value: dns
```

```
[root@k8s-master01 pod]# kubectl apply -f tomcat-controller-v1.yaml
[root@k8s-master01 pod]# kubectl get rc -o wide
NAME     DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES       SELECTOR
tomcat   2         2         2       16s   tomcat       tomcat:6.0   name=tomcat
```

- RC rolling upgrade

```yaml
# tomcat-controller-v2.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: tomcat2
  labels:
    name: tomcat
    version: v2
spec:
  replicas: 2
  selector:
    name: tomcat2
  template:
    metadata:
      labels:            # you can define your own key/value pairs
        name: tomcat2
    spec:
      containers:
      - name: tomcat
        image: tomcat:7.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
        env:             # environment variables
        - name: GET_HOSTS_FROM
          value: dns
```

```
[root@k8s-master01 pod]# kubectl rolling-update tomcat -f tomcat-controller-v2.yaml
```

Note the following two points in the configuration file:

- The name of the new RC must differ from the name of the old RC.

- At least one label in the selector must differ from the old RC's selector so that the new RC can be identified, either as an added key or as a different value for the same key. In this example, the selector uses name: tomcat2 instead of name: tomcat (the RC's own metadata also carries the label version: v2).
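The two rules above can be expressed as a small check. `check_new_rc` is a hypothetical helper that encodes only the rules as stated here, not kubectl's own validation, and RCs are simplified to a name plus a selector dict.

```python
def check_new_rc(old_rc: dict, new_rc: dict) -> None:
    """Raise ValueError if new_rc breaks either rule above (hypothetical helper)."""
    if new_rc["name"] == old_rc["name"]:
        raise ValueError("the new RC must have a different name from the old RC")
    old_selector, new_selector = old_rc["selector"], new_rc["selector"]
    # Some key/value pair in the new selector must be absent from, or different in, the old one.
    if not any(old_selector.get(key) != value for key, value in new_selector.items()):
        raise ValueError("the selector must differ from the old RC's selector")


old = {"name": "tomcat", "selector": {"name": "tomcat"}}
new = {"name": "tomcat2", "selector": {"name": "tomcat2"}}
check_new_rc(old, new)  # passes: new RC name, and the "name" selector key has a new value
```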

After all the new pods are started, all the old pods will be destroyed, thus completing the update of the container cluster.

Another method is to use the kubectl rolling-update command with the --image parameter to specify the new image name, completing the rolling upgrade of the Pods without a configuration file:

```shell
kubectl rolling-update tomcat --image=tomcat:6.0
```

Different from using the configuration file, the result of execution is that the old RC is deleted, and the new RC will still use the name of the old RC.

It can be seen that kubectl completes the update of the whole RC step by step by creating a new version of Pod and stopping an old version of Pod.

kubectl also adds a label with the key "deployment" to the RC (the key name can be changed with the --deployment-label-key parameter). The label's value is a hash of the RC's content, which acts as a signature and makes it easy to tell whether the image name or other information in the RC has changed.
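The one-Pod-at-a-time replacement and the content-hash label can be sketched as follows. Both functions are hypothetical illustrations: the step loop follows the simplified "start one new Pod, stop one old Pod" description above, and the hash uses SHA-256 over JSON purely for illustration, which is not the hash kubectl actually computed.

```python
import hashlib
import json


def rolling_update_steps(old_replicas: int, new_replicas: int) -> list:
    """Return (old, new) Pod counts after each step of a one-at-a-time RC replacement."""
    old, new = old_replicas, 0
    steps = []
    while old > 0 or new < new_replicas:
        if new < new_replicas:
            new += 1  # start one Pod of the new version
        if old > 0:
            old -= 1  # then stop one Pod of the old version
        steps.append((old, new))
    return steps


def deployment_label_value(rc_spec: dict) -> str:
    """Content hash used as a signature label; illustrative only, not kubectl's actual hash."""
    encoded = json.dumps(rc_spec, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()[:12]


print(rolling_update_steps(2, 2))  # [(1, 1), (0, 2)]
v1 = {"containers": [{"name": "tomcat", "image": "tomcat:6.0"}]}
v2 = {"containers": [{"name": "tomcat", "image": "tomcat:7.0"}]}
print(deployment_label_value(v1) != deployment_label_value(v2))  # True: the image changed
```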

If a configuration error is found during the update, the user can interrupt the update and roll the Pods back to the previous version by running kubectl rolling-update with the --rollback parameter:

```shell
kubectl rolling-update tomcat --image=tomcat:6.0 --rollback
```

As you can see, the rolling upgrade of an RC lacks features that a Deployment provides during application upgrades, such as rollout history and fine-grained control over the numbers of old and new Pods. As Kubernetes evolves, RC is being replaced by ReplicaSet and Deployment, so users are advised to prefer Deployment for deploying and upgrading Pods.

Update policies for other management objects

Starting with versions 1.6 (DaemonSet) and 1.7 (StatefulSet), Kubernetes has introduced rolling upgrades similar to Deployment's into the update strategies of DaemonSet and StatefulSet, so that application versions can be upgraded automatically under different strategies.

1. Update strategy of DaemonSet

At present, there are two upgrade strategies for DaemonSet: OnDelete and RollingUpdate.

(1) OnDelete: the default strategy in the older extensions/v1beta1 API, consistent with the behavior of Kubernetes 1.5 and earlier (in apps/v1 the default is RollingUpdate). With OnDelete, updating the DaemonSet's Pod template does not create new Pods automatically; a new Pod is created only after the user manually deletes an old Pod.

(2) RollingUpdate: introduced in Kubernetes 1.6. When the DaemonSet's Pod template is updated, old Pods are automatically killed and new Pods are created, and the whole process is as controllable as a Deployment rolling upgrade. In Kubernetes 1.6, however, it differed from a Deployment rolling upgrade in two ways: first, the update history of a DaemonSet could not be viewed or managed; second, a DaemonSet could not be rolled back directly with a kubectl command as a Deployment can, but only by submitting the old configuration again. Since Kubernetes 1.7, kubectl rollout history and kubectl rollout undo also work for DaemonSets.
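The difference between the two DaemonSet strategies can be sketched as follows. Both functions are hypothetical and ignore readiness; the RollingUpdate batch size is the DaemonSet's maxUnavailable setting, which defaults to 1, and the node name k8s-node03 is an illustrative assumption.

```python
def on_delete_update(nodes: list, deleted: set) -> dict:
    """OnDelete: a node runs the new version only after its old Pod was deleted manually."""
    return {node: ("new" if node in deleted else "old") for node in nodes}


def rolling_update_batches(nodes: list, max_unavailable: int = 1) -> list:
    """RollingUpdate: replace Pods node by node, at most max_unavailable nodes at a time."""
    return [nodes[i:i + max_unavailable] for i in range(0, len(nodes), max_unavailable)]


nodes = ["k8s-node01", "k8s-node02", "k8s-node03"]
print(on_delete_update(nodes, {"k8s-node02"}))
# {'k8s-node01': 'old', 'k8s-node02': 'new', 'k8s-node03': 'old'}
print(rolling_update_batches(nodes))
# [['k8s-node01'], ['k8s-node02'], ['k8s-node03']]
```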

2. Update strategy of StatefulSet

Since version 1.6, Kubernetes has gradually aligned the StatefulSet update strategy with those of Deployment and DaemonSet. StatefulSet supports the RollingUpdate strategy, which can be limited with a partition, and the OnDelete strategy. The Pods of a StatefulSet are updated in order, one at a time, and the update history is kept so that the StatefulSet can be rolled back to an earlier revision.
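The order in which a StatefulSet RollingUpdate replaces Pods can be sketched as follows, based on the documented behavior that Pods are updated from the highest ordinal down and that Pods whose ordinal is below the partition keep the old version. `statefulset_update_order` is a hypothetical helper; it ignores readiness and only lists the order.

```python
def statefulset_update_order(replicas: int, partition: int = 0) -> list:
    """Ordinals a RollingUpdate touches, in order: highest first, stopping at `partition`."""
    return list(range(replicas - 1, max(partition, 0) - 1, -1))


print(statefulset_update_order(5))               # [4, 3, 2, 1, 0]
print(statefulset_update_order(5, partition=3))  # [4, 3]: Pods 0-2 keep the old version
```

A partition is useful for canary-style upgrades: update only the highest ordinals first, then lower the partition to 0 to finish the rollout.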