This article is based mainly on the Datawhale graph neural network team-learning course.

The statistics of the three datasets are as follows:

| Dataset | Cora | CiteSeer | PubMed |
|---|---|---|---|
| Number of nodes | 2708 | 3327 | 19717 |
| Number of edges | 5278 | 4552 | 44324 |
| Number of training nodes | 140 | 120 | 60 |
| Number of validation nodes | 500 | 500 | 500 |
| Number of test nodes | 1000 | 1000 | 1000 |
| Number of node classes | 7 | 6 | 3 |
| Feature dimension | 1433 | 3703 | 500 |
| Edge density | 0.0014 | 0.0008 | 0.0002 |

Edge density is calculated as:

$$p = \frac{2m}{n^{2}}$$

where $m$ is the number of edges and $n$ is the number of nodes.
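As a quick check, the edge-density row of the table can be reproduced from the node and edge counts. This is a minimal sketch in plain Python; the helper name `edge_density` is hypothetical and the counts are copied from the table above.

```python
# Node and (undirected) edge counts copied from the statistics table.
datasets = {
    "Cora": (2708, 5278),
    "CiteSeer": (3327, 4552),
    "PubMed": (19717, 44324),
}


def edge_density(num_nodes, num_edges):
    """Edge density p = 2m / n^2 (hypothetical helper for illustration)."""
    return 2 * num_edges / num_nodes ** 2


densities = {name: edge_density(n, m) for name, (n, m) in datasets.items()}
for name, p in densities.items():
    print(f"{name}: {p:.4f}")
# Cora: 0.0014
# CiteSeer: 0.0008
# PubMed: 0.0002
```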

This article uses only the Cora dataset for demonstration.

Exercise 1: Replace GCNConv with other PyG network layers, and vary the number of layers and the out_channels values, to implement the node classification task.

Load dataset

import torch
from torch_geometric.datasets import Planetoid
from torch_geometric.transforms import NormalizeFeatures

dataset = Planetoid(root='dataset/Cora', name='Cora',
                    transform=NormalizeFeatures())

Build a three-layer GAT with hidden sizes [256, 128, 64], plus a linear layer (64 -> 7)

import torch
import torch.nn.functional as F
from torch.nn import Linear, ReLU
from torch_geometric.nn import GATConv, Sequential


class GAT(torch.nn.Module):
    def __init__(self, num_features, hidden_channels_list, num_classes):
        super(GAT, self).__init__()
        # Layer widths: the input feature size followed by each hidden size.
        hns = [num_features] + hidden_channels_list
        conv_list = []
        for idx in range(len(hidden_channels_list)):
            # Each GATConv maps hns[idx] -> hns[idx+1] and is followed by a ReLU.
            conv_list.append((GATConv(hns[idx], hns[idx+1]), 'x, edge_index -> x'))
            conv_list.append(ReLU(inplace=True),)
        self.convseq = Sequential('x, edge_index', conv_list)
        self.linear = Linear(hidden_channels_list[-1], num_classes)

    def forward(self, x, edge_index):
        x = self.convseq(x, edge_index)
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.linear(x)
        return F.log_softmax(x, dim=1)
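To make the layer wiring in `__init__` concrete, the sketch below reproduces only the size bookkeeping in plain Python, without building any PyG layers. The helper name `layer_shapes` is hypothetical; the numbers are Cora's feature dimension and class count from the table above.

```python
def layer_shapes(num_features, hidden_channels_list, num_classes):
    """Return (in, out) sizes of each conv layer and of the final linear layer.

    Hypothetical helper that mirrors the `hns` list used in the GAT class.
    """
    hns = [num_features] + hidden_channels_list
    conv_shapes = [(hns[i], hns[i + 1]) for i in range(len(hidden_channels_list))]
    linear_shape = (hidden_channels_list[-1], num_classes)
    return conv_shapes, linear_shape


conv_shapes, linear_shape = layer_shapes(1433, [256, 128, 64], 7)
print(conv_shapes)   # [(1433, 256), (256, 128), (128, 64)]
print(linear_shape)  # (64, 7)
```

Changing the depth of the network then only requires a different `hidden_channels_list`, for example `[256, 128, 64, 32]` for four layers.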

Initialize the model and move it to the GPU if one is available

# is_available must be called; the bare function object is always truthy.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
data = dataset[0].to(device)
hidden_channels_list = [256, 128, 64]
model = GAT(dataset.num_features, hidden_channels_list, dataset.num_classes)
model = model.to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

Model training

def train():
    model.train()
    optimizer.zero_grad()
    out = model(data.x, data.edge_index)
    # Compute the loss on the training nodes only.
    loss = F.nll_loss(out[data.train_mask], data.y[data.train_mask])
    loss.backward()
    optimizer.step()
    return loss


for epoch in range(1, 201):
    loss = train()
    print(f'Epoch:{epoch:03d}, Loss:{loss:.4f}')

Model test

def test():

model.eval()

out = model(data.x, data.edge_index)

pred = out.argmax(dim=1)

test_correct = (pred[data.test_mask] == data.y[data.test_mask]).sum()

test_acc = int(test_correct) / data.test_mask.sum()

return test_acc

test_acc = test()

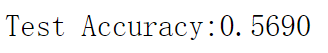

print(f'Test Accuracy:{test_acc:.4f}')

Visualization

import matplotlib.pyplot as plt
from sklearn.manifold import TSNE


def visualize(h, color):
    # Project the model outputs to 2D with t-SNE for plotting.
    z = TSNE(n_components=2).fit_transform(h.detach().cpu().numpy())
    plt.figure(figsize=(10, 10))
    plt.xticks([])
    plt.yticks([])
    plt.scatter(z[:, 0], z[:, 1], s=70, c=color.cpu().detach().numpy(), cmap='Paired')
    plt.show()


out = model(data.x, data.edge_index)
visualize(out[data.test_mask], data.y[data.test_mask])

Build a four-layer GAT with hidden sizes [256, 128, 64, 32], plus a linear layer (32 -> 7), using the same method as above

import torch
import torch.nn.functional as F
from torch.nn import Linear, ReLU
from torch_geometric.nn import GATConv, Sequential


class GAT(torch.nn.Module):
    def __init__(self, num_features, hidden_channels_list, num_classes):
        super(GAT, self).__init__()
        hns = [num_features] + hidden_channels_list
        conv_list = []
        for idx in range(len(hidden_channels_list)):
            conv_list.append((GATConv(hns[idx], hns[idx+1]), 'x, edge_index -> x'))
            conv_list.append(ReLU(inplace=True),)
        self.convseq = Sequential('x, edge_index', conv_list)
        self.linear = Linear(hidden_channels_list[-1], num_classes)

    def forward(self, x, edge_index):
        x = self.convseq(x, edge_index)
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.linear(x)
        return F.log_softmax(x, dim=1)


# is_available must be called; the bare function object is always truthy.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
data = dataset[0].to(device)
hidden_channels_list = [256, 128, 64, 32]
model = GAT(dataset.num_features, hidden_channels_list, dataset.num_classes)
model = model.to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)


def train():
    model.train()
    optimizer.zero_grad()
    out = model(data.x, data.edge_index)
    loss = F.nll_loss(out[data.train_mask], data.y[data.train_mask])
    loss.backward()
    optimizer.step()
    return loss


for epoch in range(1, 201):
    loss = train()
    print(f'Epoch:{epoch:03d}, Loss:{loss:.4f}')


def test():
    model.eval()
    out = model(data.x, data.edge_index)
    pred = out.argmax(dim=1)
    test_correct = (pred[data.test_mask] == data.y[data.test_mask]).sum()
    # Convert both counts to Python ints so the accuracy is a plain float.
    test_acc = int(test_correct) / int(data.test_mask.sum())
    return test_acc


test_acc = test()
print(f'Test Accuracy:{test_acc:.4f}')

visualization

out = model(data.x, data.edge_index) visualize(out[data.test_mask], data.y[data.test_mask])

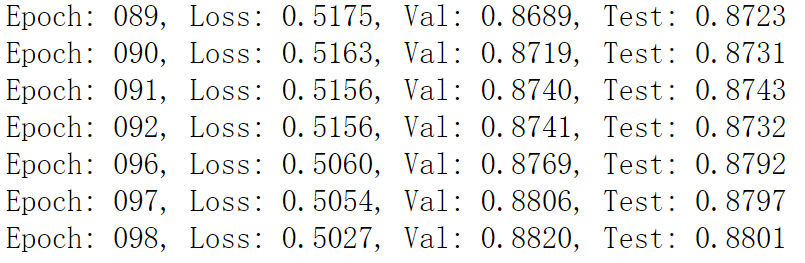

Exercise 2: In the edge prediction task, try using the torch_geometric.nn.Sequential container to build the graph neural network.

Building the network with the Sequential container and GCN

import torch
import torch.nn.functional as F
from torch.nn import ReLU
from torch_geometric.datasets import Planetoid
from torch_geometric.nn import GCNConv, Sequential
from torch_geometric.transforms import NormalizeFeatures
# Utilities for sampling negative edges and splitting edges into train/val/test.
from torch_geometric.utils import negative_sampling, train_test_split_edges


class Net(torch.nn.Module):
    def __init__(self, num_features, hidden_channels_list):
        super(Net, self).__init__()
        hns = [num_features] + hidden_channels_list
        conv_list = []
        for idx in range(len(hidden_channels_list)):
            conv_list.append((GCNConv(hns[idx], hns[idx+1]), 'x, edge_index -> x'))
            conv_list.append(ReLU(inplace=True),)
        self.convseq = Sequential('x, edge_index', conv_list)

    def encode(self, x, edge_index):
        x = F.dropout(x, p=0.6, training=self.training)
        x = self.convseq(x, edge_index)
        return x

    def decode(self, z, pos_edge_index, neg_edge_index):
        # Score each candidate edge by the inner product of its endpoint embeddings.
        edge_index = torch.cat([pos_edge_index, neg_edge_index], dim=-1)
        return (z[edge_index[0]] * z[edge_index[1]]).sum(dim=-1)

    def decode_all(self, z):
        # Predict an edge for every node pair with a positive inner product.
        prob_adj = z @ z.t()
        return (prob_adj > 0).nonzero(as_tuple=False).t()


def get_link_labels(pos_edge_index, neg_edge_index):
    # Label positive edges 1 and negative edges 0, positives first.
    num_links = pos_edge_index.size(1) + neg_edge_index.size(1)
    link_labels = torch.zeros(num_links, dtype=torch.float)
    link_labels[:pos_edge_index.size(1)] = 1.
    return link_labels

def train(data, model, optimizer):
    model.train()
    # Sample as many negative edges as there are positive training edges.
    neg_edge_index = negative_sampling(
        edge_index=data.train_pos_edge_index,
        num_nodes=data.num_nodes,
        num_neg_samples=data.train_pos_edge_index.size(1))
    optimizer.zero_grad()
    z = model.encode(data.x, data.train_pos_edge_index)
    link_logits = model.decode(z, data.train_pos_edge_index, neg_edge_index)
    link_labels = get_link_labels(data.train_pos_edge_index, neg_edge_index).to(data.x.device)
    loss = F.binary_cross_entropy_with_logits(link_logits, link_labels)
    loss.backward()
    optimizer.step()
    return loss


from sklearn.metrics import roc_auc_score


@torch.no_grad()
def test(data, model):
    model.eval()
    z = model.encode(data.x, data.train_pos_edge_index)
    results = []
    for prefix in ['val', 'test']:
        pos_edge_index = data[f'{prefix}_pos_edge_index']
        neg_edge_index = data[f'{prefix}_neg_edge_index']
        link_logits = model.decode(z, pos_edge_index, neg_edge_index)
        link_probs = link_logits.sigmoid()
        link_labels = get_link_labels(pos_edge_index, neg_edge_index)
        results.append(roc_auc_score(link_labels.cpu(), link_probs.cpu()))
    return results

def main():
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    dataset = Planetoid(root='dataset/Cora', name='Cora',
                        transform=NormalizeFeatures())
    data = dataset[0]
    ground_truth_edge_index = data.edge_index.to(device)
    # Node labels and masks are not needed for link prediction.
    data.train_mask = data.val_mask = data.test_mask = data.y = None
    data = train_test_split_edges(data)
    data = data.to(device)

    model = Net(dataset.num_features, [128, 64]).to(device)
    optimizer = torch.optim.Adam(params=model.parameters(), lr=0.01)

    best_val_auc = test_auc = 0
    for epoch in range(1, 101):
        loss = train(data, model, optimizer)
        val_auc, tmp_test_auc = test(data, model)
        # Report the test AUC from the epoch with the best validation AUC.
        if val_auc > best_val_auc:
            best_val_auc = val_auc
            test_auc = tmp_test_auc
        print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}, Val: {val_auc:.4f}, '
              f'Test: {test_auc:.4f}')

    z = model.encode(data.x, data.train_pos_edge_index)
    final_edge_index = model.decode_all(z)


if __name__ == "__main__":
    main()
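The `decode` and `get_link_labels` functions in the script above can be illustrated on a toy example. The sketch below is plain Python rather than PyTorch: edges are lists of `(u, v)` tuples instead of a `[2, E]` tensor, and the embedding values are made up for illustration.

```python
def decode(z, pos_edges, neg_edges):
    """Score each candidate edge (u, v) by the inner product of z[u] and z[v]."""
    edges = pos_edges + neg_edges  # positives first, then negatives
    return [sum(a * b for a, b in zip(z[u], z[v])) for u, v in edges]


def get_link_labels(pos_edges, neg_edges):
    """1.0 for every positive edge, 0.0 for every negative edge, in the same order."""
    return [1.0] * len(pos_edges) + [0.0] * len(neg_edges)


# Toy 2-dimensional embeddings for four nodes (made-up values).
z = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0], [0.0, 0.0]]
pos_edges = [(0, 1), (1, 2)]
neg_edges = [(0, 2), (0, 3)]

scores = decode(z, pos_edges, neg_edges)
labels = get_link_labels(pos_edges, neg_edges)
print(scores)  # [1.0, 1.0, 0.0, 0.0]
print(labels)  # [1.0, 1.0, 0.0, 0.0]
```

Because scores and labels share the same ordering, they can be compared element by element, which is what the binary cross-entropy loss in `train` relies on.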

Building the network with the Sequential container and GAT

import torch
import torch.nn.functional as F
from torch.nn import ReLU
from torch_geometric.datasets import Planetoid
from torch_geometric.nn import GATConv, Sequential
from torch_geometric.transforms import NormalizeFeatures
# Utilities for sampling negative edges and splitting edges into train/val/test.
from torch_geometric.utils import negative_sampling, train_test_split_edges


class Net(torch.nn.Module):
    def __init__(self, num_features, hidden_channels_list):
        super(Net, self).__init__()
        # torch.manual_seed(12345)
        hns = [num_features] + hidden_channels_list
        conv_list = []
        for idx in range(len(hidden_channels_list)):
            conv_list.append((GATConv(hns[idx], hns[idx+1]), 'x, edge_index -> x'))
            conv_list.append(ReLU(inplace=True),)
        self.convseq = Sequential('x, edge_index', conv_list)

    def encode(self, x, edge_index):
        x = F.dropout(x, p=0.6, training=self.training)
        x = self.convseq(x, edge_index)
        return x

    def decode(self, z, pos_edge_index, neg_edge_index):
        # Score each candidate edge by the inner product of its endpoint embeddings.
        edge_index = torch.cat([pos_edge_index, neg_edge_index], dim=-1)
        return (z[edge_index[0]] * z[edge_index[1]]).sum(dim=-1)

    def decode_all(self, z):
        # Predict an edge for every node pair with a positive inner product.
        prob_adj = z @ z.t()
        return (prob_adj > 0).nonzero(as_tuple=False).t()


def get_link_labels(pos_edge_index, neg_edge_index):
    # Label positive edges 1 and negative edges 0, positives first.
    num_links = pos_edge_index.size(1) + neg_edge_index.size(1)
    link_labels = torch.zeros(num_links, dtype=torch.float)
    link_labels[:pos_edge_index.size(1)] = 1.
    return link_labels

def train(data, model, optimizer):
    model.train()
    # Sample as many negative edges as there are positive training edges.
    neg_edge_index = negative_sampling(
        edge_index=data.train_pos_edge_index,
        num_nodes=data.num_nodes,
        num_neg_samples=data.train_pos_edge_index.size(1))
    optimizer.zero_grad()
    z = model.encode(data.x, data.train_pos_edge_index)
    link_logits = model.decode(z, data.train_pos_edge_index, neg_edge_index)
    link_labels = get_link_labels(data.train_pos_edge_index, neg_edge_index).to(data.x.device)
    loss = F.binary_cross_entropy_with_logits(link_logits, link_labels)
    loss.backward()
    optimizer.step()
    return loss


from sklearn.metrics import roc_auc_score


@torch.no_grad()
def test(data, model):
    model.eval()
    z = model.encode(data.x, data.train_pos_edge_index)
    results = []
    for prefix in ['val', 'test']:
        pos_edge_index = data[f'{prefix}_pos_edge_index']
        neg_edge_index = data[f'{prefix}_neg_edge_index']
        link_logits = model.decode(z, pos_edge_index, neg_edge_index)
        link_probs = link_logits.sigmoid()
        link_labels = get_link_labels(pos_edge_index, neg_edge_index)
        results.append(roc_auc_score(link_labels.cpu(), link_probs.cpu()))
    return results

def main():
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    dataset = Planetoid(root='dataset/Cora', name='Cora',
                        transform=NormalizeFeatures())
    data = dataset[0]
    ground_truth_edge_index = data.edge_index.to(device)
    # Node labels and masks are not needed for link prediction.
    data.train_mask = data.val_mask = data.test_mask = data.y = None
    data = train_test_split_edges(data)
    data = data.to(device)

    model = Net(dataset.num_features, [128, 64]).to(device)
    optimizer = torch.optim.Adam(params=model.parameters(), lr=0.01)

    best_val_auc = test_auc = 0
    for epoch in range(1, 101):
        loss = train(data, model, optimizer)
        val_auc, tmp_test_auc = test(data, model)
        # Report the test AUC from the epoch with the best validation AUC.
        if val_auc > best_val_auc:
            best_val_auc = val_auc
            test_auc = tmp_test_auc
        print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}, Val: {val_auc:.4f}, '
              f'Test: {test_auc:.4f}')

    z = model.encode(data.x, data.train_pos_edge_index)
    final_edge_index = model.decode_all(z)


if __name__ == "__main__":
    main()
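Both `test` functions above report ROC AUC. One way to read that number: it is the fraction of (positive edge, negative edge) pairs in which the positive edge receives the higher score, with ties counted as one half. The sketch below computes it by brute force in plain Python; the function name `roc_auc` and the toy labels and scores are made up for illustration, and this is not how scikit-learn implements `roc_auc_score` internally.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as 0.5.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1]
auc = roc_auc(labels, scores)
print(f"{auc:.4f}")  # 0.8889 (8 of the 9 pairs are ranked correctly)
```

An AUC of 0.5 corresponds to random ranking and 1.0 to every positive edge scoring above every negative edge.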

Question 3: As the code below shows, we pass data.train_pos_edge_index as the argument when sampling negative examples for the training set. The negatives sampled this way may include positive edges from the validation set and the test set, so true positives can end up labelled as negatives, which creates a conflict. Why do we do it anyway?

neg_edge_index = negative_sampling(
    edge_index=data.train_pos_edge_index,
    num_nodes=data.num_nodes,
    num_neg_samples=data.train_pos_edge_index.size(1))

For example, the Cora dataset has 2708 nodes. If every node were connected to every node (including itself), there would be 2708 * 2708 = 7333264 edges. train_pos_edge_index contains 8976 edges, so the negative samples are drawn from the remaining 7 million-plus node pairs. Even if a sampled negative happens to be a positive edge in the validation or test set, the probability of such a collision is very low, so it has little effect on the model's overall generalization ability. In addition, excluding those edges during sampling would require looking at the validation and test edges at training time, which would leak held-out information into training.
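The size of this effect can be estimated with a few lines of arithmetic. The sketch below uses only numbers from this article, under two assumptions: each undirected Cora edge is stored in both directions, and negatives are drawn uniformly from all ordered node pairs that are not in train_pos_edge_index.

```python
num_nodes = 2708             # Cora nodes (from the statistics table)
num_undirected_edges = 5278  # Cora edges (from the statistics table)
train_pos_directed = 8976    # size of train_pos_edge_index (from the text above)

all_pairs = num_nodes * num_nodes                     # ordered pairs, self-loops included
all_pos_directed = 2 * num_undirected_edges           # assumption: both directions stored
held_out_pos = all_pos_directed - train_pos_directed  # validation + test positives
candidates = all_pairs - train_pos_directed           # pairs that may be drawn as negatives

# Assumption: uniform sampling over the candidate pairs.
p_collision = held_out_pos / candidates
expected_collisions = p_collision * train_pos_directed

print(held_out_pos)                  # 1580
print(f"{p_collision:.6f}")          # 0.000216
print(f"{expected_collisions:.2f}")  # 1.94
```

Under these assumptions, roughly two of the 8976 negatives sampled in an epoch would coincide with a held-out positive edge.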