MATLAB has a peak-finding function, `findpeaks`, but it only works on one-dimensional data, and OpenCV has no corresponding function at all. A so-called extremum (peak) is a value larger than the values around it; how large that surrounding neighborhood has to be depends on how strict the extremum needs to be. My implementation is below.
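Stripped of the OpenCV details, that definition is a windowed maximum test. Here is a minimal standard-C++ sketch of just the test, assuming a hypothetical row-major `Grid` type instead of `cv::Mat`; `isWindowMaximum` and `findWindowPeaks` are illustrative names, not part of any library. Unlike the full function below, it uses a single window of radius `radius` instead of growing the window step by step, and it does not treat plateaus specially. `minValue` plays the role of the `current < 2` skip in the real code.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical row-major float image (a stand-in for a CV_32F cv::Mat).
struct Grid {
    int rows;
    int cols;
    std::vector<float> data;  // rows * cols values
    float at(int r, int c) const { return data[static_cast<std::size_t>(r) * cols + c]; }
};

// True if no pixel inside the (2 * radius + 1) x (2 * radius + 1) window centered
// on (r, c) is strictly larger than the center. Pixels outside the image are skipped.
bool isWindowMaximum(const Grid& g, int r, int c, int radius) {
    const float center = g.at(r, c);
    for (int dr = -radius; dr <= radius; ++dr) {
        for (int dc = -radius; dc <= radius; ++dc) {
            const int rr = r + dr;
            const int cc = c + dc;
            if ((dr == 0 && dc == 0) || rr < 0 || cc < 0 || rr >= g.rows || cc >= g.cols) continue;
            if (g.at(rr, cc) > center) return false;
        }
    }
    return true;
}

// Returns (row, col) of every pixel whose value is at least minValue and that is a
// window maximum. Equal values on a plateau all pass, so a flat top gives several peaks.
std::vector<std::pair<int, int>> findWindowPeaks(const Grid& g, int radius, float minValue) {
    std::vector<std::pair<int, int>> peaks;
    for (int r = 0; r < g.rows; ++r) {
        for (int c = 0; c < g.cols; ++c) {
            if (g.at(r, c) >= minValue && isWindowMaximum(g, r, c, radius)) {
                peaks.emplace_back(r, c);
            }
        }
    }
    return peaks;
}
```

For example, with radius 1 on the 3x3 grid `{0,1,0, 1,5,1, 0,1,0}` and `minValue` 2, only the center (1, 1) is reported. Growing the radius is what rejects weaker local maxima that sit near a stronger one.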

/*Calculate the center point of the divisible stone -- the big stone will be a little over divided

* singlechannel----Single channel distance transformed image, type float

* lengthresh-------If there is no larger number than the peak point within the lengthresh pixels around the peak point, it is the true peak; otherwise, do not use this peak value

* peaks------------Find the center point of each divisible stone of the input image, i.e. the peak point that meets the requirements

* */

int OreStoneDegreeCalculate::findPeaks(Mat &singlechannel,int lengthresh,vector<Point> &peaks)

{

if(peaks.size()!=0)

{

peaks.clear();

}

int rownumber = singlechannel.rows;

int colnumber = singlechannel.cols;

if(rownumber < 3 || colnumber < 3){

return -1;

}

for(int start_row = 0;start_row!=rownumber;start_row++)

{

for(int start_col = 0;start_col!=colnumber;start_col++)

{

float current=singlechannel.ptr<float>(start_row)[start_col];

if(current<2)

{

continue;

}

//8 neighbors to brush

//If the value of a pixel is higher than all the values of the surrounding 8 neighborhoods, then it may be a peak point

bool ispeak=true;

for(int rr=-1;rr!=2;rr++)

{

if(!ispeak)

{

break;

}

for(int cc=-1;cc!=2;cc++)

{

if((rr==0 && cc==0)||((start_row+rr<0 || start_col+cc<0)||(start_row+rr>=singlechannel.rows || start_col+cc>=singlechannel.cols)))

{

continue;

}

if(current<singlechannel.ptr<float>(start_row+rr)[start_col+cc])

{

ispeak=false;

break;

}

}

}

//Do you want to reset the chain point? Do you want to lat peak

//Make a second judgment on the initially found peak to see whether it is a true peak or a false peak

if(ispeak)

{

//If all points in the range of lengthresh around this peak point are smaller than the value of the peak point, then this is a true peak point, that is, the stone center

//If there is more than this peak point in the surrounding length range of this peak point, then this peak point is false.

int length=2;

bool ignore=false;

while((!ignore) && (length<lengthresh))

{

for(int rr=-1*length;rr!=1*length+1;rr++)

{

if(ignore)

{

break;

}

for(int cc=-1*length;cc!=1*length+1;cc++)

{

if((rr==0 && cc==0)||((start_row+rr<0 || start_col+cc<0)||(start_row+rr>=singlechannel.rows || start_col+cc>=singlechannel.cols)))

{

continue;

}

if(current<singlechannel.ptr<float>(start_row+rr)[start_col+cc])

{

ignore=true;

break;

}

if(current==singlechannel.ptr<float>(start_row+rr)[start_col+cc])

{

singlechannel.ptr<float>(start_row)[start_col]=1;

ignore=true;

break;

}

}

}

length++;

}

if(!ignore)

{

peaks.push_back(Point(start_col,start_row));

}

}

//The truth of this peak point has been judged

//The correction value is also a vacuum brake breaking test

}

}

//All the points in the image are traversed and all the peaks are found.

//Test all points of the image

return 0;

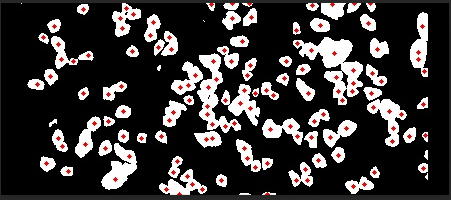

}For the conglutinated target, I use this extremum method to find the extremum and then + watershed segmentation. The effect is better than the segmentation method I tried before, and it is simple and time-consuming.

In this way the center points are fairly accurate, so the watershed step produces less over-segmentation.

This method still needs further optimization, though: it does not handle a single large target well, because one large target produces several extrema. I won't share the optimized code, since it is for internal use.
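Since the optimized version is not public, the following is only one common way to attack the "several extrema on one large target" problem, not the method used internally. The idea: a distance-transform value is the radius of the largest circle centered at that point that fits inside the object, so a weaker peak that falls inside a stronger peak's circle probably belongs to the same stone. The sketch visits peaks from strongest to weakest and drops any peak covered by an already kept one. `Peak` and `mergeNearbyPeaks` are hypothetical names, and `ratio` is an assumed tuning factor: smaller values keep more peaks, larger values merge more aggressively and can start merging genuinely separate, touching stones.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical peak record: position plus the distance-transform value at that position.
struct Peak {
    int row;
    int col;
    float value;  // distance to the nearest background pixel (inscribed radius)
};

// Greedy merge: visit peaks from strongest to weakest and drop any peak that lies
// inside the circle of radius (value * ratio) around an already kept peak.
std::vector<Peak> mergeNearbyPeaks(std::vector<Peak> peaks, float ratio) {
    std::sort(peaks.begin(), peaks.end(),
              [](const Peak& a, const Peak& b) { return a.value > b.value; });
    std::vector<Peak> kept;
    for (const Peak& p : peaks) {
        bool covered = false;
        for (const Peak& k : kept) {
            const float dr = static_cast<float>(p.row - k.row);
            const float dc = static_cast<float>(p.col - k.col);
            if (std::sqrt(dr * dr + dc * dc) < k.value * ratio) {
                covered = true;  // p sits inside a stronger peak's inscribed circle
                break;
            }
        }
        if (!covered) {
            kept.push_back(p);
        }
    }
    return kept;
}
```

Each peak's `value` would be read from the same distance image that `findPeaks` scans, so the filter can run directly on its output.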

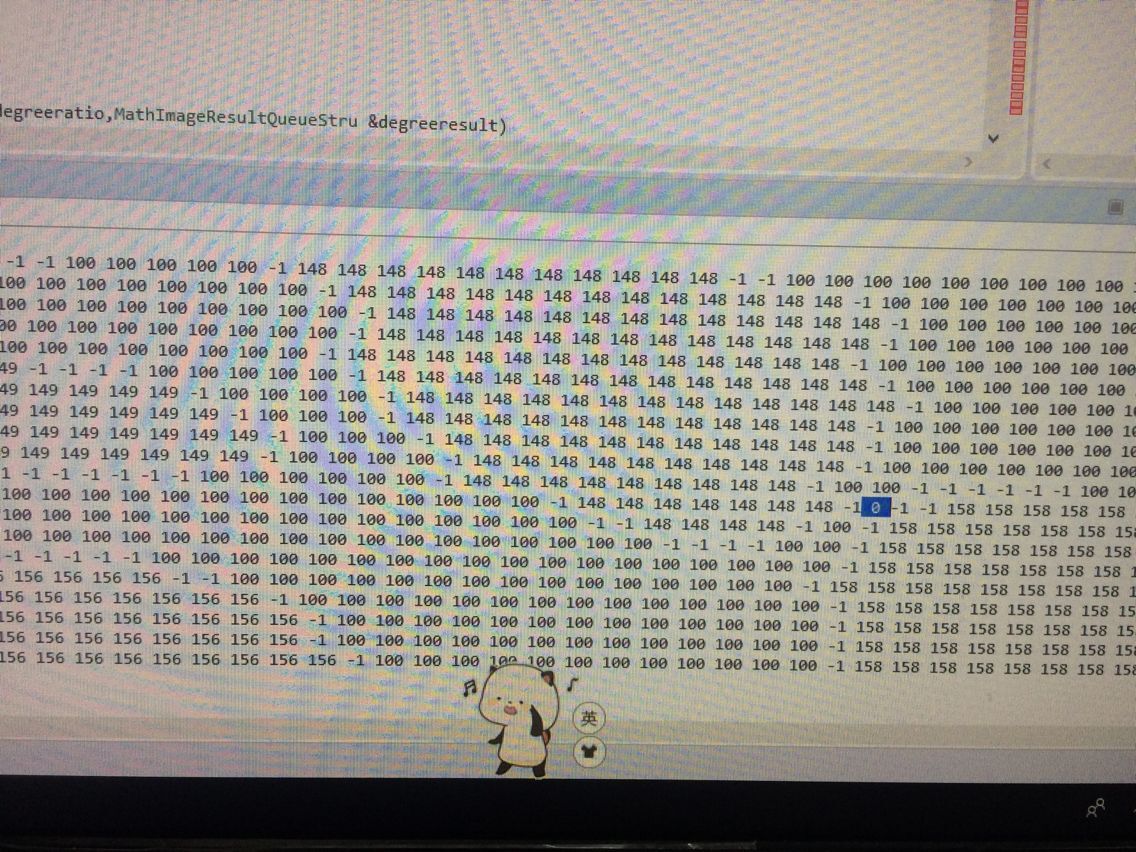

One puzzle came up while debugging today: why does the watershed result still contain unknown areas that were never assigned?

There are still pixels with value 0 (the unknown-area value) in what is clearly the output of `watershed`:

```cpp
#include <opencv2/opencv.hpp>
#include <vector>

using namespace cv;
using namespace std;

/* Watershed segmentation
 *
 * srcmatbw -------- single-channel binary image (CV_8UC1, 0/255)
 * everycenters ---- centers of the separable stones: black background 0, white centers 255 (CV_8UC1)
 * imglabels ------- output label image (CV_32SC1): background is 100, boundaries are -1,
 *                   separable stones are 101~100+N (some 0 pixels may remain)
 */
int watershedSegmentProc(Mat &srcmatbw, Mat &everycenters, Mat &imglabels)
{
    // Optional sanity check on the amount of foreground (disabled):
    // int not_zero_count = countNonZero(srcmatbw);
    // float white_count_thre = srcmatbw.rows * srcmatbw.cols;
    // white_count_thre *= 0.8;
    // if ((not_zero_count < 20) || (not_zero_count > (int)white_count_thre))
    // {
    //     return 2;
    // }

    Mat element = getStructuringElement(MORPH_ELLIPSE, Size(11, 11));  // not used below
    Mat element2 = getStructuringElement(MORPH_ELLIPSE, Size(3, 3));

    // Grow each center point into a small seed blob
    Mat binary_dilate;  // every center
    dilate(everycenters, binary_dilate, element2, Point(-1, -1), 1);

    // watershed() needs an 8-bit 3-channel image: replicate the binary mask
    Mat binary_8UC3;
    vector<Mat> resultmats{srcmatbw, srcmatbw, srcmatbw};
    merge(resultmats, binary_8UC3);

    // Unknown area = foreground minus seeds, i.e. the region where boundaries will be drawn
    // (seed pixels lying outside srcmatbw also end up marked as unknown)
    Mat unknown;
    bitwise_xor(srcmatbw, binary_dilate, unknown);

    // Build the marker image from the connected seed components
    Mat imgstats, imgcentroid;
    connectedComponentsWithStats(binary_dilate, imglabels, imgstats, imgcentroid);  // connected-component labels
    imglabels.convertTo(imglabels, CV_32SC1);  // watershed() needs CV_32SC1 markers
    imglabels = imglabels + 100;  // background (label 0) becomes 100, seeds 1..N become 101..100+N

    for (int i = 0; i < unknown.rows; i++) {
        const uchar* ptr = unknown.ptr<uchar>(i);
        int* lab = imglabels.ptr<int>(i);
        for (int j = 0; j < unknown.cols; j++) {
            if (255 == ptr[j]) {
                lab[j] = 0;  // unknown area pixels are 0
            }
        }
    }

    // Watershed segmentation: floods the 0 pixels starting from the labeled seeds
    watershed(binary_8UC3, imglabels);
    return 0;
}
```

I still don't know where those 0 pixels come from!
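One plausible explanation, based on my reading of OpenCV's `watershed` source and not verified against this data: when a flooded pixel touches two different labels it becomes a boundary pixel (-1), and boundary pixels are not used to flood their own neighbors. A pocket of unknown (0) pixels that can only be reached through boundary pixels is therefore never assigned and keeps its 0. If that is the cause, the result can be post-processed. The sketch below assumes the labels have been copied into a plain row-major `std::vector<int>` (for a continuous `CV_32SC1` Mat the same loop could run over `imglabels.ptr<int>(0)`); `fillUnassigned` is a hypothetical helper, not an OpenCV function. It gives each leftover 0 pixel the label of one of the nearest positive pixels, letting the search pass through -1 pixels without changing them.

```cpp
#include <queue>
#include <vector>

// Hypothetical post-processing helper (not part of OpenCV).
// labels: row-major watershed output with rows * cols entries, where
//   > 0 are region labels, -1 are boundaries and 0 are leftover unknown pixels.
// Each 0 pixel receives the label of one of its nearest positive pixels, measured
// along 4-connected paths that may cross -1 pixels. Boundary pixels stay -1 and
// positive labels are never changed.
void fillUnassigned(std::vector<int>& labels, int rows, int cols) {
    // carried[i] is the label the breadth-first search brought to pixel i (0 = not reached yet)
    std::vector<int> carried(labels.size(), 0);
    std::queue<int> frontier;
    for (int i = 0; i < rows * cols; ++i) {
        if (labels[i] > 0) {
            carried[i] = labels[i];
            frontier.push(i);
        }
    }
    const int dr[4] = {-1, 1, 0, 0};
    const int dc[4] = {0, 0, -1, 1};
    while (!frontier.empty()) {
        const int i = frontier.front();
        frontier.pop();
        const int r = i / cols;
        const int c = i % cols;
        for (int k = 0; k < 4; ++k) {
            const int nr = r + dr[k];
            const int nc = c + dc[k];
            if (nr < 0 || nc < 0 || nr >= rows || nc >= cols) continue;
            const int j = nr * cols + nc;
            if (carried[j] != 0) continue;  // already reached
            carried[j] = carried[i];
            if (labels[j] == 0) labels[j] = carried[i];  // assign a leftover unknown pixel
            frontier.push(j);  // -1 pixels are crossed but keep their value
        }
    }
}
```

Whether such a pocket should join a neighboring stone or simply be treated as boundary is a judgment call; setting the leftover 0 pixels to -1 instead is an equally simple option.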