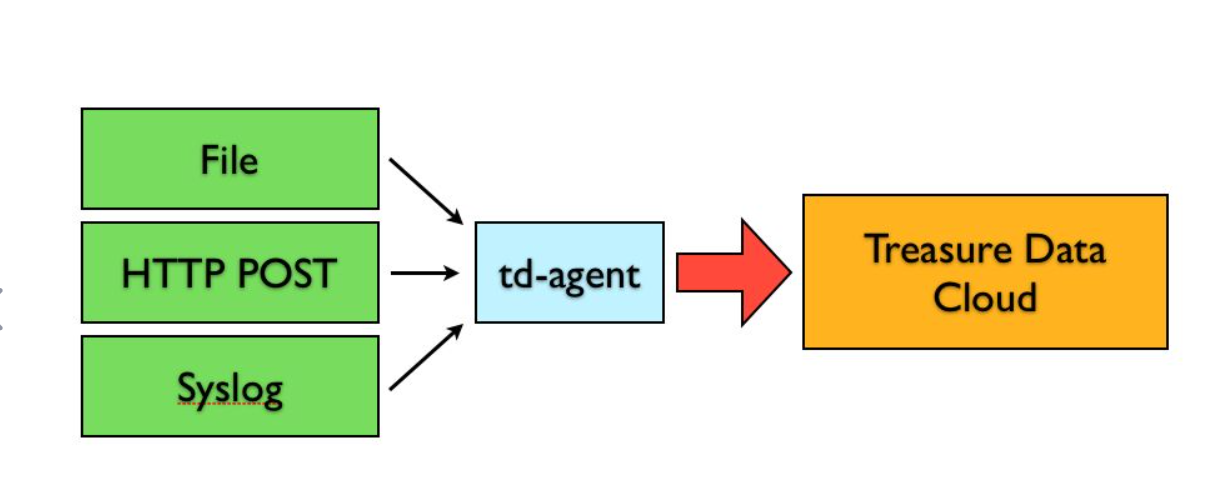

1. What is td-agent

td-agent is a log collector: it is the packaged distribution of Fluentd maintained by Treasure Data, and it provides a rich set of plug-ins for different data sources, output destinations, and so on.

In use, logs from different sources are first sent to Fluentd through simple configuration. Fluentd then forwards them, through the plug-ins named in the configuration, to different destinations such as files, SaaS platforms, databases, or even another Fluentd instance.

2. How to install td-agent

Linux system: CentOS

2.1 Run the install script

curl -L https://toolbelt.treasuredata.com/sh/install-redhat-td-agent3.sh | sh

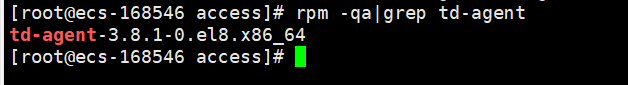

2.2 Check whether it is installed

rpm -qa | grep td-agent

2.3 Start commands

Start td-agent with systemd: systemctl start td-agent

With the init script:

Start service: /etc/init.d/td-agent start

View service status: /etc/init.d/td-agent status

Stop service: /etc/init.d/td-agent stop

Restart service: /etc/init.d/td-agent restart

2.4 Default configuration file path

/etc/td-agent/td-agent.conf

2.5 Default log file path

/var/log/td-agent/td-agent.log

3. Terminology

source: specifies the data source (input)

match: specifies the output destination

filter: specifies an event processing step

system: sets system-wide configuration

label: groups outputs and filters

@include: includes other configuration files in the configuration file

Plug-ins: Fluentd uses plug-ins to collect and send logs. Some plug-ins are built in; any others must be installed before they can be used.

4. Configuration file walkthrough

# Receive events from 20000/tcp

# This is used by log forwarding and the fluent-cat command

<source>

@type forward

port 20000

</source>

# http://this.host:8081/myapp.access?json={"event":"data"}

<source>

@type http

port 8081

</source>

<source>

@type tail

path /root/shell/test.log

tag myapp.access

</source>

# Match events tagged with "myapp.access" and

# store them to /var/log/td-agent/access.%Y-%m-%d

# Of course, you can control how you partition your data

# with the time_slice_format option.

<match myapp.access>

@type file

path /var/log/td-agent/access

</match>

source: configures where the log data comes from

@type: specifies the input plug-in, that is, where the data comes from

forward: from another Fluentd instance

http: data passed in an HTTP request

tail: from a log file

port: the port opened to receive data sent by other machines

path: the location of the file to read

tag: the tag attached to the data, which is matched against the pattern configured in match
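To try the http source, an event can be posted with the tag as the URL path and the record in a form field named json, mirroring the URL shown in the configuration comment. The following is a minimal Python sketch, assuming td-agent is listening on port 8081 of localhost. The helper name build_event_request is hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.parse
import urllib.request


def build_event_request(host, port, tag, record):
    """Build a POST request for Fluentd's http input (helper name is illustrative)."""
    url = f"http://{host}:{port}/{tag}"
    # The record travels as a form field named "json", as in the URL comment.
    body = urllib.parse.urlencode({"json": json.dumps(record)}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST")


req = build_event_request("localhost", 8081, "myapp.access", {"event": "data"})
print(req.full_url)  # http://localhost:8081/myapp.access

# To actually send it (assumes a running td-agent with the http source):
# urllib.request.urlopen(req, timeout=5)
```

The tag in the path (myapp.access) is what the match section is later compared against, so it has to agree with the pattern configured there.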

match: configures where the data is forwarded

myapp.access: the match pattern; events whose tag matches it are handled by this section

@type: the output plug-in, for example Kafka, a local file, a database, or MongoDB

path: when outputting to a file, the path of that file. Other output types have their own specific settings. For example, when forwarding to Kafka, the Kafka-related settings are also placed inside the match section, as in the examples in section 6.

store: the store sections inside a match section. Each store specifies one destination for the events with the matched tag, so the same data can be written, for example, to both Kafka and a local file.
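The routing between tags, match sections, and stores can be pictured with a small simulation. This is an illustrative Python sketch, not Fluentd's implementation: the names tag_matches and route are hypothetical, only the * (exactly one tag part) and ** (zero or more tag parts) wildcards are modelled, and plain lists stand in for Kafka and a file. It assumes that an event goes to the first match section whose pattern fits its tag, and that a copy output hands the event to every store.

```python
def tag_matches(pattern, tag):
    """Return True if a dot-separated tag fits a match pattern (simplified)."""
    def walk(p, t):
        if not p:
            return not t
        head, rest = p[0], p[1:]
        if head == "**":
            # "**" may absorb zero or more tag parts.
            return any(walk(rest, t[i:]) for i in range(len(t) + 1))
        if not t:
            return False
        # "*" stands for exactly one tag part; anything else must be equal.
        return (head == "*" or head == t[0]) and walk(rest, t[1:])
    return walk(pattern.split("."), tag.split("."))


def route(match_blocks, tag, record):
    """Deliver the record to every store of the first block matching the tag."""
    for pattern, stores in match_blocks:
        if tag_matches(pattern, tag):
            for store in stores:  # behaves like a copy output
                store.append(record)
            return pattern
    return None  # no match section handles this tag


kafka_store, file_store = [], []  # stand-ins for the real destinations
blocks = [("mytail", [kafka_store, file_store])]
matched = route(blocks, "mytail", {"message": "hello"})
```

After the call, both kafka_store and file_store hold the same record, which is the effect the two store sections are meant to achieve.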

<source>

@type tail

format none

path /var/log/apache2/access_log

pos_file /var/log/apache2/access_log.pos

tag mytail

</source>

5. Explanation of selected parameters

format: configures the expression used to parse each line of data. Only lines that match the format expression are passed on to the store sections in match.

@type tail (written as type tail in older configurations): tail is one of Fluentd's built-in input plug-ins. It continuously reads newly appended lines from the source file, similar to the Linux tail command. Other input methods, such as http and forward, can be used instead. To use a different input plug-in, replace tail with that plug-in's name, for example type tail_ex (note the underscore in tail_ex).

format apache: uses Fluentd's built-in Apache log parser. You can also configure the expression yourself.

path /var/log/apache2/access_log: the location of the log file to collect.

pos_file /var/log/apache2/access_log.pos: this parameter is strongly recommended. The access_log.pos file is generated automatically, but Fluentd needs write permission for it, because the position last read in access_log is written to this file. After the Fluentd service goes down and restarts, it can then continue collecting from where it stopped, which avoids losing log data and keeps the collected data complete.
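The role of pos_file can be illustrated with a short Python sketch. It is a simplification under stated assumptions: read_new_lines is a hypothetical helper, and the position file here stores a single decimal byte offset, whereas Fluentd's real pos_file has its own format. Log rotation and partially written lines are not handled. The idea is the same: remember how far the log was read and resume from there after a restart.

```python
import os
import tempfile


def read_new_lines(log_path, pos_path):
    """Return lines appended since the last call, tracking the offset in pos_path."""
    offset = 0
    if os.path.exists(pos_path):
        with open(pos_path) as f:
            offset = int(f.read().strip() or 0)
    with open(log_path, "rb") as log:
        log.seek(offset)
        data = log.read()
        new_offset = log.tell()
    # Persist the position so a restarted collector continues from here.
    with open(pos_path, "w") as f:
        f.write(str(new_offset))
    return data.decode("utf-8").splitlines()


# Demonstration with temporary files (paths are illustrative only).
workdir = tempfile.mkdtemp()
log_path = os.path.join(workdir, "access_log")
pos_path = log_path + ".pos"

with open(log_path, "w") as f:
    f.write("line 1\n")
first = read_new_lines(log_path, pos_path)

with open(log_path, "a") as f:
    f.write("line 2\n")
second = read_new_lines(log_path, pos_path)  # stands in for a read after restart
```

Because the offset survives between calls, the second read returns only the newly appended line rather than the whole file. Without the position file, a restarted collector would have no record of where it stopped.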

6. Configuration examples

6.1 Send data received via HTTP to both a log file and Kafka

# http://this.host:8081/mytail?json={"event":"data"}

<source>

@type http

port 8081

</source>

<match mytail>

@type copy

<store>

@type kafka

brokers localhost:9092

default_topic test1

default_message_key message

ack_timeout 2000

flush_interval 1

required_acks -1

</store>

<store>

@type file

path /var/log/td-agent/access

</store>

</match>

6.2 Send data read from a file to both a log file and Kafka

<source>

@type tail

format none

path /var/log/apache2/access_log

pos_file /var/log/apache2/access_log.pos

tag mytail

</source>

<match mytail>

@type copy

<store>

@type kafka

brokers localhost:9092

default_topic test1

default_message_key message

ack_timeout 2000

flush_interval 1

required_acks -1

</store>

<store>

@type file

path /var/log/td-agent/access

</store>

</match>