A shell script for web monitoring; the complete set is here. The decision rules have changed several times. For the various combinations, see the old version, because I am too lazy to write them all up again.

Link to the old version: https://blog.51cto.com/junhai/2407485

This version consists of three scripts. Below I write down how they work.

The outage-duration (downtime) calculation is described here: https://blog.51cto.com/junhai/2430313
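The third script sources `/root/downtime.sh` and calls `fail_time`, but that file is not reproduced in this post. As a rough idea of how an outage duration can be computed, here is a hypothetical sketch: `DOWN_FILE`, `mark_down`, `format_duration` and `downtime_for` are illustrative names, not the author's code. It stores the epoch time at which a URL first went down and turns the difference into days, hours, minutes and seconds when the URL recovers.

```bash
#!/bin/bash
# Hypothetical sketch: the real downtime.sh / fail_time are not shown in the post.
# DOWN_FILE is an assumed state file with one "EPOCH URL" line per site that is down.
DOWN_FILE=${DOWN_FILE:-/tmp/url.downsince}

# mark_down URL [NOW]: remember when URL first went down; repeated calls keep the first time
mark_down() {
    local url=$1 now=${2:-$(date +%s)}
    touch "$DOWN_FILE"
    awk -v u="$url" '$2 == u {found = 1} END {exit !found}' "$DOWN_FILE" ||
        echo "$now $url" >> "$DOWN_FILE"
}

# format_duration SECONDS: e.g. 3723 -> "0d 1h 2m 3s"
format_duration() {
    local s=$1
    printf '%dd %dh %dm %ds\n' $((s / 86400)) $((s % 86400 / 3600)) $((s % 3600 / 60)) $((s % 60))
}

# downtime_for URL [NOW]: print the outage length and forget the URL; fails if nothing was recorded
downtime_for() {
    local url=$1 now=${2:-$(date +%s)} since
    since=$(awk -v u="$url" '$2 == u {print $1; exit}' "$DOWN_FILE" 2>/dev/null)
    [ -n "$since" ] || return 1
    awk -v u="$url" '$2 != u' "$DOWN_FILE" > "$DOWN_FILE.tmp" && mv "$DOWN_FILE.tmp" "$DOWN_FILE"
    echo "Downtime: $(format_duration $((now - since)))"
}
```

In the scripts below, `mark_down` would sit where check.sh appends to url.delete-list `url.del`, and `downtime_for` where checkadd.sh builds the recovery message.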

Sending alerts through the WeChat robot: https://blog.51cto.com/junhai/2424374
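The scripts source `/root/weixin.sh` for `wx_web` and `wx_ah`, which are not shown here. The sketch below is a hypothetical stand-in. It assumes the Enterprise WeChat (WeCom) group-robot webhook, which accepts a JSON `text` message whose `mentioned_mobile_list` can @-mention people. `WX_KEY`, `json_escape`, `wx_payload` and `wx_send` are placeholder names. Check the official robot documentation before relying on the field names.

```bash
#!/bin/bash
# Hypothetical stand-in for weixin.sh, assuming a WeCom group robot webhook.
# WX_KEY (the robot's key) and all function names are placeholders.

# json_escape TEXT: escape backslashes, double quotes, newlines and tabs for a JSON string
json_escape() {
    local s=$1 bs='\'
    s=${s//"$bs"/"$bs$bs"}    # backslash first, so the escapes added below are not doubled
    s=${s//\"/"$bs\""}
    s=${s//$'\n'/"${bs}n"}
    s=${s//$'\t'/"${bs}t"}
    printf '%s' "$s"
}

# wx_payload TEXT [MOBILE...]: JSON body for a text message that @-mentions the given mobile numbers
wx_payload() {
    local content mobiles="" m
    content=$(json_escape "$1")
    shift
    for m in "$@"; do
        mobiles+="${mobiles:+,}\"$(json_escape "$m")\""
    done
    printf '{"msgtype":"text","text":{"content":"%s","mentioned_mobile_list":[%s]}}\n' "$content" "$mobiles"
}

# wx_send TEXT [MOBILE...]: post the message to the robot webhook (needs network access)
wx_send() {
    [ -n "$WX_KEY" ] || { echo "WX_KEY is not set" >&2; return 1; }
    curl -s -m 10 -H 'Content-Type: application/json' \
        -d "$(wx_payload "$@")" \
        "https://qyapi.weixin.qq.com/cgi-bin/webhook/send?key=${WX_KEY}"
}
```

For example, `WX_KEY=... wx_send "example.com is unreachable" 13800000000` would post the alert and @-mention the owner of that (placeholder) mobile number.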

Because I can't use a database, I keep the state in three txt files like this (honestly, I just don't know a better way... --!). The multiple sed calls could be combined into a single command, but I am too lazy to spend time optimizing them.
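As noted above, each `s|...||` plus `/^$/d` pair in the scripts can be one sed command with two `-e` expressions. Here is a minimal sketch, assuming GNU sed (`-i` without a backup suffix). `remove_url` and `remove_url_exact` are hypothetical helper names. The second variant is stricter: it deletes only lines that match the URL as a whole line, so a longer URL that merely contains the target is left untouched.

```bash
#!/bin/bash
# Hypothetical helpers; both need GNU sed because of "-i" without a backup suffix.

# remove_url FILE URL: the scripts' two calls
#   sed -i "s|$url||" FILE; sed -i '/^$/d' FILE
# folded into one sed run (a line emptied by s is deleted in the same pass)
remove_url() {
    sed -i -e "s|$2||" -e '/^$/d' "$1"
}

# remove_url_exact FILE URL: delete only whole-line matches of URL, plus blank lines
# (URL is still a regex here, so "." matches any character)
remove_url_exact() {
    sed -i -e "\|^$2\$|d" -e '/^$/d' "$1"
}
```

With a list containing `a.com` and `www.a.com`, removing `a.com` via the substring form leaves a mangled `www.` line behind. The whole-line form keeps `www.a.com` intact.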

url.txt > url.del (stores unreachable addresses; analyzed afterwards by the second script) > url.add (the third script checks whether each address has recovered)
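The life cycle of a URL across these files amounts to moving one line from one list to another. A minimal sketch, using a hypothetical `move_url` helper. Unlike the scripts' `sed "s|$url||"`, it matches the URL as a fixed string on the whole line (`grep -vxF`), so dots are not treated as regex wildcards and longer URLs containing the target are left alone.

```bash
#!/bin/bash
# move_url SRC DST URL (hypothetical): remove URL from SRC (exact whole-line,
# fixed-string match) and append it to DST unless DST already lists it.
move_url() {
    local src=$1 dst=$2 url=$3 tmp
    [ -f "$src" ] || return 1
    touch "$dst"
    tmp=$(mktemp) || return 1
    grep -vxF -- "$url" "$src" > "$tmp"
    cat "$tmp" > "$src"    # rewrite in place, keeping SRC's inode and permissions
    rm -f "$tmp"
    grep -qxF -- "$url" "$dst" || echo "$url" >> "$dst"
}
```

The hand-off done by the second script corresponds roughly to `move_url url.txt url.add "$url"`, and the recovery step in the third script to `move_url url.add url.txt "$url"`.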

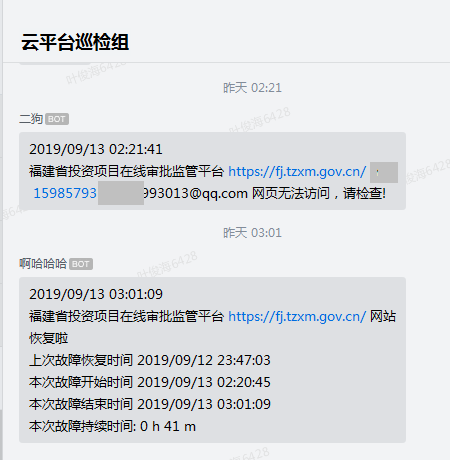

The monitoring works as follows:

For external systems, alerts are sent by mail. The government cloud hosts a large number of monitored websites, so a lot of mail goes out. As a result, the sending mailbox has often been flagged as a spam sender and has been blocked many times.

One alert and one recovery notice: this prevents repeated alerts, so a single outage produces only two emails. This mode is controlled by the third script and is used for web monitoring of the government cloud platform.
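The "one alert, one recovery" rule boils down to notifying only when a site changes state. Here is a minimal sketch, assuming a hypothetical state file that lists the URLs currently known to be down (url.add plays a similar role above). `notify_transition` is an illustrative name: it prints `ALERT` only on the up-to-down transition and `RECOVERED` only on the down-to-up transition.

```bash
#!/bin/bash
# notify_transition DOWN_LIST URL STATUS (hypothetical); STATUS is "up" or "down".
# Prints ALERT only when URL goes from up to down and RECOVERED only when it comes
# back, so one outage yields exactly two notifications however often it is checked.
notify_transition() {
    local list=$1 url=$2 status=$3 tmp
    touch "$list"
    if [ "$status" = down ]; then
        if ! grep -qxF -- "$url" "$list"; then
            echo "$url" >> "$list"     # first failure: remember it and alert once
            echo ALERT
        fi
    elif grep -qxF -- "$url" "$list"; then
        tmp=$(mktemp) || return 1
        grep -vxF -- "$url" "$list" > "$tmp"
        cat "$tmp" > "$list"           # forget the URL so the next outage alerts again
        rm -f "$tmp"
        echo RECOVERED
    fi
}
```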

Alerts stop after three failed checks, followed by one recovery alert; alerts @-mention the personnel responsible for the system. This mode is controlled by the second script and is used for monitoring Changwei's internal systems. Mail is optional; internally, Enterprise WeChat is faster.
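The "stop after three failures" rule can be sketched with the same `sort | uniq -c` counting that checkdel.sh applies to url.del. The function names and the default limit of 3 are hypothetical. The failure log stands in for url.del, with one URL appended per failed round. This shows the idea rather than the author's exact logic.

```bash
#!/bin/bash
# failure_count LOG URL (hypothetical): how many times URL appears in LOG,
# using "sort | uniq -c", which turns the log into "count URL" lines
failure_count() {
    local log=$1 url=$2 n
    n=$(sort "$log" | uniq -c | awk -v u="$url" '$2 == u {print $1}')
    echo "${n:-0}"
}

# record_failure LOG URL [MAX]: log one more failure for URL, then succeed (send an
# alert) only while the number of failures is at most MAX; after that, stay quiet
record_failure() {
    local log=$1 url=$2 max=${3:-3}
    echo "$url" >> "$log"
    [ "$(failure_count "$log" "$url")" -le "$max" ]
}
```

Clearing the log, as the nightly `echo > url.del` cron entry does, resets the count.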

#!/bin/bash

#20190914

#QQ450433231

. /root/weixin.sh

cur_time(){

date "+%Y/%m/%d %H:%M:%S"

}

systemname(){

name=`cat url2.txt|grep $url|wc -l`

if [ $name -eq 1 ];then

cat url2.txt|grep -w $url

else

echo "$url"

fi

}

[ ! -f /root/url.txt ] && echo "url.txt file does not exist" && exit 1

sed -i '/^$/d' url.txt

while read url

do

[ -z $url ] && echo "url.txt Existence of spaces Check file format" && exit 1

for ((i=1;i<7;i++))

do

rule=`curl -i -s -k -L -m 10 $url|grep -w "HTTP/1.1 200"|wc -l`

if [ $rule -eq 1 ];then

echo "$(cur_time) The first $i Secondary inspection $url Successful Web Access" >> check.log

break

elif [ $i = 5 ];then

echo $url >> url.del

info=`echo -e "$(cur_time) \n$(systemname) Web page can not be accessed, please check!"`

wx_web

echo -e "$(cur_time) \n\n$(systemname) Web page can not be accessed, please check!"|mail -s "[Important Alert] Web page unavailable" 450433231@qq.com

echo "$(cur_time) $(systemname) Webpage $(expr $i \* 3)Seconds can not be accessed, please check!" >> checkfail.log

sh /root/checkdel.sh #The second script

else

echo "$(cur_time) The first $i Secondary inspection $url Web page access failure" >> checkfail.log

sleep 3

fi

done

done < /root/url.txt

sh /root/checkadd.sh #The third script. I like to have the third script separately set at intervals of 1 minute.#!/bin/bash

. /root/weixin.sh

cur_time(){

date "+%Y/%m/%d %H:%M:%S"

} #Display time

sed -i '/^$/d' url.del

sed -i '/^$/d' url.delout

cat url.del|sort|uniq -c >> url.delout

while read line

do

i=`echo $line|awk '{print$1}'`

newurl=`echo $line|awk '{print$2}'`

if [ -z $newurl ];then

continue

elif [ $i -eq 1 ];then

echo $newurl >> url.add

sed -i "s|$newurl||" url.txt

sed -i "s|$newurl||" url.del

sed -i '/^$/d' url.txt

sed -i '/^$/d' url.del

#info=`echo-e'$(cur_time)\n $i alarm site $newurl not restored, suspend sending alarms' ___________.`

#wx_web

#echo'$(cur_time) $i warning website $newurl not restored suspend sending alarm'| mail-s' [suspend alarm]"450433231@qq.com

#echo "$(cur_time) website $newurl not restored suspended alarm" > checkfail.log

sed -i "s|$newurl||" url.delout

sed -i '/^$/d' url.delout

else

echo > url.del

continue

fi

done< url.delout

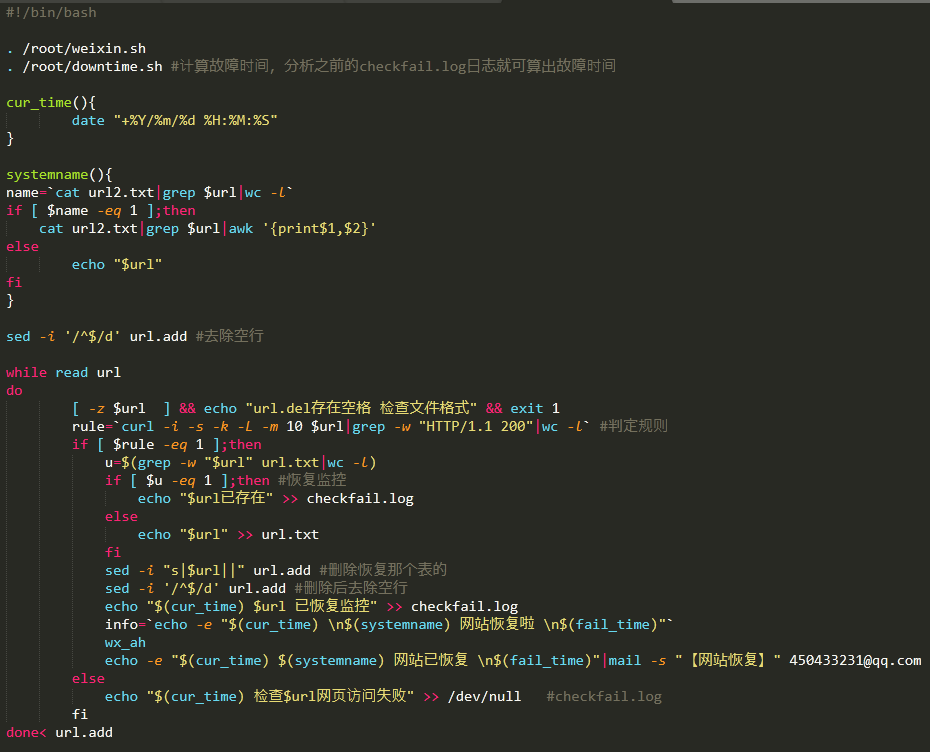

echo > url.delout#!/bin/bash

. /root/weixin.sh

. /root/downtime.sh

cur_time(){

date "+%Y/%m/%d %H:%M:%S"

}

systemname(){

name=`cat url2.txt|grep $url|wc -l`

if [ $name -eq 1 ];then

cat url2.txt|grep $url|awk '{print$1,$2}'

else

echo "$url"

fi

}

sed -i '/^$/d' url.add

while read url

do

[ -z $url ] && echo "url.del Existence of spaces Check file format" && exit 1

rule=`curl -i -s -k -L -m 10 $url|grep -w "HTTP/1.1 200"|wc -l`

if [ $rule -eq 1 ];then

u=$(grep -w "$url" url.txt|wc -l)

if [ $u -eq 1 ];then

echo "$url Already exist" >> checkfail.log

else

echo "$url" >> url.txt

fi

sed -i "s|$url||" url.add

sed -i '/^$/d' url.add

echo "$(cur_time) $url Monitoring has been restored" >> checkfail.log

info=`echo -e "$(cur_time) \n$(systemname) The website has been restored \n$(fail_time)"`

wx_ah

echo -e "$(cur_time) $(systemname) The website has been restored \n$(fail_time)"|mail -s "[Website Restoration]" 450433231@qq.com

else

echo "$(cur_time) inspect $url Web page access failure" >> /dev/null #checkfail.log

fi

done< url.addLater, I wrote a silly interactive script, which will be used to add and delete the sh script adds to the monitoring website to add the monitoring website in batches.

```bash
#!/bin/bash
#20190831
# Manage the monitoring list (url.txt) and the contact list (url2.txt)

cd /root || exit 1    # same directory the monitoring scripts use

case $1 in
add )
    [ -z "$2" ] && echo "Enter the website to monitor after add. Usage: add baidu.com" && exit 1
    if grep -qxF -- "$2" url.txt; then
        echo "$2 website already exists"
    else
        echo "$2" >> url.txt
        echo "$2 website has been added to the monitoring list"
    fi
    ;;
del )
    [ -z "$2" ] && echo "Enter the website to stop monitoring after del. Usage: del baidu.com" && exit 1
    if grep -qxF -- "$2" url.txt; then
        sed -i "\|^${2}\$|d" url.txt    # delete the matching whole line
        echo "$2 website deleted"
    else
        echo "$2 website not found"
    fi
    ;;
update )
    vi url2.txt
    ;;
dis )
    sort url.txt | uniq -c
    ;;
back )
    cp url.txt url.bk
    echo "Monitoring list backed up to url.bk"
    ;;
disuniq )
    cp url.txt url.bk
    sort -u url.txt | sed '/^$/d' > url.new && mv url.new url.txt
    echo "Duplicates removed from the monitoring list; backup file: url.bk"
    ;;
* )
    echo "-----------$(date)-----------------"
    echo "sh $0 add      Add a monitored website"
    echo "sh $0 del      Delete a monitored website"
    echo "sh $0 update   Edit the websites' contact information"
    echo "sh $0 dis      Display the monitoring list"
    echo "sh $0 back     Back up the monitoring list"
    echo "sh $0 disuniq  Remove duplicates from the monitoring list"
    echo "----------------------------------"
    ;;
esac
```

Incidentally, this is the crontab schedule I usually use; see the old blog post for details:

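```
# check.sh uses bash-only syntax (for ((...)), echo -e), so the scripts are run with bash rather than sh
*/5 * * * * bash /root/check.sh
*/1 * * * * bash /root/checkadd.sh
0 0 * * * echo > /root/url.del
```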
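Each unreachable site can hold check.sh for roughly a minute (five attempts with a 10-second curl timeout and 3-second pauses in between). A run over several dead sites may therefore still be going when cron starts the next one five minutes later. One hedged option, assuming util-linux `flock` is installed, is to wrap the job so that an overlapping run is skipped. The lock file path is an arbitrary choice:

```
# flock -n gives up at once (skipping this run) if the previous check.sh still holds the lock
*/5 * * * * flock -n /tmp/check.sh.lock bash /root/check.sh
```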