I. Preparation

I have a website running on a Google Cloud VM, so I plan to install ELK on a server I purchased to collect and analyze the nginx access logs.

- Operating System Version: CentOS 7

- ELK Version: 7.1

1.1. Server environment preparation

Here we again install from the official yum repository, which is the simplest option. First, import the GPG key and configure the official repository:

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

cat >> /etc/yum.repos.d/elasticsearch.repo <<EOF
[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
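Because `cat >>` appends, running the command a second time leaves a duplicate `[elasticsearch-7.x]` section in the file. A minimal sketch of an idempotent variant, assuming a POSIX shell; the function name `add_elastic_repo` is hypothetical:

```shell
#!/bin/sh
# add_elastic_repo FILE
# Appends the Elastic 7.x yum repository definition to FILE, but only if
# FILE does not already contain the [elasticsearch-7.x] section, so the
# step can safely be re-run.
add_elastic_repo() {
    if [ -f "$1" ] && grep -qF '[elasticsearch-7.x]' "$1"; then
        return 0
    fi
    # Quoted 'EOF' so the shell does not expand anything in the body.
    cat >> "$1" <<'EOF'
[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
}
```

Usage: `add_elastic_repo /etc/yum.repos.d/elasticsearch.repo`.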

Stop the firewall and disable it at boot:

systemctl stop firewalld.service
systemctl disable firewalld.service
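Turning firewalld off is the quickest route on a test machine. If you would rather keep it running, a minimal alternative is to open only the ports this setup uses. The sketch below assumes firewalld's default zone; `open_elk_ports`, `run` and the `DRY_RUN` switch are hypothetical names, and `DRY_RUN=1` only prints the commands:

```shell
#!/bin/sh
# Ports used in this post: 9200 (Elasticsearch HTTP), 5601 (Kibana) and
# 5044 (the Beats input of Logstash).
ELK_PORTS="9200 5601 5044"

# run CMD [ARGS...]
# Executes the command, or only prints it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# open_elk_ports
# Opens each port permanently in firewalld's default zone, then reloads
# so the permanent rules also take effect in the running configuration.
open_elk_ports() {
    for port in $ELK_PORTS; do
        run firewall-cmd --permanent --add-port="${port}/tcp"
    done
    run firewall-cmd --reload
}
```

Run `open_elk_ports` as root instead of the two `systemctl` commands, then check the result with `firewall-cmd --list-ports`.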

II. Installation of ES

The Elasticsearch package bundles its own OpenJDK, so there is no need to install a JDK separately; we simply use the bundled one.

yum install elasticsearch -y

Elasticsearch listens on 127.0.0.1 by default, so we uncomment the network.host parameter in the configuration file /etc/elasticsearch/elasticsearch.yml and set it to the machine's own IP address.
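Editing the file by hand is enough. For a scripted install, here is a sketch of a helper that uncomments or appends such a setting. It assumes GNU sed (for `-i`), the flat `key: value` lines of the stock file, and values without `|`, `&` or backslashes; the name `set_yml_key` is hypothetical:

```shell
#!/bin/sh
# set_yml_key FILE KEY VALUE
# Sets "KEY: VALUE" in a flat YAML file such as elasticsearch.yml.
# An existing line for KEY, commented out ("#KEY: ...") or not, is replaced
# in place; if KEY does not appear at all, the line is appended.
# Assumes GNU sed and a VALUE without '|', '&' or backslashes.
set_yml_key() {
    file=$1 key=$2 value=$3
    # Escape the dots in keys like network.host so they match literally.
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^#\{0,1\}[[:space:]]*${pattern}:" "$file"; then
        sed -i "s|^#\{0,1\}[[:space:]]*${pattern}:.*|${key}: ${value}|" "$file"
    else
        printf '%s: %s\n' "$key" "$value" >> "$file"
    fi
}
```

For example, `set_yml_key /etc/elasticsearch/elasticsearch.yml network.host 10.154.0.3` turns the stock `#network.host: 192.168.0.1` line into `network.host: 10.154.0.3`.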

2.1. Small problems encountered

After changing the configuration this way, the service would not start and the log showed no errors, so I ran Elasticsearch manually as the elasticsearch user to see its output:

[root@elk ~]# su - elasticsearch -s /bin/sh -c "/usr/share/elasticsearch/bin/elasticsearch"
su: warning: cannot change directory to /nonexistent: No such file or directory
OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.
[2019-05-31T03:20:42,902][INFO ][o.e.e.NodeEnvironment ] [elk] using [1] data paths, mounts [[/ (rootfs)]], net usable_space [7.7gb], net total_space [9.9gb], types [rootfs]
[2019-05-31T03:20:42,908][INFO ][o.e.e.NodeEnvironment ] [elk] heap size [990.7mb], compressed ordinary object pointers [true]
.................................
[2019-05-31T03:20:50,083][INFO ][o.e.x.s.a.s.FileRolesStore] [elk] parsed [0] roles from file [/etc/elasticsearch/roles.yml]
[2019-05-31T03:20:50,933][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [elk] [controller/5098] [Main.cc@109] controller (64 bit): Version 7.1.1 (Build fd619a36eb77df) Copyright (c) 2019 Elasticsearch BV
[2019-05-31T03:20:51,489][DEBUG][o.e.a.ActionModule ] [elk] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security
[2019-05-31T03:20:51,881][INFO ][o.e.d.DiscoveryModule ] [elk] using discovery type [zen] and seed hosts providers [settings]
[2019-05-31T03:20:53,019][INFO ][o.e.n.Node ] [elk] initialized
[2019-05-31T03:20:53,020][INFO ][o.e.n.Node ] [elk] starting ...
[2019-05-31T03:20:53,178][INFO ][o.e.t.TransportService ] [elk] publish_address {10.154.0.3:9300}, bound_addresses {10.154.0.3:9300}
[2019-05-31T03:20:53,187][INFO ][o.e.b.BootstrapChecks ] [elk] bound or publishing to a non-loopback address, enforcing bootstrap checks
ERROR: [2] bootstrap checks failed
[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]
[2]: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured
[2019-05-31T03:20:53,221][INFO ][o.e.n.Node ] [elk] stopping ...
[2019-05-31T03:20:53,243][INFO ][o.e.n.Node ] [elk] stopped
[2019-05-31T03:20:53,244][INFO ][o.e.n.Node ] [elk] closing ...
[2019-05-31T03:20:53,259][INFO ][o.e.n.Node ] [elk] closed
[2019-05-31T03:20:53,264][INFO ][o.e.x.m.p.NativeController] [elk] Native controller process has stopped - no new native processes can be started

Because Elasticsearch now binds to a non-loopback address, it enforces its bootstrap checks, and two of them fail: the maximum number of open files (4096) is too low, and none of the required discovery settings is configured. Let's fix them one at a time, starting with the file limit:

# Temporary change, effective immediately
ulimit -n 655360
# Permanent change
echo "* soft nofile 655360" >> /etc/security/limits.conf
echo "* hard nofile 655360" >> /etc/security/limits.conf
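The limits.conf entries are applied by PAM to login sessions, such as the `su -` used above. When Elasticsearch is started by systemd, the limit comes from `LimitNOFILE` in the service unit instead (the unit shipped with the RPM sets it to 65535). Either way, what matters is the limit of the running process, which can be read from `/proc/<pid>/limits`. A minimal sketch that checks it against the 65535 minimum from the bootstrap check; the function names are hypothetical:

```shell
#!/bin/sh
# Minimum required by the bootstrap check in the log above.
ES_MIN_NOFILE=65535

# soft_nofile LIMITS_FILE
# Prints the soft "Max open files" value from a /proc/<pid>/limits file
# (columns: "Max" "open" "files" <soft> <hard> <units>).
soft_nofile() {
    awk '/^Max open files/ { print $4 }' "$1"
}

# nofile_ok LIMITS_FILE
# Succeeds if the soft open-files limit is "unlimited" or >= ES_MIN_NOFILE.
nofile_ok() {
    soft=$(soft_nofile "$1")
    [ -n "$soft" ] || return 1
    [ "$soft" = unlimited ] && return 0
    [ "$soft" -ge "$ES_MIN_NOFILE" ]
}
```

With Elasticsearch running, something like `nofile_ok "/proc/$(pgrep -o -f org.elasticsearch.bootstrap.Elasticsearch)/limits" && echo OK` checks the live process.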

My modified configuration file is as follows:

node.name: node-1
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: 10.154.0.3
cluster.initial_master_nodes: ["node-1", "node-2"]

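Note that "node-2" most likely comes from the commented-out example line in the stock elasticsearch.yml; no such node exists on this single machine, so listing only "node-1" (or setting discovery.type: single-node instead of cluster.initial_master_nodes) is the cleaner choice here. Then we can start the service: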

systemctl daemon-reload
systemctl enable elasticsearch.service
systemctl start elasticsearch.service
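Elasticsearch needs several seconds to start (about ten in the log above), so requesting the page right after `systemctl start` may fail. A small generic retry helper helps here; this is a sketch, and the name `retry` is hypothetical:

```shell
#!/bin/sh
# retry TRIES DELAY CMD [ARGS...]
# Runs CMD up to TRIES times, sleeping DELAY seconds between failed attempts.
# Returns 0 as soon as CMD succeeds, or 1 after the last failed attempt.
retry() {
    tries=$1 delay=$2
    shift 2
    attempt=1
    while :; do
        "$@" && return 0
        [ "$attempt" -ge "$tries" ] && return 1
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}
```

For example, `retry 30 2 curl -fsS http://10.154.0.3:9200/` keeps polling for roughly a minute and prints the response once Elasticsearch answers.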

Then visit http://10.154.0.3:9200 in a browser or with curl; the response looks like this:

{
  "name" : "node-1",
  "cluster_name" : "elasticsearch",
  "cluster_uuid" : "P0GGZU9pRzutp-zHDZvYuQ",
  "version" : {
    "number" : "7.1.1",
    "build_flavor" : "default",
    "build_type" : "rpm",
    "build_hash" : "7a013de",
    "build_date" : "2019-05-23T14:04:00.380842Z",
    "build_snapshot" : false,
    "lucene_version" : "8.0.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

III. Installation of Kibana

To save machine resources, everything is installed on the same machine. Since the yum repository is already configured, we can install Kibana directly:

yum install kibana -y

Edit the configuration file /etc/kibana/kibana.yml and change the following settings:

server.port: 5601
server.host: "10.154.0.3"
elasticsearch.hosts: ["http://10.154.0.3:9200"]

Start the service.

systemctl daemon-reload
systemctl enable kibana.service
systemctl start kibana.service
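Kibana also takes a while to start and connect to Elasticsearch. Its status API (`/api/status`) reports an overall state. Assuming the 7.x response, in which that state appears inside an `"overall":{...,"state":"green",...}` object of compact JSON, a rough check without extra tools could look like this (`kibana_state` is a hypothetical name; a real JSON parser is more robust):

```shell
#!/bin/sh
# kibana_state
# Reads a Kibana /api/status response on stdin and prints the overall
# state (for example "green"). Assumes the "overall" object is flat and
# contains a "state" key; uses grep/sed instead of a JSON parser.
kibana_state() {
    grep -o '"overall":{[^}]*"state":"[a-z]*"' |
        sed 's/.*"state":"\([a-z]*\)"/\1/'
}
```

Usage: `curl -fsS http://10.154.0.3:5601/api/status | kibana_state`.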

Once the service is running, the Kibana page at http://10.154.0.3:5601 loads normally.

IV. Installation of Logstash

yum install logstash -y

Create the configuration file /etc/logstash/conf.d/logstash.conf with the following content:

input {
  beats {
    port => 5044
  }
}

filter {
  if [fields][type] == "nginx_access" {
    grok {
      match => { "message" => "%{NGINXACCESS}" }
    }
  }
  if [fields][type] == "apache_access" {
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }
    }
  }
  # Drop the raw line and Beats metadata once the fields are extracted
  mutate {
    remove_field => ["message", "host", "input", "prospector", "beat"]
  }
  geoip {
    source => "clientip"
  }
  date {
    match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
  }
}

output {
  # Elasticsearch runs on the same machine (network.host: 10.154.0.3)
  if [fields][type] == "nginx_access" {
    elasticsearch {
      hosts => "10.154.0.3:9200"
      index => "%{[fields][type]}-%{+YYYY.MM.dd}"
    }
  }
  if [fields][type] == "apache_access" {
    elasticsearch {
      hosts => "10.154.0.3:9200"
      index => "%{[fields][type]}-%{+YYYY.MM.dd}"
    }
  }
}

Not finished yet.
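`%{NGINXACCESS}` is not one of the patterns that ship with Logstash's grok filter, so it has to be defined in a custom patterns file that the grok block loads through its `patterns_dir` option. Assuming nginx still uses its default `combined` log format, which has the same layout as Apache's combined log, the pattern can simply reuse `COMBINEDAPACHELOG`. A sketch; the directory `/etc/logstash/patterns` and the function name `write_nginx_pattern` are assumptions:

```shell
#!/bin/sh
# write_nginx_pattern DIR
# Creates DIR/nginx defining NGINXACCESS for the grok filter.
# Assumes nginx logs in its default "combined" format, which matches the
# stock COMBINEDAPACHELOG pattern (clientip, timestamp, request, ...).
write_nginx_pattern() {
    mkdir -p "$1"
    printf '%s\n' 'NGINXACCESS %{COMBINEDAPACHELOG}' > "$1/nginx"
}
```

After `write_nginx_pattern /etc/logstash/patterns`, add `patterns_dir => ["/etc/logstash/patterns"]` next to `match` in the nginx `grok` block. The configuration can then be checked without starting the pipeline: `/usr/share/logstash/bin/logstash --path.settings /etc/logstash --config.test_and_exit -f /etc/logstash/conf.d/logstash.conf`.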

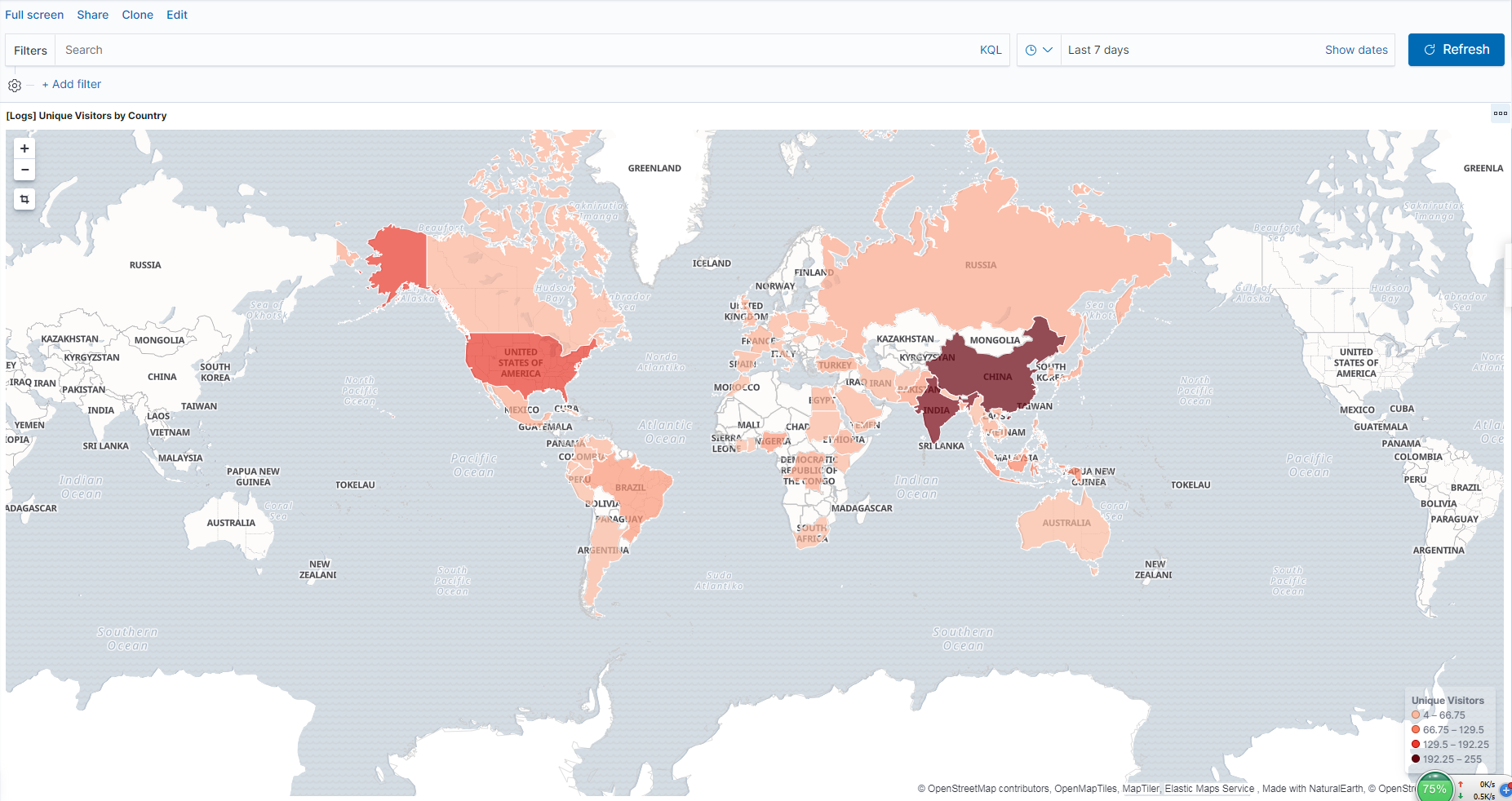

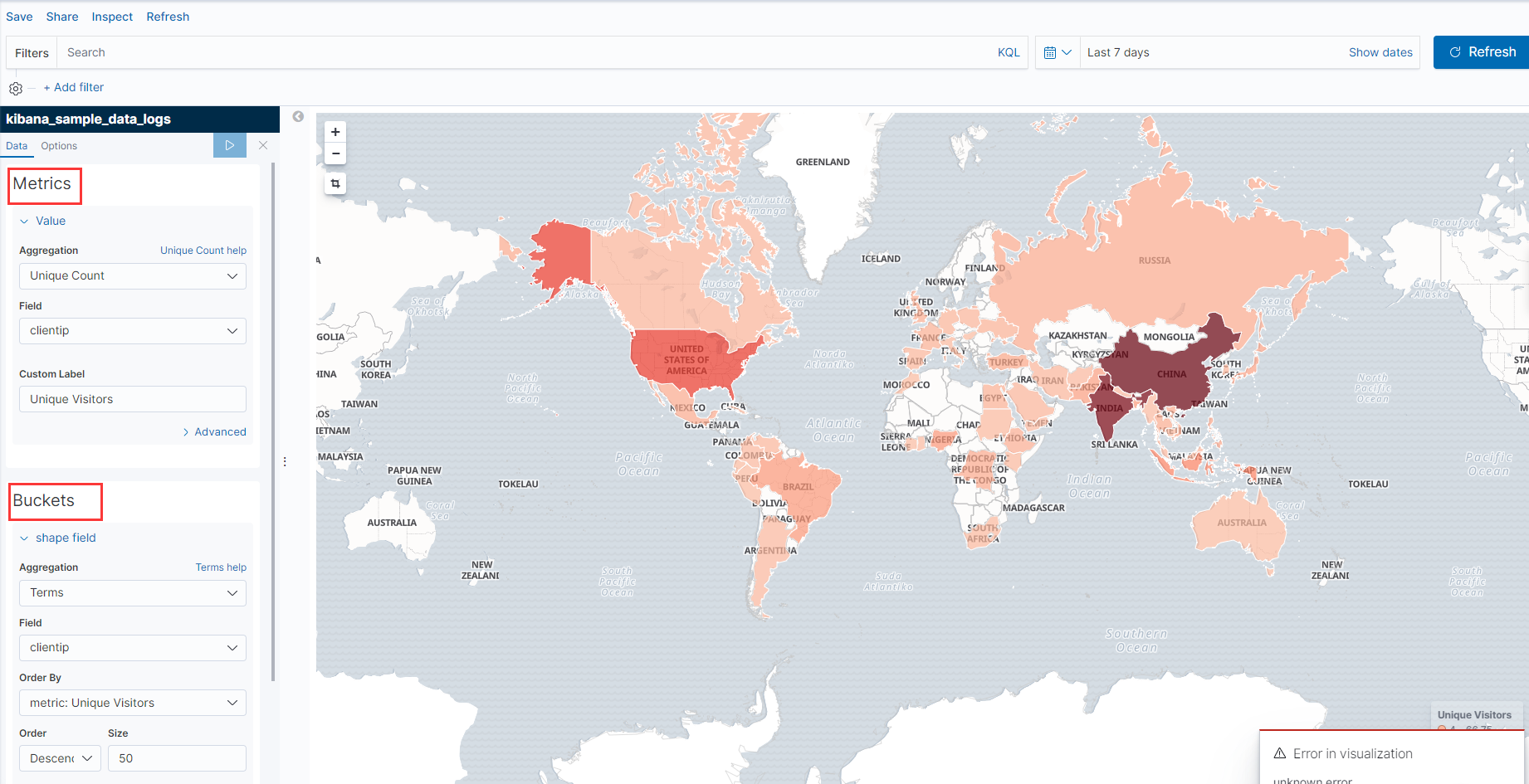

The final result is as follows: