Problem Description

Problem link: http://glidedsky.com/level/crawler-font-puzzle-2

Problem Analysis

Compared with the previous font anti-crawl problem, this one uses many more glyphs, so building the mapping from glyph images to real digits is much more troublesome. Once that mapping exists, however, the rest is the same as in the previous problem. The structure of font files was introduced in Font Anti-Crawl 1; if you are not familiar with fonts, see my explanation there, which is not repeated in this article.

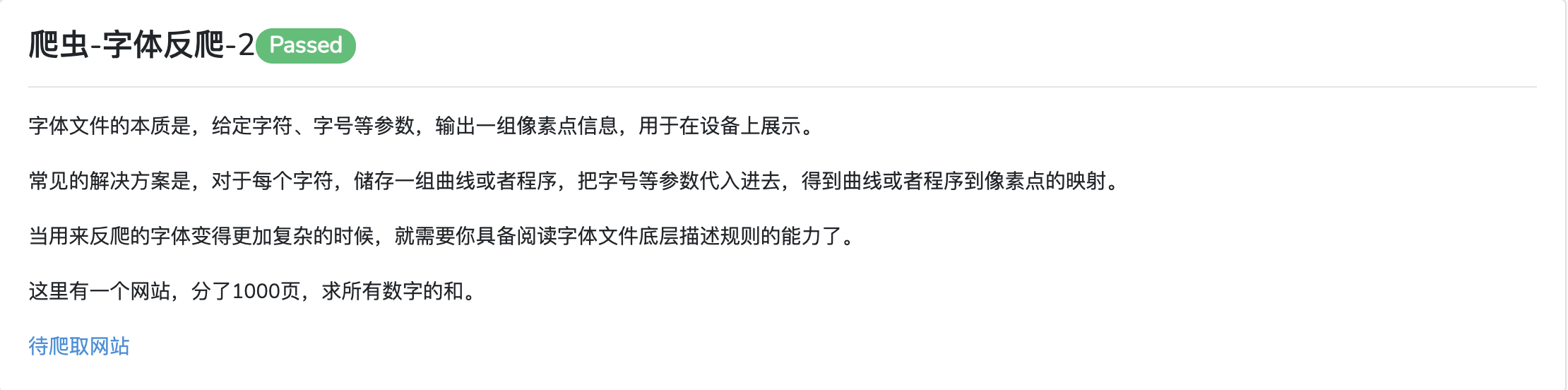

The analysis of this problem follows. First, open the data page to be crawled and look at its source code, as shown in the figure below.

In Font Anti-Crawl 1, the characters in the source code differed from what the page displayed, but they were at least digits. In Font Anti-Crawl 2, the source code actually contains Chinese characters. This may seem surprising at first glance, but on closer thought it is not fundamentally different from Font Anti-Crawl 1. A font file is essentially a mapping from characters to glyph images. It can map digits to glyph images, and it can just as well map Chinese characters to glyph images, because digits and Chinese characters are both simply characters.
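To make this concrete, here is a minimal pure-Python sketch of the lookup a browser performs. A font's character map (cmap) is essentially a dictionary from Unicode code points to glyph names, so a Chinese character is looked up exactly like a digit. The code points, glyph names, and glyph-to-digit table below are hypothetical values made up for illustration, not data from the site.

```python
# Hypothetical cmap: Unicode code point -> glyph name (values made up for illustration)
cmap = {
    ord('三'): 'uni0003',
    ord('你'): 'uni0007',
    ord('3'): 'uni0001',   # a digit is just another code point
}

# Hypothetical table: glyph name -> digit that the glyph visually shows
glyph_to_digit = {
    'uni0001': '8',
    'uni0003': '6',
    'uni0007': '9',
}


def render(text: str) -> str:
    """Return the digits a reader would see when `text` is drawn with this font."""
    # Each character's code point selects a glyph; the glyph's shape decides the digit
    return ''.join(glyph_to_digit[cmap[ord(ch)]] for ch in text)


print(render('三你3'))  # source text is Chinese characters and a '3', the screen shows 698
```

Note that the character `'3'` in the source is displayed as 8: what the source contains and what the reader sees are linked only through the font.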

Font Anti-Crawl 1 has 10 glyphs, representing the digits 0 to 9. In Font Anti-Crawl 2, however, refreshing a data page many times still does not reveal how many distinct glyphs there are. An intuitive approach is therefore to traverse all data pages, collect every glyph, and save them into one font file, while recording the MD5 of each glyph's data so that the mapping from glyph data to real digits can be built afterwards. The collection process is explained in detail below.

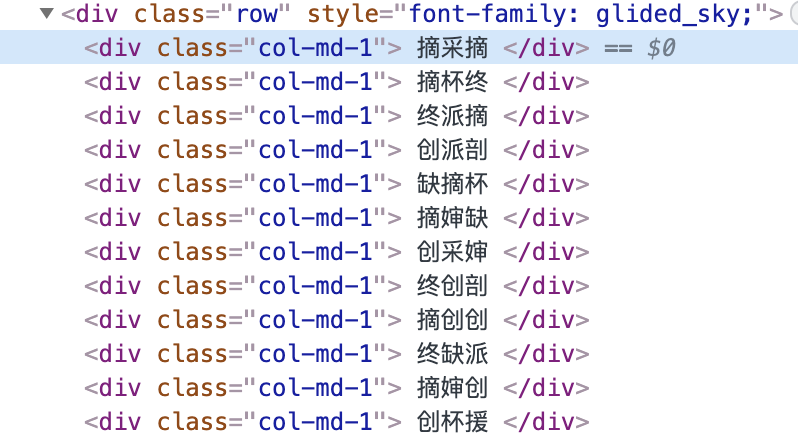
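The bookkeeping idea, hashing each glyph's raw data and keeping only fingerprints that have not been seen before, can be sketched with the standard library alone. Here each page is modelled as a list of raw glyph byte strings; in the real crawler those bytes come from the page's embedded font (the source code at the end reads them with fontTools). The byte values are placeholders.

```python
import hashlib
from typing import Iterable, List


def fingerprint(glyph_data: bytes) -> str:
    """MD5 hex digest of a glyph's raw data, used as the glyph's identity."""
    return hashlib.md5(glyph_data).hexdigest()


def collect_unique(pages: Iterable[List[bytes]]) -> List[str]:
    """Return fingerprints of all distinct glyphs, in order of first appearance."""
    seen = set()
    order = []
    for page in pages:
        for data in page:
            fp = fingerprint(data)
            if fp not in seen:  # only a glyph never seen on any earlier page is recorded
                seen.add(fp)
                order.append(fp)
    return order


# Placeholder "glyph data": page 2 repeats one glyph from page 1 and adds a new one
pages = [[b'glyph-A', b'glyph-B'], [b'glyph-B', b'glyph-C']]
unique = collect_unique(pages)
print(len(unique))  # 3
```

Keeping the fingerprints in first-seen order matters: it is what lets the first new glyph go into the first slot of the collection font, the second into the second slot, and so on.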

First, take the base64-encoded font data from any page, decode it, save it as a .ttf file, and open that file in a font editor (I use FontForge). The opened font is shown in the following figure.

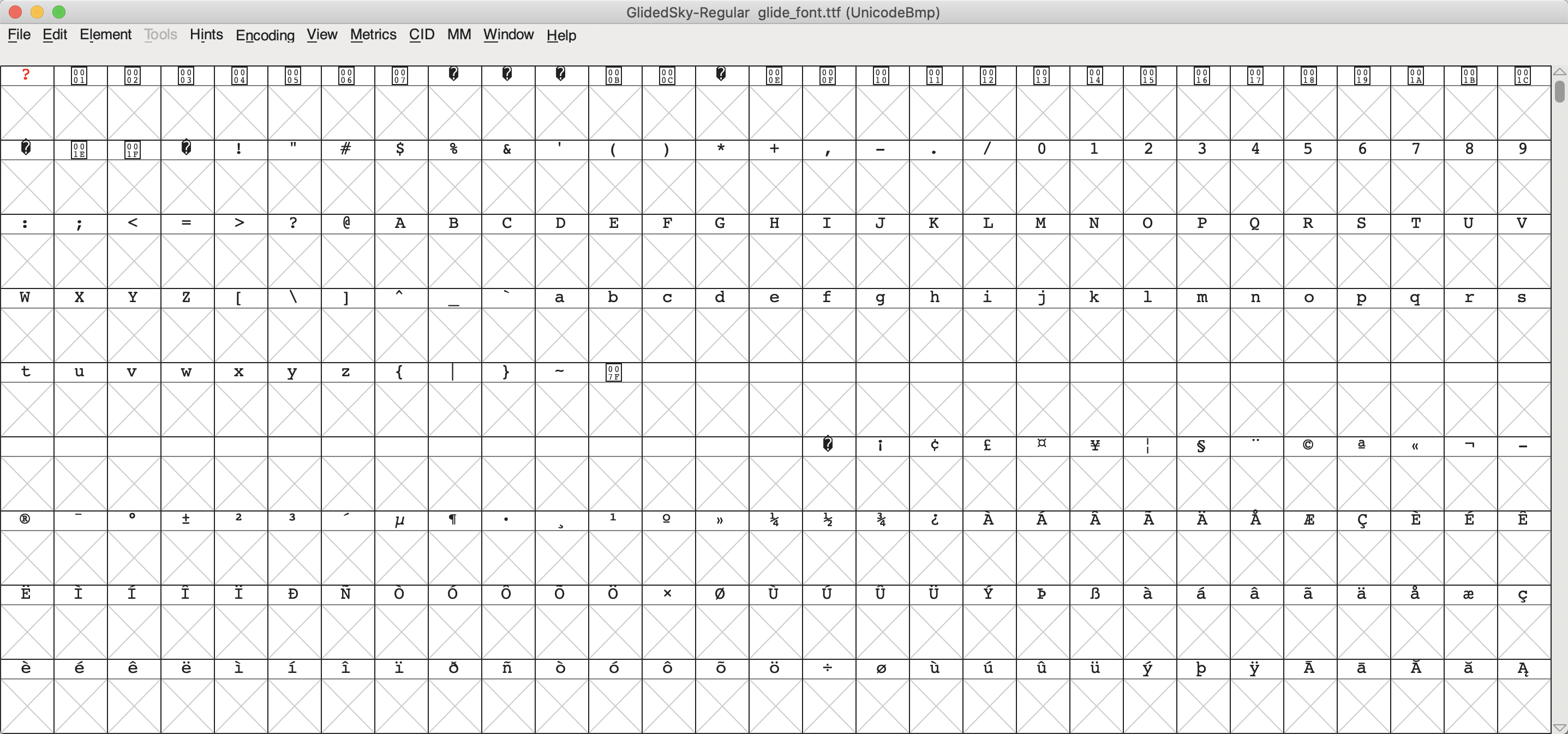

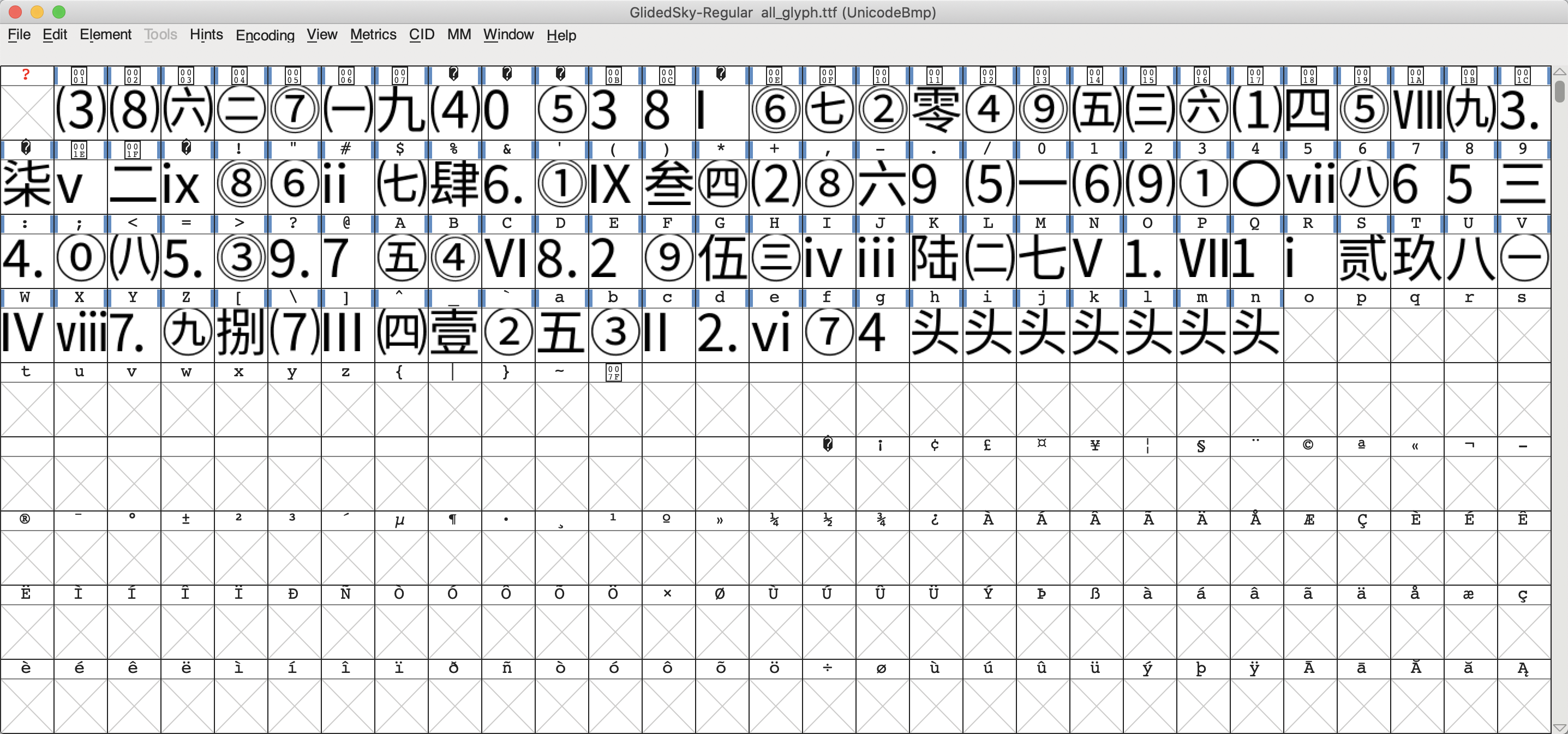
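Extracting the font takes only a regular expression and `base64.b64decode`. The sketch below assumes, as the source code at the end of this article does, that the font is embedded as `base64,...) format("truetype")` in the page's CSS. The HTML fragment and the `page_font.ttf` file name are made up for the example, and the payload is placeholder bytes rather than a real font.

```python
import base64
import os
import re
import tempfile
from typing import Optional

# Same pattern as the source code: the payload sits between "base64," and ') format("truetype")'
FONT_RE = re.compile(r'base64,(.*?)\) format\("truetype"\)')


def extract_font_bytes(html: str) -> Optional[bytes]:
    """Return the decoded font embedded in `html`, or None if no font is found."""
    match = FONT_RE.search(html)
    if match is None:
        return None
    return base64.b64decode(match.group(1))


def save_font(html: str, path: str) -> bool:
    """Write the embedded font to `path` so it can be opened in FontForge."""
    data = extract_font_bytes(html)
    if data is None:
        return False
    with open(path, 'wb') as fh:
        fh.write(data)
    return True


# Hypothetical page fragment; the payload is placeholder bytes, not a real TTF
payload = base64.b64encode(b'\x00\x01\x00\x00fake-font').decode('ascii')
html = ('<style>@font-face { font-family: x; src: url(data:font/ttf;base64,'
        + payload + ') format("truetype"); }</style>')
out_path = os.path.join(tempfile.mkdtemp(), 'page_font.ttf')  # hypothetical output name
saved = save_font(html, out_path)
```

Returning `None` instead of calling `.group(1)` directly avoids an opaque `AttributeError` when a page comes back without a font, for example because the login failed.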

All collected glyph information goes into this one font file, because different data pages may contain different glyphs, and inspecting them one page at a time would make building the mapping from glyph images to real digits very inefficient. Starting at the glyph named uni0001, each newly found glyph is written into the next position in turn: the first new glyph goes to uni0001, the second to uni0002, and so on. With fontTools, adding a glyph for a new code point is cumbersome, whereas modifying an existing glyph's data is straightforward, so these positions must first be initialized in a font tool. The initial content does not matter, as long as each position holds a glyph. By my count there are 103 distinct glyphs in total, so at least the first 103 positions must be initialized.
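The slot logic can be sketched as a small class: it walks code points 1, 2, 3, ... of the pre-initialized font and hands out the glyph name found in that font's cmap, raising an error once the initialized slots run out. The `SlotAllocator` class and the toy cmap are hypothetical; in the source code at the end, the same role is played by `code_cnt` and `code2name_collect`.

```python
from typing import Dict


class SlotAllocator:
    """Hands out placeholder glyph names in code-point order 1, 2, 3, ... (hypothetical helper)."""

    def __init__(self, cmap: Dict[int, str]):
        self.cmap = cmap    # code point -> glyph name of the pre-initialized font
        self.next_code = 1  # the first slot is U+0001 (glyph uni0001)

    def allocate(self) -> str:
        """Return the glyph name of the next free slot and advance to the following one."""
        if self.next_code not in self.cmap:
            raise RuntimeError(
                f'no initialized glyph at U+{self.next_code:04X}; initialize more slots')
        name = self.cmap[self.next_code]
        self.next_code += 1
        return name


# Toy cmap with 33 slots: U+0001..U+001F use uniXXXX names, U+0020/U+0021 use standard names
toy_cmap = {code: f'uni{code:04X}' for code in range(1, 0x20)}
toy_cmap.update({0x20: 'space', 0x21: 'exclam'})

alloc = SlotAllocator(toy_cmap)
names = [alloc.allocate() for _ in range(33)]
print(names[0], names[31], names[32])  # uni0001 space exclam
```

Looking names up through the cmap, rather than formatting `uniXXXX` strings directly, is what keeps the allocation correct once the slots reach U+0020, where the glyphs carry standard names such as `space`.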

Initialization is simple: find an existing glyph, copy it with Ctrl+C, select the first 103 cells, and paste with Ctrl+V. The result after initialization is shown in the figure below.

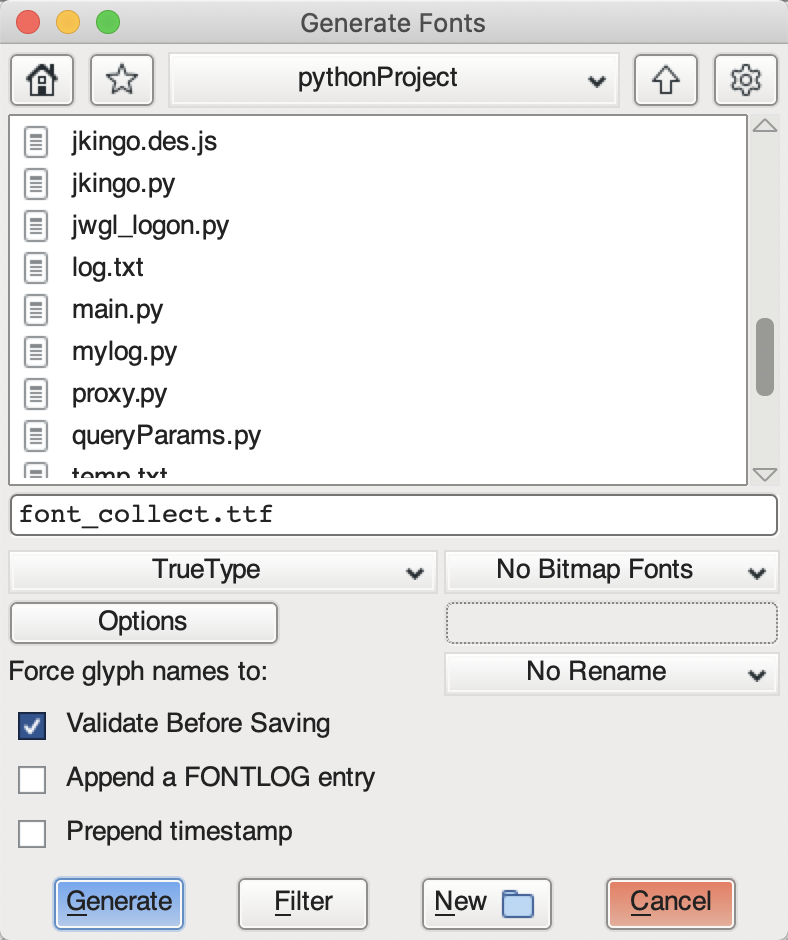

After initialization, save the font file. How to save differs between font tools; in FontForge, choose File > Generate Fonts..., enter a file name (the source code below expects font_collect.ttf), and save as TrueType. The relevant settings are shown in the figure below.

With that done, you can write the code that collects glyph information from the data pages. For each data page, iterate over every number on it. The characters extracted from the source code are Chinese characters, that is, characters defined in the page's font file. Use the font's character-to-glyph-name table to find the glyph name of the current character, fetch the glyph data by that name, and check whether this glyph has already been added to the collection font. If it has not, add it to the font file and write its MD5 and glyph name to the text file. The full code for this step is the get_all_info function in the source code.

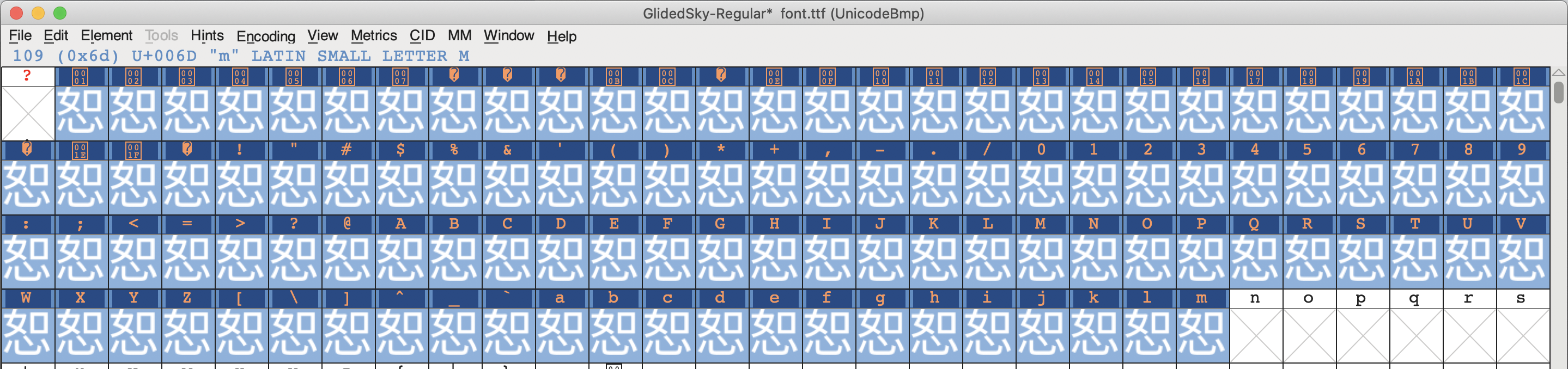

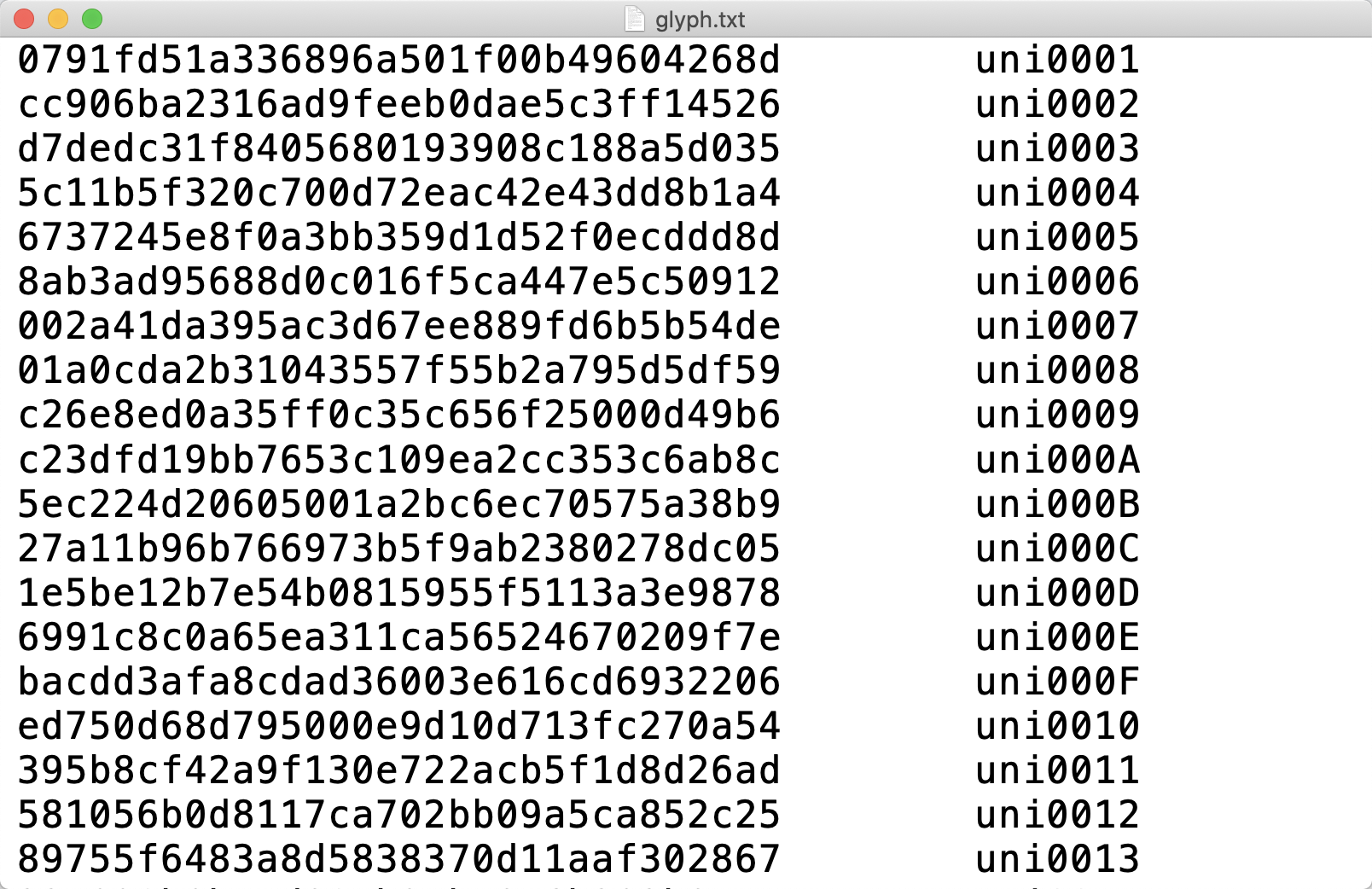
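Stripped of the HTTP and fontTools calls, the per-page step is a chain of lookups followed by a membership test. In the sketch below a page's font is modelled as two plain dicts, `cmap` (code point to glyph name) and `glyph_data` (glyph name to raw bytes), standing in for `font.getBestCmap()` and `font['glyf'].glyphs.get(name).data`. The `process_page` function, the page text, and the byte values are hypothetical. Each new glyph produces a line in the same `md5<TAB>name<TAB>` format that `get_all_info` writes, with the digit column left empty.

```python
import hashlib
from typing import Dict, List, Set


def process_page(numbers: List[str], cmap: Dict[int, str], glyph_data: Dict[str, bytes],
                 seen: Set[str], slot_names: List[str], lines: List[str]) -> None:
    """Record every glyph on the page that has not been seen on any earlier page."""
    for number in numbers:
        for ch in number:
            name = cmap[ord(ch)]                              # character -> glyph name
            md5 = hashlib.md5(glyph_data[name]).hexdigest()   # glyph name -> data -> MD5
            if md5 not in seen:
                seen.add(md5)
                slot = slot_names[len(lines)]                 # next free slot in the collection font
                lines.append(md5 + '\t' + slot + '\t\n')      # digit column is filled in by hand later


# Hypothetical page: two numbers written with Chinese characters
cmap = {ord('三'): 'glyph1', ord('七'): 'glyph2'}
glyph_data = {'glyph1': b'outline-1', 'glyph2': b'outline-2'}
seen: Set[str] = set()
lines: List[str] = []
process_page(['三七', '七三'], cmap, glyph_data, seen, ['uni0001', 'uni0002', 'uni0003'], lines)
print(''.join(lines), end='')
```

Although the page shows four characters, only two distinct glyphs occur, so only two lines are produced.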

After running get_all_info, you get two files: all_glyph.ttf, which contains every collected glyph, and glyph.txt, which stores each glyph's MD5 and glyph name. Open them with a font viewer and a text editor respectively, as shown in the figure below (ignore the placeholder glyphs left over from initialization).

The next step is to compare the text file against the font file and write the digit each glyph represents into the matching line. For example, the first glyph in the font file, named uni0001, represents 3, so write 3 at the end of the first line of the text file; the second glyph represents 8, so write 8 at the end of the second line, and so on. Slots from U+0020 onward carry standard glyph names (space, exclam, ..., g) instead of uniXXXX names. Below is the mapping from glyphs to real digits that I built. If the website has not changed its fonts, you can use it directly (save it as glyph.txt).

```text
31692a08b33c1cd55f8203f711d06c90 uni0001 3
4437d2ffe9ec92372cc0f640cb11120a uni0002 8
d7dedc31f8405680193908c188a5d035 uni0003 6
58c05f93982e300697362feabf166819 uni0004 2
bacdd3afa8cdad36003e616cd6932206 uni0005 7
1e5be12b7e54b0815955f5113a3e9878 uni0006 1
f146d3c66fea91f1277602c9f1ce4ee6 uni0007 9
7035cd5d47e60cf8caa6d11248c30b0d uni0008 4
c2612c67ed6dfd1f98a3a8fde68b214d uni0009 0
be7d35d18bc27d0f5076b72775f48c87 uni000A 5
5ec224d20605001a2bc6ec70575a38b9 uni000B 3
4713958b58e1772d2e40db62b3dba7fd uni000C 8
d2bcd3d13e8c9ce1f3a298f494356821 uni000D 1
ecd64d2ffb2e0f1546b2401a75ada5f2 uni000E 6
901393ec27b101bab3280022bfdad962 uni000F 7
7e8d7251a6b630bd7bd9982d0fdb98fd uni0010 2
c26e8ed0a35ff0c35c656f25000d49b6 uni0011 0
01a0cda2b31043557f55b2a795d5df59 uni0012 4
9fb6898cd9c76d9d9b77df63af9a753e uni0013 9
ad2d6fdc5ae62ed0a6939b65d88364df uni0014 5
0791fd51a336896a501f00b49604268d uni0015 3
df3d8d1417acdf73fd7a3b61b6afb7c5 uni0016 6
8ab3ad95688d0c016f5ca447e5c50912 uni0017 1
26a26c39adb946c7fa76b61d4b32cc11 uni0018 2
483ae06a1682cafa38dad7da463293ac uni0019 4
775c290ab3230d7389e304b7ff845784 uni001A 7
c23dfd19bb7653c109ea2cc353c6ab8c uni001B 5
755e16dea3f29af0cdcff54b87f3ccb1 uni001C 8
89755f6483a8d5838370d11aaf302867 uni001D 9
816d3223522f7d99108fb6f2f455b5ed uni001E 3
9ba99f6690d3d7f0857404b544626d2c uni001F 1
ed750d68d795000e9d10d713fc270a54 space 2
cb480ad6b7e3e4bf83a636ad5be6b4eb exclam 9
26d9b72d6a33e5e61bac52f558aa4338 quotedbl 8
a5506625d5df15773e8065d384bd4ff7 numbersign 6
b3c1e59a4f73925450db0d401a9badae dollar 7
581056b0d8117ca702bb09a5ca852c25 percent 4
6991c8c0a65ea311ca56524670209f7e ampersand 6
f605030ca1c493374cc659b6949ab321 quotesingle 3
34d2ca319b5fcf514d0ae0f911091c86 parenleft 1
27a11b96b766973b5f9ab2380278dc05 parenright 8
334820b8be4d905b25b7946b383b9eec asterisk 5
5451937ac93511d0f1784b6aeebb1a59 plus 3
5cc8e5d30cbf8c6697a6972120b695c3 comma 4
d0053dd2d680b17315ebb32311429a25 hyphen 2
5e53c7157460fb2908b2d8ca2523d00c period 9
94c3bb3365c04e51dbf85b3b8a2e8634 slash 2
9a136dfd568947434254da65a9f0af63 zero 1
a39a4de4559c4625fd372cfde81c695f one 8
4c99f2ea79b7ff597d6d2faf837c7eb5 two 1
d0db96d84b083371406a705e2bd606a7 three 9
21c898d17868eb35e6066a024f199390 four 2
9dc74fc07ae654008705bcc9c0dd2bbb five 6
7b292fd193f25aecf088b8227129e342 six 5
395b8cf42a9f130e722acb5f1d8d26ad seven 0
3eaa04fe209652ebe24c2290f9ecb4c3 eight 7
3b1ad98fef6f3875efa03c514211a173 nine 9
d83b57e4e122ae2299f931aada7a41bf colon 7
12833526bfd4fb677cf9490b1e82ea17 semicolon 5
c626261d2ebea798161c2691e182a76e less 3
71a67a5bfdbadbedb92f13312171af98 equal 9
5b1f984d2e4ebd220f7e12451073db8d greater 0
1bbee07e18b3da73315c8befdc53e26d question 1
6761bb5217b27a9adea0e7fa7b5d6898 at 2
9de28a71d8cfe695aaab4dc8d6c2b39c A 9
1cce3e86809e5b320bf2310e7e2ef022 B 6
38ae39c0f099c8876ad0d5206e334e26 C 7
34ce24acdb99fdf4e5187954e6d73e51 D 8
493ba364ac31cf20703fd1c2a53677d7 E 4
c4df47cdf8328cffd9f13ec88174d25d F 7
5c11b5f320c700d72eac42e43dd8b1a4 G 2
7f48ef43967259613a4842ee54c4ed23 H 3
7acb79979f999661d9e0f6a2240ad200 I 8
626960b359ec5cc52c71d0894cacff08 J 2
6737245e8f0a3bb359d1d52f0ecddd8d K 7
f7c4752cf5c6da8d2178ce13551a00c9 L 3
f2cad9f518d3e0350b167a9cba7ed2cd M 8
4c107789528479c5fc616a776888bb6a N 3
9d0b67d7fbef3d6dccf761094bd81476 O 4
59982690ca4e77be899e415b74c4e389 P 4
f29f2ab8eca2504b740abbd76f7910b8 Q 5
d6038eaee1d8e453958bcf4a40c59ddc R 7
0bf285578a6997e76d4bb38505240263 S 1
a8e9e0f039db3e118863b47e74952f5f T 4
21960b23ce182ffcec65be42105d4807 U 6
c54b5e5ebf48d6858035475d3c98fb56 V 5
4301d2c43f94cf86329146ef7b1c4617 W 9
311640c178625378b90c2bbeac8ec255 X 1
b2eedf65b778daeae51c662664394551 Y 6
a87f1031326b96853c36d9dfb61fb2d1 Z 3
f55fb746c326c30b4133c1e4f8723493 bracketleft 5
0498e2b4fbc4da153b0061bb7feacf27 backslash 4
28f2d02d6a6f0949f95c40e56e9b6305 bracketright 6
92b467e60847efc6a4b713700d5366e0 asciicircum 6
a757a43de49bd32d28e048b2d6974fba underscore 7
002a41da395ac3d67ee889fd6b5b54de grave 9
a83b22495427dafd9789ecea2260f94b a 4
df7a7cf4419da95e95ac5b56e7070d0d b 1
1d89f61dcd85b591aae8fdacff700115 c 8
cc906ba2316ad9feeb0dae5c3ff14526 d 8
0cfcd8987c82e25f8abf9fc838931b8c e 2
b8e0c5860727158f7ede6e2102ac2dd8 f 5
73dcc63068b6079380ad65ae15aba260 g 5
```
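Once the digits are filled in, loading the table is a matter of splitting each line. The sketch below mirrors the loading loop in `crawler()`, with two additions not in the original loop: blank lines are skipped, and a line whose third column is missing or is not a single digit raises an error immediately instead of surfacing later as an `IndexError` or `KeyError`. The function name `load_glyph_table` is hypothetical.

```python
from typing import Dict, Iterable

DIGITS = set('0123456789')


def load_glyph_table(lines: Iterable[str]) -> Dict[str, str]:
    """Parse 'md5 glyph_name digit' lines into a {md5: digit} mapping."""
    table: Dict[str, str] = {}
    for lineno, line in enumerate(lines, start=1):
        fields = line.split()  # tabs or spaces both work
        if not fields:
            continue  # ignore blank lines
        if len(fields) != 3 or fields[2] not in DIGITS:
            raise ValueError(f'line {lineno}: expected "md5 name digit", got {line!r}')
        md5, _name, digit = fields
        table[md5] = digit
    return table


# First two entries of the table above
sample = [
    '31692a08b33c1cd55f8203f711d06c90 uni0001 3\n',
    '4437d2ffe9ec92372cc0f640cb11120a uni0002 8\n',
]
table = load_glyph_table(sample)
print(table['4437d2ffe9ec92372cc0f640cb11120a'])  # 8
```

With a real file the call would be `with open('glyph.txt') as f: info2num = load_glyph_table(f)`, since iterating over a text file yields its lines.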

With this mapping from glyph information to real digits, the rest is the same as in Font Anti-Crawl 1 and is not repeated here.
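For completeness, the remaining decoding step, which the `parse` function in the source code performs with fontTools, can be sketched with plain dicts standing in for the page font. Keying the lookup on the MD5 of the glyph data rather than on the glyph name means the same shape is recognized even if a page's font assigns it a different name or character. The `decode_page_sum` function, the glyph names, and the byte values are hypothetical.

```python
import hashlib
from typing import Dict, List


def decode_page_sum(numbers: List[str], cmap: Dict[int, str],
                    glyph_data: Dict[str, bytes], info2num: Dict[str, str]) -> int:
    """Decode each obfuscated number on a page and return their sum (mirrors parse())."""
    total = 0
    for number in numbers:
        digits = ''
        for ch in number:
            md5 = hashlib.md5(glyph_data[cmap[ord(ch)]]).hexdigest()
            digits += info2num[md5]  # the glyph's shape decides the digit, not its name
        total += int(digits)
    return total


# Hypothetical outlines and the digits they depict
outline_one, outline_two = b'shape-of-1', b'shape-of-2'
info2num = {hashlib.md5(outline_one).hexdigest(): '1',
            hashlib.md5(outline_two).hexdigest(): '2'}

# Hypothetical page font: names and characters are arbitrary, only the outlines matter
cmap = {ord('甲'): 'glyphX', ord('乙'): 'glyphY'}
glyph_data = {'glyphX': outline_two, 'glyphY': outline_one}
print(decode_page_sum(['甲乙', '乙乙'], cmap, glyph_data, info2num))  # 21 + 11 = 32
```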

Source Code

```python
import base64
import hashlib
import re
from io import BytesIO
from os.path import exists

import requests
from bs4 import BeautifulSoup
from fontTools.ttLib import TTFont

params = {
    'email': '',     # your GlidedSky account e-mail
    'password': '',  # your GlidedSky password
    '_token': 's9OikhiHKa8CoplaD7g8sxXx6XnsCc5HRGj24znl',  # replaced by login() with a fresh CSRF token
}


def login():
    url = 'http://glidedsky.com/login'
    session = requests.Session()
    r = session.get(url)
    html = r.text
    # The login form carries a hidden CSRF token that must be posted back
    result = re.search(r'input type="hidden" name="_token" value="(.*?)"', html)
    _token = result.group(1)
    params['_token'] = _token
    session.post(url=url, data=params)
    return session


def parse(html, info2num):
    """Decode every number on one data page and return their sum."""
    page_sum = 0
    # The page embeds its font as base64 inside the CSS @font-face rule
    font_bs64 = re.search(r'base64,(.*?)\) format\("truetype"\)', html).group(1)
    font_bytes = base64.b64decode(font_bs64)
    font = TTFont(BytesIO(font_bytes))
    code2name = font.getBestCmap()  # Character (code point) to glyph name mapping table
    soup = BeautifulSoup(html, 'lxml')
    numbers = soup.select('.col-md-1')
    for number in numbers:
        number = number.string.strip()
        real_num = ''
        for num_ele in number:
            code = ord(num_ele)
            name = code2name[code]
            # Raw glyph bytes; their MD5 identifies the glyph shape
            info = font['glyf'].glyphs.get(name).data
            info = hashlib.md5(info).hexdigest()
            real_num += info2num[info]
        page_sum += int(real_num)
    return page_sum


def get_all_info():
    """
    Get all glyph information that may appear in the 1000 data pages.
    Collected glyphs are saved to all_glyph.ttf, their MD5s and glyph names to glyph.txt.
    """
    session = login()
    base_url = 'http://glidedsky.com/level/web/crawler-font-puzzle-2?page='
    # Font initialized in FontForge with at least 103 placeholder glyphs
    font_collect = TTFont('font_collect.ttf')
    code2name_collect = font_collect.getBestCmap()
    glyph_set = set()
    code_cnt = 1  # next free slot: U+0001 (uni0001), then U+0002, ...
    with open('glyph.txt', mode='wt') as f:
        for k in range(1, 1001):
            url = base_url + str(k)
            r = session.get(url)
            html = r.text
            font_bs64 = re.search(r'base64,(.*?)\) format\("truetype"\)', html).group(1)
            font_bytes = base64.b64decode(font_bs64)
            font = TTFont(BytesIO(font_bytes))
            code2name = font.getBestCmap()
            soup = BeautifulSoup(html, 'lxml')
            numbers = soup.select('.col-md-1')
            for number in numbers:
                number = number.string.strip()
                for num_ele in number:
                    code = ord(num_ele)
                    name = code2name[code]
                    info = font['glyf'].glyphs.get(name).data
                    info_md5 = hashlib.md5(info).hexdigest()
                    if info_md5 not in glyph_set:
                        # Overwrite the placeholder in the next free slot with the new glyph
                        font_collect['glyf'].glyphs.get(code2name_collect[code_cnt]).data = info
                        glyph_set.add(info_md5)
                        # The third column (real digit) is filled in by hand later
                        f.write(info_md5 + '\t' + code2name_collect[code_cnt] + '\t\n')
                        code_cnt += 1
            print(k)
    font_collect.save('all_glyph.ttf')


def crawler():
    session = login()
    base_url = 'http://glidedsky.com/level/web/crawler-font-puzzle-2?page='
    ans = 0
    info2num = {}  # Mapping table from glyph MD5 to real digit
    with open('glyph.txt', mode='rt') as f:
        for line in f:
            line = line.split()
            if not line:
                continue  # skip blank lines
            info2num[line[0]] = line[2]
    for k in range(1, 1001):
        url = base_url + str(k)
        r = session.get(url)
        html = r.text
        ans += parse(html, info2num)
        print(k)
    print(ans)


if __name__ == '__main__':
    if exists('glyph.txt'):
        crawler()
    else:
        get_all_info()
        print("The mapping from glyph information to real digits is missing: "
              "use all_glyph.ttf to write each glyph's digit at the end of its line in glyph.txt")
    # 103 distinct glyphs in total
```