Let's take a look at VXGI this time. First, some background: Sparse Voxel Octree GI (SVOGI, also known as Voxel Cone Tracing) and VXGI are both NVIDIA technologies. VXGI is the name under which SVOGI was eventually released as a product.

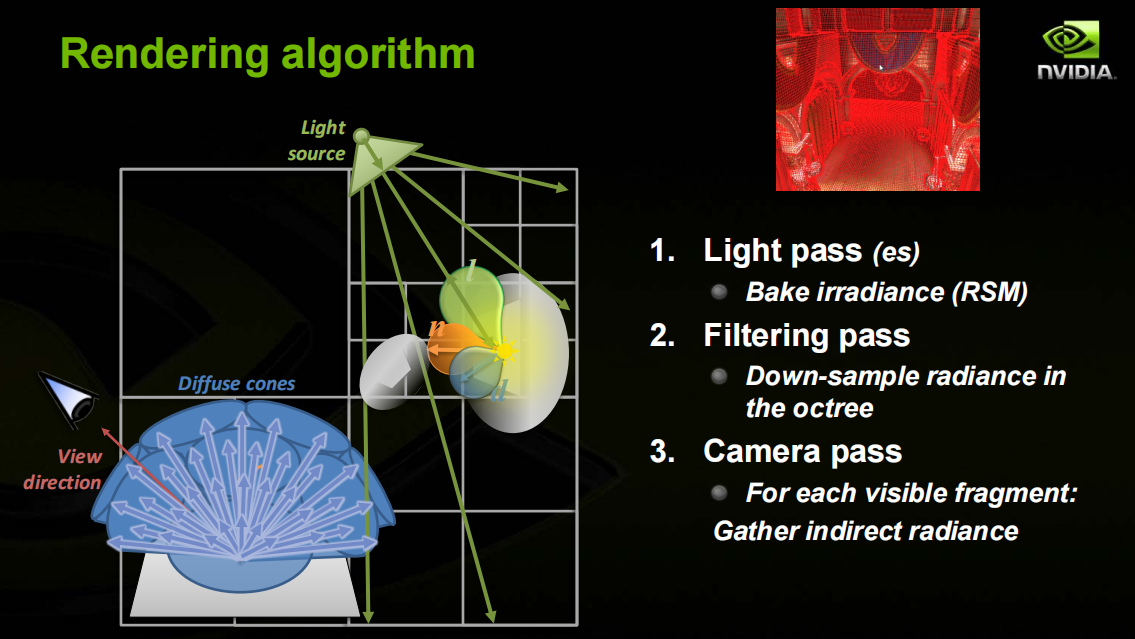

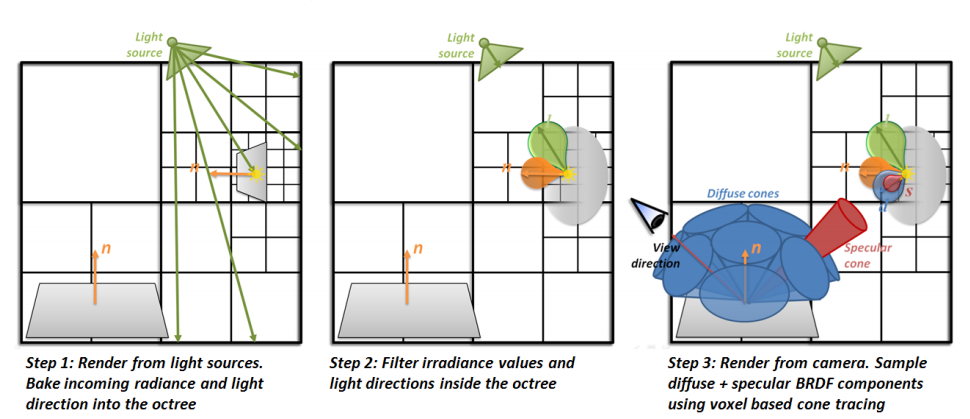

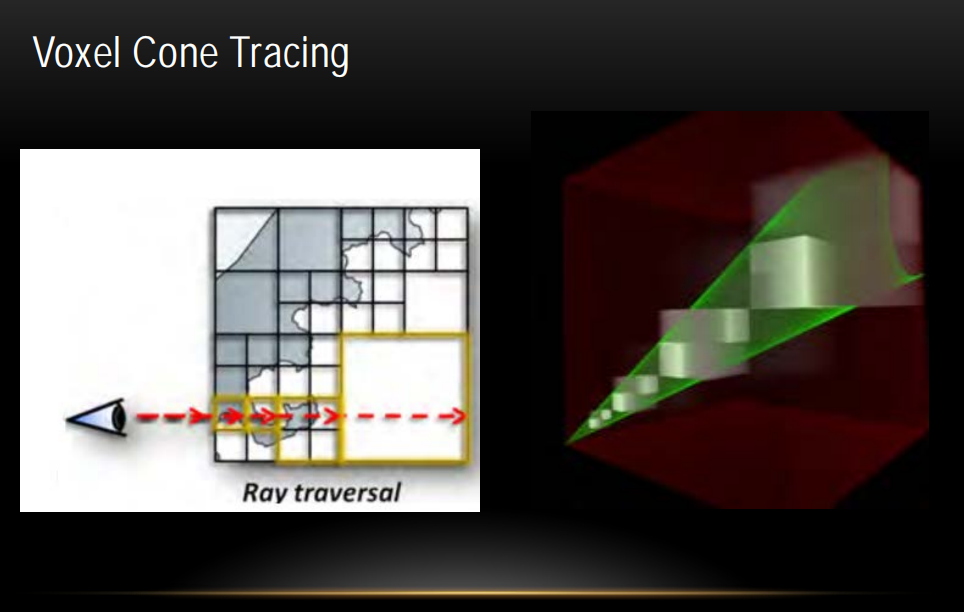

The general steps of this algorithm are as follows:

- Voxelize the whole scene and store the voxelized scene in a 3D data structure.

- Write the direct illumination into that 3D data structure, either through a Reflective Shadow Map or by direct injection.

- During rendering, once a pixel's position and normal are known, trace several cones from it, much as rays are traced in ray tracing. While tracing, read the lighting stored in the voxelized data structure and accumulate it to obtain the light arriving at the current pixel, which completes the shading.

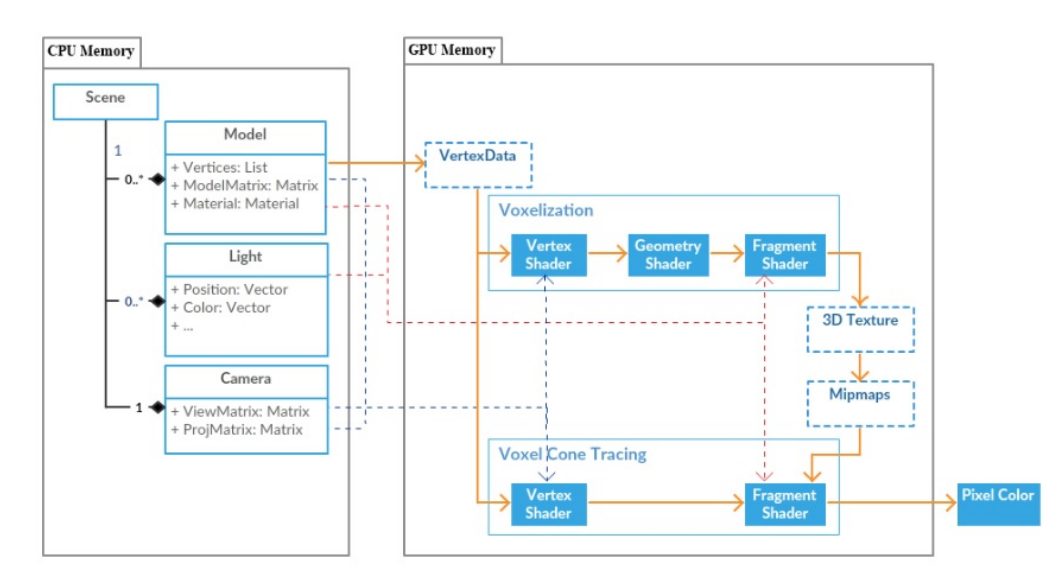

The algorithm flow can be intuitively understood as shown in the figure below:

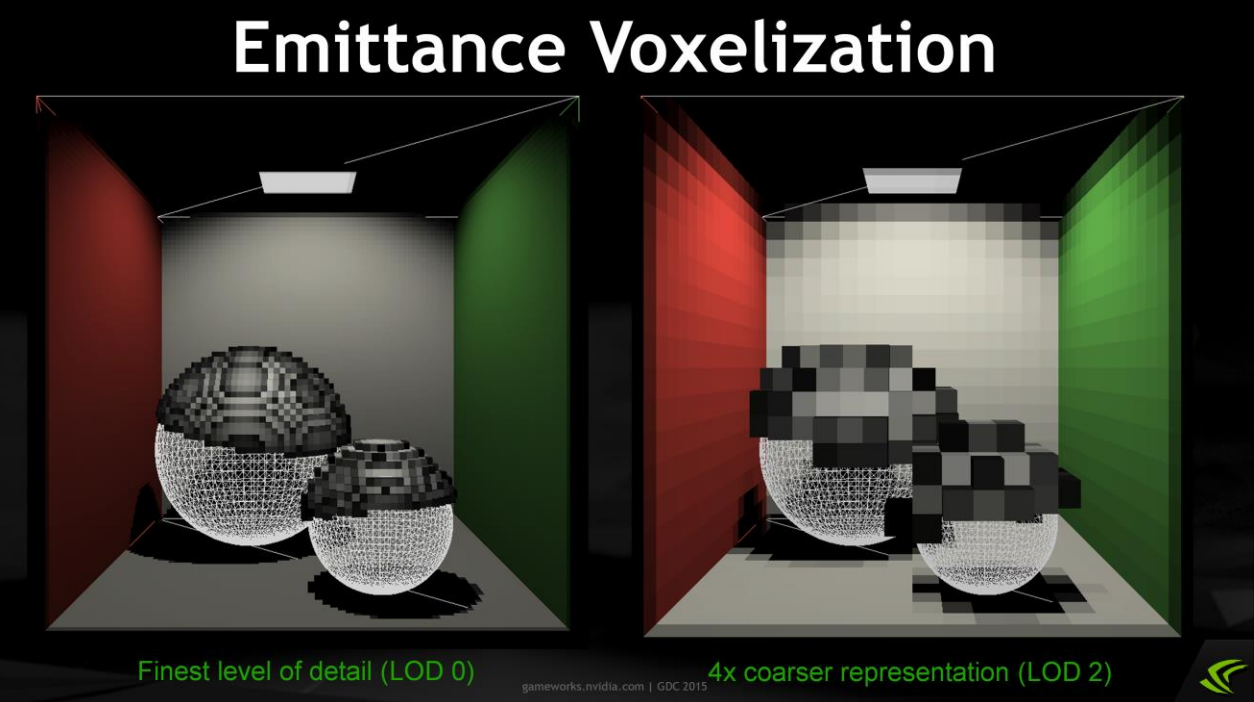

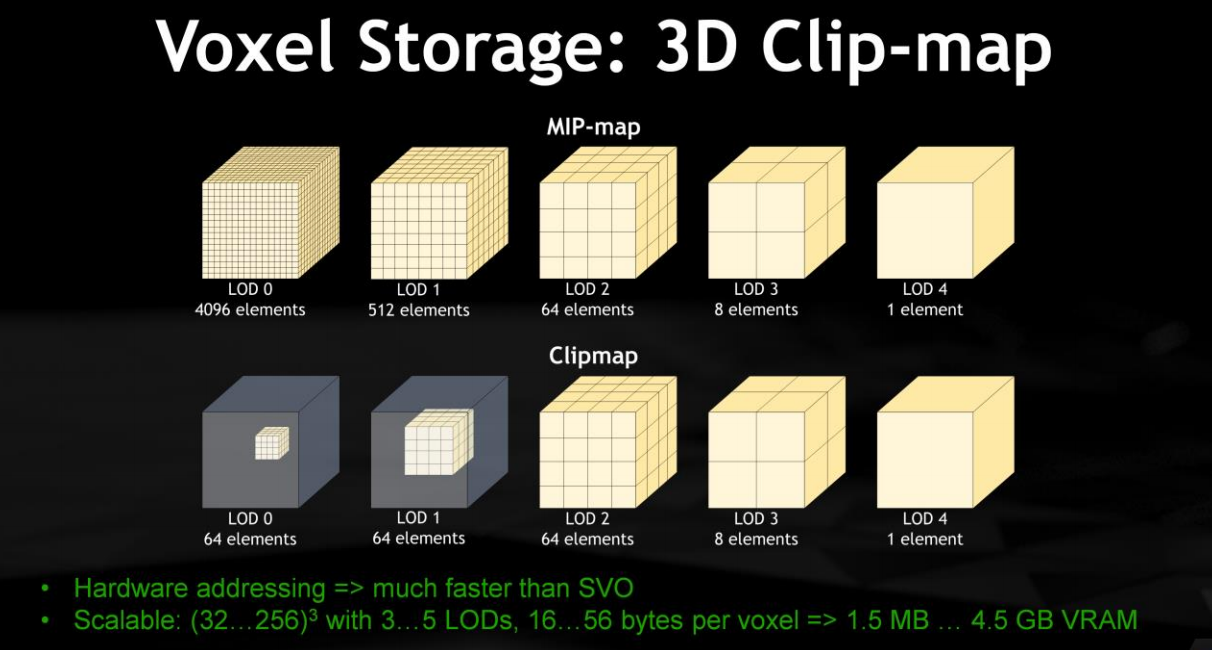

In addition, VXGI uses clipmaps. A clipmap is a storage scheme similar to a mipmap, except that the finest LOD levels store only the region around the center instead of the whole domain, so every level has the same resolution but covers a larger area than the one before it. In this algorithm the center of the clipmap is the camera, so the farther a region is from the viewpoint, the coarser its voxel representation. Because the aperture of the traced cones is adjustable, glossy reflections can be approximated by tracing a very narrow cone.
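To make the clipmap idea concrete, here is a minimal Python sketch of choosing a clipmap level for a world-space point. It assumes each level is an axis-aligned cube centred on the camera, twice as wide as the previous level and stored at the same resolution; `clipmap_level`, `voxel_size`, `base_extent` and `resolution` are hypothetical names for illustration, not part of VXGI's API.

```python
def clipmap_level(point, camera, base_extent, num_levels):
    """Return the finest clipmap level whose box contains `point`, or None.

    Hypothetical layout (an assumption of this sketch): level i is an
    axis-aligned cube centred on the camera with side base_extent * 2**i,
    and all levels share one voxel resolution.
    """
    # Max-axis (Chebyshev) distance, because every level is a cube.
    d = max(abs(p - c) for p, c in zip(point, camera))
    for level in range(num_levels):
        if d <= 0.5 * base_extent * 2 ** level:
            return level
    return None  # Outside the coarsest level.


def voxel_size(level, base_extent, resolution):
    """World-space edge length of one voxel at the given level."""
    return base_extent * 2 ** level / resolution


print(clipmap_level((20.0, 1.0, 1.0), (0.0, 0.0, 0.0), 16.0, 6))  # 2
print(voxel_size(2, 16.0, 64))  # 1.0
```

With `base_extent=16`, six levels and a resolution of 64, a point 20 units from the camera falls into level 2, whose voxels are 1 unit wide, four times coarser than those of level 0.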

However, VXGI also has some problems: voxelizing the scene (only its dynamic part, of course) costs time every frame, and the 3D textures consume a lot of video memory. As with LPV, the accuracy of the lighting depends on the resolution of the voxelization, so light leakage and other artifacts can occur.
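For a rough sense of the memory cost, the sketch below computes the size of a dense, cubic 3D texture. It assumes 4 bytes per voxel (the RGBA8 format used by the shaders in section 4) and an optional full mip chain; the function name is hypothetical.

```python
def volume_bytes(resolution, bytes_per_voxel=4, with_mips=True):
    """Bytes used by a dense cubic 3D texture, optionally with a full mip chain.

    Illustrative helper: assumes a dense (not sparse) texture.
    """
    total = 0
    size = resolution
    while True:
        total += size ** 3 * bytes_per_voxel
        if not with_mips or size == 1:
            break
        size //= 2  # Each mip level halves the edge length.
    return total


MIB = 1024 * 1024
print(volume_bytes(256, with_mips=False) / MIB)  # 64.0
print(volume_bytes(256))  # 76695844
```

A 256³ RGBA8 volume alone takes 64 MiB, and its mip chain adds roughly one seventh more (about 73 MiB in total). The 128³ grid assumed by the shaders below needs only 8 MiB at its base level.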

The above is a brief overview of VXGI. Next, let's go through the implementation step by step, with code.

1, Model voxelization

Voxelization is the basis of the whole GI algorithm, so the first step is to build a voxel representation of the scene geometry. Shaders convert the scene, which is made of triangle meshes, into this representation.

There are several ways to voxelize a scene, mainly the following:

- Directly testing voxels for intersection with the scene geometry. This method is primitive and inefficient; although it can be accelerated on the GPU, it is still awkward to use in this situation.

- CUDA-based voxelization, which also relies on the GPU for acceleration. For example, a CUDA-based rasterizer can be adapted to voxelization with small modifications.

- GPU rasterization-based voxelization. Its advantage is that it needs no special pipeline and is easy to integrate into existing games or rendering engines.

Because the algorithm has to handle dynamic scenes and dynamic light sources, the voxelization must also be updated in real time, so it should be as efficient as possible. This implementation therefore uses the third method.

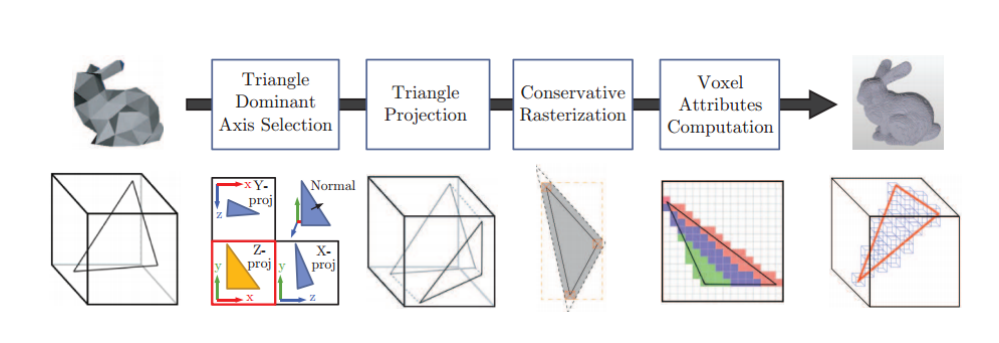

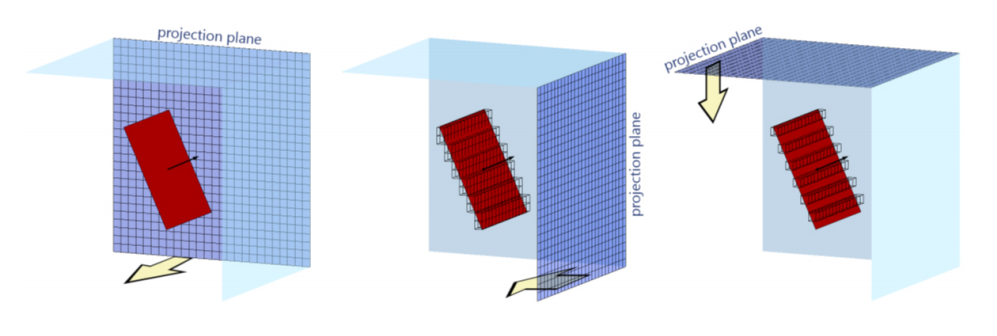

The voxelization process is shown in the following figure. Its main steps are:

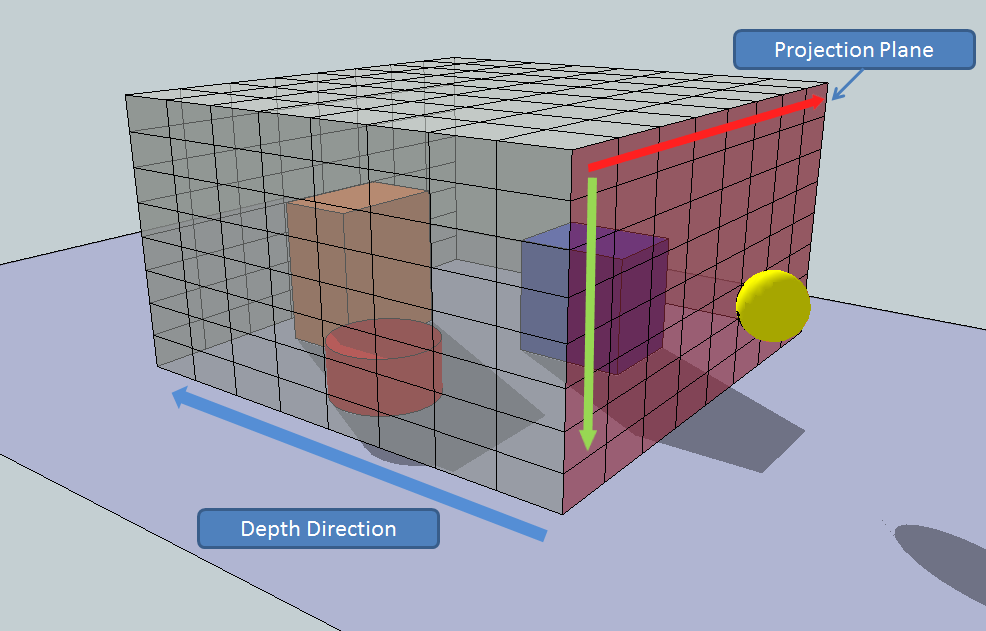

- Each triangle of the 3D mesh is rendered with an orthographic projection into a viewport whose resolution matches the resolution of the voxel grid.

- For each triangle, the projection axis that gives the largest projected area is chosen and the triangle is rasterized along it. This maximizes the number of fragments the triangle produces, so thin triangles do not slip between pixels. Each rasterized pixel corresponds to one voxel along that axis.

- The fragment shader runs for each rasterized pixel and writes the voxel data for that pixel into the 3D texture with imageStore.

- If the projections are performed separately for each projection axis, one 3D texture is obtained per axis; these are then merged into the final 3D texture, which contains the complete voxelization of the whole scene. (The shaders in section 4 skip this merge by writing every triangle straight into a single 3D texture along its dominant axis.)
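The axis choice in the second step boils down to picking the largest component of the triangle's normal. The Python sketch below mirrors that logic on the CPU (the geometry shader in section 4.1 does the same per triangle). `dominant_axis` and `project` are illustrative names, and ties are broken toward x here, which can differ from the shader's if/else order for normals with equal components.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dominant_axis(v0, v1, v2):
    """0, 1 or 2 for x, y or z: the axis along which the triangle's
    projected area is largest (largest |component| of its normal).
    Illustrative CPU version of the geometry shader's axis test."""
    e1 = tuple(b - a for a, b in zip(v0, v1))
    e2 = tuple(b - a for a, b in zip(v0, v2))
    n = [abs(c) for c in cross(e1, e2)]
    return max(range(3), key=lambda i: n[i])  # Ties go to the lower index.


def project(v, axis):
    """Orthographic projection along `axis`: drop that coordinate, as the
    geometry shader does when it builds gl_Position."""
    return tuple(c for i, c in enumerate(v) if i != axis)


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 3.0))
axis = dominant_axis(*tri)
print(axis, [project(v, axis) for v in tri])  # 1 [(0.0, 0.0), (1.0, 0.0), (0.0, 3.0)]
```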

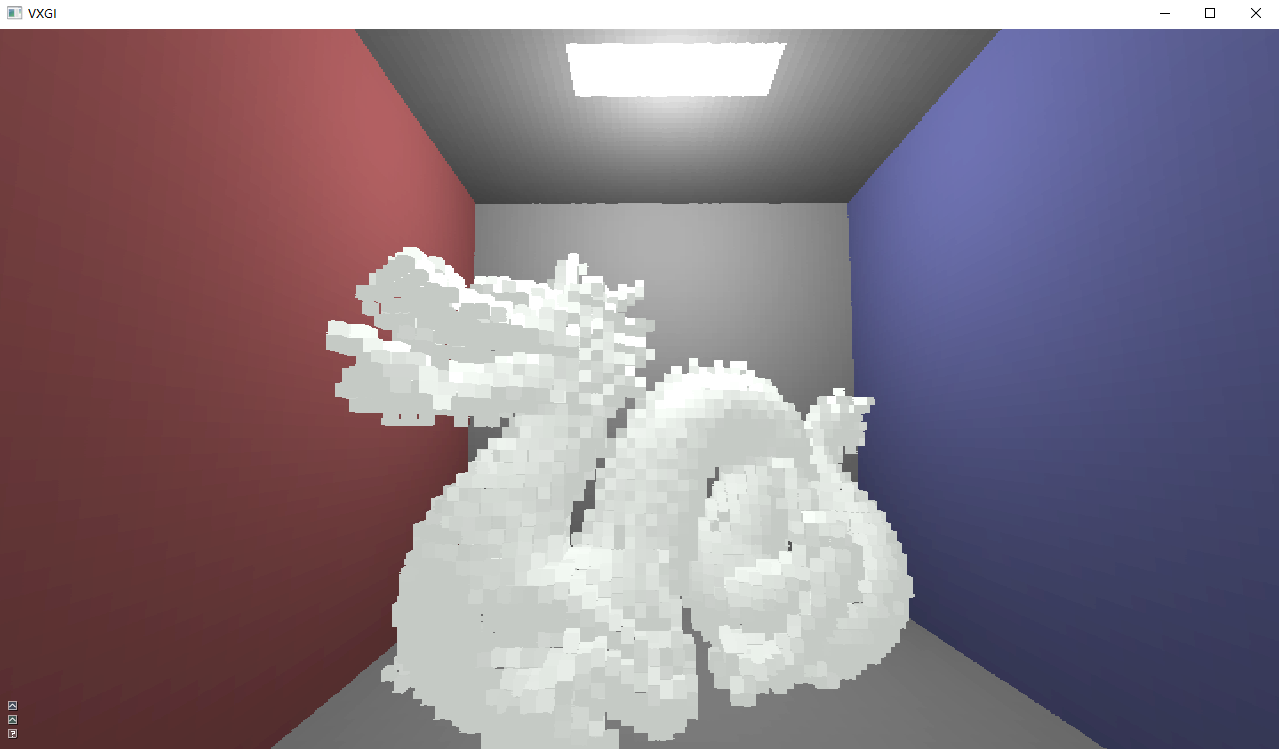

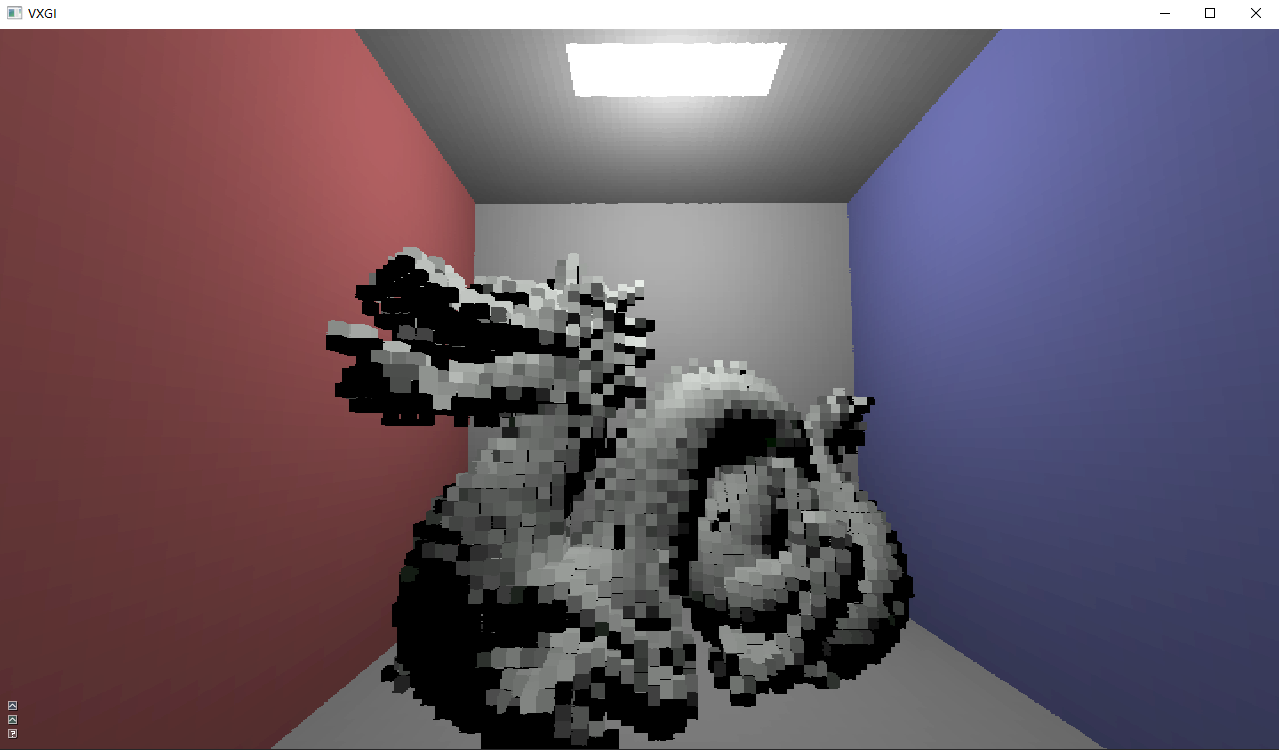

The results of voxelization using the above method are as follows:

Two points need attention here. The first is that an orthographic projection is used, and the projection direction has to be chosen.

Because voxels are essentially axis-aligned cubes, representing each triangle of the model as a set of voxels requires an orthographic projection, as shown in the following figure:

A cube has six faces, so do we need six projections? No. Under an orthographic projection, projecting from above and from below gives the same result, and the same holds for left/right and front/back, so three of the six directions can be dropped immediately. Of the remaining three axes, each triangle only needs to be rasterized along the one with the largest projected area.

The overall process is shown below:

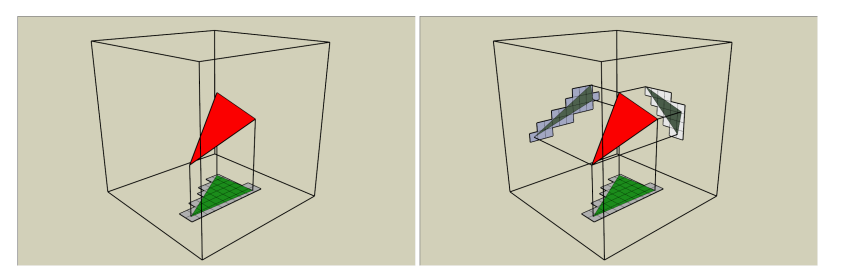

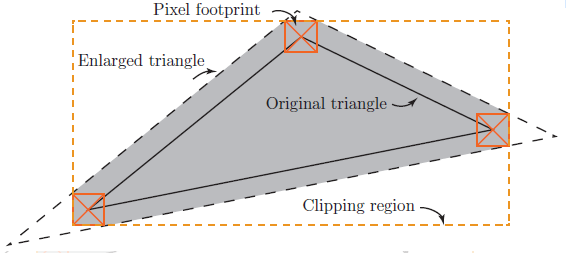

The second point is the problem of cracks, holes and light leakage.

This happens because standard rasterization only generates a fragment when a triangle covers a pixel's center, so voxels that a triangle only partially overlaps can be missed. A feasible solution is conservative rasterization: enlarge the triangle slightly in every direction, as follows:

Rasterizing the enlarged triangle then also produces fragments for those partially covered voxels, which improves the result of the voxelization.
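Below is a minimal 2D sketch of enlarging a triangle: each edge is pushed outward by a distance `d`, and the new vertices are the intersections of neighbouring shifted edges. This is one common way to do it, written in Python for clarity. In a renderer it would run in the geometry shader in clip space, and `d` would be on the order of half a pixel diagonal (an assumption here, not a value given above). The triangle must be non-degenerate.

```python
def expand_triangle(p0, p1, p2, d):
    """Push every edge of a non-degenerate 2D triangle outward by d and
    return the three vertices of the enlarged triangle (same order).
    Sketch only: d is an assumed enlargement distance."""
    pts = (p0, p1, p2)
    # Twice the signed area: positive for counter-clockwise winding.
    area2 = (p1[0] - p0[0]) * (p2[1] - p0[1]) - (p2[0] - p0[0]) * (p1[1] - p0[1])
    sign = 1.0 if area2 > 0 else -1.0
    lines = []  # Each edge as (nx, ny, c) with n . x = c and n pointing outward.
    for i in range(3):
        a, b = pts[i], pts[(i + 1) % 3]
        ex, ey = b[0] - a[0], b[1] - a[1]
        length = (ex * ex + ey * ey) ** 0.5
        nx, ny = sign * ey / length, -sign * ex / length
        lines.append((nx, ny, nx * a[0] + ny * a[1] + d))  # Shift outward by d.
    out = []
    for i in range(3):
        # Vertex i lies on edge i-1 and edge i; intersect their shifted lines.
        n1x, n1y, c1 = lines[i - 1]
        n2x, n2y, c2 = lines[i]
        det = n1x * n2y - n1y * n2x
        out.append(((c1 * n2y - n1y * c2) / det, (n1x * c2 - c1 * n2x) / det))
    return out


print(expand_triangle((0.0, 0.0), (1.0, 0.0), (0.0, 1.0), 0.1))
```

The signed area makes "outward" well defined for either winding, so the same function works for clockwise and counter-clockwise input.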

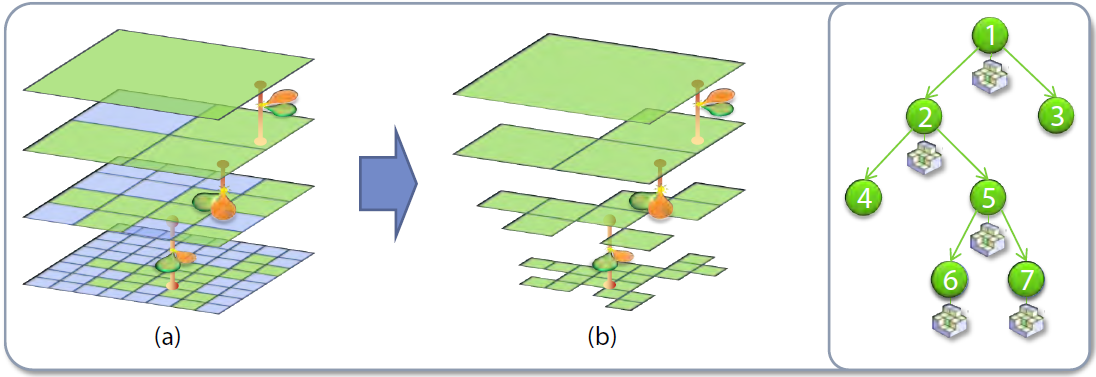

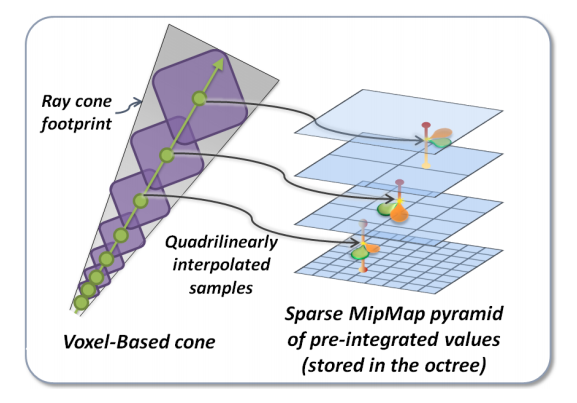

2, Construct voxel MipMap

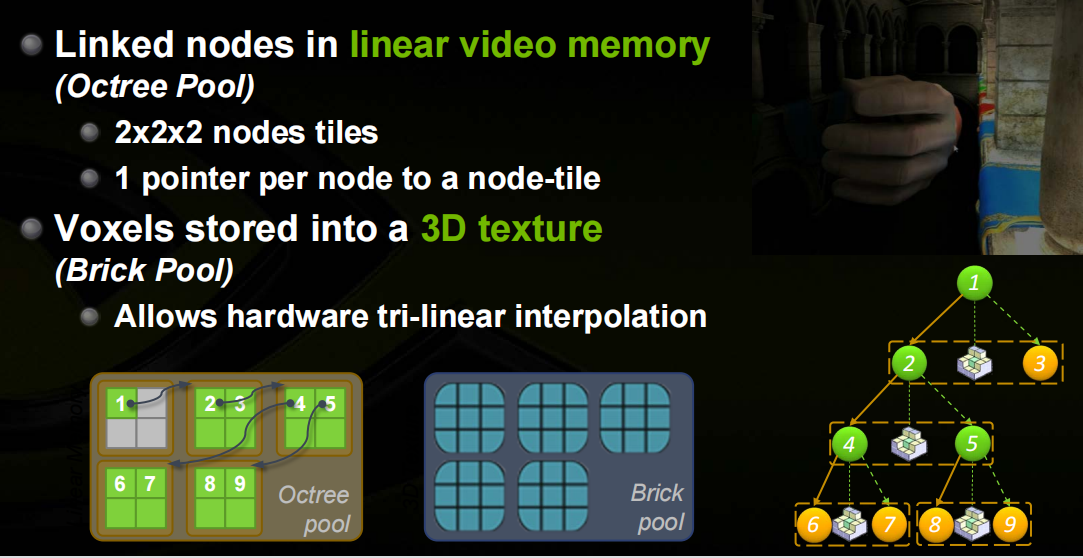

Next, we build mipmaps of the 3D voxel texture so that it can be sampled at coarser resolutions as the traced cones widen during rendering. This requires a data structure to manage the voxels. The main idea of an octree is to divide a 3D space (a cube) into eight child cubes and keep subdividing until each cube represents exactly one object (or part of one), a leaf node; it is therefore a tree that grows from the root. In our case, however, voxelization is already done, which means all leaf nodes are already known. Building the tree bottom-up, by merging every eight adjacent voxels into one and repeating until a single voxel the size of the model's AABB remains, is essentially what mipmap generation does.
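The bottom-up merge described above is a 2×2×2 box filter applied repeatedly. The Python sketch below builds one mip level from the previous one by averaging each block of eight child voxels, then repeats down to a single voxel. It assumes a cubic, power-of-two grid of RGBA tuples indexed `grid[z][y][x]`; the function names are hypothetical, and a renderer would do this on the GPU (for example with `glGenerateMipmap` or a compute shader).

```python
def downsample(grid):
    """Next mip level of a cubic voxel grid of (r, g, b, a) tuples indexed
    grid[z][y][x] (layout assumed for this sketch)."""
    n = len(grid) // 2
    parent = []
    for z in range(n):
        plane = []
        for y in range(n):
            row = []
            for x in range(n):
                children = [grid[2 * z + dz][2 * y + dy][2 * x + dx]
                            for dz in (0, 1) for dy in (0, 1) for dx in (0, 1)]
                # Box filter: average each RGBA channel over the 8 children.
                row.append(tuple(sum(c[k] for c in children) / 8.0 for k in range(4)))
            plane.append(row)
        parent.append(plane)
    return parent


def build_mip_chain(grid):
    """All levels, from the full-resolution grid down to a single voxel."""
    chain = [grid]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain


empty = (0.0, 0.0, 0.0, 0.0)
grid = [[[empty] * 4 for _ in range(4)] for _ in range(4)]
grid[0][0][0] = (1.0, 0.0, 0.0, 1.0)  # One opaque red voxel.
chain = build_mip_chain(grid)
print(len(chain), chain[1][0][0][0], chain[2][0][0][0])
# 3 (0.125, 0.0, 0.0, 0.125) (0.015625, 0.0, 0.0, 0.015625)
```

Averaging also averages opacity: a single opaque voxel becomes a parent with alpha 1/8, which is how partially covered nodes end up semi-transparent when cones sample coarser levels.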

The result looks like this:

Direct / emissive light injection:

Before that, we also need to know how to store lighting data, as shown in the figure below:

Into the voxels we bake view-independent direct lighting, which is used later to compute the indirect lighting. In essence, the light that reaches a pixel after bouncing off other surfaces is obtained by storing the direct light those surfaces receive from the light sources: each lit voxel then acts as a secondary light source for our pixel, instead of the light contributing directly. Once this is clear, it follows that we can also store the emission of luminous materials, which later acts as direct illumination coming from those materials, and that other useful data can be baked in the same way.
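As a sketch of what gets stored per voxel, the Python function below computes view-independent direct light (Lambertian, with the same attenuation constants as the shaders in section 4) plus emission, and premultiplies the result by the voxel's opacity. It is a simplified CPU model of the injection step, not the exact shader: `inject`, `reflectance`, `emission` and `opacity` are hypothetical parameters, and the normal is assumed to be unit length.

```python
import math

# Attenuation constants copied from the shaders in section 4.
DIST_FACTOR, CONSTANT, LINEAR, QUADRATIC = 1.1, 1.0, 0.0, 1.0


def attenuate(dist):
    d = dist * DIST_FACTOR
    return 1.0 / (CONSTANT + LINEAR * d + QUADRATIC * d * d)


def inject(position, normal, reflectance, emission, opacity, lights):
    """Hypothetical CPU model of one voxel's stored value.

    RGB = reflectance * direct Lambertian light + emission, then the whole
    RGBA is premultiplied by opacity. `normal` must be unit length;
    `lights` is a list of (position, color) pairs.
    """
    direct = [0.0, 0.0, 0.0]
    for light_pos, light_color in lights:
        to_light = [lp - p for lp, p in zip(light_pos, position)]
        dist = math.sqrt(sum(c * c for c in to_light))
        lambert = max(sum(n * c for n, c in zip(normal, to_light)) / dist, 0.0)
        w = lambert * attenuate(dist)
        direct = [acc + w * c for acc, c in zip(direct, light_color)]
    rgb = [r * l + e for r, l, e in zip(reflectance, direct, emission)]
    return tuple(opacity * c for c in rgb) + (opacity,)


voxel = inject((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.5, 0.5, 0.5),
               (0.0, 0.0, 0.0), 1.0, [((0.0, 0.0, 1.0), (1.0, 1.0, 1.0))])
print(voxel)
```

Nothing here depends on the camera, which is what lets the voxels be reused by every cone traced from every pixel.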

Anyone who has worked on scene management will know the octree well: it is a very common and simple space-partitioning structure, useful for speeding up collision detection and similar operations. The cone tracing algorithm here is also built on an octree-like structure, so the main question is how to build a spatial octree from the voxel data quickly. The following figure visualizes the 3D texture at different mipmap levels.

After the voxelization of the first step, the discretized scene is stored in a 3D texture. This is equivalent to having the bottom level of the octree, that is, the data of every leaf. The rest of the tree then has to be derived from these leaves, and here it is built bottom-up. A top-down construction splits each node into eight children; a bottom-up construction merges every eight children into their parent, ending at the root. Generating the mipmap levels of the 3D texture does exactly this, and each mip level corresponds to the octree at one depth, as follows:

Each texel of a mipmap level represents one voxel node of the scene. Merging texels gives the larger nodes of the level above (the next octree level up), and this continues up to the top level. For example, a 3D texture with 10 mipmap levels yields a scene-partitioning octree of depth 10, which serves as the spatial data structure for cone tracing in the next step. For GI, an RGBA value per voxel is enough.
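The relationship between texture resolution, mip count and octree depth is easy to compute. This small sketch assumes a cubic, power-of-two texture; the function names are hypothetical.

```python
def mip_level_count(resolution):
    """Mip levels (= octree depth) of a cubic power-of-two texture.
    Illustrative helper, assumes a power-of-two edge length."""
    if resolution < 1 or resolution & (resolution - 1):
        raise ValueError("resolution must be a power of two")
    return resolution.bit_length()  # 2**k -> k + 1 levels


def node_size(level, voxel_size):
    """World-space edge length of an octree node (texel) at a mip level."""
    return voxel_size * 2 ** level


print(mip_level_count(512), mip_level_count(128), node_size(3, 1 / 64))  # 10 8 0.125
```

So a 512³ texture has the 10 mip levels mentioned above, and the 128³ grid used by the shaders in section 4 has 8, with a level-3 node spanning 8 voxels (0.125 world units when a voxel is 1/64).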

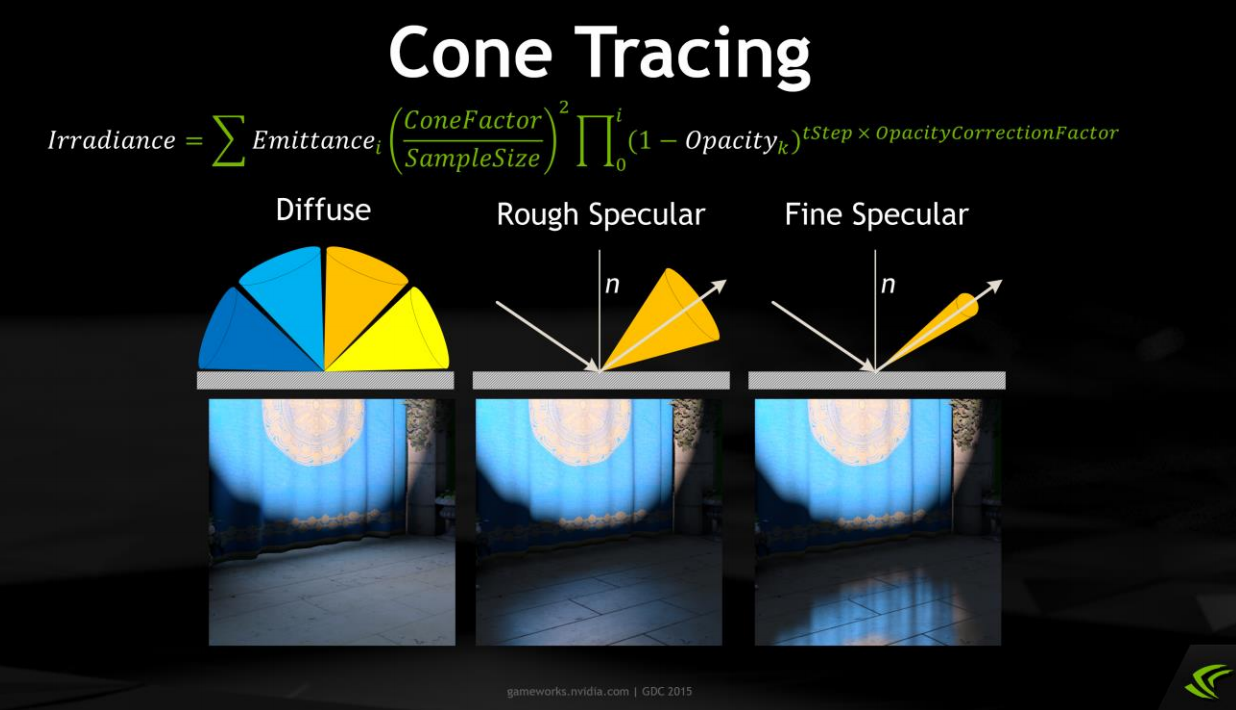

3, Cone Tracing

Finally, for each pixel on screen we trace cones to determine the indirect diffuse and indirect specular light reaching it. Optionally, cone tracing can also be used when computing the direct diffuse lighting; the implementation below uses it for soft shadows.

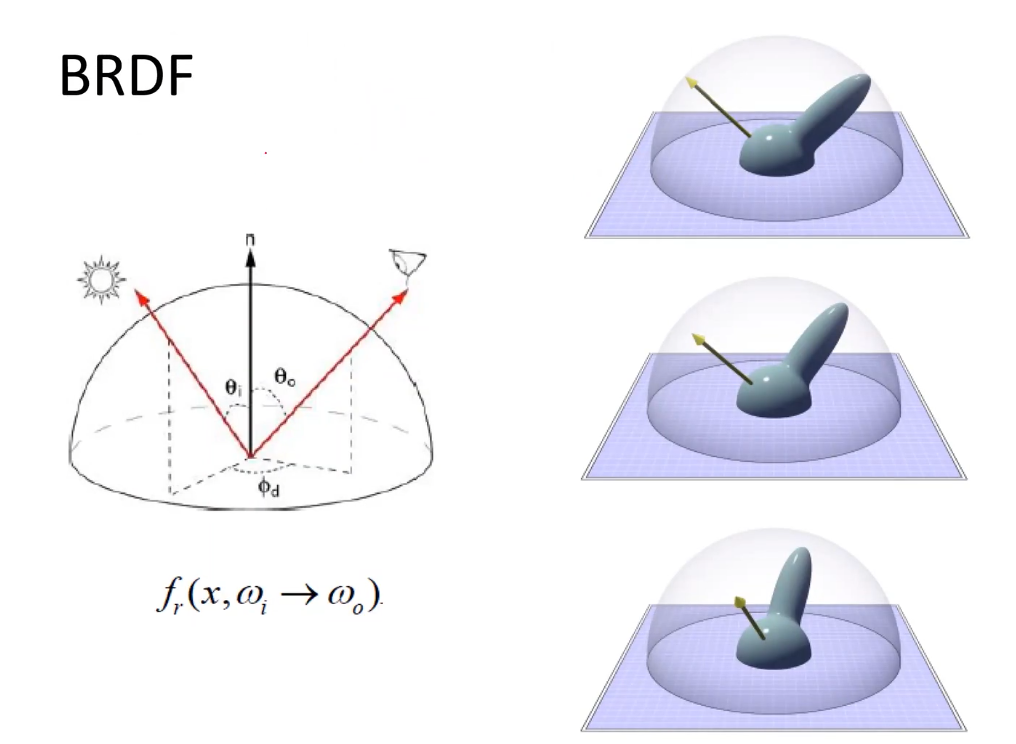

As shown in the figure below, different numbers of cones with different apertures can be used to model the material properties of a surface.

For comparison, here is a visualization of a BRDF:

The two pictures look very similar in 3D: a set of cones is essentially a discrete approximation of the BRDF lobe.

First, at every point on every surface, the hemisphere that traditional GI integrates over is divided into several independent cones. The union of these cones (with some overlaps and gaps between them) approximates the original hemisphere, and irradiance is gathered over it.

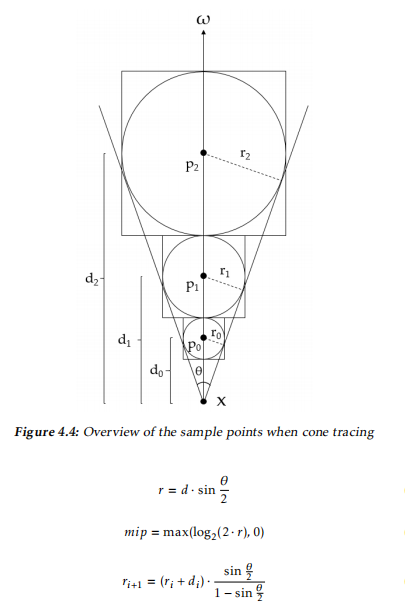

Then, for each independent Cone, the following method is used for approximation:

That is, each cone is approximated by a sequence of densely packed cubes. Cubes are used because they map directly onto octree nodes, which makes tracing through the octree convenient. The size of each cube can be derived from the cone's parameters (aperture, maximum length, and so on). Generally, each cone is split into only a small number of cubes.

Tracing each cube through the octree is also simple: compute the cube's size and pick the mipmap level that fits it best, that is, the level whose node size is closest to the cube's size. Then sample the node value at the cube's position in that mipmap level, which completes the tracing of this cube.
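Choosing the mip level for a cube reduces to one formula: the cube's size is the cone's diameter at the current distance, and level k nodes are `2**k` voxels wide, so the best-matching level is log2(diameter / voxel size). The Python sketch below assumes a cone with full opening angle `aperture`; the names are hypothetical. The fractional level it returns can be passed straight to `textureLod`, which blends between the two nearest mip levels when the sampler uses `GL_LINEAR_MIPMAP_LINEAR` filtering.

```python
import math


def cone_diameter(distance, aperture):
    """Diameter of a cone with full opening angle `aperture` (radians)
    at `distance` from its apex."""
    return 2.0 * distance * math.tan(aperture / 2.0)


def mip_level_for(diameter, voxel_size, max_level):
    """Fractional mip level whose node size (voxel_size * 2**level) best
    matches `diameter`, clamped to [0, max_level]. Illustrative helper."""
    level = math.log2(max(diameter, voxel_size) / voxel_size)
    return min(level, max_level)


VOXEL = 1 / 64  # Same voxel size as VOXEL_SIZE in the shaders of section 4.
d = cone_diameter(0.25, math.radians(60))
print(d, mip_level_for(d, VOXEL, 5.4))
```

The diffuse tracer in section 4.2 uses a variant of the same idea, `log2(1 + CONE_SPREAD * dist / VOXEL_SIZE)`, with `MIPMAP_HARDCAP` playing the role of `max_level`.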

After every cube in the cone has been traced, the irradiance accumulated along the cone direction is taken as the superposition of all the cube samples. This may seem a crude approximation, but visually it works very well. Each cube is treated as partially transparent, so the irradiance from cubes farther along the cone keeps passing through it, attenuated by its opacity.
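The "transparent cube" accumulation is front-to-back alpha compositing of premultiplied samples. Here is a minimal Python sketch of it (the name `accumulate_cone` is hypothetical). The visualization shader in section 4.3 uses exactly this rule, `color += (1 - color.a) * sample`, while the cone tracers in section 4.2 use tuned variants of it.

```python
def accumulate_cone(samples):
    """Front-to-back compositing of premultiplied (r, g, b, a) samples,
    nearest first; stops once the cone is fully occluded.
    Illustrative helper, not taken from the shaders verbatim."""
    acc = (0.0, 0.0, 0.0, 0.0)
    for s in samples:
        if acc[3] >= 1.0:
            break  # Nothing behind a fully opaque sample is visible.
        weight = 1.0 - acc[3]  # Transmittance left for this sample.
        acc = tuple(a + weight * c for a, c in zip(acc, s))
    return acc


print(accumulate_cone([(0.5, 0.0, 0.0, 0.5), (0.0, 1.0, 0.0, 1.0), (0.0, 0.0, 1.0, 1.0)]))
# (0.5, 0.5, 0.0, 1.0)
```

Once the accumulated alpha reaches 1 the cone is fully occluded and the remaining samples are skipped, which is also why the shader loops test `acc.a < 1`.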

For the exact formulas and how the mipmap levels are looked up, refer to the original paper:

4, Code implementation

First, let's look at the overall rendering architecture:

Next, the shader implementation follows the process shown on the right of the figure above:

4.1 Voxelization

- VS:

```glsl
#version 450 core

layout(location = 0) in vec3 position;
layout(location = 1) in vec3 normal;

uniform mat4 M;
uniform mat4 V;
uniform mat4 P;

out vec3 worldPositionGeom;
out vec3 normalGeom;

void main(){
    worldPositionGeom = vec3(M * vec4(position, 1));
    normalGeom = normalize(mat3(transpose(inverse(M))) * normal);
    gl_Position = P * V * vec4(worldPositionGeom, 1);
}
```

- GS:

```glsl
#version 450 core

layout(triangles) in;
layout(triangle_strip, max_vertices = 3) out;

in vec3 worldPositionGeom[];
in vec3 normalGeom[];

out vec3 worldPositionFrag;
out vec3 normalFrag;

void main(){
    // Unnormalized face normal: its largest component gives the dominant axis.
    const vec3 p1 = worldPositionGeom[1] - worldPositionGeom[0];
    const vec3 p2 = worldPositionGeom[2] - worldPositionGeom[0];
    const vec3 p = abs(cross(p1, p2));
    for(uint i = 0; i < 3; ++i){
        worldPositionFrag = worldPositionGeom[i];
        normalFrag = normalGeom[i];
        // Orthographic projection along the dominant axis: drop that coordinate.
        // World positions are expected in [-1, 1], so they can be used as clip space directly.
        if(p.z > p.x && p.z > p.y){
            gl_Position = vec4(worldPositionFrag.x, worldPositionFrag.y, 0, 1);
        } else if (p.x > p.y && p.x > p.z){
            gl_Position = vec4(worldPositionFrag.y, worldPositionFrag.z, 0, 1);
        } else {
            gl_Position = vec4(worldPositionFrag.x, worldPositionFrag.z, 0, 1);
        }
        EmitVertex();
    }
    EndPrimitive();
}
```

- PS:

```glsl
#version 450 core

// Light settings
#define POINT_LIGHT_INTENSITY 1
#define MAX_LIGHTS 1

// Attenuation settings for the light
#define DIST_FACTOR 1.1f /* Distance is multiplied by this when calculating attenuation. */
#define CONSTANT 1
#define LINEAR 0
#define QUADRATIC 1

// Returns the attenuation factor for a given distance
float attenuate(float dist){ dist *= DIST_FACTOR; return 1.0f / (CONSTANT + LINEAR * dist + QUADRATIC * dist * dist); }

struct PointLight {
    vec3 position;
    vec3 color;
};

struct Material {
    vec3 diffuseColor;
    vec3 specularColor;
    float diffuseReflectivity;
    float specularReflectivity;
    float emissivity;
    float transparency;
};

uniform Material material;
uniform PointLight pointLights[MAX_LIGHTS];
uniform int numberOfLights;
uniform vec3 cameraPosition;
layout(rgba8) uniform image3D texture3D;

in vec3 worldPositionFrag;
in vec3 normalFrag;

// Lambertian diffuse contribution of one point light (view independent).
vec3 calculatePointLight(const PointLight light){
    const vec3 direction = normalize(light.position - worldPositionFrag);
    const float distanceToLight = distance(light.position, worldPositionFrag);
    const float attenuation = attenuate(distanceToLight);
    const float d = max(dot(normalize(normalFrag), direction), 0.0f);
    return d * POINT_LIGHT_INTENSITY * attenuation * light.color;
}

// Maps [-1, 1] to [0, 1].
vec3 scaleAndBias(vec3 p) { return 0.5f * p + vec3(0.5f); }

// Returns true if p lies inside the cube [-1 - e, 1 + e]^3.
bool isInsideCube(const vec3 p, float e) { return abs(p.x) < 1 + e && abs(p.y) < 1 + e && abs(p.z) < 1 + e; }

void main(){
    vec3 color = vec3(0.0f);
    if(!isInsideCube(worldPositionFrag, 0)) return;

    // Accumulate the direct diffuse illumination from all lights.
    const uint maxLights = min(numberOfLights, MAX_LIGHTS);
    for(uint i = 0; i < maxLights; ++i) color += calculatePointLight(pointLights[i]);
    vec3 spec = material.specularReflectivity * material.specularColor;
    vec3 diff = material.diffuseReflectivity * material.diffuseColor;
    color = (diff + spec) * color + clamp(material.emissivity, 0, 1) * material.diffuseColor;

    // Write the lighting into the 3D texture.
    vec3 voxel = scaleAndBias(worldPositionFrag);
    ivec3 dim = imageSize(texture3D);
    float alpha = pow(1 - material.transparency, 4); // For soft shadows to work better with transparent materials.
    vec4 res = alpha * vec4(vec3(color), 1);
    imageStore(texture3D, ivec3(dim * voxel), res);
}
```

4.2 Voxel Cone Tracing

- VS:

```glsl
#version 450 core

layout(location = 0) in vec3 position;
layout(location = 1) in vec3 normal;

uniform mat4 M;
uniform mat4 V;
uniform mat4 P;

out vec3 worldPositionFrag;
out vec3 normalFrag;

void main(){
    worldPositionFrag = vec3(M * vec4(position, 1));
    normalFrag = normalize(mat3(transpose(inverse(M))) * normal);
    gl_Position = P * V * vec4(worldPositionFrag, 1);
}
```

- PS:

```glsl
#version 450 core

#define TSQRT2 2.828427
#define SQRT2 1.414213
#define ISQRT2 0.707106

// --------------------------------------
// Light (voxel) cone tracing settings
// --------------------------------------
#define MIPMAP_HARDCAP 5.4f /* Highest mipmap level sampled; affects the look of the result. */
#define VOXEL_SIZE (1/64.0) /* Voxel size: a 128x128x128 grid spanning [-1, 1] gives 2/128 = 1/64. */
#define SHADOWS 1 /* Shadow switch. */
#define DIFFUSE_INDIRECT_FACTOR 0.52f /* Intensity of indirect diffuse illumination. */

// --------------------------------------
// Other lighting settings
// --------------------------------------
#define SPECULAR_MODE 1 /* 0 == Blinn-Phong, 1 == reflection model. */
#define SPECULAR_FACTOR 4.0f /* Specular intensity factor. */
#define SPECULAR_POWER 65.0f /* Specular exponent (higher = sharper highlight). */
#define DIRECT_LIGHT_INTENSITY 0.96f /* (Direct) point light intensity factor. */
#define MAX_LIGHTS 1 /* Maximum number of lights. */

// Light attenuation factors. See the function "attenuate()".
#define DIST_FACTOR 1.1f /* Distance is multiplied by this when calculating attenuation. */
#define CONSTANT 1
#define LINEAR 0
#define QUADRATIC 1

// Other settings
#define GAMMA_CORRECTION 1 /* Whether gamma correction is applied. */

// Basic point light
struct PointLight {
    vec3 position;
    vec3 color;
};

// Basic material
struct Material {
    vec3 diffuseColor;
    float diffuseReflectivity;
    vec3 specularColor;
    float specularDiffusion; // "Reflective and refractive" specular diffusion.
    float specularReflectivity;
    float emissivity; // Emissive materials use the diffuse color as the emission color.
    float refractiveIndex;
    float transparency;
};

struct Settings {
    bool indirectSpecularLight; // Whether to render indirect specular reflections.
    bool indirectDiffuseLight; // Whether to render indirect diffuse light.
    bool directLight; // Whether to render direct light.
    bool shadows; // Whether to render shadows.
};

uniform Material material;
uniform Settings settings;
uniform PointLight pointLights[MAX_LIGHTS];
uniform int numberOfLights; // Number of lights.
uniform vec3 cameraPosition; // Camera position in world space.
uniform int state; // Not used in this shader.
uniform sampler3D texture3D; // Voxel texture.

in vec3 worldPositionFrag;
in vec3 normalFrag;

out vec4 color;

// Assigned at the start of main(): GLSL requires global initializers to be constant expressions.
vec3 normal;
float MAX_DISTANCE;

// Returns the attenuation factor for a given distance.
float attenuate(float dist){ dist *= DIST_FACTOR; return 1.0f / (CONSTANT + LINEAR * dist + QUADRATIC * dist * dist); }

// Returns a vector orthogonal to u.
vec3 orthogonal(vec3 u){
    u = normalize(u);
    vec3 v = vec3(0.99146, 0.11664, 0.05832); // Pick any normalized vector.
    return abs(dot(u, v)) > 0.99999f ? cross(u, vec3(0, 1, 0)) : cross(u, v);
}

// Maps [-1, 1] to [0, 1].
vec3 scaleAndBias(const vec3 p) { return 0.5f * p + vec3(0.5f); }

// Returns true if the point p lies inside the cube [-1 - e, 1 + e]^3.
bool isInsideCube(const vec3 p, float e) { return abs(p.x) < 1 + e && abs(p.y) < 1 + e && abs(p.z) < 1 + e; }

// Returns a soft shadow blend factor by tracing a shadow cone toward the light.
// Each step takes 2 samples, which is expensive.
float traceShadowCone(vec3 from, vec3 direction, float targetDistance){
    from += normal * 0.05f;
    float acc = 0;
    float dist = 3 * VOXEL_SIZE;
    // Stop 16 voxels before reaching the light.
    const float STOP = targetDistance - 16 * VOXEL_SIZE;
    while(dist < STOP && acc < 1){
        vec3 c = from + dist * direction;
        if(!isInsideCube(c, 0)) break;
        c = scaleAndBias(c);
        float l = pow(dist, 2); // Squared distance: the sampled footprint grows quadratically.
        float s1 = 0.062 * textureLod(texture3D, c, 1 + 0.75 * l).a;
        float s2 = 0.135 * textureLod(texture3D, c, 4.5 * l).a;
        float s = s1 + s2;
        acc += (1 - acc) * s;
        dist += 0.9 * VOXEL_SIZE * (1 + 0.05 * l);
    }
    return 1 - pow(smoothstep(0, 1, acc * 1.4), 1.0 / 1.4);
}

// Traces a diffuse voxel cone.
vec3 traceDiffuseVoxelCone(const vec3 from, vec3 direction){
    direction = normalize(direction);
    const float CONE_SPREAD = 0.325;
    vec4 acc = vec4(0.0f);
    // Start distance: controls light leakage on closed surfaces.
    // Lower values can give poor results when shadow cone tracing is used.
    float dist = 0.1953125;
    // Trace.
    while(dist < SQRT2 && acc.a < 1){
        vec3 c = scaleAndBias(from + dist * direction);
        float l = (1 + CONE_SPREAD * dist / VOXEL_SIZE);
        float level = log2(l);
        float ll = (level + 1) * (level + 1);
        vec4 voxel = textureLod(texture3D, c, min(MIPMAP_HARDCAP, level));
        acc += 0.075 * ll * voxel * pow(1 - voxel.a, 2);
        dist += ll * VOXEL_SIZE * 2;
    }
    return pow(acc.rgb * 2.0, vec3(1.5));
}

// Traces a specular voxel cone.
vec3 traceSpecularVoxelCone(vec3 from, vec3 direction){
    direction = normalize(direction);
    const float OFFSET = 8 * VOXEL_SIZE;
    const float STEP = VOXEL_SIZE;
    from += OFFSET * normal;
    vec4 acc = vec4(0.0f);
    float dist = OFFSET;
    // Trace.
    while(dist < MAX_DISTANCE && acc.a < 1){
        vec3 c = from + dist * direction;
        if(!isInsideCube(c, 0)) break;
        c = scaleAndBias(c);
        float level = 0.1 * material.specularDiffusion * log2(1 + dist / VOXEL_SIZE);
        vec4 voxel = textureLod(texture3D, c, min(level, MIPMAP_HARDCAP));
        float f = 1 - acc.a;
        acc.rgb += 0.25 * (1 + material.specularDiffusion) * voxel.rgb * voxel.a * f;
        acc.a += 0.25 * voxel.a * f;
        dist += STEP * (1.0f + 0.125f * level);
    }
    return 1.0 * pow(material.specularDiffusion + 1, 0.8) * acc.rgb;
}

// Calculates indirect diffuse light using voxel cone tracing.
// Nine cones are used: one along the normal, four side cones and four corner cones.
vec3 indirectDiffuseLight(){
    const float ANGLE_MIX = 0.5f; // Angle blend (1.0f => orthogonal direction, 0.0f => normal direction).
    const float w[3] = {1.0, 1.0, 1.0}; // Cone weights.

    // Tangent basis (ortho, ortho2) perpendicular to the normal.
    const vec3 ortho = normalize(orthogonal(normal));
    const vec3 ortho2 = normalize(cross(ortho, normal));

    // Diagonal directions between the two tangent vectors.
    const vec3 corner = 0.5f * (ortho + ortho2);
    const vec3 corner2 = 0.5f * (ortho - ortho2);

    // Start position of the trace (offset along the normal).
    const vec3 N_OFFSET = normal * (1 + 4 * ISQRT2) * VOXEL_SIZE;
    const vec3 C_ORIGIN = worldPositionFrag + N_OFFSET;

    // Accumulated indirect diffuse light.
    vec3 acc = vec3(0);

    // We offset forward in the normal direction and backward in the cone direction.
    // Backward in the cone direction improves GI, while forward along the normal removes artifacts.
    const float CONE_OFFSET = -0.01;

    // Trace the front cone (along the normal).
    acc += w[0] * traceDiffuseVoxelCone(C_ORIGIN + CONE_OFFSET * normal, normal);

    // Trace 4 side cones.
    const vec3 s1 = mix(normal, ortho, ANGLE_MIX);
    const vec3 s2 = mix(normal, -ortho, ANGLE_MIX);
    const vec3 s3 = mix(normal, ortho2, ANGLE_MIX);
    const vec3 s4 = mix(normal, -ortho2, ANGLE_MIX);
    acc += w[1] * traceDiffuseVoxelCone(C_ORIGIN + CONE_OFFSET * ortho, s1);
    acc += w[1] * traceDiffuseVoxelCone(C_ORIGIN - CONE_OFFSET * ortho, s2);
    acc += w[1] * traceDiffuseVoxelCone(C_ORIGIN + CONE_OFFSET * ortho2, s3);
    acc += w[1] * traceDiffuseVoxelCone(C_ORIGIN - CONE_OFFSET * ortho2, s4);

    // Trace 4 corner cones.
    const vec3 c1 = mix(normal, corner, ANGLE_MIX);
    const vec3 c2 = mix(normal, -corner, ANGLE_MIX);
    const vec3 c3 = mix(normal, corner2, ANGLE_MIX);
    const vec3 c4 = mix(normal, -corner2, ANGLE_MIX);
    acc += w[2] * traceDiffuseVoxelCone(C_ORIGIN + CONE_OFFSET * corner, c1);
    acc += w[2] * traceDiffuseVoxelCone(C_ORIGIN - CONE_OFFSET * corner, c2);
    acc += w[2] * traceDiffuseVoxelCone(C_ORIGIN + CONE_OFFSET * corner2, c3);
    acc += w[2] * traceDiffuseVoxelCone(C_ORIGIN - CONE_OFFSET * corner2, c4);

    // Return the result.
    return DIFFUSE_INDIRECT_FACTOR * material.diffuseReflectivity * acc * (material.diffuseColor + vec3(0.001f));
}

// Calculates indirect specular light using voxel cone tracing.
vec3 indirectSpecularLight(vec3 viewDirection){
    const vec3 reflection = normalize(reflect(viewDirection, normal));
    return material.specularReflectivity * material.specularColor * traceSpecularVoxelCone(worldPositionFrag, reflection);
}

// Calculates refracted light using voxel cone tracing.
vec3 indirectRefractiveLight(vec3 viewDirection){
    const vec3 refraction = refract(viewDirection, normal, 1.0 / material.refractiveIndex);
    const vec3 cmix = mix(material.specularColor, 0.5 * (material.specularColor + vec3(1)), material.transparency);
    return cmix * traceSpecularVoxelCone(worldPositionFrag, refraction);
}

// Calculates the diffuse and specular direct light from a given point light.
// Uses shadow cone tracing for soft shadows.
vec3 calculateDirectLight(const PointLight light, const vec3 viewDirection){
    vec3 lightDirection = light.position - worldPositionFrag;
    const float distanceToLight = length(lightDirection);
    lightDirection = lightDirection / distanceToLight;
    const float lightAngle = dot(normal, lightDirection);

    // --------------------
    // Diffuse lighting.
    // --------------------
    float diffuseAngle = max(lightAngle, 0.0f); // Lambertian.

    // --------------------
    // Specular lighting.
    // --------------------
#if (SPECULAR_MODE == 0) /* Blinn-Phong. */
    // viewDirection points from the camera to the surface, so the direction toward the eye is -viewDirection.
    const vec3 halfwayVector = normalize(lightDirection - viewDirection);
    float specularAngle = max(dot(normal, halfwayVector), 0.0f);
#endif
#if (SPECULAR_MODE == 1) /* Perfect reflection. */
    const vec3 reflection = normalize(reflect(viewDirection, normal));
    float specularAngle = max(0.0f, dot(reflection, lightDirection));
#endif

    float refractiveAngle = 0;
    if(material.transparency > 0.01){
        vec3 refraction = refract(viewDirection, normal, 1.0 / material.refractiveIndex);
        refractiveAngle = max(0.0f, material.transparency * dot(refraction, lightDirection));
    }

    // --------------------
    // Shadows.
    // --------------------
    float shadowBlend = 1;
#if (SHADOWS == 1)
    if(diffuseAngle * (1.0f - material.transparency) > 0 && settings.shadows)
        shadowBlend = traceShadowCone(worldPositionFrag, lightDirection, distanceToLight);
#endif

    // --------------------
    // Combine the lighting.
    // --------------------
    diffuseAngle = min(shadowBlend, diffuseAngle);
    specularAngle = min(shadowBlend, max(specularAngle, refractiveAngle));
    const float df = 1.0f / (1.0f + 0.25f * material.specularDiffusion); // Diffusion factor.
    const float specular = SPECULAR_FACTOR * pow(specularAngle, df * SPECULAR_POWER);
    const float diffuse = diffuseAngle * (1.0f - material.transparency);

    const vec3 diff = material.diffuseReflectivity * material.diffuseColor * diffuse;
    const vec3 spec = material.specularReflectivity * material.specularColor * specular;
    const vec3 total = light.color * (diff + spec);
    return attenuate(distanceToLight) * total;
}

// Accumulates the direct light (diffuse and specular) from all point lights.
vec3 directLight(vec3 viewDirection){
    vec3 direct = vec3(0.0f);
    const uint maxLights = min(numberOfLights, MAX_LIGHTS);
    for(uint i = 0; i < maxLights; ++i) direct += calculateDirectLight(pointLights[i], viewDirection);
    direct *= DIRECT_LIGHT_INTENSITY;
    return direct;
}

void main(){
    normal = normalize(normalFrag);
    MAX_DISTANCE = distance(vec3(abs(worldPositionFrag)), vec3(-1));

    color = vec4(0, 0, 0, 1);
    const vec3 viewDirection = normalize(worldPositionFrag - cameraPosition);

    // Indirect diffuse light.
    if(settings.indirectDiffuseLight && material.diffuseReflectivity * (1.0f - material.transparency) > 0.01f)
        color.rgb += indirectDiffuseLight();

    // Indirect specular light (glossy reflections).
    if(settings.indirectSpecularLight && material.specularReflectivity * (1.0f - material.transparency) > 0.01f)
        color.rgb += indirectSpecularLight(viewDirection);

    // Emissivity.
    color.rgb += material.emissivity * material.diffuseColor;

    // Transparency.
    if(material.transparency > 0.01f)
        color.rgb = mix(color.rgb, indirectRefractiveLight(viewDirection), material.transparency);

    // Direct light.
    if(settings.directLight)
        color.rgb += directLight(viewDirection);

#if (GAMMA_CORRECTION == 1)
    color.rgb = pow(color.rgb, vec3(1.0 / 2.2));
#endif
}
```
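To see the directions of the nine cones that `indirectDiffuseLight()` traces, the Python sketch below rebuilds them on the CPU with the same `orthogonal()` helper and `ANGLE_MIX = 0.5`. It is a checking aid, not part of the renderer; function names mirror the shader where possible and are otherwise hypothetical. Because `mix` does not keep vectors at unit length, the sketch normalizes each result, as `traceDiffuseVoxelCone` does.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def mix(a, b, t):
    """GLSL mix(): a * (1 - t) + b * t."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def orthogonal(u):
    """Same construction as orthogonal() in the shader."""
    u = normalize(u)
    v = (0.99146, 0.11664, 0.05832)
    if abs(sum(x * y for x, y in zip(u, v))) > 0.99999:
        return cross(u, (0.0, 1.0, 0.0))
    return cross(u, v)


def diffuse_cone_directions(normal, angle_mix=0.5):
    """Unit directions of the nine diffuse cones in the shader's order:
    normal, 4 side cones, 4 corner cones (hypothetical helper)."""
    n = normalize(normal)
    ortho = normalize(orthogonal(n))
    ortho2 = normalize(cross(ortho, n))
    corner = tuple(0.5 * (a + b) for a, b in zip(ortho, ortho2))
    corner2 = tuple(0.5 * (a - b) for a, b in zip(ortho, ortho2))
    dirs = [n]
    for t in (ortho, ortho2, corner, corner2):
        for s in (1.0, -1.0):
            dirs.append(normalize(mix(n, tuple(s * c for c in t), angle_mix)))
    return dirs


for d in diffuse_cone_directions((0.0, 0.0, 1.0)):
    print(round(math.degrees(math.acos(min(1.0, d[2]))), 2))
```

For a normal along +z this prints the angle of each cone from the normal: 0.0, then 45.0 four times, then 35.26 four times. The corner cones lean less because the corner vectors have length √2/2 rather than 1 before mixing.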

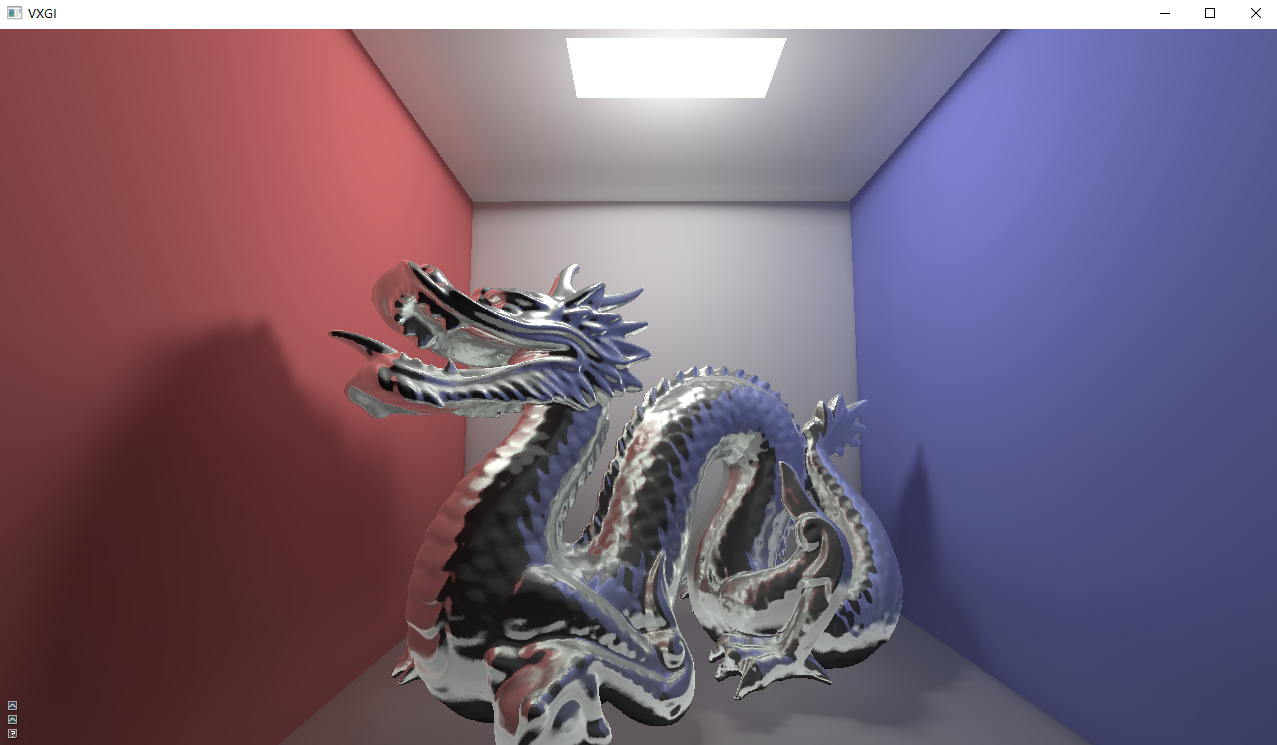

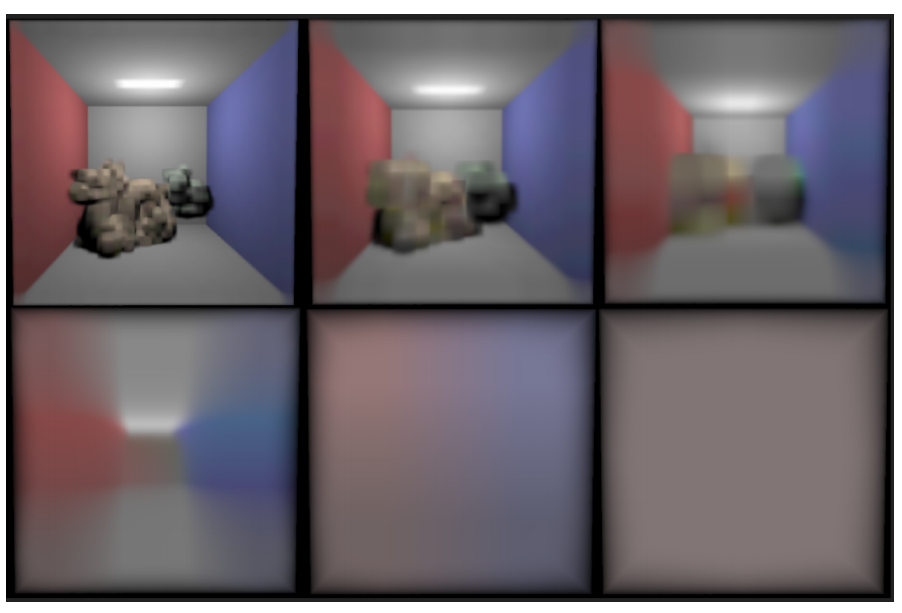

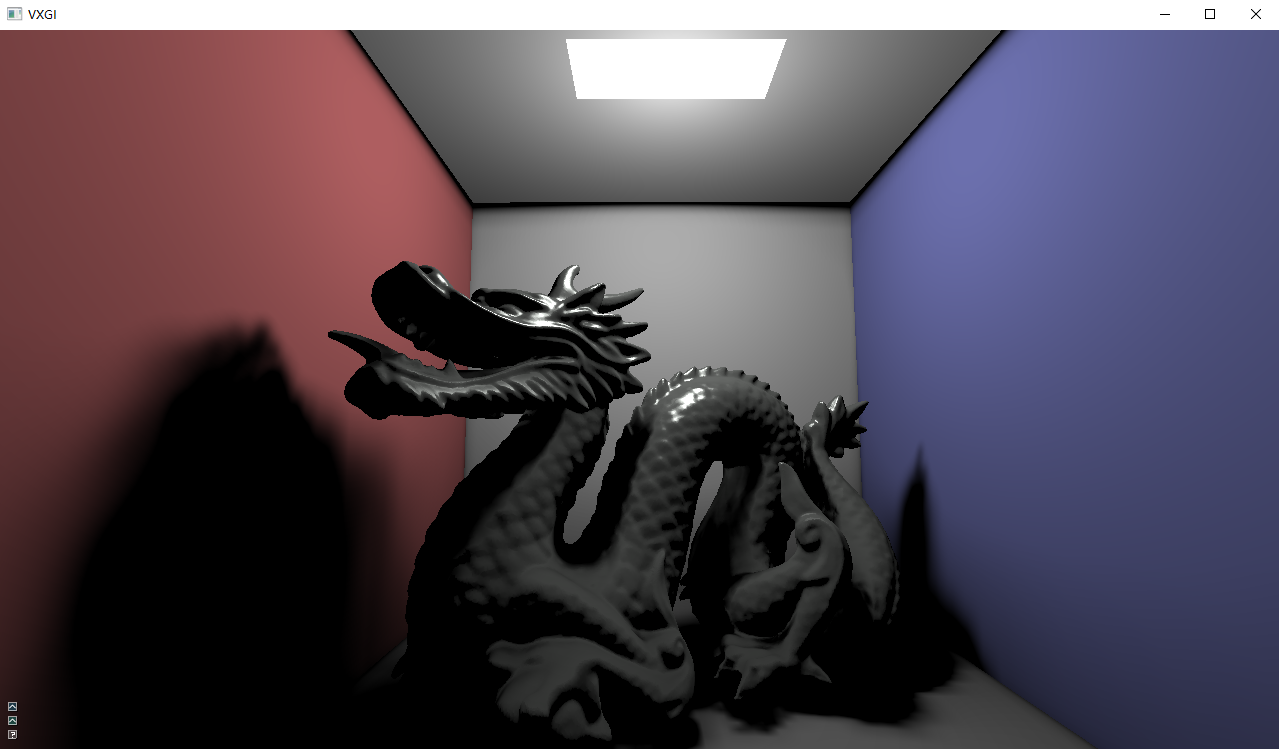

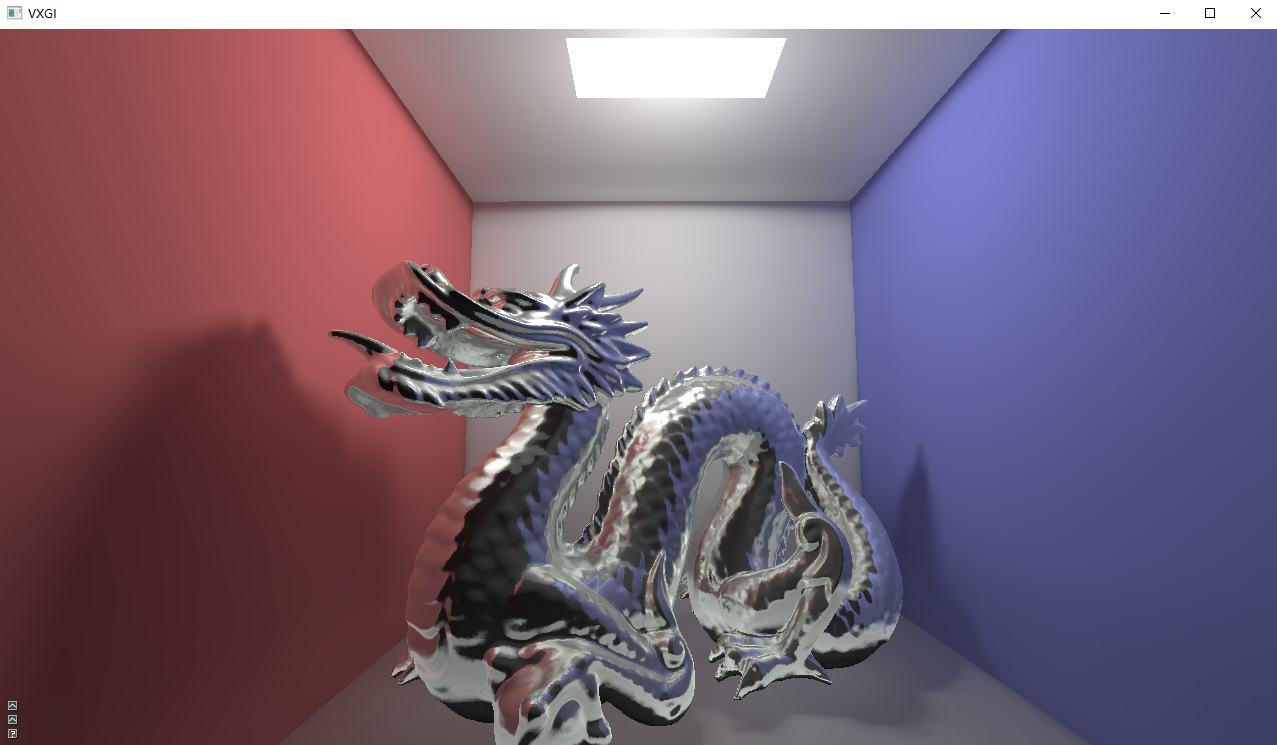

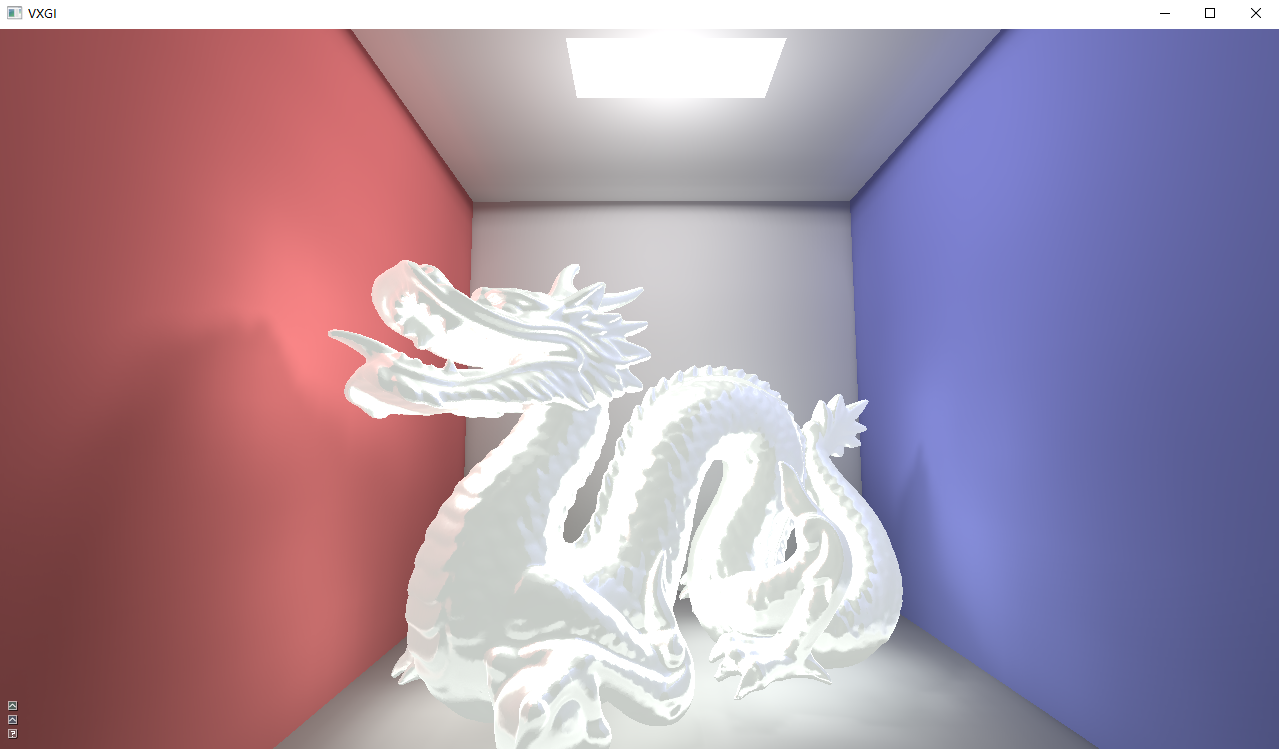

That is all the shader code for this VXGI implementation. The results are as follows.

Direct illumination:

Indirect illumination, diffuse:

Indirect illumination, specular reflection:

Everything combined, with soft shadows:

We can also give object materials emission, which renders as follows:

4.3 voxel_visualization (voxel visualization)

- VS (position pass: renders the unit cube and outputs world-space positions):

```glsl
#version 450 core

layout(location = 0) in vec3 position;

uniform mat4 M;
uniform mat4 V;
uniform mat4 P;

out vec3 worldPosition;

void main(){
    worldPosition = vec3(M * vec4(position, 1));
    gl_Position = P * V * vec4(worldPosition, 1);
}
```

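- PS:

```glsl
#version 450 core

in vec3 worldPosition;
out vec4 color;

// Writes the world-space position of the cube face; the ray-marching pass reads it back as a ray endpoint.
void main(){ color = vec4(worldPosition, 1.0); }
```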

- VS (full-screen quad for the ray-marching pass):

```glsl
#version 450 core

uniform mat4 V; // Not used in this shader.
layout(location = 0) in vec3 position;
out vec2 textureCoordinateFrag;

// Maps [-1, 1] to [0, 1].
vec2 scaleAndBias(vec2 p) { return 0.5f * p + vec2(0.5f); }

void main(){
    textureCoordinateFrag = scaleAndBias(position.xy);
    gl_Position = vec4(position, 1);
}
```

- PS:

```glsl
// Fragment shader that visualizes the 3D texture with simple ray marching.
#version 450 core

#define INV_STEP_LENGTH (1.0f/STEP_LENGTH)
#define STEP_LENGTH 0.005f

uniform sampler2D textureBack; // FBO holding the back faces of the unit cube.
uniform sampler2D textureFront; // FBO holding the front faces of the unit cube.
uniform sampler3D texture3D; // Voxel texture.
uniform vec3 cameraPosition; // Camera position in world space.
uniform int state = 0; // Mipmap level to sample.

in vec2 textureCoordinateFrag;
out vec4 color;

// Maps [-1, 1] to [0, 1].
vec3 scaleAndBias(vec3 p) { return 0.5f * p + vec3(0.5f); }

// Returns true if p lies inside the cube [-1 - e, 1 + e]^3 centred on (0, 0, 0).
bool isInsideCube(vec3 p, float e) { return abs(p.x) < 1 + e && abs(p.y) < 1 + e && abs(p.z) < 1 + e; }

void main() {
    const float mipmapLevel = state;

    // Initialize the ray: start at the camera if it is inside the cube, otherwise at the cube's front face.
    const vec3 origin = isInsideCube(cameraPosition, 0.2f) ?
        cameraPosition : texture(textureFront, textureCoordinateFrag).xyz;
    vec3 direction = texture(textureBack, textureCoordinateFrag).xyz - origin;
    const uint numberOfSteps = uint(INV_STEP_LENGTH * length(direction));
    direction = normalize(direction);

    // Trace, compositing front to back.
    color = vec4(0.0f);
    for(uint i = 0; i < numberOfSteps && color.a < 0.99f; ++i) {
        const vec3 currentPoint = origin + STEP_LENGTH * i * direction;
        const vec3 coordinate = scaleAndBias(currentPoint);
        vec4 currentSample = textureLod(texture3D, coordinate, mipmapLevel);
        color += (1.0f - color.a) * currentSample;
    }
    color.rgb = pow(color.rgb, vec3(1.0 / 2.2));
}
```

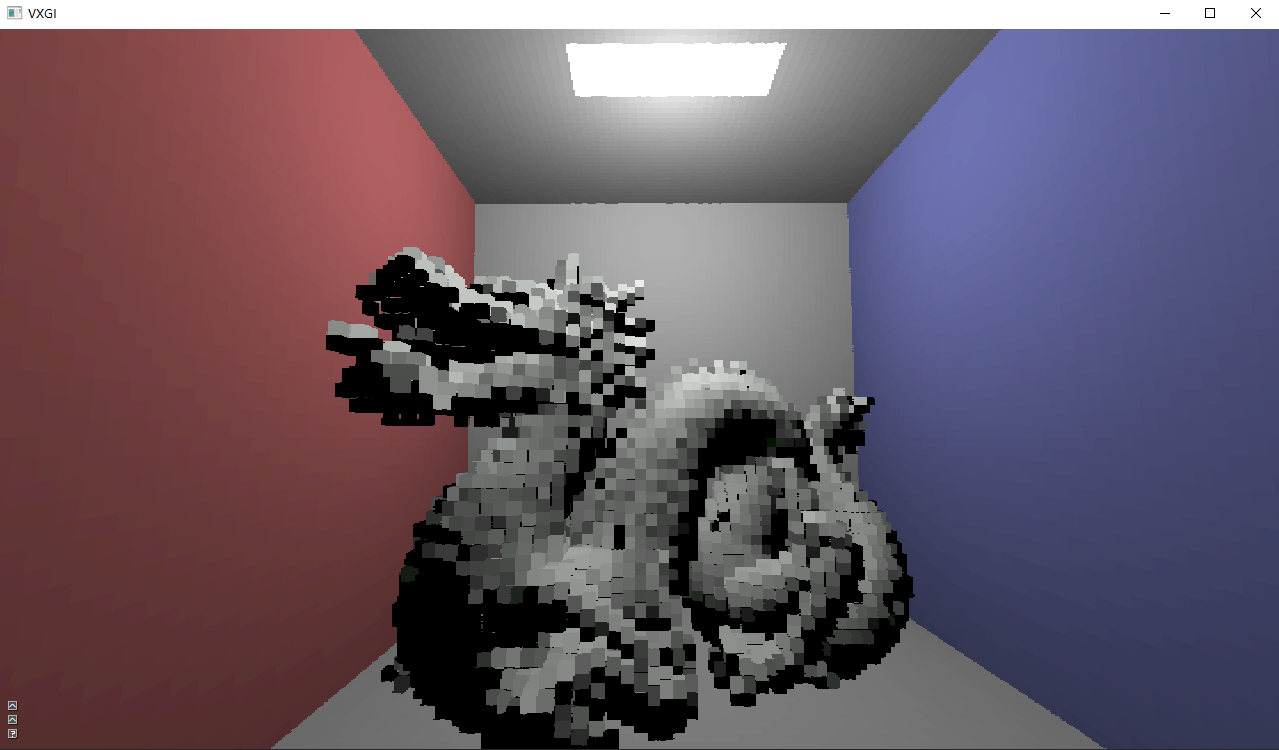

The voxels visible when executing this shader are as follows:

Let's take a look at the voxels that emit light: