Go Concurrency: goroutine and channel

You are probably already familiar with processes and threads. To get the most out of multi-core processors, every language offers its own concurrency tools, and Go is no exception. Go differs from many other languages, though: where they make you create, control, and allocate threads yourself, Go leaves most of that work to its runtime.

goroutine overview

In Go, this mechanism is called a goroutine. In fact, every Go program already uses one: when the program starts, the runtime creates a goroutine that runs the main() function.

We decide when to start goroutines, but their scheduling and management are handled by the Go runtime. The runtime distributes the goroutines across the available CPU cores, so we don't need to deal with thread scheduling or context switching ourselves.
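To see that main() already runs in a goroutine, and how many CPUs the runtime can spread goroutines over, the standard runtime package provides a few query functions. The sketch below uses them; report is a hypothetical helper name. In a simple program the goroutine count is typically 1, and GOMAXPROCS usually matches the number of CPUs unless the environment overrides it.

```go
package main

import (
	"fmt"
	"runtime"
)

// report is a hypothetical helper returning the current number of goroutines,
// the number of logical CPUs, and the current GOMAXPROCS setting.
func report() (goroutines, cpus, procs int) {
	// GOMAXPROCS(0) only queries the setting; it does not change it.
	return runtime.NumGoroutine(), runtime.NumCPU(), runtime.GOMAXPROCS(0)
}

func main() {
	// Even without any `go` statement, main() is already running in a goroutine.
	g, c, p := report()
	fmt.Println("goroutines:", g, "CPUs:", c, "GOMAXPROCS:", p)
}
```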

goroutine usage

Goroutines are easy to use: put the keyword go in front of a function call, and that call runs in a new goroutine. Let's try it with an example, first without goroutines.

demo1.go (without goroutine)

```go
package main

import (
	"fmt"
	"time"
)

func goroutineEmp(i int) {
	fmt.Println("Hello, i m ", i)
	time.Sleep(time.Second)
}

func main() {
	startTime := time.Now()
	for i := 0; i < 10; i++ {
		goroutineEmp(i) // called sequentially: each call sleeps for 1s
	}
	fmt.Println("============")
	spendTime := time.Since(startTime)
	fmt.Println("Spend Time:", spendTime)
}
```

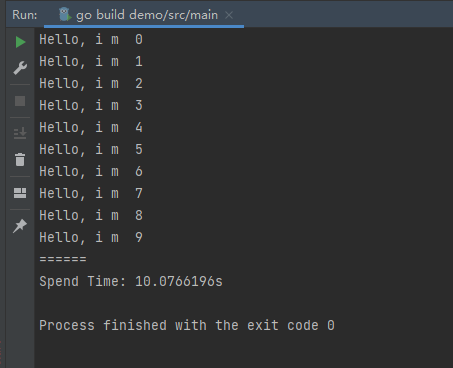

Output

demo1.go (with goroutine)

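```go
package main

import (
	"fmt"
	"time"
)

func goroutineEmp(i int) {
	fmt.Println("Hello, i m ", i)
	time.Sleep(time.Second)
	fmt.Println("wake up!")
}

func main() {
	startTime := time.Now()
	for i := 0; i < 10; i++ {
		go goroutineEmp(i) // starts a new goroutine and returns immediately
	}
	fmt.Println("============")
	spendTime := time.Since(startTime)
	fmt.Println("Spend Time:", spendTime)
	// main returns here without waiting; the program exits and any
	// goroutines that are still sleeping are stopped.
}
```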

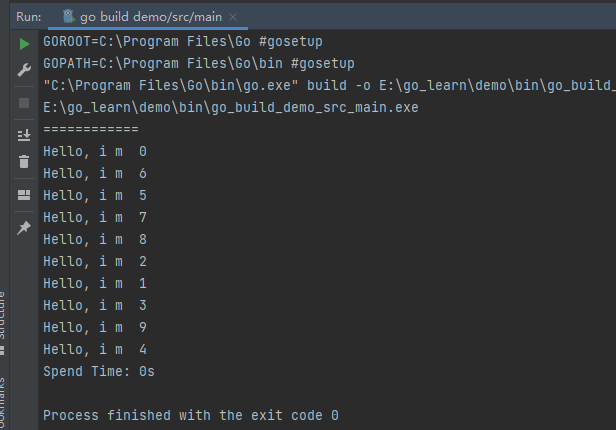

Output

Notice that "wake up!" is never printed, even though the Hello lines appear. All ten goroutines are started at once, but main() does not wait for them: it prints the elapsed time and returns, and when main() returns the whole program exits, long before the one-second sleep ends. Depending on timing, some goroutines may not even print their Hello line before the program exits. So how do we control the order in which things happen and put this much-loved, much-hated concurrency to good use? Let's introduce the concept of a channel.

Channel

A channel is sometimes also called a pipe; here the two names mean the same thing. Functions running concurrently would be of little use if they could not exchange data, and exchanging data is also how we solve our ordering problem. You might ask: can't goroutines simply exchange data through shared memory? They can, but when several goroutines read and write the same memory without synchronization, the data becomes inconsistent. The usual fix is to add locks on top of that. Is there another way?

Go's answer is to communicate instead of sharing memory, that is, to use channels. As the Go proverb puts it: "Do not communicate by sharing memory; instead, share memory by communicating."
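As a small illustration of "share memory by communicating", the sketch below counts increments from many goroutines without a lock: each goroutine sends its increment over a channel, and only the receiving loop ever touches the total. countWithChannel is a hypothetical name; this is a sketch of the idea, not a general counter design.

```go
package main

import "fmt"

// countWithChannel (hypothetical helper) starts n goroutines that each report
// one increment by sending on a channel. Only the receiving loop below reads
// or writes total, so no memory is shared and no lock is needed.
func countWithChannel(n int) int {
	incs := make(chan int)
	for i := 0; i < n; i++ {
		go func() {
			incs <- 1 // communicate the increment instead of writing to total
		}()
	}
	total := 0
	for i := 0; i < n; i++ {
		total += <-incs
	}
	return total
}

func main() {
	fmt.Println(countWithChannel(1000)) // 1000
}
```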

A channel behaves like a first-in, first-out (FIFO) queue, which guarantees that values are received in the order they were sent.
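A quick way to observe the FIFO behaviour: one goroutine sends a sequence of values and the caller receives them in the same order. This sketch also uses close and range over a channel, which the article does not cover; sendAndCollect is a hypothetical name.

```go
package main

import "fmt"

// sendAndCollect (hypothetical helper) sends vals on a channel from a separate
// goroutine and returns the values in the order they were received.
func sendAndCollect(vals []int) []int {
	ch := make(chan int)
	go func() {
		for _, v := range vals {
			ch <- v
		}
		close(ch) // signal that no more values will be sent
	}()
	var got []int
	for v := range ch { // the loop ends once ch is closed and drained
		got = append(got, v)
	}
	return got
}

func main() {
	fmt.Println(sendAndCollect([]int{3, 1, 4, 1, 5})) // [3 1 4 1 5]
}
```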

Creating a channel

```go
// general form: channelName := make(chan ElementType)
chan1 := make(chan int) // create a channel that carries int values
```

Send & receive

```go
ch := make(chan int) // declare a channel of type int
ch <- 0              // send: put the value 0 into the channel
data := <-ch         // receive: take a value out of the channel and assign it to data
```

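Note that this snippet cannot run as-is. On an unbuffered channel, a send blocks until another goroutine is ready to receive, so sending and receiving must happen in two different goroutines. Run in a single goroutine, the send blocks forever and the runtime aborts with "fatal error: all goroutines are asleep - deadlock!".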
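Here is a runnable version of the send and receive above, with the send moved into its own goroutine. roundTrip is a hypothetical name used only for this sketch.

```go
package main

import "fmt"

// roundTrip (hypothetical helper) sends v from a new goroutine and receives
// it in the caller.
func roundTrip(v int) int {
	ch := make(chan int) // unbuffered channel of int
	go func() {
		ch <- v // send: blocks until the receive below is ready
	}()
	data := <-ch // receive: blocks until a value has been sent
	return data
}

func main() {
	fmt.Println(roundTrip(0)) // 0
}
```

The send and the receive meet at the channel: whichever side arrives first waits for the other.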

Let's modify the demo above and use a channel to enforce the order.

demo2.go

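```go
package main

import (
	"fmt"
	"time"
)

// goroutineEmpChan blocks until a value arrives on chan1, then prints it.
func goroutineEmpChan(chan1 chan int) {
	fmt.Println("Hello, i m from chan ", <-chan1)
	time.Sleep(time.Second)
}

func main() {
	startTime := time.Now()
	chan1 := make(chan int)
	for i := 0; i < 10; i++ {
		go goroutineEmpChan(chan1)
		// This send blocks until the goroutine just started receives i,
		// so the values are handed over in order 0..9.
		chan1 <- i
	}
	// main still does not wait for the last goroutine to finish printing.
	fmt.Println("============")
	spendTime := time.Since(startTime)
	fmt.Println("Spend Time:", spendTime)
}
```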

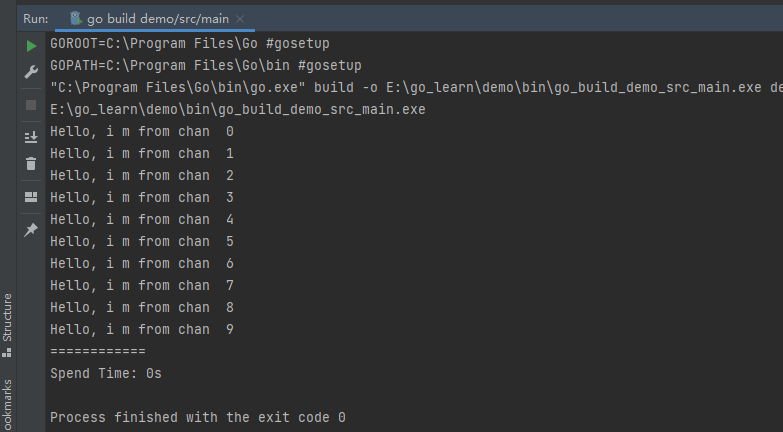

Output

The result matches our expectations, but channels have several more properties worth knowing.

The channel above does not store anything: it hands each value straight to a receiver. What happens if values are sent but nobody takes them right away?

Unbuffered channel

demo3.go

func main(){

chan2 := make(chan int)

go func() {

for i := 0; i < 3; i++ {

chan2 <- i

fmt.Println("send out ", i, " Here's the pipe")

time.Sleep(time.Second)

}

}()

fmt.Println("The first data received is", <-chan2)

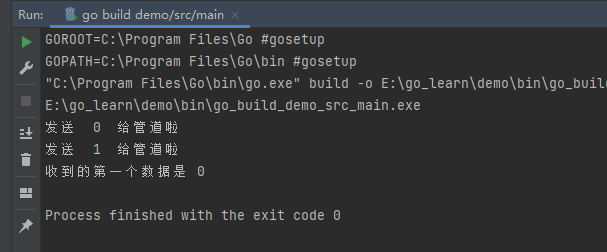

}

To keep the demonstration simple, we use a single anonymous goroutine as the sender, and we find that the second value is never sent. This is like a producer and a consumer: the producer hands an item to the channel, but an unbuffered channel has no storage, so a send completes only when a receiver takes the value. Here main receives only the first value and then exits, so the sender stays blocked on its second send. A shop that refuses new stock until the previous item is sold sounds a bit absurd, doesn't it? That is where buffered channels come in.
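The blocking behaviour can be checked without hanging the program by attempting a send inside a select with a default branch, which runs when the send cannot proceed immediately. This select-based probe is not used in the article; trySend is a hypothetical name.

```go
package main

import "fmt"

// trySend (hypothetical helper) attempts a send without blocking: select
// falls through to default when the send cannot proceed immediately.
func trySend(ch chan int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false
	}
}

func main() {
	unbuffered := make(chan int)
	// No goroutine is receiving and there is no buffer, so the send cannot complete.
	fmt.Println(trySend(unbuffered, 1)) // false
}
```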

Buffered channel

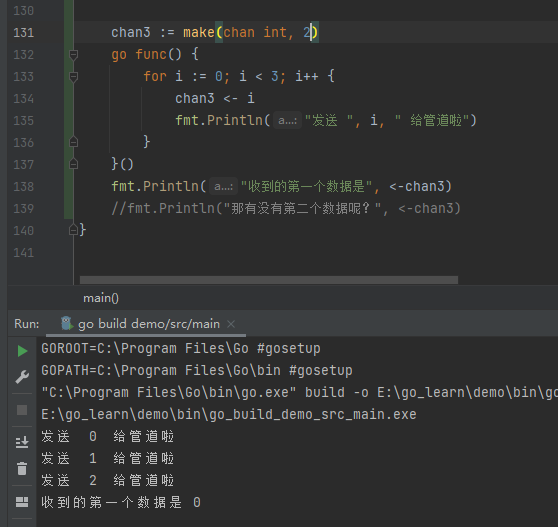

demo4.go

```go
package main

import "fmt"

func main() {
	chan3 := make(chan int, 1) // the second argument is the channel's buffer capacity
	go func() {
		for i := 0; i < 3; i++ {
			chan3 <- i // blocks only when the buffer is full
			fmt.Println("send out ", i, " Here's the pipe")
		}
	}()
	fmt.Println("The first data received is", <-chan3)
	// fmt.Println("is there a second data?", <-chan3)
}
```

Running it, we see that although main received only once, two values were sent into the channel: one was consumed, and because the buffer has a capacity of 1, one more value can wait in the channel until it is consumed. What happens if the capacity is changed to 2?

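Try it: with one value consumed and two more held in the buffer, all three sends can complete. If you want to check, len(ch) returns how many values are currently waiting in the channel's buffer, and cap(ch) returns the buffer's capacity.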
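To check the buffer capacity directly, the sketch below sends into a buffered channel with no receiver at all and counts how many sends succeed before one would block, then reports len and cap. fillWithoutReceiver is a hypothetical name, and the non-blocking select probe is an addition, not part of the article's demos.

```go
package main

import "fmt"

// fillWithoutReceiver (hypothetical helper) counts how many values can be sent
// to a channel with the given buffer size before a send would block, with no
// receiver running. It also reports len and cap of the channel at that point.
func fillWithoutReceiver(size int) (sent, length, capacity int) {
	ch := make(chan int, size)
	for i := 0; ; i++ {
		select {
		case ch <- i:
			sent++
		default:
			// buffer full: a plain `ch <- i` would block here
			return sent, len(ch), cap(ch)
		}
	}
}

func main() {
	for _, size := range []int{1, 2} {
		sent, l, c := fillWithoutReceiver(size)
		fmt.Println("size", size, "-> sent:", sent, "len:", l, "cap:", c)
	}
}
```

With no consumer, exactly as many sends succeed as the buffer can hold; in demo4 the single receive in main frees one more slot.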