http://blog.csdn.net/wild46cat/article/details/53423472

Configuring multiple NameNodes (HDFS federation) in a Hadoop 2.7.3 cluster

First of all, configuring multiple NameNodes in a cluster and using a SecondaryNameNode in a cluster are two completely different things. I will translate the official Hadoop documentation later to explain the difference in detail; for now, briefly: the SecondaryNameNode takes some load off the NameNode by periodically merging the edit log into the fsimage (checkpointing). Configuring multiple NameNodes amounts to setting up a federated cluster, in which the NameNodes do not communicate with each other and each one manages its own namespace.

Okay, here we go.

Of course, this configuration assumes that:

1. You can already configure a Hadoop cluster with a single NameNode.

2. The Hadoop cluster is fully distributed (I have not tested pseudo-distributed mode, and a single-node setup probably will not work).

1. Hardware environment:

host1: 192.168.1.221

host2: 192.168.1.222

host3: 192.168.1.223

host1 and host2 each run a NameNode, and host3 runs a DataNode.

2. Configuration files

Configuration file (same on each host): hdfs-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>

      <!-- Storage directories and general HDFS settings -->
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/hadoop/dfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/hadoop/dfs/data</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>2</value>
      </property>
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.datanode.max.transfer.threads</name>
        <value>4096</value>
      </property>

      <!-- One nameservice ID per NameNode (older releases spelled this key dfs.federation.nameservices) -->
      <property>
        <name>dfs.nameservices</name>
        <value>host1,host2</value>
      </property>

      <!-- Addresses for nameservice host1 -->
      <property>
        <name>dfs.namenode.rpc-address.host1</name>
        <value>host1:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.host1</name>
        <value>host1:50070</value>
      </property>
      <property>
        <name>dfs.namenode.secondary.http-address.host1</name>
        <value>host1:9001</value>
      </property>

      <!-- Addresses for nameservice host2 -->
      <property>
        <name>dfs.namenode.rpc-address.host2</name>
        <value>host2:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.host2</name>
        <value>host2:50070</value>
      </property>
      <property>
        <name>dfs.namenode.secondary.http-address.host2</name>
        <value>host2:9001</value>
      </property>

    </configuration>
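
To make the naming pattern in hdfs-site.xml easier to see, here is a minimal Python sketch (not part of the original setup) that reads the federation properties and lists the addresses of each nameservice. It assumes the per-NameNode keys follow the `dfs.namenode.rpc-address.<id>` and `dfs.namenode.http-address.<id>` pattern used above. It reads the ID list from `dfs.nameservices`, the key documented for Hadoop 2.x, and falls back to the older `dfs.federation.nameservices` spelling. The function names and the embedded, trimmed config are illustrative only.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the federation-related properties (XML declaration omitted).
HDFS_SITE = """
<configuration>
  <property><name>dfs.nameservices</name><value>host1,host2</value></property>
  <property><name>dfs.namenode.rpc-address.host1</name><value>host1:9000</value></property>
  <property><name>dfs.namenode.http-address.host1</name><value>host1:50070</value></property>
  <property><name>dfs.namenode.rpc-address.host2</name><value>host2:9000</value></property>
  <property><name>dfs.namenode.http-address.host2</name><value>host2:50070</value></property>
</configuration>
"""


def load_properties(xml_text):
    """Return a {name: value} dict for every <property> in a Hadoop config."""
    root = ET.fromstring(xml_text.strip())
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def namenode_addresses(props):
    """Map each nameservice ID to its RPC and HTTP addresses.

    Raises KeyError if a listed nameservice has no rpc-address entry.
    """
    # Prefer the documented key; accept the older spelling as a fallback.
    ids = props.get("dfs.nameservices") or props.get("dfs.federation.nameservices", "")
    result = {}
    for ns in (s.strip() for s in ids.split(",") if s.strip()):
        result[ns] = {
            "rpc": props["dfs.namenode.rpc-address." + ns],
            "http": props.get("dfs.namenode.http-address." + ns),
        }
    return result


addresses = namenode_addresses(load_properties(HDFS_SITE))
for ns, addr in addresses.items():
    print(ns, addr["rpc"], addr["http"])
```

A missing `rpc-address` entry for a listed ID surfaces as a `KeyError`, which is a quick way to catch a typo in the nameservice list before starting the cluster.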

Configuration file on host1: core-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://host1:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/hadoop/tmp</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131702</value>
      </property>
    </configuration>

Configuration file on host2: core-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://host2:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/hadoop/tmp</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131702</value>
      </property>
    </configuration>

Configuration file on host3: core-site.xml (fs.defaultFS here can point to either NameNode; see the note after the file)

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://host1:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/hadoop/tmp</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131702</value>
      </property>
    </configuration>

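Note that host3, as a DataNode, does not depend on fs.defaultFS in core-site.xml: it registers with every NameNode listed in hdfs-site.xml. host1 and host2, by contrast, read their local core-site.xml first, so a path given without a scheme resolves against the NameNode named in the local fs.defaultFS.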
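
The effect of fs.defaultFS can be pictured with a short Python sketch. This is a simplified model written for this article, not Hadoop's actual resolution code: it handles absolute paths only, and the directory name `/test1` is a hypothetical example.

```python
from urllib.parse import urlparse


def qualify(path, default_fs):
    """Return a fully qualified URI for an absolute path.

    Paths that already carry a scheme are returned unchanged; bare
    absolute paths are resolved against default_fs (the fs.defaultFS value).
    """
    if urlparse(path).scheme:
        return path
    if not path.startswith("/"):
        raise ValueError("this sketch only handles absolute paths")
    return default_fs.rstrip("/") + path


HOST1_DEFAULT_FS = "hdfs://host1:9000"  # fs.defaultFS on host1
HOST2_DEFAULT_FS = "hdfs://host2:9000"  # fs.defaultFS on host2

# The same bare path lands in a different namespace depending on the host.
print(qualify("/test1", HOST1_DEFAULT_FS))
print(qualify("/test1", HOST2_DEFAULT_FS))
# A fully qualified URI always addresses the NameNode it names.
print(qualify("hdfs://host2:9000/test1", HOST1_DEFAULT_FS))
```

In practice this means a client on any host can still reach the other namespace by spelling out the full `hdfs://host:port/...` URI.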

3. Test screenshots

Here is a simple test showing that the two NameNodes each have their own, separate namespace.

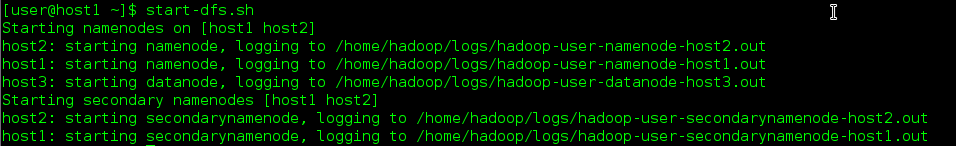

First, start Hadoop:

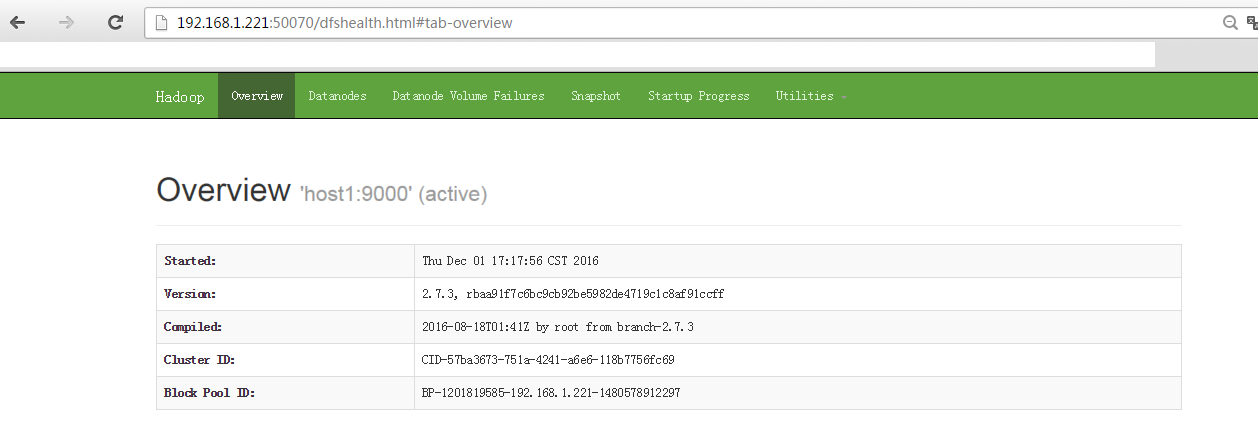

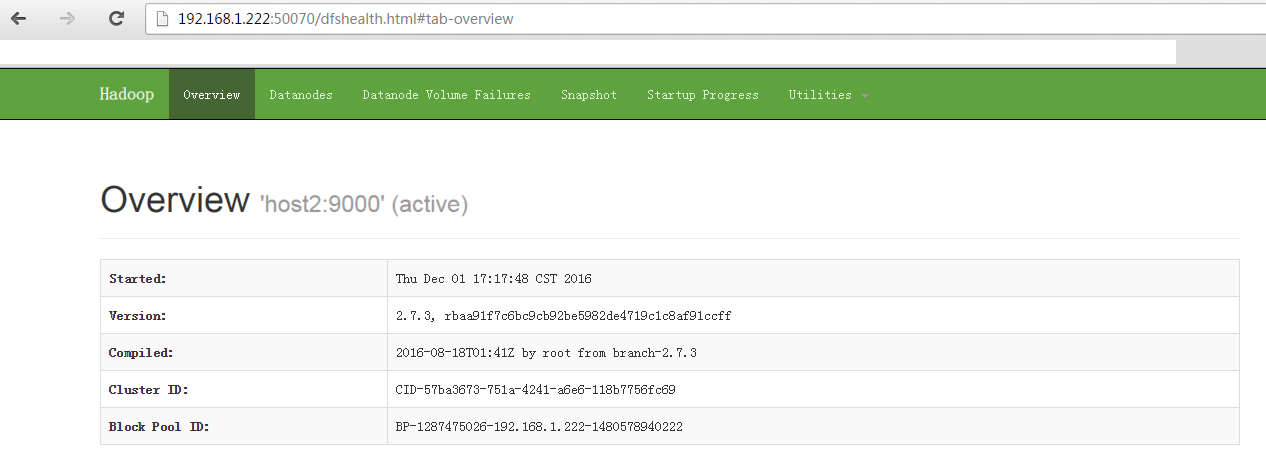

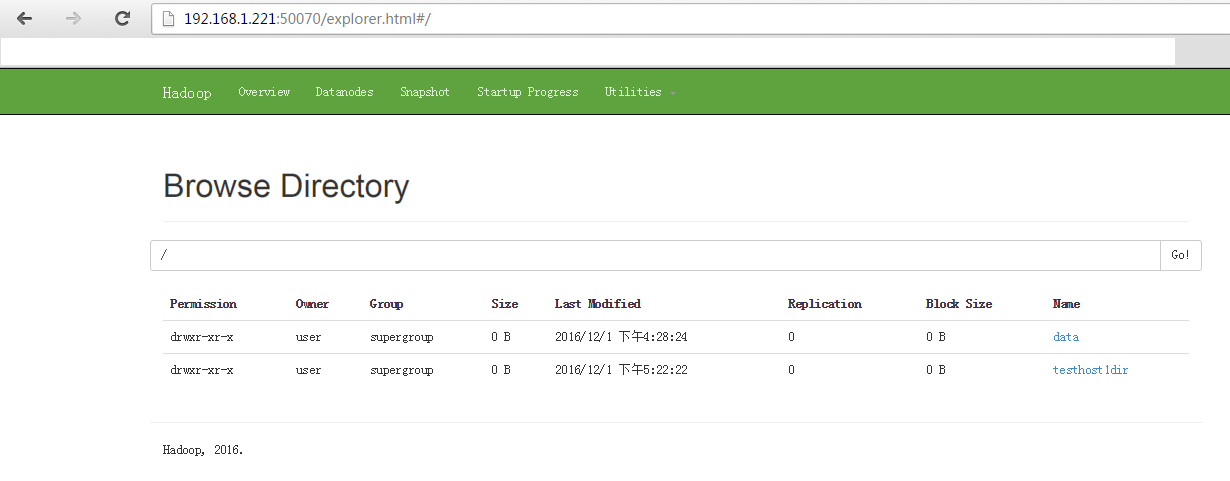

After startup, look at the two NameNodes in the web UI:

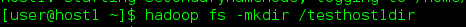

Create a directory on host1.

The directory on host1:

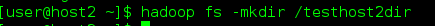

Create a directory on host2.

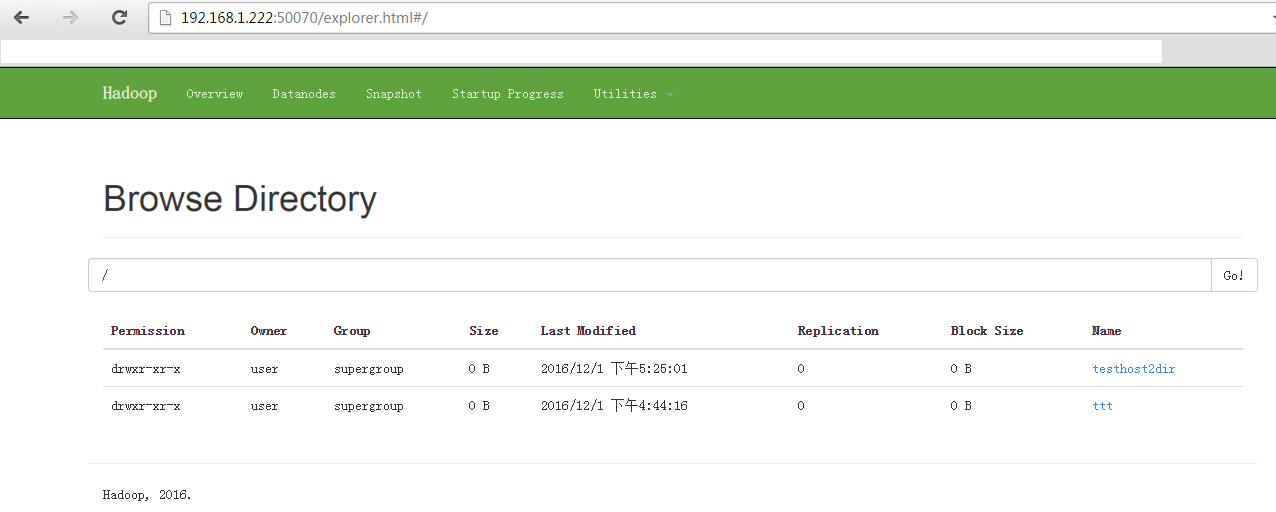

The directory on host2:

This shows that the two NameNodes are independent: each keeps its own namespace and its own mapping of files to blocks.
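
Because dfs.webhdfs.enabled is set to true in hdfs-site.xml, the same check could also be scripted against the WebHDFS REST API instead of reading screenshots. The following is a minimal Python sketch under that assumption. The helper names are made up for this example, and `list_names` needs a running cluster, so it is defined but not called here.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def liststatus_url(http_address, path="/"):
    """Build the WebHDFS LISTSTATUS URL for one NameNode HTTP address."""
    return "http://{}/webhdfs/v1{}?op=LISTSTATUS".format(http_address, quote(path))


def list_names(http_address, path="/"):
    """Return the entry names under path as reported by one NameNode.

    Performs a real HTTP request, so it only works against a live cluster.
    """
    with urlopen(liststatus_url(http_address, path), timeout=10) as resp:
        body = json.load(resp)
    return [status["pathSuffix"] for status in body["FileStatuses"]["FileStatus"]]


# Print the URL that would be queried on each NameNode.
for address in ("host1:50070", "host2:50070"):
    print(liststatus_url(address))
```

On a live cluster, calling `list_names("host1:50070")` and `list_names("host2:50070")` should return different listings once a directory has been created on only one of the two NameNodes.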