Getting familiar with common HDFS operations

1. Create a txt file in the /home/hadoop/ directory of the local Linux file system and type a few arbitrary words into it.

2. Check the file's location locally (ls).

3. Display the file's content locally.

    cd /home/hadoop
    ls
    touch test.txt
    vim test.txt    # type a few words and save
    cat test.txt

4. Use a command to upload test.txt from the local file system to the input directory under the current user's HDFS home directory (/user/hadoop/input).

5. List the files in HDFS (-ls).

6. Display the contents of this file in HDFS.

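    cd /usr/local/hadoop
    ./sbin/start-all.sh                               # Start the services
    ./bin/hdfs dfs -mkdir -p input                    # Create /user/hadoop/input if it does not exist yet
    ./bin/hdfs dfs -put /home/hadoop/test.txt input   # Upload the file
    ./bin/hdfs dfs -ls input                          # List the files
    ./bin/hdfs dfs -cat input/test.txt                # Display the file contents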
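The relative path `input` in these commands is resolved against the current user's HDFS home directory, `/user/<username>` by default. The following minimal sketch models that rule with plain strings so it runs without a cluster; `HdfsPathSketch` and `resolve` are hypothetical names, and the real HDFS client performs this resolution itself.

```java
/** Hypothetical helper that models how an HDFS client resolves a path for a given user. */
final class HdfsPathSketch {
    private HdfsPathSketch() {}

    static String resolve(String user, String path) {
        if (path.startsWith("/")) {
            return path; // absolute paths are used unchanged
        }
        return "/user/" + user + "/" + path; // relative paths start at /user/<user>
    }

    public static void main(String[] args) {
        System.out.println(resolve("hadoop", "input"));          // /user/hadoop/input
        System.out.println(resolve("hadoop", "input/test.txt")); // /user/hadoop/input/test.txt
        System.out.println(resolve("hadoop", "/input"));         // /input
    }
}
```

In other words, `input` and `/input` are two different directories, which matters whenever commands in the same task mix both forms.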

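The upload seemed to fail, and the following prompt was shown:

SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

This message is normally logged at INFO level and is not an error by itself. If the file really did not arrive, check that the target directory exists (hdfs dfs -ls) and that the DataNode is running (jps).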

Common commands:

    hdfs dfs -mkdir /input             # Create the /input folder
    hdfs dfs -rm -r /input             # Delete the /input folder and everything in it
    hdfs dfs -put a.log /input         # Upload a.log to the /input folder
    hdfs dfs -get /input/a.log         # Download a.log to the local current directory
    hdfs dfs -cat /input/a.log         # View the contents of a.log
    hdfs dfs -rmdir /input             # Delete a directory (only if it is empty)

Lab tasks

Upload file:

    echo "test"+$(date) > test.txt
    echo $(date) > time.txt
    # Upload the files (-f overwrites the test.txt uploaded earlier)
    hdfs dfs -put -f ./test.txt input
    hdfs dfs -put -f ./time.txt input

Append to a file:

    hdfs dfs -appendToFile time.txt input/test.txt   # Append local time.txt to the end of input/test.txt
    hdfs dfs -ls input
    hdfs dfs -cat input/test.txt
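The argument order of `-appendToFile` is local source first and HDFS destination last, and the source bytes are added after the destination's existing contents. To make that concrete without a cluster, the sketch below performs the same append between two local files with `java.nio.file.Files`; it is only an analogue, and `AppendSketch` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Local analogue of "hdfs dfs -appendToFile src dst" (hypothetical helper, no HDFS involved). */
final class AppendSketch {
    private AppendSketch() {}

    static void appendFile(Path src, Path dst) throws IOException {
        // APPEND keeps dst's existing bytes and writes src's bytes after them.
        Files.write(dst, Files.readAllBytes(src), StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("append");
        Path test = Files.write(dir.resolve("test.txt"), "test\n".getBytes(StandardCharsets.UTF_8));
        Path time = Files.write(dir.resolve("time.txt"), "now\n".getBytes(StandardCharsets.UTF_8));
        appendFile(time, test); // source first, destination last, as in -appendToFile
        System.out.print(new String(Files.readAllBytes(test), StandardCharsets.UTF_8));
    }
}
```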

Overwrite a remote file with a local file:

    hdfs dfs -copyFromLocal -f time.txt input/test.txt   # -f overwrites the existing file
    hdfs dfs -cat input/test.txt

The output now shows only the contents of time.txt (the time).
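Without `-f`, `-copyFromLocal` refuses to overwrite an existing destination. The closest local analogue in `java.nio` is `Files.copy` with `StandardCopyOption.REPLACE_EXISTING`, sketched below; `OverwriteSketch` is a hypothetical name and nothing here talks to HDFS.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

/** Local analogue of "hdfs dfs -copyFromLocal -f src dst" (hypothetical, no HDFS involved). */
final class OverwriteSketch {
    private OverwriteSketch() {}

    static void copyOverwrite(Path src, Path dst) throws IOException {
        // REPLACE_EXISTING plays the role of -f; without it Files.copy throws
        // FileAlreadyExistsException when dst already exists.
        Files.copy(src, dst, StandardCopyOption.REPLACE_EXISTING);
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("overwrite");
        Path time = Files.write(dir.resolve("time.txt"), "12:00\n".getBytes(StandardCharsets.UTF_8));
        Path test = Files.write(dir.resolve("test.txt"), "old\n".getBytes(StandardCharsets.UTF_8));
        copyOverwrite(time, test);
        System.out.print(new String(Files.readAllBytes(test), StandardCharsets.UTF_8)); // 12:00
    }
}
```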

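Download a file, renaming it automatically if a file with the same name already exists locally:

    if [ -f ./test.txt ]; then
        hadoop fs -get input/test.txt ./test2.txt   # test.txt is taken, so use another name
    else
        hadoop fs -get input/test.txt ./test.txt
    fi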

Display the read/write permissions, size, modification time, path, and other information of a specified file in HDFS:

    hdfs dfs -ls input/test.txt
    hdfs dfs -ls -h input/test.txt   # -h: show sizes in human-readable form (K, M, etc.)
    hdfs dfs -ls -t input/test.txt   # -t: sort by modification time, newest first
    hdfs dfs -ls -S input/test.txt   # -S: sort by file size

Given a directory in HDFS, output the read/write permissions, size, modification time, path, and other information of every file in it. If an entry is a directory, recursively output the information of all files under it:

    hadoop fs -mkdir -p /input/1/2/3/4   # -p creates missing parent directories
    hadoop fs -ls -R /input              # -R lists recursively
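`-ls -R` prints directories as well as files, while the Java version later in this document uses `listFiles(path, true)`, which returns only files. That files-only recursive walk looks like this on the local file system; `WalkSketch` is a hypothetical `Files.walk` analogue, not Hadoop code.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Local, files-only recursive listing (hypothetical analogue of listFiles(path, true)). */
final class WalkSketch {
    private WalkSketch() {}

    /** Returns every regular file below root in sorted order; directories are skipped. */
    static List<Path> listFilesRecursively(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        for (Path p : listFilesRecursively(root)) {
            System.out.println(p + "  size=" + Files.size(p) + "  modified=" + Files.getLastModifiedTime(p));
        }
    }
}
```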

Given the path of a file in HDFS, create and delete that file. If the file's parent directory does not exist, create it automatically:

    vim 6.sh    # write the following script

    #!/bin/bash
    if ! hadoop fs -test -d /input/6; then
        hadoop fs -mkdir -p /input/6     # create the missing parent directory first
    fi
    hadoop fs -touchz /input/6/6.txt     # create the empty file

    chmod +x 6.sh                  # make the script executable
    ./6.sh                         # run the script
    hdfs dfs -ls -R /input         # check the result
    hdfs dfs -rm /input/6/6.txt    # delete the file
    hdfs dfs -ls -R /input         # check the result again
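The script's logic (create any missing parent, create the empty file, then delete only the file) maps directly onto `java.nio.file.Files`. The sketch below runs it against a local temporary directory; `TouchSketch` is a hypothetical name, and the HDFS version of these steps appears in "6 file creation / deletion".

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Local analogue of "mkdir -p parent; touchz file" followed by "rm file" (hypothetical). */
final class TouchSketch {
    private TouchSketch() {}

    /** Creates an empty file, creating missing parent directories first. */
    static Path touchWithParents(Path file) throws IOException {
        Path parent = file.getParent();
        if (parent != null) {
            Files.createDirectories(parent); // like "mkdir -p": no error if it already exists
        }
        return Files.exists(file) ? file : Files.createFile(file);
    }

    public static void main(String[] args) throws IOException {
        Path file = Paths.get(System.getProperty("java.io.tmpdir"), "input", "6", "6.txt");
        touchWithParents(file);
        System.out.println(file + " exists: " + Files.exists(file));
        Files.delete(file); // removes only the file; the parent directories stay, as with -rm
        System.out.println(file + " exists: " + Files.exists(file));
    }
}
```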

Given the path of an HDFS directory, create and delete that directory. When creating it, automatically create any missing parent directories; when deleting it, let the user decide whether a non-empty directory should still be deleted:

    vim 7.sh    # write the following script

    #!/bin/bash
    if ! hadoop fs -test -d /input/7; then
        hadoop fs -mkdir -p /input/7     # -p also creates missing parents
    fi

    chmod +x 7.sh                # make the script executable
    ./7.sh                       # run the script
    hdfs dfs -ls -R /input       # check the result
    hdfs dfs -rmdir /input/7     # delete the directory; this fails if it is not empty
    hdfs dfs -rm -r /input/7     # if the previous command failed, delete it with its contents
    hdfs dfs -ls -R /input       # check the result

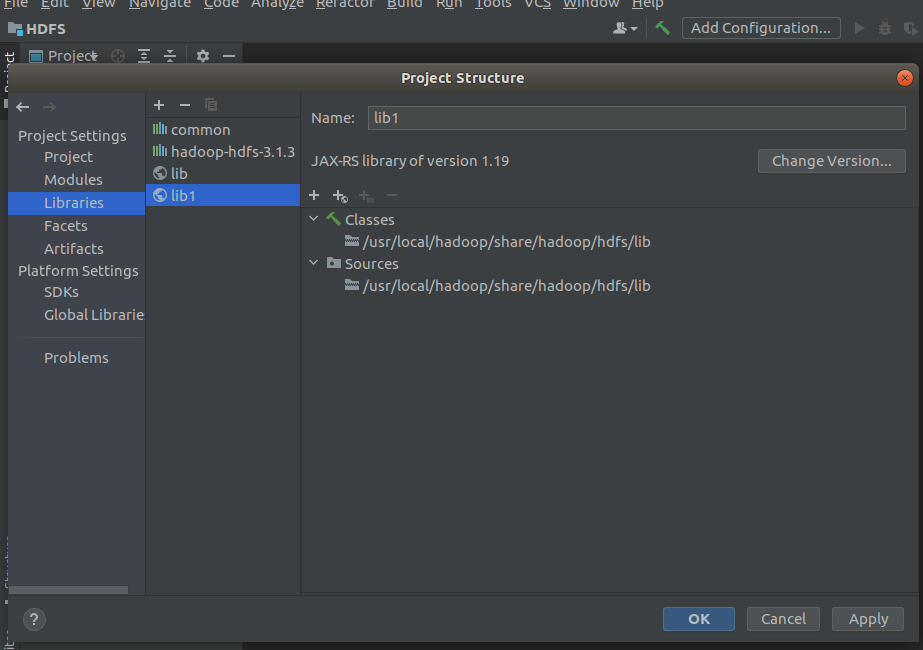

Install IDEA

Download

Download it directly from the official website: https://www.jetbrains.com/idea/download/#section=linux

https://download.jetbrains.com.cn/idea/ideaIU-2021.1.1.tar.gz

Install

    sudo cp ideaIU-2021.1.1.tar.gz /usr/local    # copy to the target directory
    cd /usr/local
    sudo tar -zxvf ideaIU-2021.1.1.tar.gz        # extract
    sudo rm -f ideaIU-2021.1.1.tar.gz            # delete the archive
    su -
    /usr/local/idea-IU-211.7142.45/bin/idea.sh   # run IDEA

Configure the Java project

Create a new project, keep the default options, and click Next.

Open Project Structure and add the JAR packages required by the project (all of the following must be imported):

(1) hadoop-common-3.1.3.jar and hadoop-nfs-3.1.3.jar in the /usr/local/hadoop/share/hadoop/common directory;

(2) all JAR packages in the /usr/local/hadoop/share/hadoop/common/lib directory;

(3) hadoop-hdfs-3.1.3.jar, hadoop-hdfs-nfs-3.1.3.jar, and hadoop-hdfs-client-3.1.3.jar in the /usr/local/hadoop/share/hadoop/hdfs directory;

(4) all JAR packages in the /usr/local/hadoop/share/hadoop/hdfs/lib directory.

It is recommended to simply import all JAR packages under /usr/local/hadoop/share/hadoop.

2. Start Hadoop.

Create a class named MyFSDataInputStream.

Code:

    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.InputStreamReader;
    import java.net.URL;

    import org.apache.commons.io.IOUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
    import org.apache.hadoop.fs.Path;

    public class MyFSDataInputStream extends FSDataInputStream {

        private static Configuration conf;

        // Let java.net.URL understand hdfs:// URLs; this may be done only once per JVM
        static {
            URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
        }

        MyFSDataInputStream(InputStream in) {
            super(in);
        }

        /** Point the client at the NameNode RPC address (fs.defaultFS in core-site.xml). */
        public static void Config() {
            conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        }

        /** Print the first line of an HDFS file; relative paths resolve against /user/<username>. */
        public static int ReadLine(String path) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path file = new Path(path);
            FSDataInputStream getIt = fs.open(file);
            BufferedReader d = new BufferedReader(new InputStreamReader(getIt));
            String content = d.readLine(); // read one line of the file
            if (content != null) {
                System.out.println(content);
            }
            d.close();  // close the file
            fs.close(); // close the HDFS client
            return 0;
        }

        /** Print a whole HDFS file through an hdfs:// URL. */
        public static void PrintFile() throws IOException {
            String filePath = "hdfs://localhost:9000/user/hadoop/input/test.txt";
            try (InputStream in = new URL(filePath).openStream()) {
                IOUtils.copy(in, System.out);
            }
        }

        public static void main(String[] arg) throws IOException {
            MyFSDataInputStream.Config();
            MyFSDataInputStream.ReadLine("input/test.txt");
            MyFSDataInputStream.PrintFile();
        }
    }

Save the class and run it directly in IDEA.

Error:

Class org.apache.hadoop.hdfs.DistributedFileSystem not found

Fix: import the /usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.1.3.jar package.

RPC response exceeds maximum data length

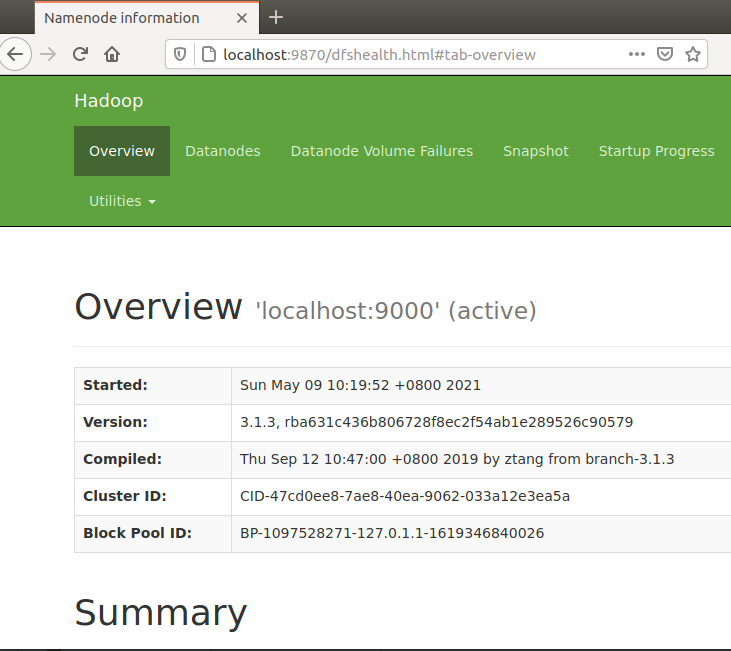

Failed on local exception: org.apache.hadoop.ipc.RpcException: RPC response exceeds maximum data length; Host Details : local host is: "nick-virtual-machine/127.0.1.1"; destination host is: "localhost":9870;

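Note that the destination port in this message is 9870, the NameNode web UI (HTTP) port, not its RPC port; this error typically appears when an RPC client is pointed at an HTTP port. First enter jps to check whether the NameNode and SecondaryNameNode are running.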

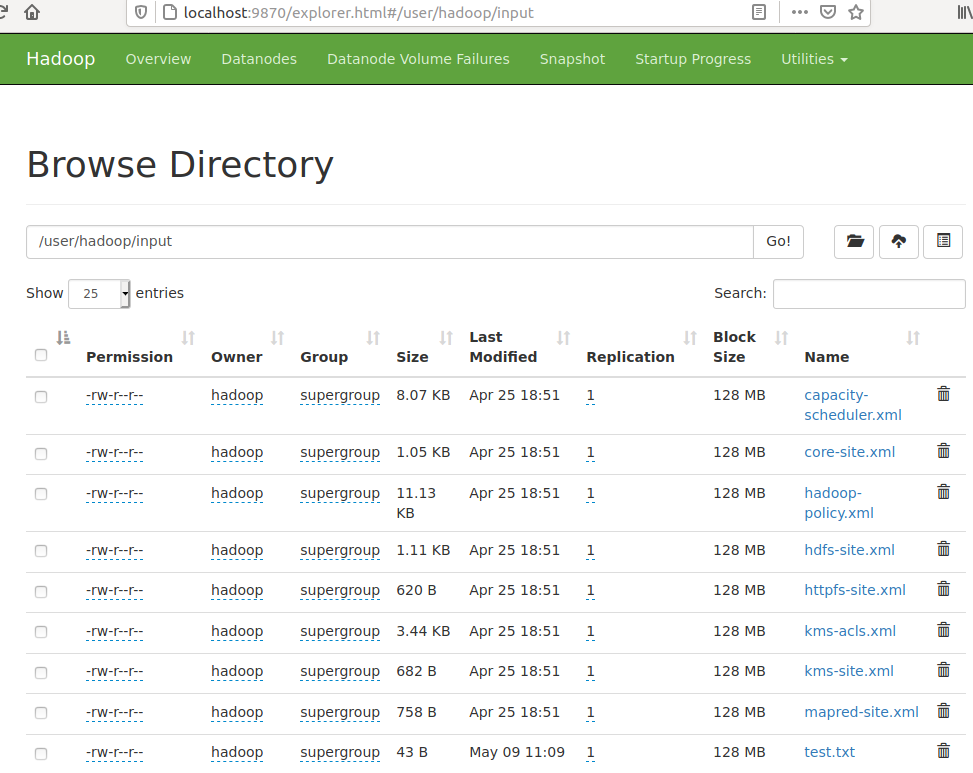

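Visit localhost:9870 in a browser to confirm that Hadoop is running, and make sure the program connects to localhost:9000, the RPC port.

Alternatively, check the port with: netstat -ano | grep 9000

The port must match the value of fs.defaultFS (formerly fs.default.name) in the server's core-site.xml file.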
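Besides `netstat`, the port can be probed from Java. This sketch only reports whether something accepts TCP connections on a host and port; it cannot tell whether the listener is the NameNode RPC endpoint or the web UI. `PortCheck` is a hypothetical name and the 1000 ms timeout is an assumption.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper: checks whether a TCP port accepts connections. */
final class PortCheck {
    private PortCheck() {}

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable, or timed out
        }
    }

    public static void main(String[] args) {
        System.out.println("9000 (RPC, fs.defaultFS): " + isListening("localhost", 9000, 1000));
        System.out.println("9870 (web UI):            " + isListening("localhost", 9870, 1000));
    }
}
```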

If the program reports that the file cannot be found, open Utilities > Browse the file system in the web UI to locate the file and copy its full path.

Implementing the shell commands above in Java

Code reference: http://dblab.xmu.edu.cn/blog/2808-2/

1 upload files

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Check whether a path exists. */
        public static boolean test(Configuration conf, String path) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            return fs.exists(new Path(path));
        }

        /** Copy a local file to the given HDFS path, overwriting it if it already exists. */
        public static void copyFromLocalFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path localPath = new Path(localFilePath);
            Path remotePath = new Path(remoteFilePath);
            // copyFromLocalFile(delSrc, overwrite, src, dst): keep the source, overwrite the target
            fs.copyFromLocalFile(false, true, localPath, remotePath);
            fs.close();
        }

        /** Append the contents of a local file to the end of an HDFS file. */
        public static void appendToFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FileInputStream in = new FileInputStream(localFilePath); // local input stream
            FSDataOutputStream out = fs.append(remotePath);          // writes go to the end of the file
            byte[] data = new byte[1024];
            int read;
            while ((read = in.read(data)) > 0) {
                out.write(data, 0, read);
            }
            out.close();
            in.close();
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String localFilePath = "/home/hadoop/text.txt";  // local path
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS path
            String choice = "append";       // if the file exists, append to its end
            // String choice = "overwrite"; // if the file exists, overwrite it
            try {
                boolean fileExists = HDFSApi.test(conf, remoteFilePath);
                System.out.println(remoteFilePath + (fileExists ? " already exists." : " does not exist."));
                if (!fileExists) {                        // not there yet: upload it
                    HDFSApi.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                    System.out.println(localFilePath + " uploaded to " + remoteFilePath);
                } else if (choice.equals("overwrite")) {  // overwrite
                    HDFSApi.copyFromLocalFile(conf, localFilePath, remoteFilePath);
                    System.out.println(localFilePath + " overwrote " + remoteFilePath);
                } else if (choice.equals("append")) {     // append
                    HDFSApi.appendToFile(conf, localFilePath, remoteFilePath);
                    System.out.println(localFilePath + " appended to " + remoteFilePath);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

2 download files to local

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /**
         * Download a file to the local file system.
         * If the local path already exists, rename automatically by adding _0, _1, ... to the name.
         */
        public static void copyToLocal(Configuration conf, String remoteFilePath, String localFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            if (new File(localFilePath).exists()) {
                System.out.println(localFilePath + " already exists.");
                int i = 0;
                while (new File(localFilePath + "_" + i).exists()) {
                    i++; // try the next suffix
                }
                localFilePath = localFilePath + "_" + i;
                System.out.println("Renamed to: " + localFilePath);
            }
            Path localPath = new Path(localFilePath);
            fs.copyToLocalFile(remotePath, localPath); // download the file
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String localFilePath = "/home/hadoop/text.txt";  // local path
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS path
            try {
                HDFSApi.copyToLocal(conf, remoteFilePath, localFilePath);
                System.out.println("Download complete");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
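The renaming rule is easy to get wrong: the counter has to advance on every pass, or the loop keeps testing the same name forever. Here is the same rule isolated from Hadoop so it can be checked against local files; `LocalNameSketch` and `nextFreeName` are hypothetical names.

```java
import java.io.File;

/** Hypothetical helper: picks a local file name that does not exist yet. */
final class LocalNameSketch {
    private LocalNameSketch() {}

    /** Returns path if it is free, otherwise the first free path_0, path_1, ... */
    static String nextFreeName(String path) {
        if (!new File(path).exists()) {
            return path;
        }
        int i = 0;
        while (new File(path + "_" + i).exists()) {
            i++; // advance the counter so each pass tests a new name
        }
        return path + "_" + i;
    }

    public static void main(String[] args) {
        System.out.println(nextFreeName(args.length > 0 ? args[0] : "/home/hadoop/text.txt"));
    }
}
```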

3 display file contents

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Print the contents of an HDFS file line by line. */
        public static void cat(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FSDataInputStream in = fs.open(remotePath);
            BufferedReader d = new BufferedReader(new InputStreamReader(in));
            String line;
            while ((line = d.readLine()) != null) {
                System.out.println(line);
            }
            d.close();
            in.close();
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS path
            try {
                System.out.println("Reading file: " + remoteFilePath);
                HDFSApi.cat(conf, remoteFilePath);
                System.out.println("\nRead complete");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
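The `cat` method closes its readers by hand, so an exception thrown mid-read would leave the stream open. A sketch of the same loop with try-with-resources follows. It accepts any `InputStream`, so in the program above it could be given `fs.open(remotePath)`; here it is exercised with an in-memory stream. `CatSketch` is a hypothetical name, and UTF-8 is an assumption about the file's encoding.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical line-by-line copy that always closes its input. */
final class CatSketch {
    private CatSketch() {}

    /** Copies every line of in to out and returns the line count; in is closed even on error. */
    static int cat(InputStream in, PrintStream out) throws IOException {
        int lines = 0;
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                out.println(line);
                lines++;
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "first\nsecond\n".getBytes(StandardCharsets.UTF_8);
        System.out.println(cat(new ByteArrayInputStream(data), System.out) + " lines");
    }
}
```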

4 file information

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;
    import java.text.SimpleDateFormat;

    public class HDFSApi {

        /** Print the path, permissions, size, and modification time of the given file. */
        public static void ls(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FileStatus[] fileStatuses = fs.listStatus(remotePath);
            SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            for (FileStatus s : fileStatuses) {
                System.out.println("path: " + s.getPath().toString());
                System.out.println("permission: " + s.getPermission().toString());
                System.out.println("size: " + s.getLen());
                // getModificationTime() returns epoch milliseconds; format it as a date
                long timeStamp = s.getModificationTime();
                System.out.println("time: " + format.format(timeStamp));
            }
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS path
            try {
                System.out.println("Reading file information: " + remoteFilePath);
                HDFSApi.ls(conf, remoteFilePath);
                System.out.println("\nRead complete");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
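`getModificationTime()` returns milliseconds since the Unix epoch, and `SimpleDateFormat` renders them in the JVM's default time zone. `SimpleDateFormat` is also not thread-safe; the `java.time` formatter below is immutable and takes the zone explicitly. `TimeFormatSketch` is a hypothetical name and the zones in the example are assumptions.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper: formats epoch milliseconds like the ls examples above. */
final class TimeFormatSketch {
    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    private TimeFormatSketch() {}

    /** Formats epoch milliseconds (as returned by getModificationTime()) in the given zone. */
    static String format(long epochMillis, ZoneId zone) {
        return FORMAT.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }

    public static void main(String[] args) {
        System.out.println(format(0L, ZoneId.of("UTC"))); // 1970-01-01 00:00:00
        System.out.println(format(System.currentTimeMillis(), ZoneId.systemDefault()));
    }
}
```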

5 directory file information

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;
    import java.text.SimpleDateFormat;

    public class HDFSApi {

        /** Print information about every file under the given directory (recursively). */
        public static void lsDir(Configuration conf, String remoteDir) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path dirPath = new Path(remoteDir);
            SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
            // listFiles(path, true) walks the tree recursively and returns files only
            RemoteIterator<LocatedFileStatus> remoteIterator = fs.listFiles(dirPath, true);
            while (remoteIterator.hasNext()) {
                FileStatus s = remoteIterator.next();
                System.out.println("path: " + s.getPath().toString());
                System.out.println("permission: " + s.getPermission().toString());
                System.out.println("size: " + s.getLen());
                // convert the epoch-millisecond timestamp to a date
                System.out.println("time: " + format.format(s.getModificationTime()));
                System.out.println();
            }
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteDir = "/user/hadoop"; // HDFS path
            try {
                System.out.println("(Recursively) reading information of all files in: " + remoteDir);
                HDFSApi.lsDir(conf, remoteDir);
                System.out.println("Read complete");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

6 file creation / deletion

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Check whether a path exists. */
        public static boolean test(Configuration conf, String path) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            return fs.exists(new Path(path));
        }

        /** Create a directory, including any missing parents. */
        public static boolean mkdir(Configuration conf, String remoteDir) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path dirPath = new Path(remoteDir);
            boolean result = fs.mkdirs(dirPath);
            fs.close();
            return result;
        }

        /** Create an empty file. */
        public static void touchz(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FSDataOutputStream outputStream = fs.create(remotePath);
            outputStream.close();
            fs.close();
        }

        /** Delete a file (non-recursive). */
        public static boolean rm(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            boolean result = fs.delete(remotePath, false);
            fs.close();
            return result;
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "/user/hadoop/input/text.txt"; // HDFS path
            String remoteDir = "/user/hadoop/input";               // its parent directory
            try {
                // If the path exists, delete it; otherwise create it (and its directory if needed)
                if (HDFSApi.test(conf, remoteFilePath)) {
                    HDFSApi.rm(conf, remoteFilePath);
                    System.out.println("Deleted path: " + remoteFilePath);
                } else {
                    if (!HDFSApi.test(conf, remoteDir)) { // create the directory if it is missing
                        HDFSApi.mkdir(conf, remoteDir);
                        System.out.println("Created folder: " + remoteDir);
                    }
                    HDFSApi.touchz(conf, remoteFilePath);
                    System.out.println("Created path: " + remoteFilePath);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

7 directory creation / deletion

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Check whether a path exists. */
        public static boolean test(Configuration conf, String path) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            return fs.exists(new Path(path));
        }

        /** Return true if the directory has no files and no subdirectories, false otherwise. */
        public static boolean isDirEmpty(Configuration conf, String remoteDir) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path dirPath = new Path(remoteDir);
            // listStatus returns the direct children, including subdirectories
            return fs.listStatus(dirPath).length == 0;
        }

        /** Create a directory, including any missing parents. */
        public static boolean mkdir(Configuration conf, String remoteDir) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path dirPath = new Path(remoteDir);
            boolean result = fs.mkdirs(dirPath);
            fs.close();
            return result;
        }

        /** Delete a directory together with everything in it. */
        public static boolean rmDir(Configuration conf, String remoteDir) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path dirPath = new Path(remoteDir);
            // the second argument makes the delete recursive
            boolean result = fs.delete(dirPath, true);
            fs.close();
            return result;
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteDir = "/user/hadoop/input"; // HDFS directory
            boolean forceDelete = false;             // delete even if the directory is not empty
            try {
                // If the directory does not exist, create it; otherwise try to delete it
                if (!HDFSApi.test(conf, remoteDir)) {
                    HDFSApi.mkdir(conf, remoteDir);
                    System.out.println("Created directory: " + remoteDir);
                } else if (HDFSApi.isDirEmpty(conf, remoteDir) || forceDelete) {
                    HDFSApi.rmDir(conf, remoteDir);
                    System.out.println("Deleted directory: " + remoteDir);
                } else {
                    System.out.println("The directory is not empty and was not deleted: " + remoteDir);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
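An emptiness check built on `listFiles(dirPath, true)` sees files only, so a directory that contains nothing but empty subdirectories counts as empty and would then be deleted recursively. Checking the direct children (what `listStatus` returns) avoids that. The sketch below shows the two views side by side on the local file system; `EmptyDirSketch` and its method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

/** Hypothetical helpers contrasting "no children" with "no files anywhere below". */
final class EmptyDirSketch {
    private EmptyDirSketch() {}

    /** True only if dir has no direct children at all (files or subdirectories). */
    static boolean hasNoChildren(Path dir) throws IOException {
        try (Stream<Path> children = Files.list(dir)) {
            return !children.findAny().isPresent();
        }
    }

    /** True if no regular file exists anywhere below dir: the files-only view. */
    static boolean hasNoFiles(Path dir) throws IOException {
        try (Stream<Path> all = Files.walk(dir)) {
            return all.noneMatch(Files::isRegularFile);
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("input");
        Files.createDirectory(dir.resolve("sub")); // only an empty subdirectory inside
        System.out.println("no children: " + hasNoChildren(dir)); // false
        System.out.println("no files:    " + hasNoFiles(dir));    // true
    }
}
```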

8 append content

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Check whether a path exists. */
        public static boolean test(Configuration conf, String path) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            return fs.exists(new Path(path));
        }

        /** Append a string to the end of an HDFS file. */
        public static void appendContentToFile(Configuration conf, String content, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FSDataOutputStream out = fs.append(remotePath); // writes go to the end of the file
            out.write(content.getBytes());
            out.close();
            fs.close();
        }

        /** Append the contents of a local file to the end of an HDFS file. */
        public static void appendToFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FileInputStream in = new FileInputStream(localFilePath); // local input stream
            FSDataOutputStream out = fs.append(remotePath);          // writes go to the end of the file
            byte[] data = new byte[1024];
            int read;
            while ((read = in.read(data)) > 0) {
                out.write(data, 0, read);
            }
            out.close();
            in.close();
            fs.close();
        }

        /** Move an HDFS file to the local file system (the HDFS copy is deleted). */
        public static void moveToLocalFile(Configuration conf, String remoteFilePath, String localFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            Path localPath = new Path(localFilePath);
            fs.moveToLocalFile(remotePath, localPath);
        }

        /** Create an empty file. */
        public static void touchz(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FSDataOutputStream outputStream = fs.create(remotePath);
            outputStream.close();
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS file
            String content = "New additions\n";
            String choice = "after";       // append to the end of the file
            // String choice = "before";   // insert at the beginning of the file
            try {
                if (!HDFSApi.test(conf, remoteFilePath)) {
                    System.out.println("File does not exist: " + remoteFilePath);
                } else if (choice.equals("after")) {
                    HDFSApi.appendContentToFile(conf, content, remoteFilePath);
                    System.out.println("Appended content to the end of " + remoteFilePath);
                } else if (choice.equals("before")) {
                    // There is no API for writing at the beginning, so move the file to the local
                    // file system, recreate it in HDFS, and write the new and old contents in order
                    String localTmpPath = "/home/hadoop/tmp.txt";                // local temporary file
                    HDFSApi.moveToLocalFile(conf, remoteFilePath, localTmpPath); // move to local
                    HDFSApi.touchz(conf, remoteFilePath);                        // create a new, empty file
                    HDFSApi.appendContentToFile(conf, content, remoteFilePath);  // write the new content first
                    HDFSApi.appendToFile(conf, localTmpPath, remoteFilePath);    // then the original content
                    System.out.println("Added content to the beginning of " + remoteFilePath);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
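HDFS lets a client append to a file but not rewrite bytes in place, so adding text at the beginning means rebuilding the file: keep the old contents, recreate the file with the new text, then append the old contents. The `before` branch above does this through a local temporary file. The same three steps on a single local file look like this; `PrependSketch` is a hypothetical name, and it reads the whole file into memory, which is only reasonable for small files.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Hypothetical local analogue of the "before" branch: prepend by rebuilding the file. */
final class PrependSketch {
    private PrependSketch() {}

    static void prepend(Path file, String content) throws IOException {
        byte[] original = Files.readAllBytes(file);                  // 1. keep the old contents
        Files.write(file, content.getBytes(StandardCharsets.UTF_8)); // 2. recreate with the new text
        Files.write(file, original, StandardOpenOption.APPEND);      // 3. append the old contents
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("text", ".txt");
        Files.write(file, "old line\n".getBytes(StandardCharsets.UTF_8));
        prepend(file, "New additions\n");
        System.out.print(new String(Files.readAllBytes(file), StandardCharsets.UTF_8));
    }
}
```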

9 delete specified file

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Delete a file (non-recursive). */
        public static boolean rm(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            boolean result = fs.delete(remotePath, false);
            fs.close();
            return result;
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS file
            try {
                if (HDFSApi.rm(conf, remoteFilePath)) {
                    System.out.println("Deleted file: " + remoteFilePath);
                } else {
                    System.out.println("Operation failed (file does not exist or could not be deleted)");
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

10 moving files

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import java.io.*;

    public class HDFSApi {

        /** Move (rename) a file within HDFS. */
        public static boolean mv(Configuration conf, String remoteFilePath, String remoteToFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path srcPath = new Path(remoteFilePath);
            Path dstPath = new Path(remoteToFilePath);
            boolean result = fs.rename(srcPath, dstPath);
            fs.close();
            return result;
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "hdfs:///user/hadoop/text.txt";  // source HDFS path
            String remoteToFilePath = "hdfs:///user/hadoop/new.txt"; // destination HDFS path
            try {
                if (HDFSApi.mv(conf, remoteFilePath, remoteToFilePath)) {
                    System.out.println("Moved " + remoteFilePath + " to " + remoteToFilePath);
                } else {
                    System.out.println("Operation failed (source file does not exist or the move failed)");
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

MyFSDataInputStream

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import java.io.*;

    public class MyFSDataInputStream extends FSDataInputStream {

        public MyFSDataInputStream(InputStream in) {
            super(in);
        }

        /**
         * Read one line.
         * Reads one character at a time until "\n" and returns the line without the "\n",
         * or null at the end of the stream.
         */
        public static String readline(BufferedReader br) throws IOException {
            StringBuilder line = new StringBuilder();
            int c;
            // Each call continues where the previous call stopped reading br,
            // so the builder starts empty every time
            while ((c = br.read()) != -1) {
                if (c == '\n') {
                    return line.toString();
                }
                line.append((char) c);
            }
            // last line without a trailing "\n", or null if nothing was left
            return line.length() > 0 ? line.toString() : null;
        }

        /** Print an HDFS file line by line using readline(). */
        public static void cat(Configuration conf, String remoteFilePath) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            Path remotePath = new Path(remoteFilePath);
            FSDataInputStream in = fs.open(remotePath);
            BufferedReader br = new BufferedReader(new InputStreamReader(in));
            String line;
            while ((line = MyFSDataInputStream.readline(br)) != null) {
                System.out.println(line);
            }
            br.close();
            in.close();
            fs.close();
        }

        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            String remoteFilePath = "/user/hadoop/text.txt"; // HDFS path
            try {
                MyFSDataInputStream.cat(conf, remoteFilePath);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

Reading HDFS through a URL

    import org.apache.hadoop.fs.*;
    import org.apache.hadoop.io.IOUtils;
    import java.io.*;
    import java.net.URL;

    public class HDFSApi {

        // Let java.net.URL understand hdfs:// URLs; this may be done only once per JVM
        static {
            URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
        }

        public static void main(String[] args) throws Exception {
            String remoteFilePath = "hdfs://localhost:9000/user/hadoop/text.txt"; // HDFS file
            InputStream in = null;
            try {
                // Open a data stream through the URL object and copy it to standard output
                in = new URL(remoteFilePath).openStream();
                IOUtils.copyBytes(in, System.out, 4096, false);
            } finally {
                IOUtils.closeStream(in);
            }
        }
    }
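`URL.setURLStreamHandlerFactory` may be called at most once per JVM (a second call throws an `Error`), which is why both URL-based classes register the factory in a static initializer. Once a handler is registered, reading works like any other URL. The sketch below runs the same open-and-copy loop against the built-in `file:` scheme so it works without a cluster; the 4096-byte buffer mirrors the `copyBytes` call above, and `UrlCopySketch` is a hypothetical name.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URL;

/** Hypothetical helper: copies whatever a URL points to into an output stream. */
final class UrlCopySketch {
    private UrlCopySketch() {}

    /** Opens url, copies its bytes to out with a 4 KiB buffer, closes the input, returns the byte count. */
    static long copy(URL url, OutputStream out) throws IOException {
        long total = 0;
        try (InputStream in = url.openStream()) {
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // Example argument: a file: URL such as file:///etc/hostname
        copy(new URL(args[0]), System.out);
        System.out.flush();
    }
}
```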