preface

Hello, everyone, I'm asong. Last article: Hands on implementation of a localcache - Design This paper introduces the points to consider in designing a local cache. Some readers' feedback can learn from the storage design of bigcache, which can reduce the pressure of GC. This is something I didn't consider before. This excellent open source design is worth learning. Therefore, before I started, I read several high-quality local cache libraries and summarized the excellent designs of each open source library, Let's take a look at this article.

Efficient concurrent access

A simple implementation of local caching can use map [string] interface {} + sync Combination of rwmutex, using sync Rwmutex optimizes the read, but when the concurrency comes up, it becomes a serial read, and the goroutine waiting for the lock will block. In order to solve this problem, we can divide barrels and use a lock for each barrel to reduce competition. Bucket splitting can also be understood as fragmentation. Each cache object is hashed (key) according to its key, and then segmented: hash(key)%N, n is the number of slices to be segmented; Ideally, each request falls on its own slice on average, and there is basically no lock competition.

The implementation of sharding mainly considers two points:

The selection of hash algorithm shall have the following characteristics:

- The hash result has high dispersion rate, that is, high randomness

- Avoid unnecessary memory allocation and avoid the pressure caused by garbage collection

- Hash algorithm is efficient

- The number of slices is not the more, the better. According to experience, we can choose the second power of N for the number of slices. In order to improve efficiency, we can also use bit operation instead of remainder.

In the open source local cache library, bigcache, go cache and freecache all implement the fragmentation function. The hash of bigcache selects fnv64a algorithm, the hash of go cache selects djb2 algorithm, and freecache selects xxhash algorithm. These three algorithms are all non encrypted hash algorithms. Which algorithm is better? We need to comprehensively consider the above three points. First, compare the operation efficiency. In the case of the same string, compare the benchmark:

func BenchmarkFnv64a(b *testing.B) {

b.ResetTimer()

for i:=0; i < b.N; i++{

fnv64aSum64("test")

}

b.StopTimer()

}

func BenchmarkXxxHash(b *testing.B) {

b.ResetTimer()

for i:=0; i < b.N; i++{

hashFunc([]byte("test"))

}

b.StopTimer()

}

func BenchmarkDjb2(b *testing.B) {

b.ResetTimer()

max := big.NewInt(0).SetUint64(uint64(math.MaxUint32))

rnd, err := rand.Int(rand.Reader, max)

var seed uint32

if err != nil {

b.Logf("occur err %s", err.Error())

seed = insecurerand.Uint32()

}else {

seed = uint32(rnd.Uint64())

}

for i:=0; i < b.N; i++{

djb33(seed,"test")

}

b.StopTimer()

}

Operation results:

goos: darwin goarch: amd64 pkg: github.com/go-localcache cpu: Intel(R) Core(TM) i9-9880H CPU @ 2.30GHz BenchmarkFnv64a-16 360577890 3.387 ns/op 0 B/op 0 allocs/op BenchmarkXxxHash-16 331682492 3.613 ns/op 0 B/op 0 allocs/op BenchmarkDjb2-16 334889512 3.530 ns/op 0 B/op 0 allocs/op

Through the comparison results, we can observe that the operation efficiency of Fnv64a algorithm is still very high. Next, we compare the randomness. First, we randomly generate 100000 strings, which are different;

func init() {

insecurerand.Seed(time.Now().UnixNano())

for i := 0; i < 100000; i++{

randString[i] = RandStringRunes(insecurerand.Intn(10))

}

}

var letterRunes = []rune("abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ")

func RandStringRunes(n int) string {

b := make([]rune, n)

for i := range b {

b[i] = letterRunes[insecurerand.Intn(len(letterRunes))]

}

return string(b)

}Then we run unit tests to count the number of conflicts:

func TestFnv64a(t *testing.T) {

m := make(map[uint64]struct{})

conflictCount :=0

for i := 0; i < len(randString); i++ {

res := fnv64aSum64(randString[i])

if _,ok := m[res]; ok{

conflictCount++

}else {

m[res] = struct{}{}

}

}

fmt.Printf("Fnv64a conflict count is %d", conflictCount)

}

func TestXxxHash(t *testing.T) {

m := make(map[uint64]struct{})

conflictCount :=0

for i:=0; i < len(randString); i++{

res := hashFunc([]byte(randString[i]))

if _,ok := m[res]; ok{

conflictCount++

}else {

m[res] = struct{}{}

}

}

fmt.Printf("Xxxhash conflict count is %d", conflictCount)

}

func TestDjb2(t *testing.T) {

max := big.NewInt(0).SetUint64(uint64(math.MaxUint32))

rnd, err := rand.Int(rand.Reader, max)

conflictCount := 0

m := make(map[uint32]struct{})

var seed uint32

if err != nil {

t.Logf("occur err %s", err.Error())

seed = insecurerand.Uint32()

}else {

seed = uint32(rnd.Uint64())

}

for i:=0; i < len(randString); i++{

res := djb33(seed,randString[i])

if _,ok := m[res]; ok{

conflictCount++

}else {

m[res] = struct{}{}

}

}

fmt.Printf("Djb2 conflict count is %d", conflictCount)

}

Operation results:

Fnv64a conflict count is 27651--- PASS: TestFnv64a (0.01s) Xxxhash conflict count is 27692--- PASS: TestXxxHash (0.01s) Djb2 conflict count is 39621--- PASS: TestDjb2 (0.01s)

Comprehensive comparison, using fnv64a algorithm will be better.

Reduce GC

Go language has a garbage collector, and the process of GC is also very time-consuming. Therefore, to achieve high performance, how to avoid GC is also an important thinking point. Freecache and bigcache both claim to avoid high GC. The design of bigcache to avoid high GC is based on the special treatment of map during garbage collection in go language; In go1 After 5, if the key and value in the map object do not contain pointers, the garbage collector will ignore them. The keys and values at this point do not use pointers, so GC can be avoided. Bigcache uses the hash value as the key, then serializes the cache data and puts it into a pre allocated byte array, and uses offset as the value. Using the pre allocated slice will only add an additional object to the GC. Because the byte slice does not contain other pointer data except its own object, the marking time of the GC for the whole object is O(1). The specific principle still needs to be understood through the source code. It is recommended to see the original author's article: https://dev.to/douglasmakey/h... ; The author wrote a simple version of cache based on BigCache, and then explained the above principle through code, which is more easy to understand.

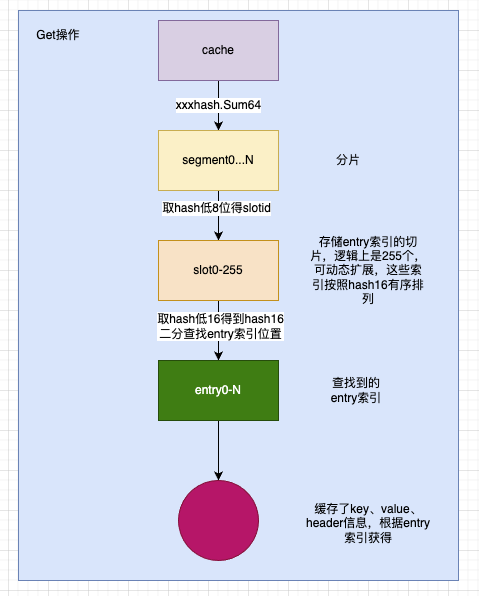

freecache implements a ringbuffer structure by itself. By reducing the number of pointers, the map is realized with zero GC overhead. The key and value are saved in ringbuffer, and the index is used to find objects. freecache is different from the traditional hash table implementation. There is a slot concept in the implementation. Draw a summary diagram, and don't look at the source code:

Recommended articles

- https://colobu.com/2019/11/18...

- https://dev.to/douglasmakey/h...

- https://studygolang.com/artic...

- https://blog.csdn.net/chizhen...

summary

In an efficient local cache, concurrent access and reducing GC are the most important. Before starting, I read the elegant design in these libraries and directly overturned the code I wrote before. There is really no perfect design. No matter how the design is designed, there will be sacrifices at some points, which is inevitable. The road of software development is still blocked and long. The code implemented by ourselves is still in the process of sewing and patching. It will be issued after it is improved. It all depends on your help.

Well, that's the end of this article. I'm asong. I'll see you next time.

* * welcome to the official account: Golang DreamWorks.