Author: You Luying, member of Datawhale, Fuzhou University

Besides classification and detection, image segmentation is another basic task in computer vision. It divides an image into regions according to their content. Compared with classification and detection, segmentation is a finer-grained task, because every single pixel has to be classified.

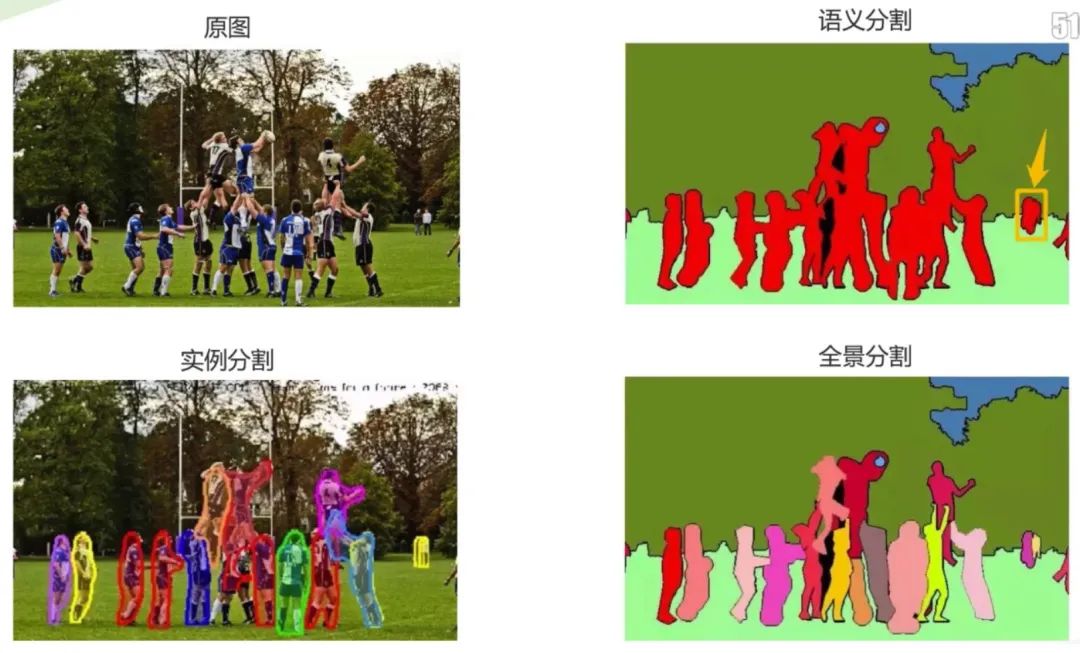

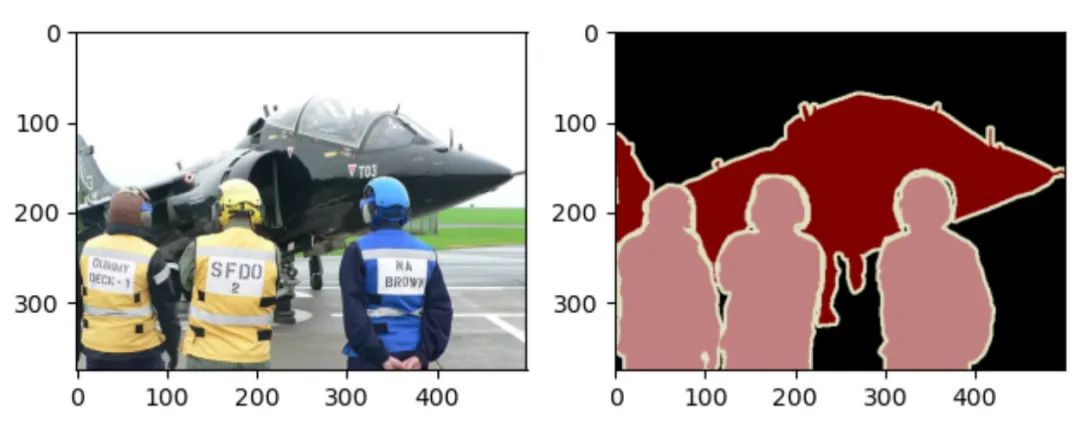

As the street-scene segmentation figure shows, because every pixel is classified, the outline of each object is traced precisely, instead of being approximated by a bounding box as in detection.
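To make the contrast concrete, here is a minimal NumPy sketch (the toy mask and the helper name `mask_to_bbox` are illustrative, not from the original article). A bounding box can always be derived from a pixel mask, but the box also covers pixels that do not belong to the object, so the mask carries strictly more information.

```python
import numpy as np


def mask_to_bbox(binary_mask):
    """Return (x_min, y_min, x_max, y_max) of the True pixels, or None if there are none."""
    ys, xs = np.nonzero(binary_mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# Toy 5x6 mask with an L-shaped object (1 = object, 0 = background)
mask = np.array([[0, 0, 0, 0, 0, 0],
                 [0, 1, 0, 0, 0, 0],
                 [0, 1, 0, 0, 0, 0],
                 [0, 1, 1, 1, 0, 0],
                 [0, 0, 0, 0, 0, 0]], dtype=np.uint8)

x0, y0, x1, y1 = mask_to_bbox(mask == 1)
box_area = (x1 - x0 + 1) * (y1 - y0 + 1)
print((x0, y0, x1, y1))                        # (1, 1, 3, 3)
print(int(mask.sum()), "pixels vs", box_area)  # 5 pixels vs 9
```

The object occupies 5 pixels, while its box covers 9: the extra 4 pixels are background that a detector's box cannot distinguish from the object.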

Image segmentation can be divided into three subfields: semantic segmentation, instance segmentation and panoptic segmentation.

As the comparison figure shows, semantic segmentation recognizes an image at the pixel level and assigns a category label to every pixel; it is widely used in medical imaging and autonomous driving. Instance segmentation is more challenging: it must not only detect each object in the image correctly, but also segment every instance precisely. Panoptic segmentation combines the two tasks and requires every pixel in the image to be assigned both a semantic label and an instance ID.
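One simple way to store "a semantic label and an instance ID" per pixel is to pack both into a single integer. The sketch below assumes the convention `class_id * 1000 + instance_id` (similar to the one used by Cityscapes instance-ID images); the offset of 1000 and the helper names are assumptions for illustration, not part of the original article.

```python
import numpy as np

# Assumption: fewer than OFFSET instances of any class appear in one image.
OFFSET = 1000


def encode_panoptic(semantic, instance):
    """Pack per-pixel class labels and instance ids into one integer map."""
    return semantic.astype(np.int64) * OFFSET + instance.astype(np.int64)


def decode_panoptic(panoptic):
    """Split a packed map back into (semantic, instance) maps."""
    return panoptic // OFFSET, panoptic % OFFSET


# Toy 2x4 image: class 0 = background (no instances), class 15 = person.
semantic = np.array([[0, 15, 15, 0],
                     [0, 15, 15, 0]])
# Two different people side by side: instance ids 1 and 2.
instance = np.array([[0, 1, 2, 0],
                     [0, 1, 2, 0]])

panoptic = encode_panoptic(semantic, instance)
print(np.unique(semantic).tolist())  # [0, 15]: semantic labels alone merge both people
print(np.unique(panoptic).tolist())  # [0, 15001, 15002]: each person is its own segment

sem_back, inst_back = decode_panoptic(panoptic)
print((sem_back == semantic).all() and (inst_back == instance).all())  # True
```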

01 Key steps in semantic segmentation

Network training often requires preprocessing the semantic label map or the instance segmentation map. For example, for a color label map, we look up the category each color stands for in a color mapping table, and then convert the map into the corresponding mask or one-hot encoding for training. The key steps are explained here.

Taking semantic segmentation as an example, we first introduce the different ways labels can be represented.

1.1 Semantic label map

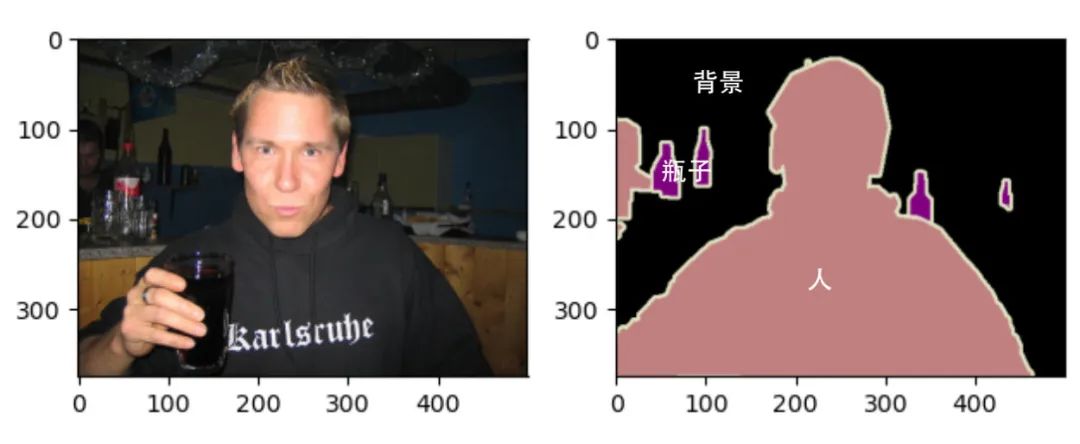

A semantic segmentation dataset contains original images and semantic label images; both are RGB images of the same size.

In the label image, white and black represent object borders and the background respectively, while each other color represents a different category.

1.2 Single-channel mask

Because each RGB value in the label map corresponds to exactly one category, it is easy to look up the category index of every pixel and generate a single-channel mask.

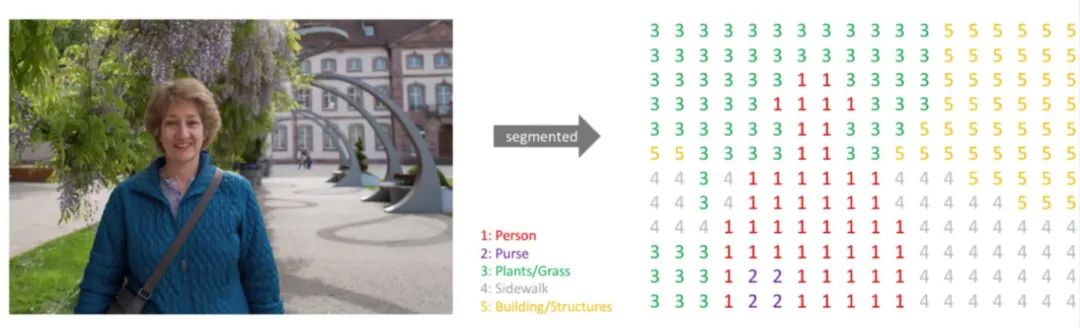

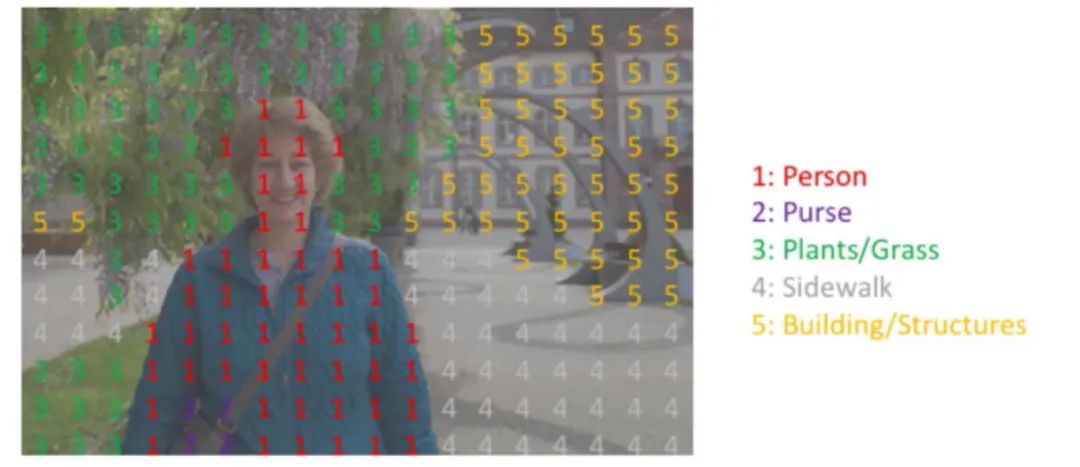

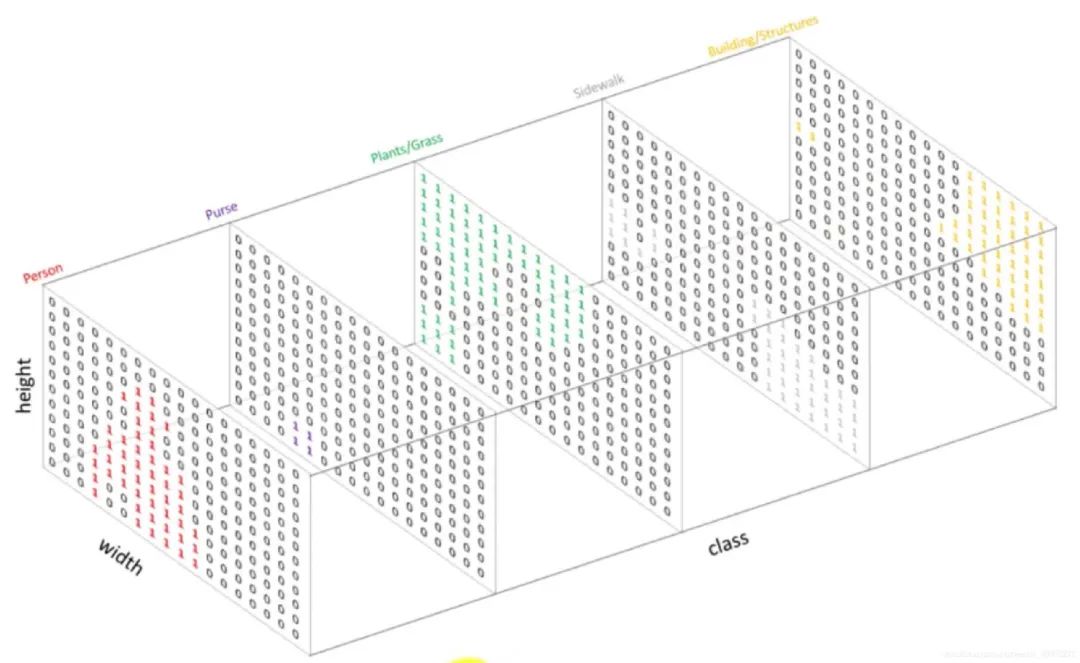

As shown in the figure, the annotated categories are Person, Purse, Plants, Sidewalk and Building. The right-hand figure shows the semantic label map after conversion to a single-channel mask: the size stays the same, but the number of channels drops from 3 to 1.

Each pixel position in the mask corresponds one-to-one to the same position in the label map.
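Since each color maps to exactly one class index, the conversion is a table lookup. The sketch below assumes a hypothetical three-color table and marks any color missing from the table with 255 (an assumption; 255 is a value often reserved for "ignore"). The helper name `rgb_label_to_mask` is illustrative.

```python
import numpy as np


def rgb_label_to_mask(label, colormap, unknown=255):
    """Map an (H, W, 3) RGB label to an (H, W) mask of class indices.

    The class index is the position of the pixel's color in `colormap`;
    colors missing from the table get the value `unknown`.
    """
    mask = np.full(label.shape[:2], unknown, dtype=np.uint8)
    for idx, color in enumerate(colormap):
        # True where all three channels equal this class color
        mask[np.all(label == np.array(color), axis=-1)] = idx
    return mask


# Hypothetical 3-class color table: background, class 1, class 2
colormap = [[0, 0, 0], [128, 0, 0], [0, 128, 0]]

label = np.array([
    [[0, 0, 0], [128, 0, 0], [128, 0, 0]],
    [[0, 128, 0], [0, 0, 0], [224, 224, 192]],  # last pixel: a color not in the table
], dtype=np.uint8)

mask = rgb_label_to_mask(label, colormap)
print(label.shape, "->", mask.shape)  # (2, 3, 3) -> (2, 3): 3 channels become 1
print(mask.tolist())                  # [[0, 1, 1], [2, 0, 255]]
```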

1.3 One-hot encoding

One-hot encoding turns a single-channel mask into a multi-channel representation with one binary channel per category.

For example, suppose the mask has size [H, W, 1] and there are five label categories. We convert it into a one-hot output with five channels, of size [H, W, 5]. For the first category's channel, every position where the mask has that category's value is set to 1 and every other position to 0; the next category's channel is built the same way from its own value, and so on for all five categories.
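The channel-by-channel procedure above can also be written as a single NumPy indexing step. This is a sketch of an alternative to a loop-based version, assuming the five class values are 0 to 4:

```python
import numpy as np

num_classes = 5
# A 2x3 mask with a trailing channel axis, i.e. [H, W, 1]; class values are 0..4
mask = np.array([[0, 1, 1],
                 [4, 2, 0]])[..., np.newaxis]

# Row i of the K x K identity matrix is the one-hot vector of class i, so
# indexing it with the (H, W) class map yields an [H, W, K] one-hot array.
onehot = np.eye(num_classes, dtype=np.uint8)[mask[..., 0]]
print(onehot.shape)             # (2, 3, 5)
print(onehot[..., 1].tolist())  # channel of class 1: [[0, 1, 1], [0, 0, 0]]

# argmax over the channel axis undoes the encoding
restored = np.argmax(onehot, axis=-1)[..., np.newaxis]
print((restored == mask).all())  # True
```

Indexing with `mask[..., 0]` drops the trailing channel axis first; indexing with the [H, W, 1] array directly would produce an extra axis, [H, W, 1, K].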

02 Semantic segmentation in practice

Next, we use the Pascal VOC 2012 semantic segmentation dataset as an example to show how to convert between these representations.

Pascal VOC 2012 is one of the most important datasets for semantic segmentation. It contains 20 object categories, such as person and motor vehicles, and every pixel is labeled either with one of these categories or as background.

The dataset is openly available at:

https://gas.graviti.cn/dataset/yluy/VOC2012Segmentation

2.1 Reading the dataset

Here we use the Graviti data platform to read the dataset online through its TensorBay SDK. The platform supports a variety of dataset types and hosts many public datasets. A few preparations are needed before use:

- Fork the dataset: to use a public dataset, first fork it into your own account.

- Get an AccessKey: obtain the key the SDK needs to interact with the Graviti platform at https://gas.graviti.cn/tensorbay/developer

- Understand segments: a segment is a further subdivision of a dataset. For example, the VOC dataset is divided into "train" and "test" segments.

```python
from tensorbay import GAS
from tensorbay.dataset import Dataset
from PIL import Image
import numpy as np
import matplotlib.pyplot as plt

ACCESS_KEY = "<YOUR_ACCESSKEY>"
gas = GAS(ACCESS_KEY)


def read_voc_images(is_train=True, index=0):
    """
    Read one VOC image and its semantic label using TensorBay.
    """
    dataset = Dataset("VOC2012Segmentation", gas)
    if is_train:
        segment = dataset["train"]
    else:
        segment = dataset["test"]
    data = segment[index]
    feature = Image.open(data.open()).convert("RGB")
    label = Image.open(data.label.semantic_mask.open()).convert("RGB")
    visualize(feature, label)
    return feature, label  # PIL Images


def visualize(feature, label):
    """
    Show the image and its label side by side.
    """
    fig = plt.figure()
    ax = fig.add_subplot(121)  # first subplot: original image
    ax.imshow(feature)
    ax2 = fig.add_subplot(122)  # second subplot: label map
    ax2.imshow(label)
    plt.show()


train_feature, train_label = read_voc_images(is_train=True, index=10)
train_label = np.array(train_label)  # (375, 500, 3)
```

2.2 Color mapping table

Once we have the color label map, we can build a color mapping table that lists each RGB value in the label together with the category it denotes.

```python
def colormap_voc():
    """
    Create the VOC colormap: colormap[i] is the RGB color of classes[i].
    """
    colormap = [[0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
                [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
                [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
                [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
                [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0],
                [0, 64, 128]]

    classes = ['background', 'aeroplane', 'bicycle', 'bird', 'boat',
               'bottle', 'bus', 'car', 'cat', 'chair', 'cow',
               'diningtable', 'dog', 'horse', 'motorbike', 'person',
               'potted plant', 'sheep', 'sofa', 'train', 'tv/monitor']

    return colormap, classes
```
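This table covers only the 21 classes. In the standard VOC palette, the border pixels described in 1.1 are drawn in the light color (224, 224, 192), which is not in the table, so it can be worth checking which colors of a label the table does not cover. A minimal sketch (the helper name `find_unmapped_colors` and the two-entry table are hypothetical):

```python
import numpy as np


def find_unmapped_colors(label, colormap):
    """Return the distinct RGB colors in `label` (H, W, 3) that are missing from `colormap`."""
    colors = np.unique(label.reshape(-1, 3), axis=0)  # one row per distinct color
    known = {tuple(c) for c in colormap}
    unmapped = []
    for c in colors:
        rgb = tuple(int(v) for v in c)
        if rgb not in known:
            unmapped.append(rgb)
    return unmapped


# Toy label: background, an aeroplane pixel, and two border pixels.
colormap = [[0, 0, 0], [128, 0, 0]]  # first two entries of the VOC table
label = np.array([[[0, 0, 0], [128, 0, 0]],
                  [[224, 224, 192], [224, 224, 192]]], dtype=np.uint8)
print(find_unmapped_colors(label, colormap))  # [(224, 224, 192)]
```

With the label-to-one-hot conversion in 2.3, such unmatched pixels get an all-zero one-hot vector, and `np.argmax` then returns index 0 for them, so border pixels end up labeled as background.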

2.3 Label and one-hot conversion

Using the mapping table, we can convert between the semantic label map and its one-hot encoding in both directions:

```python
def label_to_onehot(label, colormap):
    """
    Converts a segmentation label (H, W, C) to (H, W, K), where the last dim is a
    one-hot encoding vector, C is usually 1 or 3, and K is the number of classes.
    """
    semantic_map = []
    for colour in colormap:
        # True where every channel of the pixel equals this class color
        equality = np.equal(label, colour)
        class_map = np.all(equality, axis=-1)
        semantic_map.append(class_map)
    semantic_map = np.stack(semantic_map, axis=-1).astype(np.float32)
    return semantic_map


def onehot_to_label(semantic_map, colormap):
    """
    Converts a one-hot map (H, W, K) back to a color label (H, W, C).
    """
    x = np.argmax(semantic_map, axis=-1)  # class index of each pixel, (H, W)
    colour_codes = np.array(colormap)
    label = np.uint8(colour_codes[x.astype(np.uint8)])  # look up each index's color
    return label


colormap, classes = colormap_voc()
semantic_map = label_to_onehot(train_label, colormap)
print(semantic_map.shape)  # [H, W, K] = [375, 500, 21], 21 classes including background
label = onehot_to_label(semantic_map, colormap)
print(label.shape)  # [H, W, C] = [375, 500, 3]
```

2.4 One-hot and mask conversion

Similarly, we can convert between the single-channel mask and the one-hot encoding. This step no longer needs the color table; only the number of classes is required:

```python
def onehot2mask(semantic_map):
    """
    Converts a one-hot map (K, H, W) to a single-channel mask (H, W).
    """
    _mask = np.argmax(semantic_map, axis=0).astype(np.uint8)
    return _mask


def mask2onehot(mask, num_classes):
    """
    Converts a segmentation mask (H, W) to (K, H, W), where the first dim is a
    one-hot encoding vector.
    """
    semantic_map = [mask == i for i in range(num_classes)]  # one boolean map per class
    return np.array(semantic_map).astype(np.uint8)


mask = onehot2mask(semantic_map.transpose(2, 0, 1))  # (H, W, K) -> (K, H, W) first
print(np.unique(mask))  # [ 0  1 15]: background, aeroplane and person
print(mask.shape)  # (375, 500)
semantic_map = mask2onehot(mask, len(colormap))
print(semantic_map.shape)  # (21, 375, 500)
```