1, Viewing storage system information

hdfs dfsadmin -report [-live] [-dead] [-decommissioning]

Output the basic information and relevant data statistics of the file system

[root@master ~]# hdfs dfsadmin -report

Output the basic information and relevant data statistics of the live (online) DataNodes in the file system

[root@master ~]# hdfs dfsadmin -report -live

Output the basic information and relevant data statistics of the dead DataNodes in the file system

[root@master ~]# hdfs dfsadmin -report -dead

Output the basic information and relevant data statistics of the DataNodes that are currently being decommissioned

[root@master ~]# hdfs dfsadmin -report -decommissioning
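The three filters can also be collected in one pass, for example to keep a snapshot of the cluster state. The following is only a minimal sketch: the function name `save_dfs_reports` and the output file names are hypothetical, and it assumes that the `hdfs` client is on the PATH and that the caller is allowed to run `dfsadmin`.

```shell
# Sketch: save one "hdfs dfsadmin -report" per filter into a directory.
# save_dfs_reports and the file names are illustrative, not part of Hadoop.
save_dfs_reports() {
    local out_dir="$1"
    local filter
    mkdir -p "$out_dir" || return 1
    for filter in live dead decommissioning; do
        # Each call passes exactly one of the filters described above.
        hdfs dfsadmin -report "-$filter" > "$out_dir/report-$filter.txt" || return 1
    done
}

# Usage (assumes a running cluster):
#   save_dfs_reports /tmp/dfs-reports
```

Writing each filter to its own file keeps the live, dead, and decommissioning lists separate, so they can be compared between two runs with ordinary tools such as diff.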

2, File operation commands

[root@master ~]# hdfs dfs    # Run without arguments to list all available hdfs dfs commands

(1) Basic operation of HDFS directory

1. List the files and directories under a path

hdfs dfs -ls [-d] [-h] [-R] [<path> ...]

-d: List a directory as a plain file, showing the directory entry itself instead of its contents

-h: Print file sizes in human-readable form (for example 64.0m instead of 67108864)

-R: Recursively lists the contents of a directory

Example:

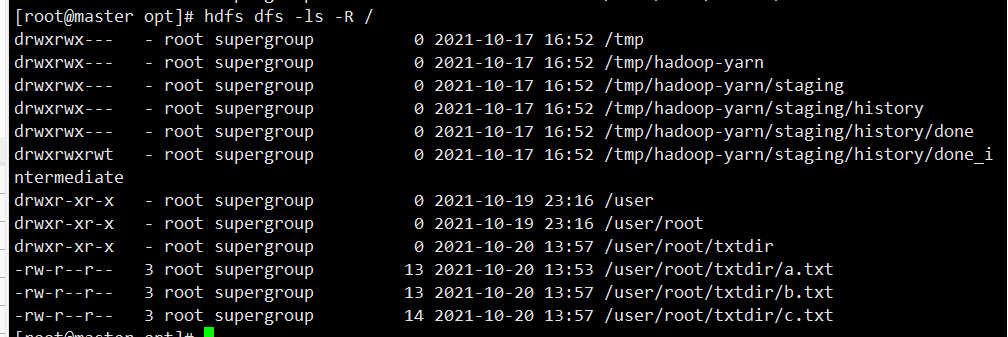

[root@master ~]# hdfs dfs -ls /

2. Create directory

hdfs dfs -mkdir [-p] <path> ...

-p: Create any missing parent directories along the path. Without -p, directories can only be created one level at a time.

[root@master ~]# hdfs dfs -mkdir -p /user/root/txtdir    # Create the /user/root/txtdir directory
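The -p option behaves much like the local `mkdir -p`, so its effect can be tried without a cluster. The sketch below touches only the local file system; the `demo_mkdir_p` name and the scratch directory are hypothetical and serve purely as an illustration.

```shell
# Local analogy for -p (no HDFS involved): plain mkdir fails when the
# parent directories are missing, mkdir -p creates them along the way.
demo_mkdir_p() {
    local base
    base="$(mktemp -d)" || return 1

    if mkdir "$base/user/root/txtdir" 2>/dev/null; then
        echo "without -p: created"
    else
        echo "without -p: failed (parent directories missing)"
    fi

    if mkdir -p "$base/user/root/txtdir"; then
        echo "with -p: created"
    fi

    # Clean up the scratch directory.
    rm -rf "$base"
}

demo_mkdir_p
```

The first attempt fails because user and user/root do not exist yet; the second attempt succeeds because -p creates them first.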

3. Upload files to HDFS

| Command | Description |
|---|---|
| hdfs dfs -put [-f] [-p] [-l] ... | Copy files from the local file system to HDFS. The main arguments are the local source path and the HDFS destination path. |
| hdfs dfs -copyFromLocal [-f] [-p] [-l] ... | Same as -put. |
| hdfs dfs -moveFromLocal ... | Same as -put, except that the local source file is deleted after it has been copied. |

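-f: Overwrite the destination if it already exists

-p: Preserve the access and modification times, ownership, and permissions of the file

-l: Allow the DataNode to lazily persist the file to disk (this forces a replication factor of 1 and reduces durability)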

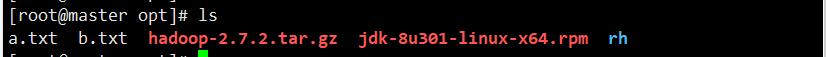

Create the files a.txt, b.txt, and c.txt in the /opt directory:

[root@master opt]# echo "I am student" >a.txt

[root@master opt]# echo "I am teacher" >b.txt

[root@master opt]# echo "I like Hadoop" >c.txt

Then upload them with the three upload commands:

[root@master opt]# hdfs dfs -put a.txt /user/root/txtdir

[root@master opt]# hdfs dfs -copyFromLocal b.txt /user/root/txtdir

[root@master opt]# hdfs dfs -moveFromLocal c.txt /user/root/txtdir

The local directory now contains only a.txt and b.txt. c.txt is gone because it was uploaded with -moveFromLocal, which copies the file to HDFS and then deletes the local source file.

Use a recursive listing (-ls -R) to view the uploaded directories and files
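Because -put without -f fails when the destination already exists, it can help to check for the target first and then confirm the result with a recursive listing. The following is a minimal sketch of that idea, not a method from the original walkthrough: `put_if_absent` is a hypothetical helper, and it assumes a running cluster with the `hdfs` client on the PATH.

```shell
# Sketch: upload a local file only when the target does not exist yet,
# then list the target directory recursively.
# put_if_absent is a hypothetical helper, not an hdfs subcommand.
put_if_absent() {
    local src="$1" dst_dir="$2" target
    target="$dst_dir/$(basename "$src")"

    # "hdfs dfs -test -e" exits with status 0 when the path exists.
    if hdfs dfs -test -e "$target"; then
        echo "skip: $target already exists (use -put -f to overwrite)" >&2
        return 1
    fi

    hdfs dfs -put "$src" "$dst_dir" || return 1
    hdfs dfs -ls -R "$dst_dir"
}

# Usage (assumes the files and directory from the example above):
#   put_if_absent a.txt /user/root/txtdir
```

The check and the upload are two separate calls, so this is a convenience for interactive use rather than a guarantee against concurrent writers.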

4. View file contents

| command | explain |

|---|---|

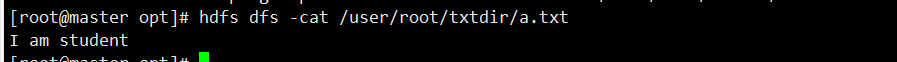

| hdfs dfs -cat [-ignoreCrc] ... | View the contents of the HDFS file. The main transmission indicates the file path. |

| hdfs dfs -text [-ignoreCrc] ... | Get the source file and output it in text format. The allowed formats are zip and TextRecordInputStream and Avro. |

| hdfs dfs -tail [-f] | Output the last 1KB data of HDFS file. The main parameters specify the file- f indicates the data added when the file grows |

Example:

5. Merge local small files and upload to HDFS

hdfs dfs -appendToFile ...

[root@master opt]# hdfs dfs -appendToFile a.txt b.txt /user/root/txtdir/merge.txt

[root@master opt]# hdfs dfs -cat /user/root/txtdir/merge.txt

I am student

I am teacher

6. Merge all files in one directory of HDFS and download to local

hdfs dfs -getmerge [-nl]

-nl: Add a newline character at the end of each file

Example:

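[root@master opt]# hdfs dfs -getmerge /user/root/txtdir/ /opt/merge

[root@master opt]# cat merge

I am student

I am teacher

I like Hadoop

I am student

I am teacher

The merged file has five lines because the directory holds a.txt, b.txt, c.txt, and merge.txt, and -getmerge concatenates all of them.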
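The difference that -nl makes only shows up when the source files do not end in a newline. The following local emulation sketches that effect without touching HDFS; `merge_files` is a hypothetical helper written for this illustration and is not how getmerge is implemented.

```shell
# Local emulation of what -nl changes (no HDFS involved): concatenate
# files, optionally writing a newline after each one.
# merge_files is a hypothetical helper used only for this illustration.
merge_files() {
    local add_newline="$1"
    local f
    shift
    for f in "$@"; do
        cat "$f"
        if [ "$add_newline" = "yes" ]; then
            printf '\n'
        fi
    done
}

tmp="$(mktemp -d)"
printf 'I am student' > "$tmp/a.txt"   # no trailing newline
printf 'I am teacher' > "$tmp/b.txt"   # no trailing newline

merge_files no  "$tmp/a.txt" "$tmp/b.txt"; echo   # both sentences on one line
merge_files yes "$tmp/a.txt" "$tmp/b.txt"         # one sentence per line
rm -rf "$tmp"
```

The files in the example above were written with echo, so they already end in a newline and merge cleanly without -nl.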

7. Download file to local system

| Command | Description |
|---|---|
| hdfs dfs -get [-p] [-ignoreCrc] [-crc] ... | Copy the file at the given HDFS path to the local file system. The main arguments are the HDFS source path and the local destination path. -p preserves access and modification times, ownership, and mode. |
| hdfs dfs -copyToLocal [-p] [-ignoreCrc] [-crc] ... | Same as -get. |

Example:

[root@master opt]# hdfs dfs -get /user/root/txtdir/c.txt /opt

[root@master opt]# ls

a.txt b.txt c.txt hadoop-2.7.2.tar.gz jdk-8u301-linux-x64.rpm merge rh

8. Delete files and directories

| Command | Description |
|---|---|
| hdfs dfs -rm [-f] [-R] [-skipTrash] ... | Delete files on HDFS. The main argument is the path to delete. -r (or -R) deletes directories recursively. -f suppresses the diagnostic message and does not change the exit status to an error when the file does not exist. -skipTrash bypasses the trash, if enabled, and deletes immediately. |
| hdfs dfs -rmdir [--ignore-fail-on-non-empty] ... | Delete an empty directory. The main argument is the directory path. |

[root@master opt]# hdfs dfs -rm -r /user/root/txtdir
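Since -rm -r removes a whole directory tree in one step, a small guard in front of it can prevent accidents. The following is a minimal sketch under stated assumptions: `rm_hdfs_dir` is a hypothetical helper, the two guards are only one possible choice, and a running cluster with the `hdfs` client on the PATH is assumed.

```shell
# Sketch: recursive delete with two guards in front of "hdfs dfs -rm -r".
# rm_hdfs_dir is a hypothetical helper, not an hdfs subcommand.
rm_hdfs_dir() {
    local dir="$1"

    # Guard 1: never accept an empty argument or the root directory.
    case "$dir" in
        ""|"/") echo "refusing to delete '$dir'" >&2; return 2 ;;
    esac

    # Guard 2: "hdfs dfs -test -d" exits with status 0 only for a directory.
    if ! hdfs dfs -test -d "$dir"; then
        echo "not a directory: $dir" >&2
        return 1
    fi

    hdfs dfs -rm -r "$dir"
}

# Usage (assumes the directory from the example above):
#   rm_hdfs_dir /user/root/txtdir
```

The helper deliberately does not pass -skipTrash, so on clusters with the trash enabled a deleted directory can still be recovered.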