1, Foreword

Gesture event collection is a core part of iOS click event collection. The basic idea is simple, but several details are difficult in practice. This article works through those difficulties one by one.

Let's take a look at how to collect gesture events in iOS.

2, Gesture introduction

Apple provides UIGestureRecognizer [1] and its related classes to handle gestures. Common gesture recognizers include:

- UITapGestureRecognizer: tap;
- UILongPressGestureRecognizer: long press;
- UIPinchGestureRecognizer: pinch;
- UIRotationGestureRecognizer: rotation.

The UIGestureRecognizer class defines a set of common behaviors that can be configured for all specific gesture recognizers.

A gesture recognizer responds to touches on a specific view, so it must be attached to that view with UIView's -addGestureRecognizer: method.

A gesture recognizer can have multiple target-action pairs, which are independent of each other. Once the gesture is recognized, a message is sent to each target-action pair.
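As a minimal sketch of the relationship described above, one tap recognizer can be attached to a view and given two independent target-action pairs. The names `DemoViewController`, `handleTap:`, and `logTap:` are hypothetical and chosen only for illustration:

```objc
#import <UIKit/UIKit.h>

// Hypothetical view controller used only for illustration.
@interface DemoViewController : UIViewController
@end

@implementation DemoViewController

- (void)viewDidLoad {
    [super viewDidLoad];

    // The first target-action pair is supplied at initialization.
    UITapGestureRecognizer *tap =
        [[UITapGestureRecognizer alloc] initWithTarget:self action:@selector(handleTap:)];
    // A second, independent pair on the same recognizer.
    [tap addTarget:self action:@selector(logTap:)];
    // Associate the recognizer with the view whose touches it should receive.
    [self.view addGestureRecognizer:tap];
}

- (void)handleTap:(UITapGestureRecognizer *)gesture {
    NSLog(@"handleTap: on %@", gesture.view);
}

- (void)logTap:(UITapGestureRecognizer *)gesture {
    NSLog(@"logTap: state = %ld", (long)gesture.state);
}

@end
```

When the tap is recognized, both `handleTap:` and `logTap:` receive a message; removing one pair with -removeTarget:action: leaves the other in place.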

3, Acquisition scheme

Because each gesture recognizer can be associated with multiple target-action pairs, we can use the runtime's Method Swizzling to add one extra target-action pair for event collection whenever a developer adds a target-action pair to a gesture.
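For readers unfamiliar with Method Swizzling, the following is a generic sketch built on the Objective-C runtime. It is not the SDK's `sa_swizzleMethod:withMethod:error:` helper, whose implementation is not shown in this article; `DemoSwizzleInstanceMethod` and `DemoGreeter` are hypothetical names used only for illustration:

```objc
#import <Foundation/Foundation.h>
#import <objc/runtime.h>

// Hypothetical helper: swap the implementations of two instance methods on cls.
static BOOL DemoSwizzleInstanceMethod(Class cls, SEL originalSel, SEL swizzledSel) {
    Method original = class_getInstanceMethod(cls, originalSel);
    Method swizzled = class_getInstanceMethod(cls, swizzledSel);
    if (original == NULL || swizzled == NULL) {
        return NO;
    }
    // If cls only inherits originalSel, add it to cls first so the superclass stays untouched.
    BOOL added = class_addMethod(cls, originalSel,
                                 method_getImplementation(swizzled),
                                 method_getTypeEncoding(swizzled));
    if (added) {
        class_replaceMethod(cls, swizzledSel,
                            method_getImplementation(original),
                            method_getTypeEncoding(original));
    } else {
        method_exchangeImplementations(original, swizzled);
    }
    return YES;
}

// Hypothetical class used to demonstrate the helper.
@interface DemoGreeter : NSObject
- (NSString *)greet;
- (NSString *)demo_greet;
@end

@implementation DemoGreeter

- (NSString *)greet {
    return @"hello";
}

- (NSString *)demo_greet {
    // After swizzling, this selector points at the original -greet, so this is not recursion.
    return [[self demo_greet] stringByAppendingString:@" (tracked)"];
}

@end
```

After calling `DemoSwizzleInstanceMethod([DemoGreeter class], @selector(greet), @selector(demo_greet))`, sending `greet` to a `DemoGreeter` instance runs the `demo_greet` body, which calls through to the original implementation and returns `@"hello (tracked)"`. The same call-through pattern is why a swizzled method appears to call itself.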

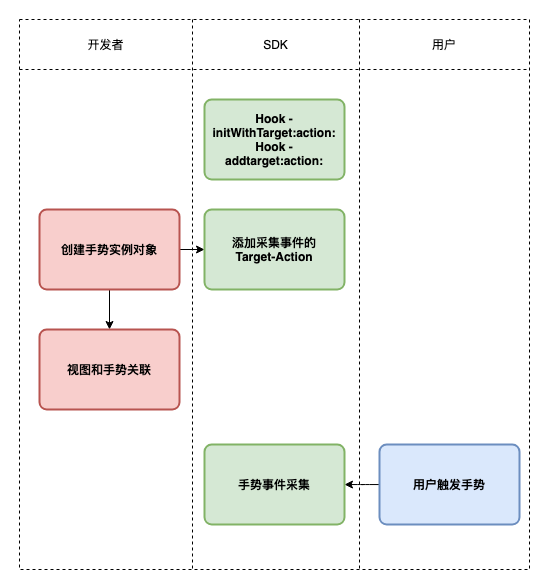

The overall process is shown in Figure 3-1:

Figure 3-1 gesture event collection process

Let's look at the specific code implementation.

1. Method Swizzling:

```objc
- (void)enableAutoTrackGesture {
    static dispatch_once_t onceToken;
    dispatch_once(&onceToken, ^{
        [UIGestureRecognizer sa_swizzleMethod:@selector(initWithTarget:action:)
                                   withMethod:@selector(sensorsdata_initWithTarget:action:)
                                        error:NULL];
        [UIGestureRecognizer sa_swizzleMethod:@selector(addTarget:action:)
                                   withMethod:@selector(sensorsdata_addTarget:action:)
                                        error:NULL];
    });
}
```

2. Add a target-action pair to collect events:

```objc
- (void)sensorsdata_addTarget:(id)target action:(SEL)action {
    self.sensorsdata_gestureTarget = [SAGestureTarget targetWithGesture:self];
    // The implementations are swapped, so this calls the original -addTarget:action:
    [self sensorsdata_addTarget:self.sensorsdata_gestureTarget action:@selector(trackGestureRecognizerAppClick:)];
    [self sensorsdata_addTarget:target action:action];
}
```

3. Collect the gesture event:

```objc
- (void)trackGestureRecognizerAppClick:(UIGestureRecognizer *)gesture {
    // Gesture event collection
    ...
}
```

With Method Swizzling we can collect gesture events as intended, but there is a problem: many system behaviors are also implemented with gestures, and those gestures are collected as well, whereas our intent is to collect only the gestures added by developers.

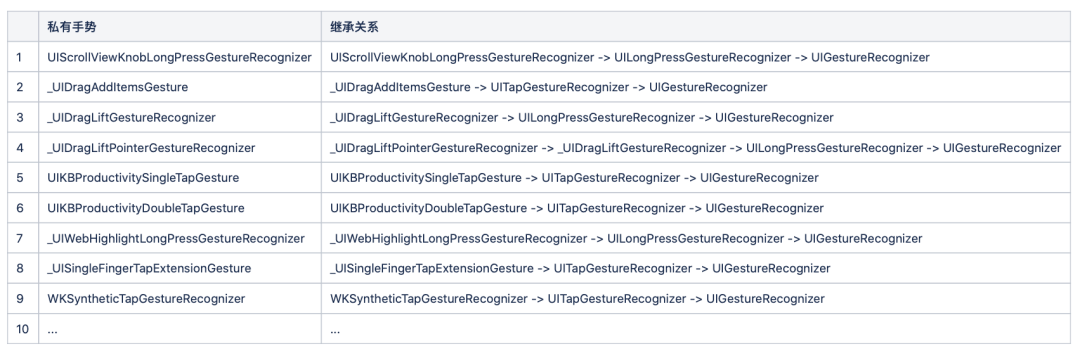

Some private gestures are shown in table 3-1:

Table 3-1 some private gestures

We therefore need a way to exclude private gesture events from collection.

3.1 Filtering out system private gestures

There is no essential difference between private gestures and public gestures. Both inherit, directly or indirectly, from the UIGestureRecognizer class.

When a target-action pair is added to a gesture, we can check the bundle of the target object's class to judge whether the gesture is a system private gesture.

System library bundles have paths like the following:

/System/Library/PrivateFrameworks/UIKitCore.framework

/System/Library/Frameworks/WebKit.framework

The developer's bundle format is as follows:

/private/var/containers/Bundle/Application/8264D420-DE23-48AC-9985-A7F1E131A52A/CDDStoreDemo.app

The implementation is as follows:

```objc
- (BOOL)isPrivateClassWithObject:(NSObject *)obj {
    if (!obj) {
        return NO;
    }
    NSString *bundlePath = [[NSBundle bundleForClass:[obj class]] bundlePath];
    if ([bundlePath hasPrefix:@"/System/Library"]) {
        return YES;
    }
    return NO;
}
```

Note that this method does not work on simulators, where system frameworks are loaded from the simulator runtime's directory on the host, so their bundle paths do not start with /System/Library.

This scheme can tell whether the target belongs to a system library, but when the added target is the UIGestureRecognizer instance itself, it cannot tell whether the gesture is one that should be collected. The scheme is therefore not feasible.

3.2 Collecting only tap and long-press gestures

Debugging shows that most system private gestures are subclasses, while developers rarely subclass gesture recognizers. We can therefore collect only gestures that are exactly UITapGestureRecognizer or UILongPressGestureRecognizer, and skip subclassed gestures.

When creating the target object, we check the gesture and return a valid target object only for gestures that meet these conditions.

```objc
+ (SAGestureTarget * _Nullable)targetWithGesture:(UIGestureRecognizer *)gesture {
    // -isMemberOfClass: matches the exact class only, so subclasses are rejected
    if ([gesture isMemberOfClass:UITapGestureRecognizer.class] ||
        [gesture isMemberOfClass:UILongPressGestureRecognizer.class]) {
        return [[SAGestureTarget alloc] init];
    }
    return nil;
}
```

4, Overcoming difficulties

At this point the collection of tap and long-press gestures appears to work. In practice, however, several difficulties remain:

Scenario 1: the developer adds a target-action pair and later removes it;

Scenario 2: the developer adds a target-action pair and the target is later released;

Scenario 3: although only UITapGestureRecognizer and UILongPressGestureRecognizer are collected, some system private gestures are not subclassed and are collected by mistake;

Scenario 4: UIAlertController click event collection requires special handling;

Scenario 5: some gesture states require special handling.

4.1 Managing target-action pairs

In scenarios 1 and 2, the SDK should not collect gesture events. However, the SDK has added its own target-action pair, so at collection time it must check whether any valid target-action pair exists other than the one added by the SDK. If none exists, the gesture event should not be collected.

UIGestureRecognizer offers no public API for obtaining all target-action pairs of a gesture. The private API _targets could be used, but relying on it may cause problems for customers. We therefore hook the relevant methods and keep our own record of the target-action pairs.

Create a new SAGestureTargetActionModel class to manage the target and action:

```objc
@interface SAGestureTargetActionModel : NSObject

@property (nonatomic, weak) id target;
@property (nonatomic, assign) SEL action;
@property (nonatomic, assign, readonly) BOOL isValid;

- (instancetype)initWithTarget:(id)target action:(SEL)action;
+ (SAGestureTargetActionModel * _Nullable)containsObjectWithTarget:(id)target
                                                         andAction:(SEL)action
                                                        fromModels:(NSArray <SAGestureTargetActionModel *>*)models;

@end
```
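The article shows only the interface of this model. As a rough sketch of how such a model might behave, the following uses a hypothetical stand-in class, `DemoTargetActionModel`, rather than the SDK's own code. The validity rule (the weak target is still alive and responds to the action) and the signature of `filterValidModelsFrom:` are assumptions made for illustration:

```objc
#import <Foundation/Foundation.h>
#import <objc/runtime.h>

NS_ASSUME_NONNULL_BEGIN

// Hypothetical stand-in for SAGestureTargetActionModel; not the SDK's implementation.
@interface DemoTargetActionModel : NSObject
@property (nonatomic, weak, nullable) id target;
@property (nonatomic, assign) SEL action;
@property (nonatomic, assign, readonly) BOOL isValid;
- (instancetype)initWithTarget:(id)target action:(SEL)action;
+ (nullable DemoTargetActionModel *)containsObjectWithTarget:(id)target
                                                   andAction:(SEL)action
                                                  fromModels:(NSArray<DemoTargetActionModel *> *)models;
+ (NSArray<DemoTargetActionModel *> *)filterValidModelsFrom:(NSArray<DemoTargetActionModel *> *)models;
@end

@implementation DemoTargetActionModel

- (instancetype)initWithTarget:(id)target action:(SEL)action {
    self = [super init];
    if (self) {
        _target = target;
        _action = action;
    }
    return self;
}

// Assumption: a pair is valid while its weak target is alive and responds to the action.
- (BOOL)isValid {
    id strongTarget = self.target;
    return strongTarget != nil && [strongTarget respondsToSelector:self.action];
}

// Returns the recorded model with the same target and action, or nil if there is none.
+ (nullable DemoTargetActionModel *)containsObjectWithTarget:(id)target
                                                   andAction:(SEL)action
                                                  fromModels:(NSArray<DemoTargetActionModel *> *)models {
    for (DemoTargetActionModel *model in models) {
        if (model.target == target && sel_isEqual(model.action, action)) {
            return model;
        }
    }
    return nil;
}

// Keeps only the models that are still valid.
+ (NSArray<DemoTargetActionModel *> *)filterValidModelsFrom:(NSArray<DemoTargetActionModel *> *)models {
    NSMutableArray<DemoTargetActionModel *> *valid = [NSMutableArray array];
    for (DemoTargetActionModel *model in models) {
        if (model.isValid) {
            [valid addObject:model];
        }
    }
    return [valid copy];
}

@end

NS_ASSUME_NONNULL_END
```

Holding the target weakly is what makes scenario 2 detectable in this sketch: once the developer's target is released, the weak reference becomes nil, `isValid` returns NO, and the pair drops out of the filtered list without the gesture recognizer having to notify anyone.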

Record the target-action pairs in -addTarget:action: and -removeTarget:action:

```objc
- (void)enableAutoTrackGesture {
    static dispatch_once_t onceToken;
    dispatch_once(&onceToken, ^{
        ...
        [UIGestureRecognizer sa_swizzleMethod:@selector(removeTarget:action:)
                                   withMethod:@selector(sensorsdata_removeTarget:action:)
                                        error:NULL];
    });
}

- (void)sensorsdata_addTarget:(id)target action:(SEL)action {
    if (self.sensorsdata_gestureTarget) {
        // Record each distinct target-action pair once
        if (![SAGestureTargetActionModel containsObjectWithTarget:target andAction:action fromModels:self.sensorsdata_targetActionModels]) {
            SAGestureTargetActionModel *resultModel = [[SAGestureTargetActionModel alloc] initWithTarget:target action:action];
            [self.sensorsdata_targetActionModels addObject:resultModel];
            [self sensorsdata_addTarget:self.sensorsdata_gestureTarget action:@selector(trackGestureRecognizerAppClick:)];
        }
    }
    [self sensorsdata_addTarget:target action:action];
}

- (void)sensorsdata_removeTarget:(id)target action:(SEL)action {
    if (self.sensorsdata_gestureTarget) {
        // Drop the record for the pair that is being removed
        SAGestureTargetActionModel *existModel = [SAGestureTargetActionModel containsObjectWithTarget:target andAction:action fromModels:self.sensorsdata_targetActionModels];
        if (existModel) {
            [self.sensorsdata_targetActionModels removeObject:existModel];
        }
    }
    [self sensorsdata_removeTarget:target action:action];
}
```

During event collection, verify whether the collection conditions are met:

```objc
- (void)trackGestureRecognizerAppClick:(UIGestureRecognizer *)gesture {
    // Skip collection when no valid developer target-action pair remains
    if ([SAGestureTargetActionModel filterValidModelsFrom:gesture.sensorsdata_targetActionModels].count == 0) {
        return;
    }
    // Gesture event collection
    ...
}
```

4.2 Blacklist

For scenario 3, the Shence SDK adds a blacklist configuration: gestures attached to the listed view types are excluded from collection.

```json
{
    "public": [
        "UIPageControl",
        "UITextView",
        "UITabBar",
        "UICollectionView",
        "UISearchBar"
    ],
    "private": [
        "_UIContextMenuContainerView",
        "_UIPreviewPlatterView",
        "UISwitchModernVisualElement",
        "WKContentView",
        "UIWebBrowserView"
    ]
}
```

When comparing types, we distinguish between public and private class names:

- Public class names are checked with -isKindOfClass:;
- Private class names are checked by string matching.

```objc
- (BOOL)isIgnoreWithView:(UIView *)view {
    ...
    // Public class names are checked with -isKindOfClass:
    id publicClasses = info[@"public"];
    if ([publicClasses isKindOfClass:NSArray.class]) {
        for (NSString *publicClass in (NSArray *)publicClasses) {
            if ([view isKindOfClass:NSClassFromString(publicClass)]) {
                return YES;
            }
        }
    }
    // Private class names are checked by string matching
    id privateClasses = info[@"private"];
    if ([privateClasses isKindOfClass:NSArray.class]) {
        if ([(NSArray *)privateClasses containsObject:NSStringFromClass(view.class)]) {
            return YES;
        }
    }
    return NO;
}
```

4.3 UIAlertController click event collection

UIAlertController implements user interaction internally through gestures, but the view that the gesture is attached to is not the view the user actually taps, and the internal implementation differs slightly between system versions.

We handle this special logic with different processors.

Create a new factory class, SAGestureViewProcessorFactory, to decide which processor to use:

```objc
@implementation SAGestureViewProcessorFactory

+ (SAGeneralGestureViewProcessor *)processorWithGesture:(UIGestureRecognizer *)gesture {
    NSString *viewType = NSStringFromClass(gesture.view.class);
    if ([viewType isEqualToString:@"_UIAlertControllerView"]) {
        return [[SALegacyAlertGestureViewProcessor alloc] initWithGesture:gesture];
    }
    if ([viewType isEqualToString:@"_UIAlertControllerInterfaceActionGroupView"]) {
        return [[SANewAlertGestureViewProcessor alloc] initWithGesture:gesture];
    }
    return [[SAGeneralGestureViewProcessor alloc] initWithGesture:gesture];
}

@end
```

The differences are then handled in the specific processors:

```objc
#pragma mark - Alert adaptation for versions before iOS 10
@implementation SALegacyAlertGestureViewProcessor

- (BOOL)isTrackable {
    if (![super isTrackable]) {
        return NO;
    }
    // Ignore click events from SAAlertController
    UIViewController *viewController = [SAAutoTrackUtils findNextViewControllerByResponder:self.gesture.view];
    if ([viewController isKindOfClass:UIAlertController.class] && [viewController.nextResponder isKindOfClass:SAAlertController.class]) {
        return NO;
    }
    return YES;
}

- (UIView *)trackableView {
    NSArray <UIView *>*visualViews = sensorsdata_searchVisualSubView(@"_UIAlertControllerCollectionViewCell", self.gesture.view);
    CGPoint currentPoint = [self.gesture locationInView:self.gesture.view];
    // Return the visual subview that contains the touch point
    for (UIView *visualView in visualViews) {
        CGRect rect = [visualView convertRect:visualView.bounds toView:self.gesture.view];
        if (CGRectContainsPoint(rect, currentPoint)) {
            return visualView;
        }
    }
    return nil;
}

@end

#pragma mark - Alert adaptation for iOS 10 and later
@implementation SANewAlertGestureViewProcessor

- (BOOL)isTrackable {
    if (![super isTrackable]) {
        return NO;
    }
    // Ignore click events from SAAlertController
    UIViewController *viewController = [SAAutoTrackUtils findNextViewControllerByResponder:self.gesture.view];
    if ([viewController isKindOfClass:UIAlertController.class] && [viewController.nextResponder isKindOfClass:SAAlertController.class]) {
        return NO;
    }
    return YES;
}

- (UIView *)trackableView {
    NSArray <UIView *>*visualViews = sensorsdata_searchVisualSubView(@"_UIInterfaceActionCustomViewRepresentationView", self.gesture.view);
    CGPoint currentPoint = [self.gesture locationInView:self.gesture.view];
    // Return the visual subview that contains the touch point
    for (UIView *visualView in visualViews) {
        CGRect rect = [visualView convertRect:visualView.bounds toView:self.gesture.view];
        if (CGRectContainsPoint(rect, currentPoint)) {
            return visualView;
        }
    }
    return nil;
}

@end
```

4.4 Handling gesture states

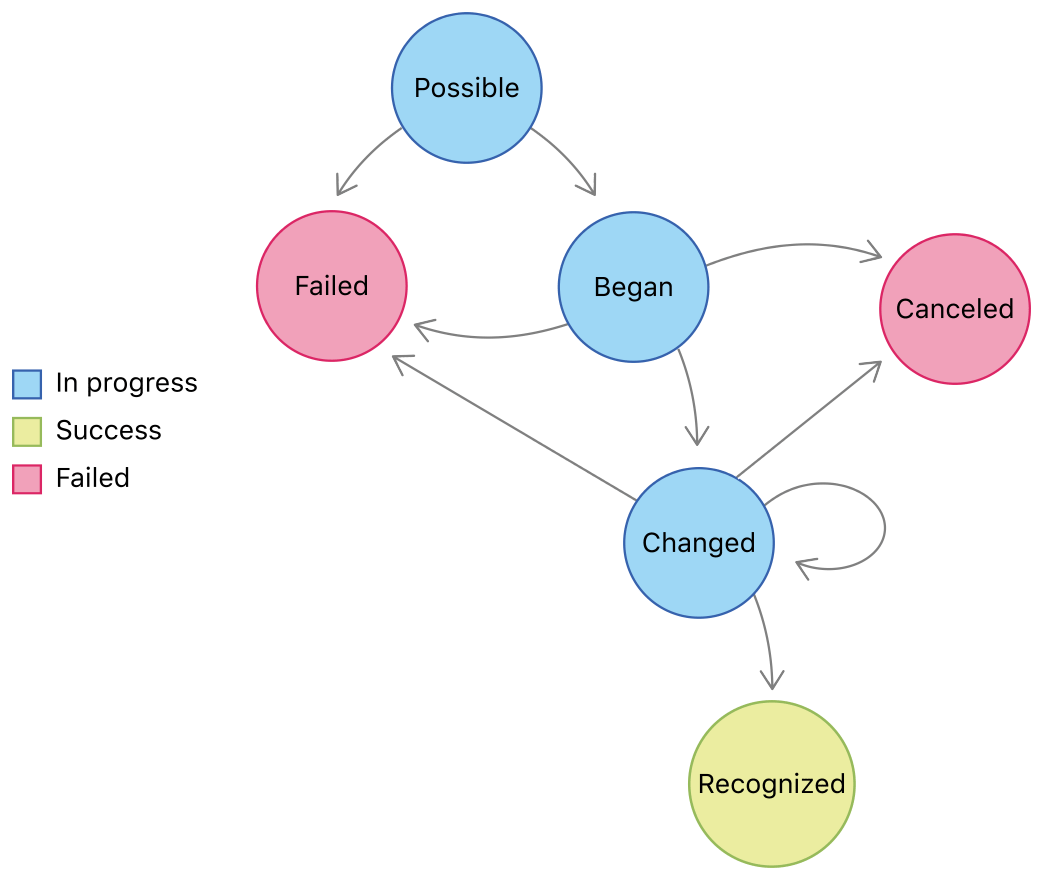

The gesture recognizer is driven by the state machine. The default state is UIGestureRecognizerStatePossible, indicating that it is ready to start processing events.

The transition between States is shown in Figure 4-1:

Figure 4-1 gesture state transition [2]

For full auto-tracking (codeless tracking), a gesture event should be collected when the gesture state is either UIGestureRecognizerStateEnded or UIGestureRecognizerStateCancelled, and ignored in every other state:

```objc
- (void)trackGestureRecognizerAppClick:(UIGestureRecognizer *)gesture {
    if (gesture.state != UIGestureRecognizerStateEnded &&
        gesture.state != UIGestureRecognizerStateCancelled) {
        return;
    }
    // Gesture event collection
    ...
}

@end
```

5, Summary

This article describes one concrete implementation of iOS gesture event collection and explains how to handle several of its difficulties. For more details, refer to the source code of the Shence iOS SDK [3]. If you have better ideas, you are welcome to join the open source community to discuss them.

References:

[1] https://developer.apple.com/documentation/uikit/uigesturerecognizer?language=objc

[2] https://developer.apple.com/documentation/uikit/touches_presses_and_gestures/implementing_a_custom_gesture_recognizer/about_the_gesture_recognizer_state_machine?language=objc

[3] https://github.com/sensorsdata/sa-sdk-ios