1. Introduction

At the end of the previous chapter, we finally generated the multibandtilelayerrdd [spatial key] object, which is all in preparation for the most important step - calculation

2. Calculate NDVI

First, let's review the calculation code:

val ndviTiledRDD: TileLayerRDD[SpatialKey] =

tiledRDD.withContext { rdd =>

rdd.mapValues { tile =>

tile.convert(DoubleConstantNoDataCellType).combineDouble(0, 1) { (r, nir) =>

(nir - r) / (nir + r)

}

}.reduceByKey(_.localMax(_)) // spark calculation optimization, which can be omitted

}

We know that the algorithm of NDVI is (nir-r)/(nir+r), but how to apply the algorithm to Geotrellis? In other words, we need to know who the algorithm operates on

2.1 separate Tile

By reading Official documents , we can clarify two important information about spark parallel computing in Geotrellis:

- Tile is the core of our fine operation

- Metadata needs to be preserved in the calculation so that it does not lose spatial information

Therefore, our first task is to find Tile from the above results

In the previous step, we generated a MultibandTileLayerRDD[SpatialKey] object, that is, RDD[(SpatialKey, MultibandTile)] with Metadata[TileLayerMetadata[SpatialKey]]. To get the MultiBandTile, we need two steps:

- Restore RDD[K,V] with Metadata to RDD[K,V]

- Get the V we need from RDD[K,V], that is, MultibandTile. In this case, it is actually its subclass: ArrayMultiBandTile

At the same time, due to the requirement of retaining metadata mentioned in the document, the code in the paper is finally obtained:

val ndviTiledRDD: TileLayerRDD[SpatialKey] =

tiledRDD.withContext { rdd: RDD[(SpatialKey, MultibandTile)] =>

// Once you get the RDD, you can directly use Spark's mapValues method to extract Tile

rdd.mapValues { tile: MultibandTile =>

// Tile specific operation

}

}

The main body of this part is the withContext method. The code is as follows:

[code in spark/Implicit.scala]

implicit class WithContextWrapper[K, V, M](val rdd: RDD[(K, V)] with Metadata[M]) {

def withContext[K2, V2](f: RDD[(K, V)] => RDD[(K2, V2)]) =

new ContextRDD(f(rdd), rdd.metadata)

}

It can be seen that it is only a relatively simple implicit conversion, which realizes the function of extracting RDD from TileLayerRDD type data for separate calculation, and finally repackaging it with the original metadata into a ContextRDD object

Finally, we get a familiar ContextRDD object, which can be called ContextRDD[SpatialKey, Tile, TileLayerMetadata[SpatialKey]], RDD[(SpatialKey, Tile)] with Metadata[TileLayerMetadata[SpatialKey]] or more convenient TileLayerRDD[SpatialKey]

2.2 Tile and computing core

Back to the core NDVI calculation code:

tile.convert(DoubleConstantNoDataCellType)

.combineDouble(0, 1) { (r, nir) => (nir - r) / (nir + r) }

It can be seen that the code mainly has two steps:

- Type conversion: convert

- Merge bands as specified: combineDouble

In order, start with type conversion

2.2.1 type conversion

In this step, we convert the original data type to DoubleConstantNoDataCellType. Why do we do this conversion?

- First of all, we cannot determine the type of original data. In this example, we need to ensure that the data type is floating point when calculating NDVI. We actually select Double type or float type according to actual needs

- Secondly, we want to use the default value of Geotrellis type as the strategy to identify Nodata value, so we choose DoubleConstantNoDataCellType as the target type

The main body of this part is the Tile.convert method. The sorted code is as follows:

[code in ArrayMultibandTile.scala]

def convert(newCellType: CellType): MultibandTile = {

// Create an array to hold the new data

val newBands = Array.ofDim[Tile](bandCount)

cfor(0)(_ < bandCount, _ + 1) { i =>

// Process each band one by one

newBands(i) = band(i).convert(newCellType)

}

ArrayMultibandTile(newBands)

}

def band(bandIndex: Int): Tile = {

_bands(bandIndex)

}

// Passed in when defining ArrayMultibandTile

class ArrayMultibandTile(_bands: Array[Tile]) extends MultibandTile with MacroMultibandCombiners {

val bandCount = _bands.size

...

}

It can be seen from the code that the core of type conversion is the convert operation on the band. We review the code defining ArrayMultibandTile, and we can see that a band is a [celltype]ArrayTile:

[code in GeoTiffMultibandTile.scala]

case class Chip( window: GridBounds[Int], bands: Array[MutableArrayTile], intersectingSegments: Int, var segmentsBurned: Int = 0 ) ... val bands = Array.fill(bandSubsetLength)(ArrayTile.empty(cellType, window.width, window.height)) val chip = Chip(window, bands, segments.length)

The core of the above code is the ArrayTile.empty method:

[code in ArrayTile.scala]

def empty(t: CellType, cols: Int, rows: Int): MutableArrayTile =

t match {

// Call different classes for initialization according to the actual situation

case _: BitCells => BitArrayTile.empty(cols, rows)

case ct: ByteCells => ByteArrayTile.empty(cols, rows, ct)

...

}

As mentioned above, the core of type conversion is the convert operation on band. Now that we know how [celltype] arraytile (i.e. band) is generated, we can see how its convert is implemented:

[code in ArrayTile.scala]

def convert(targetCellType: CellType): ArrayTile = {

// Creates an empty ArrayTile of the specified type

val tile = ArrayTile.alloc(targetCellType, cols, rows)

// Distinguish and assign the specified floating-point type

if(!cellType.isFloatingPoint) {

cfor(0)(_ < rows, _ + 1) { row =>

cfor(0)(_ < cols, _ + 1) { col =>

tile.set(col, row, get(col, row))

}

}

} else {

cfor(0)(_ < rows, _ + 1) { row =>

cfor(0)(_ < cols, _ + 1) { col =>

tile.setDouble(col, row, getDouble(col, row))

}

}

}

tile

}

// Predefined methods, and the implementation of applyDouble is in specific subclasses

def getDouble(col: Int, row: Int) = applyDouble(row * cols + col)

// Creates an empty ArrayTile of the specified type

def alloc(t: CellType, cols: Int, rows: Int): MutableArrayTile =

t match {

case _: BitCells => BitArrayTile.ofDim(cols, rows)

...

case ct: DoubleCells => DoubleArrayTile.ofDim(cols, rows, ct)

}

The main body of the code is the ofDim method. Let's assume that the source data is of DoubleArrayTile type and see how it is implemented:

[code in DoubleArrayTile.scala]

// Judge Nodata policy according to specific type

def ofDim(cols: Int, rows: Int, cellType: DoubleCells with NoDataHandling): DoubleArrayTile =

cellType match {

// Default policy

case DoubleCellType =>

new DoubleRawArrayTile(Array.ofDim[Double](cols * rows), cols, rows)

// Use default Nodata value

case DoubleConstantNoDataCellType =>

new DoubleConstantNoDataArrayTile(Array.ofDim[Double](cols * rows), cols, rows)

// Use user-defined Nodata values

case udct: DoubleUserDefinedNoDataCellType =>

new DoubleUserDefinedNoDataArrayTile(Array.ofDim[Double](cols * rows), cols, rows, udct)

}

// It corresponds to the applyDouble method under different policies

final case class DoubleConstantNoDataArrayTile(arr: Array[Double], val cols: Int, val rows: Int)

extends DoubleArrayTile(arr, cols, rows) {

...

// No special processing is required when the Nodata value is encountered

def applyDouble(i: Int): Double = arr(i)

...

}

final case class DoubleUserDefinedNoDataArrayTile(arr: Array[Double], val cols: Int, val rows: Int, val cellType: DoubleUserDefinedNoDataCellType)

extends DoubleArrayTile(arr, cols, rows)

with UserDefinedDoubleNoDataConversions {

...

// When it is a user predetermined value, it is processed as a Nodata value

def applyDouble(i: Int): Double = udd2d(arr(i))

...

}

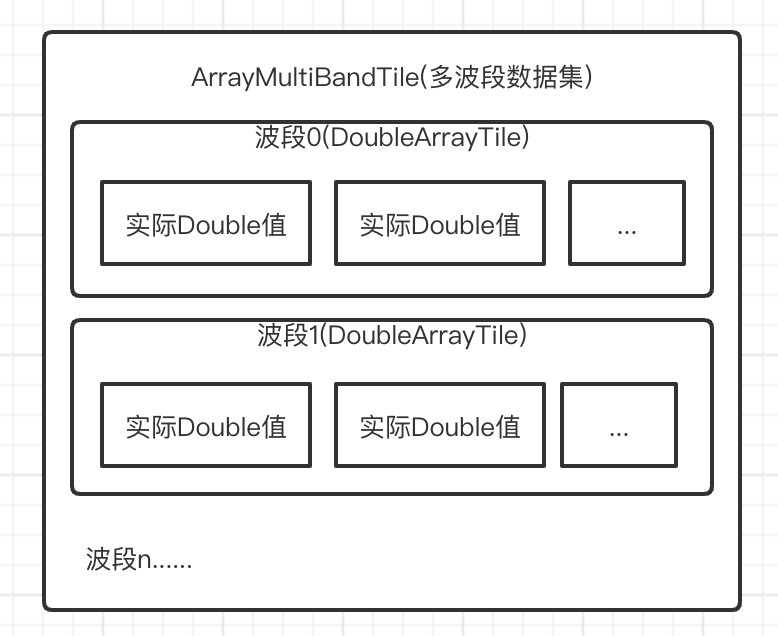

From the above code, we can see that the logic of type conversion is very simple: re value each band in the multi band image according to the data type specified by the user and put it into a new container. We can also see the structure of multi band image data storage:

Next, we can analyze the most critical task: merging bands in the specified way (in this case, NDVI algorithm)

2.2.2 combined bands

The main body of this part is the combine method:

[code in ArrayMultibandTile.scala]

def combineDouble(b0: Int, b1: Int)(f: (Double, Double) => Double): Tile = {

val band1 = band(b0)

val band2 = band(b1)

// Result container

val result = ArrayTile.empty(cellType, cols, rows)

cfor(0)(_ < rows, _ + 1) { row =>

cfor(0)(_ < cols, _ + 1) { col =>

// Assignment after calculation

result.setDouble(col, row, f(band1.getDouble(col, row), band2.getDouble(col, row)))

}

}

result

}

It can be seen that the code logic of this part is very simple, but there is a deeper problem: can it meet our actual use needs?

Obviously, in practice, we may use multiple band fusion, and it is not enough to only support binding two bands. Just observing the code, we can find that Geotrellis provides a more general band merging method:

[code in ArrayMultibandTile.scala]

def combineDouble(subset: Seq[Int])(f: Seq[Double] => Double): Tile = {

subset.foreach({ b => require(0 <= b && b < bandCount, "All elements of subset must be present") })

val subsetSize = subset.size

val subsetArray = subset.toArray

val result = ArrayTile.empty(cellType, cols, rows)

val values: Array[Double] = Array.ofDim(subsetSize)

// Traverse the specified band

cfor(0)(_ < rows, _ + 1) { row =>

cfor(0)(_ < cols, _ + 1) { col =>

cfor(0)(_ < subsetSize, _ + 1) { i =>

values(i) = _bands(subsetArray(i)).getDouble(col, row)

}

result.setDouble(col, row, f(values))

}

}

result

}

However, when we actually use Geotrellis, we can find that we can use the following methods for band merging:

tile.combineDouble(0, 1, 2, 3) { (b0, b1, b2, b3) => /* Specific operation */ }

There can be more bands actually supported. Obviously, this way of calling is more elegant. However, we can't find the definitions of these methods in the actual code. They come from the characteristics of ArrayMutibandTile

3. Characteristics of arraymultibandtile and MultibandTile

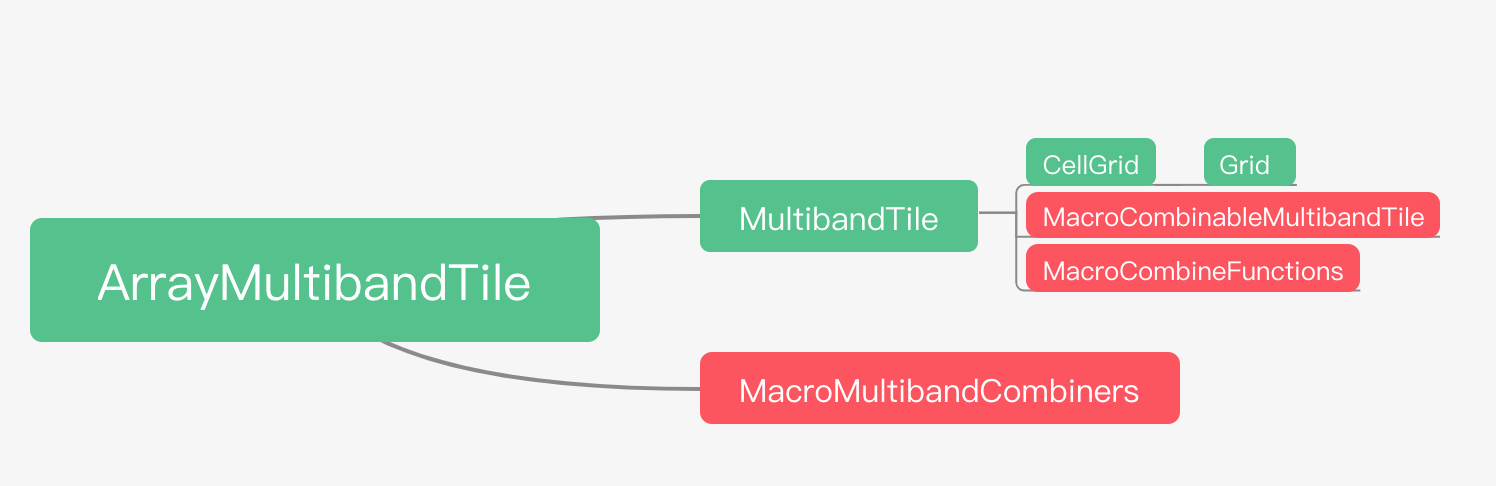

The inheritance structure of ArrayMultibandTile is as follows:

Of which:

- Green is class inheritance

- Red is the feature realization

However, we can find that the corresponding code files cannot be found for the three characteristics, because these codes are generated through text templates before compilation and defined in the project/Boilerplate.scala file. We don't need to know how the template works, we just need to know what the final generated content of the template is, and then analyze it in a conventional way

3.1 characteristics of macrocombinablemultibandtile and MacroCombineFunctions

First, let's take a look at the characteristics of the generated MacroCombinableMultibandTile:

[the code will be generated in geotrellis/macros/MacroCombinableMultibandTile.scala]

package geotrellis.macros

trait MacroCombinableMultibandTile[T] {

def combineIntTileCombiner(combiner: IntTileCombiner3): T

def combineDoubleTileCombiner(combiner: DoubleTileCombiner3): T

// ... and so on

def combineIntTileCombiner(combiner: IntTileCombiner10): T

def combineDoubleTileCombiner(combiner: DoubleTileCombiner10): T

}

It can be seen that the MacroCombinableMultibandTile feature predefines 10 methods to receive TileCombiner types for Int and Double types respectively. It can also be seen that because it is a lot of repetitive work, it is a more convenient implementation method to generate code using templates

The [type]TileCombiner[arity] type in the code also comes from the template (taking Double type as an example, Int type is similar):

[the code will be generated in geotrellis/macros/DoubleTileCombiners.scala]

package geotrellis.macros

trait DoubleTileCombiner3 {

val b0: Int; val b1: Int; val b2: Int

def apply(b0: Double, b1: Double, b2: Double): Double

}

// ... and so on

trait DoubleTileCombiner10 {

val b0: Int; val b1: Int; val b2: Int; val b3: Int; val b4: Int; val b5: Int; val b6: Int; val b7: Int; val b8: Int; val b9: Int

def apply(b0: Double, b1: Double, b2: Double, b3: Double, b4: Double, b5: Double, b6: Double, b7: Double, b8: Double, b9: Double): Double

}

Let's look at the characteristics of the generated MacroCombineFunctions:

[the code will be generated in geotrellis/macros/MacroCombinableMultibandTile.scala]

package geotrellis.macros

import scala.language.experimental.macros

trait MacroCombineFunctions[T, MBT <: MacroCombinableMultibandTile[T]] {

def combine(b0: Int, b1: Int, b2: Int)(f: (Int, Int, Int) => Int): T =

macro MultibandTileMacros.intCombine3_impl[T, MBT]

def combineDouble(b0: Int, b1: Int, b2: Int)(f: (Double, Double, Double) => Double): T =

macro MultibandTileMacros.doubleCombine3_impl[T, MBT]

// ... and so on

def combine(b0: Int, b1: Int, b2: Int, b3: Int, b4: Int, b5: Int, b6: Int, b7: Int, b8: Int, b9: Int)(f: (Int, Int, Int, Int, Int, Int, Int, Int, Int, Int) => Int): T =

macro MultibandTileMacros.intCombine10_impl[T, MBT]

def combineDouble(b0: Int, b1: Int, b2: Int, b3: Int, b4: Int, b5: Int, b6: Int, b7: Int, b8: Int, b9: Int)(f: (Double, Double, Double, Double, Double, Double, Double, Double, Double, Double) => Double): T =

macro MultibandTileMacros.doubleCombine10_impl[T, MBT]

}

[type] combine [arity] in code_ The impl type still comes from the template:

[the code will be generated in geotrellis/macros/MultibandTileMacros.scala]

package geotrellis.macros

import spire.macros.InlineUtil

import scala.reflect.macros.whitebox.Context

import scala.language.experimental.macros

object MultibandTileMacros {

def intCombine3_impl[T, MBT <: MacroCombinableMultibandTile[T]](c: Context)(b0: c.Expr[Int], b1: c.Expr[Int], b2: c.Expr[Int])(f: c.Expr[(Int, Int, Int) => Int]): c.Expr[T] = {

import c.universe._

val self = c.Expr[MacroCombinableMultibandTile[T]](c.prefix.tree)

val tree =

q"""$self.combineIntTileCombiner(new geotrellis.macros.IntTileCombiner3 {

val b0 = $b0; val b1 = $b1; val b2 = $b2

def apply(b0: Int, b1: Int, b2: Int): Int = $f(b0, b1, b2)

})"""

new InlineUtil[c.type](c).inlineAndReset[T](tree)

}

// ... and so on

def doubleCombine10_impl[T, MBT <: MacroCombinableMultibandTile[T]](c: Context)(b0: c.Expr[Int], b1: c.Expr[Int], b2: c.Expr[Int], b3: c.Expr[Int], b4: c.Expr[Int], b5: c.Expr[Int], b6: c.Expr[Int], b7: c.Expr[Int], b8: c.Expr[Int], b9: c.Expr[Int])(f: c.Expr[(Double, Double, Double, Double, Double, Double, Double, Double, Double, Double) => Double]): c.Expr[T] = {

import c.universe._

val self = c.Expr[MacroCombinableMultibandTile[T]](c.prefix.tree)

val tree =

q"""$self.combineDoubleTileCombiner(new geotrellis.macros.DoubleTileCombiner10 {

val b0 = $b0; val b1 = $b1; val b2 = $b2; val b3 = $b3; val b4 = $b4; val b5 = $b5; val b6 = $b6; val b7 = $b7; val b8 = $b8; val b9 = $b9

def apply(b0: Double, b1: Double, b2: Double, b3: Double, b4: Double, b5: Double, b6: Double, b7: Double, b8: Double, b9: Double): Double = $f(b0, b1, b2, b3, b4, b5, b6, b7, b8, b9)

})"""

new InlineUtil[c.type](c).inlineAndReset[T](tree)

}

}

At this point, we can also infer what these two traits do:

- The macrocombine functions feature realizes such a function: it generates overloads of several combine / combineddouble methods through macros to support the fusion method from 3 band parameters to 10 band parameters

- This macro is implemented as follows:

- Restrict the context to a class that inherits MacroCombinableMultibandTile

- Call the combineIntTileCombiner/combineDoubleTileCombiner method in this context and build its required parameters

- Mount the method to the overload of the corresponding combine/combineDouble method through the macro

- In the macro definition, you need to receive the combineIntTileCombiner/combineDoubleTileCombiner method of the specified parameter

- This method is defined in MacroCombinableMultibandTile

- The different parameters required are defined in inttilecombiners / doubletilecmbiners.scala

That is, MacroCombineFunctions defines behavior and MacroCombinableMultibandTile defines type. Therefore, these two characteristics must be inherited by the class at the same time

3.2 characteristics of macromultiband combiners

From the code of MacroCombinableMultibandTile and MacroCombineFunctions, it can be seen that classes that inherit these two characteristics only need to implement specific combineinttilecombiner / combineddoubletilecombiner methods to realize the overload of combineinttilecombiner / combineddouble methods

The MacroMultibandCombiners feature specifically implements the combineinttilecombiner / combineddoubletilecombiner method. Therefore, it enables ArryMultiBand to have a combine method that supports the integration of up to 10 bands at the same time:

[the code will be generated in geotrellis/raster/MacroMultibandCombiners.scala]

package geotrellis.raster

import geotrellis.macros._

import spire.syntax.cfor._

trait MacroMultibandCombiners { self: MultibandTile =>

def combineIntTileCombiner(combiner: IntTileCombiner3): Tile = {

val band0 = band(combiner.b0); val band1 = band(combiner.b1); val band2 = band(combiner.b2)

val result = ArrayTile.empty(targetCellType, cols, rows)

val arr = Array.ofDim[Int](bandCount)

cfor(0)(_ < rows, _ + 1) { row =>

cfor(0)(_ < cols, _ + 1) { col =>

result.set(col, row, combiner(band0.get(col, row), band1.get(col, row), band2.get(col, row)))

}

}

result

}

// ... and so on

def combineDoubleTileCombiner(combiner: DoubleTileCombiner10): Tile = {

val band0 = band(combiner.b0); val band1 = band(combiner.b1); val band2 = band(combiner.b2); val band3 = band(combiner.b3); val band4 = band(combiner.b4); val band5 = band(combiner.b5); val band6 = band(combiner.b6); val band7 = band(combiner.b7); val band8 = band(combiner.b8); val band9 = band(combiner.b9)

val result = ArrayTile.empty(targetCellType, cols, rows)

val arr = Array.ofDim[Int](bandCount)

cfor(0)(_ < rows, _ + 1) { row =>

cfor(0)(_ < cols, _ + 1) { col =>

result.setDouble(col, row, combiner(band0.getDouble(col, row), band1.getDouble(col, row), band2.getDouble(col, row), band3.getDouble(col, row), band4.getDouble(col, row), band5.getDouble(col, row), band6.getDouble(col, row), band7.getDouble(col, row), band8.getDouble(col, row), band9.getDouble(col, row)))

}

}

result

}

}

It is not difficult to see that its principle is consistent with the dual band fusion mentioned above, except that [type]combiner[arity] is used instead of f(b0,b1)

Why do you need to define a new type to wrap the f(b0,b1...) method? In fact, it is very simple, because f(b0,b1...) is defined at run time, while macros are defined at compile time. A wrapper cannot be compiled without defining a wrapper specifically. Therefore, in order to realize this function, a wrapper object needs to be defined specifically

4. Summary

In this paper, we start with computing NDVI and discuss how Geotrellis realizes the basic computing function

In the following article, we will continue to analyze how Geotrellis implements more complex computing operations such as band convolution