Memory pool is a kind of pooling technology. Through pooling, the number of resource object creation can be effectively reduced and the performance of program operation can be improved.

Complex memory problems, how to sort out their own ideas

1, Memory pool introduction

1.1 basic concepts

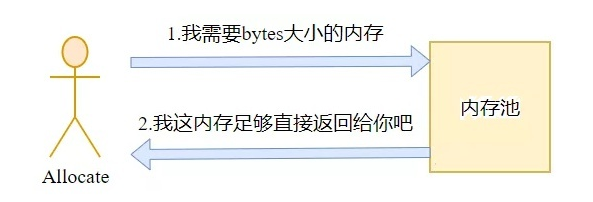

From the above figure, we can understand the general idea of memory pool:

- If the memory pool is not used, the user program directly requests memory from the operating system

- If a memory pool is used, the user program will first ask whether the memory pool can allocate the requested memory size. If not, it will first apply to the operating system for memory to the memory pool, and then the memory pool will indirectly return the memory to the user program

To sum up, the implementation of memory pool is mainly to avoid a large amount of system call overhead caused by the frequent direct application of memory by user programs to the operating system. Using memory pool, you can apply for a large amount of memory from the operating system at one time, and then allocate it to user programs in user state one by one, greatly reducing the number of system calls, so as to improve the performance of the program.

2, Memory pool code implementation

2.1 introduction to basic interface

The memory pool code comes from Google's LevelDB library. The Arena object is a very simple memory pool implementation

// Copyright (c) 2011 The LevelDB Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file. See the AUTHORS file for names of contributors.

class Arena {

public:

Arena();

// Memory pool copy prohibited

Arena(const Arena &) = delete;

Arena &operator=(const Arena &) = delete;

~Arena();

// Memory request, return a pointer to the requested memory block

char *Allocate(size_t bytes);

// Memory statistics, which records how much memory the object has applied for

size_t MemoryUsage() const { return memory_usage_; }

private:

char *AllocateNewBlock(size_t block_bytes);

// Allocation status

char *alloc_ptr_;

size_t alloc_bytes_remaining_;

//The allocated memory block address is recorded to facilitate the release of memory

std::vector<char *> blocks_;

// Statistics of memory requests

size_t memory_usage_;

};

A closer look at the above code shows that the core of the memory pool lies in how to allocate memory and how to free memory. These two parts will be explained in detail below.

2.2 memory pool memory allocation

The memory allocation of the memory pool is mainly divided into three steps. The dependency of these three steps is that if the previous step is completed, the following steps do not need to be continued. These three parts will be explained in detail below:

(1) As shown in the following figure, the Allocate method applies for bytes of memory from the memory pool (Arena). If alloc_bytes_remaining ≥ bytes in the memory pool, the memory pool allocates the memory itself; if alloc_bytes_remaining < bytes in the memory pool, enter step 2 or step 3

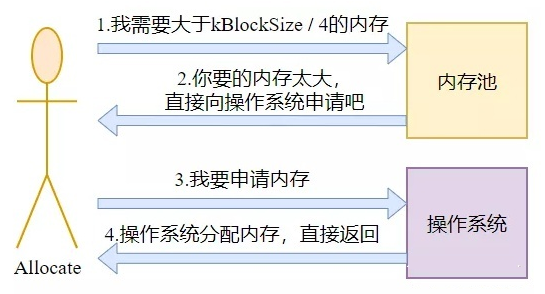

(2) As shown in the following figure, if the memory applied by the Allocate method to the memory pool (Arena) is ≥ kBlockSize / 4, the Allocate method will directly apply for memory from the operating system, that is, call the new/malloc method of the operating system

(3) As shown in the following figure, the Allocate method allocates data to the memory pool (Arena) the requested memory is less than kBlockSize / 4. If you frequently directly request such a small memory from the operating system, it is not cost-effective in performance. Therefore, the memory pool will apply for kBlockSize memory from the operating system, Allocate it to the bytes memory required by the Allocate method, and reserve the kBlockSize - bytes memory (the reserved memory, that is, the remaining memory, uses the alloc_bytes_remaining_member variable to record the size, and uses the alloc_ptr_member variable to record the address)

After understanding the above steps, I believe it is easy to understand the Allocate code

char *Arena::Allocate(size_t bytes) {

assert(bytes > 0);

if (bytes <= alloc_bytes_remaining_) {

// First step of memory allocation

char *result = alloc_ptr_;

alloc_ptr_ += bytes;

alloc_bytes_remaining_ -= bytes;

return result;

}

if (bytes > kBlockSize / 4) {

// Memory allocation step 2

// If it is greater than kBlockSize / 4, allocate newblock allocation is called, that is, find the operating system allocation

char *result = AllocateNewBlock(bytes);

return result;

}

// Memory allocation step 3

alloc_ptr_ = AllocateNewBlock(kBlockSize);

alloc_bytes_remaining_ = kBlockSize;

char *result = alloc_ptr_;

alloc_ptr_ += bytes;

alloc_bytes_remaining_ -= bytes;

return result;

}

// Directly request the specified size of memory from the operating system

char *Arena::AllocateNewBlock(size_t block_bytes) {

char *result = new char[block_bytes];

blocks_.push_back(result);

memory_usage_ += (block_bytes + sizeof(char *));

return result;

}

2.3 memory pool memory release

For the previous memory allocation, the memory pool will use a block_ The private member records the allocated address. The arrow in the following figure indicates the pointer to the address. Then, the work to be done for memory pool destruction is to traverse blocks_, delete the memory pointed to by each pointer

The memory pool is destroyed in the destructor of Arena. The code is as follows

Arena::~Arena() {

for (size_t i = 0; i < blocks_.size(); i++) {

delete[] blocks_[i];

}

}

2.4 summary

The main idea of memory pool is memory allocation and memory destruction. If you understand these, you can start to implement a simple memory pool!

3, Performance comparison

3.1 simple performance comparison

As mentioned earlier, the main function of memory pool is to avoid the overhead caused by user programs frequently applying for memory from the operating system. How to verify that memory pool can really reduce the overhead?

Here is a simple experimental verification:

1 . Group A: do not use the memory pool and constantly apply for memory from the operating system

2 . Group B: use the memory pool and constantly apply for memory from the memory pool

Comparing the time consumption of group A and group B, if the time consumption is short, the performance is higher

void test_allocate_time() {

int N = 100000;

char *p;

clock_t start_time_without_memory_pool;

clock_t end_time_without_memory_pool;

start_time_without_memory_pool = clock();

for (int i = 1; i < N; ++i) {

p = new char[i]; // There is no delete here, which will cause memory leakage. It is not rigorous enough

}

end_time_without_memory_pool = clock();

std::cout << "Allocate time without memorypool: "

<< (end_time_without_memory_pool - start_time_without_memory_pool)

<< std::endl;

Arena arena;

clock_t start_time_with_memory_pool;

clock_t end_time_with_memory_pool;

start_time_with_memory_pool = clock();

for (int i = 1; i < N; ++i) {

p = arena.Allocate(i);

}

end_time_with_memory_pool = clock();

std::cout << "Allocate time with memorypool: "

<< (end_time_with_memory_pool - start_time_with_memory_pool)

<< std::endl;

}

The final experimental results are as follows:

Allocate time without memorypool: 340470 Allocate time with memorypool: 245339

It can be seen that using the memory pool to allocate memory takes less CPU clock cycles, that is, the allocation time is shorter, so the performance is higher in this scenario.

Linux C / C + + server development / Architect learning address

Linux server development / Architect interview questions, learning materials, teaching videos and learning roadmap (materials include C/C + +, Linux, golang technology, Nginx, ZeroMQ, MySQL, Redis, fastdfs, MongoDB, ZK, streaming media, CDN, P2P, K8S, Docker, TCP/IP, collaboration, DPDK, ffmpeg, etc.), you can add them if necessary Learning communication group