catalogue

- Demand 1: user active theme

- Requirement 2: user added theme

- Demand 3: user retention theme

- Demand 4: number of silent users

- Demand 5: number of returned users this week

- Demand 6: number of lost users

- Demand 7: number of active users in the last three consecutive weeks

- Demand 8: number of active users for three consecutive days in the last seven days

- Demand 9: total turnover of GMV (gross merchant volume) in a period of time

- Demand 10: conversion rate = new users / daily users

- Requirement 11: user behavior funnel analysis

- Demand 12: brand repurchase rate

- Demand XIII: analysis of ADS layer brand repurchase rate report

- Demand 14: find the ranking of the top ten commodities with the corresponding repurchase rate of users at each level

Update to requirement 2 first, and I will continue to update the subsequent requirements...... Coming soon!!!!!

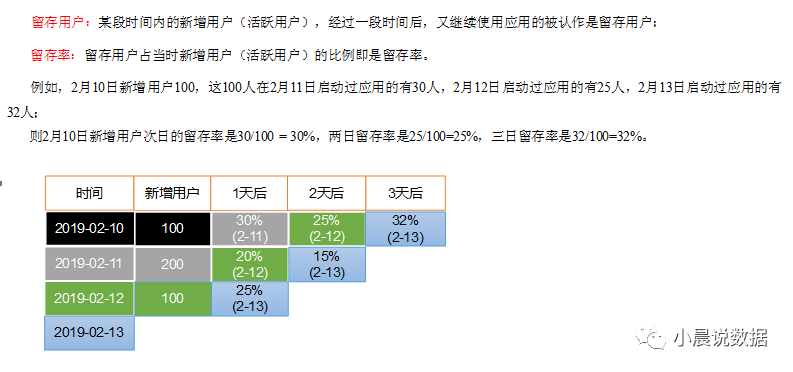

Demand 3: user retention theme

If we do not consider the new users on February 11, 2019 and February 12, 2019: 100 new users will be added on February 10, 2019, and the retention rate will be 30% after one day, 25% on February 12, 2019 and 32% after three days;

Looking at the retention rate of 02-11 on February 12, 2019: 200 new people, and the retention rate of 12 is 20%;

Looking at the retention rate of 02-12 on February 13, 2019: 100 new people are added, and the retention rate is 25% on the 13th, that is, one day later;

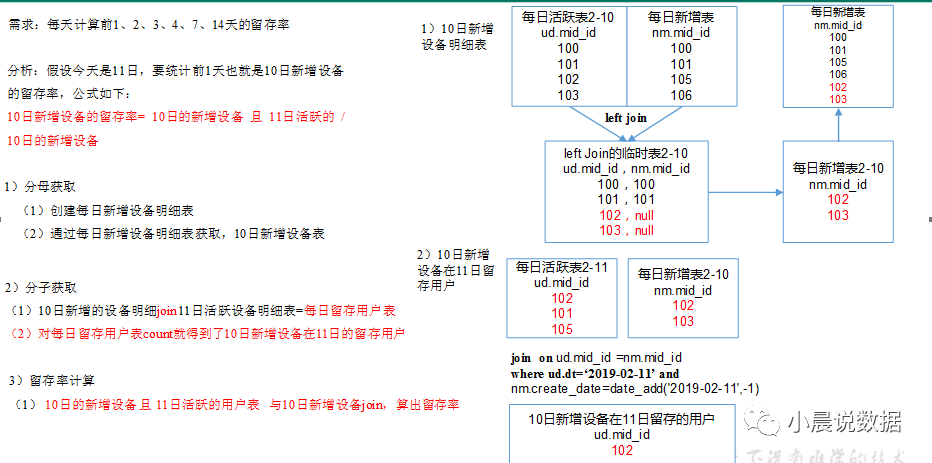

Analysis of user retention rate: new users added yesterday and active today / new users added yesterday

For example, on the 11th of today, it is necessary to count the user retention rate of the 10th day - > new equipment on the 10th day and it is active on the 11th / new equipment on the 10th day. Denominator: new equipment on the 10th day (daily active left join) previous new equipment table (nm) nm mid_ ID is null) molecule: daily activity table (ud) join daily new table (nm) where UD DT = 'today' and nm create_ Date = 'yesterday'

① DWS layer (user details retained every day dws_user_retention_day) analysis retained by users in one day: = = = > >

Retained user = new join added the previous day, active today

User retention rate=Retained users/Added the previous day

Creating tables: dws_user_retention_day

hive (gmall)>

drop table if exists `dws_user_retention_day`;

create table `dws_user_retention_day`

(

`mid_id` string COMMENT 'Unique identification of equipment',

`user_id` string COMMENT 'User ID',

`version_code` string COMMENT 'Program version number',

`version_name` string COMMENT 'Program version name',

`lang` string COMMENT 'System language',

`source` string COMMENT 'Channel number',

`os` string COMMENT 'Android version',

`area` string COMMENT 'region',

`model` string COMMENT 'Mobile phone model',

`brand` string COMMENT 'Mobile phone brand',

`sdk_version` string COMMENT 'sdkVersion',

`gmail` string COMMENT 'gmail',

`height_width` string COMMENT 'Screen width and height',

`app_time` string COMMENT 'Time when the client log was generated',

`network` string COMMENT 'Network mode',

`lng` string COMMENT 'longitude',

`lat` string COMMENT 'latitude',

`create_date` string comment 'Equipment addition time',

`retention_day` int comment 'Retention days as of current date'

) COMMENT 'Daily user retention'

PARTITIONED BY ( `dt` string)

stored as parquet

location '/warehouse/gmall/dws/dws_user_retention_day/'

;

Import data (calculate the retained details of new user access in the previous day every day)

from dws_uv_detail_day active device UD join DWS_ new_ mid_ New equipment added every day nm on UD mid_ id =nm. mid_ id where ud. dt='2019-02-11' and nm. create_ date=date_ add('2019-02-11',-1);

hive (gmall)>

insert overwrite table dws_user_retention_day partition(dt="2019-02-11")

select

nm.mid_id,

nm.user_id ,

nm.version_code ,

nm.version_name ,

nm.lang ,

nm.source,

nm.os,

nm.area,

nm.model,

nm.brand,

nm.sdk_version,

nm.gmail,

nm.height_width,

nm.app_time,

nm.network,

nm.lng,

nm.lat,

nm.create_date,

1 retention_day

from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id

where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-1);

Query the imported data (calculate the retained details accessed by new users in the previous day every day)

hive (gmall)> select count(*) from dws_user_retention_day;

② Directly insert data into DWS layer (1,2,3,n-day user details): DWS_ user_ retention_ Join all in a union table with day; 1) Directly import data (new users accessing the retention details in the first 1, 2, 3 and N days of calculation every day) and directly change this, that is, date_add('2019-02-11',-3); -1 is the retention rate of one day- 2 is the retention rate for two days, - 3 is the retention rate for three days

hive (gmall)>

insert overwrite table dws_user_retention_day partition(dt="2019-02-11")

select

nm.mid_id,

nm.user_id ,

nm.version_code ,

nm.version_name ,

nm.lang ,

nm.source,

nm.os,

nm.area,

nm.model,

nm.brand,

nm.sdk_version,

nm.gmail,

nm.height_width,

nm.app_time,

nm.network,

nm.lng,

nm.lat,

nm.create_date,

1 retention_day

from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id

where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-1)

union all

select

nm.mid_id,

nm.user_id ,

nm.version_code ,

nm.version_name ,

nm.lang ,

nm.source,

nm.os,

nm.area,

nm.model,

nm.brand,

nm.sdk_version,

nm.gmail,

nm.height_width,

nm.app_time,

nm.network,

nm.lng,

nm.lat,

nm.create_date,

2 retention_day

from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id

where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-2)

union all

select

nm.mid_id,

nm.user_id ,

nm.version_code ,

nm.version_name ,

nm.lang ,

nm.source,

nm.os,

nm.area,

nm.model,

nm.brand,

nm.sdk_version,

nm.gmail,

nm.height_width,

nm.app_time,

nm.network,

nm.lng,

nm.lat,

nm.create_date,

3 retention_day

from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id

where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-3);

2) Query imported data (new users' access and retention details in the first 1, 2 and 3 days before calculation every day)

hive (gmall)> select retention_day , count(*) from dws_user_retention_day group by retention_day;

③ Number of users retained in ADS layer ads_user_retention_day_count directly count(*). 1) create ads_user_retention_day_count table:

hive (gmall)> drop table if exists `ads_user_retention_day_count`; create table `ads_user_retention_day_count` ( `create_date` string comment 'Equipment addition date', `retention_day` int comment 'Retention days as of current date', `retention_count` bigint comment 'Retained quantity' ) COMMENT 'Daily user retention' stored as parquet location '/warehouse/gmall/ads/ads_user_retention_day_count/';

Import data create by creation date_ Date and retention days_ group by day;

hive (gmall)>

insert into table ads_user_retention_day_count

select

create_date,

retention_day,

count(*) retention_count

from dws_user_retention_day

where dt='2019-02-11'

group by create_date,retention_day;

Query import data

hive (gmall)> select * from ads_user_retention_day_count;

---> 2019-02-10 1 112

④ Retained user ratio_ count / new_ mid_ Count refers to the number of reserved / newly added tables, creating ads_user_retention_day_rate

hive (gmall)>

drop table if exists `ads_user_retention_day_rate`;

create table `ads_user_retention_day_rate`

(

`stat_date` string comment 'Statistical date',

`create_date` string comment 'Equipment addition date',

`retention_day` int comment 'Retention days as of current date',

`retention_count` bigint comment 'Retained quantity',

`new_mid_count` string comment 'New equipment quantity of the day',

`retention_ratio` decimal(10,2) comment 'Retention '

) COMMENT 'Daily user retention'

stored as parquet

location '/warehouse/gmall/ads/ads_user_retention_day_rate/';

Import data

join ads_new_mid_countt --->Daily new equipment list

hive (gmall)>

insert into table ads_user_retention_day_rate

select

'2019-02-11' ,

ur.create_date,

ur.retention_day,

ur.retention_count ,

nc.new_mid_count,

ur.retention_count/nc.new_mid_count*100

from

(

select

create_date,

retention_day,

count(*) retention_count

from `dws_user_retention_day`

where dt='2019-02-11'

group by create_date,retention_day

) ur join ads_new_mid_count nc on nc.create_date=ur.create_date;

Query import data

hive (gmall)>select * from ads_user_retention_day_rate;

2019-02-11 2019-02-10 1 112 442 25.34

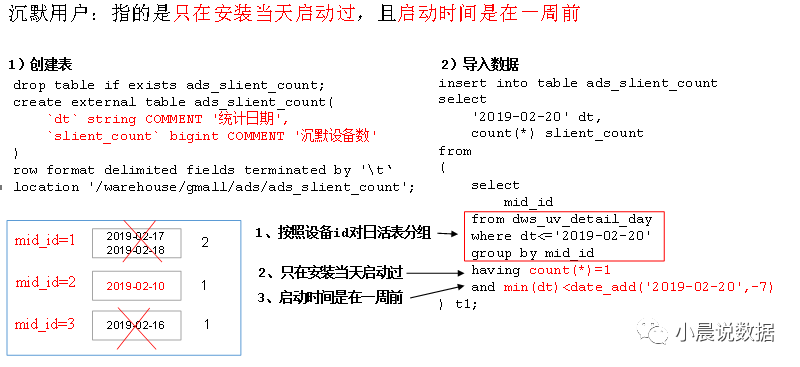

Demand 4: number of silent users

Silent user: refers to the user who has only started on the day of installation and started one week ago

Use daily living schedule dws_uv_detail_day as DWS layer data

Create table statement

hive (gmall)>

drop table if exists ads_slient_count;

create external table ads_slient_count(

`dt` string COMMENT 'Statistical date',

`slient_count` bigint COMMENT 'Number of silent devices'

)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_slient_count';

Import data

hive (gmall)>

insert into table ads_slient_count

select

'2019-02-20' dt,

count(*) slient_count

from

(

select mid_id

from dws_uv_detail_day

where dt<='2019-02-20'

group by mid_id

having count(*)=1 and min(dt)<date_add('2019-02-20',-7)

) t1;

Demand 5: number of returned users this week

Return this week = active this week - new this week - active last week

Use daily living schedule dws_uv_detail_day as DWS layer data

Reflow this week (active before last week, inactive last week, active this week) = active this week - new this week - active last week, reflow this week = active this week, left join new this week, left join active last week, and the new id of this week is null, and the active id of last week is null;

Table creation:

hive (gmall)>

drop table if exists ads_back_count;

create external table ads_back_count(

`dt` string COMMENT 'Statistical date',

`wk_dt` string COMMENT 'Week of statistical date',

`wastage_count` bigint COMMENT 'Number of reflux equipment'

)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_back_count';

Import data

hive (gmall)>

insert into table ads_back_count

select

'2019-02-20' dt,

concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1)) wk_dt,

count(*)

from

(

select t1.mid_id

from

(

select mid_id

from dws_uv_detail_wk

where wk_dt=concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1))

)t1

left join

(

select mid_id

from dws_new_mid_day

where create_date<=date_add(next_day('2019-02-20','MO'),-1) and create_date>=date_add(next_day('2019-02-20','MO'),-7)

)t2

on t1.mid_id=t2.mid_id

left join

(

select mid_id

from dws_uv_detail_wk

where wk_dt=concat(date_add(next_day('2019-02-20','MO'),-7*2),'_',date_add(next_day('2019-02-20','MO'),-7-1))

)t3

on t1.mid_id=t3.mid_id

where t2.mid_id is null and t3.mid_id is null

)t4;

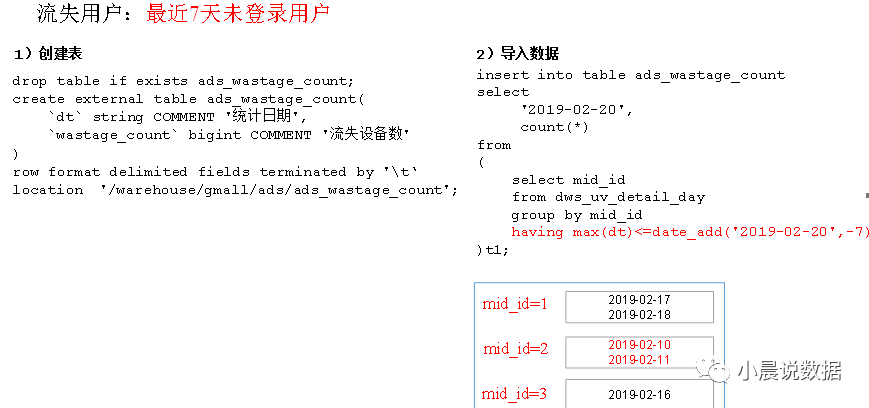

Demand 6: number of lost users

Lost users: those who have not logged in in the last 7 days are called lost users

Use daily living schedule dws_uv_detail_day as DWS layer data

Create table statement

hive (gmall)>

drop table if exists ads_wastage_count;

create external table ads_wastage_count(

`dt` string COMMENT 'Statistical date',

`wastage_count` bigint COMMENT 'Number of lost equipment'

)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_wastage_count';

Import data

hive (gmall)>

insert into table ads_wastage_count

select

'2019-02-20',

count(*)

from

(

select mid_id

from dws_uv_detail_day

group by mid_id

having max(dt)<=date_add('2019-02-20',-7)

)t1;

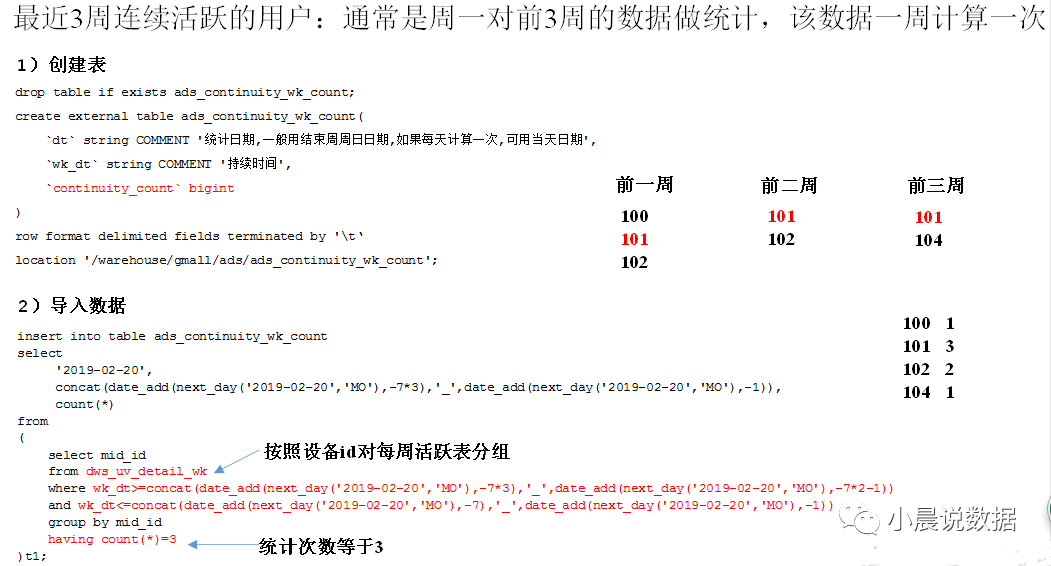

Demand 7: number of active users in the last three consecutive weeks

Users who have been active continuously in the last three weeks: usually make statistics on the data of the first three weeks on Monday, and the data is calculated once a week.

Use weekly schedule dws_uv_detail_wk as DWS layer data

Create table statement

hive (gmall)>

drop table if exists ads_continuity_wk_count;

create external table ads_continuity_wk_count(

`dt` string COMMENT 'Statistical date,Generally, the end week and Sunday date are used,If calculated once a day,Available day date',

`wk_dt` string COMMENT 'Duration',

`continuity_count` bigint

)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_continuity_wk_count';

Import data

hive (gmall)>

insert into table ads_continuity_wk_count

select

'2019-02-20',

concat(date_add(next_day('2019-02-20','MO'),-7*3),'_',date_add(next_day('2019-02-20','MO'),-1)),

count(*)

from

(

select mid_id

from dws_uv_detail_wk

where wk_dt>=concat(date_add(next_day('2019-02-20','MO'),-7*3),'_',date_add(next_day('2019-02-20','MO'),-7*2-1))

and wk_dt<=concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1))

group by mid_id

having count(*)=3

)t1;

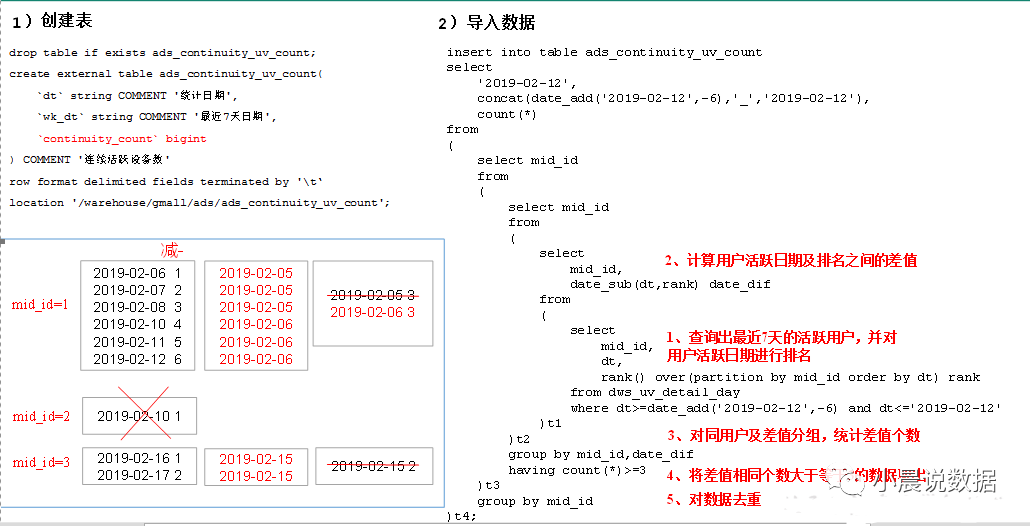

Demand 8: number of active users for three consecutive days in the last seven days

Note: number of active users for 3 consecutive days in the last 7 days

Use daily living schedule dws_uv_detail_day as DWS layer data

Build table

hive (gmall)>

drop table if exists ads_continuity_uv_count;

create external table ads_continuity_uv_count(

`dt` string COMMENT 'Statistical date',

`wk_dt` string COMMENT 'Date of last 7 days',

`continuity_count` bigint

) COMMENT 'Number of continuously active devices'

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_continuity_uv_count';

Import data

hive (gmall)>

insert into table ads_continuity_uv_count

select

'2019-02-12',

concat(date_add('2019-02-12',-6),'_','2019-02-12'),

count(*)

from

(

select mid_id

from

(

select mid_id

from

(

select

mid_id,

date_sub(dt,rank) date_dif

from

(

select

mid_id,

dt,

rank() over(partition by mid_id order by dt) rank

from dws_uv_detail_day

where dt>=date_add('2019-02-12',-6) and dt<='2019-02-12'

)t1

)t2

group by mid_id,date_dif

having count(*)>=3

)t3

group by mid_id

)t4;

The ODS layer is as like as two peas.

DWD layer dwd_order_info order form dwd_order_detail order details (orders and goods) dwd_user_info user table dwd_payment_info payment flow dwd_sku_info commodity table (add classification)

Daily user behavior wide table dws_user_action

Field: user_id,order_count,order_amount,payment_count,payment_amount ,comment_count

drop table if exists dws_user_action;

create external table dws_user_action(

user_id string comment 'user id',

order_count bigint comment 'User's next odd number',

order_amount decimal(16, 2) comment 'Order amount',

payment_count bigint comment 'Payment times',

payment_amount decimal(16, 2) comment 'Payment amount',

comment_count bigint comment 'Number of comments'

)comment 'Daily user behavior table'

partitioned by(`dt` string)

stored as parquet

location '/warehouse/gmall/dws/dws_user_action/'

tblproperties("parquet.compression"="snappy");

Import data

0 placeholder, the first field must have an alias

with tmp_order as( select user_id, count(*) order_count, sum(oi.total_amount) order_amount from dwd_order_info oi where date_format(oi.create_time, 'yyyy-MM-dd')='2019-02-10' group by user_id ), tmp_payment as( select user_id, count(*) payment_count, sum(pi.total_amount) payment_amount from dwd_payment_info pi where date_format(pi.payment_time, 'yyyy-MM-dd')='2019-02-10' group by user_id ), tmp_comment as( select user_id, count(*) comment_count from dwd_comment_log c where date_format(c.dt, 'yyyy-MM-dd')='2019-02-10' group by user_id ) insert overwrite table dws_user_action partition(dt='2019-02-10') select user_actions.user_id, sum(user_actions.order_count), sum(user_actions.order_amount), sum(user_actions.payment_count), sum(user_actions.payment_amount), sum(user_actions.comment_count) from( select user_id, order_count, order_amount, 0 payment_count, 0 payment_amount, 0 comment_count from tmp_order union all select user_id, 0, 0, payment_count, payment_amount, 0 from tmp_payment union all select user_id, 0, 0, 0, 0, comment_count from tmp_comment ) user_actions group by user_id;