After several campus recruitment interviews, I found that HTTP caching comes up regularly as an interview question. The first time I was asked about it I was confused, so I summarized the following notes.

In any front-end project, fetching data from the server is routine. However, if the same data is requested repeatedly, the redundant requests waste network bandwidth and delay the browser's rendering of the page, which hurts the user experience. If the user's data plan is billed by traffic volume, the redundant requests also quietly increase the user's bill. Reusing already-fetched resources through caching is therefore an effective way to improve both website performance and user experience.

The principle of caching is to save a copy of the response after the first request for a resource. When the user makes the same request again and the cache is judged to be a hit, the request is intercepted and the stored copy is returned, so the request does not have to go to the server again.
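To make the principle concrete, here is a minimal sketch in JavaScript. `createResponseCache` and the stand-in server function are hypothetical names for illustration only. Note that this toy cache keeps every copy forever and never checks whether it is still valid, which is exactly the problem the HTTP freshness and validation rules discussed in this article address.

```javascript
// Hypothetical in-memory response cache: the first request for a key goes to
// the "server" (a stand-in function here); repeated requests for the same key
// are answered from the stored copy.
function createResponseCache (fetchFromServer) {
  const store = new Map() // key -> stored response copy

  return function request (key) {
    if (store.has(key)) {
      // Cache hit: intercept the request and return the stored copy
      return { fromCache: true, body: store.get(key) }
    }
    // Cache miss: ask the server, then keep a copy for next time
    const body = fetchFromServer(key)
    store.set(key, body)
    return { fromCache: false, body }
  }
}

// Usage: count how often the stand-in server is actually contacted
let serverHits = 0
const request = createResponseCache((url) => {
  serverHits += 1
  return `content of ${url}`
})

const first = request('/img/03.jpg')
const second = request('/img/03.jpg')
console.log(first.fromCache, second.fromCache, serverHits) // false true 1
```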

There are many kinds of caches, such as proxy caches, browser caches, gateway caches, load balancers, and content delivery networks (CDNs). They fall roughly into two categories: shared caches and private caches. A shared cache stores content that can be served to many users, such as a web proxy set up inside a company; a private cache serves a single user only, such as the browser cache.

HTTP caching is probably the caching mechanism front-end developers deal with most often. It is subdivided into strong (forced) caching and negotiation caching. The key difference is whether, when deciding on a cache hit, the browser must first ask the server whether its cached copy is still valid before deciding whether to request the content again: with strong caching it does not ask, with negotiation caching it does. Let's look at the specific mechanisms of HTTP caching and the decision strategy behind them.
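The difference between the two can be sketched as a small decision function. This is a simplified model, not how any particular browser implements it: the entry shape (`storedAt`, `maxAgeSeconds`, `etag`, `lastModified`) and the name `decideCacheUse` are assumptions made for illustration.

```javascript
// Hypothetical shape of a stored cache entry (an assumption for this sketch):
// { storedAt: ms timestamp, maxAgeSeconds, etag?, lastModified? }
function decideCacheUse (entry, nowMs) {
  if (!entry) {
    return { action: 'fetch', headers: {} } // nothing cached yet
  }

  const ageSeconds = (nowMs - entry.storedAt) / 1000
  if (ageSeconds < entry.maxAgeSeconds) {
    // Strong (forced) cache hit: use the copy without contacting the server
    return { action: 'use-cache', headers: {} }
  }

  // Stale copy: ask the server whether it is still valid (negotiation cache)
  const headers = {}
  if (entry.etag) headers['If-None-Match'] = entry.etag
  if (entry.lastModified) headers['If-Modified-Since'] = entry.lastModified

  return Object.keys(headers).length > 0
    ? { action: 'revalidate', headers }
    : { action: 'fetch', headers: {} } // no validator to negotiate with
}

const entry = {
  storedAt: Date.parse('2021-05-27T21:40:00Z'),
  maxAgeSeconds: 5,
  etag: '"abc123"',
  lastModified: 'Thu, 27 May 2021 21:30:00 GMT'
}

console.log(decideCacheUse(entry, entry.storedAt + 2000).action) // use-cache
console.log(decideCacheUse(entry, entry.storedAt + 10000).action) // revalidate
```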

Strong cache

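```javascript
// Inside a Node.js request handler: use ONE of the two options below,
// since writeHead() can only be called once per response.

// Option 1: set the Expires response header (an absolute expiry date)
// Drawback: the client clock and the server clock may not be in sync
res.writeHead(200, {
  Expires: new Date('2021-05-27T21:40:00').toUTCString()
})

// Option 2: set Cache-Control (a relative lifetime)
// If both headers are present, max-age takes precedence over Expires
res.writeHead(200, {
  'Cache-Control': 'max-age=5' // fresh for 5 seconds after the response is generated
})
```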
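A cache has to turn these headers into a freshness lifetime. The sketch below is a simplification: it assumes lower-cased header names (as in Node's `req.headers`), handles only `max-age` and `Expires`, and ignores other directives as well as the heuristic freshness real caches may apply when neither header is present. `freshnessLifetimeSeconds` is a hypothetical helper name.

```javascript
// Sketch: how long a response stays fresh, given its (lower-cased) headers.
// Cache-Control max-age takes precedence over Expires when both are present.
function freshnessLifetimeSeconds (headers) {
  const cacheControl = headers['cache-control'] || ''
  const match = /(?:^|,)\s*max-age\s*=\s*(\d+)/i.exec(cacheControl)
  if (match) {
    return Number(match[1]) // relative lifetime, independent of clock skew
  }

  if (headers.expires) {
    const expires = Date.parse(headers.expires)
    if (Number.isNaN(expires)) return 0 // an invalid Expires means already stale
    // Measure the absolute Expires date against the server's Date header if it
    // is usable, otherwise against the local clock (which may be out of sync)
    const serverDate = Date.parse(headers.date)
    const base = Number.isNaN(serverDate) ? Date.now() : serverDate
    return Math.max(0, (expires - base) / 1000)
  }

  return 0 // no explicit freshness information in this simplified sketch
}

console.log(freshnessLifetimeSeconds({ 'cache-control': 'max-age=5' })) // 5
console.log(freshnessLifetimeSeconds({
  date: 'Thu, 27 May 2021 21:39:00 GMT',
  expires: 'Thu, 27 May 2021 21:40:00 GMT'
})) // 60
```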

Negotiation cache

```javascript
const fs = require('fs')

// Inside a Node.js request handler where req and res are available.
// The negotiation cache is decided by the file's last modification time.
const { mtime } = fs.statSync('./img/03.jpg')
const ifModifiedSince = req.headers['if-modified-since']

// Cache validation: the timestamp the client sent back still matches the file
if (ifModifiedSince === mtime.toUTCString()) {
  res.statusCode = 304 // Not Modified: the client uses its local copy
  res.end()
  return
}

const data = fs.readFileSync('./img/03.jpg')

// Tell the client that this resource uses the negotiation cache:
// before using its cached copy, the client asks the server whether it is still valid.
// Valid: the server returns 304 and the client uses its local copy.
// Invalid: the server returns the new resource data, which the client uses directly.
res.setHeader('Cache-Control', 'no-cache')

// Send the resource's modification time; the client echoes it back in If-Modified-Since
res.setHeader('Last-Modified', mtime.toUTCString())
res.end(data)

// The shortcomings of Last-Modified are discussed below
```

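```javascript
const fs = require('fs')
const etag = require('etag') // the third-party "etag" package from npm

// Inside a Node.js request handler where req and res are available.
// The negotiation cache is decided by whether the file content has changed.
const data = fs.readFileSync('./img/04.jpg')

// Generate a content fingerprint (the ETag) from the file data
const etagContent = etag(data)
const ifNoneMatch = req.headers['if-none-match']

// Simplified check: assumes the client sends back exactly one tag
if (ifNoneMatch === etagContent) {
  res.statusCode = 304 // content unchanged: the client uses its cached copy
  res.end()
  return
}

// Tell the client to use the negotiation cache (revalidate before each use)
res.setHeader('Cache-Control', 'no-cache')

// Send the content fingerprint of the resource to the client
res.setHeader('ETag', etagContent)
res.end(data)
```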
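The equality checks in these handlers are deliberately simple. A somewhat more complete server-side check might look like the following sketch: when `If-None-Match` is present it takes precedence and `If-Modified-Since` is ignored, the client may send several comma-separated tags, and the comparison is weak (a `W/` prefix is ignored). `isNotModified` and the shape of its `current` argument are hypothetical, and splitting on commas assumes the tags themselves contain no commas.

```javascript
// Sketch: should a conditional request be answered with 304 Not Modified?
// `current` is a hypothetical description of the resource as it is now:
// { etag: '"..."', lastModified: Date }
function isNotModified (reqHeaders, current) {
  const ifNoneMatch = reqHeaders['if-none-match']
  if (ifNoneMatch !== undefined) {
    // If-None-Match wins; If-Modified-Since is ignored when it is present
    if (ifNoneMatch.trim() === '*') return true
    if (!current.etag) return false
    // Weak comparison: drop any W/ prefix before comparing tags
    const strip = (tag) => tag.trim().replace(/^W\//, '')
    return ifNoneMatch.split(',').some((tag) => strip(tag) === strip(current.etag))
  }

  const ifModifiedSince = reqHeaders['if-modified-since']
  if (ifModifiedSince !== undefined) {
    const since = Date.parse(ifModifiedSince)
    if (Number.isNaN(since)) return false // unparseable date: send the full response
    // HTTP dates have one-second resolution, so drop the milliseconds first
    const modifiedMs = Math.floor(current.lastModified.getTime() / 1000) * 1000
    return modifiedMs <= since
  }

  return false // not a conditional request
}

const resource = {
  etag: '"1a2b3c"',
  lastModified: new Date('2021-05-27T21:30:00.400Z')
}

console.log(isNotModified({ 'if-none-match': 'W/"old", "1a2b3c"' }, resource)) // true
console.log(isNotModified({
  'if-none-match': '"old"',
  'if-modified-since': 'Thu, 27 May 2021 21:30:00 GMT'
}, resource)) // false: If-None-Match takes precedence
```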

A negotiation cache based on Last-Modified covers most scenarios, but it has two obvious defects:

- First, validation relies only on the resource's last-modified timestamp. If a file is edited and saved but its content does not actually change, the timestamp is still updated, so revalidation fails and the client has to download the complete resource again. This wastes network bandwidth and lengthens the time users wait for the resource.

- Second, the Last-Modified timestamp only has one-second resolution. If a file is modified again within the same second, for example a few hundred milliseconds after the previous change, the timestamp does not change, so timestamp-based validation cannot detect the update.
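The second defect is easy to reproduce: `Last-Modified` is sent as an HTTP date, and `Date.prototype.toUTCString()` formats it to whole seconds. The two save times below are made-up values 300 ms apart.

```javascript
// Two hypothetical modification times of the same file, 300 ms apart
const firstSave = new Date('2021-05-27T21:40:00.100Z')
const secondSave = new Date('2021-05-27T21:40:00.400Z')

// Last-Modified is sent as an HTTP date, which has no milliseconds
const firstHeader = firstSave.toUTCString()
const secondHeader = secondSave.toUTCString()

console.log(firstHeader) // Thu, 27 May 2021 21:40:00 GMT
console.log(firstHeader === secondHeader) // true: the second change is invisible
```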

Both defects have the same root cause: a modification timestamp alone does not tell the server whether the resource has really changed. In the first case this forces an unnecessary re-download; in the second, the client keeps using a stale cached copy even though the file has been updated.

Negotiation cache based on ETag

To make up for the shortcomings of timestamp-based validation, the HTTP/1.1 specification added the ETag (entity tag) header.

Its value is a string the server generates for each resource, typically by hashing the resource's content, so it works much like a file fingerprint. Whenever the file content differs, the ETag value differs as well, which lets ETag detect changes to a resource more accurately than a timestamp.
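As an illustration of the fingerprint idea, the sketch below hashes the file bytes with Node's built-in `crypto` module. `contentEtag` is a hypothetical helper, and SHA-1 with base64 is an arbitrary choice: the ETag format is up to the server, and this is not necessarily the exact format the `etag` package produces. Unlike a timestamp, the tag stays the same when a file is re-saved with identical bytes.

```javascript
const crypto = require('crypto')

// Hypothetical helper: derive a strong ETag from the bytes of a resource
function contentEtag (buffer) {
  const hash = crypto.createHash('sha1').update(buffer).digest('base64')
  return `"${hash}"` // ETag values are quoted strings
}

const original = Buffer.from('hello cache')
const resaved = Buffer.from('hello cache') // edited and saved, but same bytes
const changed = Buffer.from('hello cache!')

console.log(contentEtag(original) === contentEtag(resaved)) // true
console.log(contentEtag(original) === contentEtag(changed)) // false
```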

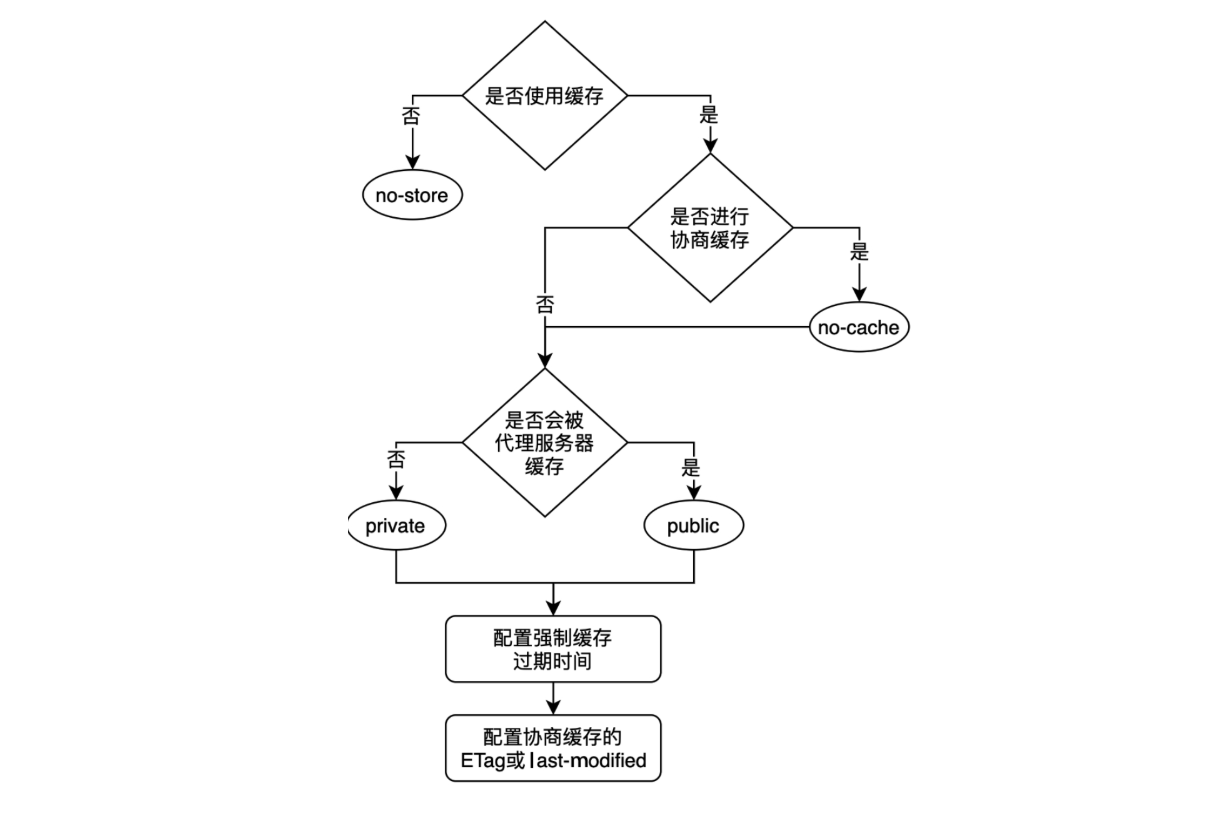

Cache decision tree