Learning the Raft protocol implementation from Sentinel Leader election (Part 2)

In the last article, I introduced the basic flow of the Raft protocol and the basic workflow of a sentinel instance. A sentinel runs periodically through the serverCron function, which calls the sentinelTimer function to handle sentinel-related time events. One of the time events handled by sentinelTimer is the processing of each master node that the sentinel monitors: it calls the sentinelHandleRedisInstance function to check whether the master node is online, and performs failover when the master node goes offline.

In addition, I also showed you the first three steps of the sentinelHandleRedisInstance function: reconnecting disconnected instances, periodically sending detection commands to the instance, and checking whether the instance is subjectively offline. These correspond to the three functions sentinelReconnectInstance, sentinelSendPeriodicCommands and sentinelCheckSubjectivelyDown respectively. You can look back at them if needed.

In today's article, I will introduce the remaining operations performed by the sentinelHandleRedisInstance function: detecting whether the master node is objectively offline, judging whether failover is required, and, when failover is required, the sentinel Leader election.

After this lesson, you will have a comprehensive understanding of how a sentinel works, and you will see how the Raft protocol is implemented at the code level to complete a Leader election. When you need to implement distributed consensus in a distributed system in the future, this can help guide your code design and implementation.

Next, let's take a look at the objective offline judgment of the master node.

Objective offline judgment of primary node

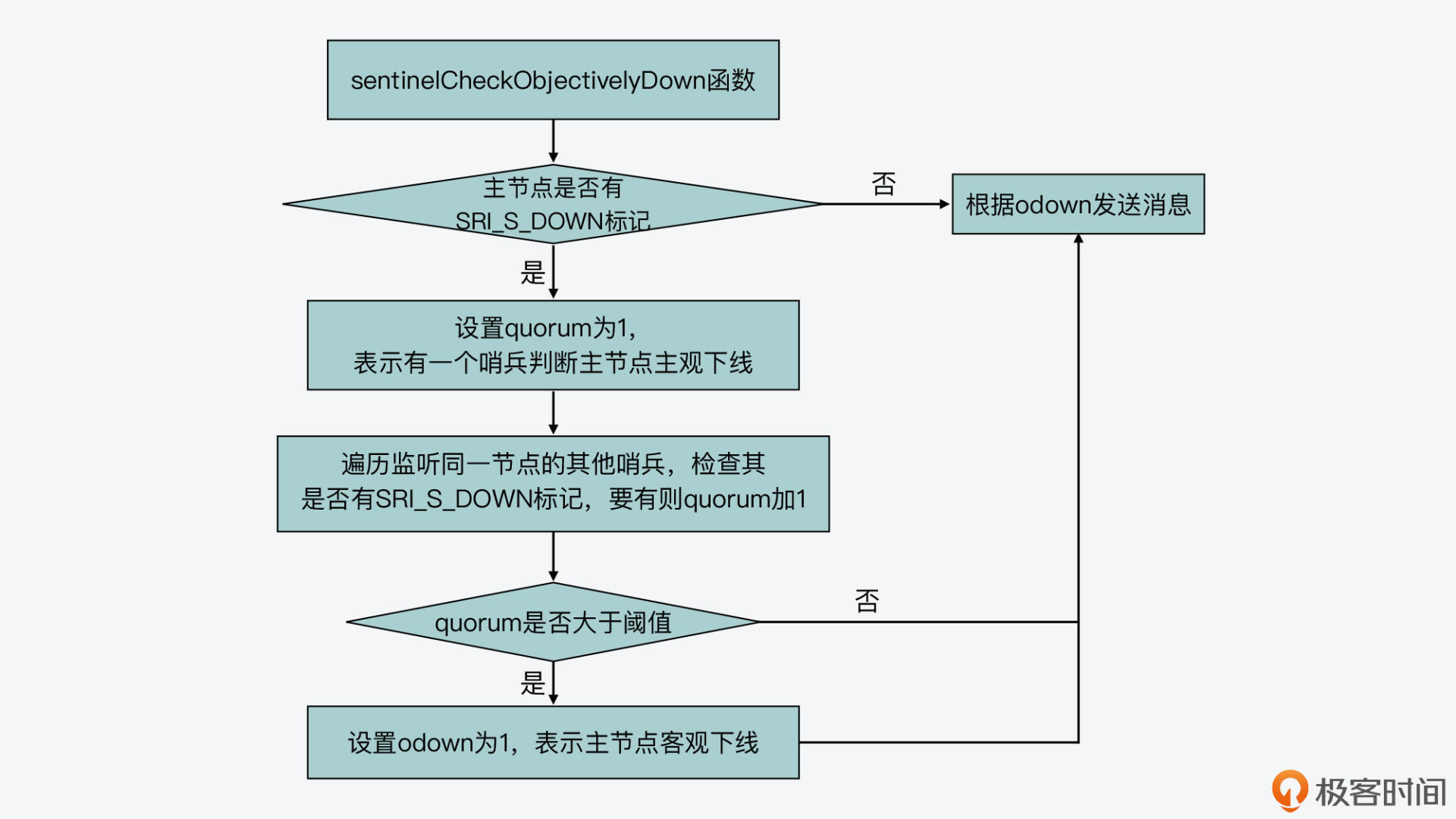

Now we know that the sentinel will call the sentinelCheckObjectivelyDown function (in sentinel.c file) in the sentinelHandleRedisInstance function to detect whether the primary node is offline objectively.

When the sentinelCheckObjectivelyDown function runs, it checks not only the current sentinel's own subjective offline judgment of the master node, but also the subjective offline judgments made by the other sentinels monitoring the same master node. It combines these results to make the final judgment on whether the master node is objectively offline.

From the perspective of code implementation, the sentinelRedisInstance structure that the sentinel uses to record the master node's information keeps the other sentinel instances monitoring the same master node in a hash table, as shown below:

typedef struct sentinelRedisInstance {

...

dict *sentinels;

...

}

In this way, the sentinelCheckObjectivelyDown function can obtain the other sentinel instances' subjective offline judgments of the same master node by traversing the sentinels hash table recorded in the master node. This works because the sentinel instances saved in the sentinels hash table are also represented by the sentinelRedisInstance structure, and the flags member of this structure records that sentinel's subjective offline judgment of the master node.

Specifically, the sentinelCheckObjectivelyDown function uses the quorum variable to count the sentinels that judge the master node to be subjectively offline. If the current sentinel has judged the master node to be subjectively offline, it first sets quorum to 1. Then it checks the flags variable of each of the other sentinels in turn to see whether the SRI_MASTER_DOWN flag is set. If it is, quorum is increased by 1.

After traversing the sentinels hash table, the function checks whether quorum is greater than or equal to the preset quorum threshold. This threshold is saved in the data structure of the master node, that is, master->quorum, and it is set in the sentinel.conf configuration file.

If so, the function determines that the master node is objectively offline and sets the variable odown to 1, which represents the current sentinel's objective offline judgment of the master node.

The judgment logic of this part is shown in the following code. You can see:

/* Is this instance down according to the configured quorum?

*

* Note that ODOWN is a weak quorum, it only means that enough Sentinels

* reported in a given time range that the instance was not reachable.

* However messages can be delayed so there are no strong guarantees about

* N instances agreeing at the same time about the down state. */

// Is this instance down according to the configured quorum?

// Note that ODOWN is a weak quorum: it only means that enough Sentinels reported, within a given time range, that the instance was not reachable.

// However, messages may be delayed, so there is no strong guarantee that N instances agree on the down state at the same time

void sentinelCheckObjectivelyDown(sentinelRedisInstance *master) {

dictIterator *di;

dictEntry *de;

unsigned int quorum = 0, odown = 0;

// The current master node has been judged as subjective offline by the current sentry

if (master->flags & SRI_S_DOWN) {

/* Is down for enough sentinels? */

// Are there enough sentinels

// The current sentry sets the quorum value to 1

quorum = 1; /* the current sentinel. */

/* Count all the other sentinels. */

di = dictGetIterator(master->sentinels);

// Traverse other sentinels listening to the same master node

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

if (ri->flags & SRI_MASTER_DOWN) quorum++;

}

dictReleaseIterator(di);

// If the quorum value is greater than or equal to the preset quorum threshold, set odown to 1.

if (quorum >= master->quorum) odown = 1;

}

/* Set the flag accordingly to the outcome. */

// Set flag according to the result

if (odown) {

// If the SRI_O_DOWN flag is not set yet

if ((master->flags & SRI_O_DOWN) == 0) {

// Send + odown event message

sentinelEvent(LL_WARNING,"+odown",master,"%@ #quorum %d/%d",

quorum, master->quorum);

// Record the SRI_O_DOWN flag in the flags of the master node

master->flags |= SRI_O_DOWN;

// Record the time to judge the objective offline

master->o_down_since_time = mstime();

}

} else {

if (master->flags & SRI_O_DOWN) {

sentinelEvent(LL_WARNING,"-odown",master,"%@");

master->flags &= ~SRI_O_DOWN;

}

}

}

In addition, I also drew a diagram here to show the judgment logic. You can review it again.

In other words, the sentinelCheckObjectivelyDown function determines whether the primary node is offline objectively by traversing the flags variables of other sentinels listening to the same primary node.
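To make the counting rule easier to experiment with outside Redis, here is a minimal standalone sketch of it. The names demo_is_objectively_down, DEMO_S_DOWN and DEMO_MASTER_DOWN, as well as the flag values, are assumptions made up for this illustration; the real function works on the sentinels hash table rather than on a plain array.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical flag values for this sketch only; the real SRI_* values
 * are defined in sentinel.c. */
#define DEMO_S_DOWN      (1 << 0)  /* this sentinel sees the master as down */
#define DEMO_MASTER_DOWN (1 << 1)  /* a peer sentinel reported the master down */

/* Returns 1 if the master should be treated as objectively offline. */
int demo_is_objectively_down(int master_flags, const int *peer_flags,
                             size_t npeers, unsigned int quorum_threshold) {
    unsigned int quorum = 0;
    assert(peer_flags != NULL || npeers == 0);
    /* Without a local subjective offline verdict, peers are not counted. */
    if (!(master_flags & DEMO_S_DOWN)) return 0;
    quorum = 1; /* the current sentinel itself */
    for (size_t i = 0; i < npeers; i++) {
        if (peer_flags[i] & DEMO_MASTER_DOWN) quorum++;
    }
    return quorum >= quorum_threshold;
}
```

With two peers of which one reported the master as down, a threshold of 2 is reached and a threshold of 3 is not. Note that the peers are only consulted once the local sentinel itself has set the subjective offline flag.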

However, after reading the code just now, you may have a question. In the sentinelCheckSubjectivelyDown function covered in the last lesson, if the sentinel judges that the master node is subjectively offline, it sets the SRI_S_DOWN flag in the flags variable of the master node, as follows:

//The sentinel has judged that the master node is subjectively offline

...

//The flags in the sentinelRedisInstance structure of the corresponding master node do not record the subjective offline

if ((ri->flags & SRI_S_DOWN) == 0) {

...

ri->flags |= SRI_S_DOWN; //Record the mark of subjective offline in the flags of the master node,

}

However, the sentinelCheckObjectivelyDown function checks the SRI_MASTER_DOWN flag in the flags variables of the other sentinels monitoring the same master node. So how is the SRI_MASTER_DOWN flag of those other sentinels set?

This is related to the sentinelAskMasterStateToOtherSentinels function (in sentinel.c file). Let's learn more about this function.

sentinelAskMasterStateToOtherSentinels function

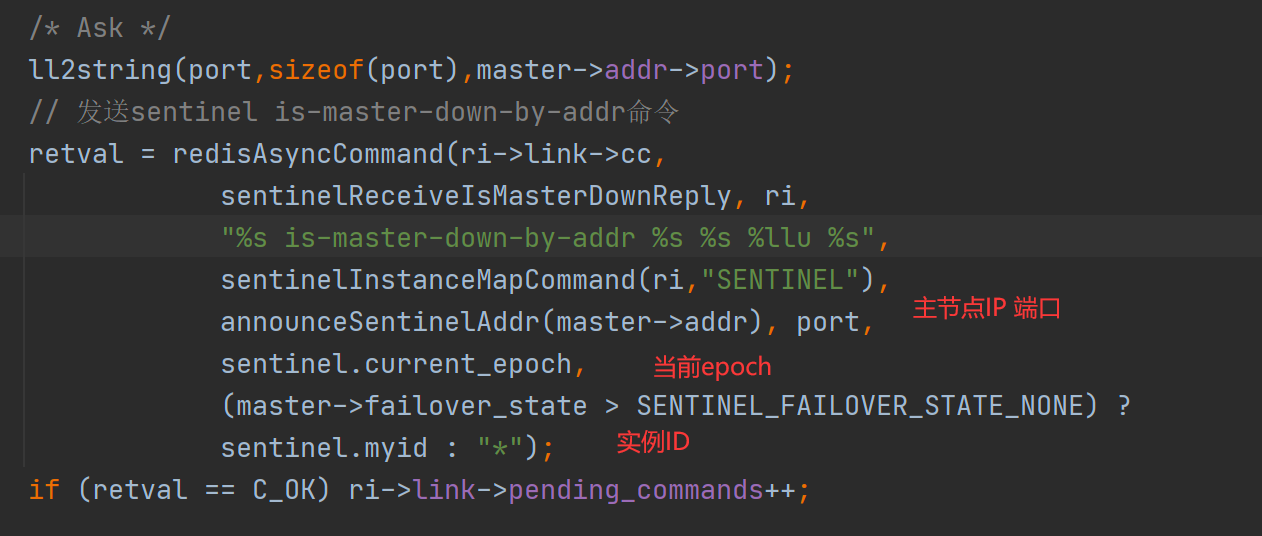

The main purpose of the sentinelAskMasterStateToOtherSentinels function is to send the is-master-down-by-addr command to the other sentinels monitoring the same master node, asking them for their judgment of the master node's state.

It calls the redisAsyncCommand function (in the async.c file) to send the sentinel is-master-down-by-addr command to the other sentinels in turn. At the same time, it registers sentinelReceiveIsMasterDownReply (in the sentinel.c file) as the function that processes the reply to the command, as follows:

/* If we think the master is down, we start sending

* SENTINEL IS-MASTER-DOWN-BY-ADDR requests to other sentinels

* in order to get the replies that allow to reach the quorum

* needed to mark the master in ODOWN state and trigger a failover. */

// If we think the master is down, we start sending SENTINEL IS-MASTER-DOWN-BY-ADDR requests to other sentinels

// in order to collect the replies needed to reach the quorum, mark the master as ODOWN and trigger a failover

#define SENTINEL_ASK_FORCED (1<<0)

void sentinelAskMasterStateToOtherSentinels(sentinelRedisInstance *master, int flags) {

dictIterator *di;

dictEntry *de;

di = dictGetIterator(master->sentinels);

// Traverse other sentinels listening to the same master node

while((de = dictNext(di)) != NULL) {

// Get a sentinel instance

sentinelRedisInstance *ri = dictGetVal(de);

mstime_t elapsed = mstime() - ri->last_master_down_reply_time;

char port[32];

int retval;

/* If the master state from other sentinel is too old, we clear it. */

if (elapsed > SENTINEL_ASK_PERIOD*5) {

ri->flags &= ~SRI_MASTER_DOWN;

sdsfree(ri->leader);

ri->leader = NULL;

}

/* Only ask if master is down to other sentinels if:

*

* 1) We believe it is down, or there is a failover in progress.

* 2) Sentinel is connected.

* 3) We did not receive the info within SENTINEL_ASK_PERIOD ms. */

// Only ask other sentinels whether the master is down if:

// 1) We believe it is down, or a failover is in progress.

// 2) The sentinel is connected.

// 3) We did not receive a reply within the last SENTINEL_ASK_PERIOD (1000) milliseconds.

if ((master->flags & SRI_S_DOWN) == 0) continue;

if (ri->link->disconnected) continue;

if (!(flags & SENTINEL_ASK_FORCED) &&

mstime() - ri->last_master_down_reply_time < SENTINEL_ASK_PERIOD)

continue;

/* Ask */

ll2string(port,sizeof(port),master->addr->port);

// Send the sentinel is-master-down-by-addr command

retval = redisAsyncCommand(ri->link->cc,

sentinelReceiveIsMasterDownReply, ri,

"%s is-master-down-by-addr %s %s %llu %s",

sentinelInstanceMapCommand(ri,"SENTINEL"),

announceSentinelAddr(master->addr), port,

sentinel.current_epoch,

(master->failover_state > SENTINEL_FAILOVER_STATE_NONE) ?

sentinel.myid : "*");

if (retval == C_OK) ri->link->pending_commands++;

}

dictReleaseIterator(di);

}

In addition, from the code, we can see that the sentinel is-master-down-by-addr command also carries the master node IP, the master node port number, the current epoch (sentinel.current_epoch) and an instance ID. The format of this command is shown below:

sentinel is-master-down-by-addr <master node IP> <master node port> <current epoch> <instance ID>

The sentinel sets the instance ID according to the current state of the master node. If the master node is about to start failover, the instance ID is set to the ID of the current sentinel itself; otherwise it is set to the * sign.

Here you should note that the data structure of the master node uses master->failover_state to record the failover status, and its initial value is SENTINEL_FAILOVER_STATE_NONE (the corresponding value is 0). When the master node starts failover, this status value becomes greater than SENTINEL_FAILOVER_STATE_NONE.
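The way the last argument switches between * and the sentinel's own ID can be sketched as follows. This is only an illustration: demo_build_ask_command is a hypothetical helper that formats the command as a single string, whereas Redis passes the arguments to redisAsyncCommand. A failover_state of 0 stands for SENTINEL_FAILOVER_STATE_NONE, as described above.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper, not part of Redis. Writes the command text into buf
 * and returns 0 on success, or -1 if the buffer is too small. */
int demo_build_ask_command(char *buf, size_t buflen,
                           const char *master_ip, int master_port,
                           unsigned long long current_epoch,
                           int failover_state, const char *my_runid) {
    assert(buf != NULL && master_ip != NULL && my_runid != NULL);
    /* State 0 models SENTINEL_FAILOVER_STATE_NONE: a plain state query.
     * Any greater state means a failover has started: ask for a vote. */
    const char *runid = (failover_state > 0) ? my_runid : "*";
    int n = snprintf(buf, buflen,
                     "SENTINEL is-master-down-by-addr %s %d %llu %s",
                     master_ip, master_port, current_epoch, runid);
    return (n >= 0 && (size_t)n < buflen) ? 0 : -1;
}
```

Calling the helper with failover_state 0 produces a command ending in *, while any larger state produces a command ending in the caller's own ID, which is what turns the same command into a vote request.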

Well, now that we understand the basic execution flow of the sentinelAskMasterStateToOtherSentinels function, we also need to know: after it sends the sentinel is-master-down-by-addr command to other sentinels, how do those sentinels handle it?

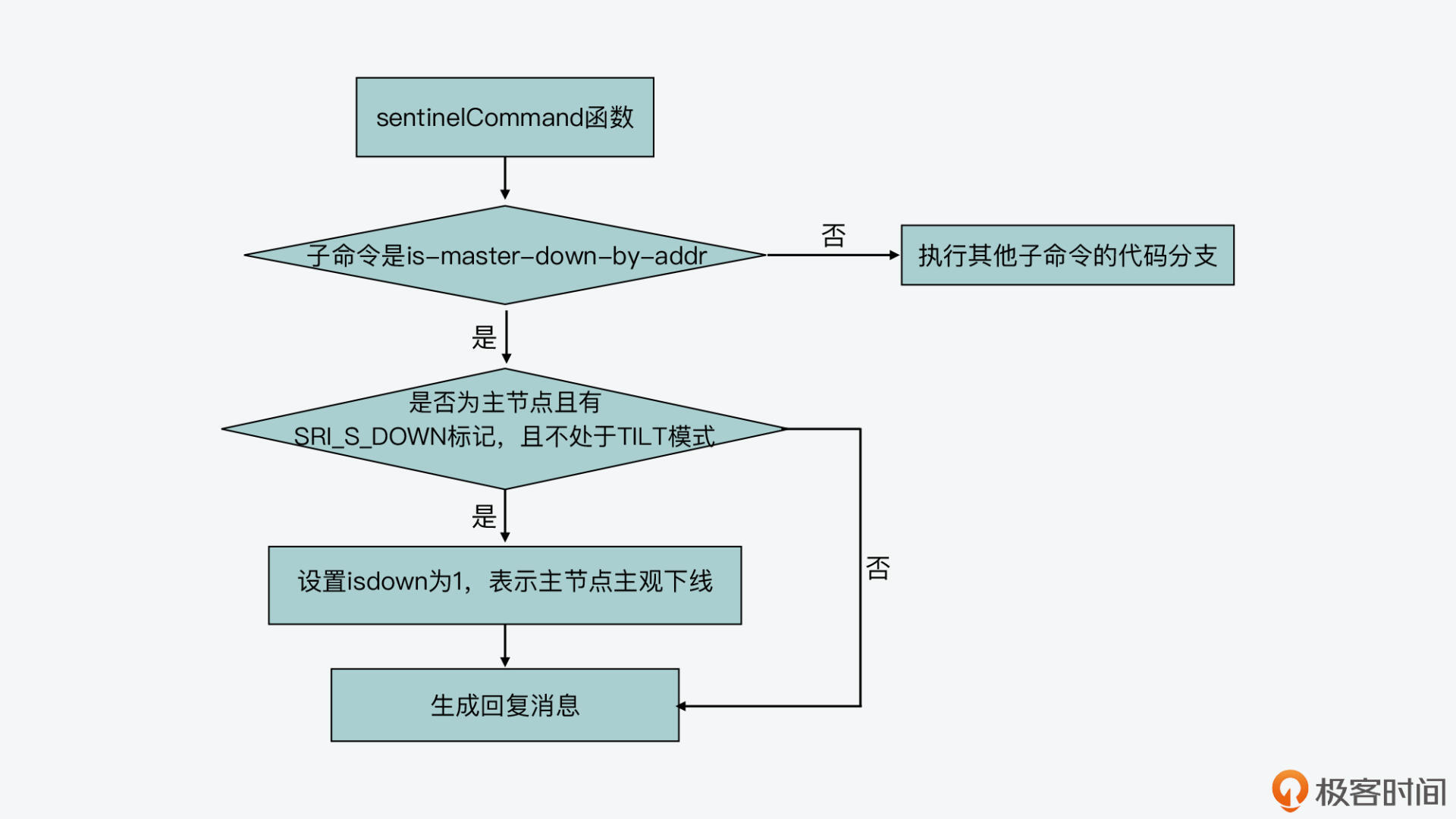

Processing of the sentinel is-master-down-by-addr command

In fact, a sentinel handles commands starting with sentinel in the sentinelCommand function (in the sentinel.c file). The sentinelCommand function executes different branches according to the subcommand that follows the sentinel command, and is-master-down-by-addr is one of these subcommands.

In the code branch for the is-master-down-by-addr subcommand, the sentinelCommand function obtains the sentinelRedisInstance structure of the master node according to the IP and port number of the master node given in the command.

Next, it checks whether both the SRI_S_DOWN and SRI_MASTER flags are set in the flags variable of that instance. That is, the sentinelCommand function checks whether the node is indeed a master node and whether the current sentinel has marked it as subjectively offline. If both conditions are met, it sets the isdown variable to 1. This variable represents the current sentinel's subjective offline judgment of the master node.

Then, the sentinelCommand function returns the current sentinel's subjective offline judgment of the master node to the sentinel that sent the command. The reply consists of three parts: the current sentinel's subjective offline judgment of the master node, the ID of the sentinel Leader, and the epoch to which the sentinel Leader belongs.

The basic flow of the sentinelCommand function for this subcommand is as follows:

void sentinelCommand(client *c) {

...

// Branch corresponding to the is-master-down-by-addr subcommand

else if (!strcasecmp(c->argv[1]->ptr,"is-master-down-by-addr")) {

...

//The current sentinel judges that the primary node is a subjective offline node

if (!sentinel.tilt && ri && (ri->flags & SRI_S_DOWN) && (ri->flags & SRI_MASTER))

isdown = 1;

...

addReplyMultiBulkLen(c,3); //The sentinel command processing result returned by the sentinel contains three parts

addReply(c, isdown ? shared.cone : shared.czero); //If the sentinel judges that the primary node is a subjective offline, the first part is 1, otherwise it is 0

addReplyBulkCString(c, leader ? leader : "*"); //The second part is the Leader ID or*

addReplyLongLong(c, (long long)leader_epoch); //The third part is the era of Leader

...}

You can also refer to the following figure:

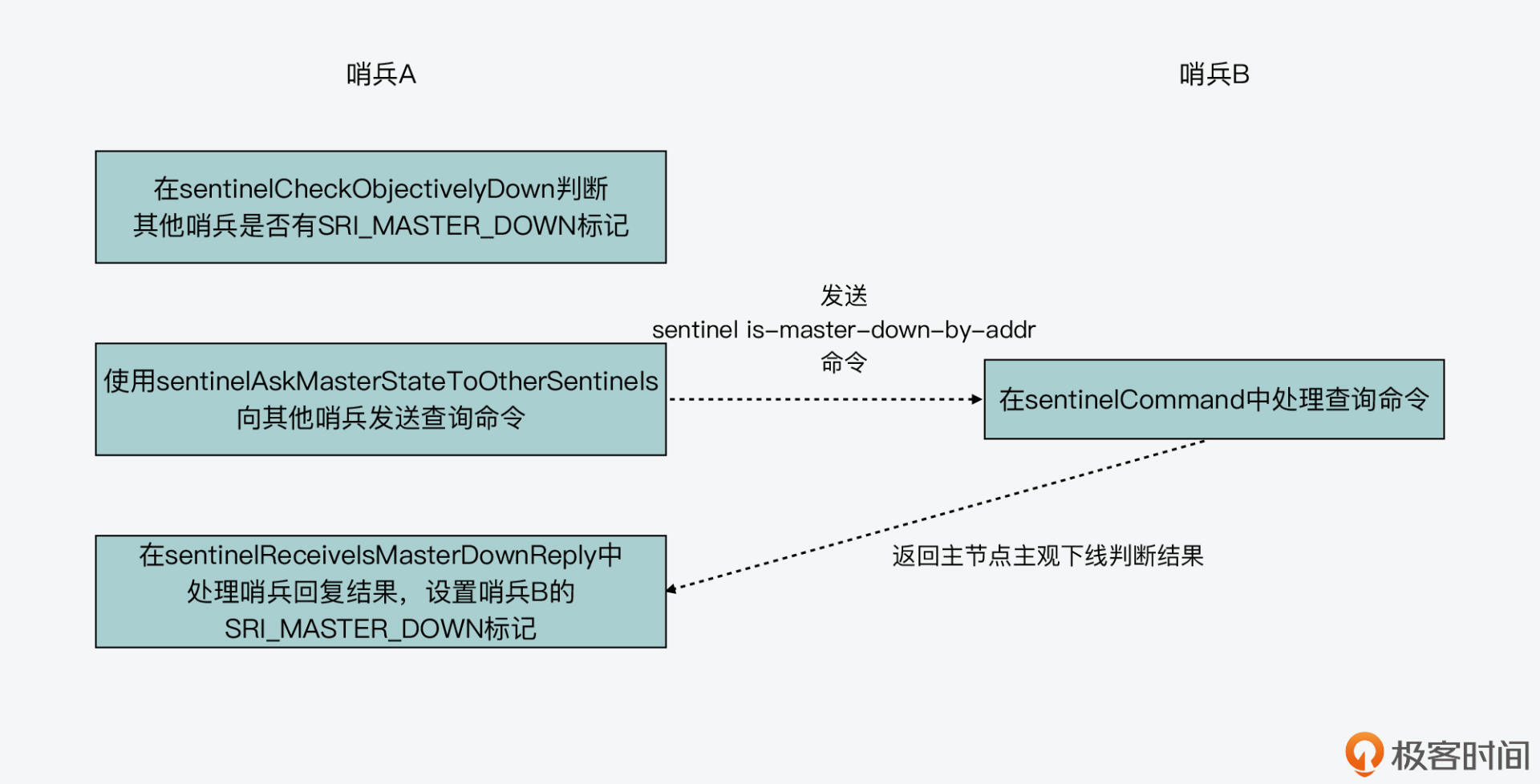

Well, you already know that a sentinel sends the sentinel is-master-down-by-addr command to the other sentinels monitoring the same master node through the sentinelAskMasterStateToOtherSentinels function, in order to obtain their subjective offline judgments of the master node. The other sentinels process the command in the sentinelCommand function and include their own subjective offline judgment of the master node in the reply.

However, from the code just now, you can also see that the reply returned by the other sentinels contains sentinel Leader information. This is because the sentinel is-master-down-by-addr command sent by the sentinelAskMasterStateToOtherSentinels function can also be used to trigger the sentinel Leader election. I'll introduce this to you later.

Now, let's go back to the question raised in the section on the objective offline judgment of the master node. The sentinelCheckObjectivelyDown function checks the SRI_MASTER_DOWN flag in the flags variables of the other sentinels monitoring the same master node. So how is the SRI_MASTER_DOWN flag of those other sentinels set?

The answer lies in sentinelReceiveIsMasterDownReply, the reply processing function that the sentinelAskMasterStateToOtherSentinels function registers when it sends the sentinel is-master-down-by-addr command to other sentinels.

sentinelReceiveIsMasterDownReply function

The sentinelReceiveIsMasterDownReply function examines the reply returned by another sentinel. The reply contains the three parts I just introduced: the replying sentinel's subjective offline judgment of the master node, the ID of the sentinel Leader, and the epoch to which the sentinel Leader belongs. The function checks whether the first part, the replying sentinel's subjective offline judgment of the master node, is 1.

If it is, the replying sentinel has judged the master node to be subjectively offline, and the current sentinel sets the SRI_MASTER_DOWN flag in the flags it records for that sentinel.

The following code shows how the sentinelReceiveIsMasterDownReply function processes the replies of other sentinels. You can have a look.

/* Receive the SENTINEL is-master-down-by-addr reply, see the

* sentinelAskMasterStateToOtherSentinels() function for more information. */

// Receive the sentinel is-master-down-by-addr reply. For more information, see the sentinelAskMasterStateToOtherSentinels() function

void sentinelReceiveIsMasterDownReply(redisAsyncContext *c, void *reply, void *privdata) {

sentinelRedisInstance *ri = privdata;

instanceLink *link = c->data;

redisReply *r;

if (!reply || !link) return;

link->pending_commands--;

r = reply;

/* Ignore every error or unexpected reply.

* Note that if the command returns an error for any reason we'll

* end clearing the SRI_MASTER_DOWN flag for timeout anyway. */

// Ignore every error or unexpected reply. Note that if the command returns an error for any reason, the SRI_MASTER_DOWN flag will end up being cleared by the timeout anyway.

// r is the command reply from another sentinel, received by the current sentinel

// The reply must contain three parts, whose types are integer, string and integer respectively

if (r->type == REDIS_REPLY_ARRAY && r->elements == 3 &&

r->element[0]->type == REDIS_REPLY_INTEGER &&

r->element[1]->type == REDIS_REPLY_STRING &&

r->element[2]->type == REDIS_REPLY_INTEGER)

{

ri->last_master_down_reply_time = mstime();

// If the value of the first part of the reply is 1, set the SRI_MASTER_DOWN flag in the flags of the corresponding sentinel

if (r->element[0]->integer == 1) {

ri->flags |= SRI_MASTER_DOWN;

} else {

ri->flags &= ~SRI_MASTER_DOWN;

}

if (strcmp(r->element[1]->str,"*")) {

/* If the runid in the reply is not "*" the Sentinel actually replied with a vote. */

// If the runid in the reply is not "*", the Sentinel actually replied with a vote.

sdsfree(ri->leader);

if ((long long)ri->leader_epoch != r->element[2]->integer)

serverLog(LL_WARNING,

"%s voted for %s %llu", ri->name,

r->element[1]->str,

(unsigned long long) r->element[2]->integer);

ri->leader = sdsnew(r->element[1]->str);

ri->leader_epoch = r->element[2]->integer;

}

}

}

So here, you can see that when a sentinel calls the sentinelCheckObjectivelyDown function, it directly checks whether the flags of other sentinels contain the SRI_MASTER_DOWN flag. That flag is set as follows: the sentinel sends the sentinel is-master-down-by-addr command to other sentinels through the sentinelAskMasterStateToOtherSentinels function, asking for their judgment on whether the master node is subjectively offline. Then, according to the command reply, the result processing function sentinelReceiveIsMasterDownReply sets or clears SRI_MASTER_DOWN in the flags recorded for each of those sentinels. The following figure also shows the execution logic. You can review it as a whole.
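As a rough standalone model of how one reply updates the state recorded for a peer sentinel, consider the sketch below. The types demo_peer and demo_reply and the function demo_apply_reply are hypothetical simplifications: the real code works with redisReply and sds strings and also validates the reply types first.

```c
#include <assert.h>
#include <string.h>

#define DEMO_MASTER_DOWN (1 << 1)  /* hypothetical value, for this sketch only */

typedef struct {
    int flags;                       /* what we recorded about this peer */
    char leader[41];                 /* runid the peer voted for, "" if none */
    unsigned long long leader_epoch; /* epoch of that vote */
} demo_peer;

typedef struct {
    int is_down;                     /* first reply element: 1 or 0 */
    const char *leader_runid;        /* second element: a runid or "*" */
    unsigned long long leader_epoch; /* third element */
} demo_reply;

void demo_apply_reply(demo_peer *peer, const demo_reply *r) {
    assert(peer != NULL && r != NULL && r->leader_runid != NULL);
    /* The first element sets or clears the peer's "master down" flag. */
    if (r->is_down == 1) peer->flags |= DEMO_MASTER_DOWN;
    else peer->flags &= ~DEMO_MASTER_DOWN;
    /* A runid other than "*" means the peer also cast a vote. */
    if (strcmp(r->leader_runid, "*") != 0) {
        strncpy(peer->leader, r->leader_runid, sizeof(peer->leader) - 1);
        peer->leader[sizeof(peer->leader) - 1] = '\0';
        peer->leader_epoch = r->leader_epoch;
    }
}
```

A reply with "*" in the second position only touches the flag, while a reply carrying a runid also records the vote. This is why the same reply handler can serve both the state query and the election.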

Well, now that you understand this execution logic, let's see when the sentinel election begins.

Sentinel election

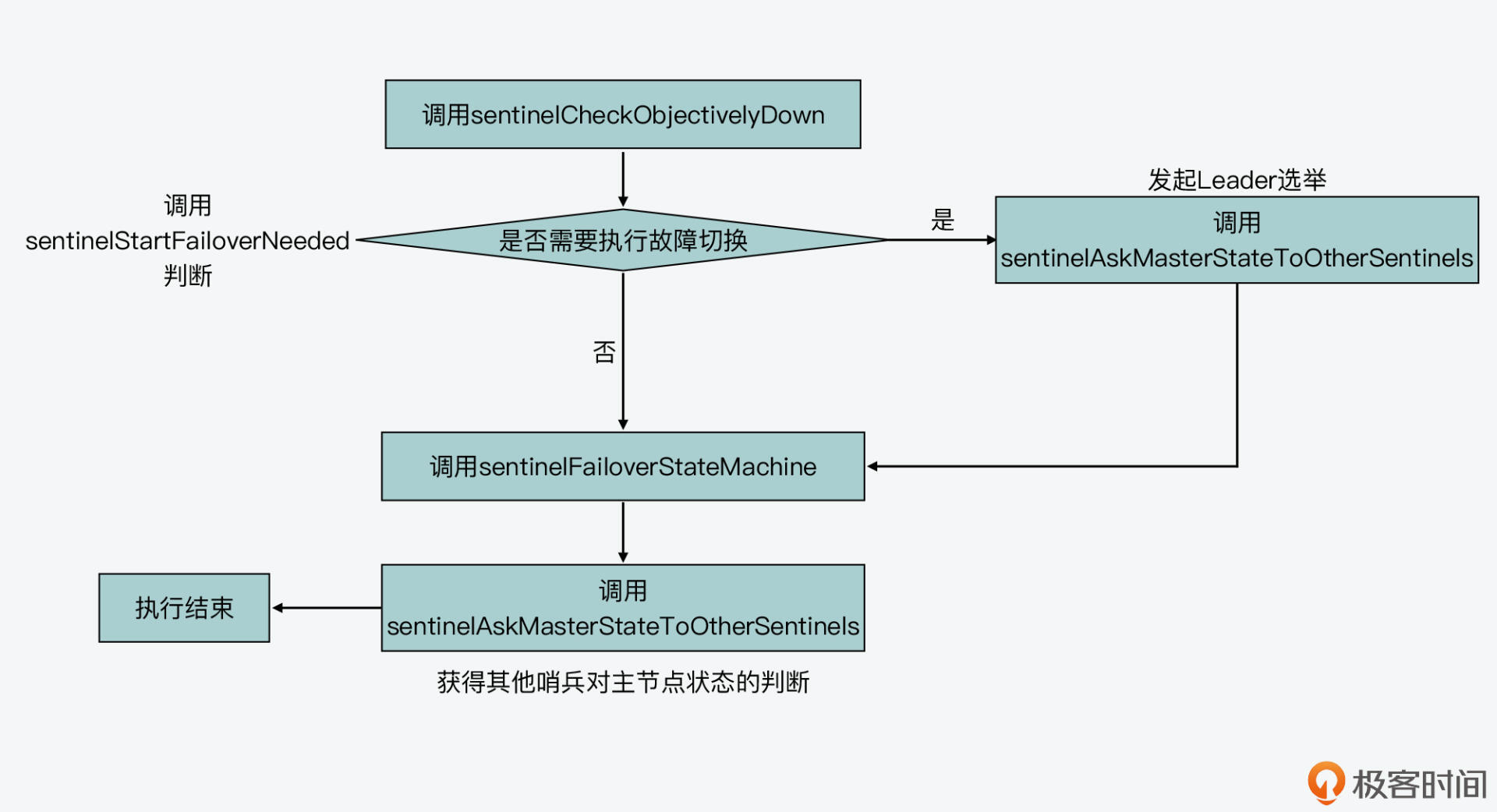

Here, in order to understand the trigger of sentinel election, let's review the calling relationship for the master node in the sentinelHandleRedisInstance function I talked about last time, as shown in the following figure:

As can be seen from the figure, sentinelHandleRedisInstance first calls the sentinelCheckObjectivelyDown function, and then calls the sentinelStartFailoverIfNeeded function to judge whether to start failover. If sentinelStartFailoverIfNeeded returns a non-zero value, the sentinelAskMasterStateToOtherSentinels function is called. Whether or not that call happens, sentinelHandleRedisInstance then calls the sentinelFailoverStateMachine function, and finally calls the sentinelAskMasterStateToOtherSentinels function once more.
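The call order just described can be traced with a small standalone sketch. Everything here is hypothetical scaffolding: demo_handle_master only appends step names to a string, and the label "ask-forced" assumes that the first call passes the SENTINEL_ASK_FORCED flag seen in the earlier listing.

```c
#include <assert.h>
#include <string.h>

/* Appends a step name to the trace, separated by '>'. A step that does
 * not fit in the buffer is silently dropped. */
static void demo_step(char *trace, size_t len, const char *name) {
    assert(trace != NULL && name != NULL);
    if (strlen(trace) + strlen(name) + 2 <= len) {
        if (trace[0]) strcat(trace, ">");
        strcat(trace, name);
    }
}

/* Models the order of calls made for a master node. failover_needed
 * stands for the return value of sentinelStartFailoverIfNeeded. */
void demo_handle_master(int failover_needed, char *trace, size_t len) {
    assert(trace != NULL && len > 0);
    trace[0] = '\0';
    demo_step(trace, len, "check-odown");
    if (failover_needed) demo_step(trace, len, "ask-forced");
    demo_step(trace, len, "state-machine");
    demo_step(trace, len, "ask");
}
```

The trace makes it visible that the state machine step and the final ask step run on every pass, and that only the extra forced ask depends on whether a failover was just started.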

Then, in this calling relationship, sentinelStartFailoverIfNeeded will judge whether to perform failover. There are three judgment conditions, namely:

- The flags of the master node have been marked with SRI_O_DOWN;

- No failover is currently being performed;

- If a failover was attempted before, the time elapsed since that attempt started is at least twice the sentinel failover-timeout configuration item in the sentinel.conf file.
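The three conditions above can be written as a single predicate. This is a sketch under stated assumptions: demo_should_start_failover, the DEMO_* flag values and the demo_mstime type are invented for this example, and times are plain millisecond counts supplied by the caller.

```c
#include <assert.h>

/* Hypothetical flag values for this sketch only. */
#define DEMO_O_DOWN               (1 << 2)
#define DEMO_FAILOVER_IN_PROGRESS (1 << 3)

typedef long long demo_mstime; /* milliseconds */

/* Returns 1 if all three conditions for starting a failover hold. */
int demo_should_start_failover(int master_flags, demo_mstime now,
                               demo_mstime failover_start_time,
                               demo_mstime failover_timeout) {
    assert(failover_timeout >= 0);
    /* 1) The master must be objectively offline. */
    if (!(master_flags & DEMO_O_DOWN)) return 0;
    /* 2) No failover may already be in progress. */
    if (master_flags & DEMO_FAILOVER_IN_PROGRESS) return 0;
    /* 3) The last attempt must be at least 2 * timeout in the past. */
    if (now - failover_start_time < failover_timeout * 2) return 0;
    return 1;
}
```

For example, with an illustrative timeout of 180000 ms, an attempt that started 100000 ms ago blocks a new failover, while one that started 1000000 ms ago does not.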

After these three conditions are met, sentinelStartFailoverIfNeeded will call the sentinelStartFailover function to start failover. sentinelStartFailover sets the failover_state of the master node to SENTINEL_FAILOVER_STATE_WAIT_START, and at the same time sets the SRI_FAILOVER_IN_PROGRESS flag in the flags of the master node to indicate that failover has started, as shown below:

// Set the Master status to initiate failover.

void sentinelStartFailover(sentinelRedisInstance *master) {

serverAssert(master->flags & SRI_MASTER);

master->failover_state = SENTINEL_FAILOVER_STATE_WAIT_START;

master->flags |= SRI_FAILOVER_IN_PROGRESS;

master->failover_epoch = ++sentinel.current_epoch;

sentinelEvent(LL_WARNING,"+new-epoch",master,"%llu",

(unsigned long long) sentinel.current_epoch);

sentinelEvent(LL_WARNING,"+try-failover",master,"%@");

master->failover_start_time = mstime()+rand()%SENTINEL_MAX_DESYNC;

master->failover_state_change_time = mstime();

}

Once the sentinelStartFailover function has set the failover_state of the master node to SENTINEL_FAILOVER_STATE_WAIT_START, the sentinelFailoverStateMachine function will run the state machine to complete the actual switch. However, the sentinelAskMasterStateToOtherSentinels function is called before the actual switch.

Seeing this call relationship, you may have a question: the sentinelAskMasterStateToOtherSentinels function is used to ask other sentinels for their subjective offline judgment of the master node. If sentinelStartFailoverIfNeeded has already decided to start failover, why call the sentinelAskMasterStateToOtherSentinels function?

In fact, this is related to another role of the sentinelAskMasterStateToOtherSentinels function. In addition to asking other sentinels for their judgment of the master node's state, this function can also be used to initiate a Leader election among the other sentinels.

When I introduced this function just now, I mentioned that it sends the sentinel is-master-down-by-addr command to other sentinels. This command includes the master node IP, the master node port number, the current epoch (sentinel.current_epoch) and the instance ID. If the failover_state of the master node is no longer SENTINEL_FAILOVER_STATE_NONE, the instance ID is set to the ID of the current sentinel.

In the sentinelCommand function, if the instance ID in the sentinel command is not the * sign, the sentinelVoteLeader function is called for the Leader election.

//The current instance is the primary node, and the instance ID of sentinel command is not equal to the * sign

if (ri && ri->flags & SRI_MASTER && strcasecmp(c->argv[5]->ptr,"*")) {

//Call sentinelVoteLeader for sentinel Leader election

leader = sentinelVoteLeader(ri,(uint64_t)req_epoch, c->argv[5]->ptr,

&leader_epoch);

}

Next, let's learn more about the sentinelVoteLeader function.

sentinelVoteLeader function

The sentinelVoteLeader function will actually execute the voting logic. Here I will illustrate it to you through an example.

Suppose that sentinel A judges that the master node is offline and now sends a voting request to sentinel B, where the ID of sentinel A is req_runid. When sentinel B executes the sentinelVoteLeader function, the function compares the epoch of sentinel A (req_epoch), the epoch of sentinel B (sentinel.current_epoch), and the Leader epoch recorded by the master (master->leader_epoch). In Raft terms, sentinel A is the Candidate node and sentinel B is the Follower node.

When I introduced the Raft protocol in the last lesson, I mentioned that the votes initiated by a Candidate are recorded in rounds, and a Follower can cast only one vote per round. The epoch here plays the role of the round number. The sentinelVoteLeader function checks the epochs according to the requirements of the Raft protocol, so that a Follower can vote only once per round.

Specifically, the sentinelVoteLeader function lets sentinel B vote only if the Leader epoch recorded by the master is less than the epoch of sentinel A, and the epoch of sentinel A is greater than or equal to the epoch of sentinel B. Together, these two conditions ensure that sentinel B has not yet voted in this round. Otherwise, the sentinelVoteLeader function directly returns the Leader ID already recorded in the master, which is the vote sentinel B cast earlier.

The following code shows the logic just introduced. You can have a look.

/* Vote for the sentinel with 'req_runid' or return the old vote if already

* voted for the specified 'req_epoch' or one greater.

*

* If a vote is not available returns NULL, otherwise return the Sentinel

* runid and populate the leader_epoch with the epoch of the vote. */

// Vote for the sentinel with 'req_runid', or return the previous vote if we have already voted for the specified 'req_epoch' or a greater one

// If no vote is available, NULL is returned; otherwise the Sentinel runid is returned and leader_epoch is filled with the epoch of the vote

char *sentinelVoteLeader(sentinelRedisInstance *master, uint64_t req_epoch, char *req_runid, uint64_t *leader_epoch) {

if (req_epoch > sentinel.current_epoch) {

sentinel.current_epoch = req_epoch;

sentinelFlushConfig();

sentinelEvent(LL_WARNING,"+new-epoch",master,"%llu",

(unsigned long long) sentinel.current_epoch);

}

// The Leader epoch recorded by the master is less than the epoch of sentinel A, and the epoch of sentinel A is greater than or equal to the epoch of sentinel B

if (master->leader_epoch < req_epoch && sentinel.current_epoch <= req_epoch)

{

sdsfree(master->leader);

master->leader = sdsnew(req_runid);

master->leader_epoch = sentinel.current_epoch;

sentinelFlushConfig();

sentinelEvent(LL_WARNING,"+vote-for-leader",master,"%s %llu",

master->leader, (unsigned long long) master->leader_epoch);

/* If we did not voted for ourselves, set the master failover start

* time to now, in order to force a delay before we can start a

* failover for the same master. */

// If we did not vote for ourselves, set the master failover start time to now,

// in order to force a delay before we can start a failover for the same master.

if (strcasecmp(master->leader,sentinel.myid))

master->failover_start_time = mstime()+rand()%SENTINEL_MAX_DESYNC;

}

*leader_epoch = master->leader_epoch;

// Directly return the Leader ID recorded in the current master

return master->leader ? sdsnew(master->leader) : NULL;

}

Now you know how the sentinelVoteLeader function uses epoch comparisons to carry out the voting in the sentinel Leader election according to the Raft protocol.
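To see the one-vote-per-epoch behaviour in isolation, here is a minimal model of the voter's side. The demo_voter struct and demo_vote function are hypothetical; the sketch keeps only the epoch comparisons and leaves out configuration flushing, event logging and the failover delay.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    uint64_t current_epoch; /* the voter's own epoch */
    uint64_t leader_epoch;  /* epoch of the vote already recorded */
    char leader[41];        /* runid voted for, "" if none yet */
} demo_voter;

/* Returns the runid this voter stands behind after the request,
 * or NULL if it has never voted. */
const char *demo_vote(demo_voter *v, uint64_t req_epoch,
                      const char *req_runid) {
    assert(v != NULL && req_runid != NULL);
    /* Catch up with a newer epoch announced by the candidate. */
    if (req_epoch > v->current_epoch) v->current_epoch = req_epoch;
    /* Grant the vote only if no vote was recorded for this epoch yet
     * and the request is not from an older epoch. */
    if (v->leader_epoch < req_epoch && v->current_epoch <= req_epoch) {
        strncpy(v->leader, req_runid, sizeof(v->leader) - 1);
        v->leader[sizeof(v->leader) - 1] = '\0';
        v->leader_epoch = v->current_epoch;
    }
    return v->leader[0] ? v->leader : NULL;
}
```

If sentinel A asks first in epoch 2, a later request from another candidate in the same epoch still gets A's ID back. Only a request carrying a higher epoch can obtain a new vote.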

Next, the sentinel that initiated the vote again uses the sentinelReceiveIsMasterDownReply function to process the replies in which other sentinels report their votes for the Leader. As I just introduced, the second and third parts of the reply are the ID of the sentinel Leader and the epoch to which the sentinel Leader belongs. From these, the sentinel that initiated the vote learns how the other sentinels voted.

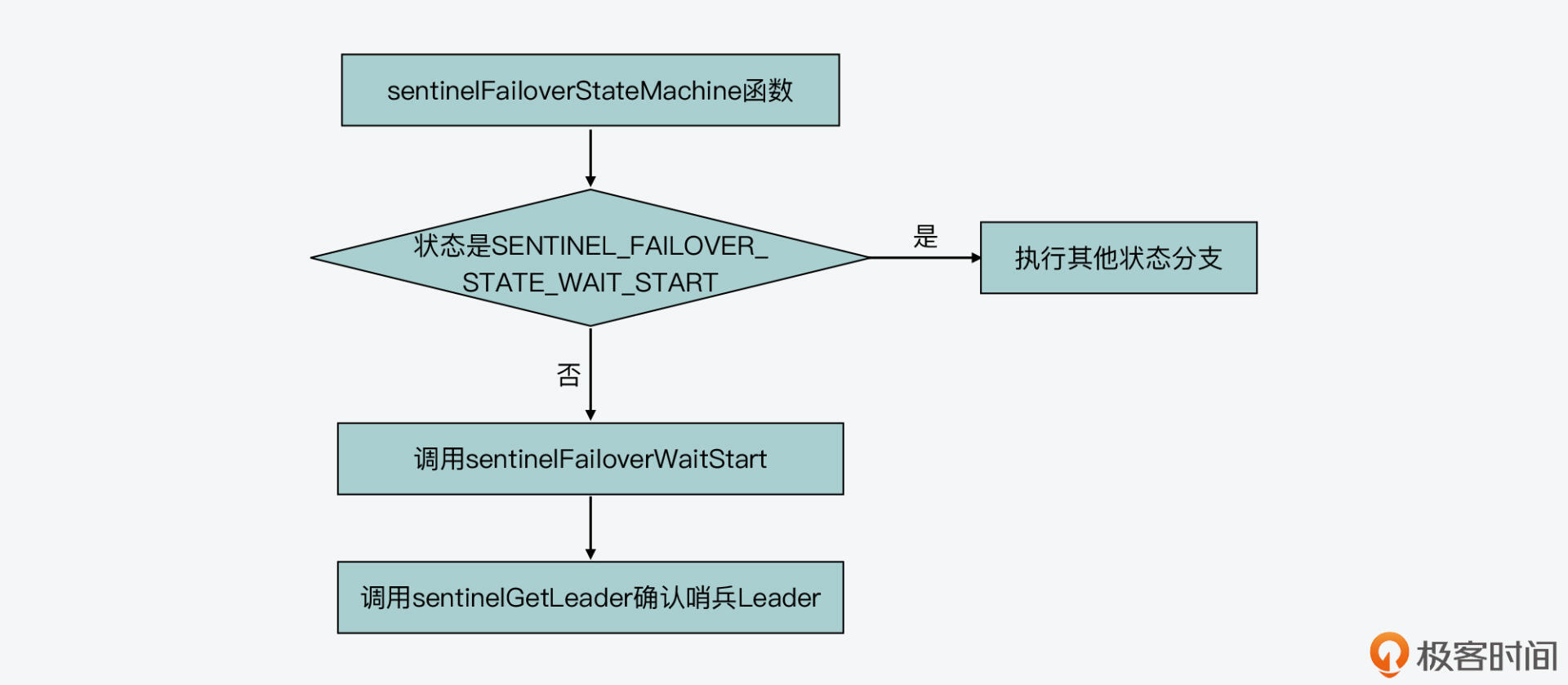

Finally, after the sentinel that initiated the vote has called the sentinelAskMasterStateToOtherSentinels function to let other sentinels vote, it executes the sentinelFailoverStateMachine function.

If the master node has started failover, its failover_state has been set to SENTINEL_FAILOVER_STATE_WAIT_START. In this state, the sentinelFailoverStateMachine function calls the sentinelFailoverWaitStart function, which in turn calls the sentinelGetLeader function to judge whether the sentinel that initiated the vote is the sentinel Leader. To become the Leader, that sentinel must meet two conditions:

- First, it obtains votes from more than half of all the sentinels monitoring the master node, itself included;

- Second, the number of votes it obtains is greater than or equal to the preset quorum threshold.
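The arithmetic behind these two conditions is small enough to sketch on its own. The function name demo_wins_election is an assumption for this example; known_sentinels counts all sentinels monitoring the master, including the one running the check.

```c
#include <assert.h>

/* Returns 1 if max_votes is enough to become Leader. */
int demo_wins_election(unsigned int max_votes, unsigned int known_sentinels,
                       unsigned int quorum) {
    assert(known_sentinels >= 1);
    /* Absolute majority: half of the voters (integer division) plus one. */
    unsigned int voters_quorum = known_sentinels / 2 + 1;
    /* Both thresholds must be reached. */
    return max_votes >= voters_quorum && max_votes >= quorum;
}
```

With 5 sentinels the majority threshold is 3, so 3 votes win when the configured quorum is 2 but not when it is 4. With 3 sentinels and a quorum of 2, 2 votes are enough.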

These two conditions can also be seen from the code snippet in the sentinelGetLeader function, as shown below.

/* Scan all the Sentinels attached to this master to check if there

* is a leader for the specified epoch.

*

* To be a leader for a given epoch, we should have the majority of

* the Sentinels we know (ever seen since the last SENTINEL RESET) that

* reported the same instance as leader for the same epoch. */

// Scan all the Sentinels attached to this master and check whether there is a leader for the specified epoch.

// To be the leader for a given epoch, we need the majority of the Sentinels we know (seen since the last SENTINEL RESET) to report the same instance as the leader for the same epoch

char *sentinelGetLeader(sentinelRedisInstance *master, uint64_t epoch) {

dict *counters;

dictIterator *di;

dictEntry *de;

unsigned int voters = 0, voters_quorum;

char *myvote;

char *winner = NULL;

uint64_t leader_epoch;

uint64_t max_votes = 0;

serverAssert(master->flags & (SRI_O_DOWN|SRI_FAILOVER_IN_PROGRESS));

counters = dictCreate(&leaderVotesDictType,NULL);

voters = dictSize(master->sentinels)+1; /* All the other sentinels and me.*/

/* Count other sentinels votes */

di = dictGetIterator(master->sentinels);

while((de = dictNext(di)) != NULL) {

sentinelRedisInstance *ri = dictGetVal(de);

if (ri->leader != NULL && ri->leader_epoch == sentinel.current_epoch)

sentinelLeaderIncr(counters,ri->leader);

}

dictReleaseIterator(di);

/* Check what's the winner. For the winner to win, it needs two conditions:

* 1) Absolute majority between voters (50% + 1).

* 2) And anyway at least master->quorum votes. */

// Check what the winner is. The winner needs two conditions to win:

// 1) The absolute majority of sentinels (50% + 1).

// 2) In any case, at least master->quorum votes.

di = dictGetIterator(counters);

while((de = dictNext(di)) != NULL) {

uint64_t votes = dictGetUnsignedIntegerVal(de);

if (votes > max_votes) {

max_votes = votes;

winner = dictGetKey(de);

}

}

dictReleaseIterator(di);

/* Count this Sentinel vote:

* if this Sentinel did not voted yet, either vote for the most

* common voted sentinel, or for itself if no vote exists at all. */

// Count this Sentinel's own vote: if it has not voted yet, it votes either for the sentinel with the most votes so far, or for itself if no vote exists at all.

if (winner)

myvote = sentinelVoteLeader(master,epoch,winner,&leader_epoch);

else

myvote = sentinelVoteLeader(master,epoch,sentinel.myid,&leader_epoch);

if (myvote && leader_epoch == epoch) {

uint64_t votes = sentinelLeaderIncr(counters,myvote);

if (votes > max_votes) {

max_votes = votes;

winner = myvote;

}

}

// voters is the number of all sentinels, max_votes is the number of votes obtained

// The number of affirmative votes must be more than half of the sentinels

voters_quorum = voters/2+1;

// If the votes obtained are fewer than half of the sentinels plus one, or fewer than the quorum threshold, the Leader is NULL

if (winner && (max_votes < voters_quorum || max_votes < master->quorum))

winner = NULL;

// Determine the final Leader

winner = winner ? sdsnew(winner) : NULL;

sdsfree(myvote);

dictRelease(counters);

return winner;

}

The following figure shows the calling relationship when confirming the sentinel Leader just introduced. You can have a look.

At this point, the final sentinel Leader has been determined.
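To make the final check concrete, here is a minimal sketch of the two-condition test as a standalone function. The helper name leader_has_enough_votes and its parameters are hypothetical and chosen only for illustration; the arithmetic is the part that mirrors the code above.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper, not part of sentinel.c.
 * Returns 1 when the top candidate has enough votes to become Leader:
 * an absolute majority of all sentinels AND at least `quorum` votes. */
int leader_has_enough_votes(uint64_t max_votes, unsigned int voters,
                            unsigned int quorum) {
    uint64_t voters_quorum;

    assert(voters > 0);
    voters_quorum = voters / 2 + 1;            /* absolute majority */
    if (max_votes < voters_quorum) return 0;   /* condition 1 fails */
    if (max_votes < quorum) return 0;          /* condition 2 fails */
    return 1;
}
```

For example, with 5 sentinels and a quorum of 2, a candidate needs 3 votes, because the majority requirement dominates. With a quorum of 4, 3 votes are no longer enough.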

Summary

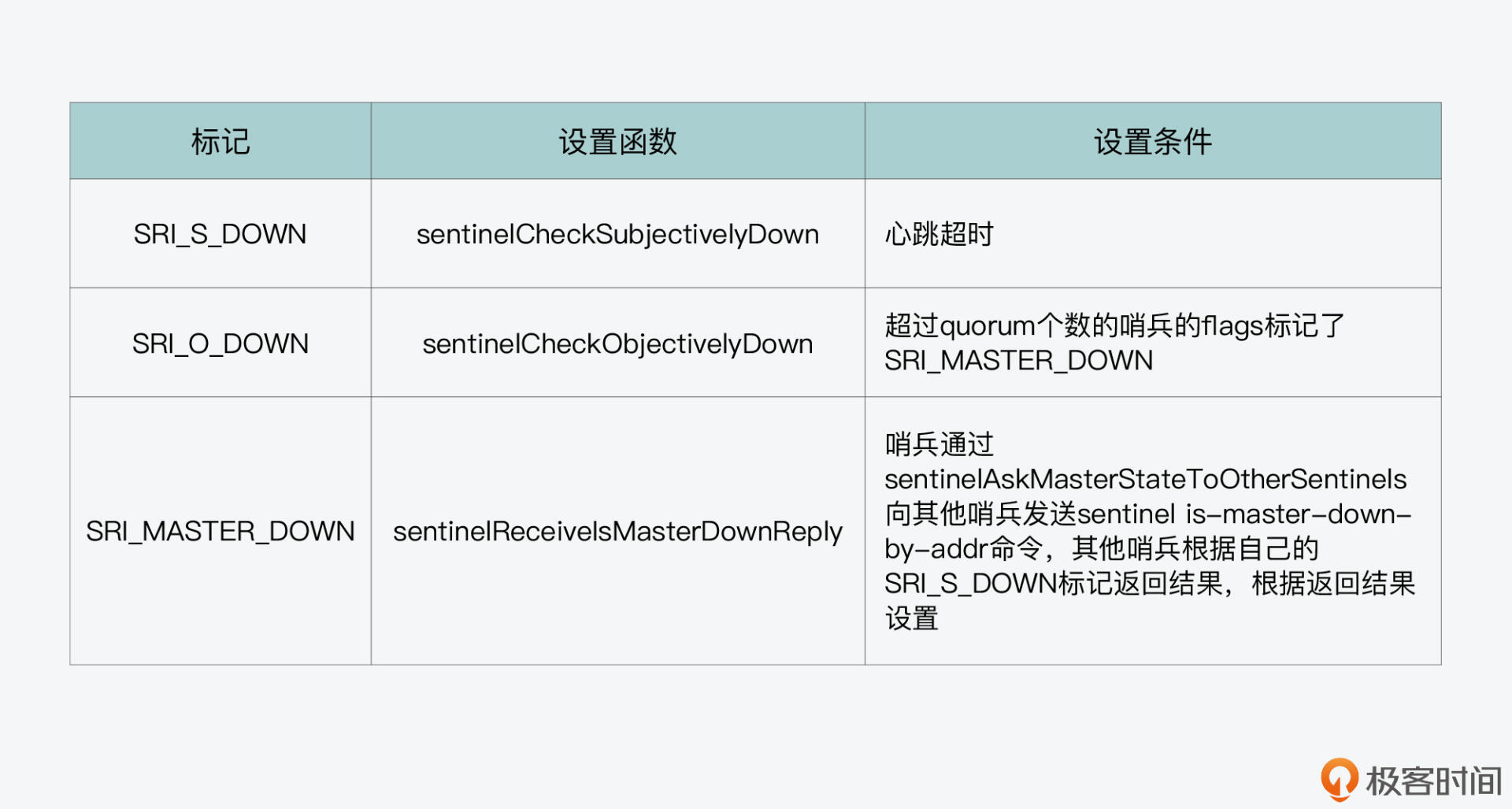

Well, that's all for today's lesson. Let's summarize. Building on the previous article, today's article focused on the objective offline judgment and the Leader election in the sentinel's working process. Because this process involves sentinels querying one another, it is not easy to master, so pay close attention to the key points below.

First, the objective offline judgment involves three flags: SRI_S_DOWN and SRI_O_DOWN in the flags of the master node, and SRI_MASTER_DOWN in the flags of the sentinel instances. I drew the following table to show the functions that set these three flags and the conditions under which they are set. You can review it as a whole.
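As a rough illustration of how the SRI_MASTER_DOWN flags recorded on the other sentinels feed into the objective offline judgment, the sketch below counts them against the quorum. The function name, the plain int arrays and the flag values are simplified assumptions made for this example, not the definitions used in sentinel.c.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative flag values; the real definitions live in sentinel.c. */
#define SRI_S_DOWN      (1<<3)
#define SRI_MASTER_DOWN (1<<5)

/* Hypothetical helper: returns 1 if the master should be treated as
 * objectively offline. This sentinel counts itself once it has flagged
 * the master as subjectively offline, then adds every other sentinel
 * whose flags carry SRI_MASTER_DOWN. */
int master_is_objectively_down(int master_flags, const int *sentinel_flags,
                               size_t n_sentinels, unsigned int quorum) {
    unsigned int agree;
    size_t i;

    assert(sentinel_flags != NULL || n_sentinels == 0);
    if (!(master_flags & SRI_S_DOWN)) return 0;  /* no subjective offline yet */
    agree = 1;                                   /* the current sentinel */
    for (i = 0; i < n_sentinels; i++)
        if (sentinel_flags[i] & SRI_MASTER_DOWN) agree++;
    return agree >= quorum;
}
```

With one other sentinel agreeing and a quorum of 2, the master is treated as objectively offline; with a quorum of 3 it is not.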

Once the sentinel judges that the master node is objectively offline, it calls the sentinelAskMasterStateToOtherSentinels function to start the sentinel Leader election. Note that both asking other sentinels whether they consider the master node offline and requesting Leader votes from other sentinels are done through the SENTINEL is-master-down-by-addr command, and the Redis source code uses the same function, sentinelAskMasterStateToOtherSentinels, to send it. So when you read the source code, pay attention to whether the command sent by sentinelAskMasterStateToOtherSentinels is querying the offline status of the master node or requesting a vote.
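One way to tell the two uses apart, as far as I can tell from the version of sentinel.c I am reading, is the last argument of the command: "*" for a plain status query, and the sender's own run ID for a vote request. The sketch below shows that idea in isolation. The helper names build_is_master_down_cmd and is_vote_request are hypothetical, and the plain-text formatting stands in for the real asynchronous command call.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: formats the command text into buf.
 * failover_started != 0 means this sentinel is asking for votes. */
int build_is_master_down_cmd(char *buf, size_t len, const char *ip, int port,
                             uint64_t current_epoch, int failover_started,
                             const char *myid) {
    const char *runid;

    assert(buf != NULL && ip != NULL && myid != NULL);
    runid = failover_started ? myid : "*";   /* "*" = status query only */
    return snprintf(buf, len, "SENTINEL is-master-down-by-addr %s %d %llu %s",
                    ip, port, (unsigned long long)current_epoch, runid);
}

/* Hypothetical receiver-side check: a run ID other than "*" asks for a vote. */
int is_vote_request(const char *runid) {
    return strcmp(runid, "*") != 0;
}
```

Calling build_is_master_down_cmd with failover_started set to 0 produces a command ending in "*", while setting it to 1 produces a command ending in the sender's run ID.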

Finally, the voting in the sentinel Leader election is completed in the sentinelVoteLeader function. To comply with the Raft protocol, sentinelVoteLeader mainly compares the sentinel's current epoch with the leader epoch recorded on the master, which satisfies the Raft requirement that a Follower can cast only one vote in each round of voting.
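The one-vote-per-round rule can be sketched with a small state struct. This is a simplified model under my own assumptions: the vote_state struct, the vote_leader name and the fixed-size run ID buffer are invented for the example, and the real sentinelVoteLeader also persists the configuration and works with sds strings.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RUNID_LEN 40

/* Simplified stand-in for the fields the vote depends on. */
typedef struct {
    uint64_t current_epoch;       /* the sentinel's current epoch */
    uint64_t leader_epoch;        /* epoch of the vote recorded on the master */
    char leader[RUNID_LEN + 1];   /* run ID voted for; "" means no vote yet */
} vote_state;

/* Returns the run ID this sentinel has voted for (or NULL if none) and
 * stores the epoch of that vote in *leader_epoch. A new vote is granted
 * only when the request carries an epoch newer than the recorded vote. */
const char *vote_leader(vote_state *s, uint64_t req_epoch,
                        const char *req_runid, uint64_t *leader_epoch) {
    assert(s != NULL && req_runid != NULL && leader_epoch != NULL);
    if (req_epoch > s->current_epoch)
        s->current_epoch = req_epoch;          /* catch up with a newer epoch */
    if (s->leader_epoch < req_epoch && s->current_epoch <= req_epoch) {
        strncpy(s->leader, req_runid, RUNID_LEN);
        s->leader[RUNID_LEN] = '\0';
        s->leader_epoch = s->current_epoch;    /* one vote for this epoch */
    }
    *leader_epoch = s->leader_epoch;
    return s->leader[0] ? s->leader : NULL;
}
```

In this model, a second request in the same epoch from a different sentinel gets back the run ID of the first candidate, because leader_epoch is no longer smaller than the requested epoch.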

Well, with today's article, we have covered the whole process of the sentinel Leader election. You can see that although the final execution logic of the election sits in a single function, the logic that triggers the election is spread across the sentinel's whole working process, so we also need to master the other operations in this process, such as the subjective offline judgment and the objective offline judgment.

Question of the lesson: in the sentinelTimer function, the sentinel calls the sentinelHandleDictOfRedisInstances function, which runs sentinelHandleRedisInstance for each master node and also for all the slave nodes of each master node. So, does the sentinel judge subjective offline and objective offline for the slave nodes as well?

First, the sentinelHandleDictOfRedisInstances function runs a loop. For each master node monitored by the current sentinel instance, it executes the sentinelHandleRedisInstance function.

This process contains a recursive call: if the node currently being processed is a master node, sentinelHandleDictOfRedisInstances calls itself again on the slave nodes of that master node, so that sentinelHandleRedisInstance is executed for each slave node.

The code logic of this part is as follows:

/* Perform scheduled operations for all the instances in the dictionary.
 * Recursively call the function against dictionaries of slaves. */
// Perform the scheduled operations on every instance in the hash table.
// The function calls itself recursively on the hash tables of slave nodes.
void sentinelHandleDictOfRedisInstances(dict *instances) {
    dictIterator *di;
    dictEntry *de;
    sentinelRedisInstance *switch_to_promoted = NULL;

    /* There are a number of things we need to perform against every master. */
    // Get an iterator over the hash table
    di = dictGetIterator(instances);
    while((de = dictNext(di)) != NULL) {
        // Get the next instance in the hash table (a master node at the top level)
        sentinelRedisInstance *ri = dictGetVal(de);

        // Call sentinelHandleRedisInstance to process the instance.
        // Master nodes and slave nodes alike are checked for being offline here.
        sentinelHandleRedisInstance(ri);
        if (ri->flags & SRI_MASTER) {
            // If the instance is a master node, recurse to process all of its
            // slave nodes, and then the other sentinels that monitor it.
            sentinelHandleDictOfRedisInstances(ri->slaves);
            sentinelHandleDictOfRedisInstances(ri->sentinels);
            if (ri->failover_state == SENTINEL_FAILOVER_STATE_UPDATE_CONFIG) {
                switch_to_promoted = ri;
            }
        }
    }
    if (switch_to_promoted)
        sentinelFailoverSwitchToPromotedSlave(switch_to_promoted);
    dictReleaseIterator(di);
}

Then, when the sentinelHandleRedisInstance function is executed, it calls the sentinelCheckSubjectivelyDown function to judge whether the instance being processed is subjectively offline. This call has no additional constraints, so both master nodes and slave nodes are checked for subjective offline. This part of the code is as follows:

void sentinelHandleRedisInstance(sentinelRedisInstance *ri) {
    ...
    sentinelCheckSubjectivelyDown(ri); // Both master nodes and slave nodes are checked for subjective offline
    ...
}

However, it should be noted that when sentinelHandleRedisInstance function calls sentinelCheckObjectivelyDown function to judge the objective offline status of an instance, it will check whether the current instance has a master node tag, as shown below:

void sentinelHandleRedisInstance(sentinelRedisInstance *ri) {
    ...
    if (ri->flags & SRI_MASTER) { // Only a master node is checked for objective offline
        sentinelCheckObjectivelyDown(ri);
        ...
    }
}

To sum up, the calling path of sentinelHandleRedisInstance function of master node and slave node is as follows:

Master node: sentinelHandleRedisInstance -> sentinelCheckSubjectivelyDown -> sentinelCheckObjectivelyDown

Slave node: sentinelHandleRedisInstance -> sentinelCheckSubjectivelyDown

Therefore, to answer the question: the sentinel does judge whether a slave node is subjectively offline, but it does not judge whether a slave node is objectively offline.

In addition, by analyzing the code, we can see that once a slave node is judged to be subjectively offline, it cannot be elected as the new master node. This is enforced in the sentinelSelectSlave function, which iterates over the slave nodes of the master node and checks their flags in turn. If a slave node carries the subjective offline flag, it is skipped directly and will not be selected as the new master node.

The following code shows the logic of the sentinelSelectSlave function. You can have a look.

sentinelRedisInstance *sentinelSelectSlave(sentinelRedisInstance *master) {
    sentinelRedisInstance **instance =
        zmalloc(sizeof(instance[0])*dictSize(master->slaves));
    sentinelRedisInstance *selected = NULL;
    int instances = 0;
    dictIterator *di;
    dictEntry *de;
    mstime_t max_master_down_time = 0;

    if (master->flags & SRI_S_DOWN)
        max_master_down_time += mstime() - master->s_down_since_time;
    max_master_down_time += master->down_after_period * 10;

    di = dictGetIterator(master->slaves);
    // Iterate over each slave node of the master node
    while((de = dictNext(di)) != NULL) {
        // Get one slave node instance of the master node
        sentinelRedisInstance *slave = dictGetVal(de);
        mstime_t info_validity_time;

        // Skip a slave node flagged as subjectively or objectively offline;
        // it will not be selected as the new master node.
        if (slave->flags & (SRI_S_DOWN|SRI_O_DOWN)) continue;
        // Skip a slave node whose link is disconnected
        if (slave->link->disconnected) continue;
        // Skip a slave node that has not been available for more than 5 ping periods
        if (mstime() - slave->link->last_avail_time > SENTINEL_PING_PERIOD*5) continue;
        // Skip a slave node with priority 0, which excludes it from promotion
        if (slave->slave_priority == 0) continue;

        /* If the master is in SDOWN state we get INFO for slaves every second.
         * Otherwise we get it with the usual period so we need to account for
         * a larger delay. */
        if (master->flags & SRI_S_DOWN)
            info_validity_time = SENTINEL_PING_PERIOD*5;
        else
            info_validity_time = SENTINEL_INFO_PERIOD*3;
        if (mstime() - slave->info_refresh > info_validity_time) continue;
        if (slave->master_link_down_time > max_master_down_time) continue;
        instance[instances++] = slave;
    }
    dictReleaseIterator(di);
    if (instances) {
        qsort(instance,instances,sizeof(sentinelRedisInstance*),
            compareSlavesForPromotion);
        selected = instance[0];
    }
    zfree(instance);
    return selected;
}
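The filtering conditions in the loop above can be pulled out into a small predicate, which makes them easier to reason about one by one. This is only a sketch: the slave_view struct, the slave_is_candidate name and the two period constants (1000 ms and 10000 ms) are assumptions for illustration, and the elapsed times are passed in as plain numbers instead of being computed with mstime().

```c
#include <assert.h>
#include <stddef.h>

#define PING_PERIOD_MS 1000    /* assumed value for SENTINEL_PING_PERIOD */
#define INFO_PERIOD_MS 10000   /* assumed value for SENTINEL_INFO_PERIOD */

/* Hypothetical flattened view of the fields the filter looks at. */
typedef struct {
    int s_down;                        /* subjective offline flag set */
    int o_down;                        /* objective offline flag set */
    int disconnected;                  /* link is disconnected */
    long long ms_since_last_avail;     /* now - last_avail_time */
    int priority;                      /* slave_priority */
    long long ms_since_info_refresh;   /* now - info_refresh */
    long long master_link_down_time;   /* reported by the slave */
} slave_view;

/* Returns 1 if the slave passes every filter and may be promoted. */
int slave_is_candidate(const slave_view *s, int master_s_down,
                       long long max_master_down_time) {
    long long info_validity_time;

    assert(s != NULL);
    if (s->s_down || s->o_down) return 0;
    if (s->disconnected) return 0;
    if (s->ms_since_last_avail > PING_PERIOD_MS * 5) return 0;
    if (s->priority == 0) return 0;
    /* INFO is refreshed more often while the master is subjectively offline,
     * so a shorter validity window applies in that case. */
    info_validity_time = master_s_down ? PING_PERIOD_MS * 5
                                       : INFO_PERIOD_MS * 3;
    if (s->ms_since_info_refresh > info_validity_time) return 0;
    if (s->master_link_down_time > max_master_down_time) return 0;
    return 1;
}
```

A slave node that passes every check here would still have to be ranked against the other candidates, which the original code does with qsort and compareSlavesForPromotion.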