preface

Recently, when I read some articles about ConcurrentHashMap, I always said: before jdk8, ConcurrentHashMap used segment lock to ensure concurrency security. The jdk8 abandons the segmented lock and ensures the concurrency security through the sycronized keyword + CAS. Although it probably means so, it doesn't feel thorough enough, so I also want to dare to talk about my personal opinions.

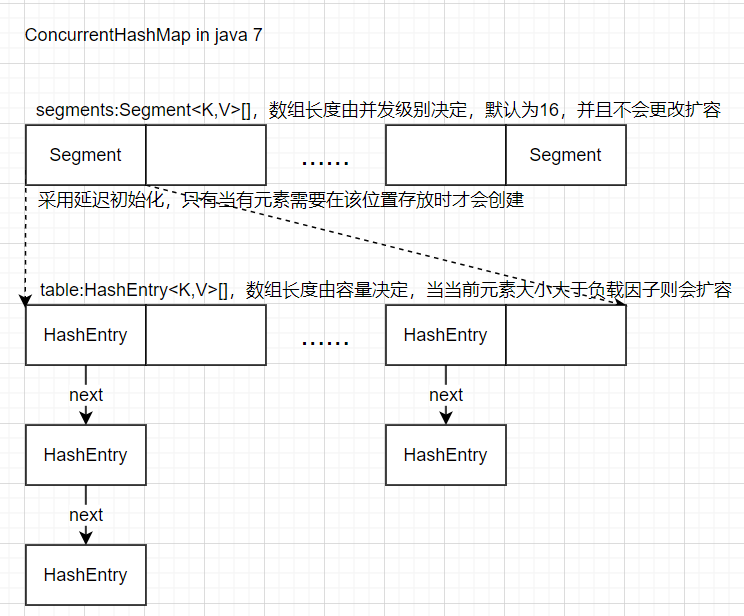

ConcurrentHashMap in Java7

First of all, I'm looking at jdk1 7.0_ Version 79.

Default construction method

public ConcurrentHashMap() {

this(DEFAULT_INITIAL_CAPACITY, DEFAULT_LOAD_FACTOR, DEFAULT_CONCURRENCY_LEVEL);

}

@SuppressWarnings("unchecked")

public ConcurrentHashMap(int initialCapacity,

float loadFactor, int concurrencyLevel) {

if (!(loadFactor > 0) || initialCapacity < 0 || concurrencyLevel <= 0)

throw new IllegalArgumentException();

if (concurrencyLevel > MAX_SEGMENTS)

concurrencyLevel = MAX_SEGMENTS;

// Find power-of-two sizes best matching arguments

int sshift = 0;

int ssize = 1;

// The size of the segments array is calculated by the concurrency level

while (ssize < concurrencyLevel) {

++sshift;

ssize <<= 1;

}

this.segmentShift = 32 - sshift;

this.segmentMask = ssize - 1;

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

// Calculate the table capacity allocated to each segment by the caller's expected capacity

int c = initialCapacity / ssize;

// Check the total capacity and round it up to ensure that the number of elements expected by the caller can be saved

if (c * ssize < initialCapacity)

++c;

// The element capacity of each segment is treated as a power of 2

int cap = MIN_SEGMENT_TABLE_CAPACITY;

while (cap < c)

cap <<= 1;

// create segments and segments[0]

Segment<K,V> s0 =

new Segment<K,V>(loadFactor, (int)(cap * loadFactor),

(HashEntry<K,V>[])new HashEntry[cap]);

Segment<K,V>[] ss = (Segment<K,V>[])new Segment[ssize];

UNSAFE.putOrderedObject(ss, SBASE, s0); // ordered write of segments[0]

this.segments = ss;

}

The default constructor uses a default concurrency level constant with a value of 16 Another constructor called by it, we know that the concurrency level determines the size of the segments array, and the size is processed to the power of 2. segments[0] is created and load factor and expansion threshold are passed in.

Let's look at the internal Segment class

constructor

static final class Segment<K,V> extends ReentrantLock implements Serializable {

Segment(float lf, int threshold, HashEntry<K,V>[] tab) {

this.loadFactor = lf;

this.threshold = threshold;

this.table = tab;

}

...

}

Load factor loadFactor, threshold and hash table are all available. Did you think of HashMap and talk about it later. Looking at the definition of the class, Segment also inherits ReentrantLock, which is the implementation of the lock itself.

Take a look at the method of HashMap concurrent

public V put(K key, V value) {

Segment<K,V> s;

if (value == null)

throw new NullPointerException();

int hash = hash(key);

// For the first time, hash to the corresponding segment

int j = (hash >>> segmentShift) & segmentMask;

if ((s = (Segment<K,V>)UNSAFE.getObject // nonvolatile; recheck

(segments, (j << SSHIFT) + SBASE)) == null) // in ensureSegment

// Since the default constructor only creates one Segment:s0, you need to ensure that the Segment object at that location exists before using it. Here is the deferred instantiation design.

s = ensureSegment(j);

return s.put(key, hash, value, false);

}

In the ConcurrentHashMap#put method, only the corresponding segment is found and the current element is not stored. Instead, the Segment#put method is called to implement it.

Segment#put

final V put(K key, int hash, V value, boolean onlyIfAbsent) {

// Lock

HashEntry<K,V> node = tryLock() ? null :

scanAndLockForPut(key, hash, value);

V oldValue;

try {

HashEntry<K,V>[] tab = table;

// The second hash, find the position in the hash table

int index = (tab.length - 1) & hash;

HashEntry<K,V> first = entryAt(tab, index);

for (HashEntry<K,V> e = first;;) {

if (e != null) {

// Element already exists in current slot

K k;

if ((k = e.key) == key ||

(e.hash == hash && key.equals(k))) {

oldValue = e.value;

if (!onlyIfAbsent) {

e.value = value;

++modCount;

}

break;

}

// Move to the next element of the linked list

e = e.next;

}

else {

// There are no elements in the current slot

if (node != null)

node.setNext(first);

else

node = new HashEntry<K,V>(hash, key, value, first);

int c = count + 1;

if (c > threshold && tab.length < MAXIMUM_CAPACITY)

// If the threshold is exceeded, expand the capacity and re hash

rehash(node);

else

// Otherwise, put it into the slot through CAS operation

setEntryAt(tab, index, node);

++modCount;

count = c;

oldValue = null;

break;

}

}

} finally {

// Unlock

unlock();

}

return oldValue;

}

Here, you can draw a picture to more intuitively reflect the data structure of ConcurrentHashMap

summary

- In Java 7, the data structure of ConcurrentHashMap is indeed array + linked list.

- The concurrency security of data is guaranteed by Segment, which is a ReentrantLock lock.

- The core idea of ensuring concurrency security is segmented lock.

Segmented lock: personally, I think this is a reflection of the idea of division and rule. For HashMap, the original process of locking all elements is decomposed. Lock only part of the data. The data of each part shall be separately guaranteed to be safe without mutual interference and influence. This can also be compared to the table lock and row lock of the database. The core idea is to reduce competition by reducing lock granularity.

And Segment is the realization of this idea. A large hash table is divided into multiple hash tables according to the concurrency level, and each Segment is only responsible for part of the data. - Disadvantages of ConcurrentHashMap under Segment lock implementation:

- It takes two hash es to find elements

- Reasonable setting of concurrency level. Although the default concurrency level is provided, is it applicable in most scenarios? In the final analysis, users need to evaluate how many locks they need to manage by segments.

- The storage cost is higher than HashMap. The minimum capacity managed by each Segment is 2, and the default concurrency level is 16, that is, 16 segments. If I only need to store 16 elements, then ConcurrentHashMap will take up twice the storage space. If more elements are stored, more space will be occupied due to the existence of load factor.

Postscript

Due to the disadvantages of Segment implementation, Java 8 gave up Segment directly. Instead, a more fine-grained segmented lock is adopted, which is also more ingenious. Through syncronized + CAS operation, the performance is greatly improved. Next, let's talk about concurrent HashMap of Java 8!