What is Nginx?

Nginx is a lightweight, high-performance web server and reverse proxy for HTTP and HTTPS, and it can also proxy the SMTP, POP3 and IMAP mail protocols. It provides very efficient reverse proxying and load balancing. It can handle 20,000-30,000 concurrent connections, and official tests are reported to support up to 50,000. Many websites in China use Nginx, such as Sina, NetEase and Tencent.

What are the advantages of Nginx?

- Cross-platform and simple to configure.

- Non-blocking and highly concurrent: it handles 20,000-30,000 concurrent connections, and official tests are reported to support up to 50,000.

- Low memory consumption: 10 Nginx processes occupy only about 150 MB of memory.

- Low cost and open source.

- High stability and a low probability of downtime.

- Built-in health check: if a back-end server goes down, Nginx detects the failure, stops sending requests to that server, and resubmits the failed request to another node.

What are the application scenarios of Nginx?

- HTTP server. Nginx can provide HTTP services on its own and can act as a static web server.

- Virtual hosts. Multiple websites can be hosted on one server, such as the virtual hosts used by personal websites.

- Reverse proxy and load balancing. When traffic grows to the point where a single server cannot keep up, a cluster of servers is needed, and Nginx can act as the reverse proxy in front of it. It also spreads the load evenly across the servers, so that one server is not overloaded and down while another sits idle.

- Security management. For example, Nginx can be used to build an API gateway that intercepts requests to each interface service.

How does Nginx handle requests?

server { #The first server block starts; it represents an independent virtual host site

listen 80; #The port that provides the service. The default is 80

server_name localhost; #Domain name or host name of the service provided

location / { #The first location block starts

root html; #Site root directory, relative to the Nginx installation directory

index index.html index.htm; #Default home page files, separated by spaces

} #The first location block ends

} #The server block ends

- First, when Nginx starts, it parses the configuration file to get the ports and IP addresses to listen on, and then initializes the listening sockets in the master process (create a socket, set options such as address reuse, bind to the specified IP address and port, then listen).

- Then the master process calls fork (an existing process calls fork to create a new process, called a child process) to create multiple child processes.

- After that, the child processes compete to accept new connections. At this point a client can initiate a connection to Nginx. Once the client completes the TCP three-way handshake with Nginx, one child process accepts successfully, obtains the socket of the established connection, and creates Nginx's wrapper for the connection, the ngx\_connection\_t structure.

- Next, it sets the read and write event handlers and adds read and write events in order to exchange data with the client.

- Finally, Nginx or the client actively closes the connection, and the connection's life ends.

How does Nginx achieve high concurrency?

If a server assigns one process (or thread) to each request, the number of processes equals the number of concurrent requests. Obviously, many of those processes will be waiting. Waiting for what? Mostly for network transmission.

Nginx's asynchronous, non-blocking working mode takes advantage of this waiting time. When a request has to wait, the process is free to serve other requests. As a result, a few processes can handle a large number of concurrent requests.

How does the traditional model compare? Take the same four processes: if one process is responsible for one request, then when four requests come in at the same time, each process handles one of them until the session is closed. If a fifth request arrives during this period, it cannot be served in time because none of the four processes has finished its work. Therefore, such servers usually have a scheduling process that starts a new process whenever a new request comes in.

Think back: doesn't blocking I/O (BIO) have exactly this problem?

Nginx is different. Every time a request comes in, a worker process handles it, but not all the way through. It processes the request up to the point where blocking may occur, such as forwarding the request to the upstream (back-end) server and waiting for the response. The worker does not wait idly. After sending the request, it registers an event: "when upstream returns, tell me and I will continue." Then it is free again. If another request comes in, the worker can quickly handle it in the same way. Once the upstream server returns, the event is triggered, the worker picks the request up again, and processing continues.

That is why Nginx is based on an event model.

Because of the nature of a web server's work, most of the lifetime of each request is spent in network transmission, and not much time is actually spent on the server machine. This is the secret of handling high concurrency with only a few processes. In other words:

A web server is a network I/O intensive application, not a compute-intensive one.

Asynchronous, non-blocking I/O, the use of epoll, and optimization of many details are the technical cornerstones of Nginx.
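The event model described above is configured in the events block of nginx.conf. The following is a minimal sketch for Linux; the connection limit is an illustrative assumption, not a tuned recommendation.

```nginx
# Sketch only; values are assumptions for illustration.
events {
    use epoll;                  # event notification method on Linux
    worker_connections 10240;   # maximum simultaneous connections per worker (assumed value)
    multi_accept on;            # let a worker accept all pending connections at once
}
```

On Linux, Nginx normally selects the most efficient method by itself, so the use directive is usually optional.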

What is a forward proxy?

A forward proxy is a server located between the client and the origin server. To obtain content from the origin server, the client sends a request to the proxy and specifies the target (the origin server); the proxy then forwards the request to the origin server and returns the obtained content to the client.

A forward proxy is used by clients. In one sentence: a forward proxy acts on behalf of the client. Tools such as OpenVPN are an example.

What is a reverse proxy?

A reverse proxy is a proxy server that accepts connection requests from the Internet, forwards them to servers on the internal network, and returns the results obtained from those servers to the clients on the Internet that requested the connection. From the outside, the proxy server appears to be the server itself.

In one sentence: a reverse proxy acts on behalf of the server.
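As an illustration, a reverse proxy in Nginx can be sketched with proxy\_pass. The back-end address below is a hypothetical internal server, and the extra headers are a common convention rather than something this article prescribes.

```nginx
# Sketch only; the back-end address is a hypothetical example.
server {
    listen 80;
    server_name localhost;

    location / {
        # Forward every request to an internal server
        proxy_pass http://192.168.0.12:8080;

        # Pass the original host and client address on to the back end
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

The client only ever talks to Nginx on port 80; the internal address stays hidden.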

What are the advantages of a reverse proxy server?

A reverse proxy server can hide the existence and characteristics of the origin server. It acts as an intermediate layer between the Internet and the web server. This is good for security, especially when you use web hosting services.

What is the Nginx directory structure?

    [root@localhost ~]# tree /usr/local/nginx
    /usr/local/nginx
    ├── client_body_temp
    ├── conf                        #Directory of all Nginx configuration files
    │   ├── fastcgi.conf            #FastCGI-related configuration file
    │   ├── fastcgi.conf.default    #Original backup of fastcgi.conf
    │   ├── fastcgi_params          #FastCGI parameter file
    │   ├── fastcgi_params.default
    │   ├── koi-utf
    │   ├── koi-win
    │   ├── mime.types              #Media types
    │   ├── mime.types.default
    │   ├── nginx.conf              #Main Nginx configuration file
    │   ├── nginx.conf.default
    │   ├── scgi_params             #SCGI parameter file
    │   ├── scgi_params.default
    │   ├── uwsgi_params            #uwsgi parameter file
    │   ├── uwsgi_params.default
    │   └── win-utf
    ├── fastcgi_temp                #FastCGI temporary data directory
    ├── html                        #Default Nginx site directory
    │   ├── 50x.html                #Error page shown in place of a raw error, for example on a 502
    │   └── index.html              #Default home page file
    ├── logs                        #Nginx log directory
    │   ├── access.log              #Access log file
    │   ├── error.log               #Error log file
    │   └── nginx.pid               #pid file; after Nginx starts, the master process ID is written here
    ├── proxy_temp                  #Temporary directory
    ├── sbin                        #Nginx command directory
    │   └── nginx                   #Nginx startup command
    ├── scgi_temp                   #Temporary directory
    └── uwsgi_temp                  #Temporary directory

What attribute modules does the Nginx configuration file nginx.conf have?

worker_processes 1; #Number of worker processes

events { #Event block start

worker_connections 1024; #Maximum number of connections supported per worker process

} #End of event block

http { #HTTP block start

include mime.types; #Media type library files supported by Nginx

default_type application/octet-stream; #Default media type

sendfile on; #Turn on efficient transmission mode

keepalive_timeout 65; #Connection timeout

server { #The first Server block starts with an independent virtual host site

listen 80; #The port that provides services. The default is 80

server_name localhost; #Domain name host name of the service provided

location / { #The first location block starts

root html; #Site root directory, relative to the Nginx installation directory

index index.html index.htm; #Default home page files, separated by spaces

} #The first location block ends

error_page 500 502 503 504 /50x.html; #When one of these HTTP status codes occurs, respond to the client with 50x.html

location = /50x.html { #This location block serves 50x.html

root html; #Use html as the site directory

}

}

......

What is the difference between a cookie and a session?

In common:

Both store user information, in the form of key-value pairs (variable name and variable content).

Differences:

- cookie

  - Stored in the client browser

  - Each domain name has its own cookies; cookies cannot be accessed across domain names

  - Users can view or modify cookies

  - Set in the browser by the HTTP response message

  - Acts as the key (the browser uses it to open the lock)

- session

  - Stored on the server (file, database, redis)

  - Can store sensitive information

  - Acts as the lock

Why doesn't Nginx use multithreading?

Apache: it creates multiple processes or threads, and each process or thread is allocated CPU and memory (threads are much lighter than processes, so the worker MPM supports higher concurrency than prefork). High concurrency drains server resources.

Nginx: each worker uses a single thread to process requests asynchronously and without blocking, using epoll (the administrator can configure the number of worker processes started by the master process). CPU and memory are not allocated per request, which saves a lot of resources and avoids a lot of CPU context switching. That is why Nginx supports higher concurrency.

Differences between nginx and apache

Lightweight: it also serves as a web server, but occupies less memory and fewer resources than Apache.

Better under high concurrency: Nginx handles requests asynchronously and without blocking, while Apache blocks. Under high concurrency, Nginx maintains low resource consumption and high performance.

Highly modular design: writing modules is relatively simple.

The core difference is that Apache uses a synchronous multi-process model in which one connection corresponds to one process, while Nginx is asynchronous and one process can serve many connections.

What is the separation of dynamic resources and static resources?

Separating dynamic and static resources means dividing the resources of a dynamic website, according to certain rules, into those that rarely change and those that change frequently. Once they are separated, static resources can be cached according to their characteristics. This is the core idea of static processing for a website.

In short, it is the separation of dynamic files from static files.

Why do we have to separate dynamic and static resources?

In software development, some requests need back-end processing (such as .jsp and .do), and some do not (such as css, html, jpg and js). Files that do not need back-end processing are called static files; the others are dynamic files.

The back end therefore does not need to process static files. Some will ask: can't the back end simply ignore static files? It can, but the static requests still reach the back end, so the number of requests it receives increases significantly. When response speed matters, we should use dynamic and static separation: deploy the website's static resources (HTML, JavaScript, CSS, images and other files) separately from the back-end application, which speeds up user access to static content and reduces requests to the back-end application.

Here, we put static resources on Nginx and forward dynamic requests to a Tomcat server.

Of course, because CDN services such as Qiniu and Alibaba Cloud are now very mature, the mainstream approach is to cache static resources in a CDN to improve access speed.

Compared with a local Nginx, a CDN has more nodes across China, so users can be served from a nearby node. A CDN can also provide more bandwidth, whereas the bandwidth of our own application servers is limited.

What is CDN service?

CDN stands for content delivery network.

Its purpose is to publish website content to the network edge closest to users by adding a new layer to the existing Internet architecture, so that users can fetch content from a nearby node and access the website faster.

Because CDN services are now widespread, almost all companies use them.

How does Nginx do dynamic and static separation?

You only need to map a request path to a directory. The location path can also be matched with regular expressions, and root specifies the corresponding directory on disk. For example (all operations are on Linux):

location /image/ {

root /usr/local/static/;

autoindex on;

}

Steps:

#Create the directory

mkdir -p /usr/local/static/image

#Enter the directory

cd /usr/local/static/image

#Upload a photo, for example 1.jpg

#Reload nginx

sudo nginx -s reload

Open the browser and visit server\_name/image/1.jpg to access the static image.
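Putting the two halves together, dynamic and static separation could be sketched as follows. The file extensions, the cache lifetime and the Tomcat address 127.0.0.1:8080 are assumptions chosen for illustration.

```nginx
# Sketch only; extensions, paths and the Tomcat address are hypothetical.
server {
    listen 80;
    server_name localhost;

    # Static files are served directly from disk by Nginx
    location ~* \.(html|css|js|jpg|png|gif)$ {
        root /usr/local/static;
        expires 7d;              # let browsers cache static files for 7 days
    }

    # Everything else is treated as dynamic and forwarded to Tomcat
    location / {
        proxy_pass http://127.0.0.1:8080;
    }
}
```

Because regular expression locations are checked before the plain prefix location /, static requests never reach the back end.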

How to implement the algorithm of Nginx load balancing? What are the strategies?

To keep a server from being overwhelmed, load balancing is used to share the pressure. Several servers form a cluster. Users first access a forwarding server, which then distributes the requests to servers under less pressure.

There are five strategies for Nginx load balancing:

1. Round robin (default)

Requests are assigned to the back-end servers one by one in order. If a back-end server goes down, it is automatically removed from rotation.

upstream backserver {

server 192.168.0.12;

server 192.168.0.13;

}

2. weight

The larger the weight, the higher the probability that a server is selected. It is mainly used when the back-end servers have uneven performance, or to set different weights in a master-slave setup so that host resources are used rationally and effectively.

#The higher the weight, the greater the probability of being selected. In this example, 20% and 80% respectively.

upstream backserver {

server 192.168.0.12 weight=2;

server 192.168.0.13 weight=8;

}

3. ip\_hash (IP binding)

Each request is assigned according to the hash of the client IP, so that visitors from the same IP always reach the same back-end server. This effectively solves the session sharing problem of dynamic web pages.

upstream backserver {

ip_hash;

server 192.168.0.12:88;

server 192.168.0.13:80;

}

4. fair (third-party plug-in)

The upstream\_fair module must be installed.

Compared with weight and ip\_hash, fair is a more intelligent load balancing algorithm. It balances the load according to page size and loading time, giving priority to the server with the shortest response time.

#Requests are assigned to whichever server responds fastest.

upstream backserver {

server server1;

server server2;

fair;

}

5. url\_hash (third-party plug-in)

The Nginx hash package must be installed.

Requests are assigned according to the hash of the requested URL, so each URL is always directed to the same back-end server. This can further improve the efficiency of back-end cache servers.

upstream backserver {

server squid1:3128;

server squid2:3128;

hash $request_uri;

hash_method crc32;

}
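The upstream blocks above only define server groups; a server block still has to refer to the group by name. The sketch below shows that wiring, and it assumes a current Nginx version, where URL hashing is available through the built-in hash directive of ngx\_http\_upstream\_module instead of a third-party package. The server names are the hypothetical ones used above.

```nginx
# Sketch only; assumes a recent Nginx with the built-in hash directive.
upstream backserver {
    # Built-in URL hashing; "consistent" selects consistent hashing,
    # so adding or removing a server remaps only a few URLs
    hash $request_uri consistent;

    server squid1:3128;
    server squid2:3128;
}

server {
    listen 80;
    server_name localhost;

    location / {
        # Refer to the group by the name given in the upstream block
        proxy_pass http://backserver;
    }
}
```

Swapping the hash line for ip\_hash or least\_conn, or adding weight to the server lines, switches the strategy without touching the server block.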

How to solve the front-end cross domain problem with Nginx?

Use Nginx to forward requests: expose the cross-domain interface under the calling page's own domain, and have Nginx forward those requests to the real address.
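A minimal sketch of this idea follows. The /api/ prefix and the back-end address are hypothetical; only the forwarding pattern matters.

```nginx
# Sketch only; the path prefix and back-end address are hypothetical.
server {
    listen 80;
    server_name www.lijie.com;        # domain that serves the front-end pages

    # The front end calls /api/... on its own domain, so the browser
    # sees a same-origin request
    location /api/ {
        # Nginx forwards it to the real interface address; because of the
        # trailing slash, the /api/ prefix is replaced by /
        proxy_pass http://192.168.0.12:8080/;
    }
}
```

With this in place, a page on www.lijie.com requests /api/user and Nginx fetches /user from the back end on its behalf.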

How to configure the Nginx virtual host?

- 1. Domain-name-based virtual hosts, distinguished by domain name. Application: external websites.

- 2. Port-based virtual hosts, distinguished by port. Application: internal company websites and the management back end of external websites.

- 3. IP-based virtual hosts.

Domain-name-based virtual host configuration

The /data/www and /data/bbs directories need to be created, the domain names need to be mapped to the virtual machine's IP address in the local Windows hosts file, and an index.html file needs to be added to each site's directory.

#When the client visits www.lijie.com on port 80, serve the files in the data/www directory

server {

listen 80;

server_name www.lijie.com;

location / {

root data/www;

index index.html index.htm;

}

}

#When the client visits bbs.lijie.com on port 80, serve the files in the data/bbs directory

server {

listen 80;

server_name bbs.lijie.com;

location / {

root data/bbs;

index index.html index.htm;

}

}

Port-based virtual host

Virtual hosts are distinguished by port. The browser accesses them with the domain name or IP address followed by the port number.

#When the client visits 8080.lijie.com on port 8080, serve the files in the data/www directory

server {

listen 8080;

server_name 8080.lijie.com;

location / {

root data/www;

index index.html index.htm;

}

}

#When the client visits www.lijie.com on port 80, forward the request to the real server address 127.0.0.1:8080

server {

listen 80;

server_name www.lijie.com;

location / {

proxy_pass http://127.0.0.1:8080;

index index.html index.htm;

}

}
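The third type, the IP-based virtual host, has no example above. A minimal sketch, assuming the machine has two IP addresses configured (both addresses and directories here are hypothetical):

```nginx
# Sketch only; both IP addresses must actually be configured on the machine.
server {
    listen 192.168.0.12:80;      # requests arriving on the first address
    server_name _;               # placeholder name; the IP decides the match

    location / {
        root /data/www;
        index index.html index.htm;
    }
}

server {
    listen 192.168.0.13:80;      # requests arriving on the second address
    server_name _;

    location / {
        root /data/bbs;
        index index.html index.htm;
    }
}
```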

What is the role of location?

The location directive runs different handling according to the URI requested by the user: the requested URI is matched against each location, and if a match succeeds the corresponding operations are carried out. Can you describe the syntax of location?

Note: ~ represents the English letters you entered

Location matching examples

#Priority 1: exact match of the root path

location = / {

return 400;

}

#Priority 2: prefix match; URIs starting with /av are matched here first, case sensitive

location ^~ /av {

root /data/av/;

}

#Priority 3: case-sensitive regular expression match; matches paths containing /media

location ~ /media {

alias /data/static/;

}

#Priority 4: case-insensitive regular expression match; all requests ending in .jpg, .gif, .png, .js or .css go here

location ~* .*\.(jpg|gif|png|js|css)$ {

root /data/av/;

}

#Priority 7: universal match

location / {

return 403;

}

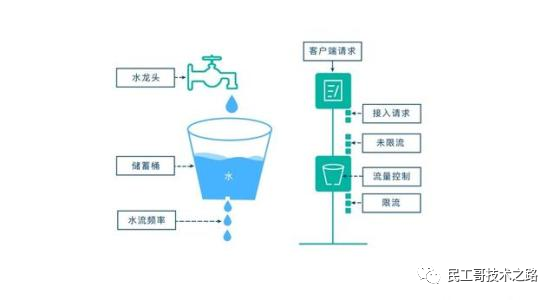

How does Nginx implement rate limiting?

Nginx rate limiting restricts the speed of user requests to prevent the server from being overwhelmed.

There are three types of rate limiting:

- Limiting the normal access frequency (normal traffic)

- Limiting the burst access frequency (burst traffic)

- Limiting the number of concurrent connections

Nginx rate limiting is based on the leaky bucket algorithm.

The three types are implemented as follows.

- 1. Limiting the normal access frequency (normal traffic):

This limits how many requests a user can send, that is, how often Nginx accepts a request from that user.

Nginx uses the ngx\_http\_limit\_req\_module module to limit the access frequency; the restriction is essentially based on the leaky bucket algorithm. In the nginx.conf configuration file, the limit\_req\_zone and limit\_req directives limit the request processing frequency for a single IP.

#Define the rate limiting dimension: one request per client IP per minute; excess requests are rejected

limit_req_zone $binary_remote_addr zone=one:10m rate=1r/m;

#Bind the rate limiting dimension

server {

location /seckill.html {

limit_req zone=one;

proxy_pass http://lj_seckill;

}

}

1r/s means one request per second, and 1r/m means one request per minute. Requests from the same IP that exceed this rate are rejected by Nginx.

- 2. Limiting the burst access frequency (burst traffic):

This also limits how many requests a user can send and how often Nginx accepts them, but it tolerates short bursts.

The configuration above limits the access frequency to a certain extent, but it has a problem: any burst that exceeds the rate is rejected outright, so burst traffic during a promotion cannot be handled. Nginx provides the burst parameter, combined with the nodelay parameter, to solve this; burst sets how many requests beyond the configured rate may still be processed. We can add burst and nodelay to the previous example:

#Define the rate limiting dimension: one request per client IP per minute; excess requests are rejected

limit_req_zone $binary_remote_addr zone=one:10m rate=1r/m;

#Bind the rate limiting dimension

server {

location /seckill.html {

limit_req zone=one burst=5 nodelay;

proxy_pass http://lj_seckill;

}

}

What does the extra burst=5 nodelay do? Nginx immediately processes up to five requests from a user beyond the configured rate. Requests beyond that burst are rejected, and burst capacity becomes available again only at the configured rate.

- 3. Limiting the number of concurrent connections

The ngx\_http\_limit\_conn\_module module in Nginx limits the number of concurrent connections. It is configured with the limit\_conn\_zone and limit\_conn directives. A simple example:

http {

limit_conn_zone $binary_remote_addr zone=myip:10m;

limit_conn_zone $server_name zone=myServerName:10m;

server {

location / {

limit_conn myip 10;

limit_conn myServerName 100;

rewrite / http://www.lijie.net permanent;

}

}

}

With this configuration, a single client IP may hold at most 10 concurrent connections, and the virtual server as a whole at most 100. A connection is counted only once the server has read its whole request header. As mentioned earlier, Nginx rate limiting is based on the leaky bucket algorithm. In practice, rate limiting is generally implemented with either the leaky bucket algorithm or the token bucket algorithm.
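Request-rate and connection limits can be combined in one place. The sketch below also changes the status code returned for rejected requests, which is 503 by default; the zone names, rates and the choice of 429 are assumptions made for illustration.

```nginx
# Sketch only; zone names and limits are illustrative assumptions.
http {
    limit_req_zone  $binary_remote_addr zone=req_per_ip:10m rate=10r/s;
    limit_conn_zone $binary_remote_addr zone=conn_per_ip:10m;

    server {
        listen 80;

        location / {
            limit_req  zone=req_per_ip burst=20 nodelay;   # rate limit with a burst allowance
            limit_conn conn_per_ip 10;                     # at most 10 connections per client IP

            # Answer rejected requests with 429 instead of the default 503
            limit_req_status  429;
            limit_conn_status 429;
        }
    }
}
```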

Leaky bucket algorithm

The idea of the leaky bucket algorithm is simple. Think of requests as water and the leaky bucket as the limit of the system's processing capacity. Water enters the bucket first and flows out at a fixed rate. When the outflow rate is lower than the inflow rate, the bucket eventually fills up because its capacity is limited, and any further incoming water overflows (the request is rejected). This is how the flow is restricted.

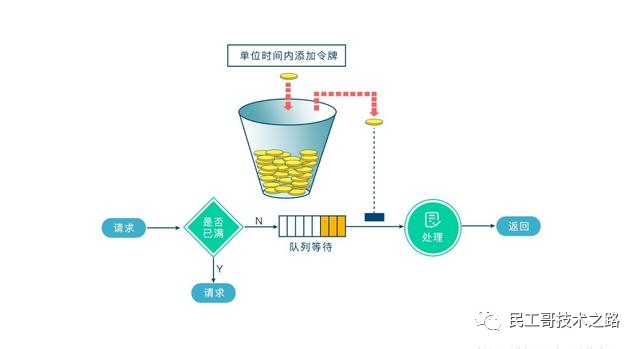

Token bucket algorithm

The principle of the token bucket algorithm is also relatively simple. It is like registering at a hospital: you can see a doctor only after you have taken a number.

The system maintains a token bucket and puts tokens into it at a constant rate. When a request comes in and wants to be processed, it must first obtain a token from the bucket. When the bucket has no tokens, the request is rejected. The token bucket algorithm limits requests by controlling the capacity of the bucket and the rate at which tokens are issued.

How to configure Nginx high availability?

When an upstream server (the server that actually handles the request) fails or does not respond in time, Nginx should pass the request straight to the next server to keep the service highly available.

Nginx configuration:

server {

listen 80;

server_name www.lijie.com;

location / {

###Specify the upstream server group used for load balancing

proxy_pass http://backServer;

###Timeout for establishing a connection between nginx and the upstream server (the server actually accessed), that is, the time to wait for a response to the initial handshake

proxy_connect_timeout 1s;

###Timeout for nginx sending a request to the upstream server

proxy_send_timeout 1s;

###Timeout for nginx reading a response from the upstream server

proxy_read_timeout 1s;

index index.html index.htm;

}

}
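The configuration above refers to a backServer group that is not shown. A possible definition, together with an explicit rule for when a request moves on to the next server, might look like this; the addresses, the backup server and the retry count are hypothetical.

```nginx
# Sketch only; addresses and values are hypothetical.
upstream backServer {
    server 192.168.0.12:8080;
    server 192.168.0.13:8080;
    server 192.168.0.14:8080 backup;   # used only when the servers above are unavailable
}

server {
    listen 80;
    server_name www.lijie.com;

    location / {
        proxy_pass http://backServer;
        proxy_connect_timeout 1s;

        # Conditions under which the request is passed to the next server
        proxy_next_upstream error timeout http_502 http_503 http_504;
        # Limit how many servers are tried for one request
        proxy_next_upstream_tries 2;
    }
}
```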

How does Nginx deny access from specific IP addresses?

#If the client IP address is 192.168.9.115, return 403

if ($remote_addr = 192.168.9.115) {

return 403;

}
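The same effect can be sketched without if, using the allow and deny directives of ngx\_http\_access\_module. The address is the one from the example above; placing the rules in location / is an assumption.

```nginx
# Sketch only; the location is chosen for illustration.
location / {
    deny  192.168.9.115;   # this client receives 403
    allow all;             # everyone else is let through
}
```

Rules are checked in order and the first match wins, so the deny line must come before allow all.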

How can Nginx be configured to refuse requests with an undefined server name?

Simply define a server that drops such requests.

Set the server name to an empty string, which matches requests without a Host header field, and return the special non-standard Nginx code 444 to close the connection.
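A minimal sketch of such a server block, assuming the site listens on port 80:

```nginx
# Sketch only; the listen port is an assumption.
server {
    listen 80;
    server_name "";    # matches requests that carry no Host header field
    return 444;        # non-standard Nginx code: close the connection without a response
}
```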

How to restrict browser access?

##Deny access from Google Chrome: return 500 if the User-Agent contains Chrome

if ($http_user_agent ~ Chrome) {

return 500;

}

What are the Rewrite global variables?

$remote_addr //Client IP address

$binary_remote_addr //Client IP address (binary)

$remote_port //Client port, e.g. 50472

$remote_user //User name authenticated by the Auth Basic module

$host //Host header field of the request, otherwise the server name, e.g. blog.sakmon.com

$request //Full request line, e.g. GET /?a=1&b=2 HTTP/1.1

$request_filename //Path of the file currently requested, built from root or alias plus the request URI, e.g. /2013/81.html

$status //Response status code of the request, e.g. 200

$body_bytes_sent //Number of body bytes sent in the response; accurate even if the connection is interrupted, e.g. 40

$content_length //Value of the "Content-Length" request header

$content_type //Value of the "Content-Type" request header

$http_referer //Referer address

$http_user_agent //Client user agent, e.g. Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/29.0.1547.76 Safari/537.36

$args //Same as $query_string: the parameters (GET) in the URL, e.g. a=1&b=2

$document_uri //Same as $uri: the current request URI without any parameters (see $args), e.g. /2013/81.html

$document_root //Value of the root path for the current request

$hostname //Host name of the machine, e.g. centos53.localdomain

$http_cookie //Client cookie information

$cookie_COOKIE //Value of the cookie named COOKIE

$is_args //"?" if the request has parameters ($args), otherwise an empty string ""

$limit_rate //Limits the connection rate; 0 means no limit

$query_string //Same as $args: the parameters (GET) in the URL, e.g. a=1&b=2

$request_body //Request body data, e.g. from a POST

$request_body_file //Name of the temporary file holding the client request body

$request_method //Method requested by the client, usually GET or POST, e.g. GET

$request_uri //Original URI including the request parameters, excluding the host name, e.g. /2013/81.html?a=1&b=2

$scheme //Request scheme (http or https), e.g. http

$uri //The current request URI without any parameters (see $args), e.g. /2013/81.html

$request_completion //"OK" if the request has completed; empty if it has not finished or is not the last one in the request chain

$server_protocol //Protocol used by the request, usually HTTP/1.0 or HTTP/1.1, e.g. HTTP/1.1

$server_addr //Server IP address; determining it may require a system call

$server_name //Server name, e.g. blog.sakmon.com

$server_port //Port of the server that accepted the request, e.g. 80

How does Nginx implement the health check of back-end services?

- Method 1: use nginx's own modules ngx_http_proxy_module and ngx_http_upstream_module to perform health checks on back-end nodes.

- Method 2 (recommended): use the nginx_upstream_check_module module to perform health checks on back-end nodes.

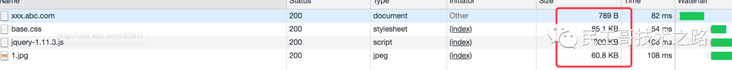

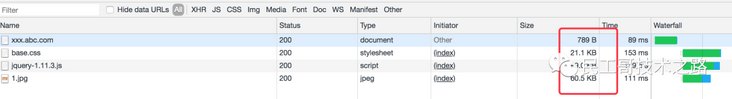
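For method 1, the built-in check is passive: Nginx marks a server as unavailable after real requests to it fail. A minimal sketch with the max\_fails and fail\_timeout parameters follows; the addresses and thresholds are illustrative assumptions.

```nginx
# Sketch only; addresses and thresholds are illustrative assumptions.
upstream backserver {
    # After 3 failed attempts within 30s, the server is considered
    # unavailable for the next 30s
    server 192.168.0.12:80 max_fails=3 fail_timeout=30s;
    server 192.168.0.13:80 max_fails=3 fail_timeout=30s;
}
```

Method 2 differs in that the third-party module probes the back ends actively instead of waiting for client requests to fail.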

How does Nginx enable compression?

After gzip compression is enabled in Nginx, static resources such as web pages, css and js become much smaller, which saves a lot of bandwidth, improves transmission efficiency and makes pages feel faster. It consumes some CPU, but the better user experience is worth it.

The configuration is as follows.

Put it in the http {...} block of nginx.conf.

http {

#Enable gzip

gzip on;

#Minimum response size for gzip compression; smaller responses are not compressed

gzip_min_length 1k;

#gzip compression level, 1-9

gzip_comp_level 2;

#The types of files to compress

gzip_types text/plain application/javascript application/x-javascript text/css application/xml text/javascript application/x-httpd-php image/jpeg image/gif image/png;

#Whether to add "Vary: Accept-Encoding" to the HTTP response header. Enabling it is recommended

gzip_vary on;

}

Save the configuration, restart nginx and refresh the page (force a refresh to bypass the cache) to see the effect. Taking Google Chrome as an example, press F12 and inspect the request: compare the file size before and after gzip is enabled, and check that the response headers indicate gzip compression (Content-Encoding: gzip).

Effect before and after gzip compression: jquery is 90 KB originally but only 30 KB after compression.

gzip is easy to use, but enabling it for the following types of resources is not recommended.

1. Images

Reason: images such as jpg and png are already compressed, so the size barely changes when gzip is applied and the effort is wasted. (Tip: try compressing a jpg image into a zip file and you will see that the size hardly changes. The zip and gzip algorithms differ, but it shows there is little value in compressing images.)

2. Large files

Reason: compressing them consumes a lot of CPU and may not bring an obvious benefit.

What is the function of ngx\_http\_upstream\_module?

ngx\_http\_upstream\_module is used to define groups of servers that can be referenced by the proxy\_pass, fastcgi\_pass, uwsgi\_pass, scgi\_pass and memcached\_pass directives.

What is the C10K problem?

The C10K problem refers to the difficulty of handling network sockets for a large number of clients (10,000) at the same time.

Does Nginx support compressing requests upstream?

You can use the Nginx gunzip module. It is a filter that decompresses responses carrying "Content-Encoding: gzip" for clients that do not support the gzip encoding method.
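A minimal sketch of how the gunzip module might be used in front of an upstream. It assumes Nginx was built with --with-http\_gunzip\_module and that an upstream group named backserver is defined elsewhere.

```nginx
# Sketch only; assumes --with-http_gunzip_module and an existing upstream "backserver".
location / {
    proxy_pass http://backserver;

    proxy_set_header Accept-Encoding gzip;   # ask the upstream for gzip-compressed responses
    gunzip on;                               # decompress for clients that do not accept gzip
}
```

Clients that do accept gzip receive the compressed response unchanged.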

How do I get the current time in Nginx?

To get the current time in Nginx, you must use the SSI module and its $date\_gmt and $date\_local variables.

proxy_set_header THE-TIME $date_gmt;

What is the purpose of the -s parameter of the Nginx server?

The -s parameter of the nginx executable sends a signal to the running master process: stop, quit, reopen or reload.

How to add a module on the Nginx server?

Nginx modules must be selected at compile time, because Nginx does not support selecting modules at run time.

How to set the number of worker processes in production?

On a machine with multiple CPU cores, multiple workers can be configured; the number of worker processes is usually set equal to the number of CPU cores. If more worker processes than cores are started, the operating system has to schedule among them, which reduces performance. With a single CPU core, start only one worker process.
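Instead of hard-coding the core count, the worker settings can be sketched with the auto value, which assumes a reasonably recent Nginx version.

```nginx
# Sketch only; "auto" requires a reasonably recent Nginx version.
worker_processes auto;        # start one worker per available CPU core
worker_cpu_affinity auto;     # bind each worker to its own core (Linux and FreeBSD only)
```

Both directives belong in the main context of nginx.conf, outside the events and http blocks.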

Nginx status codes

499: the server took too long to process the request, and the client closed the connection.

502:

- (1) Check whether the FastCGI process has started

- (2) Check whether there are too few FastCGI worker processes

- (3) FastCGI takes too long to execute

fastcgi_connect_timeout 300; fastcgi_send_timeout 300; fastcgi_read_timeout 300;

- (4) The FastCGI buffer is too small. Like Apache, Nginx has a front-end buffer limit, and the buffer parameters can be adjusted

fastcgi_buffer_size 32k; fastcgi_buffers 8 32k;

- (5) The proxy buffer is too small. If you use proxying, adjust it

proxy_buffer_size 16k; proxy_buffers 4 16k;

- (6) The PHP script takes too long to execute

In the php-fpm configuration file, change the 0s timeout value to a specific time.