Preface

This article does not cover the theory again. If you want the background, please see the previous article. Here we focus on a single OpenCV function: we use it to obtain the extrinsic parameters, and from those we compute our final results.

OpenCV: solvePnP

Reference: https://www.jianshu.com/p/b97406d8833c

Introduction: if the 3D structure of the scene is known, the absolute pose of the camera coordinate system relative to the world coordinate system (which represents the 3D scene structure) can be solved from the coordinates of several control points in the 3D scene and their perspective projections in the image. The pose consists of the absolute translation vector t and the rotation matrix R. This class of methods is collectively known as Perspective-n-Point (PnP) pose estimation.
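The relationship being solved can be written with the standard pinhole projection model. The notation below is an assumption made for illustration, since the article does not define symbols: (X_w, Y_w, Z_w) is a control point in the world coordinate system, (u, v) is its projection in the image, K is the camera's intrinsic matrix, and s is a scale factor.

```latex
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \, [\, R \mid t \,]
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}
```

Given several such correspondences and a known K, the PnP problem is to recover R and t.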

bool solvePnP(InputArray objectPoints, InputArray imagePoints, InputArray cameraMatrix, InputArray distCoeffs, OutputArray rvec, OutputArray tvec, bool useExtrinsicGuess = false, int flags = SOLVEPNP_ITERATIVE)

Next, let's look at these parameters:

- objectPoints - coordinates of the control points in the world coordinate system. A std::vector of Point3f can be used here

- imagePoints - coordinates of the corresponding control points in the image. A std::vector of Point2f can be used here

- cameraMatrix - the camera's intrinsic matrix

- distCoeffs - the camera's distortion coefficients

- rvec - the output rotation vector. Together with tvec, it takes points from the world coordinate system to the camera coordinate system

- tvec - the output translation vector. Together with rvec, it takes points from the world coordinate system to the camera coordinate system

- useExtrinsicGuess - if true, the values passed in rvec and tvec are used as the initial estimate by the iterative method

- flags - the solving method. The iterative method, SOLVEPNP_ITERATIVE (CV_ITERATIVE in OpenCV 2.x), is the default
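Because rvec is a rotation vector (axis times angle) rather than a matrix, it usually has to be converted before it can be applied to points. OpenCV provides `cv::Rodrigues` for this; the block below is only a dependency-free sketch of the same formula, and the names `Vec3`, `Mat3`, `rotation_vector_to_matrix`, and `world_to_camera` are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Convert a rotation vector (unit axis * angle in radians) to a 3x3 rotation
// matrix with Rodrigues' formula: R = I + sin(t) * K + (1 - cos(t)) * K^2,
// where K is the skew-symmetric matrix of the unit axis.
Mat3 rotation_vector_to_matrix(const Vec3& rvec)
{
    const double theta = std::sqrt(rvec[0] * rvec[0] + rvec[1] * rvec[1] + rvec[2] * rvec[2]);
    Mat3 R = {{{1.0, 0.0, 0.0}, {0.0, 1.0, 0.0}, {0.0, 0.0, 1.0}}};
    if (theta < 1e-12) {
        return R;  // effectively no rotation
    }
    const double kx = rvec[0] / theta, ky = rvec[1] / theta, kz = rvec[2] / theta;
    const double c = std::cos(theta), s = std::sin(theta), v = 1.0 - c;
    R[0] = {c + kx * kx * v,      kx * ky * v - kz * s, kx * kz * v + ky * s};
    R[1] = {ky * kx * v + kz * s, c + ky * ky * v,      ky * kz * v - kx * s};
    R[2] = {kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v};
    return R;
}

// Map a world point into the camera frame: X_cam = R * X_world + t.
Vec3 world_to_camera(const Mat3& R, const Vec3& t, const Vec3& p)
{
    Vec3 out{};
    for (std::size_t i = 0; i < 3; ++i) {
        out[i] = R[i][0] * p[0] + R[i][1] * p[1] + R[i][2] * p[2] + t[i];
    }
    return out;
}
```

For the ranging and angle computation later in this article only tvec is needed, so this conversion matters mainly when the orientation of the object is also of interest.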

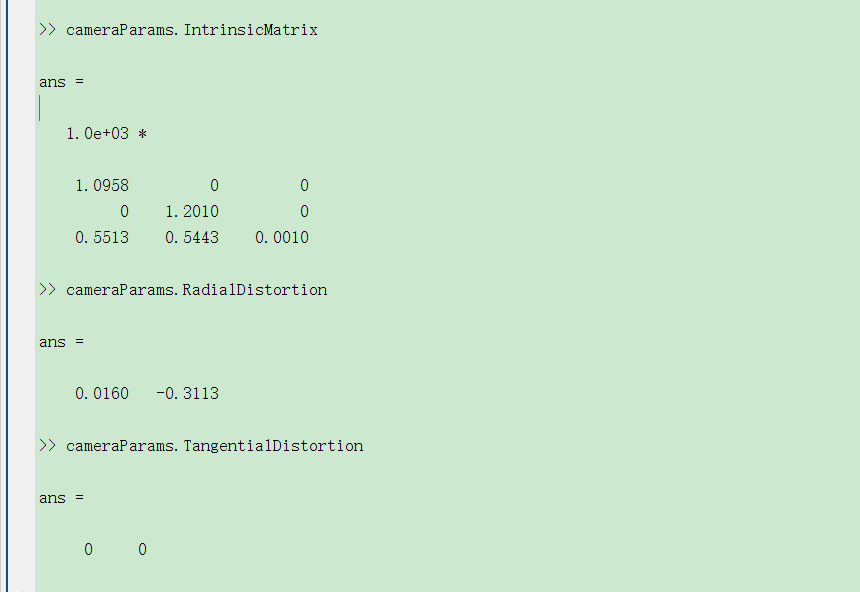

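A note on the second parameter: imagePoints must be expressed in the same units as cameraMatrix. With an intrinsic matrix in pixel units, which is what OpenCV calibration produces, the image points are passed as pixel coordinates; points given in any other image coordinate system must be converted first. See the previous article for the conversion. You should also have obtained the camera's intrinsic matrix while working through the previous article, because it is required here.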
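For reference, an intrinsic matrix in pixel units has the general pinhole form below, where f_x and f_y are the focal lengths in pixels and (c_x, c_y) is the principal point. These symbols are standard notation rather than something this article defines, and (X_c, Y_c, Z_c) denotes a point in the camera coordinate system with lens distortion ignored.

```latex
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix},
\qquad
u = f_x \frac{X_c}{Z_c} + c_x, \quad
v = f_y \frac{Y_c}{Z_c} + c_y
```

OpenCV stores matrix data row by row, so in the sample XML file in the next section the nine values read as f_x = 1096.8, c_x = 551.3, f_y = 1201.0, c_y = 544.3.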

Writing the code

No matter how clear the theory is, it still has to be implemented in code.

Load camera parameters

Here we first create an XML file, which can then be read with the OpenCV library:

<?xml version="1.0"?>

<opencv_storage>

<Y_DISTANCE_BETWEEN_GUN_AND_CAM>0.</Y_DISTANCE_BETWEEN_GUN_AND_CAM>

<CAMERA_MATRIX_1 type_id="opencv-matrix">

<rows>3</rows>

<cols>3</cols>

<dt>d</dt>

<data>

1096.8 0. 551.3

0. 1201.0 544.3

0. 0. 1.</data></CAMERA_MATRIX_1>

<DISTORTION_COEFF_1 type_id="opencv-matrix">

<rows>4</rows>

<cols>1</cols>

<dt>d</dt>

<data>

0.0160 -3113

0. 0.</data></DISTORTION_COEFF_1>

</opencv_storage>

Remember to replace these values with the parameters you obtained from your own calibration.

Define the real size of the object

This step is important: by taking the center of the object as the origin of the world coordinate system, we can write down the coordinates of its corners from its physical size and then solve for the extrinsic parameters, which give us the distance and pose. (This will be clear if you have followed the theory.)

Here I use an armor plate, but it has not arrived yet, so I set its size to 0 for now.

//Distance

double my_distance = 0;

//Size of the armor plate

double small_armor_height = 0;

double small_armor_width = 0;

double big_armor_height = 0;

double big_armor_width = 0;

Once we have the real size of the object, we write its corner coordinates in the world coordinate system. The object is planar, so the corners lie in the z = 0 plane with the center of the object at the origin.

/*******************************************************************

Function name: void AngleSolver::set_object_point(double height,double width)

Purpose: external interface that receives the size of the object

Details: the size itself is set in set_size

Input: height and width of the object

Output: none; the four corner points in the world coordinate system are stored in object_point

********************************************************************/

void AngleSolver::set_object_point(double height,double width)

{

double half_x = width / 2.0;

double half_y = height / 2.0;

object_point.push_back(Point3f(-half_x, half_y, 0)); //Upper left

object_point.push_back(Point3f(half_x, half_y, 0)); //Upper right

object_point.push_back(Point3f(half_x, -half_y, 0)); //lower right

object_point.push_back(Point3f(-half_x, -half_y, 0)); //Lower left

}
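The same corner layout can be sketched without OpenCV, as below. The types and the function name (`Point3`, `make_object_points`) are hypothetical stand-ins chosen so the block has no dependencies. Unlike the member function above, which appends to object_point on every call, this version returns a fresh vector each time, so points cannot accumulate if the size is set more than once.

```cpp
#include <vector>

// Hypothetical stand-in for cv::Point3f so the sketch has no dependencies.
struct Point3 {
    float x, y, z;
};

// Corners of a width x height rectangle centered at the world origin, in the
// z = 0 plane, ordered: upper left, upper right, lower right, lower left.
std::vector<Point3> make_object_points(float width, float height)
{
    const float half_x = width / 2.0f;
    const float half_y = height / 2.0f;
    return {
        {-half_x,  half_y, 0.0f},  // upper left
        { half_x,  half_y, 0.0f},  // upper right
        { half_x, -half_y, 0.0f},  // lower right
        {-half_x, -half_y, 0.0f},  // lower left
    };
}
```

The units of width and height determine the units of the translation vector that solvePnP returns, so a size given in millimeters yields a distance in millimeters.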

Place image coordinates

First, detect the feature points and store them in a container. Here I use an armor plate as an example:

LeftLight_rect = left_light.boundingRect();

RightLight_rect = right_light.boundingRect();

//Compute the 4 vertices and put them in the container, in the same order as the object points

//Upper left: top midpoint of the left light bar (2.0 avoids integer truncation)

double p1_x = LeftLight_rect.tl().x + LeftLight_rect.width / 2.0;

double p1_y = LeftLight_rect.tl().y;

armor_point.push_back(Point2f(p1_x, p1_y));

//Upper right: top midpoint of the right light bar

double p2_x = RightLight_rect.tl().x + RightLight_rect.width / 2.0;

double p2_y = RightLight_rect.tl().y;

armor_point.push_back(Point2f(p2_x, p2_y));

//Lower right: bottom midpoint of the right light bar

double p3_x = RightLight_rect.br().x - RightLight_rect.width / 2.0;

double p3_y = RightLight_rect.br().y;

armor_point.push_back(Point2f(p3_x, p3_y));

//Lower left: bottom midpoint of the left light bar

double p4_x = LeftLight_rect.br().x - LeftLight_rect.width / 2.0;

double p4_y = LeftLight_rect.br().y;

armor_point.push_back(Point2f(p4_x, p4_y));
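Two details matter in this step: the image points must be listed in the same order as the object points, and if the bounding rectangles are integer `cv::Rect` values, dividing the width by the integer 2 truncates. The following dependency-free sketch shows both; `Rect`, `Point2`, and `make_image_points` are hypothetical stand-ins for the OpenCV types and the project's own code.

```cpp
#include <vector>

// Hypothetical stand-ins for cv::Rect and cv::Point2f.
struct Rect {
    int x, y, width, height;  // (x, y) is the top-left corner
};

struct Point2 {
    float x, y;
};

// Image points taken from the two light bars, in the same order as the object
// points: upper left, upper right, lower right, lower left.
std::vector<Point2> make_image_points(const Rect& left, const Rect& right)
{
    // Horizontal center of each bar; 2.0f keeps the half-pixel.
    const float left_cx = left.x + left.width / 2.0f;
    const float right_cx = right.x + right.width / 2.0f;
    return {
        {left_cx,  static_cast<float>(left.y)},                   // upper left
        {right_cx, static_cast<float>(right.y)},                  // upper right
        {right_cx, static_cast<float>(right.y + right.height)},   // lower right
        {left_cx,  static_cast<float>(left.y + left.height)},     // lower left
    };
}
```

If the order of the two lists differs, solvePnP still returns a result, but the pose it reports corresponds to a mirrored or rotated plate.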

Ranging and angle solving

The PnP algorithm gives us the translation vector, whose three components are the coordinates of the object's center along the camera's x, y, and z axes. We use these coordinates to solve for the distance and the angles:

/*******************************************************************

Function name: void AngleSolver::solve_angle()

Purpose: solve the angles from the class members and the world coordinates. The distance is computed first, then the yaw and pitch angles

Details: later on, the offset between the gun and the camera must be added or subtracted depending on the mounting, and the influence of gravity should also be taken into account

Input: none

Return: none

********************************************************************/

void AngleSolver::solve_angle()

{

//Rotation vector (output)

Mat _rvec;

//Translation vector (output)

Mat tVec;

//Solve for the extrinsic parameters

solvePnP(object_point, targetContour, CAMERA_MATRIX, DISTORTION_COEFF, _rvec, tVec, false, SOLVEPNP_ITERATIVE);

//P4P solver: use the extrinsic parameters to compute what we need

//Compute the distance from the x, y, and z components of the translation vector

tVec.at<double>(1, 0) -= GUN_CAM_DISTANCE_Y;

double x_pos = tVec.at<double>(0, 0);

double y_pos = tVec.at<double>(1, 0);

double z_pos = tVec.at<double>(2, 0);

//Find distance

distance = sqrt(x_pos * x_pos + y_pos * y_pos + z_pos * z_pos);

//Tangents of the angles, from the projections of the translation vector

double tan_pitch = y_pos / z_pos; //alternative denominator: sqrt(x_pos * x_pos + z_pos * z_pos)

double tan_yaw = x_pos / z_pos;

//Convert radians to degrees

x_pitch = -atan(tan_pitch) * 180 / CV_PI;

y_yaw = atan(tan_yaw) * 180 / CV_PI;

}
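The arithmetic of this last step can be isolated in a small function that needs no OpenCV, as sketched below. It assumes the usual OpenCV camera frame (x to the right, y down, z forward); `AimSolution` and `aim_from_translation` are hypothetical names. It also swaps `atan` of a quotient for `std::atan2`, which gives the same angle when z is positive and avoids a division by zero.

```cpp
#include <cmath>

// Hypothetical result type; both angles are in degrees.
struct AimSolution {
    double distance;
    double pitch_deg;
    double yaw_deg;
};

// tx, ty, tz: target center in the camera frame (x right, y down, z forward),
// i.e. the components of the translation vector returned by solvePnP,
// after any gun-to-camera offset has been applied.
AimSolution aim_from_translation(double tx, double ty, double tz)
{
    const double rad_to_deg = 180.0 / std::acos(-1.0);
    AimSolution s;
    s.distance = std::sqrt(tx * tx + ty * ty + tz * tz);
    s.pitch_deg = -std::atan2(ty, tz) * rad_to_deg;  // y points down, so negate
    s.yaw_deg = std::atan2(tx, tz) * rad_to_deg;
    return s;
}
```

For example, a target one unit to the right and one unit ahead of the camera gives a yaw of 45 degrees, while a target straight ahead gives zero for both angles.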