In a penetration test, information gathering is the key stage: the more information you collect, the larger the attack surface you have to work with. This post skips the basic information-gathering steps and only discusses how to widen the scope of information gathering through JS (JavaScript) files. These are just some of the methods and tools I use; if you know others, please add them in the comments.

Domain name collection

Domain name collection covers both root domains and subdomains. Early in a penetration test, if you only have one root domain, you can find more subdomains by first collecting other root domains. There are many ways to find other root domains. From the JS perspective, JS files often call interfaces, and many of those interfaces are hosted on other domains. So by finding the other domains referenced in JS, then collecting and analyzing the JS files on those domains in turn, you can gather many root domains and subdomains. Doing this by hand is tedious, but experienced researchers have written tools that automate it in one click; they are introduced below.
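As a rough illustration of the manual version of this step, the Python sketch below pulls host names out of URLs referenced in a JS file and groups them by root domain. It is a minimal sketch under stated assumptions: it only looks at hosts that follow http://, https:// or a protocol-relative //, and the hypothetical helper naive_root simply keeps the last two labels, which is wrong for multi-label suffixes such as .com.cn (a real tool would consult the Public Suffix List).

```python
from __future__ import annotations

import re
from collections import defaultdict

# Host names that follow "http://", "https://" or a protocol-relative "//".
# Simplified on purpose: ports, IP addresses and IDN hosts are ignored.
URL_HOST_RE = re.compile(r"(?:https?:)?//((?:[a-zA-Z0-9-]+\.)+[a-zA-Z]{2,63})")


def naive_root(host: str) -> str:
    """Return the last two labels of host (api.example.com -> example.com).

    Assumption: multi-label public suffixes such as .com.cn are not handled.
    """
    return ".".join(host.lower().split(".")[-2:])


def collect_domains(js_source: str) -> dict[str, set[str]]:
    """Group every host referenced in js_source by its (naive) root domain."""
    grouped: dict[str, set[str]] = defaultdict(set)
    for match in URL_HOST_RE.finditer(js_source):
        host = match.group(1).lower()
        grouped[naive_root(host)].add(host)
    return dict(grouped)


if __name__ == "__main__":
    sample = '''
    var api = "https://api.example.com/v1/user";
    $.getScript("//static.example-cdn.net/app.js");
    fetch("http://pay.example.com/order");
    '''
    for root, hosts in sorted(collect_domains(sample).items()):
        print(root, sorted(hosts))
```

Each new root domain found this way is a candidate for the next round: fetch its pages, collect its JS, and run the extraction again.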

In addition, many root domains can be found through the ICP filing records listed on Tianyancha.

Sensitive information

API information

JS files usually contain the APIs used by various pages. If the back end does not verify permissions on these APIs, this can lead to unauthorized access or privilege escalation. For example, if JS code reveals APIs for back-office functions without requiring a login, you can call those APIs to test for vulnerabilities, especially APIs that query information. In many cases, after finding the back office, you can obtain a low-privilege account by various means, log in, and then audit the JS code, which often yields unexpected results. For instance, the JS sometimes contains super-administrator APIs, and calling them directly may give you access to super-administrator functions.
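To triage the APIs found in a JS file, a sketch like the following lists quoted string literals that look like absolute paths and flags those containing words typical of back-office functions. The keyword list PRIVILEGED_HINTS and both function names are hypothetical, and the regex also picks up static files, so the output is only a starting list for manual testing.

```python
import re

# A quoted string literal ("...", '...' or `...`) whose content starts with "/".
# The backreference \1 makes the closing quote match the opening one.
PATH_LITERAL_RE = re.compile(r"""(["'`])(/[A-Za-z0-9_\-./]+(?:\?[^"'`\s]*)?)\1""")

# Hypothetical hint words that often appear in back-office API paths.
PRIVILEGED_HINTS = ("admin", "manage", "super", "system", "config")


def extract_api_paths(js_source):
    """Return every distinct path literal in js_source, in first-seen order."""
    return list(dict.fromkeys(m.group(2) for m in PATH_LITERAL_RE.finditer(js_source)))


def flag_privileged(paths):
    """Keep only the paths that contain one of PRIVILEGED_HINTS."""
    return [p for p in paths if any(hint in p.lower() for hint in PRIVILEGED_HINTS)]


if __name__ == "__main__":
    sample = 'axios.get("/api/user/info"); $.post("/admin/user/delete", data);'
    paths = extract_api_paths(sample)
    print(paths)
    print(flag_privileged(paths))
```

Flagged paths are the ones worth replaying first, both without a session and with the low-privilege account.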

Password and other sensitive information

Regular expressions can be used to pull password information out of JS files. Programmers often leave comments in the source code to make maintenance and debugging easier. For example, to simplify debugging, a developer may write an account and password directly into the JavaScript code and, once debugging is done, merely comment that line out instead of deleting it. Similarly, when a system changes and new interfaces are introduced, developers may comment out the old interface calls and switch to the new ones, while the server never actually disables the old interfaces.
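Because such leftovers often sit in comments, one simple approach, sketched below as an assumption rather than how any particular tool works, is to pull out the comments and keep those that mention passwords, tokens, API paths or URLs. Only whole-line // comments and /* */ blocks are handled; the keyword regex is hypothetical, and a comment marker inside a string literal can still confuse it.

```python
import re

# /* ... */ blocks, possibly spanning several lines.
BLOCK_COMMENT_RE = re.compile(r"/\*.*?\*/", re.DOTALL)
# Whole-line // comments only: trailing comments after code are skipped so that
# the "//" inside "http://..." string literals is not mistaken for a comment.
LINE_COMMENT_RE = re.compile(r"^[ \t]*//.*$", re.MULTILINE)
# Hypothetical keywords that make a comment worth reading.
INTERESTING_RE = re.compile(r"(?i)pass(?:word|wd)?|pwd|token|secret|/api/|https?://")


def interesting_comments(js_source):
    """Return comments that mention credentials, API paths or URLs, in source order."""
    found = [(m.start(), m.group(0)) for m in BLOCK_COMMENT_RE.finditer(js_source)]
    found += [(m.start(), m.group(0).strip()) for m in LINE_COMMENT_RE.finditer(js_source)]
    found.sort()  # merge both kinds of comment back into source order
    return [text for _, text in found if INTERESTING_RE.search(text)]
```

Commented-out API paths found this way are worth requesting directly, since the server may still serve the old interface.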

AJAX Request

Ajax requests are usually triggered by various events, and these events often fire only when certain conditions are met. However, even when the trigger conditions are not met, you can often still get a response from the server by constructing the Ajax request packet yourself and sending it directly.

For example, a password-change flow may first verify the user's mobile phone number and only then allow the password to be changed. If the JS reveals the Ajax request that changes the password, you can try constructing that request directly without going through the verification step.
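To find candidate Ajax requests like this, the sketch below lists jQuery $.ajax, $.get and $.post calls whose URL is a string literal, together with their HTTP method. It assumes the target uses jQuery and is purely regex-based, so calls with URLs built at run time, or with unusual nesting inside the options object, will be missed; find_ajax_requests is a hypothetical name.

```python
import re

# $.ajax({...}): the options object is taken up to the first "}" that is followed
# (after optional whitespace) by ")", which copes with simple nested objects such as data: {...}.
AJAX_CALL_RE = re.compile(r"\$\.ajax\(\s*(\{.*?\})\s*\)", re.DOTALL)
# $.get("url", ...) / $.post("url", ...) with a literal first argument.
SHORTHAND_RE = re.compile(r"\$\.(get|post)\(\s*([\"'])(.*?)\2")
URL_OPTION_RE = re.compile(r"\burl\s*:\s*([\"'])(.*?)\1")
METHOD_OPTION_RE = re.compile(r"\b(?:type|method)\s*:\s*([\"'])(\w+)\1")


def find_ajax_requests(js_source):
    """Return (method, url) pairs for jQuery Ajax calls with literal URLs, in source order."""
    found = []
    for m in AJAX_CALL_RE.finditer(js_source):
        options = m.group(1)
        url = URL_OPTION_RE.search(options)
        if url is None:
            continue  # URL is built at run time; read this call by hand
        method = METHOD_OPTION_RE.search(options)
        # $.ajax sends GET when neither type nor method is given.
        found.append((m.start(), method.group(2).upper() if method else "GET", url.group(2)))
    for m in SHORTHAND_RE.finditer(js_source):
        found.append((m.start(), m.group(1).upper(), m.group(3)))
    found.sort()
    return [(method, url) for _, method, url in found]
```

In the password example, the second step of the flow would show up in this list next to the first, which is what makes it possible to try it on its own.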

Dangerous functions in JS

Dangerous functions fall into five categories: those that execute JavaScript directly, load URLs, execute HTML, create elements, and those that allow partially controllable execution.

Execute JavaScript directly

These functions execute their input directly as JavaScript code, for example:

    eval(payload)
    setTimeout(payload, 100)
    setInterval(payload, 100)
    Function(payload)()
    <script>payload</script>
    <img src=x onerror=payload>

Load URL

These functions execute JavaScript by loading a javascript: URL, so the effect is essentially the same as executing JavaScript directly.

    location = 'javascript:alert(/xss/)'
    location.href = 'javascript:alert(/xss/)'
    location.assign('javascript:alert(/xss/)')
    location.replace('javascript:alert(/xss/)')

Execute HTML

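These functions insert their input into the page as HTML, which can lead to script execution under certain circumstances, for example through event-handler attributes such as onerror (script elements inserted via innerHTML are not executed).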

    xx.innerHTML = payload
    xx.outerHTML = payload
    document.write(payload)
    document.writeln(payload)

Create element

Most of these calls create a DOM element or insert one into the page. Problems arise when the src of a script element, or the way an element is constructed, can be controlled by the attacker.

    scriptElement.src
    domElement.appendChild
    domElement.insertBefore
    domElement.replaceChild

Partially controllable execution

These calls involve some dynamic components and offer only limited control, but they can be valuable in some cases, so the tool monitors them as well.

    (new Array()).reduce(func)
    (new Array()).reduceRight(func)
    (new Array()).map(func)
    (new Array()).filter(func)

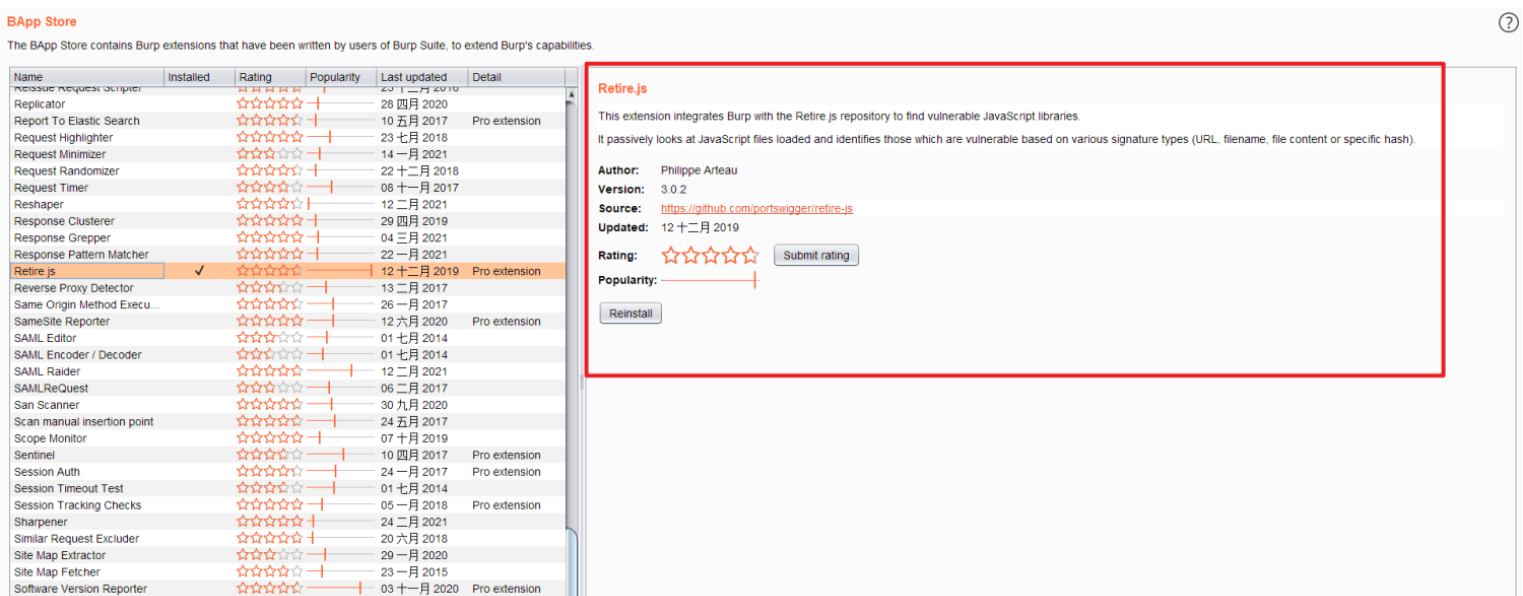
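To locate these sinks in a downloaded JS file before reading it by hand, a rough line-based scan like the one below can help. The patterns are simplified assumptions covering the first four categories above (the partially controllable array callbacks are left out because they match almost everything), minified files should be beautified first since the scan reports line numbers, and a hit only means a line deserves a closer look, not that it is exploitable.

```python
import re

# Simplified, hypothetical sink patterns for the first four categories above.
# (?!=) stops comparisons such as "location == x" from matching an assignment.
SINK_PATTERNS = {
    "execute JavaScript": re.compile(r"\beval\s*\(|\bset(?:Timeout|Interval)\s*\(|\bFunction\s*\("),
    "load URL": re.compile(r"\blocation(?:\.href)?\s*=(?!=)|\blocation\.(?:assign|replace)\s*\("),
    "execute HTML": re.compile(r"\.(?:inner|outer)HTML\s*=(?!=)|\bdocument\.write(?:ln)?\s*\("),
    "create element": re.compile(r"\.src\s*=(?!=)|\.(?:appendChild|insertBefore|replaceChild)\s*\("),
}


def find_sinks(js_source):
    """Return (line number, category) for every line that matches a sink pattern."""
    hits = []
    for lineno, line in enumerate(js_source.splitlines(), start=1):
        for category, pattern in SINK_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, category))
    return hits
```

The next step is to trace each hit backwards and check whether its argument can come from a URL parameter, location.hash, postMessage data or a similar source.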

Vulnerable framework

Many versions of jQuery 1.x, 2.x and 3.x have known vulnerabilities, including cross-site scripting, prototype pollution and DoS. Popular jQuery modules such as jQuery Mobile, jQuery File Upload and the jQuery Colorbox library also have cross-site scripting or arbitrary code execution vulnerabilities. Retire.js is a tool that identifies outdated JavaScript frameworks and libraries used by an application, and it can also be installed as a Burp Suite plug-in so that it checks pages while you test.
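Retire.js remains the proper tool here; as a quick illustration of the idea, the sketch below reads the version from a jQuery banner comment and compares it with the versions that fixed three well-known jQuery advisories. The advisory list is deliberately short and ignores the lower bounds of the affected ranges, so treat the output as a hint, not a verdict.

```python
import re

# Banner at the top of jQuery builds, e.g. "/*! jQuery v3.4.1 | (c) ..." (minified)
# or " * jQuery JavaScript Library v3.4.1" (unminified).
BANNER_RE = re.compile(r"jQuery (?:JavaScript Library )?v(\d+)\.(\d+)\.(\d+)")

# (first fixed version, advisory): versions below the first field are affected.
KNOWN_ISSUES = [
    ((3, 0, 0), "CVE-2015-9251: XSS via cross-domain Ajax responses"),
    ((3, 4, 0), "CVE-2019-11358: prototype pollution in jQuery.extend"),
    ((3, 5, 0), "CVE-2020-11022/CVE-2020-11023: XSS when passing HTML to DOM manipulation methods"),
]


def jquery_version(js_source):
    """Return the jQuery version as a tuple of ints, or None if no banner is found."""
    m = BANNER_RE.search(js_source)
    return tuple(int(part) for part in m.groups()) if m else None


def known_issues(version):
    """List the advisories from KNOWN_ISSUES whose fix is newer than version."""
    return [advisory for fixed_in, advisory in KNOWN_ISSUES if version < fixed_in]
```

Tuples compare element by element, so (1, 12, 4) < (3, 0, 0) holds without any extra version-parsing logic.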

Collect JS files

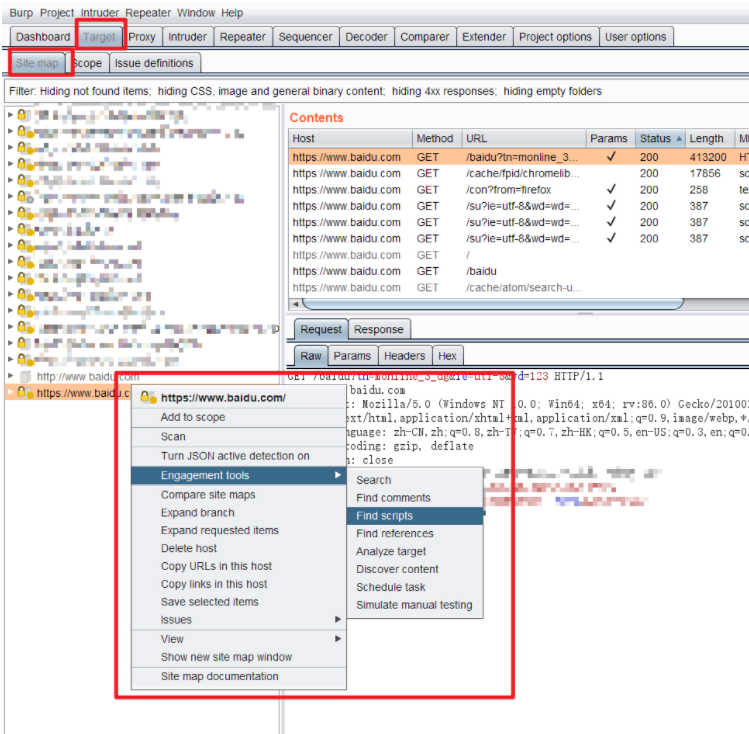

Collecting JS files using Burp Suite

Burp Suite can be used to collect a website's JS files.

Then export the results to get the JS files collected from the current page.
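Outside Burp Suite, the script references in a single page can also be listed with Python's standard library, as in the sketch below. It only sees script tags present in the HTML you pass in; scripts loaded dynamically at run time will not appear, which is one reason to still browse the site through Burp. ScriptSrcParser and script_urls are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ScriptSrcParser(HTMLParser):
    """Collects the src attribute of every script tag, resolved against base_url."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.scripts = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names for us.
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.scripts.append(urljoin(self.base_url, src))


def script_urls(html, base_url):
    """Return the absolute URLs of external scripts in html, without duplicates."""
    parser = ScriptSrcParser(base_url)
    parser.feed(html)
    parser.close()
    return list(dict.fromkeys(parser.scripts))
```

Passing the page URL as base_url turns relative references such as /static/app.js into absolute URLs that can be downloaded and fed to the extraction steps above.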

Using historical archives

https://archive.org/web/

The Wayback Machine can be thought of as a historical database of the Internet, in which you can find many of the links a website has had over time. Some of those files may since have been deleted, so after collecting the links you can use tools or commands to check in bulk which of them still exist, for example hakcheckurl:

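Installation:

    go get github.com/hakluke/hakcheckurl

On Go 1.18 and later, where go get no longer installs binaries, use go install github.com/hakluke/hakcheckurl@latest instead.

Usage:

    cat jslinks.txt | hakcheckurl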
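If hakcheckurl is not available, a rough Python equivalent of the idea might look like this: first reduce the archived links to unique .js URLs, then request each one and record the status code. This is not hakcheckurl's implementation, and unique_js_urls and status_of are hypothetical names; it also assumes the server answers HEAD requests, and some servers reply 405, in which case a GET is needed.

```python
import http.client
import urllib.error
import urllib.request
from urllib.parse import urlsplit


def unique_js_urls(lines):
    """Keep archived links whose path ends in .js, dropping query-string duplicates."""
    seen = {}
    for line in lines:
        url = line.strip()
        if not url:
            continue
        parts = urlsplit(url)
        if parts.path.lower().endswith(".js"):
            # The first URL seen for a given host + path is kept.
            seen.setdefault((parts.netloc.lower(), parts.path), url)
    return list(seen.values())


def status_of(url, timeout=10.0):
    """Send a HEAD request and return the final HTTP status, or None on network errors."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 when the archived file no longer exists
    except (OSError, http.client.HTTPException):
        return None
```

For example, open jslinks.txt, pass its lines to unique_js_urls, and print status_of(url) for each result; files that still return 200 are the ones worth downloading and analyzing.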

Sensitive information collection tools

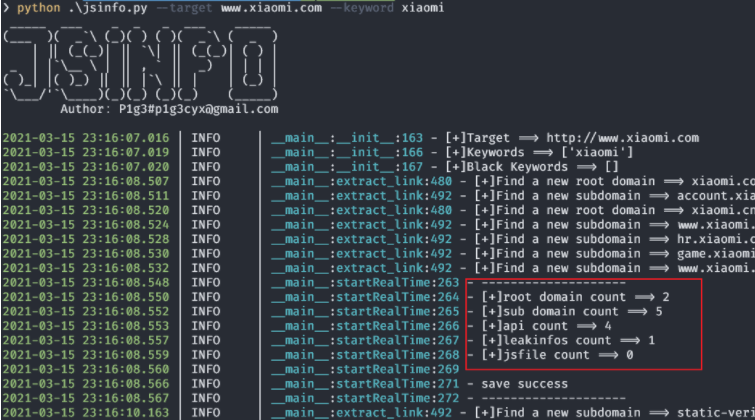

JSINFO-SCAN

https://github.com/p1g3/JSINFO-SCAN

This tool works recursively. You only need to give it one domain to start with; it then recursively crawls JS files, analyzes them, and saves the root domains, subdomains, APIs and other sensitive information it collects. Newly collected root domains and subdomains are fed back into the recursion. You can set keywords to guide the collection of root domains and the expansion of subdomains, as well as a blacklist: when a response contains a blacklisted keyword, the tool stops crawling further from it.
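The recursion described above can be pictured as a breadth-first search over hosts. The sketch below is not JSINFO-SCAN's code: fetch is a hypothetical hook that returns the JS text collected for a host, keywords are assumed to decide which newly found hosts belong to the target, and black_keywords stop further crawling from a response, mirroring the behaviour described above. Passing fetch in as a parameter keeps the crawl logic testable without network access.

```python
from __future__ import annotations

import re
from collections import deque
from typing import Callable, Iterable

HOST_RE = re.compile(r"(?:https?:)?//((?:[a-zA-Z0-9-]+\.)+[a-zA-Z]{2,63})")


def crawl(start: str,
          fetch: Callable[[str], str],
          keywords: Iterable[str],
          black_keywords: Iterable[str] = (),
          max_hosts: int = 500) -> set[str]:
    """Breadth-first discovery of related hosts referenced from JS.

    fetch(host) is a hypothetical hook returning the JS text gathered for host.
    """
    kw = [k.lower() for k in keywords]
    black = [b.lower() for b in black_keywords]
    found = {start.lower()}
    queue = deque([start.lower()])
    while queue and len(found) < max_hosts:  # max_hosts is a soft cap
        host = queue.popleft()
        body = fetch(host)
        if any(b in body.lower() for b in black):
            continue  # blacklisted response: do not crawl any further from here
        for match in HOST_RE.finditer(body):
            new_host = match.group(1).lower()
            if new_host not in found and any(k in new_host for k in kw):
                found.add(new_host)
                queue.append(new_host)
    return found
```

The seen-set is what keeps the recursion from looping forever when two domains reference each other.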

Usage:

    python3 jsinfo.py --target www.xxx.com --keywords baidu --black_keywords test

In practice this tool collects a lot of information, especially root domains and subdomains, but it runs very slowly, so you may want to run it on a server with nohup. Note that it keeps the collected information in memory and only writes it to a file after Ctrl+C; otherwise it keeps running indefinitely. When it runs under nohup, you therefore have to stop it yourself by its PID. Be aware that kill -9 sends SIGKILL, which a process cannot catch, so if the results are only written on Ctrl+C, sending SIGINT instead (kill -INT followed by the PID, the same signal Ctrl+C sends) is more likely to let the tool save them.

As shown in the figure, the tool displays how much information it has collected, as well as newly discovered root domains and subdomains, and saves the results automatically once the program is interrupted. The code also shows that the tool looks for sensitive information with the following regular expressions:

    self.leak_info_patterns = {
        # '%s' is a placeholder the tool fills in, presumably with the target domain
        'mail': r'([-_a-zA-Z0-9\.]{1,64}@%s)',
        'author': '@author[: ]+(.*?) ',
        'accesskey_id': 'accesskeyid.*?["\'](.*?)["\']',
        # the quoted source repeats 'accesskeyid' here, which looks like a copy-paste slip
        'accesskey_secret': 'accesskeysecret.*?["\'](.*?)["\']',
        'access_key': 'access_key.*?["\'](.*?)["\']',
        'google_api': r'AIza[0-9A-Za-z-_]{35}',
        'google_captcha': r'6L[0-9A-Za-z-_]{38}|^6[0-9a-zA-Z_-]{39}$',
        'google_oauth': r'ya29\.[0-9A-Za-z\-_]+',
        'amazon_aws_access_key_id': r'AKIA[0-9A-Z]{16}',
        # the quoted source has amzn\\.mws\\. which, in a raw string, demands a literal backslash
        'amazon_mws_auth_toke': r'amzn\.mws\.[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}',
        'amazon_aws_url': r's3\.amazonaws.com[/]+|[a-zA-Z0-9_-]*\.s3\.amazonaws.com',
        'amazon_aws_url2': (r"("
                            r"[a-zA-Z0-9-\.\_]+\.s3\.amazonaws\.com"
                            r"|s3://[a-zA-Z0-9-\.\_]+"
                            r"|s3-[a-zA-Z0-9-\.\_\/]+"
                            r"|s3.amazonaws.com/[a-zA-Z0-9-\.\_]+"
                            r"|s3.console.aws.amazon.com/s3/buckets/[a-zA-Z0-9-\.\_]+)"),
        'facebook_access_token': r'EAACEdEose0cBA[0-9A-Za-z]+',
        'authorization_basic': r'basic [a-zA-Z0-9=:_\+\/-]{5,100}',
        'authorization_bearer': r'bearer [a-zA-Z0-9_\-\.=:_\+\/]{5,100}',
        # note: [key|_key|\s+] is a character class (any one of those characters), not an alternation
        'authorization_api': r'api[key|_key|\s+]+[a-zA-Z0-9_\-]{5,100}',
        'mailgun_api_key': r'key-[0-9a-zA-Z]{32}',
        'twilio_api_key': r'SK[0-9a-fA-F]{32}',
        'twilio_account_sid': r'AC[a-zA-Z0-9_\-]{32}',
        'twilio_app_sid': r'AP[a-zA-Z0-9_\-]{32}',
        'paypal_braintree_access_token': r'access_token\$production\$[0-9a-z]{16}\$[0-9a-f]{32}',
        'square_oauth_secret': r'sq0csp-[ 0-9A-Za-z\-_]{43}|sq0[a-z]{3}-[0-9A-Za-z\-_]{22,43}',
        # the quoted source has 'sqOatp' (letter O); Square access tokens start with 'sq0atp' (zero)
        'square_access_token': r'sq0atp-[0-9A-Za-z\-_]{22}|EAAA[a-zA-Z0-9]{60}',
        'stripe_standard_api': r'sk_live_[0-9a-zA-Z]{24}',
        'stripe_restricted_api': r'rk_live_[0-9a-zA-Z]{24}',
        'github_access_token': r'[a-zA-Z0-9_-]*:[a-zA-Z0-9_\-]+@github\.com*',
        'rsa_private_key': r'-----BEGIN RSA PRIVATE KEY-----',
        'ssh_dsa_private_key': r'-----BEGIN DSA PRIVATE KEY-----',
        'ssh_dc_private_key': r'-----BEGIN EC PRIVATE KEY-----',
        'pgp_private_block': r'-----BEGIN PGP PRIVATE KEY BLOCK-----',
        'json_web_token': r'ey[A-Za-z0-9-_=]+\.[A-Za-z0-9-_=]+\.?[A-Za-z0-9-_.+/=]*$',
        'slack_token': r"\"api_token\":\"(xox[a-zA-Z]-[a-zA-Z0-9-]+)\"",
        'SSH_privKey': r"([-]+BEGIN [^\s]+ PRIVATE KEY[-]+[\s]*[^-]*[-]+END [^\s]+ PRIVATE KEY[-]+)",
        'possible_Creds': (r"(?i)("
                           r"password\s*[`=:\"]+\s*[^\s]+|"
                           r"password is\s*[`=:\"]*\s*[^\s]+|"
                           r"pwd\s*[`=:\"]*\s*[^\s]+|"
                           r"passwd\s*[`=:\"]+\s*[^\s]+)"),
    }
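To see how entries from this dictionary are applied, the sketch below runs a few of them over a JS string. The subset is copied from the dictionary above; filling the mail pattern's %s with the escaped target domain is an assumption about how the placeholder is used, and scan is a hypothetical helper, not the tool's own function.

```python
import re

# A few entries copied from the tool's dictionary above.
PATTERNS = {
    "mail": r"([-_a-zA-Z0-9\.]{1,64}@%s)",
    "google_api": r"AIza[0-9A-Za-z-_]{35}",
    "amazon_aws_access_key_id": r"AKIA[0-9A-Z]{16}",
    "rsa_private_key": r"-----BEGIN RSA PRIVATE KEY-----",
    "possible_Creds": (r"(?i)("
                       r"password\s*[`=:\"]+\s*[^\s]+|"
                       r"password is\s*[`=:\"]*\s*[^\s]+|"
                       r"pwd\s*[`=:\"]*\s*[^\s]+|"
                       r"passwd\s*[`=:\"]+\s*[^\s]+)"),
}


def scan(js_source, domain):
    """Return {pattern name: sorted unique matches} for the patterns that hit."""
    results = {}
    for name, pattern in PATTERNS.items():
        if "%s" in pattern:
            # Assumption: the %s placeholder stands for the (escaped) target domain.
            pattern = pattern % re.escape(domain)
        hits = sorted({m.group(0) for m in re.finditer(pattern, js_source)})
        if hits:
            results[name] = hits
    return results
```

Matches such as possible_Creds are noisy by design, so each hit still needs to be checked by hand.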

LinkFinder

https://github.com/GerbenJavado/LinkFinder

LinkFinder is a Python script designed to discover endpoints and their parameters in JavaScript files, so that penetration testers and bug hunters can collect new, hidden endpoints on the websites they are testing. These can open up new attack surface that may contain new vulnerabilities. It combines jsbeautifier for Python with a fairly large regular expression.

It runs on Python 3. Installation:

    git clone https://github.com/GerbenJavado/LinkFinder.git
    cd LinkFinder
    pip3 install -r requirements.txt
    python setup.py install

Usage examples

Basic usage: find endpoints in an online JavaScript file and write the results to results.html:

    python linkfinder.py -i https://example.com/1.js -o results.html

CLI/STDOUT output (does not use jsbeautifier, which makes it very fast):

    python linkfinder.py -i https://example.com/1.js -o cli

Analyze an entire domain and its JS files:

    python linkfinder.py -i https://example.com -d

Use a file saved from Burp Suite as input:

    python linkfinder.py -i burpfile -b

Enumerate a whole folder of JavaScript files, look for endpoints starting with /api/, and save the results to results.html:

    python linkfinder.py -i 'Desktop/*.js' -r ^/api/ -o results.html
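The last example above (a folder of .js files filtered with -r ^/api/) can be approximated in a few lines of Python. The endpoint regex here is far simpler than LinkFinder's own and is an assumption of this sketch: it only accepts quoted absolute URLs and paths beginning with /, ./ or ../; endpoints_in_text and endpoints_in_folder are hypothetical names.

```python
import re
from pathlib import Path

# Quoted absolute URLs or paths starting with "/", "./" or "../" (much simpler than LinkFinder's regex).
ENDPOINT_RE = re.compile(r"""(["'])((?:https?://|\.{0,2}/)[^"'\s<>]+)\1""")


def endpoints_in_text(text, keep=None):
    """Return distinct endpoints in first-seen order, optionally filtered by the compiled regex keep."""
    found = []
    for m in ENDPOINT_RE.finditer(text):
        endpoint = m.group(2)
        if (keep is None or keep.search(endpoint)) and endpoint not in found:
            found.append(endpoint)
    return found


def endpoints_in_folder(folder, pattern="*.js", filter_regex=None):
    """Map each matching file name in folder to the endpoints found in it."""
    keep = re.compile(filter_regex) if filter_regex else None
    results = {}
    for path in sorted(Path(folder).glob(pattern)):
        found = endpoints_in_text(path.read_text(encoding="utf-8", errors="replace"), keep)
        if found:
            results[path.name] = found
    return results
```

For instance, endpoints_in_folder("Desktop", filter_regex=r"^/api/") mirrors the command above, returning a dictionary instead of an HTML report.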

relative-url-extractor

https://github.com/jobertabma/relative-url-extractor

This tool extracts relative URLs (interfaces) either directly from online JS files or from local files, and it beautifies the code automatically before extracting.

Reposted from: https://bbs.ichunqiu.com/forum.php?mod=viewthread&tid=60424&highlight=%E4%BF%A1%E6%81%AF%E6%94%B6%E9%9B%86%E4%B9%8B%E9%80%9A%E8%BF%87JS